Business System Analysis & Decision Making

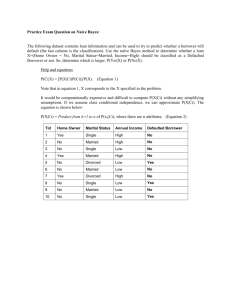

Lecture Notes 16: Bayes’ Theorem and Data Mining
Zhangxi Lin
ISQS 6347

Modeling Uncertainty

- Probability Review
- Bayes Classifier
- Value of Information
- Conditional Probability and Bayes’ Theorem
- Expected Value of Perfect Information
- Expected Value of Imperfect Information

Probability Review

$$P(A \mid B) = \frac{P(A \,\&\, B)}{P(B)}$$

“Probability of A given B”

Example: there are 40 female students in a class of 100, and 10 of them are from foreign countries. 20 male students are also foreign students.

- Event A: the student is from a foreign country
- Event B: the student is female

If a female student is chosen at random to present in class, the probability that she is a foreign student is

P(A|B) = 10 / 40 = 0.25, or
P(A|B) = P(A & B) / P(B) = (10 / 100) / (40 / 100) = 0.1 / 0.4 = 0.25

That is,

P(A|B) = # of A&B / # of B = (# of A&B / Total) / (# of B / Total) = P(A & B) / P(B)

Venn Diagrams

[Venn diagram: Female = 30 + 10 = 40; Foreign student = 20 + 10 = 30. Regions: female non-foreign student (30), female foreign student (10), male foreign student (20), male non-foreign student (40).]

Probability Review: Complement

$$P(\bar{A}) = 1 - P(A), \qquad P(\bar{B}) = 1 - P(B)$$

[Diagrams: Non-female vs. Female; Non-foreign student vs. Foreign student.]

Bayes Classifier

Bayes’ Theorem (From Wikipedia)

In probability theory, Bayes' theorem (often called Bayes' Law) relates the conditional and marginal probabilities of two random events. It is often used to compute posterior probabilities given observations. For example, a patient may be observed to have certain symptoms. Bayes' theorem can be used to compute the probability that a proposed diagnosis is correct, given that observation.

As a formal theorem, Bayes' theorem is valid in all interpretations of probability. However, it plays a central role in the debate around the foundations of statistics: frequentist and Bayesian interpretations disagree about the ways in which probabilities should be assigned in applications.
Frequentists assign probabilities to random events according to their frequencies of occurrence or to subsets of populations as proportions of the whole, while Bayesians describe probabilities in terms of beliefs and degrees of uncertainty. The articles on Bayesian probability and frequentist probability discuss these debates at greater length.

Bayes’ Theorem

$$P(A \mid B) = \frac{P(A \,\&\, B)}{P(B)} \;\Rightarrow\; P(A \,\&\, B) = P(A \mid B)\,P(B)$$

$$P(B \mid A) = \frac{P(A \,\&\, B)}{P(A)} \;\Rightarrow\; P(A \,\&\, B) = P(B \mid A)\,P(A)$$

So:

$$P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

$$P(B \mid A) = \frac{P(A \mid B)\,P(B)}{P(A)} = \frac{P(A \mid B)\,P(B)}{P(A \mid B)\,P(B) + P(A \mid \bar{B})\,P(\bar{B})}$$

The above formula is referred to as Bayes’ theorem. It is extremely useful in decision analysis when using information.

Example of Bayes’ Theorem

Given:

- A doctor knows that meningitis (M) causes stiff neck (S) 50% of the time
- Prior probability of any patient having meningitis is 1/50,000
- Prior probability of any patient having stiff neck is 1/20

If a patient has a stiff neck, what is the probability that he or she has meningitis?

$$P(M \mid S) = \frac{P(S \mid M)\,P(M)}{P(S)} = \frac{0.5 \times 1/50000}{1/20} = 0.0002$$

Bayes Classifiers

- Consider each attribute and class label as random variables
- Given a record with attributes (A1, A2, …, An), the goal is to predict class C, which takes one of the values (c1, c2, …, cm)
- Specifically, we want to find the value of C that maximizes P(C | A1, A2, …, An)
- Can we estimate P(C | A1, A2, …, An) directly from data?

Bayes Classifiers

Approach: compute the posterior probability P(C | A1, A2, …, An) for all values of C using Bayes’ theorem:

$$P(C \mid A_1 A_2 \ldots A_n) = \frac{P(A_1 A_2 \ldots A_n \mid C)\,P(C)}{P(A_1 A_2 \ldots A_n)}$$

- Choose the value of C that maximizes P(C | A1, A2, …, An)
- This is equivalent to choosing the value of C that maximizes P(A1, A2, …, An | C) P(C), because the denominator does not depend on C
- How to estimate P(A1, A2, …, An | C)?
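The conditional-probability example (the class of 100 students) and the meningitis example above can be checked numerically. The following is a minimal sketch; the function name `bayes_posterior` and the variable names are illustrative and not part of the lecture. Exact fractions are used so that no rounding enters the check.

```python
from fractions import Fraction


def bayes_posterior(likelihood, prior, evidence):
    """Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E)."""
    return likelihood * prior / evidence


# Class example: P(foreign | female) = P(foreign & female) / P(female)
p_foreign_and_female = Fraction(10, 100)
p_female = Fraction(40, 100)
p_foreign_given_female = p_foreign_and_female / p_female

# Meningitis example: P(M | S) = P(S | M) * P(M) / P(S)
p_s_given_m = Fraction(1, 2)      # meningitis causes stiff neck 50% of the time
p_m = Fraction(1, 50000)          # prior probability of meningitis
p_s = Fraction(1, 20)             # prior probability of stiff neck
p_m_given_s = bayes_posterior(p_s_given_m, p_m, p_s)

print(float(p_foreign_given_female))  # 0.25
print(float(p_m_given_s))             # 0.0002
```

Even though a stiff neck is a common symptom of meningitis, the posterior stays small because the prior 1/50,000 is so low.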
Example

- C: Evade (Yes, No)
- A1: Refund (Yes, No)
- A2: Marital Status (Single, Married, Divorced)
- A3: Taxable Income (60K – 220K)

| Tid | Refund (categorical) | Marital Status (categorical) | Taxable Income (continuous) | Evade (class) |
|-----|--------|----------|------|-----|
| 1   | Yes | Single   | 125K | No  |
| 2   | No  | Married  | 100K | No  |
| 3   | No  | Single   | 70K  | No  |
| 4   | Yes | Married  | 120K | No  |
| 5   | No  | Divorced | 95K  | Yes |
| 6   | No  | Married  | 60K  | No  |
| 7   | Yes | Divorced | 220K | No  |
| 8   | No  | Single   | 85K  | Yes |
| 9   | No  | Married  | 75K  | No  |
| 10  | No  | Single   | 90K  | Yes |

We can obtain P(A1, A2, A3 | C), P(A1, A2, A3), and P(C) from the data set, then calculate P(C | A1, A2, A3) to make predictions when A1, A2, and A3 are given and C is unknown.

Naïve Bayes Classifier

- Assume independence among the attributes Ai when the class is given: P(A1, A2, …, An | Cj) = P(A1 | Cj) P(A2 | Cj) … P(An | Cj)
- We can estimate P(Ai | Cj) for all Ai and Cj.
- A new point is classified as Cj if P(Cj) ∏ P(Ai | Cj) is maximal.
- Note: when the priors P(Cj) are identical across classes, this is equivalent to finding the j for which ∏ P(Ai | Cj) is maximal.

How to Estimate Probabilities from Data?

Using the training table above:

- Class: P(C) = Nc / N, e.g., P(No) = 7/10, P(Yes) = 3/10
- For discrete attributes: $P(A_i \mid C_k) = |A_{ik}| / N_{c_k}$, where $|A_{ik}|$ is the number of instances that have attribute value Ai and belong to class Ck
- Examples: P(Status=Married | No) = 4/7, P(Refund=Yes | Yes) = 0

*How to Estimate Probabilities from Data?
For continuous attributes:

- Discretize the range into bins
  - one ordinal attribute per bin
  - violates the independence assumption
- Two-way split: (A < v) or (A > v)
  - choose only one of the two splits as the new attribute
- Probability density estimation:
  - Assume the attribute follows a normal distribution
  - Use the data to estimate the parameters of the distribution (e.g., mean and standard deviation)
  - Once the probability distribution is known, use it to estimate the conditional probability P(Ai | c)

*How to Estimate Probabilities from Data?

This slide uses the same ten training records (Tid, Refund, Marital Status, Taxable Income, Evade) as the earlier example.

Normal distribution:

$$P(A_i \mid c_j) = \frac{1}{\sqrt{2\pi\sigma_{ij}^2}}\; e^{-\frac{(A_i - \mu_{ij})^2}{2\sigma_{ij}^2}}$$

One distribution for each (Ai, cj) pair.

For (Income, Class=No):

- sample mean = 110
- sample variance = 2975

$$P(\text{Income} = 120 \mid \text{No}) = \frac{1}{\sqrt{2\pi}\,(54.54)}\; e^{-\frac{(120 - 110)^2}{2(2975)}} = 0.0072$$

Example of Naïve Bayes Classifier

Given a test record: X = (Refund = No, Married, Income = 120K)

Naïve Bayes classifier:

- P(Refund=Yes | No) = 3/7
- P(Refund=No | No) = 4/7
- P(Refund=Yes | Yes) = 0
- P(Refund=No | Yes) = 1
- P(Marital Status=Single | No) = 2/7
- P(Marital Status=Divorced | No) = 1/7
- P(Marital Status=Married | No) = 4/7
- P(Marital Status=Single | Yes) = 2/3
- P(Marital Status=Divorced | Yes) = 1/3
- P(Marital Status=Married | Yes) = 0

For taxable income:

- If class=No: sample mean = 110, sample variance = 2975
- If class=Yes: sample mean = 90, sample variance = 25

P(X | Class=No) = P(Refund=No | Class=No) × P(Married | Class=No) × P(Income=120K | Class=No) = 4/7 × 4/7 × 0.0072 = 0.0024

P(X | Class=Yes) = P(Refund=No | Class=Yes) × P(Married | Class=Yes) × P(Income=120K | Class=Yes) = 1 × 0 × 1.2 × 10^-9 = 0

Since P(X | No) P(No) > P(X | Yes) P(Yes), we have P(No | X) > P(Yes | X) => Class = No

Naïve Bayes Classifier

If one of the conditional probabilities is zero, then the entire expression
becomes zero.

Probability estimation:

$$\text{Original: } P(A_i \mid C) = \frac{N_{ic}}{N_c}$$

$$\text{Laplace: } P(A_i \mid C) = \frac{N_{ic} + 1}{N_c + c}$$

$$\text{m-estimate: } P(A_i \mid C) = \frac{N_{ic} + m\,p}{N_c + m}$$

where c is the number of classes, p is the prior probability, and m is a parameter.

*Example of Naïve Bayes Classifier

| Name | Give Birth | Can Fly | Live in Water | Have Legs | Class |
|------|-----|-----|-----------|-----|-------------|
| human | yes | no | no | yes | mammals |
| python | no | no | no | no | non-mammals |
| salmon | no | no | yes | no | non-mammals |
| whale | yes | no | yes | no | mammals |
| frog | no | no | sometimes | yes | non-mammals |
| komodo | no | no | no | yes | non-mammals |
| bat | yes | yes | no | yes | mammals |
| pigeon | no | yes | no | yes | non-mammals |
| cat | yes | no | no | yes | mammals |
| leopard shark | yes | no | yes | no | non-mammals |
| turtle | no | no | sometimes | yes | non-mammals |
| penguin | no | no | sometimes | yes | non-mammals |
| porcupine | yes | no | no | yes | mammals |
| eel | no | no | yes | no | non-mammals |
| salamander | no | no | sometimes | yes | non-mammals |
| gila monster | no | no | no | yes | non-mammals |
| platypus | no | no | no | yes | mammals |
| owl | no | yes | no | yes | non-mammals |
| dolphin | yes | no | yes | no | mammals |
| eagle | no | yes | no | yes | non-mammals |

Test record:

| Give Birth | Can Fly | Live in Water | Have Legs | Class |
|-----|----|-----|----|---|
| yes | no | yes | no | ? |

A: attributes, M: mammals, N: non-mammals

$$P(A \mid M) = \frac{6}{7} \times \frac{6}{7} \times \frac{2}{7} \times \frac{2}{7} = 0.06$$

$$P(A \mid N) = \frac{1}{13} \times \frac{10}{13} \times \frac{3}{13} \times \frac{4}{13} = 0.0042$$

$$P(A \mid M)\,P(M) = 0.06 \times \frac{7}{20} = 0.021$$

$$P(A \mid N)\,P(N) = 0.004 \times \frac{13}{20} = 0.0027$$

P(A|M)P(M) > P(A|N)P(N) => Mammals

Naïve Bayes (Summary)

- Robust to isolated noise points
- Handles missing values by ignoring the instance during probability estimate calculations
- Robust to irrelevant attributes
- The independence assumption may not hold for some attributes
  - Use other techniques such as Bayesian Belief Networks (BBN)

Value of Information

- When facing uncertain prospects, we need information in order to reduce uncertainty
- Information gathering includes consulting experts, conducting surveys, performing mathematical or statistical analyses, etc.
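The naïve Bayes hand calculation for the tax-evasion test record (Refund = No, Married, Income = 120K) can be reproduced in a few lines. This is a minimal sketch rather than a reference implementation: the names `RECORDS`, `gaussian_pdf`, `prior`, and `class_conditional` are illustrative, the categorical probabilities are plain relative frequencies with no Laplace or m-estimate smoothing, and income is modeled with a normal density using the sample mean and sample variance, as in the lecture.

```python
import math
from statistics import mean, variance

# Training records from the lecture:
# (Refund, Marital Status, Taxable Income in thousands, Evade)
RECORDS = [
    ("Yes", "Single", 125, "No"),
    ("No", "Married", 100, "No"),
    ("No", "Single", 70, "No"),
    ("Yes", "Married", 120, "No"),
    ("No", "Divorced", 95, "Yes"),
    ("No", "Married", 60, "No"),
    ("Yes", "Divorced", 220, "No"),
    ("No", "Single", 85, "Yes"),
    ("No", "Married", 75, "No"),
    ("No", "Single", 90, "Yes"),
]


def gaussian_pdf(x, mu, var):
    """Normal density with mean mu and variance var, evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def prior(label):
    """P(C) = Nc / N."""
    return sum(r[3] == label for r in RECORDS) / len(RECORDS)


def class_conditional(refund, status, income, label):
    """P(X | C) under the naive independence assumption (no smoothing)."""
    rows = [r for r in RECORDS if r[3] == label]
    p_refund = sum(r[0] == refund for r in rows) / len(rows)
    p_status = sum(r[1] == status for r in rows) / len(rows)
    incomes = [r[2] for r in rows]
    # statistics.variance is the sample variance (divides by n - 1)
    p_income = gaussian_pdf(income, mean(incomes), variance(incomes))
    return p_refund * p_status * p_income


# Score each class by P(X | C) * P(C) and pick the larger one
scores = {
    label: class_conditional("No", "Married", 120, label) * prior(label)
    for label in ("No", "Yes")
}
prediction = max(scores, key=scores.get)
print(prediction)  # No
```

Because P(Married | Yes) = 0, the score for class Yes is exactly zero regardless of the other factors, which is the situation that Laplace or m-estimate smoothing is meant to avoid.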
Expected Value of Perfect Information (EVPI)

Problem: A buyer is to buy something online.

| Buyer's decision | Seller is Bad (0.01) | Seller is Good (0.99) | EMV |
|------------------|----------------------|-----------------------|-----|
| Not use insurance (pay $100) | Net gain -$100 | $20 | $18.8 |
| Use insurance (pay $100 + $2 = $102) | -$2 | $18 | $17.8 |

Expected Value of Imperfect Information (EVII)

- We rarely have access to perfect information; imperfect information is far more common. Thus we must extend our analysis to deal with imperfect information.
- Now suppose we can use online reputation to estimate the risk in trading with a seller.
- Other people offer suggestions based on their experience. Their predictions are not 100% correct:
  - If the product is actually good, the prediction is correct 90% of the time, and the remaining 10% of the time it is predicted to be bad.
  - If the product is actually bad, the prediction is correct 80% of the time, and the remaining 20% of the time it is predicted to be good.
- Although the estimate is not fully accurate, it can be used to improve our decision making:
  - If we predict that the risk of buying the product online is high, we purchase insurance.

Decision Tree

Extended from the previous online trading question.

| Prediction | Buyer's decision | Seller is Good (?) | Seller is Bad (?) |
|------------|------------------|--------------------|-------------------|
| Predicted Good | No insurance | $20 | -$100 |
| Predicted Good | Insurance | $18 | -$2 |
| Predicted Bad | No insurance | $20 | -$100 |
| Predicted Bad | Insurance | $18 | -$2 |

Questions:

1. Given the suggestion, what is your decision?
2. What is the probability associated with the decision you made?
3. How do you estimate the accuracy of a prediction?

Applying Bayes’ Theorem

- Let “Good” be event A
- Let “Bad” be event B
- Let “Predicted Good” be event G
- Let “Predicted Bad” be event W

From the previous information, obtained for example by mining historical data, we know:

- P(G|A) = 0.9, P(W|A) = 0.1
- P(W|B) = 0.8, P(G|B) = 0.2
- P(A) = 0.99, P(B) = 0.01

We want to learn the probability that the outcome is good given that the prediction is “good”, i.e., P(A|G) = ?

We want to learn the probability that the outcome is bad given that the prediction is “bad”, i.e., P(B|W) = ?
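As a numerical check on this setup, the sketch below computes the two prior-only EMVs from the EVPI slide and then the quantities asked for here, using only the probabilities and payoffs stated above. The helper name `emv` and the variable names are illustrative and not part of the lecture.

```python
def emv(p_good, payoff_good, payoff_bad):
    """Expected monetary value of an action given P(seller is good)."""
    return p_good * payoff_good + (1 - p_good) * payoff_bad


# Priors and prediction accuracies stated in the lecture
p_a, p_b = 0.99, 0.01                  # P(Good), P(Bad)
p_g_given_a, p_w_given_a = 0.9, 0.1    # predictions when the seller is good
p_w_given_b, p_g_given_b = 0.8, 0.2    # predictions when the seller is bad

# Decision with no prediction available (prior only)
emv_no_insurance = emv(p_a, 20, -100)
emv_insurance = emv(p_a, 18, -2)

# Law of total probability for the two possible predictions
p_g = p_g_given_a * p_a + p_g_given_b * p_b
p_w = p_w_given_b * p_b + p_w_given_a * p_a

# Bayes' theorem for the posteriors
p_a_given_g = p_g_given_a * p_a / p_g
p_b_given_w = p_w_given_b * p_b / p_w

print(round(emv_no_insurance, 2), round(emv_insurance, 2))  # 18.8 17.8
print(round(p_g, 3), round(p_w, 3))                          # 0.893 0.107
print(round(p_a_given_g, 4), round(p_b_given_w, 4))          # 0.9978 0.0748
```

Passing a posterior such as `p_a_given_g` to `emv` in place of the prior gives the expected value of each action after a prediction has been observed.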
We may apply Bayes’ theorem to solve this with imperfect information.

Calculate P(G) and P(W)

P(G) = P(G|A)P(A) + P(G|B)P(B) = 0.9 * 0.99 + 0.2 * 0.01 = 0.893

P(W) = P(W|B)P(B) + P(W|A)P(A) = 0.8 * 0.01 + 0.1 * 0.99 = 0.107 = 1 - P(G)

Applying Bayes’ Theorem

We have

P(A|G) = P(G|A)P(A) / P(G)
= P(G|A)P(A) / [P(G|A)P(A) + P(G|B)P(B)]
= P(G|A)P(A) / [P(G|A)P(A) + P(G|B)(1 - P(A))]
= 0.9 * 0.99 / [0.9 * 0.99 + 0.2 * 0.01]
= 0.9978 > 0.99

P(B|W) = P(W|B)P(B) / P(W)
= P(W|B)P(B) / [P(W|B)P(B) + P(W|A)P(A)]
= P(W|B)P(B) / [P(W|B)P(B) + P(W|A)(1 - P(B))]
= 0.8 * 0.01 / [0.8 * 0.01 + 0.1 * 0.99]
= 0.0748 > 0.01

Apparently, data mining provides good information and changes the original probabilities.

Decision Tree

P(A) = 0.99, P(B) = 0.01

| Prediction | Buyer's decision | Seller is Good | Seller is Bad | EMV |
|------------|------------------|----------------|---------------|-----|
| Predicted Good, P(G) = 0.893 | No insurance (your choice) | $20 (0.9978) | -$100 (0.0022) | $19.73 |
| Predicted Good, P(G) = 0.893 | Insurance | $18 (0.9978) | -$2 (0.0022) | $17.96 |
| Predicted Bad, P(W) = 0.107 | No insurance | $20 (0.9252) | -$100 (0.0748) | $11.03 |
| Predicted Bad, P(W) = 0.107 | Insurance (your choice) | $18 (0.9252) | -$2 (0.0748) | $16.50 |

Data mining can significantly improve your decision making accuracy!

Consequences of a Decision

| | Actual Good (A) | Actual Bad (B) |
|---|---|---|
| Predicted Good (G) (not to buy insurance) | a: Gain $20 | c: Lose $100 |
| Predicted Bad (W) (need to buy insurance, $2) | b: Net gain $18 | d: Cost $2 |

P(A) = (a + b) / (a + b + c + d) = 0.99
P(B) = (c + d) / (a + b + c + d) = 0.01
P(G) = (a + c) / (a + b + c + d) = 0.893
P(W) = (b + d) / (a + b + c + d) = 0.107
P(G|A) = a / (a + b) = 0.9, P(W|A) = b / (a + b) = 0.1
P(W|B) = d / (c + d) = 0.8, P(G|B) = c / (c + d) = 0.2

German Bank Credit Decision

| | Computed Good (Action A, B) | Computed Bad (Action A, B) | Total |
|---|---|---|---|
| Actual Good | True Positive 600 ($6, 0) | False Negative 100 (0, -$1) | 700 |
| Actual Bad | False Positive 80 (-$2, -$1) | True Negative 220 (-$20, 0) | 300 |
| Total | 680 | 320 | |

This is a modified version of the German Bank credit decision problem.

1. Assume that, because of anti-discrimination regulation, there could be a cost for a false negative (FN), depending on the action taken.
2. The bank has two choices of action: A and B. Each will have different results.
3. Question 1: When the classification model suggests that a specific loan applicant has a probability of 0.8 of being GOOD, which action should be taken?
4. Question 2: When the classification model suggests that a specific loan applicant has a probability of 0.6 of being GOOD, which action should be taken?

The Payoffs from Two Actions

| | Computed Good (Action A) | Computed Bad (Action A) | Total |
|---|---|---|---|
| Actual Good | True Positive 600 ($6) | False Negative 100 (0) | 700 |
| Actual Bad | False Positive 100 (-$2) | True Negative 200 (-$20) | 300 |
| Total | 700 | 300 | |

| | Computed Good (Action B) | Computed Bad (Action B) | Total |
|---|---|---|---|
| Actual Good | True Positive 600 (0) | False Negative 100 (-$1) | 700 |
| Actual Bad | False Positive 100 (-$1) | True Negative 200 (0) | 300 |
| Total | 700 | 300 | |

Summary

There are two decision scenarios:

- In the previous classification problems, when the predicted target is 1 we take an action; otherwise we do nothing. Only the action makes a difference. There is a cutoff value for this kind of decision. A risk-averse person may set a higher cutoff value when the utility function is not linear in the monetary result. The risk-averse person may opt to earn less in exchange for avoiding the emotional worry of the risk.
- In the current Bayesian decision problem, when the predicted target is 1 we take action A; otherwise we take action B. Both actions result in some outcome.

Web Page Browsing

Problem: When a browsing user has entered P5 from P2, what is the probability that he will proceed to P3?

[Figure: link graph over pages P0, P1, P2, P3, P4, and P5, with transition probabilities 0.7 and 0.3 marked.]

How to solve the problem in general?

1. Assume this is a first-order Markov chain.
2. Construct a transition probability matrix.

We notice that:

1. P(P2|P4P0) may not equal P(P2|P4P1).
2. There is only one entrance to the web site, at P0.
3. There is no link from P3 to other pages.
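Constructing the transition probability matrix under the first-order Markov assumption amounts to counting page-to-page moves and normalizing each row. The sketch below illustrates this on made-up click paths: the `sessions` data and the function name `transition_matrix` are hypothetical and are not taken from the lecture or from any web log. The paths were chosen so that 3 of the 10 departures from P5 go to P3.

```python
from collections import Counter, defaultdict


def transition_matrix(sessions):
    """Estimate P(K, L) = P(next page is L | current page is K) from click paths."""
    counts = defaultdict(Counter)
    for path in sessions:
        # Count every consecutive (from, to) pair in the path
        for src, dst in zip(path, path[1:]):
            counts[src][dst] += 1
    # Normalize each row so its probabilities sum to 1
    return {
        src: {dst: n / sum(row.values()) for dst, n in row.items()}
        for src, row in counts.items()
    }


# Hypothetical sessions; every visit starts at the single entrance P0
sessions = (
    [["P0", "P2", "P5", "P4", "Exit"]] * 4
    + [["P0", "P1", "P5", "P4", "Exit"]] * 3
    + [["P0", "P2", "P5", "P3", "Exit"]] * 2
    + [["P0", "P1", "P5", "P3", "Exit"]] * 1
)

P = transition_matrix(sessions)

# Under the first-order assumption the previous page (P2) is ignored,
# so the answer is simply the P5 -> P3 entry of the matrix.
print(P["P5"]["P3"])  # 0.3
```

If the observation that P(P2|P4P0) may differ from P(P2|P4P1) matters for the analysis, the same counting can be done over pairs of pages, which gives a second-order chain at the cost of a much larger table.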
Transition Probabilities

P(K,L) = Probability of traveling FROM K TO L

| From \ To | P0/H | P1 | P2 | P3 | P4 | P5 | Exit |
|-----------|------|----|----|----|----|----|------|
| P0/H | P(H,H) | P(H,1) | P(H,2) | P(H,3) | P(H,4) | P(H,5) | P(H,E) |
| P1 | P(1,H) | P(1,1) | P(1,2) | P(1,3) | P(1,4) | P(1,5) | P(1,E) |
| P2 | P(2,H) | P(2,1) | P(2,2) | P(2,3) | P(2,4) | P(2,5) | P(2,E) |
| P3 | P(3,H) | P(3,1) | P(3,2) | P(3,3) | P(3,4) | P(3,5) | P(3,E) |
| P4 | P(4,H) | P(4,1) | P(4,2) | P(4,3) | P(4,4) | P(4,5) | P(4,E) |
| P5 | P(5,H) | P(5,1) | P(5,2) | P(5,3) | P(5,4) | P(5,5) | P(5,E) |
| Exit | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Demonstration

- Dataset: Commrex web log data
- Data Exploration
- Link analysis
  - The links among nodes
  - Calculate the transition matrix
- The Bayesian network model for the web log data

Reference: David Heckerman, “A Tutorial on Learning With Bayesian Networks,” March 1995 (Revised November 1996), Technical Report MSR-TR-95-06, \\BASRV1\ISQS6347\tr-95-06.pdf

Readings

- SPB, Chapter 3
- RG, Chapter 10