Research Process Revisited - Gail Johnson's Research Demystified


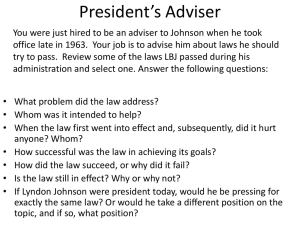

Summing Up: Research Process Revisited
Research Methods for Public Administrators
Dr. Gail Johnson
Dr. G. Johnson, www.ResearchDemystified.org

What Is Research?
- A systematic search for answers to questions.
- Search: to uncover, examine, find by exploration, investigate, inquire.
- Research: "the systematic inquiry into a subject in order to discover or revise facts, theories…"

Deductive vs. Inductive Logics
- Deductive: testing theory (think Sherlock Holmes).
- Inductive: building theory from the ground up.

Planning Phase
1. Determining your questions
2. Identifying your measures and measurement strategy
3. Selecting a research design
4. Developing your data collection strategy: (A) methods and (B) sample
5. Identifying your analysis strategy
6. Reviewing and testing your plan

Planning: An Iterative Process
- Not as linear as it is presented.
- The process is like going through a funnel: it feels like going around in circles, but things get very narrow and focused at the end.
- There are no shortcuts.

Step 1: What’s the Question?
- Identify the issue or concern.
- What’s already known? Review the literature and talk with experts.
- Is there a theory?
- Engage the stakeholders.
- Clarify the issues: what matters most?
- Choosing the question is deceptively difficult and takes far longer than people expect.

Types of Questions
- Descriptive: what is.
- Normative: comparison to a target.
- Impact/causal: cause and effect. These are tough to answer and require:
  - a logical theory
  - time order
  - co-variation
  - elimination of all other rival explanations

Step 2: Developing a Measurement Strategy
- Identify and define all the key terms. Do tax cuts cause economic stability? What tax cuts? What is economic stability?
- Develop operational definitions that translate each concept into something that can be concretely measured. How much money? How is economic stability measured? Natural variation also needs to be considered.

Step 2: Developing a Measurement Strategy
- Boundaries: the scope of the study, including who is included and excluded, the time frame, and the geographic location(s). Tax cuts during what time frame? Are we talking about the U.S.?
- Unit of analysis: if the question is about the U.S., we need to look at national data; if it is about a state, we need to look at state data.

Levels of Measurement
- Nominal: names and categories.
- Ordinal: has an order to it, but not real numbers.
- Interval and ratio: real numbers.
- Remember: different levels of measurement use different analytical techniques.

Validity and Reliability
- Valid measures measure exactly the thing you want to measure. The number of books in a library is not a good measure of the quality of a school. The infant mortality rate is often used as an indicator of the quality of the health care system.
- Reliable measures measure the same thing in exactly the same way every time: a steel ruler rather than an elastic ruler.

Reliability and Validity
- Remember: the poverty measure is reliable because it is measured the same way every time.
- But many question its validity because it does not accurately capture actual living costs or the various financial benefits that some people might receive.

Step 3: Research Design
- The Xs and Os framework.
- Experimental: random assignment to treatment or control (comparison) groups.
- Quasi-experimental: non-random assignment to groups.
- Non-experimental: the one-shot design, in which you implement a program and then measure what happens.

Question-Design Connection
- One-shot designs make sense for descriptive and normative questions but are weakest for cause-effect questions.

Question-Design Connection
- The best design for a cause-effect question is the classic experimental design.
- But quasi-experimental designs, including the use of statistical controls, are more typically used in public administration research.
- And sometimes a one-shot design is as good as it gets in public administration research.
- Sophisticated users exercise great caution in drawing cause-effect conclusions.

Research Design: Other Common Research Approaches
- Secondary data analysis
- Evaluation synthesis
- Content analysis
- Survey research
- Case studies
- Cost-benefit analysis

Step 4: Data Collection Options
- The decision depends on:
  - What you want to know: numbers or stories?
  - Where the data reside: the environment, files, people.
  - The resources available: time, money, and staff to conduct the research.

Data Collection Methods
- Locate sources of information.
- Data collection methods:
  - Available data: archives, documents
  - Data collection instruments (DCIs)
  - Observation
  - Interviews, focus groups
  - Surveys: mail, in-person, telephone, cyberspace

Multiple Methods
- The quantitative-qualitative war is over: neither is inherently better.
- The law of the situation rules: each works well in some situations and less well in others.

Multiple Methods
- Quantitative and qualitative data collection are often used together:
  - Available data with surveys
  - Surveys with observations
  - Observations with available data
  - Surveys with focus groups

Nonrandom Sampling
- Useful in qualitative research.
- Sometimes a nonrandom sample is the only choice that makes sense.
- Weakness: potential selection bias. Do those selected hold a particular point of view that suits the agenda of the researchers?
- Limitations: results reflect only those included and are never generalizable.

Nonrandom Sampling
- Nonrandom sample options: quota, accidental, snowball, judgmental, convenience.
- Face validity: does the choice make sense?
- Size is not important.

Random Sample
- Based on probability: every item in the population (people, files, roads, whatever) has an equal chance of being selected.
- Size matters: the sample must be large enough, so a sample size table is needed.
- Advantages: the ability to make inferences or generalizations about the larger population based on what we learn from the sample, and the elimination of selection bias.

Random Sample
- Challenge: locating a complete listing of the entire population from which to select the sample.
- Analysis requires inferential statistics. There is a calculable amount of error in any random sample:
  - confidence level, confidence intervals, and sampling error (also called margin of error)
  - tests of statistical significance

Step 5: Analysis Plan
- Analysis techniques vary based on the level of data collected and the sampling choices.
- The analysis plan links the data collection instruments, the questions, and the planned data analyses.
- Check to make sure all the data needed to answer the questions are collected.
- Check to make sure unneeded data are not collected.

Step 6: Test Your Plan
- Test all data collection instruments and data collection plans to make sure they work as expected: pre-test in real settings, expert review, cold-reader review.
- Revise and re-test.
- Finalize the research proposal.

The Design Matrix
- A tool that helps pull all the pieces of the research plan together.
- Helps focus on all the details to make sure everything connects.
- It is a visual; the focus is on content, not writing style.
- It is a living document: planning is an iterative process.
- This is a generic format.

“Begin with the end in mind.”
- It is worth taking the time to plan and to test your plan.
- “If you do not know where you are going, you can wind up anywhere.”
- It is hard to correct mistakes after the data have been collected.
- Remember: no amount of statistical wizardry will correct planning mistakes.

Doing Phase
- Gathering the data
- Preparing the data for analysis
- Analyzing and interpreting the data

Doing Phase
- Collect the data: accuracy is key.
- Prepare the collected data for analysis: data entry, then test the error rate.
- Analyze the data:
  - Qualitative approaches: control for bias.
  - Quantitative approaches: numeric analysis, with interpretation in plain English.

Analysis Techniques
- Descriptive: frequencies, percents, means, medians, modes, rates, ratios, rates of change, range, standard deviation.
- Bivariate: cross-tabs, comparison of means.

Analysis Techniques
- Relationships: correlation and measures of association.
- Association does not mean the variables are causally related.
- The closer the coefficient is to 0, the weaker the relationship; the closer its absolute value is to 1, the stronger the relationship.
- There are no defined rules: .2 to .3 is something to look at, .4 to .5 is moderately strong, and above .5 is strong.
- It is rare to get correlations above .9; a correlation that high is a signal to take a more careful look at the measures.

Analysis Techniques
- Inference: inferring something about the larger population based on the results of a random sample.
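The inference step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and is not part of the original deck. It does three things:

- draws a simple random sample, so every item has an equal chance of selection;
- builds a normal-approximation confidence interval for a mean at the conventional 95% confidence level (z = 1.96);
- computes the sample size needed for a plus-or-minus 5% sampling error on a proportion, using the textbook formula with a finite population correction.

The function names and the salary data are hypothetical, chosen only for illustration.

```python
import math
import random
import statistics

Z_95 = 1.96  # normal critical value for the conventional 95% confidence level


def simple_random_sample(population, n, seed=None):
    """Draw n items without replacement; every item has an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def mean_confidence_interval(sample, z=Z_95):
    """Normal-approximation confidence interval for the population mean.

    Assumes a reasonably large simple random sample.
    """
    mean = statistics.mean(sample)
    std_error = statistics.stdev(sample) / math.sqrt(len(sample))
    margin = z * std_error  # sampling error (margin of error)
    return mean - margin, mean + margin


def required_sample_size(population_size, margin=0.05, z=Z_95, p=0.5):
    """Sample size needed to estimate a proportion within +/- margin.

    p = 0.5 is the most conservative assumption. The second step applies the
    finite population correction, which matters for small populations.
    """
    n0 = z**2 * p * (1 - p) / margin**2
    n = n0 / (1 + (n0 - 1) / population_size)
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical population of 10,000 salaries (illustrative data only).
    gen = random.Random(42)
    salaries = [gen.gauss(47_500, 8_000) for _ in range(10_000)]

    sample = simple_random_sample(salaries, 400, seed=1)
    low, high = mean_confidence_interval(sample)
    print(f"95% CI for the average salary: ${low:,.0f} to ${high:,.0f}")
    print("Sample size for +/-5% at 95% confidence, N = 1,000:",
          required_sample_size(1_000))  # prints 278
```

The sample-size function produces the kind of figure a sample size table provides. For a population of 1,000, it calls for 278 completed responses at plus-or-minus 5% and 95% confidence. Setting p = 0.5 is the cautious default because it maximizes p(1 - p) and therefore the required sample size.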
- Confidence intervals (standard is 95% precision).
- Confidence levels (standard is 95% confidence): "I am 95% certain (confident) that the true average salary in the population is between $45,000 and $50,000."
- Sampling error (standard is plus or minus 5%): think polling data.

Analysis Techniques
- Inference: statistical significance asks how likely we are to have gotten these results from chance alone.
- There are many different tests to meet specific situations, but the interpretation is always the same.
- Standard practice: if there is a 5% chance or less that the results are due to chance, researchers conclude that the results are statistically significant.

Reporting Phase
- What’s your point? What is the major message or story?
- Who is your audience?
- Reporting options: executive summary, written reports, oral presentations, use of charts and tables.

Communication Guidelines
- Present what matters to your audience. The goal is to illuminate, not impress.
- What’s your hook? Grab your audience’s attention.
- Use clear, accurate, and simple language.
- Use graphics to highlight points.
- Avoid jargon.

Communication Guidelines
- Organize around major themes or research questions.
- Decide on your message and stick to it.
- Leave time for reviews by experts and cold readers.
- Leave time for the necessary revisions.

Communication Guidelines
- Provide information about your research methods so others can judge the study’s credibility.
- Always state the limitations of the study.
- For reports: place technical information in an appendix, and provide an executive summary for busy readers.

Oral Presentations
- Consider the needs of the audience.
- Consider the requirements of the situation: high tech or low tech? Formal or informal?
- PowerPoint slides as appropriate: simple, clear, large font, no distracting bells and whistles. Not too many, not too few: just right.
- Handouts as needed.

Visual Display of Data
- Tables: better for presenting data.
- Graphs and charts: more effective in communicating the message.
- Impact: increases audience acceptance, increases memory retention, shows the big picture and patterns, and provides visual relief from the narrative.

Ethics and Values
- Tell the truth, always.
- Interpretations can have spin: there may be no agreed criteria for what is “good.”
- Build in checks to ensure accuracy.
- Be honest about the limitations of your research.
- Do no harm to the subjects of your research.

Ethics and Values
- Don’t take cheap shots at other people’s research.
- Don’t accuse anyone of wrongdoing without evidence.
- Be careful not to harm the people who benefit from a program by concluding that the program does not work when all you know is that your study was not able to find an impact.

Creative Commons
- This PowerPoint is meant to be used and shared with attribution.
- Please provide feedback.
- If you make changes, please share them freely and send me a copy of the changes: Johnsong62@gmail.com
- Visit www.creativecommons.org for more information.