Working Group 1 HECRTF Workshop June 14

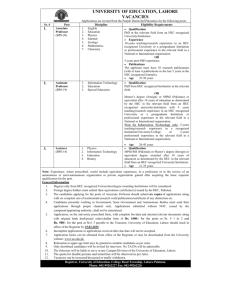

Working Group 1
Enabling Technologies
Chair: Sheila Vaidya
Vice Chair: Stu Feldman

WG 1 – Enabling Technologies Charter
• Charter
  – Establish the basic technologies that may provide the foundation for important advances in HEC capability, and determine the critical tasks required before the end of this decade to realize their potential. Such technologies include hardware devices or components and the basic software approaches and components needed to realize advanced HEC capabilities.
• Chair
  – Sheila Vaidya, Lawrence Livermore National Laboratory
• Vice-Chair
  – Stuart Feldman, IBM

WG 1 – Enabling Technologies Guidelines and Questions
• As input to HECRTF charge (1a), please provide information about key technologies that must be advanced to strengthen the foundation for developing new generations of HEC systems. Include discussion of promising novel hardware and software technologies with potential pay-off for HEC
• Provide brief technology maturity roadmaps and investments, with discussion of costs to develop these technologies
• Discuss technology dependencies and risks (for example, does the roadmap depend on technologies yet to be developed?)
• Example topics:
  – semiconductors, memory (e.g. MRAM), networks (e.g. optical), packaging/cooling, novel logic devices (e.g. RSFQ), alternative computing models

Working Group Participants
• Kamal Abdali, NSF
• Fernand Bedard, NSA
• Herbert Bennett, NIST
• Ivo Bolsens, XILINX
• Jon Boyens, DOC
• Bob Brodersen, UC Berkeley
• Yolanda Comedy, IBM
• Loring Craymer, JPL
• Bronis R. de Supinski, LLNL
• Martin Deneroff, SGI
• Stuart Feldman, IBM (VICECHAIR)
• Sue Fratkin, CASC
• David Fuller, JNIC/Raytheon
• Gary Hughes, NSA
• Tyce McLarty, LLNL
• Kevin Martin, Georgia Tech
• Virginia Moore, NCO/ITRD
• Ahmed Sameh, Purdue
• John Spargo, Northrop Grumman
• William Thigpen, NASA
• Sheila Vaidya, LLNL (CHAIR)
• Uzi Vishkin, U Maryland
• Steven Wallach, Chiaro

Timescales
• 0-5 years
  – Suitable for deployment in high-end systems within next 5 years
    • Implies that the technology has been tried and tested in a systems context
    • Requires additional investment beyond commercial industry
• 5-10 years
  – Suitable for deployment in high-end systems in 10 years
    • Implies that the component has been studied and feasibility shown
    • Requires system embodiment and growing investment
• 10+ years
  – New research, not yet reduced to practice
    • Usefulness in systems not yet demonstrated

Interconnects
Passive
• 0-5
  – Optical networking
  – Serial optical interface
• 5-10
  – High-density optical networking
  – Optical packet switching
• 10+
  – Scalability (node density, bandwidth)
Active
• 0-5
  – Electronic cross-bar switch
  – Network processing on board
• 5-10
  – Data Vortex
  – Superconducting cross-bar switch
• 10+

Power/Thermal Management, Packaging
• 0-5
  – Optimization for power efficiency
  – 2.5-D packaging
  – Liquid cooling (e.g., spray)
• 5-10
  – 3-D packaging and cooling (microchannel)
  – Active temperature response
• 10+
  – Higher scalability concepts (improving OPS/W)

Single Chip Architecture
• 0-5
  – Power-efficient designs
  – System on Chip; Processor-in-Memory
  – Reconfigurable circuits
  – Fine-grained irregular parallel computing
• 5-10
  – Adaptive architecture
  – Optical clock distribution
  – Asynchronous designs
• 10+

Memory
Main Memory
• 0-5
  – Optimized memory hierarchy
  – Smart memory controllers
• 5-10
  – 3-D memory (e.g., MRAM)
• 10+
  – Nanoelectronics
  – Molecular electronics
Storage & I/O
• 0-5
  – Object-based storage
  – Remote DMA
  – I/O controllers (MPI, etc.)
• 5-10
  – Software for “cluster” storage access to
  – MRAM, holographic, MEMS, STM, E-beam
• 10+
  – Spectral hole burning
  – Molecular electronics

Device Technologies
• 0-5
  – Silicon on Insulator, SiGe, mixed III-V devices
  – Integrated electro-optic and high-speed electronics
• 5-10
  – Low-temperature CMOS
  – Superconducting - RSFQ
• 10+
  – Nanotechnologies
  – Spintronics

Algorithms, SW-HW Tools
• 0-5
  – Compiler innovations for new architectures
  – Tools for robustness (e.g., delay, fault tolerance)
  – Low-overhead coordination mechanisms
  – Performance monitors
  – Sparse matrix innovations
• 5-10
  – Very High Level Language hardware support
  – Real-time performance monitoring and feedback
  – PRAM (Parallel Random Access Machine model)
• 10+
  – Ideas too numerous to select

Generic Needs
• Sharing
  – NNIN-like consortia
    • National Nanotechnology Infrastructure Network
  – Custom hardware production
  – Intellectual Property policies (open?)
• Tools for
  – Design for Testability
  – Physical design
  – Testing and Verification
  – Simulation
  – Programmability

High-Impact Themes
• 0-5
  – Show value of HEC solutions to the commercial sector
  – Facilitate sharing and collaboration across HEC community
  – Technology
    • Power/thermal management
    • Optical networking
• 5-10
  – Long-term consistent investment in HEC
  – Technology
    • 3-D Packaging
    • New devices (MRAM, MEMS, RSFQ)
    • Power/thermal management & Optical – Ongoing
• 10+ years
  – Continued research for HEC

Working Group 2
COTS-Based Architecture
Chair: Walt Brooks
Vice Chair: Steve Reinhardt

WG2 – Architecture: COTS-based Charter
• Charter
  – Determine the capability roadmap of anticipated COTS-based HEC system architectures through the end of the decade. Identify those critical hardware and software technology and architecture developments required to both sustain continued growth and enhance user support.
• Chair
  – Walt Brooks, NASA Ames Research Center
• Vice-Chair
  – Steve Reinhardt, SGI

WG2 – Architecture: COTS-based Guidelines and Questions
• Identify opportunities and challenges for anticipated COTS-based HEC systems architectures through the decade and determine their capability roadmap.
• Include alternative execution models, support mechanisms, local element and system structures, and system engineering factors to accelerate rate of sustained performance gain (time to solution), performance to cost, programmability, and robustness.
• Identify those critical hardware and software technology and architecture developments required to both sustain continued growth and enhance user support.
• Example topics:
  – microprocessors, memory, wire and optical networks, packaging, cooling, power distribution, reliability, maintenance, cost, size

Working Group Participants
• Walt Brooks (chair)
• Rob Schreiber (L)
• Yuefan Deng
• Steven Gottlieb
• Charles Lefurgy
• John Ziebarth
• Stephen Wheat
• Guang R. Gao
• Burton Smith
• Steve Reinhardt (co-chair)
• Bill Kramer (L)
• Don Dossa
• Dick Hildebrandt
• Greg Lindahl
• Tom McWilliams
• Curt Janseen
• Erik DeBenedictis

Assumptions/Definitions
• Definition of “COTS based”
  – Using systems originally intended for enterprise or individual use
  – Building blocks: commodity processors, commodity memory and commodity disks
  – Somebody else builds the hardware, and you have limited influence over it
  – Examples
    • IN: Red Storm, Blue Planet, Altix
    • OUT: X1, Origins, SX-6
• Givens
  – Massive disk storage (object stores)
  – Fast wires (SERDES-driven)
  – Heterogeneous systems (processors)

Primary Technical Findings
• Improve memory bandwidth
  – We have to be patient in the short term; for the next 2-3 years the die has been cast
  – Sustained memory bandwidth is not increasing fast enough
  – Judicious investment in the COTS vendors to effect 2008
• Improve the interconnects: “connecting to the interconnect”
  – Easier to influence than memory bandwidth
  – Connecting through I/O is too slow; we need to connect to the CPU at memory-equivalent speeds
    • One example is HyperTransport, which represents a memory-grade interconnect in terms of bandwidth and is a well-defined I/F; others are under development
• Provide ability for heterogeneous COTS-based systems
  – E.g., FPGA, ASIC, … in the fabric
    • FPGA allows tightly coupled research on emerging execution models and architectural ideas without going to foundry
    • Must have the software to support programming ease for FPGA

Technology Influence
[Figure: direct and indirect lead time and cost to design, by component level (I/O, board/components, nodes/frames, interconnect, CPU/chips). Values in the extracted text: lead times of 1 year, 4-6 years, and 10-15 years; costs of $0.2M, $5M, $10M, $50M, $5-100M, and $300-1,000M. The mapping of values to components is not recoverable.]

Programmatic Approaches
• Develop a Government-wide coordinated method for direct influence with the vendors to make “design” changes
  – Less influence with COTS mfrs, more with COTS-based vendors
  – Recognize that commercial market is the primary driver for COTS
    • “Go” in early
• Develop joint Government research objectives; must go to vendors with a short, focused list of HEC priorities
  – Where possible find common interests with the industries that drive the commodity market
  – “Software”: we may have more influence
• Fund long-term research
  – Academic research must have access to systems at scale in order to do relevant research
  – Strategy for moving University research into the market
• Government must be an early adopter
  – Risk sharing with emerging systems

Software Issues
• Not clear that these are part of our charter, but would like to be sure they are handled
  – Scaling “Linux” to 1000’s of processors
    • Administrated at full scale for capability computing
  – Scalable file systems
  – Need compiler work to keep pace
  – Managing Open Source
    • Coordinating release implementation
    • Open source multi-vendor approach: O/S, languages, libraries, debuggers…
  – Overhead of MPI is going to swamp the interconnect and hamper scaling
    • Need a lower overhead approach to message passing

Parallel Computing
• Parallel computing is (now) the path to speed
• People think the problem is solved but it’s not
• Need new benchmarks that expose true performance of COTS
• If the government is willing to invest early, even at the chip level, there is the potential to influence design in a way that makes scaling “commodity” systems easier
• Parallel computers to be much more general purpose than they are today
  – More useful, easier to use, and better balanced
  – Continued growth of computing may depend on it
  – To get significantly more performance, we must treat parallel computing as first class
  – COTS processors especially will be influenced only by a generally applicable approach

Themes From White Papers
• Broad Themes
  – Exploit commodity
  – One system doesn’t fit all applications; for a specific family of codes, commodity can be a good solution
  – Unique topology and algorithmic approaches allow exploitation of current technology
• Novel uses of current technology (overlap with Panel 3)
  – RCM technology: FPGA faster, lower power with multiple units; hybrid FPGA: core is the traditional processor on chip with logic units; need H/W architect for RCM; apps suitable for RCM; RCM are about ease of programming
  – Streaming technology utilizing commercial chips
  – Fine-grained multithreading
• Supporting Technology (overlap with Panel 1)
  – Self-managing, self-aware systems
  – MRAM, EUVL, micro-channel
  – Power-aware computing
  – High-end interconnect and scalable file systems
  – High performance interconnect technology, optical and others, that can scale to large systems
  – Systems software that scales up gracefully to enormous processor count with reliability, efficiency, and ease of
• There is a natural layering of technologies involved in a high-performance machine: the basic silicon, the cell boards and shared memory nodes, the cluster interconnect, the racks, the cooling, the OS kernel, the added OS services, the runtime libraries, the compilers and languages, the application libraries.
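
The Software Issues slide's concern that message-passing overhead can swamp the interconnect can be made concrete with the standard latency-bandwidth cost model, in which sending n bytes costs a fixed per-message overhead plus n divided by the link bandwidth. The sketch below is illustrative only: the function names and the 5 microsecond / 1 GB/s figures are assumptions chosen for round numbers, not measurements reported by the working group.

```python
def effective_bandwidth_fraction(message_bytes: float,
                                 overhead_s: float,
                                 bandwidth_bytes_per_s: float) -> float:
    """Fraction of peak link bandwidth achieved by one message.

    Cost model: time = overhead + message_bytes / bandwidth.
    """
    wire_time = message_bytes / bandwidth_bytes_per_s
    return wire_time / (overhead_s + wire_time)


def half_bandwidth_message_bytes(overhead_s: float,
                                 bandwidth_bytes_per_s: float) -> float:
    """Message size at which half of peak bandwidth is reached.

    At this size the wire time equals the fixed overhead.
    """
    return overhead_s * bandwidth_bytes_per_s


if __name__ == "__main__":
    # Hypothetical values: 5 microseconds of per-message software overhead
    # on a 1 GB/s link.
    overhead = 5e-6
    bandwidth = 1e9
    for size in (64, 1024, 65536):
        frac = effective_bandwidth_fraction(size, overhead, bandwidth)
        print(f"{size:6d} bytes: {frac:.1%} of peak")
    n_half = half_bandwidth_message_bytes(overhead, bandwidth)
    print(f"half of peak reached near {n_half:.0f} bytes")
```

Under these assumed numbers, small messages use only a few percent of the link, which is why lowering the fixed overhead matters more than raising raw bandwidth for fine-grained communication.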
Relevant White Papers
18 of the 64/80 papers have some relevance to our topic
• 6 • 10 • 12 • 16 • 17 • 31 • 33 • 39 • 45 • 46 • 47 • 50 • 65 • 68 • 72 • 75 • 80

Working Group 3: Custom-Based Architectures
Chair: Peter Kogge
Vice Chair: Thomas Sterling

WG3 – Architecture: Custom based Charter
• Charter
  – Identify opportunities and challenges for innovative HEC system architectures, including alternative execution models, support mechanisms, local element and system structures, and system engineering factors to accelerate rate of sustained performance gain (time to solution), performance to cost, programmability, and robustness. Establish a roadmap of advanced-concept alternative architectures likely to deliver dramatic improvements to user applications through the end of the decade. Specify those critical developments achievable through custom design necessary to realize their potential.
• Chair
  – Peter Kogge, Notre Dame
• Vice-Chair
  – Thomas Sterling, California Institute of Technology & Jet Propulsion Laboratory

WG3 – Architecture: Custom based Guidelines and Questions
• Present driver requirements and opportunities for innovative architectures demanding custom design
• Identify key research opportunities in advanced concepts for HEC architecture
• Determine research and development challenges to promising HEC architecture strategies. Project brief roadmap of potential developments and impact through the end of the decade.
• Specify impact and requirements of future architectures on system software and programming environments.
• Example topics:
  – System-on-a-chip (SOC), Processor-in-memory (PIM), streaming, vectors, multithreading, smart networks, execution models, efficiency factors, resource management, memory consistency, synchronization

Working Group Participants
• Duncan Buell, U. So. Carolina
• George Cotter, NSA
• William Dally, Stanford Un.
• James Davenport, BNL
• Jack Dennis, MIT
• Mootaz Elnozahy, IBM
• Bill Feiereisen, LANL
• Michael Henesey, SRC Computers
• David Fuller, JNIC
• David Kahaner, ATIP
• Peter Kogge, U. Notre Dame
• Norm Kreisman, DOE
• Grant Miller, NCO
• Jose Munoz, NNSA
• Steve Scott, Cray
• Vason Srini, UC Berkeley
• Thomas Sterling, Caltech/JPL
• Gus Uht, U. RI
• Keith Underwood, SNL
• John Wawrzynek, UC Berkeley

Charter (from Charge)
• Identify opportunities & challenges for innovative HEC system architectures, including
  – alternative execution models,
  – support mechanisms,
  – local element and system structures, and
  – system engineering factors
  to accelerate
  – rate of sustained performance gain (time to solution),
  – performance to cost,
  – programmability, and
  – robustness.
• Establish roadmap of advanced-concept alternative architectures likely to deliver dramatic improvements to user applications through the end of the decade.
• Specify those critical developments achievable through custom design necessary to realize their potential.

Original Guidelines and Questions
• Present driver requirements and opportunities for innovative architectures demanding custom design
• Identify key research opportunities in advanced concepts for HEC architecture
• Determine research and development challenges to promising HEC architecture strategies.
• Project brief roadmap of potential developments and impact through the end of the decade.
• Specify impact and requirements of future architectures on system software and programming environments.
• (new) What role should/do universities play in developments in this area

Outline
• What is Custom Architecture (CA)
• Endgame Objectives, Benefits, & Challenges
• Fundamental Opportunities Delivered by CA
• Road Map
• Summary Findings
• Difficult fundamental challenges
• Roles of Universities

What Is Custom Architecture?
• Major components designed explicitly and system balanced for support of scalable, highly parallel HEC systems
• Exploits performance opportunities afforded by device technologies through innovative structures
• Addresses sources of performance degradation (inefficiencies) through specialty hardware and software mechanisms
• Enable higher HEC programming productivity through enhanced execution models
• Should incorporate COTS components where useful without sacrifice of performance

Endgame Objectives
• Enable solution of
  – Problems we can’t solve now
  – And larger versions of ones we can solve now
• Base economic model: provides 10 – 100X ops/Lifecycle $ AT SCALE
  – Vs inefficiencies of COTS
• Significant reduction in real cost of programming
  – Focus on sustained performance, not peak

Strategic Benefits
• Promotes architecture diversity
• Performance: ops & bandwidth over COTS
  – Peak: 10X – 100X through FPU proliferation
  – Memory bandwidth 10X-100X through network and signaling technology
  – Focus on sustainable
• High Efficiency
  – Dynamic latency hiding
  – High system bandwidth and low latency
  – Low overhead
• Enhanced Programmability
  – Reduced barriers to performance tuning
  – Enables use of programming models that simplify programming and eliminate sources of errors
• Scalability
  – Exploits parallelism at all levels of parallelism
• Cost, size, and power
  – High compute density

Challenges To Custom
• Small market and limited opportunity to exploit economy of scale
• Development lead time
• Incompatibility with standard ISAs
• Difficulty of porting legacy codes
• Training of users in new execution models
• Unproven in the field
• Need to develop new software infrastructure
• Less frequent technology refresh
• Lack of vendor interest in leading edge small volumes

Fundamental Technical Opportunities Enabled by CA
• Enhanced Locality – Increasing Computation/Communication Demand
• Exceptional global bandwidth
• Architectures that enable utilization of global bandwidth
• Execution models that enable compiler/programmer to use the above

Enhanced Locality – Increasing Computation/Communication Demand
Mechanisms
• Spatial computation via reconfigurable logic
• Streams that capture physical locality by observing temporal locality
• Vectors – scalability and locality microarchitecture enhancements
• PIM – capture spatial locality via high bandwidth local memory (low latency)
• Deep and explicit register & memory hierarchies
  – With software management of hierarchies
Technologies
• Chip stacking to increase local B/W

Providing Exceptional Global Bandwidth
Mechanisms:
• High radix networks
• Non-blocking, bufferless topologies
• Hardware congestion control
• Compiler scheduled routing
Technologies:
• High speed signaling (system-oriented)
  – Optical, electrical, heterogeneous (e.g. VCSEL)
• Optical switching & routing
• High bandwidth memory device, high density
Notes:
• Routing & flow control are nearing optimal

Architectures that Enable Use of Global Bandwidth
Note: This addresses providing the traffic stream to utilize the enhanced network
• Stream and Vectors
• Multi-threading (SMT)
• Global shared memory (a communication overhead reducer)
• Low overhead message passing
• Augmenting microprocessors to enhance additional requests (T3E, Impulse)
• Prefetch mechanisms

Execution Models
Note: A good model should:
  – Expose parallelism to compiler & system s/w
  – Provide explicit performance cost model for key operations
  – Not constrain ability to achieve high performance
  – Ease of programming
• Spatial direct mapped hardware
• Resource flow
• Streams
• Flat vs Dist. Memory (UMA/NUMA vs M.P.)
• New memory semantics
• CAF and UPC, first good step
• Low overhead synchronization mechanisms
• PIM-enabled: Traveling threads, message-driven, active pages, ...
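
The note that architectures must provide "the traffic stream to utilize the enhanced network" follows from Little's Law: the amount of data that must be in flight equals bandwidth times latency. The sketch below estimates how many outstanding requests, and how many hardware threads, that implies. It is a back-of-the-envelope aid, not a model of any machine named in the slides; the function names and all numeric values are hypothetical.

```python
def ceil_div(numerator: int, denominator: int) -> int:
    """Integer ceiling division for positive integers."""
    return -(-numerator // denominator)


def requests_in_flight(bandwidth_bytes_per_s: int,
                       latency_ns: int,
                       request_bytes: int) -> int:
    """Outstanding requests needed to keep a link or memory system busy.

    Little's Law: bytes in flight = bandwidth * latency. Dividing by the
    request size gives the number of concurrent requests required.
    """
    return ceil_div(bandwidth_bytes_per_s * latency_ns,
                    1_000_000_000 * request_bytes)


def threads_needed(requests: int, outstanding_per_thread: int) -> int:
    """Threads required if each can keep a fixed number of requests pending."""
    return ceil_div(requests, outstanding_per_thread)


if __name__ == "__main__":
    # Hypothetical node: 10 GB/s of global bandwidth, 500 ns round-trip
    # latency, 64-byte requests, 8 outstanding requests per thread.
    needed = requests_in_flight(10_000_000_000, 500, 64)
    print("requests in flight:", needed)
    print("threads needed:", threads_needed(needed, 8))
```

Multithreading, vectors, streams, and prefetching are all ways of generating this concurrency; the calculation only says how much of it is required for a given bandwidth and latency.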
Roadmap: When to Expect CA Deployment
• 5 Years or less
  – Must have relatively mature support s/w (and/or “friendly users”)
• 5-10 years
  – Still open research issues in tools & system s/w
  – Approaching 10 years if requires mind set change in applications programmers
• 10-15 years:
  – After 2015 all that’s left in silicon is architecture

Roadmap - 5 Year Period
• Significant research prototype examples
  – Berkeley Emulation Engine: $0.4M/TF by 2004 on Immersed Boundary method codes
  – QCDOC: $1M/TF by 2004
  – Merrimac Streaming: $40K/TF by 2006
  – Note: several companies are developing custom architecture roadmaps

Roadmap - 5 Years or Less
Technologies Ready for Insertion
• High bandwidth network technology can be inserted
  – No software changes
• SMT: will be ubiquitous within 5 years
  – But will vendors emphasize single thread performance in lieu of supporting increased parallelism
• Spatial direct mapped approach

Roadmap - 5 to 10 Years
• All prior prototypes could be expanded to reach PF sustained at competitive recurring $
• Industry is targeting sustained Petaflops
  – If properly funded
• Need to encourage transfer of research results
• Virtually all of prior technology opportunities will be deployable
  – Drastic changes to programming will limit adoption

Roadmap: 10-15 Years
• Silicon scaling at sunset
  – Circuit, packaging, architecture, and software opportunities remain
• Need to start looking now at architectures that mesh with end of silicon roadmap and non-silicon technologies
  – Continue exponential scaling of performance
  – Radically different timing/RAS considerations
  – Spin out: how to use faulty silicon

Findings
• Significant CA-driven opportunities for enhanced Performance/Programmability
  – 10-100X potential above COTS at the same time
• Multiple, CA-driven innovations identified for near & medium term
  – Near term: multiple proof of concept
  – Medium term: deployment @ petaflops scale
• Above potential will not materialize in current funding culture

Findings (2)
• No one side of the community can realize opportunities of future Custom Architecture:
  – Strong peer-peer partnering needed between industry, national labs, & academia
  – Restart pipeline of HEC & parallel-oriented grad students & faculty
• Creativity in system S/W & programming environments must support, track, & reflect creativity in HEC architecture

Findings (3)
• Need to start now preparing for end of Moore’s Law and transition into new technologies
  – If done right, potential for significant trickle back to silicon

Fundamentally Difficult Challenges
Technical
• Newer applications for HEC
• OS geared specifically to highly scaled systems
• How to design HEC for upgradable
• High Latency, low bandwidth ratios of memory chips and systems
• File systems
• Reliability with unreliable components at large scale
• Fundamentally parallel ISAs

Fundamentally Difficult Challenges
Cultural
• Instilling change into programming model
• Software inertia
• How should HEC be viewed
  – As a service vs product
• I/O, SAN, Storage systems for HEC
• How to define requirements

Universities As A Critical Resource
• Provide innovative concepts and long term vision
• Provide students
• Keeps the research pipeline full
• Good at early simulations and prototype tools
• Students no longer commonly exposed to massive parallelism
• Parallel computing architecture students in significant decline, as well as those interested in HEC
• Difficult to roll leading edge chips but only place for 1st generation prototypes of novel concepts
• Don’t do well at attacking the hard problems of moving beyond 1st prototype, or productizing
• Soft money makes it hard to keep teams together

Working Group 4: Runtime and Operating Systems
Chair: Rick Stevens
Vice Chair: Ron Brightwell

WG 4 – Runtime and OS Charter
• Charter
  – Establish baseline capabilities required in the operating systems for projected HEC systems scaled to the end of this decade and determine the critical advances that must be undertaken to meet these goals. Examine the potential, expanded role of low-level runtime system components in support of alternative system architectures.
• Chair
  – Rick Stevens, Argonne National Laboratory
• Vice-Chair
  – Ron Brightwell, Sandia National Laboratories

WG 4 – Runtime and OS Guidelines and Questions
• Establish principal functional requirements of operating systems for HEC systems of the end of the decade
• Identify current limitations of OS software and determine initiatives required to address them
• Discuss role of open source software for HEC community needs and issues associated with development/maintenance/use of open source
• Examine future role of runtime system software in the management/use of HEC systems containing from thousands to millions of nodes.
• Example topics:
  – file systems, open source software, Linux, job and task scheduling, security, grid-interoperable, memory management, fault tolerance, checkpoint/restart, synchronization, runtime, I/O systems

Working Group Participants
• Ron Brightwell
• Neil Pundit
• Jeff Brown
• Lee Wand
• Gary Girder
• Ron Minnich
• Leslie Hart
• DK Panda
• Thuc Hoang
• Bob Balance
• Barney McCabe
• Wes Felter
• Keshav Pingali
• Deborah Crawford
• Asaph Zemach
• Dan Reed
• Rick Stevens

Our Charge
• Establish Principal Functional Requirements of OS/runtime for end-of-the-decade systems
• Assumptions:
  – Systems with 100K-1M nodes (fuzzy notion of node), plus or minus an order of magnitude (SMPs, etc.)
  – COTS and custom targets included
• Role of Open Source in enabling progress
• Formulate critical recommendations on research objectives to address the requirements

Critical Topics
• Operating System and Runtime APIs
• High-Performance Hardware Abstraction
• Scalable Resource Management
• File Systems and Data Management
• Parallel I/O and External Networks
• Fault Management
• Configuration Management
• OS Portability and Development Productivity
• Programming Model Support
• Security
• OS and Systems Software Development Test beds
• Role of Open Source

Recurring Themes
• Limitations of UNIX
• Blending of OS and runtime models
• Coupling apps and OS via feedback mechanisms
• Performance transparency (visibility)
• Minimalism and enabling applications access to HW
• Desire for more hardware support for OS functions
• “Clusters” are the current OS/runtime targets
• Lack of full-scale test beds limiting progress
• OS “people” need to be involved in design decisions

OS APIs (e.g. POSIX)
• Findings:
  – POSIX APIs not adequate for future systems
    • Lack of performance transparency
    • Global state assumed in POSIX semantics
• Recommendations:
  – Determine a subset of POSIX APIs suitable for high-performance computing at scale
  – New API development addressing scalability and performance transparency
    • Explicitly support research in developing non-POSIX compatible

Hardware Abstractions
• Findings:
  – HAs needed for portability and improved resource management
    • Remove dependence on physical configurations
  – Virtual processor abstractions (e.g. MPI processes)
    • Virtualization to improve resource management
  – Virtual PIMs, etc. for improved programming model support
• Recommendations:
  – Research to determine what are the right candidates for virtualization
  – Develop low overhead mechanisms for enabling abstraction
  – Making abstraction layers visible and optional where needed

Scalable Resource Management
• Findings:
  – Resource allocation and scheduling at the system and node level are critical for large-scale HEC systems
  – Memory hierarchy management will become increasingly important
  – Dynamic process creation and dynamic resource management increasingly important
  – Systems most likely to be space-shared
  – OS support required for management of shared resources (network, I/O, etc.)

Scalable Resource Management
• Recommendations:
  – Investigate new models for resource management
    • Enabling user applications to have as much control of low-level resource management where needed
    • Compute-Node model
      – Minimal runtime and App can bring as much or as little OS with them
    • Systems/Services Nodes
      – Need more OS services to manage shared resources
    • I/O systems and fabric need to be managed
  – Explore cooperative services model
    • Some runtime and traditional OS combined into a cooperative scheme, offload services not considered critical for HEC
  – Increase the potential use of dynamic resource management at all levels

Data Management and File Systems
• Findings:
  – The POSIX model for I/O is incompatible with future systems
  – The passive file system model may also not be compatible with requirements for future systems
• Recommendations:
  – Develop an alternative (to POSIX) API for file system
  – Investigate scalable authentication and authorization schemes for data
  – Research scalable schemes for handling file systems (data management) metadata
  – Consider moving processing into the I/O paths (storage devices)

Parallel and Network I/O
• Findings:
  – I/O channels will be highly parallel and shared (multiple users/jobs)
  – External network and grid interconnects will be highly parallel
  – The OS will need to manage I/O and network connections as a shared resource (even in space shared systems)
• Recommendations:
  – Develop new scalable approaches to supporting I/O and network interfaces (near term)
  – Consider integrating I/O and network interface protocols (medium term)
  – Develop HEC appropriate system interfaces to grid services (long term)

Fault Management
• Findings:
  – Fault management is increasingly critical for HEC systems
  – The performance impacts of fault detection and management may be significant and unexpected
  – Automatic fault recovery may not be appropriate in some cases
  – Fault prediction will become increasingly critical
• Recommendations:
  – Efficient schemes for fault detection and prediction
    • What can be done in hardware?
  – Improved runtime handling (graceful degradation) of faults
  – Investigate integration of fault management, diagnostics with advanced configuration management
  – Autonomic computing ideas relevant here

Configuration Management
• Findings:
  – Scalability of management tools needs to be improved
    • Manage to a provable state (database driven management)
  – Support interrupted firmware/software update cycles (surviving partial updates)
  – New models of configuration (away from file based systems) may be important directions for the future
• Recommendations:
  – New models for systems configuration needed
  – Scalability research (scale invariance, abstractions)
  – Develop interruptible update schemes (steal from database technologies)
  – Fall back, fall forward
  – Automatic local consistency

OS Portability
• Findings:
  – Improving OS portability and OS/runtime code reuse will improve OS development productivity
    • Device drivers (abstractions)
    • Shared code base and modular software technology
• Recommendations:
  – Develop new requirements for device driver interfaces
    • Support unification where possible and where performance permits
  – Consider developing a common runtime execution software platform
  – Research toward improving use of modularization and components in OS/runtime development

OS Security for HEC Systems
• Findings:
  – Current (nearly 30 year old) Unix security model has significant limitations
  – Multi-level Security (orange book like) may be a requirement for some HEC systems
  – Current Unix security model is deeply coupled to current OS semantics and limits scalability in many cases
• Recommendations:
  – Active resource models
    • Rootless, UIDless, etc.
  – Eros, Plan 9 models possible starting point
  – Fund research explicitly different from UNIX

Programming Model Support in OS
• Findings:
  – MPI has productivity limitations, but is the current standard for portable programming; need to push beyond MPI
  – UPC and CAF considered good candidates for improving productivity and probably should be targets for improved OS support
• Recommendations:
  – Determine OS level support needed for UPC and CAF and accelerate support for these (near term)
  – Performance and productivity tool support (debuggers, performance tools, etc.)

Testbeds for Runtime and OS
• Findings:
  – Lack of full scale test beds has slowed research in scalable OS and systems software
  – Test beds need to be configured to support aggressive testing and development
• Recommendations:
  – Establish one or more full scale (1,000’s nodes) test beds for runtime, OS and Systems software research communities
  – Make test beds available to University, Laboratory and Commercial developers

The Role of Open Source
• Findings:
  – Open source model for licensing and sharing of software valuable for HEC OS and runtime development
  – Open source (open community) development model may not be appropriate for HEC OS development
  – The Open Source contract model may prove useful (LUSTRE model)
• Recommendations:
  – Encourage use of open source to increase leverage in OS development
  – Consider creating and funding an Institute for HEC OS/runtime Open Source development and maintenance (keeping the HEC community in control of key software systems)

Working Group 5
Programming Environments and Tools
Chair: Dennis Gannon
Vice Chair: Rich Hirsh

WG5 – Programming Environments and Tools Charter
• Charter
  – Address programming environments for both existing legacy codes and alternative programming models to maintain continuity of current practices, while also enabling advances in software development, debugging, performance tuning, maintenance, interoperability and robustness. Establish key strategies and initiatives required to improve time to solution and ensure the viability and sustainability of applying HEC systems by the end of the decade.
• Chair
  – Dennis Gannon, Indiana University
• Vice-Chair
  – Rich Hirsh, NSF

WG5 – Programming Environments and Tools Guidelines and Questions
• Assume two possible paths to future programming environments:
  – incremental evolution of existing programming languages and tools consistent with portability of legacy codes
  – innovative programming models that dramatically advance user productivity and system efficiency/performance
• Specify requirements of programming environments and programmer training consistent with incremental evolution, including legacy applications
• Identify required attributes and opportunities of innovative programming methodologies for future HEC systems
• Determine key initiatives to improve productivity and reduce time-to-solution along both paths to future programming environments
• Example topics:
  – Programming models, portability, debugging, performance tuning, compilers

Charter
• Address programming environments for both existing legacy codes and alternative programming models to maintain continuity of current practices, while also enabling advances in software development, debugging, performance tuning, maintenance, interoperability and robustness.
• Establish key strategies and initiatives required to improve time to solution and ensure the viability and sustainability of applying HEC systems by the end of the decade.
Guidelines • Assume two possible paths to future programming environments: – incremental evolution of existing programming languages and tools consistent with portability of legacy codes – innovative programming models that dramatically advance user productivity and system efficiency/performance • Specify requirements of programming environments and programmer training consistent with incremental evolution, including legacy applications • Identify required attributes and opportunities of innovative programming methodologies for future HEC systems • Determine key initiatives to improve productivity and reduce time-to-solution along both paths to future programming environments Key Findings • Revitalizing evolutionary progress requires a dramatically increased investment in – Improving the quality/availability/usability of software development lifecycle tools – Building interoperable libraries and component/application frameworks that simplify the development of HEC applications • Revitalizing basic research in revolutionary HEC programming technology to improve time-to-solution: – Higher Level programming models for HEC software developers that improve productivity – Research on the hardware/software boundary to improve HEC application performance The Strategy • Need an attitude change about software funding for HEC. – Software is a major cost component for all modern complex technologies. • Mission critical and basic research HEC software is not provided by industry – Need federally funded, management and coordination of the development of high end software tools. – Funding is needed for • Basic research and software prototypes • Technology Transfer: – moving successful research prototypes into real production quality software. – Structural changes are needed to support sustained engineering • Software capitalization program • Institute for HEC advanced software development and support. – Could be a cooperative effort between industry, labs, universities. 
The Strategy • A new approach is needed to education for HEC. – A national curriculum is needed for high performance computing. – Continuing education and building interdisciplinary science research. – A national HEC testbed for education and research The State of the Art in HEC Programming • Languages (used in Legacy software) – A blend of traditional scientific programming languages, scripting languages plus parallel communication libraries and parallel extensions • (Fortran 66-95, C++, C, Python, Matlab )+MPI+OpenMP/threads, HPF • Programming Models in current use – Traditional serial programming – Global address space or partitioned memory space (mpi+on linux cluster) – SPMD vs MPMD The Evolutionary Path Forward Already Exists • For Languages – Co-array Fortran, UPC, Adaptive MPI, specialized C++ template libraries • For Models – Automatic parallelization of whole-program serial legacies no longer considered sufficient, • but it is important for code generation for procedure bodies on modern processors. – multi-paradigm parallel programming is desirable goal and within reach Short Term Needs • There is clearly very slow progress in evolving HEC software practices to new languages and programming models. The rest of the software industry is moving much faster. – What is the problem? – Scientists/engineers continue to use the old approaches because it is still perceived as the shortest path to the goal … a running code. • In the short term, we need – A major initiative to improve the software design, debugging, testing and maintenance environment for HEC systems The Components of a Solution • Our applications are rapidly evolving to multilanguage, multi-disciplinary, multi-paradigm software systems – High end computing has been shut out of a revolution in software tools • When tools have been available centers can’t afford to buy them. • Scientific programmers are not trained in software engineering. 
– For example, industrial quality build, configure and testing tools are not available for HEC applications/languages. • We need portability of software maintenance tools across HEC platforms. The Components of a Solution • We need a rapid evolution of all language processing tools – Extensible standards are needed; examples: language object file format, compiler intermediate forms. – Want complete interoperability of all software lifecycle tools. • Performance analysis should be part of every step of the life cycle of a parallel program – Feedback from program execution can drive automatic analysis and optimization. The Evolution of HEC Software Libraries • The increasing complexity of scientific software (multidisciplinary, multi-paradigm) has other side effects – Libraries are an essential way to encapsulate algorithmic complexity but • Parallel libraries are often difficult to compose because of low level conflicts over resources. • Libraries often require low-level flat interfaces. We need a mechanism to exchange more complex and interesting data structures. • Software Component Technology and Domain-specific application frameworks are one solution Components and Application Frameworks • Provide an approach to factoring legacy code into reusable components that can be flexibly composed. – Resource management is handled by the framework, and components encapsulate algorithmic functionality • Provide for polymorphism and evolvability. • Abstract the hardware/software boundary and allow better language independence/interoperability. • Testing/validating is made easier. Can ensure components can be trusted. • May enable a marketplace of software libraries and components for HEC systems. • However, no free lunch. – It may be faster to build a reliable application from reusable components, but will it have performance scalability? • Initial results indicate the answer is yes.
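The division of labor described on the Components and Application Frameworks slide (the framework manages resources, components hold only algorithmic code) can be illustrated with a small Python sketch. Everything named here (`Component`, `Scale`, `Offset`, `Framework`) is hypothetical and invented for illustration; it is not an API from the workshop or from any existing HEC component framework.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Protocol, Sequence


class Component(Protocol):
    """Hypothetical component interface: algorithmic work on one chunk of data."""

    def apply(self, chunk: Sequence[float]) -> list[float]: ...


class Scale:
    """Example component: multiply every value by a constant factor."""

    def __init__(self, factor: float) -> None:
        self.factor = factor

    def apply(self, chunk: Sequence[float]) -> list[float]:
        return [self.factor * x for x in chunk]


class Offset:
    """Example component: add a constant to every value."""

    def __init__(self, delta: float) -> None:
        self.delta = delta

    def apply(self, chunk: Sequence[float]) -> list[float]:
        return [x + self.delta for x in chunk]


class Framework:
    """Owns the worker pool and the data partitioning.

    Components never create threads or split data themselves, so they
    cannot conflict with each other over those resources.
    """

    def __init__(self, workers: int = 4) -> None:
        self.workers = workers
        self.pipeline: list[Component] = []

    def add(self, component: Component) -> "Framework":
        self.pipeline.append(component)
        return self

    def run(self, data: Sequence[float]) -> list[float]:
        # Ceiling division: at most `workers` chunks, each non-empty.
        size = max(1, -(-len(data) // self.workers))
        chunks = [data[i:i + size] for i in range(0, len(data), size)]

        def process(chunk: Sequence[float]) -> list[float]:
            result = list(chunk)
            for component in self.pipeline:
                result = component.apply(result)
            return result

        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            parts = list(pool.map(process, chunks))  # map preserves chunk order
        return [x for part in parts for x in part]
```

Because each component only sees a chunk, swapping the thread pool for another resource manager would not require touching `Scale` or `Offset`; whether such a design scales on a real HEC system is exactly the open question the slide raises.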
Revolutionary Approaches: The Long Range View • We still have problems getting efficiency out of large scale cluster architectures. • A long range program of research is needed to explore – New programming models for HEC systems – Scientific languages of the future: • Scientist does not think about concurrency but rather science. • Expressing concurrency as the natural parallelism in the problem. – Integrating a locality model into the problem can be the real challenge • Languages built from first principles to support the appropriate abstractions for scalable parallel scientific codes (e.g. ZPL). New Abstractions for Parallel Program Models • Approaches that promote automatic resource management. • Integration of user-domain abstractions into compilation. – Extensible Compilers • Telescoping languages – application level languages transformed into high level parallel languages transformed into … Locality may be part of new programming models. • To be able to publish and discover algorithms. • Automatic generation of missing components. • Integrating persistence into programming model. • Better support for transactional interactions in applications Programming Abstractions cont. • Better separation of data structure and algorithms. • Programming by contract – Quality of service, performance and correctness • Integration of declarative and procedural programming • Roundtrip engineering/model driven software – Reengineering: Specification to design and back • Type systems that have better support for architectural properties. Research on the Hardware/Software Boundary • Instruction set architecture – Performance counters, interaction with VM and Memory Hierarchy • Open bidirectional APIs between hardware and software • Programming methodology for reconfigurable hardware will be a significant challenge. • Changing memory consistency models depending on applications.
Research on the Hardware/Software Boundary • Predictability (scheduling, fine-grained timing, memory mapping) is essential for scalable optimization. • Fault Tolerance/awareness – For systems with millions of processors the applications/runtime/OS will need to be aware of and have mechanisms to deal with faults. – Need mechanisms to identify and deal with faults at every level. – Develop programming models that better support non-determinism (including desirable but boundedly-incorrect results). Hardware/Software Boundary: Memory Hierarchy • There are limits to what we can do with legacy code that has bad memory locality problems. • Software needs better control of data structure-to-hierarchy layout. • New solutions: – Cache aware/cache oblivious algorithms – Need more research on the role of virtual memory or file caching. – Threads can be used to hide latency. – New ways to think about data structures. • First class support for hierarchical data structures. – Streaming models – Integration of persistence and aggressive use of temporal locality. – Separation of algorithm and data structure, i.e. generic programming. – Support from system software/hardware to control aspects of memory hierarchy. Best Practices and Education • Education is crucial for the effective use of HEC systems. – Apps are more interdisciplinary • Requires interdisciplinary teams of people: – Drives need for better software engineering. – Application scientists do not need to be experts on parallel programming. • Multi-disciplinary teams including computer scientists. – Students need to be motivated to learn that performance is fun. • Updated curriculum to use HEC systems. • Educators/students need access to HEC systems – Need to increase support for student fellowships in HEC.
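As a concrete instance of the "cache oblivious algorithms" item on the Memory Hierarchy slide above, the following Python sketch transposes a matrix by recursive halving. It is an illustration only: the function names and the `base` cutoff are assumptions made here, not anything from the workshop, and Python lists of lists do not expose real cache behavior, so the sketch shows the recursion structure rather than a measurable speedup.

```python
def transpose_block(src, dst, r0, r1, c0, c1, base=16):
    """Write the transpose of src[r0:r1][c0:c1] into dst by recursive halving.

    The recursion always splits the longer dimension, so sub-blocks shrink
    until they fit in whatever cache level exists, without the code knowing
    any cache size. `base` only limits recursion overhead; it is not tuned
    to a particular machine.
    """
    rows, cols = r1 - r0, c1 - c0
    if rows <= base and cols <= base:
        # Small block: plain double loop.
        for i in range(r0, r1):
            for j in range(c0, c1):
                dst[j][i] = src[i][j]
    elif rows >= cols:
        mid = r0 + rows // 2
        transpose_block(src, dst, r0, mid, c0, c1, base)
        transpose_block(src, dst, mid, r1, c0, c1, base)
    else:
        mid = c0 + cols // 2
        transpose_block(src, dst, r0, r1, c0, mid, base)
        transpose_block(src, dst, r0, r1, mid, c1, base)


def transposed(matrix):
    """Return a new list-of-lists holding the transpose of `matrix`."""
    n_rows = len(matrix)
    n_cols = len(matrix[0]) if matrix else 0
    dst = [[0] * n_rows for _ in range(n_cols)]
    transpose_block(matrix, dst, 0, n_rows, 0, n_cols)
    return dst
```

A cache-aware variant would instead pick an explicit tile size from the known cache capacity; the recursive form trades that tuning knob for portability across memory hierarchies.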
Working Group 6 Performance Modeling, Metrics and Specifications Chair: David Bailey Vice Chair: Allan Snavely WG6 – Performance Modeling, Metrics, and Specifications Charter • Charter – Establish objectives of future performance metrics and measurement techniques to characterize system value and productivity to users and institutions. Identify strategies for evaluation including benchmarking of existing and proposed systems in support of user applications. Determine parameters for specification of system attributes and properties. • Chair – David Bailey, Lawrence Berkeley National Laboratory • Vice-Chair – Allan Snavely, UC San Diego WG6 – Performance Modeling, Metrics, and Specifications Guidelines and Questions • As input to HECRTF charge (2c), provide information about the types of system design specifications needed to effectively meet various application domain requirements. • Examine current state and value of performance modeling, metrics for HEC and recommend key extensions • Analyze performance-based procurement specifications for HEC that lead to appropriately balanced systems. • Recommend initiatives needed to overcome current limitations in this area. • Example topics: – Metrics, time to solution, measurement and modeling methods, benchmarking, specification parameters, time to solution and relationship to fault-tolerance Working Group Participants • David Bailey • Stan Ahalt • Stephen Ashby • Rupak Biswas • Patrick Bohrer • Carleton DeTar • Jack Dongarra • Ahmed Gameh • Brent Gorda • Adolfy Hoisie • Sally McKee • David Nelson • Allan Snavely • Carleton DeTar • Jeffrey Vetter • Theresa Windus • Patrick Worley • and others Charter • Establish objectives of future performance metrics and measurement techniques to characterize system value and productivity to users and institutions. Identify strategies for evaluation including benchmarking of existing and proposed systems in support of user applications.
Determine parameters for specification of system attributes and properties. Fundamental Metrics Best single overriding metric: time to solution. Time to solution includes: • Execution time. • Time spent in batch queues. • System background interrupts and other overhead. • Time lost due to scheduling inefficiencies, downtime. • Programming time, debugging and tuning time. • Pre-processing: grid generation, problem definition, etc. • Post-processing: output data management, visualization, etc. Related Factors • Programming time and difficulty – Must be better understood (and reduced). – Identify key factors affecting development time. – Identify HPC relevant techniques from software engineering. – Closely connected to research in programming models and languages. • System-level efficiency – Some metrics exist (e.g., ESP). • Performance stability • Grid generation, problem definition, etc. – For some applications, this step requires more effort than the computational step. – No good metrics at present time. Current Best Practice for Procurements • Characterize machines via micro-benchmarks and synthetic benchmarks run on available machines. – Numerous general specifications. – Results on some standard benchmarks. – Results on application benchmarks (different for each procurement). • Identify and track applications of interest. – Use modeling to characterize performance. – Validate models on largest available system of that kind. • Optimization problem: solving with constraints, including performance, dollars, floor space, power. – This step is not standardized, currently ad hoc. This approach is inadequate to select systems 10x or more beyond systems in use at a given point in time. Toward Performance-Based System Selection • Procurements or other system selections should not be based on any single figure of merit. • Can various agencies converge on a reference set of discipline-specific benchmark applications? • On a set of micro-benchmarks?
• How can we better handle intellectual property and classified code issues in procurements? • Accurate performance modeling holds the best promise for simplifying procurement benchmarking. Performance Modeling • Goals: A set of low-level basic system metrics, plus a solid methodology for accurately projecting the performance of a specific high-level application program on a specific high-end system. • Challenges: – Current approaches require significant skill and expertise. – Current approaches require large amounts of run time. – Fast, nearly automatic, easy-to-use schemes are needed. • Benefits: – – – – Architecture research Procurements Vendors Users Potential Modeling Impact • Influence architecture early in the design cycle • Improve applications development – Use modeling in the entire lifecycle of an application, including algorithmic selection, code development, software engineering, deployment, tuning. • Impact assessment – Project new science enabled by a proposed petaflop system. • Research needed in: – Novel approaches to performance modeling: analytical, statistical, kernels and benchmarks, synthetic programs. – How to deal with exploding quantity of performance data on systems with 10,000+ CPUs. – Online reduction of trace data. System Simulation Salishan Conference, Apr. 2003: “Computational scientists have become quite expert in using high-end computers to model everything except the systems they run on.” Research in the parallel discrete event simulation (PDES) field now makes it possible to: • Develop a modular open-source system simulation facility, to be used by researchers and vendors. – Prime application: modeling very large-scale inter-processor networks. • Need to work with vendors to resolve potential intellectual property issues. Tools and Standards • Characterized workloads from different agencies – Establishing common set of low-level micro-benchmarks predictive of performance. 
– In-depth characterization of applications incorporated in a common performance modeling framework. – Enables comparability of models and cooperative sharing of workload requirements. • A standardized simulation framework for modeling and predicting performance of future machines. • Diagnostic tools to reveal factors affecting performance on existing machines . • Intelligent, visualization-based facilities to locate “hot spots” and other performance anomalies. Performance Tuning • Self-tuning library software: FFTW, Atlas, LAPACK. • Near-term (1-5 yrs): – Extend to numerous other scientific libraries. • Mid-term (5-10 yrs): – Develop prototype pre-processor tools that can extend this technology to ordinary user-written codes. • Long-term (10-15 yrs): – Incorporate this technology into compilers. Example from history–vectorization: – Step 1: Completely manual, explicit vectorization – Step 2: Semi-automatic vectorization, using directives – Step 3: Generate both scalar and vector code, selected with run-time analysis Working Group 7 Application-Driven System Requirements Chair: Mike Norman Vice Chair: John Van Rosendale WG7 – Application-driven System Requirements Charter • Charter – Identify major classes of applications likely to dominate HEC system usage by the end of the decade. Determine machine properties (floating point performance, memory, interconnect performance, I/O capability and mass storage capacity) needed to enable major progress in each of the classes of applications. Discuss the impact of system architecture on applications. Determine the software tools needed to enable application development and support for execution. Consider the user support attributes including ease of use required to enable effective use of HEC systems. 
• Chair – Mike Norman, University of California at San Diego • Vice-Chair – John Van Rosendale, DOE WG7 – Application-driven System Requirements Guidelines and Questions • Identify major classes of applications likely to dominate use of HEC systems in the coming decade, and determine the scale of resources needed to make important progress. For each class indicate the major hardware, software and algorithmic challenges. • Determine the range of critical systems parameters needed to make major progress on the applications that have been identified. Indicate the extent to which system architecture affects productivity for these applications. • Identify key user environment requirements, including code development and performance analysis tools, staff support, mass storage facilities, and networks. • Example topics: – applications, algorithms, hardware and software requirements, user support Discipline Coverage • Lattice Gauge Theory • Accelerator Physics • Magnetic Fusion • Chemistry and Environmental Cleanup • Bio-molecules and Bio-Systems • Materials Science and Nanoscience • Astrophysics and Cosmology • Earth Sciences • Aviation FINDING #1 Top Challenges • Achieving high sustained performance on complex applications is becoming more and more difficult • Building and maintaining complex applications • Managing data tsunami (input and output) • Integrating multi-scale space and time, multidisciplinary simulations Multi-Scale Simulation in Nanoscience Maciej Gutowski, WP 001 (figure; scale labels: 1 cm, 1027 cm) Question 1 • Identify major classes of applications likely to dominate use of HEC systems in the coming decade, and determine the scale of resources needed to make important progress. For each class indicate the major hardware, software and algorithmic challenges. Question 2 • Determine the range of critical systems parameters needed to make major progress on the applications that have been identified.
Indicate the extent to which system architecture affects productivity for these applications. Question 3 • Identify key user environment requirements, including code development and performance analysis tools, staff support, mass storage facilities, and networks. Findings: HW [1] • 100x current sustained performance needed now in many disciplines to reach concrete objectives • A spectrum of architectures is needed to meet varying application requirements – Customizable COTS an emerging reality – Closer coupling of application developers with computer designers needed • The time dimension is sequential: difficult to parallelize – ultrafast processors and new algorithms are required. – fusion, climate simulation, biomolecular, astrophysics: multiscale problems in general Findings: HW [2] • Thousands of CPUs useful with present codes and algorithms; reservations about 10,000 (scalability and reliability) – Some applications can effectively exploit 1000s of CPUs only by allowing problem size to grow (weak scaling) • Memory bandwidth and latency seem to be a universal issue • Communication fabric latency/bandwidth is a critical issue: applications vary greatly in their communications needs Findings: Software • SW model of single-programmer monolithic codes is running out of steam – need to switch to a team-based approach (à la SciDAC) – scientists, application developers, applied mathematicians, computer scientists – modern SW practices for rapid response • Multi-scale and/or multi-disciplinary integration is a social as well as a technical challenge – new team structures and new mechanisms to support collaboration are needed – intellectual effort is distributed, not centralized Findings: User Environment • Emerging data management challenge in all sciences; e.g., bio-sciences • Massive shared memory architectures for data analysis/assimilation/mining – TBs / day (NCAR/GFDL, NERSC, DOE Genome to Life, HEP) – sequential ingest/analysis codes – I/O-centric architectures
• HEC Visualization environments à la DOE Data Corridors Strategy and Policy [1] • HEC has become essential to the advancement of many fields of science & engineering • US scientific leadership is in jeopardy without increased and balanced investment in HEC hardware and wetware (i.e., people) • 100x increase of current sustained performance needed now to maintain scientific leadership Strategy and Policy [2] • A spectrum of architectures is needed to meet varying application requirements • New institutional structures needed for disciplinary computational science teams (research facility model) – An integrated answer to Question 3 Facilities Analogy (diagram; labels: National User Facility; Spallation Neutron Source (SNS) users and end stations; HPC Facilities: NERSC, ORNL-CCS, PSC; Domain Specific Research Networks; Collaborative Research Teams (CRTs) for Fusion, Materials Science, QCD; Standards Based Tool Kits; Open Source Repository; Workshops; Education) Working Group 8 Procurement, Accessibility and Cost of Ownership Chair: Frank Thames Vice-Chair: Jim Kasdorf WG8 – Procurement, Accessibility, and Cost of Ownership Charter • Charter – Explore the principal factors affecting acquisition and operation of HEC systems through the end of this decade. Identify those improvements required in procurement methods and means of user allocation and access. Determine the major factors contributing to the cost of ownership of the HEC system over its lifetime. Identify impact of procurement strategy including benchmarks on sustained availability of systems.
• Chair – Frank Thames, NASA • Vice-Chair – Jim Kasdorf, Pittsburgh Supercomputing Center WG8 – Procurement, Accessibility, and Cost of Ownership Guidelines and Questions • Evaluate the implications of the virtuous infrastructure cycle i.e. the relationship among the advanced procurement development and deployment for shaping research, development, and procurement of HEC systems. • As input to HECRTF charge (3c), provide information about total cost of ownership beyond procurement cost, including space, maintenance, utilities, upgradeability, etc. • As input to HECRTF charge (3) overall, provide information about how the Federal government can improve the processes of procuring and providing access to HEC systems and tools • Example topics: – procurement, requirements specification, user infrastructure, remote access, allocation policies, security, power and cooling costs, maintenance costs, reliability and support Working Group Participants • • • • • • • • • • • • Frank Thames Jim Kasdorf Bill Turnbull Gary Wohl Candace Culhane James Tomkins Charles W. Hayes Sander Lee Charles Slocomb Christopher Jehn Matt Leininger Mark Seager • • • • • • • • • • • • • Gary Walter Graciela Narcho Dale Spangenberg Thomas Zacharia Gene Bal Per Nyberg Scott Studham Rene Copeland Paul Muzio Phil Webster Steve Perry Cray Henry Tom Page WG8 Paper Presentations • Per Nyberg: Total Cost of Ownership • Matt Leininger: A Capacity First Strategy to U.S. 
HEC • Steve Perry: Improving the Process of Procuring HEC Systems • Scott Studham: Best Practices for the Procurement of High Performance Computers by the Federal Government Total Cost of Ownership • Procurement of Capital Assets – Hardware – Acquisition cost (FTE) – Cost of money for LTOPS – Software licenses • Maintenance of Capital Assets • Services (Workforce dominated; will inflate yearly) – Application support/porting – System administration – Operations – Security Total Cost of Ownership • Facility – Site Preparation – HVAC – Electrical power – Maintenance – Initial construction – Floor space • Networks: Local and WAN • Training • Miscellaneous – Residual value of equipment – Disposal of assets – Insurance Total Cost of Ownership • Can “Lost Opportunity” cost be quantified? – Lost research opportunities – Lower productivity due to lack of tools – Codes not optimized for the architecture – Etc. • Replacement cost of human resources • Difficulty in valuing system software as it impacts productivity (development and production) vice quantitative methods to measure hardware performance Total Cost of Ownership • Other Considerations – If costs are to include end-to-end services • Output analysis must be added (e.g., visualization) • Mass Storage • Application Development – Some architectures are harder to program (ASCI: 4-6 years application development; application lifetime: 10-20 years) – H/W architectures last 3-4 years; applications must last over multiple architectures Total Cost of Ownership – Bottom Line • Consider ALL applicable factors • Some are not obvious • Develop a comprehensive cost candidate list Procurement • Requirements Specification • Evaluation Criteria • Improving the Process • Contract Type • Other Considerations Procurement • Requirements Specification – Elucidate the fundamental science requirement – Emphasize quantifiable Functional requirements – Exploit economies of scale – Application development environment – Make optimum use of
contract options and modifications – Maximize the use of technical partnerships – Consider flexible delivery dates where applicable (increases vendor flexibility) Procurement (Continued) • Requirements Specification (Continued) – Be careful about “mandatory” requirements; prioritize or weight them – Be aware of specifications which may limit competition – Avoid “over specifying” requirements for advanced systems – Fundamental differences in specification depending on the intended use of the system (Natural tension between Capacity vs Capability and general tool vs specific research tool) Procurement (Continued) • Evaluation Criteria – For options on long-term contracts, projected “speedup” of applications – Total Cost of Ownership – Use “Real Benchmarks” • Be careful not to water down benchmarks too much • On the other hand, don’t push so hard that some vendors can’t afford it • Other approaches needed for future advanced systems – Use Best Value – Risks Procurement (Continued) • Improving the Process – Ensure users are heavily involved in the process • Eases vendor risk mitigation • Users have “decision proximity” – Non-disclosures required by vendors hamstring government personnel after award – Maintain communications between vendors and customers during the acquisition cycle without compromising fairness Procurement (Continued) • Improving the Process (Continued) – Consider DARPA HPCS Process for “Advanced Systems” • Multiple down-selects • R&D like • Leads to production system at end – Attempt to maintain acquisition schedule adherence Procurement (Continued) • Contract Type – Consider Cost Plus contracts for new technology systems or those with inherent risks (e.g., development contracts) – Leverage existing contracts that fit what you want to do • Other Considerations – Don’t have a single acquisition for ALL HEC in government • Leads to “Ivory Tower” syndrome and • A disconnect from users • Bottom Line: don’t over-centralize Procurement (Continued) • Other
Considerations (Continued) – Inconsistencies in way acquisition regulations are implemented can lead to inefficiencies (vendor issue) – Practices that would revitalize the HEC industry • What size of market is needed: At least several hundred million dollars per year per vendor • Recognize that HEC vendors must make an acceptable return to survive and invest Accessibility • Key Issue: Funding in the requiring Agency to purchase computational capabilities from other sources • There are many valid vehicles to providing interagency agreements to provide accessibility (e.g., Interagency MOU’s) • Suggested Process: DOE Office of Science and NSF process – open scientific merit evaluated on a project by project basis • Current large sources would add x% capability to supply computational capabilities to smaller agencies • Implementation suggestion: Consider providing a single POC for Agencies for HEC access (NCO?)