Office of Information Resources and Technology presents

advertisement

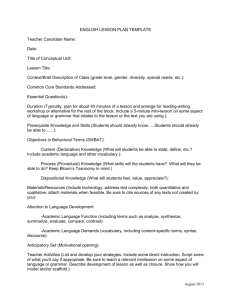

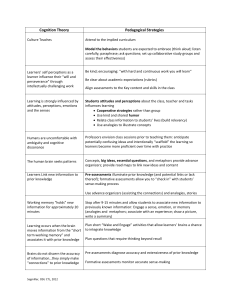

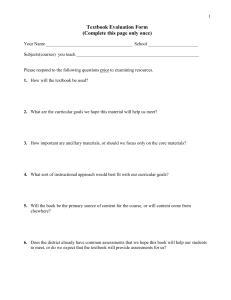

Drexel University Office of Information Resources and Technology presents:
INSTITUTE on INNOVATION in TRAINING and TEACHING

Assessment and Evaluation Utilizing Online Elements and Technologies
Stephen Chestnut, Rich Varenas, Julie Allmayer & Amy Lynch
September 11, 2008

Introductions
• Julie Allmayer
• Steve Chestnut
• Rich Varenas
  – Online Learning Team, Drexel University
• Amy Lynch
  – LeBow College of Business, Drexel University

Objectives
• Define assessment in both offline and online contexts
• Identify advantages and disadvantages of online assessments
• Describe some tools/technologies for creating online tests/quizzes and surveys/polls
• Demonstrate the assessment capabilities of a CMS/LMS such as Blackboard Vista
• Identify some exam security products for online assessments
• Discuss the importance of performance-based assessment and describe how e-portfolios can be a useful assessment tool

Agenda
• Assessment Overview (Julie)
  – Online/E-assessment
• Assessment Within a CMS/LMS (Steve)
• Exam Security (Julie & Rich)
• Polling (Rich)
• Surveying (Steve)
• E-Portfolios (Amy)

Discussion #1
• Take 3 minutes to discuss with your neighbor(s): What does the word “assessment” mean to you?

Assessment Overview
• The Five Ws (and one H)
  – What?
  – Who?
  – When?
  – How?
  – Why?
  – Where?
• Evaluation = Assessment

What is Assessment?
• “The process of obtaining information that is used to make educational decisions about [learners and] to give feedback about their progress/strengths/weaknesses…”
  – Source: Barry Sweeny’s Glossary of Assessment Terms, 1994
• “Assessment is used to measure knowledge, skills, attitudes, and beliefs”
  – Source: George Lucas Educational Foundation, Why Is Assessment Important?

Who is Being Assessed?
• Individual learners
• Institutions (schools, colleges, universities)
• Companies/organizations

When Do We Assess?
• Formative vs. summative assessment
  – Formative assessment = assessment carried out throughout a course or project
  – Summative assessment = assessment carried out at the end of a course or project
• Assessment for learning vs. assessment of learning

Formative Assessment
• Bi-directional process between instructor/trainer and learner
• Provides crucial feedback for both instructors and learners
• Other forms: peer assessment, self-assessment, etc.
• Purpose is to enhance learning, not to assign a grade
• Part of the instructional sequence
  – Feedback is key
• Examples: ungraded test/quiz, assignment, discussion, paper, etc.

Summative Assessment
• Takes place at the end of a course or project
• Results in some credential (grade, certification, licensure, etc.)
• Used to summarize learning
• Did the learner learn what s/he was supposed to learn?
• Did the course, unit, or module teach what it was supposed to teach?
• Examples: mid-term quiz, final exam, final paper, capstone course, etc.

Why Assess?
• Formative assessment:
  – Provides feedback to instructors about learners’ knowledge/skills so instructors can:
    • Create appropriate lessons and activities
    • Decide how to improve instruction/learner success
    • Inform learners of progress
  – Provides feedback to learners about the knowledge/skills they possess
  – Self-assessment = learners assess their own progress and judge their own work
    • Learners use “self-evaluation” to improve knowledge and develop skills in the future
  – Peer assessment = learners make assessment decisions about other learners’ work
    • Can be anonymous or not
    • Enables learners to gain important feedback from each other
• Summative assessment:
  – Used to assign grades, typically course grades
  – Used to issue certification, licensure, etc.

How Do We Assess?
• What methods, instruments, and processes are used?
  – Tests and quizzes
• Assessment is not just about testing!
  – Performance-based assessment = focuses on learner achievement, not on a test/quiz score
• Performance-based assessment
  – Learners showcase what they know or can do, often in real-world settings
    • Proficiency is demonstrated by a product or performance
  – Examples of products: portfolios, writings, paintings, scripts, etc.
  – Examples of performances: presentations, speeches, readings, etc.
• Tests/quizzes and products/performances measure knowledge or skills
• Surveys, polls, evaluations, questionnaires, interviews, etc. are used to capture attitudes, beliefs, and biases

Where Do We Assess?
• Classroom, training room, etc.
• High-stakes tests:
  – Testing center, computer lab, or convention center
• Performance-based evaluations:
  – Artistic performances: auditorium, concert hall, art gallery
  – Athletic performances: stadium, gym, dance studio
• Corporate sphere
  – Employee office, meeting room, etc.
• Online
  – 24x7 access

Video
• “Assessment Overview: Beyond Standardized Testing” (8:10)
• http://www.edutopia.org/assessment-overview-video
• © 2002 The George Lucas Educational Foundation

Discussion #2
• What is your reaction to the video?
• How do you feel about using standardized testing vs. performance-based evaluation as a means of assessment?

Types of Assessment
• Diagnostic assessment = measures a learner’s current knowledge and skills
  – Type of formative assessment
  – Identifies a suitable program of learning
  – Examples: pre-tests, writing assignments, or journaling exercises
• Group assessment = assessment of learners within a group by:
  – Other learners within the group (peer)
  – Learners outside of the group (peer)
  – Instructor/trainer
  – Can refer to assessment of the group as a whole or of individual learners’ contributions to the group
  – Examples: group presentations or group papers
• Formal assessment = a numerical score or grade is assigned
  – Usually in the form of a test/quiz or paper
  – Examples: graded portfolios, presentations, or performances
• Informal assessment = does not contribute to the learner’s final grade
  – Conducted in a more casual manner
  – Examples: observations, checklists, participation, discussions, etc.
• Objective assessment = contains questions that have a single correct answer
  – Examples: T/F, MC, matching
• Subjective assessment = contains questions that have more than one correct answer, or more than one way of expressing the correct answer
  – Examples: short answer, essay
• Valid assessment = measures what it is intended to measure
  – Example: written and road components of a driving test
• Reliable assessment = produces consistent results (the same test-takers achieve the same results)
  – Example: MC test that can be scored with high accuracy

Validity & Reliability

                Validity    Reliability
  MC Test       Low         High
  Essay Test    High        Low

[Figure: valid assessment vs. reliable assessment]

E-Assessment
• E-assessment = use of information technology for any assessment-related activity
[Diagram: E-Assessment, showing NetSupport, Remark Office, paper-based assessments, online assessments, clickers, and Respondus]

Online Assessment
• Online assessment = form of e-assessment in which an assessment tool is delivered via a computer connected to the Internet

Online Assessment Tools
1. CMS/LMS = Content/Learning Management System
   – Examples: Blackboard Vista (formerly WebCT), Blackboard, Angel, eCollege, Moodle, Sakai
   – Include tool(s) for assessment
2. E-portfolio = electronic or digital portfolio
   – Electronic compilation of items assembled and managed on the Web
   – Provides evidence of a learner’s achievement (“learning record”)
   – Good example of performance-based assessment
   – Also a form of self-assessment
   – Examples: Folio by ePortaro, Masterfile ePortfolio Manager by Concord, etc.
3. Online survey/polling tools
   – For surveys: SurveyMonkey, Zoomerang, PollDaddy
   – For polling: Poll Everywhere, Wimba Classroom, clickers
   – Should be used for informal assessments only (those for which no grade is assigned)

E-Assessment Tools
• NetSupport – for exam security and computer lab management
• Remark Office – automates processing of paper-based evaluation forms
• Clickers – transmit and record audience responses to questions
• Respondus – automates upload of questions and answers to a CMS/LMS assessment engine

Paper-Based Assessments
[Diagram: E-Assessment, highlighting paper-based assessments]
• Low-tech is not necessarily bad!
  – Relatively easy to implement, and introduces no technical issues
  – Examples: handwritten assignments or exams, Scantron, paper-based evaluation forms

Advantages of Online Assessments
1. Lower long-term costs
   – For MC, T/F, and short-answer exams that are set up in a CMS/LMS:
     • Questions and answers can be culled from a publisher test bank and uploaded automatically via Respondus
     • Answers are automatically scored by the system
     • Grades can automatically flow over to the Grade Book
2. Instant feedback for learners
   – For exams in a CMS/LMS, results can be released immediately after the exam is submitted, or later
   – Instructor controls the level of detail displayed
   – Instant feedback is more beneficial to learners (quick positive/negative reinforcement)
3. Greater flexibility of location and timing
   – Learners can take online assessments anytime, anywhere
   – Instructors can set up exam questions, administer exams, score exams, and release grades anytime, anywhere
4. Improved reliability
   – Machine marking is more reliable than human marking
   – Only applies to objective assessments (containing questions that have a single correct answer, e.g., MC, T/F, short answer, matching)
5. Enhanced question styles that incorporate interactivity and multimedia
   – Can include video, audio, images, Flash-based elements, animations, drop-down lists, etc.
   – Example: Drexel’s language placement exams in Bb Vista

Disadvantages of Online Assessments
1. Online assessments can be expensive to set up
   – Time and cost investment can be high
   – However, once the assessment is created, it can be reused and easily modified
2. Online assessments are not suitable for every type of assessment
   – “Toolbox approach”: not every tool is appropriate for every job
   – Example: subjective assessments (consisting of essay questions) still have to be scored/graded manually
3. Online assessments can lack quality
   – Questions from publisher test banks may or may not be of high quality
   – Instructors can develop their own questions, but this takes time and effort
4. Online assessments are not always secure
   – Two main security issues:
     1. Cheating
        • Test-takers can use cell phones, send text messages, access other programs or files on the computer, or use a second computer to look up answers
     2. Authentication
        • No way to confirm that the test-taker is the person who is supposed to take the test
   – Issue of authentication: if a login is required, the test-taker could:
     • Give the login to a friend
     • Log in as him/herself and ask a friend to take the test
   – Solution: administer the test in a proctored environment and check IDs
5. Technical issues
   – Two kinds of technical issues:
     1. Connectivity issues
        • Internet connection could drop out during the exam
        • Important to have a policy in place
     2. User error
        • If users don’t save answers before submitting the exam, the answers will not be recorded in the system

Assessment Within a CMS/LMS
• Quizzes, exams, self-tests
• Recent implementations
  – Placement Exams
  – Neuroscience Exams
  – “Without Regard”
    • Mandatory Online Discrimination, Harassment, and Retaliation Prevention Training
• A demonstration
  – Notepad
  – Respondus (PC/Mac)
  – Bb Vista
• Respondus Standard Format
• Preview / Publish

Respondus Standard Format (example; the asterisk marks the correct answer):

  1. The capital of Georgia (formerly part of the Soviet Union) is:
  a. South Ossetia
  *b. Tbilisi
  c. Atlanta
  d. Gori
  e. Moscow

• Create Assessment
• Question Database

Exam Security
• Exam security products
  – For online and face-to-face (F2F) instruction:
    1. CMS/LMS
    2. Respondus LockDown Browser (integrates with the Bb Vista, Blackboard, and Desire2Learn CMS/LMSs)
    3. Securexam Browser (integrates with Bb Vista and Blackboard)

Security Options in Bb Vista
• Proctor password
• IP address/mask
• Browser restriction

Exam Security
• Security options in Bb Vista:
  1. Proctor password
     – Instructor can set a case-sensitive password that test-takers must enter to begin the assessment
  2. Browser restriction
     – Assessment can only be taken with Respondus LockDown Browser (not Internet Explorer, Firefox, etc.)
• Respondus LockDown Browser
  – Browser that prevents test-takers from printing or copying the assessment, going to Internet site(s), or accessing other applications during the assessment
  – Works on Windows and Mac
  – Versions available for lab, personal, and work computers
  – Must be downloaded/installed prior to taking the assessment
• Security options in Bb Vista (continued):
  3. IP address
     – Restricts access to the assessment based on the IP address of one computer
     – Test-taker can only access the assessment from a particular computer
  4. IP address mask
     – Restricts access to the assessment based on the IP addresses of a group of computers
     – Group of test-takers can only access the assessment from a particular location
• Additional assessment security features in Bb Vista:
  1. Deliver questions one at a time and do not allow questions to be revisited
  2. Create a question set and randomly choose questions from the “Question Database”
  3. Randomize questions in the question set for each attempt
  4. Randomize answers (only for certain question types)
  5. Set a time limit for the assessment
  6. Disallow answer submission if time has expired
  7. Restrict availability dates
  8. Score release options (e.g., do not release the score until all questions have been graded or the availability period has ended)
  9. Choose not to show correct answers (“Results display properties”)
• Securexam Browser
  – Similar to Respondus LockDown Browser
  – Compatible with Bb Vista and Blackboard
• Security risks
  – Test-takers in a non-proctored environment can cheat
    • Can use another computer, get answers from a friend, etc.
  – Test-takers who want to copy or print the exam can probably do it
  – Solution: administer the exam in a proctored environment
• Securexam Remote Proctor
  – Proctors online assessments
  – Two components:
    1. Exam security software that locks down the computer
       • Includes fingerprint authentication
    2. Small webcam and microphone
       • Records audio and video in the test-taking area
       • Captures a 360-degree image and flags significant noises/motions

Discussion #3
• What do you think of Securexam Remote Proctor?
• Is it a useful tool or not?
• Will it actually prevent cheating?
• How would you feel if you had to take a test using this product?

Exam Security
• Classroom management/monitoring products (can also be used for exam security)
  – For F2F instruction:
    1. NetSupport School
    2. Software Secure Classmate
• NetSupport & Software Secure Classmate
  – Do not replace the need for a proctor during assessment; their purpose is to assist instructors
  – Can be used for testing, but designed more for instruction than for exam security
• What is NetSupport?
  – Computer management and monitoring tool
  – Can be used in classroom and lab environments
• Screenshots: monitor student computers; manage student Internet access; manage student surveys
• Surveying in NetSupport
  – Create a survey using pre-supplied or custom responses
  – Instantly see all responses and a results summary for the class
  – Publish survey results to all learners
• Quizzing/testing in NetSupport
  – Create a library of questions that can be shared
  – Create any number of tests using questions from your library
  – Create questions with 2, 3, or 4 possible answers
  – Display individual results back to each learner
• NetSupport: www.netsupportschool.com

Software Secure Classmate
• Restricts Web access
• Disables Word and access to the desktop (so the Start menu cannot be accessed)
• Monitors test-takers’ screens and compiles a listing of websites visited
• Sends a notification to the instructor if test-takers log off of Classmate
• Allows the instructor to apply different management settings to individual test-takers

Clickers
• What are clickers?
  – Simple remote “Personal Response System”
  – Use infrared (IR) or radio frequency (RF) technology
  – Transmit and record participant responses to questions
  – Provide immediate feedback
  – Optional registration
  – Nominal charge
• Who is using them?
  – Colleges and universities since 1998
• What makes them unique?
  – Give instructors the ability to fine-tune their instruction based on student feedback
  – Work for large and small class sizes
• What types of assessments should they be used for?
  – Polling and surveying
  – Pre-tests or quizzes that do not contribute to the learner’s grade (i.e., informal assessments)
    • Not suited to graded assessments, because test-takers can switch clickers or enter unintended responses by mistake
• Why use them?
  – Easy-to-adapt technology
  – Evaluate student mastery of content
  – Students can validate their own learning
  – Gauge student opinion
  – Easy to use and inexpensive to acquire
• What are the downsides?
  – Initial expense
  – Specialized keypads can be expensive
  – Receiver and software costs
  – Can be lost or stolen
• Where is the technology going?
  – Broad range of applicability
  – Faculty use increasing
  – Underlying technology is growing rapidly
  – Cell phones
• What are the implications for teaching and learning?
  – Facilitate interaction and make instruction more engaging
  – Identify misconceptions and provide feedback
  – Consideration: asking the right questions is important

Poll Everywhere
• What is Poll Everywhere?
  – Used for live audience polling
• What is a poll?
  – Single question or prompt requiring an audience response
  – Types of polls:
    • Multiple choice
    • Free text (open-ended)
• Why use Poll Everywhere?
  – Replaces hardware-based remote Personal Response Systems (clickers)
  – Uses standard Web technology
  – Audience can respond via SMS text message on their cell phones
  – Gathers live responses in any venue
• Can the audience vote over the Web?
  – Yes; when viewing a poll, click the “Web Voting” link on the right
  – Can embed a Flash widget for the poll within a website, including Bb Vista and other CMS/LMSs
• How does Poll Everywhere work?
  1. Ask a question
  2. Audience casts their votes
  3. Show results
  4. Generate reports
  [Diagram: Question, Vote, Results, Report]
• How will the audience know how to vote?
  – Simple instructions are displayed in every poll
• What are the downsides?
  – Free plan
    • Only 30 votes per poll (but no limit to the number of questions)
    • Cannot identify respondents
  – Cellular text messaging costs
  – No support for Microsoft Office 2004 or 2008 for Macintosh
• Poll Everywhere: www.polleverywhere.com

Surveying
• Gather information, opinions, enrollments, problem reports, interest, etc.
• Course evaluations
• Generate reports
• ClassApps
  – Build surveys in an online environment; relatively easy to use, with adequate reporting and exportable results
  – Examples: EMBA, Senior Survey, Democratic Presidential Debate
• Snap Surveys
  – Both desktop and online components
  – Multiple editions (online, email, paper)
  – Learning curve
  – Robust reporting features
• Bb Vista
  – Course evaluations, already targeted
• Remark Office OMR
  – Optical Mark Recognition
  – Not technically “online”
  – Easy to use
    • User creates own forms
    • Easy report generation
  – Not “green” (handling issues)
  – Course evaluations

E-Portfolios
• LIFEfolio (LeBow College’s Integrated Focused Experience)
• Undergraduate Electronic Career Portfolio Assessment
  – Life’s Learning Online

LIFEfolio
• An electronic portfolio built and maintained by the student every term
• College-wide template personalized by each student’s experiences
• Archives documents for future reference
• Showcases attributes and learned skills
• Avenue for thought and reflection on learning

Technologies Used in E-Portfolios
• Waypoint Assessment
• Camtasia
• Video and audio clips
• Scans
• PDF/PPT/MS documents
• ePortaro system

Learning Goal
• Career learning
  – Essential learning outcome for all students at LeBow College of Business (LeBow)
  – Consistent with the College’s and University’s core mission
• Uses a cooperative learning model, integrating academic and experiential learning

Career Learning
• Career learning is defined as the ability to:
  – Identify knowledge learned in the classroom and skills acquired through work experience that are relevant to a student’s future career
  – Showcase the knowledge and skills in a way that will promote employability over one’s career
  – Develop a career action plan based on one’s professional/personal goals

Assessment Levels
• Personal
• Peer
• Faculty
• Employer

Goal Measurement: When
• Assessment will be based on student presentations of career portfolios to faculty in a seminar class
• Students will take this class after completing their final co-op, in either the spring term of junior year or the first term of senior year
• The seminar class will replace the second term of UNIV 101

Goal Measurement: Structure
• The one-credit course will consist of two classes at the beginning of the term that focus on the requirements of the presentation
• The middle six weeks will be devoted to individual student presentations (three per hour) made to a panel of seminar faculty
  – Ideally, these presentations will be captured on video and given to students for their portfolios
• The final two weeks of class will consist of feedback to students about using their portfolios in a job search and about their career plans
• Faculty for this seminar will have professional backgrounds similar to those of the faculty who currently teach UNIV 101

Goal Measurement: Rubric
• A rubric will be created and used to assess student learning across three career learning criteria:
  1. Awareness of one’s skills and knowledge relevant to a professional career
  2. Professional communication or presentation of skills and knowledge to others
  3. Development of a plan for the student’s future career
• Waypoint will be used by faculty to accumulate data at the College level

Rubric
• Portfolio
  – Unsatisfactory, Meets requirements, or Exceeds requirements for the following criteria:
    • Content
    • Accomplishments
    • Evidence of skills and knowledge
    • Learning reflections
  – Unsatisfactory or Satisfactory for the following criteria:
    • Grammar and writing mechanics
    • Design/appearance
• Oral Presentation of Career Portfolio
  – Unsatisfactory, Meets requirements, or Exceeds requirements for the following criteria:
    • Organization
    • Content
• Career Plan
  – Unsatisfactory, Meets requirements, or Exceeds requirements for the following criteria:
    • Realism
    • Content

Reporting
• The learning goal and the outcomes of the annual assessment will be communicated to LeBow students, faculty, and staff on an annual basis
• Mechanisms for this will include:
  – Dean’s newsletter (distributed to all faculty)
  – Presentations of results at college faculty meetings
  – Memoranda sent to current department chairpersons and posted to a learning assessment webpage linked to the College website

Action Plan
• After assessments are completed and results are communicated to the LeBow community, action plans will be developed each year to support continuous improvement of student achievement toward the learning goal
• Action plans may include, but are not limited to, curriculum interventions, changes in course content, and other steps the assessment committee deems necessary to improve student learning

Outcomes
• Retain information needed in future coursework
• Show evidence of, and reflect on, your learning while at LeBow
• Uniquely present yourself in the professional world
• Increase the value of your degree by enhancing LeBow’s image

Testimonials
• “I had taken an economics course in high school, but it was mainly conceptual. I had also taken three years of calculus. Not once did I see a connection between these two subjects. My experience in this calculus-based section of microeconomics has shown me otherwise. I would never have thought to pull up previous math assignments and to find a correlation between what he had done and what we were currently learning in economics.”

Wrap-Up
• Q&A
• Evaluation forms
• Handouts

Objectives Revisited
• Define assessment in both offline and online contexts
• Identify advantages and disadvantages of online assessments
• Describe some tools/technologies for creating online tests/quizzes and surveys/polls
• Demonstrate the assessment capabilities of a CMS/LMS such as Blackboard Vista
• Identify some exam security products for online assessments
• Understand the importance of performance-based assessment and describe how e-portfolios can be a useful assessment tool

Thank You!
• Our contact information:
  Drexel University Online Learning Team
  Korman 109
  215-895-1224
  olt@drexel.edu