A Splitter for German Compound Words

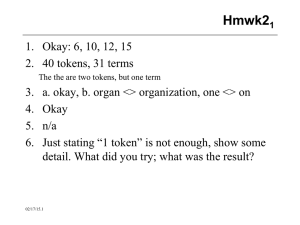

Pasquale Imbemba
Free University of Bozen-Bolzano
Supervisor: Dr. Raffaella Bernardi

Scenario
User input: compound words in German
● Problem for IR
  - retrieval of German books
  - no direct keyword matching
● Problem for CLIR
  - retrieval of Italian and English books
  - no direct translation in the dictionary

Problem: German compound words
● Compounding is productive: pre-existing morphemes are combined to form a new word (aka Univerbierung); noun compounds are the most frequent case.
● User input may not be in the lexicon used by CLIR search engines:
  - Donau + Dampf + Schiff + Fahrt (tr.: steam navigation on the Danube)
● User input may be a lexicalized "compound" word:
  - Malerei (tr.: painting), not Maler + Ei (tr.: painter and egg)
Hence a splitter is needed that handles both cases. Furthermore, language evolves continuously (neologisms), so the lexical resources must be kept constantly up to date.

State of the art
● TAGH (Berlin-Brandenburg Academy of Sciences / University of Potsdam)
  - weighted FSA: choose the combination with the least cost
● MORPHY (University of Paderborn)
  - reduce to base form and affixes, then look them up
● MORPA (Tilburg University)
  - probabilistic calculus to determine the segmentation
● De Rijke/Monz (University of Amsterdam)
  - shallow approach: given a word, if a substring is in the lexicon, subtract it; repeat until no substring is left
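To make the ambiguity above concrete (Malerei as one lexicalized noun versus Maler + Ei), here is a minimal Java sketch that lists every way a word can be segmented into lexicon entries. It is not the author's splitter: the class `Segmentations`, the method `all` and the toy lowercase lexicon are hypothetical stand-ins for the real Morphy/deWaC resources.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: enumerates all segmentations of a word into lexicon entries. */
public class Segmentations {

    /** Returns every way to write word as a '+'-joined sequence of lexicon entries. */
    public static List<String> all(String word, Set<String> lexicon) {
        List<String> results = new ArrayList<>();
        // The whole word may itself be a (possibly lexicalized) entry, e.g. "malerei".
        if (lexicon.contains(word)) {
            results.add(word);
        }
        // Try every proper prefix that is an entry and recurse on the remainder.
        for (int i = 1; i < word.length(); i++) {
            String head = word.substring(0, i);
            if (lexicon.contains(head)) {
                for (String tail : all(word.substring(i), lexicon)) {
                    results.add(head + "+" + tail);
                }
            }
        }
        return results;
    }

    public static void main(String[] args) {
        // Toy lowercase lexicon (hypothetical); not Morphy or deWaC.
        Set<String> lexicon = Set.of("maler", "ei", "malerei",
                "donau", "dampf", "schiff", "fahrt", "schifffahrt");
        System.out.println(all("malerei", lexicon));
        // [malerei, maler+ei]
        System.out.println(all("donaudampfschifffahrt", lexicon));
        // [donau+dampf+schifffahrt, donau+dampf+schiff+fahrt]
    }
}
```

Even with this tiny lexicon both examples have more than one valid segmentation, which is why a splitter must decide between keeping a lexicalized word whole and splitting it.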
Tools
● Splitter: mechanism to segment nouns
  - implemented, evaluated and improved the De Rijke/Monz algorithm in Java
● Lexicon
  - Morphy (57,000 nouns), dated (Lezius)
  - deWaC (440,000 nouns), recent (Baroni & Kilgarriff)
● Lexical resource on which the lookup is executed
  - nouns extracted from Morphy and deWaC
  - regular-expression filtering on deWaC
  - resources indexed with Lucene

De Rijke/Monz algorithm
substring(word, a, b) denotes the characters at positions a to b - 1; the empty string "" signals that no complete split exists.

    split(word):
        n := length(word)
        for i := 1 to n - 1 do
            if isInNounLex(substring(word, 0, i)) then
                r := split(substring(word, i, n))
                if r != "" then
                    return substring(word, 0, i) + "+" + r
        if isInNounLex(word) then
            return word
        return ""

Example: Ölpreis (oil price) is split into Öl + preis: the prefix Öl is in the noun lexicon, and the recursive call on the remainder returns r = preis.

Enhanced splitter workflow
● Cascading lexical resources
  - increases split correctness
  - improves overall correctness
● Lookup first
  - lexicalized elements are looked up before any split is attempted
  - reduces the number of incorrect splits

Splitter diagram
[Figure: splitter diagram]

MuSiL integration
[Figure: MuSiL query pipeline for the example input "Donaudampfschifffahrt"]
● Query input: Donaudampfschifffahrt
● Name recognition: Donau | Dampfschifffahrt
● Morphological analysis: Dampfschifffahrt_N
● Multiword recognition: Dampfschifffahrt_N
● Split and translate: the splitter segments Dampfschifffahrt into Dampf + Schiff + Fahrt, and each element is translated with the Multilingual Dictionary:
  - Dampf: EN vapour_N | steam_N (...); IT vapore_nm | (...)
  - Schiff: EN ship_N | (...); IT nave_nf | (...)
  - Fahrt: EN drive_N | navigation_N (...); IT guida_nf | navigazione_nf (...)
The figure also includes a Multilingual Thesaurus component.
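The split function and the enhanced workflow described above (lookup first, cascading lexical resources) can be sketched in Java, the language the splitter was implemented in. This is a minimal sketch under stated assumptions, not the author's code: the class `CompoundSplitter`, its methods, the lowercase normalization and the cascade semantics (lexicons tried one after another, each split drawing on a single lexicon) are hypothetical, and in-memory sets stand in for the Lucene-indexed Morphy and deWaC resources.

```java
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical sketch of the De Rijke/Monz split plus the enhanced workflow. */
public class CompoundSplitter {

    // Lexicons tried in order; the cascade order is an assumption.
    private final List<Set<String>> lexicons;

    public CompoundSplitter(List<Set<String>> lexicons) {
        this.lexicons = lexicons;
    }

    /**
     * De Rijke/Monz split against one lexicon: try every proper prefix that is a
     * noun, recurse on the remainder, and return "" when no complete split exists.
     */
    public static String split(String word, Set<String> lex) {
        for (int i = 1; i < word.length(); i++) {
            String head = word.substring(0, i);
            if (lex.contains(head)) {
                String rest = split(word.substring(i), lex); // computed once, reused
                if (!rest.isEmpty()) {
                    return head + "+" + rest;
                }
            }
        }
        return lex.contains(word) ? word : "";
    }

    /** Enhanced workflow: look the whole word up first, then split via the cascade. */
    public String splitEnhanced(String word) {
        String w = word.toLowerCase(Locale.ROOT);
        // Lookup first: a lexicalized word such as "malerei" is returned unsplit.
        for (Set<String> lex : lexicons) {
            if (lex.contains(w)) {
                return w;
            }
        }
        // Cascade (assumed semantics): try each lexicon in turn, keep the first complete split.
        for (Set<String> lex : lexicons) {
            String s = split(w, lex);
            if (!s.isEmpty()) {
                return s;
            }
        }
        return w; // no complete split found: keep the word whole
    }

    public static void main(String[] args) {
        // Toy lexicons standing in for two resources of the cascade (hypothetical contents).
        Set<String> first = Set.of("öl", "preis", "maler", "ei");
        Set<String> second = Set.of("malerei", "donau", "dampf", "schiff", "fahrt");
        CompoundSplitter splitter = new CompoundSplitter(List.of(first, second));

        System.out.println(split("malerei", Set.of("maler", "ei", "malerei"))); // maler+ei
        System.out.println(splitter.splitEnhanced("Malerei"));                 // malerei
        System.out.println(splitter.splitEnhanced("Ölpreis"));                 // öl+preis
        System.out.println(splitter.splitEnhanced("Donaudampfschifffahrt"));   // donau+dampf+schiff+fahrt
    }
}
```

Because `split` tries prefixes before the whole word, on its own it turns Malerei into maler+ei; `splitEnhanced` checks the whole word first and returns malerei unsplit, which is also what makes a constant-time best case possible.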
Evaluation

| | De Rijke/Monz (deWaC) | De Rijke/Monz (Morphy) | Our splitter (deWaC) | Our splitter (Morphy) |
|---|---|---|---|---|
| Total words | 6,201 | 16,141 | 6,207 | 16,135 |
| Total splits | 2,723 | 4,851 | 2,022 | 4,517 |
| Total non-splits | 3,478 | 11,290 | 4,185 | 11,618 |
| Wrong non-splits | 1,322 | 50 | 1,404 | 50 |
| Splits due to lexical error | 2,383 | 66 | 1,871 | 45 |
| Splits due to logic error | 9 | 1,067 | 7 | 864 |
| Correct splits | 331 | 3,718 | 144 | 3,608 |
| Split correctness | 12.16% | 76.64% | 7.12% | 79.88% |
| Correct elements | 2,487 | 14,958 | 2,925 | 15,176 |
| Correctness | 40.11% | 92.67% | 47.12% | 94.06% |

● Total correctness improved, by increasing the number of non-splits with both deWaC and Morphy.

Complexity of the split function
● De Rijke/Monz
  - Best case: the input word is scanned once from the first to the last position: $T(n) = n - 1 = O(n)$
  - Worst case: the number of calls to split grows exponentially: $T(n) = \sum_{i=0}^{n-1} 2^i = 2^n - 1 = O(2^n)$
● Our splitter
  - Best case: the word is found immediately in the lexical resources of nouns: $T(n) = O(1)$
  - Worst case: the function is executed recursively every time a prefix is found in the lexicon and the remaining substring is not empty, as for De Rijke/Monz

Performance on MuSiL
DE, IT, EN: number of retrieved documents per language.

| Query (as split) | Without: DE | Without: IT | Without: EN | Without: Precision | With: DE | With: IT | With: EN | With: Precision |
|---|---|---|---|---|---|---|---|---|
| Abenteuer+Geschichten | 1 | 0 | 0 | 100% | 10 | 57 | 2 | 51% |
| Beruf+Orientierung | 13 | 0 | 0 | 100% | 13 | 392 | 24 | 43% |
| Kommunikation+Politik | - | - | - | - | 28 | 69 | 317 | 47% |
| Wert+Papier+Handel+Gesetz | 4 | 0 | 0 | 100% | 0 | 36 | 90 | 17% |
| Doppel+Besteuerung+Abkommen | 4 | 0 | 2 | 100% | 0 | 0 | 15 | 100% |
| Aufmerksamkeit+Defizit+Syndrom | 44 | 0 | 25 | 36% | 3 | 0 | 8 | 73% |
| Hirn+Leistung+Training | 15 | 0 | 0 | 100% | 4 | 48 | 189 | 46% |
| Kunst+Erziehung+Bewegung | 1 | 0 | 0 | 100% | 144 | 478 | 251 | 36% |
| Emotion+Regulierung | 1 | 0 | 0 | 100% | 0 | 3 | 66 | 71% |
| Unternehmen+Netzwerke | 8 | 0 | 25 | 61% | 73 | 61 | 677 | 27% |

● Increased number of retrieved documents
● More relevant documents are ranked at the top

Conclusion and future work
● Good:
  - cascade method
  - dealing with lexicalized elements
● Open topics:
  - choosing the correct segmentation among alternatives
  - metrics for the correctness of a segmentation
  - weights, probability …
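The percentages in the Evaluation table can be recomputed from its raw counts. The relations in the following Java sketch are inferred from the numbers (they hold exactly for all four columns) rather than stated on the slides: split correctness = correct splits / total splits, and correctness = correct elements / total words, where total words = splits + non-splits and correct elements = correct splits + non-splits - wrong non-splits. The class, record and method names are illustrative only.

```java
import java.util.Locale;

/** Recomputes the Evaluation table percentages from its counts (illustrative names). */
public class SplitterMetrics {

    /** One column of the Evaluation table. */
    public record Column(String name, int totalSplits, int totalNonSplits,
                         int wrongNonSplits, int correctSplits) {

        /** Every input word is either split or left whole. */
        public int totalWords() {
            return totalSplits + totalNonSplits;
        }

        /** Correct splits plus the non-splits that were right to stay whole. */
        public int correctElements() {
            return correctSplits + totalNonSplits - wrongNonSplits;
        }

        /** Percentage of splits that were correct. */
        public double splitCorrectness() {
            return 100.0 * correctSplits / totalSplits;
        }

        /** Percentage of all words handled correctly. */
        public double correctness() {
            return 100.0 * correctElements() / totalWords();
        }
    }

    public static void main(String[] args) {
        Column[] columns = {
            new Column("De Rijke/Monz, deWaC", 2723, 3478, 1322, 331),
            new Column("De Rijke/Monz, Morphy", 4851, 11290, 50, 3718),
            new Column("Our splitter, deWaC", 2022, 4185, 1404, 144),
            new Column("Our splitter, Morphy", 4517, 11618, 50, 3608),
        };
        for (Column c : columns) {
            System.out.printf(Locale.ROOT,
                    "%-22s words %d, split correctness %.2f%%, correctness %.2f%%%n",
                    c.name(), c.totalWords(), c.splitCorrectness(), c.correctness());
        }
    }
}
```

Running it reproduces the table: 12.16% / 40.11%, 76.64% / 92.67%, 7.12% / 47.12% and 79.88% / 94.06%. It also shows why the non-splits matter: with Morphy, our splitter makes fewer correct splits than De Rijke/Monz (3,608 vs 3,718) yet reaches higher overall correctness because it leaves more words correctly unsplit.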