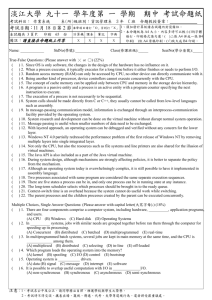

CSWiki_StudyGuide


5 State Process Diagram:

There are 5 states a process may be in. The kernel runs as the result of a TRAP or INTERRUPT and, if needed, moves a process from state to state by adjusting its tables. The states a process can move through are shown in the following diagram.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

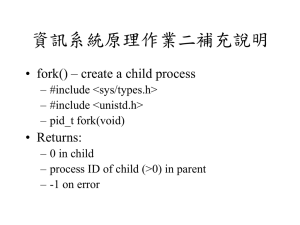

fork()

(TRAP)

-----------fork()

-----------|

New

| <---------------------------| Exiting |

|

* *

|

(TRAP)

^

| * * *

|

-----------|

-----------\

-------------------|

/

Admitted \

|

Time slice up |

|

/ exit()

v

v (clock INTERUPT) |

|

/

(TRAP)

----------------------| Ready

| ---------------> |

Run

|

| ******** |

sched()

|

*

|

| ******** |

dispatch()

|

|

----------------------^

/

Completion of service\

/ Request for a "slow"

(INTERUPT)

\

/ service via a System Call

\

/

(TRAP)

\

v

-----------|

Wait

|

| ******** |

| ******** |

-----------~~~~~~~~~~~~~~~~~~~~X~~~~~~~~~~~~~~~~~~~~X~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

States

New: Newly Created Processes ( fork() call on UNIX systems ).

Ready: Processes that are not waiting for services and can run.

Run: The single Running Process (on a multiprocessor system with k processors (CPUs), there could be k processes in this state).

Wait: Processes waiting for an operating system service to complete.

Exiting: Processes that are terminating ("zombies" on UNIX systems).

Note that systems have a "Clock INTERRUPT" that usually occurs every 1/60th or 1/100th of a second. Each Clock INTERRUPT is called a "Clock TICK"; the kernel keeps a count of Clock TICKS and uses it as a measure of time. When a Clock INTERRUPT occurs, the kernel may move the Running Process into the Ready State (if its "Time Slice" has expired) and select another process to run.

Components of a Process:

Memory Image

o Includes executable machine code, process-specific data, a call stack (to keep track of active subroutines and other events), and a heap to hold data generated at run time.

State of the Processor

o The register contents and memory-addressing information, which are held in the CPU registers while the process executes and saved in memory while the process is waiting.

PCB (Process Control Block)

o The data structure in the kernel that identifies a process. It includes the PID, state, program counter, address space for the process, accounting information, a pointer to the PCB of the next process, and I/O information.

o The PCB is stored in kernel memory (the kernel's address space) so that it is protected from normal users.

Virtual Memory Image:

1.6 Virtual Memory Layout of a Process

The concept of a "process" is central to computing, and over time it has evolved to the point where the processes of all major Multiprocessing Operating Systems appear very similar at the upper level. This is no accident, since effective process management requires intense hardware support, and hardware evolved along with Operating Systems. Neither exists in a vacuum.

UNIX (mid 1970s) was one of the earliest Operating Systems to use the process model that is widely used today. This model is used by all UNIX and Linux versions, Windows NT and later Microsoft versions, Mac OS, VMS, and also several "mainframe" and real-time operating systems.

Virtual Memory is the memory the CPU can address when it is not in "Privileged Mode", and it is all that a user process sees when performing loads and stores. We will see later how Virtual Memory is mapped to Real (Physical) Memory.

Note that normally:

The PC, which points to the location of the next machine instruction to execute, contains an address in the Text Segment.

The SP points to the top of the Stack, which grows upward in the memory layout diagram (that is, toward lower addresses, as on most common architectures) when things are pushed onto the stack.

Most programming languages use the stack for "Stack Frames" for function calls. A Stack Frame is space on the stack to:

1) Pass arguments to the function being called

2) Hold local variables for the function being called

3) Return values to the calling function

4) Save the "Context", in case of a "Context Switch"

<more on Context Switches appears later>

The heap contains space that is dynamically allocated, such as with "malloc()" in C or when "new" is used in C++.

Access permissions are checked by the Memory Management Unit (MMU). When a program attempts to access memory that it does not have permission to access, the MMU generates an interrupt. Unless you explicitly catch such an illegal memory interrupt in your program, the usual result is the classic fatal error "segmentation fault" or "bus error".

Virtual memory layout of a process, listed from Virtual Address 0 (top of the diagram) to Virtual Address MAX (bottom), with the access permission of each region:

Unused - No Access

Text Segment: Machine Instructions (the PC points here) - Read Only

Unused - No Access

Data Segment: Static Read Only - Read Only

Data Segment: Static Read/Write - Read/Write

Data Segment: Heap (grows down, toward higher addresses) - Read/Write

Unused (the gap the heap and stack grow into) - No Access/Read/Write

Stack (the SP points to its top; grows up, toward lower addresses) - Read/Write

Unused - No Access

Contents of the PCB:

1.11) The Process Control Block (PCB) and Kernel Attributes

The information the kernel maintains about a process is called that process's Process Control Block (PCB). The PCBs are maintained in the kernel's address space, but a PCB is not really one block or table; rather, the information is scattered around a number of tables and data structures.

We call some of this information "Process Attributes". For UNIX systems, some of the most important Process Attributes are:

pid - Process ID

ppid - Parent Process ID

uid, euid - User ID, Effective User ID

gid, egid - Group ID, Effective Group ID

pgid - Process Group ID

sid - Session ID

cwd - Current Working Directory

umask - file mode creation MASK

limits - (hard and soft) limits for resources:

o time - maximum CPU seconds for a process

o file - maximum number of blocks that can be written into a file

o data - maximum data segment size

o stack - maximum stack size

o coredump - maximum coredump file size

o nofiles - maximum number of open file descriptors

o vmemory - maximum virtual memory size

nice - process scheduling priority adjustment

root - root directory for all paths and commands

time - approximate times: start, system, user

other process statistics (total page faults, etc.)

open file descriptor table (files, pipes, sockets, devices)

opened IPC objects (semaphores, shared memory, message queues)

There are usually both shell commands and system calls for accessing and, in some cases, changing process attributes.

On UNIX, new processes are created with the fork() call, which causes the kernel to make a new process that is almost an exact copy of the process making the fork() call. Therefore process attributes are inherited from a process's parent process (except, for example, pid and ppid). Thus, setting a process attribute in a shell (process) causes all processes you start from that shell to inherit the attribute you set in the shell.

To review, in addition to the process attributes in the PCB, a process consists of a Virtual Memory image, the contents of caches (for the running process), and the CPU State.

The von Neumann Cycle

The von Neumann Cycle is the basis of most successful computer architectures that have ever been developed. Considering that John von Neumann worked it out in some detail more than 50 years ago, before the first stored-program computer was built, this was a major intellectual breakthrough.

The von Neumann Cycle

    while ( !HALT ) {
        fetch the next instruction from memory and increment the PC;
        decode the instruction;
        fetch memory operand(s), if needed for the instruction;
        perform CPU operations, if needed for the instruction;
        store result(s) in memory, if indicated by the instruction;
    }

Note that:

"Memory" here means external memory, not CPU registers.

"CPU operations" consist of all operations that do not involve external memory.

The Control Unit (CU) is the heart of the CPU; it implements the von Neumann Cycle. In some machines in the past (1960s/1970s), the CU was actually implemented as a software program (called microcode, no relation to current microprocessors) that ran on a hardware microcode-CPU internal to the CPU! Note that the microcode instruction set would look very different from the CPU's instruction set: it would control the CPU buses, external memory interface, ALU, etc. In extreme cases, one could rewrite the microcode for a CPU and download it into the CPU, creating one's own instruction set! Current CPUs largely avoid this kind of "software" microcoding because of the slowdown it introduces; instead they use hardwired logic for the CU, typically random logic, or an array of diodes that represents a hardcoded microcode program.

Context Switch during a system call

1. Save State

2. Flush Caches

3. Switch from user virtual memory to kernel virtual memory

4. Mark cache as invalid

5. Set priority to 1 (for kernel privileges)

6. Set state (not all registers may be overwritten; some may be used to pass arguments/parameters)

Threading

Benefits of threading

Responsiveness - Multithreading an interactive application may allow a program to continue running even if part of it is blocked or is performing a lengthy operation, thereby increasing responsiveness to the user. For instance, a multithreaded Web browser could allow user interaction in one thread while an image is being loaded in another thread.

Resource Sharing - Processes may only share resources through techniques such as shared memory or message passing. Such techniques must be explicitly arranged by the programmer. However, threads share the memory and the resources of the process to which they belong by default. The benefit of sharing code and data is that it allows an application to have several different threads of activity within the same address space.

Economy - Allocating memory and resources for process creation is costly. Because threads share the resources of the process to which they belong, it is more economical to create and context-switch threads. Empirically gauging the difference in overhead can be difficult, but in general it is much more time consuming to create and manage processes than threads. In Solaris, for example, creating a process is about thirty times slower than creating a thread, and context switching is about five times slower.

Scalability - The benefits of multithreading can be greatly increased in a multiprocessor architecture, where threads may be running in parallel on different processors. A single-threaded process can only run on one processor, regardless of how many are available. Multithreading on a multi-CPU machine increases parallelism.

THREADING ISSUES:

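fork() - if a thread calls fork(), does the new process duplicate all threads, or only the thread that called fork()?

exec() - the same question arises: does exec() replace only the calling thread, or the entire process, including all of its threads? (On POSIX systems, fork() duplicates only the calling thread, and exec() replaces the entire process.)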

Semaphores

A semaphore S is an integer variable that, apart from initialization, is accessed only through two standard atomic operations: wait() and signal(). The wait() operation was originally termed P (from the Dutch proberen, "to test"); signal() was originally called V (from verhogen, "to increment"). The definition of wait() is as follows:

    wait(S) {
        while (S <= 0)
            ;   /* busy wait */
        S--;
    }

The definition of signal() is as follows:

    signal(S) {
        S++;
    }

All modifications to the integer value of the semaphore in the wait() and signal() operations must be executed indivisibly. That is, when one process modifies the semaphore value, no other process can simultaneously modify that same semaphore value. In addition, in the case of wait(S), the testing of the integer value of S (S <= 0), as well as its possible modification (S--), must be executed without interruption.

Usage

Operating systems often distinguish between counting and binary semaphores. The value of a counting semaphore can range over an unrestricted domain. The value of a binary semaphore can range only between 0 and 1. On some systems, binary semaphores are known as mutex locks, as they are locks that provide mutual exclusion.

We can use binary semaphores to deal with the critical-section problem for multiple processes. The n processes share a semaphore, mutex, initialized to 1.

Counting semaphores can be used to control access to a given resource consisting of a finite number of instances. The semaphore is initialized to the number of resources available. Each process that wishes to use a resource performs a wait() operation on the semaphore (thereby decrementing the count). When a process releases a resource, it performs a signal() operation (incrementing the count). When the count for the semaphore goes to 0, all resources are being used. After that, processes that wish to use a resource will block until the count becomes greater than 0.

We can also use semaphores to solve various synchronization problems. For example, consider two concurrently running processes: P1 with a statement S1 and P2 with a statement S2. Suppose we require that S2 be executed only after S1 has completed. We can implement this scheme readily by letting P1 and P2 share a common semaphore synch, initialized to 0, and by inserting the statements

    S1;
    signal(synch);

in process P1 and the statements

    wait(synch);
    S2;

in process P2. Because synch is initialized to 0, P2 will execute S2 only after P1 has invoked signal(synch), which is after statement S1 has been executed.

Deadlock

A process may utilize a resource only in the following sequence:

Request - the process requests the resource; if it cannot be granted immediately, the process waits.

Use - the process operates on the resource.

Release - the process releases the resource.

Conditions for Deadlock (all four must hold at the same time):

Mutual Exclusion - Only one process at a time can use a resource.

Hold and Wait - A process must be holding at least one resource while waiting to acquire additional resources that are being held by other processes.

No Preemption - A resource can be released only voluntarily by the process holding it.

Circular Wait - For a set {p0, p1, ..., pN} of waiting processes, p0 is waiting for a resource held by p1, p1 waits for p2, ..., and pN waits for p0.

Methods for Handling Deadlock:

Use of a protocol to prevent or avoid deadlock

Allow deadlock, detect it, and recover

Ignore the problem and pretend deadlocks never occur (used when deadlocks are believed to be extremely rare)

Starvation:

A process can't get the resources it needs to complete: a process that holds a needed resource may release it, but then reacquires it before the starving process can get it.

Gantt Charts

Turnaround Time - From the point of view of a particular process, the important criterion is how long it takes to execute that process. The interval from the time of submission of a process to the time of completion is the turnaround time. Turnaround time is the sum of the periods spent waiting to get into memory, waiting in the ready queue, executing on the CPU, and doing I/O.

Waiting Time - The CPU-scheduling algorithm does not affect the amount of time during which a process executes or does I/O; it affects only the amount of time that a process spends waiting in the ready queue. Waiting time is the sum of the periods spent waiting in the ready queue.

Response Time - In an interactive system, turnaround time may not be the best criterion. Often, a process can produce some output fairly early and can continue computing new results while previous results are being output to the user. Thus, another measure is the time from the submission of a request until the first response is produced. This measure, called response time, is the time it takes to start responding, not the time it takes to output the response. The turnaround time is generally limited by the speed of the output device.

Examples:

Thread Fork:

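    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/types.h>
    #include <sys/wait.h>   /* for wait() */
    #include <unistd.h>

    int value = 0;
    void *runner(void *param);   /* the thread function */

    int main(int argc, char *argv[])
    {
        pid_t pid;
        pthread_t tid;

        pid = fork();
        if (pid == 0) {                  /* child process */
            pthread_create(&tid, NULL, runner, NULL);
            pthread_join(tid, NULL);
            /* the thread shares the child's memory: prints "CHILD 5" */
            printf("CHILD %d\n", value);
        }
        else if (pid > 0) {              /* parent process */
            wait(NULL);
            /* the child's change is in a separate address space: prints "PARENT 0" */
            printf("PARENT %d\n", value);
        }
        return 0;
    }

    void *runner(void *param)
    {
        value = 5;
        pthread_exit(0);
    }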

Signals:

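    #include <setjmp.h>
    #include <signal.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <time.h>     /* for time() */
    #include <unistd.h>   /* for alarm() */

    /* Global variables shared with a signal handler are declared volatile so
       the compiler re-reads them instead of keeping them in registers. */
    volatile int i = -1, j = -1;
    volatile long t0 = 0;
    jmp_buf Env;

    void alarm_handler(int dummy)
    {
        long t1;

        t1 = time(0) - t0;
        /* printf() is not async-signal-safe; it is used here only for demonstration */
        printf("%ld second%s passed: j = %d. i = %d\n", t1, (t1 == 1) ? " has" : "s have", j, i);
        if (t1 >= 8) {
            printf("giving up\n");
            longjmp(Env, 1);   /* jump from where the program is running back to the state saved by setjmp() */
        }
        alarm(1);              /* request the next SIGALRM in one second */
    }

    int main()
    {
        if (setjmp(Env) == 0) {
            /* first return from setjmp(): install the handler */
            signal(SIGALRM, alarm_handler);
        }
        else {
            /* returned here via longjmp() from the handler */
            printf("Gave up: j = %d, i = %d\n", j, i);
            exit(1);
        }
        alarm(1);
        t0 = time(0);
        for (j = 0; j < 10000; j++) {
            for (i = 0; i < 1000000; i++)
                ;
        }
        printf("Finished the for loops!\n");
        exit(0);
    }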

Sample Output:

1 second has passed: j = 220. i = 415297

2 seconds have passed: j = 447. i = 141689

3 seconds have passed: j = 673. i = 458505

4 seconds have passed: j = 900. i = 441633

5 seconds have passed: j = 1127. i = 83834

6 seconds have passed: j = 1353. i = 975331

7 seconds have passed: j = 1580. i = 761367

8 seconds have passed: j = 1807. i = 665729

giving up

Gave up: j = 1807, i = 665729

Fork

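    #include <sys/types.h>
    #include <sys/wait.h>   /* for wait() */
    #include <stdio.h>
    #include <stdlib.h>     /* for exit() */
    #include <unistd.h>

    int value = 5;

    int main()
    {
        pid_t pid;

        pid = fork();
        if (pid == 0) {          /* child process */
            value += 15;         /* changes only the child's copy of value */
        }
        else if (pid > 0) {      /* parent process */
            wait(NULL);
            printf("PARENT: value = %d\n", value);   /* LINE A */
            exit(0);
        }
        return 0;
    }

What is the output at LINE A? The output is "PARENT: value = 5". The child gets its own copy of the parent's memory, so the child's value += 15 does not change the parent's value.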

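    #include <stdio.h>
    #include <unistd.h>
    #include <sys/types.h>
    #include <sys/wait.h>   /* for wait() */

    int value = 5;

    int main()
    {
        pid_t pid, pid1;

        pid = fork();
        if (pid < 0) {
            return 1;                    /* fork failed */
        }
        else if (pid == 0) {             /* child: fork() returned 0 */
            pid1 = getpid();             /* the child's own pid */
            printf("A pid %d\n", (int)pid);
            printf("B pid1 %d\n", (int)pid1);
            return 1;
        }
        else {                           /* parent: fork() returned the child's pid */
            wait(NULL);
            pid1 = getpid();             /* the parent's own pid */
            printf("C pid %d\n", (int)pid);
            printf("D pid1 %d\n", (int)pid1);
            wait(NULL);                  /* no children left: returns -1 immediately */
        }
        return 0;
    }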

What is the output?

Sample Output:

A pid 0

B pid1 3002

C pid 3002

D pid1 3001

    #include <stdio.h>
    #include <unistd.h>
    #include <sys/types.h>

    int value = 5;

    int main()
    {
        pid_t pid;

        pid = fork();   /* 1 process becomes 2 */
        pid = fork();   /* 2 become 4 */
        pid = fork();   /* 4 become 8 */
        if (pid < 0) {
            return 1;
        }
        else if (pid == 0) {
            printf("Child\n");    /* printed by the 4 processes created by the last fork() */
        }
        else {
            printf("Parent\n");
        }
        return 0;
    }

How many processes are created? 7 processes are created, so 8 are running (including the initial parent).

Thread

    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    void *runner(void *par);   /* the thread function */
    int sum;                   /* data shared by the threads */

    int main(int argc, char *argv[])
    {
        pthread_t tid;         /* the thread identifier */

        if (argc != 2) {
            fprintf(stderr, "usage: %s <non-negative integer>\n", argv[0]);
            return 1;
        }
        if (pthread_create(&tid, NULL, runner, argv[1]) == 0) {   /* create the thread */
            pthread_join(tid, NULL);                                /* wait for it to exit */
            printf("sum = %d\n", sum);
        }
        return 0;
    }

    /* The thread computes 1 + 2 + ... + upper. */
    void *runner(void *par)
    {
        int i, upper = atoi(par);

        sum = 0;
        for (i = 1; i <= upper; i++)
            sum += i;
        pthread_exit(0);
    }