Predictive Profiling from Massive Transactional Data Sets

advertisement

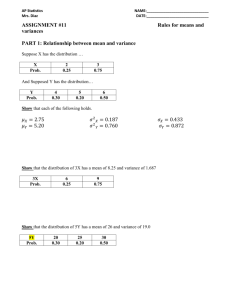

Author-Topic Models for Large Text Corpora Padhraic Smyth Department of Computer Science University of California, Irvine In collaboration with: Mark Steyvers (UCI) Michal Rosen-Zvi (UCI) Tom Griffiths (Stanford) Outline • Problem motivation: • • Probabilistic approaches • • topic models -> author-topic models Results • • • • Modeling large sets of documents Author-topic results from CiteSeer, NIPS, Enron data Applications of the model (Demo of author-topic query tool) Future directions Data Sets of Interest • Data = set of documents • Large collection of documents: 10k, 100k, etc Know authors of the documents Know years/dates of the documents …… • (will typically assume bag of words representation) • • • Examples of Data Sets • CiteSeer: • • NIPS papers • • 160k abstracts, 80k authors, 1986-2002 2k papers, 1k authors, 1987-1999 Reuters • 20k newspaper articles, 114 authors Pennsylvania Gazette 1728-1800 80,000 articles 25 million words www.accessible.com Enron email data 500,000 emails 5000 authors 1999-2002 Problems of Interest • What topics do these documents “span”? • Which documents are about a particular topic? • How have topics changed over time? • What does author X write about? • Who is likely to write about topic Y? • Who wrote this specific document? • and so on….. A topic is represented as a (multinomial) distribution over words TOPIC 289 TOPIC 209 WORD PROB. WORD PROB. PROBABILISTIC 0.0778 RETRIEVAL 0.1179 BAYESIAN 0.0671 TEXT 0.0853 PROBABILITY 0.0532 DOCUMENTS 0.0527 CARLO 0.0309 INFORMATION 0.0504 MONTE 0.0308 DOCUMENT 0.0441 DISTRIBUTION 0.0257 CONTENT 0.0242 INFERENCE 0.0253 INDEXING 0.0205 PROBABILITIES 0.0253 RELEVANCE 0.0159 CONDITIONAL 0.0229 COLLECTION 0.0146 PRIOR 0.0219 RELEVANT 0.0136 .... ... ... ... P(w | z ) Cluster Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval Cluster Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval P(probabilistic | topic) = 0.25 P(learning | topic) = 0.50 P(Bayesian | topic) = 0.25 P(other words | topic) = 0.00 P(information | topic) = 0.5 P(retrieval | topic) = 0.5 P(other words | topic) = 0.0 Graphical Model z Cluster Variable w Word n words Graphical Model z Cluster Variable w Word n words D documents Graphical Model Cluster Weights Cluster-Word distributions a z Cluster Variable w Word f n words D documents Cluster Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval DOCUMENT 3 Probabilistic Learning Information Retrieval Topic Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval Topic Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval DOCUMENT 3 Probabilistic Learning Information Retrieval History of topic models • Latent class models in statistics (late 60’s) • Hoffman (1999) • • Blei, Ng, and Jordan (2001, 2003) • • Original application to documents Variational methods Griffiths and Steyvers (2003, 2004) • Gibbs sampling approach (very efficient) Word/Document counts for 16 Artificial Documents River Stream Bank Money Loan documents 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Can we recover the original topics and topic mixtures from this data? Example of Gibbs Sampling • Assign word tokens randomly to topics: (●=topic 1; ●=topic 2 ) River 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Stream Bank Money Loan After 1 iteration • Apply sampling equation to each word token River 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Stream Bank Money Loan After 4 iterations River 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Stream Bank Money Loan After 32 iterations f ● ● topic 1 stream .40 bank .35 river .25 River 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Stream Bank topic 2 bank .39 money .32 loan .29 Money Loan Topic Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval DOCUMENT 3 Probabilistic Learning Information Retrieval Author-Topic Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval Author-Topic Models DOCUMENT 1 DOCUMENT 2 Probabilistic Information Learning Retrieval Learning Information Bayesian Retrieval DOCUMENT 3 Probabilistic Learning Information Retrieval Approach • The author-topic model • a probabilistic model linking authors and topics • • learned from data • • authors -> topics -> words completely unsupervised, no labels generative model • • • Different questions or queries can be answered by appropriate probability calculus E.g., p(author | words in document) E.g., p(topic | author) Graphical Model x Author z Topic Graphical Model x Author z Topic w Word Graphical Model x Author z Topic w Word n Graphical Model a x Author z Topic w Word n D Graphical Model a Author-Topic distributions Topic-Word distributions q f x Author z Topic w Word n D Generative Process • • Let’s assume authors A1 and A2 collaborate and produce a paper • A1 has multinomial topic distribution q1 • A2 has multinomial topic distribution q2 For each word in the paper: 1. Sample an author x (uniformly) from A1, A2 2. Sample a topic z from qX 3. Sample a word w from a multinomial topic distribution fz Graphical Model a Author-Topic distributions Topic-Word distributions q f x Author z Topic w Word n D Learning • Observed • • Unknown • • • x, z : hidden variables Θ, f : unknown parameters Interested in: • • • W = observed words, A = sets of known authors p( x, z | W, A) p( θ , f | W, A) But exact inference is not tractable Step 1: Gibbs sampling of x and z a Marginalize over unknown parameters q f x Author z Topic w Word n D Step 2: MAP estimates of θ and f a q f x Author z Topic w Word Condition on particular samples of x and z n D Step 2: MAP estimates of θ and f a q Point estimates of unknown parameters f x Author z Topic w Word n D More Details on Learning • Gibbs sampling for x and z • • • Estimating θ and f • • • x and z sample -> point estimates non-informative Dirichlet priors for θ and f Computational Efficiency • • Typically run 2000 Gibbs iterations 1 iteration = full pass through all documents Learning is linear in the number of word tokens Predictions on new documents • can average over θ and f different runs) (from different samples, Gibbs Sampling • Need full conditional distributions for variables • The probability of assigning the current word i to topic j and author k given everything else: P( zi j , xi k | wi m, z i , x i , w i , a d ) WT Cmj WT C m' m' j V CmjAT a AT C j ' kj ' Ta number of times word w assigned to topic j number of times topic j assigned to author k Experiments on Real Data • Corpora • • • • CiteSeer: 160K abstracts, 85K authors NIPS: 1.7K papers, 2K authors Enron: 115K emails, 5K authors (sender) Pubmed:27K abstracts, 50K authors • Removed stop words; no stemming • Ignore word order, just use word counts • Processing time: Nips: 2000 Gibbs iterations 8 hours CiteSeer: 2000 Gibbs iterations 4 days Four example topics from CiteSeer (T=300) TOPIC 205 TOPIC 209 WORD PROB. WORD DATA 0.1563 MINING 0.0674 BAYESIAN ATTRIBUTES 0.0462 DISCOVERY TOPIC 289 PROB. TOPIC 10 WORD PROB. WORD PROB. RETRIEVAL 0.1179 QUERY 0.1848 0.0671 TEXT 0.0853 QUERIES 0.1367 PROBABILITY 0.0532 DOCUMENTS 0.0527 INDEX 0.0488 0.0401 CARLO 0.0309 INFORMATION 0.0504 DATA 0.0368 ASSOCIATION 0.0335 MONTE 0.0308 DOCUMENT 0.0441 JOIN 0.0260 LARGE 0.0280 CONTENT 0.0242 INDEXING 0.0180 KNOWLEDGE 0.0260 INDEXING 0.0205 PROCESSING 0.0113 DATABASES 0.0210 RELEVANCE 0.0159 AGGREGATE 0.0110 ATTRIBUTE 0.0188 CONDITIONAL 0.0229 COLLECTION 0.0146 ACCESS 0.0102 DATASETS 0.0165 PRIOR 0.0219 RELEVANT 0.0136 PRESENT 0.0095 AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. Han_J 0.0196 Friedman_N 0.0094 Oard_D 0.0110 Suciu_D 0.0102 Rastogi_R 0.0094 Heckerman_D 0.0067 Croft_W 0.0056 Naughton_J 0.0095 Zaki_M 0.0084 Ghahramani_Z 0.0062 Jones_K 0.0053 Levy_A 0.0071 Shim_K 0.0077 Koller_D 0.0062 Schauble_P 0.0051 DeWitt_D 0.0068 Ng_R 0.0060 Jordan_M 0.0059 Voorhees_E 0.0050 Wong_L 0.0067 Liu_B 0.0058 Neal_R 0.0055 Singhal_A 0.0048 Mannila_H 0.0056 Raftery_A 0.0054 Hawking_D 0.0048 Ross_K 0.0061 Brin_S 0.0054 Lukasiewicz_T 0.0053 Merkl_D 0.0042 Hellerstein_J 0.0059 Liu_H 0.0047 Halpern_J 0.0052 Allan_J 0.0040 Lenzerini_M 0.0054 Holder_L 0.0044 Muller_P 0.0048 Doermann_D 0.0039 Moerkotte_G 0.0053 PROBABILISTIC 0.0778 DISTRIBUTION 0.0257 INFERENCE 0.0253 PROBABILITIES 0.0253 Chakrabarti_K 0.0064 More CiteSeer Topics TOPIC 10 TOPIC 209 WORD TOPIC 87 WORD PROB. SPEECH 0.1134 RECOGNITION 0.0349 BAYESIAN 0.0671 TOPIC 20 PROB. WORD PROB. WORD PROB. PROBABILISTIC 0.0778 USER 0.2541 STARS 0.0164 INTERFACE 0.1080 OBSERVATIONS 0.0150 WORD 0.0295 PROBABILITY 0.0532 USERS 0.0788 SOLAR 0.0150 SPEAKER 0.0227 CARLO 0.0309 INTERFACES 0.0433 MAGNETIC 0.0145 ACOUSTIC 0.0205 MONTE 0.0308 GRAPHICAL 0.0392 RAY 0.0144 RATE 0.0134 DISTRIBUTION 0.0257 INTERACTIVE 0.0354 EMISSION 0.0134 SPOKEN 0.0132 INFERENCE 0.0253 INTERACTION 0.0261 GALAXIES 0.0124 SOUND 0.0127 VISUAL 0.0203 OBSERVED 0.0108 TRAINING 0.0104 CONDITIONAL 0.0229 DISPLAY 0.0128 SUBJECT 0.0101 MUSIC 0.0102 PRIOR 0.0219 MANIPULATION 0.0099 STAR 0.0087 AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. Waibel_A 0.0156 Friedman_N 0.0094 Shneiderman_B 0.0060 Linsky_J 0.0143 Gauvain_J 0.0133 Heckerman_D 0.0067 Rauterberg_M 0.0031 Falcke_H 0.0131 Lamel_L 0.0128 Ghahramani_Z 0.0062 Lavana_H 0.0024 Mursula_K 0.0089 Woodland_P 0.0124 Koller_D 0.0062 Pentland_A 0.0021 Butler_R 0.0083 Ney_H 0.0080 Jordan_M 0.0059 Myers_B 0.0021 Bjorkman_K 0.0078 Hansen_J 0.0078 Neal_R 0.0055 Minas_M 0.0021 Knapp_G 0.0067 Renals_S 0.0072 Raftery_A 0.0054 Burnett_M 0.0021 Kundu_M 0.0063 Noth_E 0.0071 Lukasiewicz_T 0.0053 Winiwarter_W 0.0020 Christensen-J 0.0059 Boves_L 0.0070 Halpern_J 0.0052 Chang_S 0.0019 Cranmer_S 0.0055 Young_S 0.0069 Muller_P 0.0048 Korvemaker_B 0.0019 Nagar_N 0.0050 PROBABILITIES 0.0253 Some topics relate to generic word usage TOPIC 273 WORD PROB. METHOD 0.5851 METHODS 0.3321 APPLIED 0.0268 APPLYING 0.0056 ORIGINAL 0.0054 DEVELOPED 0.0051 PROPOSE 0.0046 COMBINES 0.0034 PRACTICAL 0.0031 APPLY 0.0029 AUTHOR PROB. Yang_T 0.0014 Zhang_J 0.0014 Loncaric_S 0.0014 Liu_Y 0.0013 Benner_P 0.0013 Faloutsos_C 0.0013 Cortadella_J 0.0012 Paige_R 0.0011 Tai_X 0.0011 Lee_J 0.0011 What can the Model be used for? • We can analyze our document set through the “topic lens” • Applications • Queries • Who writes on this topic? • • • • • • e.g., finding experts or reviewers in a particular area What topics does this person do research on? Discovering trends over time Detecting unusual papers and authors Interactive browsing of a digital library via topics Parsing documents (and parts of documents) by topic and more….. Some likely topics per author (CiteSeer) • Author = Andrew McCallum, U Mass: • • • • Topic 1: classification, training, generalization, decision, data,… Topic 2: learning, machine, examples, reinforcement, inductive,….. Topic 3: retrieval, text, document, information, content,… Author = Hector Garcia-Molina, Stanford: - Topic 1: query, index, data, join, processing, aggregate…. - Topic 2: transaction, concurrency, copy, permission, distributed…. - Topic 3: source, separation, paper, heterogeneous, merging….. • Author = Paul Cohen, USC/ISI: - Topic 1: agent, multi, coordination, autonomous, intelligent…. - Topic 2: planning, action, goal, world, execution, situation… - Topic 3: human, interaction, people, cognitive, social, natural…. Temporal patterns in topics: hot and cold topics • We have CiteSeer papers from 1986-2002 • For each year, calculate the fraction of words assigned to each topic • -> a time-series for topics • • Hot topics become more prevalent Cold topics become less prevalent 4 2 x 10 Document and Word Distribution by Year in the UCI CiteSeer Data 5 x 10 14 1.8 12 1.6 Number of Documents 1.2 8 1 6 0.8 0.6 4 0.4 2 0.2 0 1986 1988 1990 1992 1994 Year 1996 1998 2000 2002 0 Number of Words 10 1.4 0.012 CHANGING TRENDS IN COMPUTER SCIENCE 0.01 WWW Topic Probability 0.008 0.006 INFORMATION RETRIEVAL 0.004 0.002 0 1990 1992 1994 1996 Year 1998 2000 2002 0.012 CHANGING TRENDS IN COMPUTER SCIENCE 0.01 Topic Probability 0.008 OPERATING SYSTEMS WWW PROGRAMMING LANGUAGES 0.006 INFORMATION RETRIEVAL 0.004 0.002 0 1990 1992 1994 1996 Year 1998 2000 2002 -3 8 x 10 HOT TOPICS: MACHINE LEARNING/DATA MINING 7 Topic Probability 6 CLASSIFICATION 5 REGRESSION 4 DATA MINING 3 2 1 1990 1992 1994 1996 Year 1998 2000 2002 -3 5.5 x 10 BAYES MARCHES ON 5 BAYESIAN Topic Probability 4.5 PROBABILITY 4 3.5 STATISTICAL PREDICTION 3 2.5 2 1.5 1990 1992 1994 1996 Year 1998 2000 2002 0.012 INTERESTING "TOPICS" 0.01 FRENCH WORDS: LA, LES, UNE, NOUS, EST Topic Probability 0.008 0.006 DARPA 0.004 0.002 0 1990 MATH SYMBOLS: GAMMA, DELTA, OMEGA 1992 1994 1996 Year 1998 2000 2002 Four example topics from NIPS (T=100) TOPIC 19 TOPIC 24 TOPIC 29 WORD PROB. WORD PROB. WORD LIKELIHOOD 0.0539 RECOGNITION 0.0400 MIXTURE 0.0509 CHARACTER 0.0336 POLICY EM 0.0470 CHARACTERS 0.0250 DENSITY 0.0398 TANGENT GAUSSIAN 0.0349 ESTIMATION 0.0314 DIGITS LOG 0.0263 MAXIMUM TOPIC 87 PROB. WORD PROB. KERNEL 0.0683 0.0371 SUPPORT 0.0377 ACTION 0.0332 VECTOR 0.0257 0.0241 OPTIMAL 0.0208 KERNELS 0.0217 HANDWRITTEN 0.0169 ACTIONS 0.0208 SET 0.0205 0.0159 FUNCTION 0.0178 SVM 0.0204 IMAGE 0.0157 REWARD 0.0165 SPACE 0.0188 0.0254 DISTANCE 0.0153 SUTTON 0.0164 MACHINES 0.0168 PARAMETERS 0.0209 DIGIT 0.0149 AGENT 0.0136 ESTIMATE 0.0204 HAND 0.0126 DECISION 0.0118 MARGIN 0.0151 AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. AUTHOR PROB. Tresp_V 0.0333 Simard_P 0.0694 Singh_S 0.1412 Smola_A 0.1033 Singer_Y 0.0281 Martin_G 0.0394 Barto_A 0.0471 Scholkopf_B 0.0730 Jebara_T 0.0207 LeCun_Y 0.0359 Sutton_R 0.0430 Burges_C 0.0489 Ghahramani_Z 0.0196 Denker_J 0.0278 Dayan_P 0.0324 Vapnik_V 0.0431 Ueda_N 0.0170 Henderson_D 0.0256 Parr_R 0.0314 Chapelle_O 0.0210 Jordan_M 0.0150 Revow_M 0.0229 Dietterich_T 0.0231 Cristianini_N 0.0185 Roweis_S 0.0123 Platt_J 0.0226 Tsitsiklis_J 0.0194 Ratsch_G 0.0172 Schuster_M 0.0104 Keeler_J 0.0192 Randlov_J 0.0167 Laskov_P 0.0169 Xu_L 0.0098 Rashid_M 0.0182 Bradtke_S 0.0161 Tipping_M 0.0153 Saul_L 0.0094 Sackinger_E 0.0132 Schwartz_A 0.0142 Sollich_P 0.0141 REINFORCEMENT 0.0411 REGRESSION 0.0155 NIPS: support vector topic NIPS: neural network topic Pennsylvania Gazette Data (courtesy of David Newman, UC Irvine) Enron email data 500,000 emails 5000 authors 1999-2002 Enron email topics TOPIC 36 TOPIC 72 TOPIC 23 TOPIC 54 WORD PROB. WORD PROB. WORD PROB. FEEDBACK 0.0781 PROJECT 0.0514 FERC 0.0554 PERFORMANCE 0.0462 PLANT 0.028 MARKET 0.0328 PROCESS 0.0455 COST 0.0182 ISO 0.0226 PEP 0.0446 MANAGEMENT 0.03 UNIT 0.0166 ORDER COMPLETE 0.0205 FACILITY 0.0165 QUESTIONS 0.0203 SITE 0.0136 CONSTRUCTION 0.0169 WORD PROB. ENVIRONMENTAL 0.0291 AIR 0.0232 MTBE 0.019 EMISSIONS 0.017 0.0212 CLEAN 0.0143 FILING 0.0149 EPA 0.0133 COMMENTS 0.0116 PENDING 0.0129 COMMISSION 0.0215 SELECTED 0.0187 PROJECTS 0.0117 PRICE 0.0116 SAFETY 0.0104 COMPLETED 0.0146 CONTRACT 0.011 CALIFORNIA 0.0110 WATER 0.0092 SYSTEM 0.0146 UNITS 0.0106 FILED 0.0110 GASOLINE 0.0086 SENDER PROB. SENDER PROB. SENDER PROB. SENDER PROB. perfmgmt 0.2195 *** 0.0288 *** 0.0532 *** 0.1339 perf eval process 0.0784 *** 0.022 *** 0.0454 *** 0.0275 enron announcements 0.0489 *** 0.0123 *** 0.0384 *** 0.0205 *** 0.0089 *** 0.0111 *** 0.0334 *** 0.0166 *** 0.0048 *** 0.0108 *** 0.0317 *** 0.0129 Non-work Topics… TOPIC 66 TOPIC 182 TOPIC 113 TOPIC 109 WORD PROB. WORD PROB. WORD PROB. WORD PROB. HOLIDAY 0.0857 TEXANS 0.0145 GOD 0.0357 AMAZON 0.0312 PARTY 0.0368 WIN 0.0143 LIFE 0.0272 GIFT 0.0226 YEAR 0.0316 FOOTBALL 0.0137 MAN 0.0116 CLICK 0.0193 SEASON 0.0305 FANTASY 0.0129 PEOPLE 0.0103 SAVE 0.0147 COMPANY 0.0255 SPORTSLINE 0.0129 CHRIST 0.0092 SHOPPING 0.0140 CELEBRATION 0.0199 PLAY 0.0123 FAITH 0.0083 OFFER 0.0124 ENRON 0.0198 TEAM 0.0114 LORD 0.0079 HOLIDAY 0.0122 TIME 0.0194 GAME 0.0112 JESUS 0.0075 RECEIVE 0.0102 RECOGNIZE 0.019 SPORTS 0.011 SPIRITUAL 0.0066 SHIPPING 0.0100 MONTH 0.018 GAMES 0.0109 VISIT 0.0065 FLOWERS 0.0099 SENDER PROB. SENDER PROB. SENDER PROB. SENDER PROB. chairman & ceo 0.131 cbs sportsline com 0.0866 crosswalk com 0.2358 amazon com 0.1344 *** 0.0102 houston texans 0.0267 wordsmith 0.0208 jos a bank 0.0266 *** 0.0046 houstontexans 0.0203 *** 0.0107 sharperimageoffers 0.0136 *** 0.0022 sportsline rewards 0.0175 travelocity com 0.0094 general announcement 0.0017 pro football 0.0136 barnes & noble com 0.0089 doctor dictionary 0.0101 *** 0.0061 Topical Topics TOPIC 18 TOPIC 22 TOPIC 114 WORD PROB. WORD PROB. WORD PROB. TOPIC 194 WORD PROB. POWER 0.0915 STATE 0.0253 COMMITTEE 0.0197 LAW 0.0380 CALIFORNIA 0.0756 PLAN 0.0245 BILL 0.0189 TESTIMONY 0.0201 ELECTRICITY 0.0331 CALIFORNIA 0.0137 HOUSE 0.0169 ATTORNEY 0.0164 UTILITIES 0.0253 POLITICIAN Y 0.0137 SETTLEMENT 0.0131 PRICES 0.0249 RATE 0.0131 LEGAL 0.0100 MARKET 0.0244 EXHIBIT 0.0098 PRICE 0.0207 SOCAL 0.0119 CONGRESS 0.0112 CLE 0.0093 UTILITY 0.0140 POWER 0.0114 PRESIDENT 0.0105 SOCALGAS 0.0093 CUSTOMERS 0.0134 BONDS 0.0109 METALS 0.0091 ELECTRIC 0.0120 MOU 0.0107 DC 0.0093 PERSON Z 0.0083 SENDER PROB. SENDER PROB. SENDER PROB. SENDER PROB. *** 0.1160 *** 0.0395 *** 0.0696 *** 0.0696 *** 0.0518 *** 0.0337 *** 0.0453 *** 0.0453 *** 0.0284 *** 0.0295 *** 0.0255 *** 0.0255 *** 0.0272 *** 0.0251 *** 0.0173 *** 0.0173 *** 0.0266 *** 0.0202 *** 0.0317 *** 0.0317 BANKRUPTCY 0.0126 WASHINGTON 0.0140 SENATE 0.0135 POLITICIAN X 0.0114 LEGISLATION 0.0099 Enron email: California Energy Crisis Message-ID: <21993848.1075843452041.JavaMail.evans@thyme> Date: Fri, 27 Apr 2001 09:25:00 -0700 (PDT) Subject: California Update 4/27/01 …………. FERC price cap decision reflects Bush political and economic objectives. Politically, Bush is determined to let the crisis blame fall on Davis; from an economic perspective, he is unwilling to create disincentives for new power generation The FERC decision is a holding move by the Bush administration that looks like action, but is not. Rather, it allows the situation in California to continue to develop virtually unabated. The political strategy appears to allow the situation to deteriorate to the point where Davis cannot escape shouldering the blame. Once they are politically inoculated, the Administration can begin to look at regional solutions. Moreover, the Administration has already made explicit (and will certainly restate in the forthcoming Cheney commission report) its opposition to stronger price caps ….. Enron email: US Senate Bill Message-ID: <23926374.1075846156491.JavaMail.evans@thyme> Date: Thu, 15 Jun 2000 08:59:00 -0700 (PDT) From: *************** To: *************** Subject: Senate Commerce Committee Pipeline Safety Markup The Senate Commerce Committee held a markup today where Senator John McCain's (R-AZ) pipeline safety legislation, S. 2438, was approved. The overall outcome was not unexpected -- the final legislation contained several provisions that went a little bit further than Enron and INGAA would have liked, …………… 2) McCain amendment to Section 13 (b) (on operator assistance investigations) -- Approved by voice vote. ……. 3) Sen. John Kerry (D-MA) Amendment on Enforcement -- Approved by voice vote. Another confusing vote, in which many members did not understand the changes being made, but agreed to it on the condition that clarifications be made before Senate floor action. Late last night, Enron led a group including companies from INGAA and AGA in providing comments to Senator Kerry which caused him to make substantial changes to his amendment before it was voted on at markup, including dropping provisions allowing citizen suits and other troubling issues. In the end, the amendment that passed was acceptable to industry. Enron email: political donations 10/16/2000 04:41 PM Subject: Ashcroft Senate Campaign Request We have received a request from the Ashcroft Senate campaign for $10,000 in soft money. This is the race where Governor Carnahan is the challenger. Enron PAC has contributed $10,000 and Enron has also contributed $15,000 soft money in this campaign to Senator Ashcroft. Ken Lay has been personally interested in the Ashcroft campaign. Our polling information is that Ashcroft is currently leading 43 to 38 with an undecided of 19 percent. ………………………………………………………………………………………………………………………………… Message-ID: <2546687.1075846182883.JavaMail.evans@thyme> Date: Mon, 16 Oct 2000 14:13:00 -0700 (PDT) From: ***** To: ***** Subject: Re: Ashcroft Senate Campaign Request If you can cover it I would say yes. It's a key race and we have been close to Ashcroft for years. Let's make sure he knows we gave it.... we need to follow up with him. Last time I talked to him he basically recited the utilities' position on electric restructuring. Let's make it clear that we want to talk right after the election. PubMed-Query Topics TOPIC 188 WORD BIOLOGICAL TOPIC 63 PROB. WORD TOPIC 32 TOPIC 85 PROB. WORD PROB. 0.1002 PLAGUE 0.0296 BOTULISM 0.1014 AGENTS 0.0889 MEDICAL 0.0287 BOTULINUM 0.0888 THREAT 0.0396 CENTURY 0.0280 BIOTERRORISM 0.0348 MEDICINE 0.0266 0.0669 INHIBITORS 0.0366 0.0340 INHIBITOR 0.0220 0.0245 PLASMA 0.0204 0.0203 POTENTIAL 0.0305 EPIDEMIC 0.0106 ATTACK 0.0290 GREAT 0.0091 CHEMICAL 0.0288 WARFARE 0.0219 CHINESE 0.0083 ANTHRAX 0.0146 FRENCH 0.0082 PARALYSIS 0.0124 PROB. AUTHOR PROB. AUTHOR 0.0563 TYPE HISTORY PROB. PROTEASE 0.0916 0.0877 0.0328 AUTHOR HIV PROB. TOXIN WEAPONS EPIDEMICS 0.0090 WORD CLOSTRIDIUM INFANT NEUROTOXIN AMPRENAVIR 0.0527 0.0184 APV 0.0169 BONT 0.0167 DRUG 0.0169 FOOD 0.0134 RITONAVIR 0.0164 IMMUNODEFICIENCY0.0150 AUTHOR PROB. Atlas_RM 0.0044 Károly_L 0.0089 Hatheway_CL 0.0254 Sadler_BM 0.0129 Tegnell_A 0.0036 Jian-ping_Z 0.0085 Schiavo_G 0.0141 Tisdale_M 0.0118 Aas_P 0.0036 Sabbatani_S 0.0080 Sugiyama_H 0.0111 Lou_Y 0.0069 Arnon_SS 0.0108 Stein_DS 0.0069 Simpson_LL 0.0093 Haubrich_R 0.0061 Greenfield_RA Bricaire_F 0.0032 0.0032 Theodorides_J 0.0045 Bowers_JZ 0.0045 PubMed-Query Topics TOPIC 40 WORD TOPIC 89 PROB. WORD ANTHRACIS 0.1627 CHEMICAL 0.0578 ANTHRAX 0.1402 SARIN 0.0454 BACILLUS 0.1219 AGENT 0.0332 SPORES 0.0614 GAS 0.0312 CEREUS 0.0382 SPORE 0.0274 THURINGIENSIS 0.0177 AGENTS VX PROB. ENZYME 0.0938 MUSTARD 0.0639 ACTIVE 0.0429 EXPOSURE 0.0444 SM 0.0361 0.0264 SKIN 0.0208 REACTION 0.0225 0.0232 EXPOSED 0.0185 AGENT 0.0140 0.0124 TOXIC 0.0197 PRODUCTS 0.0170 PROB. EPIDERMAL DAMAGE AUTHOR Mock_M 0.0203 Minami_M 0.0093 Phillips_AP 0.0125 Hoskin_FC 0.0092 Smith_WJ Welkos_SL 0.0083 Benschop_HP 0.0090 Turnbull_PC 0.0071 Raushel_FM 0.0084 Wild_JR SITE 0.0399 0.0308 STERNE 0.0067 0.0353 SUBSTRATE ENZYMES 0.0220 Fouet_A PROB. 0.0657 NERVE AUTHOR WORD 0.0343 ACID PROB. HD PROB. SULFUR 0.0152 AUTHOR WORD 0.0268 SUBTILIS INHALATIONAL 0.0104 TOPIC 178 TOPIC 104 0.0075 0.0129 0.0116 PROB. Monteiro-Riviere_NA 0.0284 SUBSTRATES 0.0201 FOLD CATALYTIC RATE AUTHOR 0.0176 0.0154 0.0148 PROB. Masson_P 0.0166 0.0219 Kovach_IM 0.0137 Lindsay_CD 0.0214 Schramm_VL 0.0094 Sawyer_TW 0.0146 Meier_HL 0.0139 Barak_D Broomfield_CA 0.0076 0.0072 PubMed-Query Author Model • P. M. Lindeque, South Africa TOPICS • Topic • Topic • Topic • Topic • Topic 1: 2: 3: 4: 5: water, natural, foci, environmental, source anthracis, anthrax, bacillus, spores, cereus species, sp, isolated, populations, tested epidemic, occurred, outbreak, persons positive, samples, negative, tested prob=0.33 prob=0.13 prob=0.06 prob=0.06 prob=0.05 PAPERS • • • • Vaccine-induced protections against anthrax in cheetah Airborne movement of anthrax spores from carcass sites in the Etosha National Park Ecology and epidemiology of anthrax in the Etosha National Park Serology and anthrax in humans, livestock, and wildlife PubMed-Query: Topics by Country ISRAEL, n=196 authors TOPIC 188 p=0.049 BIOLOGICAL AGENTS THREAT BIOTERRORISM WEAPONS POTENTIAL ATTACK CHEMICAL WARFARE ANTHRAX TOPIC 6 p=0.045 INJURY INJURIES WAR TERRORIST MILITARY MEDICAL VICTIMS TRAUMA BLAST VETERANS TOPIC 133 p=0.043 HEALTH PUBLIC CARE SERVICES EDUCATION NATIONAL COMMUNITY INFORMATION PREVENTION LOCAL TOPIC 104 p=0.027 HD TOPIC 7 p=0.026 RENAL HFRS VIRUS SYNDROME FEVER TOPIC 79 p=0.024 FINDINGS CHEST CT LUNG CLINICAL HEMORRHAGIC PULMONARY CONCLUSIONS BACKGROUND HANTAVIRUS HANTAAN ABNORMAL STUDY TOPIC 197 p=0.023 PATIENTS HOSPITAL PATIENT ADMITTED TWENTY HOSPITALIZED CONSECUTIVE INVOLVEMENT OBJECTIVES PROSPECTIVELY MUSTARD EXPOSURE SM SULFUR SKIN EXPOSED AGENT EPIDERMAL DAMAGE TOPIC 159 p=0.025 EMERGENCY RESPONSE MEDICAL PREPAREDNESS DISASTER MANAGEMENT TRAINING EVENTS BIOTERRORISM LOCAL CHINA, n=1775 authors TOPIC 177 p=0.045 SARS RESPIRATORY SEVERE COV SYNDROME ACUTE CORONAVIRUS CHINA TOPIC 49 p=0.024 METHODS RESULTS CONCLUSION OBJECTIVE POTENTIAL ATTACK CHEMICAL WARFARE ANTHRAX MEDICAL VICTIMS TRAUMA BLAST VETERANS NATIONAL COMMUNITY INFORMATION PREVENTION LOCAL SKIN TOPIC 7 p=0.026 RENAL HFRS VIRUS SYNDROME FEVER TOPIC 79 p=0.024 FINDINGS CHEST CT LUNG CLINICAL HEMORRHAGIC PULMONARY CONCLUSIONS BACKGROUND HANTAVIRUS HANTAAN PUUMALA ABNORMAL STUDY INVOLVEMENT COMMON OBJECTIVES INVESTIGATE HANTAVIRUSES RADIOGRAPHIC DESIGN EXPOSED MANAGEMENT TRAINING EVENTS BIOTERRORISM LOCAL PubMed-Query: Topics by Country EPIDERMAL AGENT DAMAGE CHINA, n=1775 authors TOPIC 177 p=0.045 SARS RESPIRATORY SEVERE COV SYNDROME ACUTE CORONAVIRUS CHINA KONG PROBABLE TOPIC 49 p=0.024 METHODS RESULTS CONCLUSION OBJECTIVE TOPIC 197 p=0.023 PATIENTS HOSPITAL PATIENT ADMITTED TWENTY HOSPITALIZED CONSECUTIVE PROSPECTIVELY DIAGNOSED PROGNOSIS 3 of 300 example topics (TASA) TOPIC 82 WORD TOPIC 77 TOPIC 166 PROB. WORD PROB. WORD PROB. PLAY 0.0601 MUSIC 0.0903 PLAY 0.1358 PLAYS 0.0362 DANCE 0.0345 BALL 0.1288 STAGE 0.0305 SONG 0.0329 GAME 0.0654 MOVIE 0.0288 PLAY 0.0301 PLAYING 0.0418 SCENE 0.0253 SING 0.0265 HIT 0.0324 ROLE 0.0245 SINGING 0.0264 PLAYED 0.0312 AUDIENCE 0.0197 BAND 0.0260 BASEBALL 0.0274 THEATER 0.0186 PLAYED 0.0229 GAMES 0.0250 PART 0.0178 SANG 0.0224 BAT 0.0193 FILM 0.0148 SONGS 0.0208 RUN 0.0186 ACTORS 0.0145 DANCING 0.0198 THROW 0.0158 DRAMA 0.0136 PIANO 0.0169 BALLS 0.0154 REAL 0.0128 PLAYING 0.0159 TENNIS 0.0107 CHARACTER 0.0122 RHYTHM 0.0145 HOME 0.0099 ACTOR 0.0116 ALBERT 0.0134 CATCH 0.0098 ACT 0.0114 MUSICAL 0.0134 FIELD 0.0097 MOVIES 0.0114 DRUM 0.0129 PLAYER 0.0096 ACTION 0.0101 GUITAR 0.0098 FUN 0.0092 0.0097 BEAT 0.0097 THROWING 0.0083 0.0094 BALLET 0.0096 PITCHER 0.0080 SET SCENES Word sense disambiguation (numbers & colors topic assignments) A Play082 is written082 to be performed082 on a stage082 before a live093 audience082 or before motion270 picture004 or television004 cameras004 ( for later054 viewing004 by large202 audiences082). A Play082 is written082 because playwrights082 have something ... He was listening077 to music077 coming009 from a passing043 riverboat. The music077 had already captured006 his heart157 as well as his ear119. It was jazz077. Bix beiderbecke had already had music077 lessons077. He wanted268 to play077 the cornet. And he wanted268 to play077 jazz077... Jim296 plays166 the game166. Jim296 likes081 the game166 for one. The game166 book254 helps081 jim296. Don180 comes040 into the house038. Don180 and jim296 read254 the game166 book254. The boys020 see a game166 for two. The two boys020 play166 the game166.... Finding unusual papers for an author Perplexity = exp [entropy (words | model) ] = measure of surprise for model on data Can calculate perplexity of unseen documents, conditioned on the model for a particular author Papers and Perplexities: M_Jordan Factorial Hidden Markov Models 687 Learning from Incomplete Data 702 Papers and Perplexities: M_Jordan Factorial Hidden Markov Models 687 Learning from Incomplete Data 702 MEDIAN PERPLEXITY 2567 Papers and Perplexities: M_Jordan Factorial Hidden Markov Models 687 Learning from Incomplete Data 702 MEDIAN PERPLEXITY 2567 Defining and Handling Transient Fields in Pjama 14555 An Orthogonally Persistent JAVA 16021 Papers and Perplexities: T_Mitchell Explanation-based Learning for Mobile Robot Perception 1093 Learning to Extract Symbolic Knowledge from the Web 1196 Papers and Perplexities: T_Mitchell Explanation-based Learning for Mobile Robot Perception 1093 Learning to Extract Symbolic Knowledge from the Web 1196 MEDIAN PERPLEXITY 2837 Papers and Perplexities: T_Mitchell Explanation-based Learning for Mobile Robot Perception 1093 Learning to Extract Symbolic Knowledge from the Web 1196 MEDIAN PERPLEXITY 2837 Text Classification from Labeled and Unlabeled Documents using EM 3802 A Method for Estimating Occupational Radiation Dose… 8814 Author prediction with CiteSeer • Task: predict (single) author of new CiteSeer abstracts • Results: • • For 33% of documents, author guessed correctly Median rank of true author = 26 (out of 85,000) Who wrote what? Test of model: 1) artificially combine abstracts from different authors 2) check whether assignment is to correct original author A method1 is described which like the kernel1 trick1 in support1 vector1 machines1 SVMs1 lets us generalize distance1 based2 algorithms to operate in feature1 spaces usually nonlinearly related to the input1 space This is done by identifying a class of kernels1 which can be represented as norm1 based2 distances1 in Hilbert spaces It turns1 out that common kernel1 algorithms such as SVMs1 and kernel1 PCA1 are actually really distance1 based2 algorithms and can be run2 with that class of kernels1 too As well as providing1 a useful new insight1 into how these algorithms work the present2 work can form the basis1 for conceiving new algorithms This paper presents2 a comprehensive approach for model2 based2 diagnosis2 which includes proposals for characterizing and computing2 preferred2 diagnoses2 assuming that the system2 description2 is augmented with a system2 structure2 a directed2 graph2 explicating the interconnections between system2 components2 Specifically we first introduce the notion of a consequence2 which is a syntactically2 unconstrained propositional2 sentence2 that characterizes all consistency2 based2 diagnoses2 and show2 that standard2 characterizations of diagnoses2 such as minimal conflicts1 correspond to syntactic2 variations1 on a consequence2 Second we propose a new syntactic2 variation on the consequence2 known as negation2 normal form NNF and discuss its merits compared to standard variations Third we introduce a basic algorithm2 for computing consequences in NNF given a structured system2 description We show that if the system2 structure2 does not contain cycles2 then there is always a linear size2 consequence2 in NNF which can be computed in linear time2 For arbitrary1 system2 structures2 we show a precise connection between the complexity2 of computing2 consequences and the topology of the underlying system2 structure2 Finally we present2 an algorithm2 that enumerates2 the preferred2 diagnoses2 characterized by a consequence2 The algorithm2 is shown1 to take linear time2 in the size2 of the consequence2 if the preference criterion1 satisfies some general conditions Written by (1) Scholkopf_B Written by (2) Darwiche_A The Author-Topic Browser Querying on author Pazzani_M Querying on topic relevant to author Querying on document written by author Stability of Topics • Content of topics is arbitrary across runs of model (e.g., topic #1 is not the same across runs) • However, • • • Majority of topics are stable over processing time Majority of topics can be aligned across runs Topics appear to represent genuine structure in data Comparing NIPS topics from the same Markov chain 10 16 20 14 30 12 40 10 50 t1 ANALOG CIRCUIT CHIP CURRENT VOLTAGE VLSI INPUT OUTPUT CIRCUITS FIGURE PULSE SYNAPSE SILICON CMOS MEAD .043 .040 .034 .025 .023 .022 .018 .018 .015 .014 .012 .011 .011 .009 .008 t2 ANALOG CIRCUIT CHIP VOLTAGE CURRENT VLSI OUTPUT INPUT CIRCUITS PULSE SYNAPSE SILICON FIGURE CMOS GATE .044 .040 .037 .024 .023 .023 .022 .019 .015 .012 .012 .011 .010 .009 .009 8 60 KL distance Re-ordered topics at t2=2000 BEST KL = 0.54 70 80 90 100 20 40 60 topics at t1=1000 80 100 6 4 2 WORST KL = 4.78 t1 FEEDBACK ADAPTATION CORTEX REGION FIGURE FUNCTION BRAIN COMPUTATIONAL FIBER FIBERS ELECTRIC BOWER FISH SIMULATIONS CEREBELLAR .040 .034 .025 .016 .015 .014 .013 .013 .012 .011 .011 .010 .010 .009 .009 t2 ADAPTATION FIGURE SIMULATION GAIN EFFECTS FIBERS COMPUTATIONAL EXPERIMENT FIBER SITES RESULTS EXPERIMENTS ELECTRIC SITE NEURO .051 .033 .026 .025 .016 .014 .014 .014 .013 .012 .012 .012 .011 .009 .009 Gibbs Sampler Stability (NIPS data) New Applications/ Future Work • Reviewer Recommendation • • Change Detection/Monitoring • • • Which authors are on the leading edge of new topics? Characterize the “topic trajectory” of this author over time Author Identification • • “Find reviewers for this set of grant proposals who are active in relevant topics and have no conflicts of interest” Who wrote this document? Incorporation of stylistic information (stylometry) Additions to the model • • • Modeling citations Modeling topic persistence in a document ….. Summary • Topic models are a versatile probabilistic model for text data • Author-topic models are a very useful generalization • • Equivalent to topics model with 1 different author per document Learning has linear time complexity • • Gibbs sampling is practical on very large data sets Experimental results • • On multiple large complex data sets, the resulting topic-word and author-topic models are quite interpretable Results appear stable relative to sampling • Numerous possible applications…. • Current model is quite simple….many extensions possible Further Information • www.datalab.uci.edu • • • Steyvers et al, ACM SIGKDD 2004 Rosen-Zvi et al, UAI 2004 www.datalab.uci.edu/author-topic • • JAVA demo of online browser additional tables and results BACKUP SLIDES Author-Topics Model a Author-Topic distributions Topic-Word distributions q f x Author z Topic w Word n D Topics Model: Topics, no Authors q Topic-Word distributions f x Author z Topic w Word Document-Topic Distributions n D Author Model: Authors, no Topics a Author-Word Distributions a Author w Word f n D Comparison Results 14000 • Train models on part of a new document and predict remaining words • Without having seen any words from new document, authortopic information helps in predicting words from that document • Topics model is more flexible in adapting to new document after observing a number of words Author model 10000 8000 6000 4000 24 6 10 25 64 16 4 Author-Topics 1 2000 Topics model 0 Perplexity (new words) 12000 # Observed words in document Latent Semantic Analysis (Landauer & Dumais, 1997) word/document counts high dimensional space Doc1 Doc2 Doc3 … RIVER 34 0 0 STREAM 12 0 0 BANK 5 19 6 MONEY … 0 … 16 … 1 … SVD STREAM RIVER BANK Words with similar co-occurence patterns across documents end up with similar vector representations MONEY Topics LSA • • • • Geometric • Probabilistic • Fully generative • Topic dimensions are often interpretable • Modular language of bayes nets/ graphical models Partially generative Dimensions are not interpretable Little flexibility to expand model (e.g., syntax) Modeling syntax and semantics (Steyvers, Griffiths, Blei, and Tenenbaum) semantics: probabilistic topics q long-range, document specific, dependencies short-range dependencies constant across all documents z z z w w w x x x syntax: 3rd order HMM