web characteristics - Professor C. Lee Giles

Web characteristics, broadly defined

Thanks to C. Manning, P. Raghavan, H. Schütze

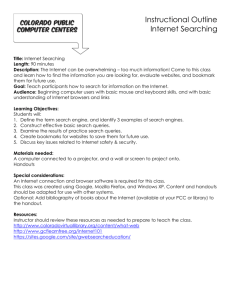

What we have covered

What is IR

Evaluation

Tokenization and properties of text

Web crawling

Vector methods

Measures of similarity

Indexing

Inverted files

Today:

Web characteristics

Web vs classic IR

Web advertising

Web as a graph – size

SEO

Web spam

Dup detection

A Typical Web Search Engine

Figure: block diagram with components Users, Interface, Query Engine, Index, Indexer, Crawler, and Web, plus the label “Characteristics”.

What Is the World Wide Web?

The world wide web (web) is a network of information resources, most of which are published by humans.

– World’s largest publishing mechanism

The web relies on three mechanisms to make these resources readily available to the widest possible audience:

– A uniform naming scheme for locating resources on the web (e.g., URIs).

• Find it

– Protocols, for access to named resources over the web (e.g., HTTP).

• How to get there

– Hypertext, for easy navigation among resources (e.g., HTML).

• Where to go from there.

The web is an opt-out, not an opt-in, system

– Your information is available to all; you have to protect it.

DMCA (Digital Millennium Copyright Act)

– Only to those who sign it.

Internet vs. Web

Internet:

• Internet is a more general term

• Includes physical aspect of underlying networks and mechanisms such as email, FTP, HTTP…

Web:

• HTTP

• Associated with information stored on the Internet

• Refers to a broader class of networks, i.e. Web of English Literature

Both Internet and web are networks

Networks vs Graphs

Examples?

Old internet network

Internet Technologies

Web Standards

Internet Engineering Task Force (IETF)

http://www.ietf.org/

Founded 1986

Request For Comments (RFC) at

http://www.ietf.org/rfc.html

World Wide Web Consortium (W3C)

http://www.w3.org

Founded 1994 by Tim Berners-Lee

Publishes technical reports and recommendations

Internet Technologies

Web Design Principles

Interoperability: Web languages and

protocols must be compatible with one

another independent of hardware and

software.

Evolution: The Web must be able to

accommodate future technologies.

Encourages simplicity, modularity and

extensibility.

Decentralization: Facilitates scalability and

robustness.

Languages of the WWW

Markup languages

A markup language combines text and extra

information about the text. The extra information,

for example about the text's structure or

presentation, is expressed using markup, which is

intermingled with the primary text.

The best-known markup language in modern

use is HTML (Hypertext Markup Language), one

of the foundations of the World Wide Web.

Historically, markup was (and is) used in the

publishing industry in the communication of

printed work between authors, editors, and

printers.

Without search engines the web wouldn’t scale (there would be no web)

1. No incentive in creating content unless it can be easily found – other finding methods haven’t kept pace (taxonomies, bookmarks, etc)

2. The web is both a technology artifact and a social environment

– “The Web has become the ‘new normal’ in the American way of life; those who don’t go online constitute an ever-shrinking minority.” [Pew Foundation report, January 2005]

3. Search engines make aggregation of interest possible:

– Create incentives for very specialized niche players

• Economical – specialized stores, providers, etc

• Social – narrow interests, specialized communities, etc

4. The acceptance of search interaction makes “unlimited selection” stores possible:

– Amazon, Netflix, etc

5. Search turned out to be the best mechanism for advertising on the web, a $15+ B industry (2011)

– Growing very fast but entire US advertising industry $250B – huge room to grow

– Sponsored search marketing is about $10B

Classical IR vs. Web IR

Basic assumptions of

Classical Information Retrieval

Corpus: Fixed document collection

Goal: Retrieve documents with information

content that is relevant to user’s information

need

Searcher: information scientist or a search

professional trained in making logical queries

Classic IR Goal

Classic relevance

For each query Q and stored document D in a given

corpus assume there exists relevance Score(Q, D)

Score is average over users U and context C

Optimize Score(Q, D) as opposed to Score(Q, D, U, C)

That is, usually:

– Context ignored

– Individuals ignored

– Corpus predetermined

Bad assumptions in the web context

Web Information Retrieval

Basic assumptions of

Web Information Retrieval

Corpus: constantly changing; created by

amateurs and professionals

Goal: Retrieve summaries of relevant information

quickly with links to the original site

High precision! Recall not important

Searcher: amateurs; no professional training and

less or no concern about quality queries

The coarse-level dynamics

Figure: content creators, content aggregators, and content consumers, linked by flows labeled Feeds, Crawls, Subscription, Editorial, Advertisement, and Transaction.

Brief (non-technical) history

Early keyword-based engines

Altavista, Excite, Infoseek, Inktomi, ca. 1995-1997

Paid placement ranking: Goto.com (morphed into

Overture.com, later acquired by Yahoo!)

Your search ranking depended on how much you

paid

Auction for keywords: casino was expensive!

Brief (non-technical) history

1998+: Link-based ranking pioneered by Google

Blew away all early engines save Inktomi

Great user experience in search of a business

model

Meanwhile Goto/Overture’s annual revenues

were nearing $1 billion

Result: Google added paid-placement “ads” to

the side, independent of search results

Yahoo follows suit, acquiring Overture (for paid

placement) and Inktomi (for search)

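Query Results 2009 (screenshot; regions labeled: ads, algorithmic results)

Query Results 2010 (screenshot; regions labeled: ads, algorithmic results)

Query Results 2013 (screenshot; region labeled: algorithmic results)

Query Results 2014 (screenshot; regions labeled: algorithmic results)

Query Results 2016 (screenshot; region labeled: algorithmic results)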

Query String for Google 2013

http://www.google.com/search?q=lee+giles

“?” in query implies the start of a query component

query equals lee AND giles

Basic query reduced from the full one.

Query above is

lee giles

What does Bing do?

duckduckgo?

• For more, see wikipedia query string
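The query-string slide can be made concrete with a small sketch. It uses only Python's standard `urllib.parse`; the helper name `query_terms` and the default parameter name `q` are illustrative assumptions, and other engines may use different parameter names.

```python
from urllib.parse import urlsplit, parse_qs

def query_terms(url: str, param: str = "q") -> list[str]:
    """Return the whitespace-separated terms carried in one query parameter."""
    query = urlsplit(url).query            # everything after the "?"
    values = parse_qs(query).get(param, [])
    # "+" in a query string decodes to a space, so "lee+giles" becomes "lee giles"
    return values[0].split() if values else []

print(query_terms("http://www.google.com/search?q=lee+giles"))  # ['lee', 'giles']
```

The same function can be pointed at a Bing or DuckDuckGo result URL to compare how each engine encodes the query.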

Ads vs. search results

Google has maintained that ads

(based on vendors bidding for

keywords) do not affect vendors’

rankings in search results

Figure: Google results page for the query “miele”. Sponsored links (CG Appliance Express, www.cgappliance.com; Miele Vacuum Cleaners, www.vacuums.com; Miele Vacuum Cleaners, www.best-vacuum.com) appear beside the algorithmic results (www.miele.com, www.miele.co.uk, www.miele.de, www.miele.at). Results 1 - 10 of about 7,310,000 for miele (0.12 seconds).

Search 2016

Ads vs. search results

Other vendors (Yahoo, Bing) have made

similar statements from time to time

Any of them can change anytime

Focus primarily on search results

independent of paid placement ads

Although the latter is a fascinating

technical subject in itself

Pay Per Click (PPC) Search

Engine Ranking

PPC ads appear as “sponsored listings”

Companies bid on price they are willing to pay “per click”

Typically have very good tracking tools and statistics

Ability to control ad text

Can set budgets and spending limits

Google AdWords and Overture are the two leaders

Google: $67B income from advertising

Most expensive ad words

Research field:

– Computational advertising

– Algorithmic advertising

Web search basics

Figure: components of web search drawn around the “miele” results page (sponsored links beside algorithmic results): User, Search, Web spider, The Web, Indexer, Indexes, Ad indexes.

User Needs

Need [Brod02, RL04]

Informational – want to learn about something (~40% / 65%)

– Low hemoglobin

Navigational – want to go to that page (~25% / 15%)

– United Airlines

Transactional – want to do something (web-mediated) (~35% / 20%)

– Access a service: Seattle weather

– Downloads: Mars surface images

– Shop: Canon S410

Gray areas

– Find a good hub: Car rental Brasil

– Exploratory search “see what’s there”

Web search users

Make ill defined queries

– Short

• AV 2001: 2.54 terms avg, 80% < 3 words

• AV 1998: 2.35 terms avg, 88% < 3 words [Silv98]

– Imprecise terms

– Sub-optimal syntax (most queries without operator)

– Low effort

Wide variance in

– Needs

– Expectations

– Knowledge

– Bandwidth

Specific behavior

– 85% look over one result screen only (mostly above the fold)

– 78% of queries are not modified (one query/session)

– Follow links – “the scent of information” ...

Query Distribution

Power law: few popular broad queries,

many rare specific queries

How far do people look for results?

(Source: iprospect.com WhitePaper_2006_SearchEngineUserBehavior.pdf)

Users’ empirical evaluation of results

Quality of pages varies widely

– Relevance is not enough

– Other desirable qualities (non IR!!)

• Content: Trustworthy, new info, non-duplicates, well maintained

• Web readability: display correctly & fast

• No annoyances: pop-ups, etc

Precision vs. recall

– On the web, recall seldom matters

– What matters

• Precision at 1? Precision above the fold?

• Comprehensiveness – must be able to deal with obscure queries

– Recall matters when the number of matches is very small

User perceptions may be unscientific, but are significant over a large aggregate
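“Precision at 1” and “precision above the fold” are both instances of precision at k. A minimal sketch follows, assuming relevance judgments are available as a set of document ids. The function name, the ids, and the choice to divide by k even when fewer than k results come back are illustrative assumptions.

```python
def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k ranked results that are judged relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / k

ranked = ["d3", "d7", "d1", "d9", "d4"]   # hypothetical engine output, best first
relevant = {"d3", "d1", "d8"}             # hypothetical relevance judgments

print(precision_at_k(ranked, relevant, 1))  # 1.0
print(precision_at_k(ranked, relevant, 3))  # two of the top three are relevant
```

Note that "d8" is relevant but never retrieved: recall suffers, yet precision at small k is unaffected, which is the slide's point.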

Users’ empirical evaluation of engines

Relevance and validity of results

UI – Simple, no clutter, error tolerant

Trust – Results are objective

Coverage of topics for polysemic queries

Eg. mole, bank

Pre/Post process tools provided

Mitigate user errors (auto spell check, syntax errors,…)

Explicit: Search within results, more like this, refine ...

Anticipative: related searches

Deal with idiosyncrasies

Web specific vocabulary

Impact on stemming, spell-check, etc

Web addresses typed in the search box

…

The Web corpus

The Web

Large dynamic directed graph

No design/co-ordination

Distributed content creation, linking,

democratization of publishing

Content includes truth, lies, obsolete

information, contradictions …

Unstructured (text, html, …), semistructured (XML, annotated photos),

structured (Databases)…

Scale much larger than previous text

corpora … but corporate records are

catching up.

Growth – slowed down from initial

“volume doubling every few months”

but still expanding

Content can be dynamically

generated

Web Today

The Web consists of hundreds of billions of

pages (Google query ‘the’)

Potentially infinite if dynamic pages are

considered

It is considered one of the biggest information

revolutions in recent human history

One of the largest graphs around

Full of information

Trends

Web page

The simple Web graph

A graph G = (V, E) is defined by

– a set V of vertices (nodes)

– a set E of edges (links) = pairs of nodes

The Web page graph (directed)

– V is the set of static public pages

– E is the set of static hyperlinks

Many more graphs can be defined

The host graph

The co-citation graph

Temporal graph

etc
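As a small illustration of how one graph is derived from another, the sketch below collapses a page graph (edges between URLs) into a host graph (edges between host names). The URLs are made up, and dropping within-host links is an assumption of this sketch, not something the slides specify.

```python
from urllib.parse import urlsplit

def host_graph(page_edges):
    """Collapse page-level edges (src_url, dst_url) into host-level edges."""
    edges = set()
    for src, dst in page_edges:
        h_src, h_dst = urlsplit(src).hostname, urlsplit(dst).hostname
        # Assumption: links that stay on one host are not host-graph edges.
        if h_src and h_dst and h_src != h_dst:
            edges.add((h_src, h_dst))
    return edges

page_edges = [
    ("http://a.example.com/x", "http://a.example.com/y"),   # same host, dropped
    ("http://a.example.com/x", "http://b.example.org/"),
    ("http://a.example.com/y", "http://b.example.org/z"),   # same host pair again
]
print(host_graph(page_edges))  # {('a.example.com', 'b.example.org')}
```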

Which pages do we care for if we

want to measure the web graph?

Avoid “dynamic” pages?

catalogs

pages generated by queries

pages generated by cgi-scripts (the nostradamus

effect)

Only interested in “static” web pages

The Web: Dynamic content

A page without a static html version

E.g., current status of flight AA129

Current availability of rooms at a hotel

Usually, assembled at the time of a request from a browser

Sometimes, URL has a ‘?’ character in it

‘?’ precedes the actual query

AA129

Browser

Application server

Back-end

databases

The Static Public Web

Example - http://clgiles.ist.psu.edu

Static

– not the result of a cgi-bin script

– no “?” in the URL

– doesn’t change very often

– etc.

Public

– no password required

– no robots.txt exclusion

– no “noindex” meta tag

– etc.

These rules can still be fooled

– “Dynamic pages” appear static

• browseable catalogs (Hierarchy built from DB)

– Spider traps -- infinite url descent

• www.x.com/home/home/home/…./home/home.html

– Spammer games
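The URL-based rules above can be sketched as two heuristics. The function names and the thresholds (`max_repeats`, `max_depth`) are illustrative assumptions, and, as the slide says, such rules can be fooled.

```python
from collections import Counter
from urllib.parse import urlsplit

def looks_dynamic(url):
    """Heuristic: a "?" query component or a cgi-bin path suggests a dynamic page."""
    parts = urlsplit(url)
    return bool(parts.query) or "cgi-bin" in parts.path

def looks_like_spider_trap(url, max_repeats=3, max_depth=20):
    """Heuristic: very deep paths or heavily repeated path segments suggest a trap."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    if len(segments) > max_depth:
        return True
    return any(count > max_repeats for count in Counter(segments).values())

print(looks_dynamic("http://example.com/search?q=x"))                                 # True
print(looks_like_spider_trap("http://www.x.com/home/home/home/home/home/home.html"))  # True
```

A browseable catalog built from a database would pass both checks, which is exactly the “dynamic pages appear static” caveat.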

Why do we care about the Web graph?

Is it the largest human artifact ever created?

Exploit the Web structure for

crawlers

search and link analysis ranking

spam detection

community discovery

classification/organization

business, politics, society applications

Predict the Web future

mathematical models

algorithm analysis

sociological understanding

New business opportunities

New politics

The first question: what is the size of the Web?

Surprisingly hard to answer

Naïve solution: keep crawling until the whole graph has been

explored

Extremely simple but wrong solution: crawling is complicated

because the web is complicated

spamming

duplicates

mirrors

Simple example of a complication: Soft 404

When a page does not exist, the server is supposed to return an

error code = “404”

Many servers do not return an error code, but keep the visitor on

site, or simply send him to the home page

A sampling approach

Sample pages uniformly at random

Compute the percentage of the pages that

belong to a search engine repository (search

engine coverage)

Estimate the size of the Web

Problems:

how do you sample a page uniformly at random?

how do you test if a page is indexed by a search

engine?
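The arithmetic behind the sampling approach can be sketched as follows. It assumes the sample really is uniform, that index membership can be tested reliably (the two problems listed above), and that the engine's index size is known. The function name and the numbers are hypothetical.

```python
def estimate_web_size(sample_in_index, engine_index_size):
    """Estimate web size as index size divided by measured coverage.

    sample_in_index: one boolean per sampled page, True if the engine indexes it.
    """
    if not sample_in_index:
        raise ValueError("need at least one sampled page")
    coverage = sum(sample_in_index) / len(sample_in_index)
    if coverage == 0:
        raise ValueError("no sampled page is indexed; coverage cannot be inverted")
    return engine_index_size / coverage

# Hypothetical numbers: 250 of 1000 sampled pages are indexed, index holds 2 billion pages.
sample = [True] * 250 + [False] * 750
print(estimate_web_size(sample, 2_000_000_000))  # 8000000000.0
```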

Sampling pages [LG99, HHMN00]

Create IP addresses uniformly at random

problems with virtual hosting, spamming

Starting from a subset of pages perform a

random walk on the graph. After “enough” steps

you should end up in a random page.

near uniform sampling

Testing search engine containment [BB98]

Measuring the Web

It is clear that the Web that we see is what

the crawler discovers

We need large crawls in order to make

meaningful measurements

The measurements are still biased by

the crawling policy

size limitations of the crawl

Perturbations of the "natural" process of birth and

death of nodes and links

Measures on the Web graph [BKMRRSTW00]

Degree distributions

The global picture

what does the Web look like from afar?

Reachability

Connected components

Community structure

The finer picture

In-degree distribution

Power-law distribution with exponent 2.1

Out-degree distribution

Power-law distribution with exponent 2.7

The good news

The fact that the exponent is greater than 2

implies that the expected value of the degree is a

constant (not growing with n)

Therefore, the expected number of edges is

linear in the number of nodes n

This is good news, since we cannot handle

anything much more than linear
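The step from “exponent greater than 2” to “constant expected degree” can be written out. This sketch assumes an idealized pure power law over all degrees d ≥ 1 with exponent α; measured distributions only approximate this, and ζ denotes the Riemann zeta function.

```latex
\[
P(d) = C\,d^{-\alpha}, \quad d = 1, 2, \dots, \qquad C = \frac{1}{\zeta(\alpha)}
\]
\[
\mathbb{E}[d] = \sum_{d \ge 1} d \cdot C\,d^{-\alpha}
             = C \sum_{d \ge 1} d^{-(\alpha - 1)}
             = \frac{\zeta(\alpha - 1)}{\zeta(\alpha)} < \infty
\iff \alpha > 2
\]
\[
\mathbb{E}\big[|E|\big] = n\,\mathbb{E}[d] = O(n)
\]
```

Both reported exponents (2.1 and 2.7) satisfy α > 2, so under this model the expected number of edges grows linearly in n.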

Connected components – definitions

Weakly connected components (WCC)

Set of nodes such that from any node one can reach any other node via an

undirected path

Strongly connected components (SCC)

Set of nodes such that from any node one can reach any other node via a

directed path.

WCC

SCC
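The two definitions can be compared on a toy graph. The sketch below uses Kosaraju's two-pass algorithm for SCCs, which is one standard choice and not something the slides name. The graph and function names are illustrative.

```python
from collections import defaultdict

def _collect(start, adj, seen):
    """Return the set of nodes reachable from start via adj, marking them seen."""
    comp, stack = set(), [start]
    seen.add(start)
    while stack:
        u = stack.pop()
        comp.add(u)
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return comp

def weakly_connected_components(nodes, edges):
    adj = defaultdict(set)
    for u, v in edges:          # ignore edge direction
        adj[u].add(v)
        adj[v].add(u)
    seen = set()
    return [_collect(n, adj, seen) for n in nodes if n not in seen]

def strongly_connected_components(nodes, edges):
    fwd, rev = defaultdict(list), defaultdict(list)
    for u, v in edges:
        fwd[u].append(v)
        rev[v].append(u)
    # Pass 1: record nodes in order of DFS finish time on the forward graph.
    seen, order = set(), []
    for start in nodes:
        if start in seen:
            continue
        seen.add(start)
        stack = [(start, iter(fwd[start]))]
        while stack:
            u, it = stack[-1]
            for v in it:
                if v not in seen:
                    seen.add(v)
                    stack.append((v, iter(fwd[v])))
                    break
            else:               # all successors explored: u is finished
                order.append(u)
                stack.pop()
    # Pass 2: sweep the reversed graph in decreasing finish time.
    seen = set()
    return [_collect(n, rev, seen) for n in reversed(order) if n not in seen]

nodes = ["a", "b", "c", "d", "e"]
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("e", "d")]
print(weakly_connected_components(nodes, edges))    # one component with all five nodes
print(strongly_connected_components(nodes, edges))  # {a, b, c}, {d}, {e} in some order
```

Here the cycle a→b→c→a plays the role of the bow-tie core, d sits downstream of it like an OUT page, and e links into d without being reachable from the cycle.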

The bow-tie structure of the Web

SCC and WCC distribution

The SCC and WCC sizes follow a power law

distribution

the second largest SCC is significantly smaller

The inner structure of the bow-tie

[LMST05]

What do the individual components of the bow tie

look like?

They obey the same power laws in the degree

distributions

The inner structure of the bow-tie

Is it the case that the bow-tie repeats itself in

each of the components (self-similarity)?

It would look nice, but this does not seem to be the

case

no large WCC, many small ones

The daisy structure?

Large connected core, and highly fragmented

IN and OUT components

Unfortunately, we do not have a large crawl

to verify this hypothesis

A different kind of self-similarity [DKCRST01]

Consider Thematically Unified Clusters (TUC):

pages grouped by

keyword searches

web location (intranets)

geography

hostgraph

random collections

All such TUCs exhibit a bow-tie structure!

Self-similarity

The Web consists of a collection of self-similar

structures that form a backbone of the SCC

Dynamic content

Most dynamic content is ignored by web spiders

– Many reasons including malicious spider traps

Some content (e.g., news stories from subscriptions) is sometimes delivered as dynamic content

– Application-specific spidering

Spiders commonly view web pages just as Lynx (a text

browser) would

Note: even “static” pages are typically assembled on

the fly (e.g., headers are common)

The web: size

What is being measured?

– Number of hosts

– Number of (static) html pages

– Volume of data

Number of hosts – netcraft survey

– http://news.netcraft.com/archives/web_server_survey.html

– Monthly report on how many web hosts & servers are out there

Number of pages – numerous estimates

Netcraft Web Server Survey

http://news.netcraft.com/archives/web_server_survey.html

The web: evolution

All of these numbers keep changing

Relatively few scientific studies of the

evolution of the web [Fetterly & al, 2003]

http://research.microsoft.com/research/sv/svpubs/p97-fetterly/p97-fetterly.pdf

Sometimes possible to extrapolate from

small samples (fractal models) [Dill & al,

2001]

http://www.vldb.org/conf/2001/P069.pdf

Rate of change

[Cho00] 720K pages from 270 popular sites sampled

daily from Feb 17 – Jun 14, 1999

[Fett02] Massive study 151M pages checked over

few months

Any changes: 40% weekly, 23% daily

Significant changes -- 7% weekly

Small changes – 25% weekly

[Ntul04] 154 large sites re-crawled from scratch

weekly

8% new pages/week

8% die

5% new content

25% new links/week

Static pages: rate of change

Fetterly et al. study (2002): several views of data, 150 million

pages over 11 weekly crawls

Bucketed into 85 groups by extent of change

Other characteristics

Significant duplication

– Syntactic – 30%-40% (near) duplicates [Brod97, Shiv99b, etc.]

– Semantic – ???

High linkage

– More than 8 links/page in the average

Complex graph topology

– Not a small world; bow-tie structure [Brod00]

Spam

– Billions of pages

Web evolution

Spam vs Search Engine Optimization (SEO)

SERPs

• Search engine results

page (SERP) are

very influential

• How can they be

manipulated

• How can you come

up first?

Google SERP for

query “lee giles”

The trouble with paid placement…

It costs money. What’s the alternative?

Search Engine Optimization (SEO):

“Tuning” your web page to rank highly in the

search results for select keywords

Alternative to paying for placement

Thus, intrinsically a marketing function

Performed by companies, webmasters and

consultants (“Search engine optimizers”) for

their clients

Some perfectly legitimate, some very shady

Some frowned upon by Search Engines

Simplest forms

First generation engines relied heavily on tf/idf

The top-ranked pages for the query maui resort were the

ones containing the most maui’s and resort’s

SEOs responded with dense repetitions of chosen

terms

e.g., maui resort maui resort maui resort

Often, the repetitions would be in the same color as the

background of the web page

Repeated terms got indexed by crawlers

But not visible to humans on browsers

Pure word density cannot

be trusted as an IR signal
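A toy scorer shows why pure word density fails. The sketch below uses raw term counts only (no idf, no length normalization), which is a simplification of what first-generation engines did. The page texts are invented.

```python
from collections import Counter

def tf_score(query, text):
    """Sum of raw term frequencies of the query words in the text."""
    counts = Counter(text.lower().split())
    return sum(counts[term] for term in query.lower().split())

honest = "Our Maui resort offers ocean views and a quiet beach"
stuffed = "maui resort maui resort maui resort maui resort cheap"

print(tf_score("maui resort", honest))   # 2
print(tf_score("maui resort", stuffed))  # 8
```

The stuffed page wins by a wide margin while offering the reader nothing, and hiding the repeated text in the background color leaves the score unchanged because the crawler still indexes it.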

Search Engine Spam: Objective

Success of commercial Web sites depends on the number of

visitors that find the site while searching for a particular product.

85% of searchers look at only the first page of results

A new business sector – search engine optimization

M. Henzinger, R. Motwani, and C. Silverstein. Challenges in web

search engines. International Joint Conference on Artificial

Intelligence, 2003.

Drost, I. and Scheffer, T., Thwarting the Nigritude Ultramarine:

Learning to Identify Link Spam. 16th European Conference on

Machine Learning, Porto, 2005

What’s SEO?

SEO = Search Engine Optimization

Refers to the process of “optimizing” both the on-page and off-page ranking factors in order to achieve high search engine

rankings for targeted search terms.

Refers to the “industry” that revolves around obtaining high

rankings in the search engines for desirable keyword search terms

as a means of increasing the relevant traffic to a given website.

Refers to an individual or company that optimizes websites for its

clientele.

Has a number of related meanings, and usually refers to an

individual/firm that focuses on optimizing for “organic” search

engine rankings

What’s SEO based on

Features we know used in web page

ranking

Page content

Page metadata

Anchor text

Links

User behavior

Others?

Search Engine Spam: Spamdexing

spamdexing (also known as search spam, search

engine spam, web spam or search engine

poisoning) is the deliberate manipulation of search

engine indexes

aka: black hat SEO

Porn led the way – ‘96

Search Engine Spamdexing Methods

Content based

Link spam

Cloaking

Mirror sites

URL redirection

Content spamming

Keyword stuffing

•calculated placement of keywords within a page to raise the keyword count,

variety, and density of the page. Truncation so that massive dictionary lists

cannot be indexed on a single webpage.

•Hidden or invisible text

Meta-tag stuffing

•Out of date

Doorway pages

•"Gateway" or doorway pages created with very little content but are instead

stuffed with very similar keywords and phrases.

•BMW caught

Scraper sites

•amalgamation of content taken from other sites; still works

Article spinning

•rewriting existing articles, as opposed to merely scraping content from other

sites, undertaken by hired writers or automated using a thesaurus database or a

neural network.

Variants of keyword stuffing

Misleading meta-tags, excessive repetition

Hidden text with colors, style sheet tricks,

etc.

Meta-Tags =

“… London hotels, hotel, holiday inn, hilton, discount,

booking, reservation, sex, mp3, britney spears, viagra,

…”

Link Spamming: Techniques

Link farms: Densely connected arrays of pages. Farm pages

propagate their PageRank to the target, e.g., by a funnel-shaped architecture that points directly or indirectly towards

the target page. To camouflage link farms, tools fill in

inconspicuous content, e.g., by copying news bulletins.

Link exchange services: Listings of (often unrelated)

hyperlinks. To be listed, businesses have to provide a back

link that enhances the PageRank of the exchange service.

Guestbooks, discussion boards, and weblogs: Automatic

tools post large numbers of messages to many sites; each

message contains a hyperlink to the target website.

Cloaking

Serve fake content to search engine spider

DNS cloaking: Switch IP address. Impersonate

Figure: cloaking flowchart. Is this a search engine spider? Y: serve SPAM. N: serve the real doc.

Google Bombing != Google Hacking

http://en.wikipedia.org/wiki/Google_bomb

A Google bomb or Google wash is an attempt to

influence the ranking of a given site in results

returned by the Google search engine. Due to the

way that Google's PageRank algorithm works, a

website will be ranked higher if the sites that link to

that page all use consistent anchor text.

A google bomb is when links are put in several sites

on the internet by a number of people so it leads a

particular keyword search combination to a specific

site and the site is deluged/swamped (it may crash

the site) with hits.

Google Bomb - old

Query: french military victories

Others?

Link Spamming: Defenses

Manual identification of spam pages and farms to create a

blacklist.

Automatic classification of pages using machine learning

techniques.

BadRank algorithm. The "bad rank" is initialized to a high

value for blacklisted pages. It propagates bad rank to all

referring pages (with a damping factor) thus penalizing

pages that refer to spam.
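The propagation described for BadRank can be sketched as an iteration that runs “backwards” along links. The update rule below (each page inherits a damped share of the bad rank of the pages it links to, divided by the target's in-degree) is one commonly quoted formulation and is an assumption here, as are the seed value 1.0, the damping factor, and the tiny example graph.

```python
def bad_rank(out_links, blacklist, damping=0.85, iterations=50):
    """out_links: dict mapping page -> list of distinct pages it links to."""
    pages = set(out_links) | {q for targets in out_links.values() for q in targets}
    in_degree = {p: 0 for p in pages}
    for targets in out_links.values():
        for q in targets:
            in_degree[q] += 1
    # Blacklisted pages start with a high value, everything else with zero.
    seed = {p: (1.0 if p in blacklist else 0.0) for p in pages}
    score = dict(seed)
    for _ in range(iterations):
        score = {
            p: (1 - damping) * seed[p]
            + damping * sum(score[q] / in_degree[q] for q in out_links.get(p, ()))
            for p in pages
        }
    return score

links = {"spam": [], "referrer": ["spam"], "clean": ["other"], "other": []}
scores = bad_rank(links, blacklist={"spam"})
print(scores["referrer"] > scores["clean"])  # True: linking to spam is penalized
```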

Intelligent SEO

Figure out how search engines do their ranking

Inductive science

Make intelligent changes

Figure out what happens

Repeat

So What is the Search Engine

Ranking Algorithm?

Top Secret! Only select employees of the actual search

engines know for certain

Reverse engineering, research and experiments give

some idea of major factors and approximate weight

assignments

Constant changing, tweaking, updating is done to the

algorithm

Websites and documents being searched are also

constantly changing

Varies by Search Engine – some give more weight to on-page factors, some to link popularity

SEO Expert

Search engine optimization vs Spam

Motives

– Commercial, political, religious, lobbies

– Promotion funded by advertising budget

Operators

– Contractors (Search Engine Optimizers) for lobbies, companies

– Web masters

– Hosting services

Forums

– E.g., Web master world ( www.webmasterworld.com )

• Search engine specific tricks

• Discussions about academic papers

The spam industry

SEO contests

•Now part of some search engine classes!

The war against spam

Quality signals - Prefer authoritative pages based on:

– Votes from authors (linkage signals)

– Votes from users (usage signals)

Policing of URL submissions

– Anti robot test

Limits on meta-keywords

Robust link analysis

– Ignore statistically implausible linkage (or text)

– Use link analysis to detect spammers (guilt by association)

Spam recognition by machine learning

– Training set based on known spam

Family friendly filters

– Linguistic analysis, general classification techniques, etc.

– For images: flesh tone detectors, source text analysis, etc.

Editorial intervention

– Blacklists

– Top queries audited

– Complaints addressed

– Suspect pattern detection

The war against spam – what Google does

Google changes their algorithms regularly

Panda and Penguin updates

Suing Google doesn’t work

Google Wins in SearchKing Lawsuit

Defines what counts as a high quality site

Uses machine learning and AI methods

SEO doesn’t seem to use this

23 features

Google penalty

refers to a negative impact on a website's search ranking

based on updates to Google's search algorithms.

penalty can be an unfortunate by-product

or an intentional penalization of various black-hat SEO

techniques

Future of spamdexing

Who’s the smartest tech wise: Search engines

vs SEOs

Web search engines have policies on SEO

practices they tolerate/block

http://www.bing.com/toolbox/webmaster

http://www.google.com/intl/en/webmasters/

Adversarial IR: the unending (technical) battle

between SEO’s and web search engines

Constant evolution

Can it ever be solved?

Research http://airweb.cse.lehigh.edu/

Many SEO companies will suffer

Reported “Organic” (white hat)

Optimization Techniques

Register with search engines

Research keywords related to your business

Identify competitors, utilize benchmarking techniques and identify level of

competition

Utilize descriptive title tags for each page

Ensure that your text is HTML text and not image text

Use text links when possible

Use appropriate keywords in your content and internal hyperlinks (don’t

overdo!)

Obtain inbound links from related websites

Use Sitemaps

Monitor your search engine rankings and more importantly your website

traffic statistics and sales/leads produced

Educate yourself about search engine marketing or consult a search

engine optimization firm or SEO expert

Use the Google Guide to High Quality Websites

Duplicate detection

Get rid of duplicates; save space and time

Product or idea evolution (near duplicates)

Check for stolen information, plagiarism, etc.

Duplicate documents

The web is full of duplicated content

– Estimates at 40%

Strict duplicate detection = exact match

– Not as common

But many, many cases of near duplicates

– E.g., Last modified date the only difference between two copies of a page

Duplicate/Near-Duplicate Detection

Duplication: Exact match can be detected with

fingerprints

Hash value
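Exact-match detection with fingerprints can be sketched by hashing each document and grouping on the hash. SHA-1 is an arbitrary choice for this sketch (any well-distributed hash would do), and the document ids and texts are made up.

```python
import hashlib
from collections import defaultdict

def fingerprint(text):
    """Hex digest used as the document's fingerprint (SHA-1 chosen for illustration)."""
    return hashlib.sha1(text.encode("utf-8")).hexdigest()

def exact_duplicate_groups(docs):
    """docs: dict doc_id -> text. Returns groups of ids whose texts are identical."""
    groups = defaultdict(list)
    for doc_id, text in docs.items():
        groups[fingerprint(text)].append(doc_id)
    return [sorted(ids) for ids in groups.values() if len(ids) > 1]

docs = {"a": "hello web", "b": "hello web", "c": "hello web!"}
print(exact_duplicate_groups(docs))  # [['a', 'b']]
```

Document "c" differs by one character and gets an unrelated fingerprint, which is why near-duplicates need the approximate methods that follow.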

Near-Duplication: Approximate match

Overview

Compute syntactic similarity with an edit-distance

measure

Use similarity threshold to detect near-duplicates

E.g., Similarity > 80% => Documents are “near

duplicates”

Not transitive though sometimes used transitively

Computing Similarity

Features:

Segments of a document (natural or artificial breakpoints)

Shingles (Word N-Grams)

a rose is a rose is a rose →

a_rose_is_a

rose_is_a_rose

is_a_rose_is

a_rose_is_a

Similarity Measure between two docs (= sets of shingles)

Set intersection

Specifically (Size_of_Intersection / Size_of_Union)
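Shingling and the intersection-over-union measure can be sketched directly. Lowercasing, whitespace tokenization, and the second example sentence are assumptions of this sketch.

```python
def shingles(text, n=4):
    """Set of word n-grams, joined with underscores as on the slide."""
    words = text.lower().split()
    return {"_".join(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a, b):
    """Size of intersection divided by size of union of two shingle sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

s1 = shingles("a rose is a rose is a rose")
s2 = shingles("a rose is a flower is a rose")
print(sorted(s1))       # ['a_rose_is_a', 'is_a_rose_is', 'rose_is_a_rose']
print(jaccard(s1, s2))  # 1 shared shingle out of 7 distinct ones
```

Because a document is treated as a set, the repeated shingle a_rose_is_a counts once.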

Shingles + Set Intersection

Computing exact set intersection of shingles

between all pairs of documents is

expensive/intractable

Approximate using a cleverly chosen subset of

shingles from each (a sketch)

Estimate (size_of_intersection / size_of_union)

based on a short sketch

Figure: Doc A → Shingle set A → Sketch A and Doc B → Shingle set B → Sketch B; the two sketches are compared to estimate the Jaccard coefficient.

Sketch of a document

Create a “sketch vector” (of size ~200) for

each document

Documents that share ≥ t (say 80%)

corresponding vector elements are near

duplicates

For doc D, sketch_D[i] is as follows:

Let f map all shingles in the universe to 0..2^m (e.g., f = fingerprinting)

Let π_i be a random permutation on 0..2^m

Pick MIN {π_i(f(s))} over all shingles s in D
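The sketch construction can be approximated in a few lines. Two assumptions differ from the slide: fingerprints live in 0..p-1 for the Mersenne prime p = 2^61 - 1 rather than 0..2^m, and each “random permutation” is a random linear map x → (a·x + b) mod p, which is a true permutation of 0..p-1 but drawn from a restricted family. All names are illustrative, and shingle sets are assumed non-empty.

```python
import hashlib
import random

PRIME = (1 << 61) - 1   # Mersenne prime; fingerprints and permutations live in 0..PRIME-1

def fingerprint(shingle):
    """Map a shingle to an integer (SHA-1 truncated to 8 bytes, reduced mod PRIME)."""
    digest = hashlib.sha1(shingle.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % PRIME

def make_permutations(k=200, seed=0):
    """k random linear permutations, each stored as a pair (a, b) with a != 0."""
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(0, PRIME)) for _ in range(k)]

def sketch(shingle_set, perms):
    """sketch[i] = minimum of the i-th permutation over the set's fingerprints."""
    prints = [fingerprint(s) for s in shingle_set]
    return [min((a * x + b) % PRIME for x in prints) for a, b in perms]

def estimated_jaccard(sketch_a, sketch_b):
    """Fraction of positions where the two sketches agree."""
    return sum(x == y for x, y in zip(sketch_a, sketch_b)) / len(sketch_a)

perms = make_permutations()
set_a = {f"s{i}" for i in range(100)}        # hypothetical shingle sets
set_b = {f"s{i}" for i in range(50, 150)}    # true Jaccard = 50 / 150
estimate = estimated_jaccard(sketch(set_a, perms), sketch(set_b, perms))
print(estimate)
```

With the 80% threshold from the slide, these two documents would not be flagged, since the estimate should land near the true value of one third.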

Comparing Signatures

Signature Matrix S

Rows = Hash Functions

Columns = Documents

Entries = Signatures

Can compute – Pair-wise similarity of

any pair of signature columns

All signature pairs

Now we have an extremely efficient method for

estimating a Jaccard coefficient for a single pair

of documents.

But we still have to estimate N^2 coefficients

where N is the number of web pages.

Still slow

One solution: locality sensitive hashing (LSH)

Another solution: sorting (Henzinger 2006)
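One common way to apply LSH to sketches is banding: split each signature into bands and compare only documents that agree on all rows of at least one band. The slide names LSH without detail, so the banding scheme, the parameter values, and the toy signatures below are assumptions.

```python
from collections import defaultdict
from itertools import combinations

def lsh_candidate_pairs(signatures, bands, rows):
    """signatures: dict doc_id -> list of bands * rows integers.

    Returns the pairs of doc ids that share an identical band somewhere.
    """
    candidates = set()
    for b in range(bands):
        buckets = defaultdict(list)
        for doc_id, sig in signatures.items():
            key = tuple(sig[b * rows:(b + 1) * rows])
            buckets[key].append(doc_id)
        for ids in buckets.values():
            for pair in combinations(sorted(ids), 2):
                candidates.add(pair)
    return candidates

signatures = {"a": [1, 2, 3, 4], "b": [1, 2, 9, 9], "c": [7, 7, 7, 7]}
print(lsh_candidate_pairs(signatures, bands=2, rows=2))  # {('a', 'b')}
```

Only the candidate pairs are then checked against the similarity threshold, so most of the N^2 comparisons are never made. The price is that a similar pair can be missed if it shares no band.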

What we covered

Web vs classic IR

– What users do

Web advertising

Web as a graph

– Structure and size

SEO/Web spam

– White vs black hat

Dup detection

– Applications