Privacy and Trust - data dissemination

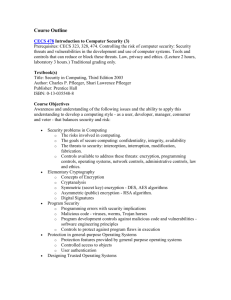

advertisement

Vulnerabilities and Threats

in Distributed Systems*

Prof. Bharat Bhargava

Dr. Leszek Lilien

Department of Computer Sciences and

the Center for Education and Research in Information Assurance and Security (CERIAS)

Purdue University

www.cs.purdue.edu/people/{bb, llilien}

Presented by

Prof. Sanjay Madria

Department of Computer Science

University of Missouri-Rolla

* Supported in part by NSF grants IIS-0209059 and IIS-0242840

Prof. Bhargava thanks the organizers of the 1st International

Conference on Distributed Computing & Internet Technology—ICDCIT

2004. In particular, he thanks:

Prof. R. K. Shyamsunder

Prof. Hrushikesha Mohanty

Prof. R.K. Ghosh

Prof. Vijay Kumar

Prof. Sanjay Madria

He thanks the attendees, and regrets that he could not be present.

He came to Bhubaneswar in 2001 and enjoyed it tremendously. He was

looking forward to coming again.

He will be willing to communicate about this research. Potential exists

for research collaboration. Please send mail to bb@cs.purdue.edu

He will very much welcome your visit to Purdue University.

ICDCIT 2004


From Vulnerabilities to Losses

Growing business losses due to vulnerabilities in distributed systems

Identity theft in 2003 – expected loss of $220 bln worldwide; 300% (!) annual growth rate [csoonline.com, 5/23/03]

Computer virus attacks in 2003 – estimated loss of $55 bln worldwide [news.zdnet.com, 1/16/04]

Vulnerabilities occur in:

Hardware / Networks / Operating Systems / DB systems / Applications

Loss chain

Dormant vulnerabilities enable threats against systems

Potential threats can materialize as (actual) attacks

Successful attacks result in security breaches

Security breaches cause losses


Vulnerabilities and Threats

Vulnerabilities and threats start the loss chain

Best to deal with them first

Deal with vulnerabilities

Gather in metabases and notification systems info on vulnerabilities and security incidents, then disseminate it

Example vulnerability and incident metabases

CVE (Mitre), ICAT (NIST), OSVDB (osvdb.com)

Example vulnerability notification systems

CERT (SEI-CMU), Cassandra (CERIAS-Purdue)

Deal with threats

Threat assessment procedures

Specialized risk analysis using e.g. vulnerability and incident info

Threat detection / threat avoidance / threat tolerance


Outline

1. Vulnerabilities

2. Threats

3. Mechanisms to Reduce Vulnerabilities

and Threats

3.1. Applying Reliability and Fault Tolerance

Principles to Security Research

3.2. Using Trust in Role-based Access

Control

3.3. Privacy-preserving Data Dissemination

3.4. Fraud Countermeasure Mechanisms


Vulnerabilities - Topics

Models for Vulnerabilities

Fraud Vulnerabilities

Vulnerability Research Issues


Models for Vulnerabilities (1)

A vulnerability in security domain – like a fault in reliability domain

A flaw or a weakness in system security procedures, design, implementation, or internal controls

Can be accidentally triggered or intentionally exploited, causing security breaches

Modeling vulnerabilities

Analyzing vulnerability features

Classifying vulnerabilities

Building vulnerability taxonomies

Providing formalized models

System design should not let an adversary know

vulnerabilities unknown to the system owner


Models for Vulnerabilities (2)

Diverse models of vulnerabilities in the literature

In various environments

Under varied assumptions

Examples follow

Analysis of four common computer vulnerabilities [17]

Identifies their characteristics, the policies violated by their exploitation, and the steps needed for their eradication in future software releases

Vulnerability lifecycle model applied to three case studies [4]

Shows how systems remain vulnerable long after security fixes

Vulnerability lifetime stages:

appears, discovered, disclosed, corrected, publicized, disappears


Models for Vulnerabilities (3)

Model-based analysis to identify configuration

vulnerabilities [23]

Formal specification of desired security properties

Abstract model of the system that captures its security-related behaviors

Verification techniques to check whether the abstract

model satisfies the security properties

Kinds of vulnerabilities [3]

Operational

E.g. an unexpected broken linkage in a distributed database

Information-based

E.g. unauthorized access (secrecy/privacy), unauthorized

modification (integrity), traffic analysis (inference problem), and

Byzantine input


Models for Vulnerabilities (4)

Not all vulnerabilities can be removed, some shouldn’t

Because:

Vulnerabilities create only a potential for attacks

Some vulnerabilities cause no harm over entire system’s life cycle

Some known vulnerabilities must be tolerated

Due to economic or technological limitations

Removal of some vulnerabilities may reduce usability

E.g., removing vulnerabilities by adding passwords for each resource request lowers usability

Some vulnerabilities are a side effect of a legitimate system feature

E.g., the UNIX setuid mechanism creates vulnerabilities [14]

Need threat assessment to decide which vulnerabilities to

remove first


Fraud Vulnerabilities (1)

Fraud:

a deception deliberately practiced in order to secure unfair

or unlawful gain [2]

Examples:

Using somebody else’s calling card number

Unauthorized selling of customer lists to telemarketers

(example of an overlap of fraud with privacy breaches)

Fraud can make systems more vulnerable to

subsequent fraud

Need for protection mechanisms to avoid future damage


Fraud Vulnerabilities (2)

Fraudsters: [13]

Impersonators

illegitimate users who steal resources from victims

(for instance by taking over their accounts)

Swindlers

legitimate users who intentionally benefit from the system or

other users by deception

(for instance, by obtaining legitimate telecommunications

accounts and using them without paying bills)

Fraud involves abuse of trust [12, 29]

Fraudster strives to present himself as a trustworthy

individual and friend

The more trust one places in others the more vulnerable

one becomes


Vulnerability Research Issues (1)

Analyze severity of a vulnerability and its potential

impact on an application

Qualitative impact analysis

Expressed as a low/medium/high degree of performance/availability degradation

Quantitative impact

E.g., economic loss, measurable cascade effects, time to recover

Provide procedures and methods for efficient

extraction of characteristics and properties of

known vulnerabilities

Analogous to understanding how faults occur

Tools searching for known vulnerabilities in metabases cannot anticipate attacker behavior

Characteristics of high-risk vulnerabilities can be learnt

from the behavior of attackers, using honeypots, etc.


Vulnerability Research Issues (2)

Construct comprehensive taxonomies of

vulnerabilities for different application areas

Medical systems may have critical privacy vulnerabilities

Vulnerabilities in defense systems compromise homeland

security

Propose good taxonomies to facilitate both

prevention and elimination of vulnerabilities

Enhance metabases of vulnerabilities/incidents

Reveals characteristics for preventing not only identical

but also similar vulnerabilities

Contributes to identification of related vulnerabilities,

including dangerous synergistic ones

Good model for a set of synergistic vulnerabilities can lead to

uncovering gang attack threats or incidents


Vulnerability Research Issues (3)

Provide models for vulnerabilities and their contexts

The challenge: how vulnerability in one context

propagates to another

If Dr. Smith is a high-risk driver, is he a trustworthy doctor?

Different kinds of vulnerabilities emphasized in different

contexts

Devise quantitative lifecycle vulnerability models for

a given type of application or system

Exploit unique characteristics of vulnerabilities &

application/system

In each lifecycle phase:

- determine most dangerous and common types of vulnerabilities

- use knowledge of such types of vulnerabilities to prevent them

Best defensive procedures adaptively selected from a

predefined set


Vulnerability Research Issues (4)

The lifecycle models help solve a few problems

Avoiding system vulnerabilities most efficiently

By discovering & eliminating them at design and implementation stages

Evaluations/measurements of vulnerabilities at each lifecycle stage

In system components / subsystems / the system as a whole

Assist in most efficient discovery of vulnerabilities before they are exploited by an attacker or a failure

Assist in most efficient elimination / masking of vulnerabilities (e.g., based on principles analogous to fault tolerance)

OR:

Keep an attacker unaware or uncertain of important system parameters (e.g., by using non-deterministic or deceptive system behavior, increased component diversity, or multiple lines of defense)


Vulnerability Research Issues (5)

Provide methods of assessing impact of

vulnerabilities on security in applications & systems

Create formal descriptions of the impact of vulnerabilities

Develop quantitative vulnerability impact evaluation methods

Use resulting ranking for threat/risk analysis

Identify the fundamental design principles and guidelines for dealing

with system vulnerabilities at each lifecycle stage

Propose best practices for reducing vulnerabilities at all lifecycle

stages (based on the above principles and guidelines)

Develop interactive or fully automatic tools and infrastructures

encouraging or enforcing use of these best practices

Other issues:

Investigate vulnerabilities in security mechanisms themselves

Investigate vulnerabilities due to non-malicious but threat-enabling

uses of information [21]


Threats - Topics

Models of Threats

Dealing with Threats

Threat Avoidance

Threat Tolerance

Fraud Threat Detection for Threat

Tolerance

Fraud Threats

Threat Research Issues


Models of Threats

Threats in security domain – like errors in reliability domain

Entities that can intentionally exploit or inadvertently trigger specific

system vulnerabilities to cause security breaches [16, 27]

Attacks or accidents materialize threats (changing them from

potential to actual)

Attack - an intentional exploitation of vulnerabilities

Accident - an inadvertent triggering of vulnerabilities

Threat classifications: [26]

Based on actions, we have:

threats of illegal access, threats of destruction, threats of modification,

and threats of emulation

Based on consequences, we have:

threats of disclosure, threats of (illegal) execution, threats of

misrepresentation, and threats of repudiation


Dealing with Threats

Dealing with threats

Avoid (prevent) threats in systems

Detect threats

Eliminate threats

Tolerate threats

Deal with threats based on degree of risk

acceptable to application

Avoid/eliminate threats to human life

Tolerate threats to noncritical or redundant components


Dealing with Threats –

Threat Avoidance (1)

Design of threat avoidance techniques - analogous

to fault avoidance (in reliability)

Threat avoidance methods are frozen after system

deployment

Effective only against less sophisticated attacks

Sophisticated attacks require adaptive schemes for

threat tolerance [20]

Attackers have motivation, resources, and the whole

system lifetime to discover its vulnerabilities

Can discover holes in threat avoidance methods


Dealing with Threats –

Threat Avoidance (2)

Understanding threat sources

Understand threats by humans, their motivation and

potential attack modes [27]

Understand threats due to system faults and failures

Example design guidelines for preventing threats:

Model for secure protocols [15]

Formal models for analysis of authentication protocols [25, 10]

Models for statistical databases to prevent data disclosures [1]


Dealing with Threats –

Threat Tolerance

Useful features of fault-tolerant approach

Not concerned with each individual failure

Don’t spend all resources on dealing with individual failures

Can ignore transient and non-catastrophic errors and failures

Need analogous intrusion-tolerant approach

Deal with lesser and common security breaches

E.g.: intrusion tolerance for database systems [3]

Phase 1: attack detection

Optional (e.g., majority voting schemes don’t need detection)

Phases 2-5: damage confinement, damage assessment, reconfiguration, continuation of service

Can be implicit (e.g., voting schemes follow the same procedure whether attacked or not)

Phase 6: report attack

To repair and fault treatment (to prevent a recurrence of similar attacks)


Dealing with Threats – Fraud Threat

Detection for Threat Tolerance

Fraud threat identification is needed

Fraud detection systems

Widely used in telecommunications, online transactions,

insurance

Effective systems use both fraud rules and pattern

analysis of user behavior

Challenge: a very high false alarm rate

Due to the skewed distribution of fraud occurrences
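A small worked example may help show why a skewed distribution drives up the false alarm rate. The numbers are hypothetical and chosen only for illustration: 1 fraudulent case per 1,000, a detector that flags 95% of fraud and 2% of legitimate activity.

```python
def alarm_precision(fraud_rate: float,
                    detection_rate: float,
                    false_positive_rate: float) -> float:
    """Fraction of raised alarms that correspond to real fraud (Bayes' rule)."""
    true_alarms = fraud_rate * detection_rate
    false_alarms = (1.0 - fraud_rate) * false_positive_rate
    return true_alarms / (true_alarms + false_alarms)


# Hypothetical rates, not taken from any measured system.
precision = alarm_precision(fraud_rate=0.001,
                            detection_rate=0.95,
                            false_positive_rate=0.02)
print(f"share of alarms that are real fraud: {precision:.1%}")
```

Under these assumed rates, fewer than one alarm in twenty points at real fraud, even though the detector itself looks accurate.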


Fraud Threats

Analyze salient features of fraud threats

Some salient features of fraud threats

Fraud is often a malicious opportunistic reaction

Fraud escalation is a natural phenomenon

Gang fraud can be especially damaging [9]

Gang fraudsters can cooperate in misdirecting suspicion on

others

Individuals/gangs planning fraud thrive in fuzzy

environments

Use fuzzy assignments of responsibilities to participating entities

Powerful fraudsters create environments that facilitate fraud

E.g.: CEOs involved in insider trading


Threat Research Issues (1)

Analysis of known threats in context

Identify (in metabases) known threats relevant for the context

Find salient features of these threats and associations between them

Build a threat taxonomy for the considered context

Propose qualitative and quantitative models of threats in context

Including lifecycle threat models

Threats can also be associated via their links to related vulnerabilities

Infer threat features from features of vulnerabilities related to them

Define measures to determine threat levels

Devise techniques for avoiding/tolerating threats

via unpredictability or non-determinism

Detecting known threats

Discovering unknown threats


Threat Research Issues (2)

Develop quantitative threat models using analogies

to reliability models

E.g., rate threats or attacks using time and effort random variables

Describe the distribution of their random behavior

Mean Effort To security Failure (METF)

Analogous to Mean Time To Failure (MTTF) reliability measure

Mean Time To Patch and Mean Effort To Patch (new security measures)

Analogous to Mean Time To Repair (MTTR) reliability measure and METF security measure, respectively
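The MTTF/METF analogy can be written out as two expectations. The symbols are assumptions introduced here for illustration: T is the time to an accidental failure with density f_T, and W is the effort an attacker expends before a security failure, with density f_W.

```latex
\mathrm{MTTF} = E[T] = \int_{0}^{\infty} t \, f_T(t) \, dt
\qquad
\mathrm{METF} = E[W] = \int_{0}^{\infty} w \, f_W(w) \, dw
```

The two measures have the same form; only the random variable changes from elapsed time to expended effort.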

Propose evaluation methods for threat impacts

Mere threat (a potential for attack) has its impact

Consider threat properties: direct damage, indirect damage, recovery

cost, prevention overhead

Consider interaction with other threats and defensive mechanisms


Threat Research Issues (3)

Invent algorithms, methods, and design guidelines

to reduce number and severity of threats

Consider injection of unpredictability or uncertainty to

reduce threats

E.g., reduce data transfer threats by sending portions of critical

data through different routes

Investigate threats to security mechanisms themselves

Study threat detection

It might be needed for threat tolerance

Includes investigation of fraud threat detection
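The multi-route example above (sending portions of critical data through different routes) can be sketched with XOR secret splitting. This is one possible technique chosen here for illustration; the slides do not name a specific scheme, and the function names are hypothetical. With this scheme an eavesdropper on any single route sees only random-looking bytes.

```python
import secrets


def split_into_shares(data: bytes, n_routes: int) -> list[bytes]:
    """Split data into n_routes XOR shares; every share is needed to rebuild it."""
    if n_routes < 2:
        raise ValueError("need at least two routes")
    # n_routes - 1 shares are pure randomness.
    random_shares = [secrets.token_bytes(len(data)) for _ in range(n_routes - 1)]
    # The final share is the data XORed with all random shares.
    final_share = data
    for share in random_shares:
        final_share = bytes(a ^ b for a, b in zip(final_share, share))
    return random_shares + [final_share]


def combine_shares(shares: list[bytes]) -> bytes:
    """XOR all shares together to recover the original data."""
    result = bytes(len(shares[0]))
    for share in shares:
        result = bytes(a ^ b for a, b in zip(result, share))
    return result


shares = split_into_shares(b"account=42", n_routes=3)  # one share per route
recovered = combine_shares(shares)
```

The trade-off in this sketch is availability: losing any one route makes the data unrecoverable, so a real design would likely use a threshold scheme instead.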


Products, Services and Research

Programs for Industry (1)

There are numerous commercial products and services, and some free products

and services

Examples follow.

Notation used below: Product (Organization)

Example vulnerability and incident metabases

CVE (Mitre), ICAT (NIST), OSVDB (osvdb.com), Apache Week Web Server (Red Hat), Cisco Secure Encyclopedia (Cisco), DOVES (Computer Security Laboratory, UC Davis), DragonSoft Vulnerability Database (DragonSoft Security Associates), Secunia Security Advisories (Secunia), SecurityFocus Vulnerability Database (Symantec), SIOS (Yokogawa Electric Corp.), Verletzbarkeits-Datenbank (scip AG), Vigil@nce AQL (Alliance Qualité Logiciel)

Example vulnerability notification systems

CERT (SEI-CMU), Cassandra (CERIAS-Purdue), ALTAIR (esCERT-UPC), DeepSight Alert Services (Symantec), Mandrake Linux Security Advisories (MandrakeSoft)

Example other tools (1)

Vulnerability Assessment Tools (for databases, applications, web applications, etc.)

AppDetective (Application Security), NeoScanner@ESM (Inzen), AuditPro for SQL Server (Network Intelligence India Pvt. Ltd.), eTrust Policy Compliance (Computer Associates), Foresight (Cubico Solutions CC), IBM Tivoli Risk Manager (IBM), Internet Scanner (Internet Security Systems), NetIQ Vulnerability Manager (NetIQ), N-Stealth (N-Stalker), QualysGuard (Qualys), Retina Network Security Scanner (eEye Digital Security), SAINT (SAINT Corp.), SARA (Advanced Research Corp.), STAT-Scanner (Harris Corp.), StillSecure VAM (StillSecure), Symantec Vulnerability Assessment (Symantec)

Automated Scanning Tools, Vulnerability Scanners

Automated Scanning (Beyond Security Ltd.), ipLegion/intraLegion (E*MAZE Networks), Managed Vulnerability

Assessment (LURHQ Corp.), Nessus Security Scanner (The Nessus Project), NeVO (Tenable Network Security)


Products, Services and Research

Programs for Industry (2)

Example other tools (2)

Vulnerability and Penetration Testing

Attack Tool Kit (Computec.ch), CORE IMPACT (Core Security Technologies), LANPATROL (Network Security Syst.)

Intrusion Detection System

Cisco Secure IDS (Cisco), Cybervision Intrusion Detection System (Venus Information Technology), Dragon Sensor (Enterasys Networks), McAfee IntruShield IDS (McAfee), NetScreen-IDP (NetScreen Technologies), Network Box Internet Threat Protection Device (Network Box Corp.)

Threat Management Systems

Symantec ManHunt (Symantec)

Example services

Vulnerability Scanning Services

Netcraft Network Examination Service (Netcraft Ltd.)

Vulnerability Assessment and Risk Analysis Services

ActiveSentry (Intranode), Risk Analysis Subscription Service (Strongbox Security), SecuritySpace Security Audits (E-Soft), Westpoint Enterprise Scan (Westpoint Ltd.)

Threat Notification

TruSecure IntelliSHIELD Alert Manager (TruSecure Corp.)

Patches

Software Security Updates (Microsoft)

More on metabases/tools/services: http://www.cve.mitre.org/compatible/product.html

Example Research Programs

Microsoft Trustworthy Computing (Security, Privacy, Reliability, Business Integrity)

IBM

Almaden: information security; Zurich: information security, privacy, and cryptography;

Secure Systems Department; Internet Security group; Cryptography Research Group


Applying Reliability Principles

to Security Research (1)

Apply the science and engineering from Reliability to

Security [6]

Analogies in basic notions [6, 7]

Fault – vulnerability

Error (enabled by a fault) – threat (enabled by a vulnerability)

Failure/crash (materializes a fault, consequence of an error) –

Security breach (materializes a vulnerability, consequence of a threat)

Time - effort analogies: [18]

time-to-failure distribution for accidental failures –

expended effort-to-breach distribution for intentional security

breaches

This is not a “direct” analogy: it considers important differences

between Reliability and Security

Most important: intentional human factors in Security


Applying Reliability Principles

to Security Research (2)

Analogies from fault avoidance/tolerance [27]

Fault avoidance - threat avoidance

Fault tolerance - threat tolerance (gracefully adapts to threats that

have materialized)

Maybe threat avoidance/tolerance should be named: vulnerability

avoidance/tolerance

(to be consistent with the vulnerability - fault analogy)

Analogy:

To deal with failures, build fault-tolerant systems

To deal with security breaches, build threat-tolerant systems


Applying Reliability Principles

to Security Research (3)

Examples of solutions using fault tolerance analogies

Voting and quorums

To increase reliability - require a quorum of voting replicas

To increase security - make forming voting quorums more difficult

Checkpointing applied to intrusion detection

This is not a “direct” analogy but a kind of its “reversal”

To increase reliability – use checkpoints to bring system back to a

reliable (e.g., transaction consistent) state

To increase security - use checkpoints to bring system back to a

secure state

Adaptability / self-healing

Adapt to common and less severe security breaches as we adapt to

every-day and relatively benign failures

Adapt to: timing / severity / duration / extent of a security breach
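The voting-and-quorum analogy above can be sketched as follows. This is a minimal illustration, not a mechanism described in the slides: `quorum_vote` and its parameters are hypothetical names, and the replies stand for values returned by replicas, some of which may be faulty or compromised.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def quorum_vote(replies: Sequence[Hashable], quorum: int) -> Optional[Hashable]:
    """Return the value reported by at least `quorum` replicas, else None.

    Assumption: quorum is a strict majority of the replicas, so at most
    one value can ever reach it.
    """
    if not replies:
        return None
    value, count = Counter(replies).most_common(1)[0]
    return value if count >= quorum else None


# One of three replicas returns a tampered value; the majority still wins.
accepted = quorum_vote(["balance=100", "balance=100", "balance=999"], quorum=2)
# Raising the quorum makes it harder to form a winning coalition.
rejected = quorum_vote(["balance=100", "balance=100", "balance=999"], quorum=3)
```

The same procedure runs whether or not an attack is in progress, which is why explicit attack detection is optional for voting schemes.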


Applying Reliability Principles

to Security Research (4)

Beware: Reliability analogies are not always helpful

Differences between seemingly identical notions

E.g., “system boundaries” are less open for Reliability than for Security

No simple analogies exist for intentional security breaches

arising from planted malicious faults

In such cases, analogy of time (Reliability) to effort (Security) is

meaningless

E.g., sequential time vs. non-sequential effort

E.g., long time duration vs. “nearly instantaneous” effort

No simple analogies exist when attack efforts are

concentrated in time

As before, analogy of time to effort is meaningless


Basic Idea - Using Trust

in Role-based Access Control (RBAC)

Traditional identity-based approaches to access

control are inadequate

Don’t fit open computing, incl. Internet-based computing [28]

Idea: Use trust to enhance user authentication and

authorization

Enhance role-based access control (RBAC)

Use trust in addition to traditional credentials

Trust based on user behavior

Trust is related to vulnerabilities and threats

Trustworthy users:

Don’t exploit vulnerabilities

Don’t become threats


Overview - Using Trust in RBAC (1)

Trust-enhanced role-mapping (TERM) server added

to a system with RBAC

Collect and use evidence related to trustworthiness

of user behavior

Formalize evidence type, evidence

Different forms of evidence must be accommodated

Evidence statement: incl. evidence and opinion

Opinion tells how much the evidence provider trusts the evidence he provides


Overview - Using Trust in RBAC (2)

TERM architecture includes:

Algorithm to evaluate credibility of evidence

Based on its associated opinion and evidence about trustworthiness of the opinion’s issuer

Declarative language to define role assignment policies

Algorithm to assign roles to users

Based on role assignment policies and evidence statements

Its output is used to grant or disallow access requests

Algorithm to continuously update trustworthiness ratings for users

Trustworthiness rating of a recommender is affected by trustworthiness ratings of all users he recommended


Overview - Using Trust in RBAC (3)

A prototype TERM server

Software available at:

http://www.cs.purdue.edu/homes/bb/NSFtrust.html

More details on “Using Trust in RBAC”

available in the extended version of this presentation

at: www.cs.purdue.edu/people/bb#colloquia


Access Control: RBAC & TERM Server

Role-based access control (RBAC)

Trust-enhanced role-mapping (TERM) server

cooperates with RBAC

[Figure: the user requests roles from the TERM server, which sends roles back; the user then requests access from the RBAC-enhanced web server, which responds.]


Evidence

Evidence:

Direct evidence

User/issuer behavior observed by TERM

First-hand information

OR:

Indirect evidence (recommendation)

Recommender’s opinion w.r.t. trust in a user/issuer

Second-hand information


Evidence Model

Design considerations:

Evidence type

Accommodate different forms of evidence in an integrated

framework

Support credibility evaluation

Specify information required by this evidence type (et)

(et_id, (attr_name, attr_domain, attr_type)* )

E.g.: (student, [{name, string, mand}, {university, string, mand}, {department, string, opt}])

Evidence

Evidence is an instance of an evidence type
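The evidence-type tuple above can be sketched as a small data structure. This is an illustrative reading, not the TERM prototype's code: the class names and the `accepts` check are hypothetical, and `mand`/`opt` are modelled as a boolean.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attribute:
    name: str         # attr_name
    domain: type      # attr_domain, e.g. str for "string"
    mandatory: bool   # attr_type: mand (True) or opt (False)


@dataclass(frozen=True)
class EvidenceType:
    et_id: str
    attributes: tuple[Attribute, ...]

    def accepts(self, evidence: dict) -> bool:
        """True if evidence is a valid instance of this evidence type."""
        known = {attr.name for attr in self.attributes}
        if any(key not in known for key in evidence):
            return False  # unknown attribute
        for attr in self.attributes:
            if attr.name not in evidence:
                if attr.mandatory:
                    return False  # missing mandatory attribute
                continue
            if not isinstance(evidence[attr.name], attr.domain):
                return False  # value outside the attribute's domain
        return True


# The "student" evidence type from the slide.
STUDENT = EvidenceType("student", (
    Attribute("name", str, True),
    Attribute("university", str, True),
    Attribute("department", str, False),
))

valid = STUDENT.accepts({"name": "Alice", "university": "Purdue"})
invalid = STUDENT.accepts({"name": "Alice"})  # university is mandatory
```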


Evidence Model – cont.

Opinion

(belief, disbelief, uncertainty)

Probability expectation of Opinion

Belief + 0.5 * uncertainty

Characterizes the degree of trust represented by an opinion

Alternative representation

Fuzzy expression

Uncertainty vs. vagueness

Evidence statement

<issuer, subject, evidence, opinion>
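The opinion triple and the evidence statement above can be sketched as two small classes. The class and field names are hypothetical; the check that the three components are non-negative and sum to 1 is an assumption made here, not something the slide states.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Opinion:
    belief: float
    disbelief: float
    uncertainty: float

    def __post_init__(self) -> None:
        # Assumption: components are non-negative and sum to 1.
        total = self.belief + self.disbelief + self.uncertainty
        if min(self.belief, self.disbelief, self.uncertainty) < 0:
            raise ValueError("opinion components must be non-negative")
        if abs(total - 1.0) > 1e-9:
            raise ValueError("opinion components must sum to 1")

    def probability_expectation(self) -> float:
        """Degree of trust represented by the opinion: belief + 0.5 * uncertainty."""
        return self.belief + 0.5 * self.uncertainty


@dataclass(frozen=True)
class EvidenceStatement:
    issuer: str
    subject: str
    evidence: Any
    opinion: Opinion


statement = EvidenceStatement(
    issuer="registrar",            # hypothetical issuer
    subject="alice",               # hypothetical subject
    evidence={"name": "Alice", "university": "Purdue"},
    opinion=Opinion(belief=0.6, disbelief=0.1, uncertainty=0.3),
)
expectation = statement.opinion.probability_expectation()
```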


TERM Server Architecture

[Figure: TERM server architecture. Labels: users’ behaviors; trust information management (user’s trust, issuer’s trust); user/issuer information database; role assignment (assigned roles); evidence evaluation (evidence statement, credibility); credential management (evidence statement). Inputs: credentials provided by third parties or retrieved from the Internet; role-assignment policies specified by system administrators. Legend: component implemented / component partially implemented.]

Credential Management (CM) – transforms different formats of credentials to evidence statements

Evidence Evaluation (EE) - evaluates credibility of evidence statements

Role Assignment (RA) - maps roles to users based on evidence statements and role assignment policies

Trust Information Management (TIM) - evaluates user/issuer’s trust information based on direct experience

and recommendations


EE - Evidence Evaluation

Develop an algorithm to evaluate credibility of

evidence

Issuer’s opinion cannot be used as credibility of evidence

Two types of information used:

Evidence Statement

Issuer’s opinion

Evidence type

Trust w.r.t. issuer for this kind of evidence type


EE - Evidence Evaluation Algorithm

Input: evidence statement E1 = <issuer, subject, evidence, opinion1>

Output: credibility RE(E1) of evidence statement E1

Step1: get opinion1 = <b1, d1, u1> and issuer field from evidence statement E1

Step2: get the evidence statement about issuer’s testimony_trust E2 = <term_server, issuer, testimony_trust, opinion2> from local database

Step3: get opinion2 = <b2, d2, u2> from evidence statement E2

Step4: compute opinion3 = <b3, d3, u3 >

(1) b3 = b1 * b2

(2) d3 = b1 * d2

(3) u3 = d1 + u1 + b1 * u2

Step5: compute probability expectation for opinion3 = < b3, d3, u3 >

PE (opinion3) = b3 + 0.5 * u3

Step6: RE (E1) = PE (opinion3)
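The steps above can be sketched in a few lines. Two assumptions are made here and should be checked against the original paper: the database lookup of Step 2 is replaced by passing opinion2 in directly, and the third component is taken as d1 + u1 + b1 * u2, the form under which b3 + d3 + u3 = 1 whenever both input opinions sum to 1. Function and class names are hypothetical.

```python
from typing import NamedTuple


class Opinion(NamedTuple):
    b: float  # belief
    d: float  # disbelief
    u: float  # uncertainty


def combine(opinion1: Opinion, opinion2: Opinion) -> Opinion:
    """Step 4: compute opinion3 from opinion1 and opinion2."""
    b3 = opinion1.b * opinion2.b
    d3 = opinion1.b * opinion2.d
    # Assumed form of the third component (keeps b3 + d3 + u3 = 1).
    u3 = opinion1.d + opinion1.u + opinion1.b * opinion2.u
    return Opinion(b3, d3, u3)


def credibility(opinion1: Opinion, opinion2: Opinion) -> float:
    """Steps 5-6: RE(E1) is the probability expectation of opinion3."""
    opinion3 = combine(opinion1, opinion2)
    return opinion3.b + 0.5 * opinion3.u


# Hypothetical opinions, for illustration only.
op1 = Opinion(b=0.8, d=0.1, u=0.1)
op2 = Opinion(b=0.9, d=0.0, u=0.1)
op3 = combine(op1, op2)
re_e1 = credibility(op1, op2)
```

With these sample values, opinion3 is roughly (0.72, 0.0, 0.28), so the credibility comes out near 0.86.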


RA - Role Assignment

Design a declarative language for system administrators to

define role assignment policies

Specify content and number of evidence statements needed for role

assignment

Define a threshold value characterizing the minimal degree of trust

expected for each evidence statement

Specify trust constraints that a user/issuer must satisfy to obtain a role

Develop an algorithm to assign roles based on policies

Several policies may be associated with a role

The role is assigned if one of them is satisfied

A policy may contain several units

The policy is satisfied if all units evaluate to True


RA - Algorithm for Policy Evaluation

Input: evidence set E and their credibility, role A

Output: true/false

P ← the set of policies whose left-hand side is role A

while P is not empty {
    q ← a policy in P
    satisfy ← true
    for each unit u in q {
        if evaluate_unit(u, e, re(e)) = false for all evidence statements e in E
            satisfy ← false
    }
    if satisfy = true
        return true
    else
        remove q from P
}

return false
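The policy evaluation loop above reduces to "some policy has every unit satisfied by at least one evidence statement". A minimal sketch under that reading follows; units are modelled as plain callables, which is an assumption of this example, and all names are hypothetical.

```python
from typing import Callable, Iterable, Sequence

# A unit takes (evidence statement, credibility) and returns True or False.
Unit = Callable[[object, float], bool]
Policy = Sequence[Unit]


def role_granted(policies: Iterable[Policy],
                 evidence: Sequence[tuple[object, float]]) -> bool:
    """Return True if any policy for the role is satisfied.

    A policy is satisfied when every one of its units evaluates to True
    for at least one (evidence statement, credibility) pair.
    """
    for policy in policies:
        if all(any(unit(e, re) for e, re in evidence) for unit in policy):
            return True
    return False


# Hypothetical units, for illustration only.
def is_student(e: object, re: float) -> bool:
    return e == "student_id" and re >= 0.7


def is_member(e: object, re: float) -> bool:
    return e == "membership" and re >= 0.5


evidence_set = [("student_id", 0.8), ("membership", 0.6)]
granted = role_granted([[is_student, is_member]], evidence_set)
denied = role_granted([[is_student, is_member]], [("student_id", 0.8)])
```

As in the pseudocode, a policy with no units is trivially satisfied, so an empty policy grants the role.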


RA - Algorithm for Unit Evaluation

Input: evidence statement E1 = <issuer, subject, evidence, opinion1> and

its credibility RE (E1), a unit of policy U

Output: true/false

Step1: if issuer does not hold the IssuerRole specified in U or the type

of evidence does not match evidence_type in U then return false

Step2: evaluate Exp of U as follows:

(1) if Exp1 = “Exp2 || Exp3” then

result(Exp1) = max(result(Exp2), result(Exp3))

(2) else if Exp1 = “Exp2 && Exp3” then

result(Exp1) = min(result(Exp2), result(Exp3))

(3) else if Exp1 = “attr Op Constant” then

if Op ∈ {EQ, GT, LT, EGT, ELT} then

if “attr Op Constant” = true then result(Exp1) = RE(E1)

else result(Exp1) = 0

else if Op = NEQ then

if “attr Op Constant” = true then result(Exp1) = RE(E1)

else result(Exp1) = 1 - RE(E1)

Step3: if min(result(Exp), RE(E1)) ≥ threshold in U

then output true else output false
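Steps 2 and 3 above can be sketched as a recursive evaluator. Several points are assumptions of this example: the expression classes are hypothetical, EGT and ELT are read as "greater or equal" and "less or equal", the final comparison against the threshold is taken as "at least", every attribute named in an expression is assumed to be present in the evidence, and Step 1 (issuer role and evidence type checks) is left out.

```python
import operator
from dataclasses import dataclass
from typing import Union

# Assumed meaning of the operator names used in policy units.
COMPARATORS = {
    "EQ": operator.eq, "NEQ": operator.ne,
    "GT": operator.gt, "LT": operator.lt,
    "EGT": operator.ge, "ELT": operator.le,
}


@dataclass(frozen=True)
class Cmp:
    attr: str
    op: str
    constant: object


@dataclass(frozen=True)
class Or:
    left: "Exp"
    right: "Exp"


@dataclass(frozen=True)
class And:
    left: "Exp"
    right: "Exp"


Exp = Union[Cmp, Or, And]


def result(exp: Exp, evidence: dict, re: float) -> float:
    """Step 2: degree to which the evidence satisfies the expression."""
    if isinstance(exp, Or):
        return max(result(exp.left, evidence, re), result(exp.right, evidence, re))
    if isinstance(exp, And):
        return min(result(exp.left, evidence, re), result(exp.right, evidence, re))
    holds = COMPARATORS[exp.op](evidence[exp.attr], exp.constant)
    if holds:
        return re
    # A failed NEQ test may still hold if the evidence itself is wrong.
    return 1.0 - re if exp.op == "NEQ" else 0.0


def unit_satisfied(exp: Exp, evidence: dict, re: float, threshold: float) -> bool:
    """Step 3: compare against the unit's threshold."""
    return min(result(exp, evidence, re), re) >= threshold


# Hypothetical evidence and expression, for illustration only.
evidence = {"university": "Purdue", "year": 1, "department": "CS"}
exp = And(Cmp("university", "EQ", "Purdue"),
          Or(Cmp("year", "GT", 2), Cmp("department", "EQ", "CS")))
degree = result(exp, evidence, re=0.8)
```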


TIM - Trust Information Management

Evaluate “current knowledge”

“Current knowledge:”

Interpretations of observations

Recommendations

Developed algorithm that evaluates trust towards a user

User’s trustworthiness affects trust towards issuers who

introduced user

Predict trustworthiness of a user/issuer

Current approach uses the result of evaluation as

the prediction


Prototype TERM Server

Defining role assignment policies

Loading evidence for role assignment

Software: http://www.cs.purdue.edu/homes/bb/NSFtrust.html


Basic Terms - Privacy-preserving Data

Dissemination

[Figure: dissemination graph linking the “Owner” (private data owner) and the “Data” (private data) to Guardian 1 (the original guardian) and on to second-level and third-level guardians (Guardians 2 to 6).]

“Guardian:”

Entity entrusted by private data owners with collection, storage, or transfer of their data

owner can be a guardian for its own private data

owner can be an institution or a computing system

Guardians allowed or required by law to share private data

With owner’s explicit consent

Without the consent as required by law

research, court order, etc.


Problem of Privacy Preservation

Guardian passes private data to another guardian in a data dissemination chain

Chain within a graph (possibly cyclic)

Owner privacy preferences not transmitted due to neglect or failure

Risk grows with chain length and milieu fallibility and hostility

If preferences lost, receiving guardian unable to honor them


Challenges

Ensuring that owner’s metadata are never

decoupled from his data

Metadata include owner’s privacy preferences

Efficient protection in a hostile milieu

Threats - examples

Uncontrolled data dissemination

Intentional or accidental data corruption, substitution, or

disclosure

Detection of a loss of data or metadata

Efficient recovery of data and metadata

Recovery by retransmission from the original guardian is

most trustworthy


Overview - Privacy-preserving Data

Dissemination

Use bundles to make data and metadata inseparable

bundle = self-descriptive private data + its metadata

E.g., encrypt or obfuscate bundle to prevent separation

Each bundle includes mechanism for apoptosis

apoptosis = clean self-destruction

Bundle chooses apoptosis when threatened with a successful hostile

attack

Develop distance-based evaporation of bundles

E.g., the more “distant” a bundle is from its owner, the more it evaporates (becoming more distorted)

More details on “Privacy-preserving Data Dissemination”

available in the extended version of this presentation

at: www.cs.purdue.edu/people/bb#colloquia


Proposed Approach

A. Design bundles

bundle = self-descriptive private data + its metadata

B. Construct a mechanism for apoptosis of bundles

apoptosis = clean self-destruction

C. Develop distance-based evaporation of bundles


Related Work

Self-descriptiveness

Many papers use the idea of self-descriptiveness in diverse contexts (meta data model, KIF, context-aware mobile infrastructure, flexible data types)

Use of self-descriptiveness for data privacy

The idea briefly mentioned in [Rezgui, Bouguettaya, and Eltoweissy, 2003]

Securing mobile self-descriptive objects

Esp. securing them via apoptosis, that is clean self-destruction [Tschudin, 1999]

Specification of privacy preferences and policies

Platform for Privacy Preferences [Cranor, 2003]

AT&T Privacy Bird [AT&T, 2004]


A. Self-descriptiveness in Bundles

Comprehensive metadata include:

owner’s privacy preferences: how to read and write private data

owner’s contact information: to notify or request permission

guardian privacy policies: for the original and/or subsequent data guardians

metadata access conditions: how to verify and modify metadata

enforcement specifications: how to enforce preferences and policies

data provenance: who created, read, modified, or destroyed any portion of data

context-dependent and other components: application-dependent elements, customer trust levels for different contexts, other metadata elements
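A minimal sketch of how a bundle could keep private data together with the metadata components listed above. The class and field names (`Bundle`, `Metadata`, `record_access`) are hypothetical and chosen only for illustration; the slides do not prescribe a data layout.

```python
from dataclasses import dataclass, field


@dataclass
class Metadata:
    """Hypothetical container mirroring the metadata components listed above."""
    privacy_preferences: dict                                # how private data may be read and written
    owner_contact: str                                       # used to notify the owner or request permission
    guardian_policies: list = field(default_factory=list)    # policies of original/subsequent guardians
    access_conditions: dict = field(default_factory=dict)    # how metadata may be verified and modified
    enforcement_specs: dict = field(default_factory=dict)    # how preferences and policies are enforced
    provenance: list = field(default_factory=list)           # who created, read, modified, or destroyed data
    context: dict = field(default_factory=dict)              # application-dependent elements


@dataclass
class Bundle:
    """Private data kept together with its metadata."""
    data: dict
    metadata: Metadata

    def record_access(self, guardian: str, action: str) -> None:
        # Append a provenance entry so the access history travels with the data.
        self.metadata.provenance.append((guardian, action))


bundle = Bundle(
    data={"address": "250 N. Salisbury Street, West Lafayette, IN"},
    metadata=Metadata(
        privacy_preferences={"share_outside_office": False},
        owner_contact="owner@example.org",
    ),
)
bundle.record_access("Guardian 1", "read")
```

Keeping provenance inside the metadata means every guardian that receives the bundle also receives its access history.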


Bundle Owner Notification

Bundles simplify notifying owners or requesting

their permissions

Contact information available in owner’s contact

information component

Notifications and requests sent to owners

immediately, periodically, or on owner’s demand

Via pagers, SMSs, email, mail, etc.


Optimization of Bundle Transmission

Transmitting complete bundles between

guardians is inefficient

Metadata in bundles describe all foreseeable aspects

of data privacy

For any application and environment

Solution: prune transmitted metadata

Use application and environment semantics along the

data dissemination chain
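One way to picture metadata pruning is a filter that forwards only the components the next guardian needs. This is a sketch under assumptions: the function `prune_metadata`, the key names, and the rule encoded in `BANK_KEYS` are all hypothetical.

```python
def prune_metadata(metadata: dict, relevant_keys: set) -> dict:
    """Return only the metadata components relevant to the next guardian."""
    return {key: value for key, value in metadata.items() if key in relevant_keys}


full_metadata = {
    "privacy_preferences": {"share_outside_office": False},
    "owner_contact": "owner@example.org",
    "medical_context": {"trust_level": 0.9},
    "banking_context": {"trust_level": 0.6},
}

# Hypothetical rule: a bank needs the core components plus its own context only.
BANK_KEYS = {"privacy_preferences", "owner_contact", "banking_context"}
pruned = prune_metadata(full_metadata, BANK_KEYS)
```

The original dictionary is left untouched, so the sender still holds the complete metadata.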


B. Apoptosis of Bundles

Assuring privacy in data dissemination

In benevolent settings:

use atomic bundles with retransmission recovery

In malevolent settings:

when an attacked bundle is threatened with disclosure, it uses apoptosis (clean self-destruction)

Implementation

Detectors, triggers, code

False positives

Dealt with by retransmission recovery

Limit repetitions to prevent denial-of-service attacks

False negatives
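The interplay of apoptosis, retransmission recovery, and the repetition limit can be sketched as follows. This is an illustrative toy, not the authors' mechanism: the classes `ApoptoticBundle` and `OriginalGuardian`, the `threat_detected` flag standing in for a real detector, and the per-receiver limit are all assumptions.

```python
class ApoptoticBundle:
    """Toy bundle that wipes itself when a detector reports a threat."""

    def __init__(self, data: dict, metadata: dict):
        self._data = dict(data)
        self._metadata = dict(metadata)
        self.destroyed = False

    def apoptosis(self) -> None:
        # Clean self-destruction: discard both data and metadata.
        self._data.clear()
        self._metadata.clear()
        self.destroyed = True

    def read(self, threat_detected: bool) -> dict:
        # The detector result is passed in; a real detector is out of scope here.
        if threat_detected:
            self.apoptosis()
        if self.destroyed:
            raise RuntimeError("bundle destroyed")
        return dict(self._data)


class OriginalGuardian:
    """Retransmits bundles, but only a limited number of times per receiver."""

    def __init__(self, data: dict, metadata: dict, max_retransmissions: int = 3):
        self._data, self._metadata = data, metadata
        self._max = max_retransmissions
        self._sent = {}

    def retransmit(self, receiver: str) -> ApoptoticBundle:
        count = self._sent.get(receiver, 0)
        if count >= self._max:
            # Capping repetitions limits denial-of-service via forced apoptosis.
            raise PermissionError("retransmission limit reached")
        self._sent[receiver] = count + 1
        return ApoptoticBundle(self._data, self._metadata)
```

A false positive destroys only the receiver's copy; the receiver can request a fresh bundle until the limit is reached.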


C. Distance-based Evaporation

of Bundles

Perfect data dissemination not always desirable

Example:

Confidential business data may be shared within

an office but not outside

Idea: Bundle evaporates in proportion to its “distance” from its owner

“Closer” guardians trusted more than “distant” ones

Illegitimate disclosures more probable at less trusted

“distant” guardians

Different distance metrics

Context-dependent


Examples of Metrics

Examples of one-dimensional distance metrics

Distance ~ business type

[Figure: graph of guardians with edge distances of 1, 2, and 5. Nodes: Bank I (Original Guardian), Bank II, Bank III, Insurance Company A, Insurance Company B, Insurance Company C, Used Car Dealer 1, Used Car Dealer 2, Used Car Dealer 3.]

If a bank is the original guardian, then:

-- any other bank is “closer” than any insurance company

-- any insurance company is “closer” than any used car dealer

Distance ~ distrust level: more trusted entities are “closer”

Multi-dimensional distance metrics

Security/reliability as one of the dimensions
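The metrics above can be illustrated with a small sketch. The numeric distances echo the edge labels 1, 2, and 5 in the figure, but their assignment to business types is an assumption, as are the function names and the choice of a Euclidean combination for the multi-dimensional case.

```python
import math

# Hypothetical distances from a bank acting as the original guardian.
BUSINESS_TYPE_DISTANCE = {"bank": 1, "insurance": 2, "used_car_dealer": 5}


def business_distance(guardian_type: str) -> int:
    """One-dimensional metric: distance determined by business type alone."""
    return BUSINESS_TYPE_DISTANCE[guardian_type]


def combined_distance(guardian_type: str, distrust: float) -> float:
    """Two-dimensional metric: business type and distrust level (0 = fully trusted)."""
    return math.hypot(business_distance(guardian_type), distrust)
```

With these values any bank is closer than any insurance company, which in turn is closer than any used car dealer, and added distrust pushes a guardian further away.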


Evaporation Implemented as

Controlled Data Distortion

Distorted data reveal less, protecting privacy

Examples (from accurate to more and more distorted):

250 N. Salisbury Street, West Lafayette, IN -> Salisbury Street, West Lafayette, IN -> somewhere in West Lafayette, IN

250 N. Salisbury Street, West Lafayette, IN [home address] -> 250 N. University Street, West Lafayette, IN [office address] -> P.O. Box 1234, West Lafayette, IN [P.O. box]

765-123-4567 [home phone] -> 765-987-6543 [office phone] -> 765-987-4321 [office fax]
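The address example above suggests a simple generalization scheme. The sketch below is one possible reading: the function name `evaporate_address` and the distance thresholds are assumptions, not values given in the presentation.

```python
def evaporate_address(street_number: str, street: str, city: str, distance: int) -> str:
    """Return a less precise address as the distance from the owner grows."""
    if distance <= 1:
        return f"{street_number} {street}, {city}"   # accurate
    if distance <= 2:
        return f"{street}, {city}"                    # street number removed
    return f"somewhere in {city}"                     # only the city remains
```

Each step discards detail rather than inventing false data, so a distant guardian learns less but is not misled.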


Outline

1. Vulnerabilities

2. Threats

3. Mechanisms to Reduce Vulnerabilities

and Threats

3.1. Applying Reliability and Fault Tolerance

Principles to Security Research

3.2. Using Trust in Role-based Access

Control

3.3. Privacy-preserving Data Dissemination

3.4. Fraud Countermeasure Mechanisms


Overview - Fraud Countermeasure

Mechanisms (1)

System monitors user behavior

System decides whether user’s behavior qualifies as

fraudulent

Three types of fraudulent behavior identified:

“Uncovered deceiving intention”

User misbehaves all the time

“Trapping intention”

User behaves well at first, then commits fraud

“Illusive intention”

User exhibits cyclic behavior: longer periods of proper behavior separated by shorter periods of misbehavior


Overview - Fraud Countermeasure

Mechanisms (2)

System architecture for swindler detection

Profile-based anomaly detector

State transition analysis

Provides state description when an activity results in entering a

dangerous state

Deceiving intention predictor

Monitors suspicious actions searching for identified fraudulent

behavior patterns

Discovers deceiving intention based on satisfaction ratings

Decision making

Decides whether to raise fraud alarm when deceiving pattern is

discovered


Overview - Fraud Countermeasure

Mechanisms (3)

Experiments validated the architecture

All three types of fraudulent behavior were quickly

detected

More details on “Fraud Countermeasure Mechanisms”

available in the extended version of this presentation

at: www.cs.purdue.edu/people/bb#colloqia


Formal Definitions

A swindler – an entity that has no intention to keep

his commitment in cooperation

Commitment: conjunction of expressions describing

an entity’s promise in a process of cooperation

Example: (Received_by=04/01) ∧ (Prize=$1000) ∧ (Quality=“A”) ∧ ReturnIfAnyQualityProblem

Outcome: conjunction of expressions describing

the actual results of a cooperation

Example: (Received_by=04/05) ∧ (Prize=$1000) ∧ (Quality=“B”) ∧ ¬ReturnIfAnyQualityProblem


Formal Definitions

Intention-testifying: indicates a swindler

Predicate P: ¬P in an outcome ⇒ the entity making the promise is a swindler

Attribute variable V: V's expected value is more desirable than the actual value ⇒ the entity is a swindler

Intention-dependent: indicates a possibility

Predicate P: ¬P in an outcome ⇒ the entity making the promise may be a swindler

Attribute variable V: V's expected value is more desirable than the actual value ⇒ the entity may be a swindler

An intention-testifying variable or predicate is

intention-dependent

The opposite is not necessarily true
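The definitions above can be exercised on the commitment and outcome from the earlier example. This is a sketch under assumptions: the helper names, the per-variable "more desirable" comparators, the reading of Prize as a price where lower is better, and the choice of which names count as intention-testifying are all hypothetical.

```python
def violated(commitment: dict, outcome: dict, more_desirable: dict) -> set:
    """Names of predicates/variables whose outcome is worse than promised."""
    bad = set()
    for name, promised in commitment.items():
        actual = outcome[name]
        if isinstance(promised, bool):
            # Predicate P was promised but not-P appears in the outcome.
            if promised and not actual:
                bad.add(name)
        elif more_desirable[name](promised, actual):
            # The expected value is more desirable than the actual value.
            bad.add(name)
    return bad


def classify(commitment: dict, outcome: dict, more_desirable: dict, testifying: set) -> str:
    """Apply the intention-testifying / intention-dependent distinction."""
    bad = violated(commitment, outcome, more_desirable)
    if bad & testifying:
        return "swindler"
    if bad:
        return "may be a swindler"
    return "no indication"


commitment = {"Received_by": (4, 1), "Prize": 1000, "Quality": "A",
              "ReturnIfAnyQualityProblem": True}
outcome = {"Received_by": (4, 5), "Prize": 1000, "Quality": "B",
           "ReturnIfAnyQualityProblem": False}
more_desirable = {
    "Received_by": lambda promised, actual: promised < actual,  # earlier delivery is better
    "Prize": lambda promised, actual: promised < actual,        # assumed: lower is better
    "Quality": lambda promised, actual: promised < actual,      # "A" is better than "B"
}

verdict = classify(commitment, outcome, more_desirable,
                   testifying={"ReturnIfAnyQualityProblem"})
```

Because every intention-testifying name is also intention-dependent, passing an empty `testifying` set downgrades the same outcome to "may be a swindler".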


Modeling Deceiving Intentions (1)

Satisfaction rating

Associate it with the actual value of each intention-dependent variable in an outcome

Range: [0,1]

The higher the rating, the more satisfied the user

Related to deceiving intention and unpredictable factors

Modeled by using random variable with normal distribution

Mean function fm(n)

Mean value of normal distribution for n-th rating
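A satisfaction rating drawn this way could be simulated as below. The function name `sample_rating`, the default standard deviation, and the clipping of samples to the range [0, 1] are assumptions made for the sketch; the slides specify only the normal distribution and the mean function.

```python
import random


def sample_rating(n: int, mean_fn, sigma: float = 0.05, rng=random) -> float:
    """Draw the n-th satisfaction rating from a normal distribution, clipped to [0, 1]."""
    value = rng.gauss(mean_fn(n), sigma)
    return min(1.0, max(0.0, value))


# Example: a constant mean function and a seeded generator for repeatability.
rating = sample_rating(1, lambda n: 0.8, rng=random.Random(0))
```

The mean captures the deceiving intention, while the spread around it stands for the unpredictable factors.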


Modeling Deceiving Intentions (2)

Uncovered deceiving

intention

Satisfaction ratings are

stably low

Ratings vary in a small

range over time


Modeling Deceiving Intentions (3)

Trapping intention

Rating sequence has

two phases:

preparing and trapping

A swindler initially behaves well (to achieve a trustworthy image), then commits fraud


Modeling Deceiving Intentions (4)

Illusive intention

A smart swindler

attempts to “cover”

bad behavior by

intentionally doing

something good after

misbehaviors

Preparing and trapping

phases are repeated

cyclically
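The three deceiving intentions can be summarized as three shapes of the mean rating over the interaction number n. The sketch below is illustrative only: the rating levels 0.9 and 0.1, the switch point, and the phase lengths are hypothetical values, not the ones plotted in the presentation.

```python
def uncovered_mean(n: int) -> float:
    """Uncovered deceiving intention: ratings stay low for every interaction."""
    return 0.1


def trapping_mean(n: int, switch: int = 50) -> float:
    """Trapping intention: high ratings while preparing, low ratings while trapping."""
    return 0.9 if n <= switch else 0.1


def illusive_mean(n: int, good_run: int = 20, bad_run: int = 5) -> float:
    """Illusive intention: preparing and trapping phases repeat cyclically."""
    position = (n - 1) % (good_run + bad_run)
    return 0.9 if position < good_run else 0.1
```

With the defaults, the illusive pattern shows 20 good interactions followed by 5 bad ones, matching the description of longer periods of proper behavior separated by shorter periods of misbehavior.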


Architecture for Swindler Detection (1)


Architecture for Swindler Detection (2)

Profile-based anomaly detector

State transition analysis

Provides state description when an activity results in

entering a dangerous state

Deceiving intention predictor

Monitors suspicious actions based upon the established

behavior patterns of an entity

Discovers deceiving intention based on satisfaction ratings

Decision making


Profile-based Anomaly Detector (1)


Profile-based Anomaly Detector (2)

Rule generation and weighting

User profiling

Generate fraud rules and weights associated with rules

Variable selection

Data filtering

Online detection

Retrieve rules when an activity occurs

Retrieve current and historical behavior patterns

Calculate deviation between these two patterns
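The online detection step compares a current behavior pattern with a historical one. The slides do not define the deviation measure, so the sketch below assumes a weighted mean absolute difference over features normalized to [0, 1]; the function names, feature names, and threshold are hypothetical.

```python
def deviation(current: dict, historical: dict, weights: dict) -> float:
    """Weighted mean absolute difference between two behavior patterns."""
    total_weight = sum(weights.values())
    diff = sum(weights[k] * abs(current[k] - historical[k]) for k in weights)
    return diff / total_weight


def is_anomalous(current: dict, historical: dict, weights: dict, threshold: float = 0.3) -> bool:
    """Flag the activity when the deviation exceeds a chosen threshold."""
    return deviation(current, historical, weights) > threshold


historical = {"purchases_per_day": 0.2, "avg_amount": 0.3}
current = {"purchases_per_day": 0.9, "avg_amount": 0.3}
weights = {"purchases_per_day": 1.0, "avg_amount": 1.0}
```

Here the weights play the role of the rule weights produced during rule generation, so features tied to stronger fraud rules can count for more.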


Deceiving Intention Predictor

Kernel of the predictor: DIP algorithm

Belief in deceiving intention is the complement of trust belief

Trust belief evaluated based on satisfaction

sequence

Trust belief properties:

Time dependent

Trustee dependent

Easy-destruction-hard-construction
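The easy-destruction-hard-construction property can be illustrated with an asymmetric update rule. This is not the DIP algorithm, whose details are not given in these slides; it is a simplified sketch in which trust moves toward each new rating, faster downward than upward. The default weights reuse the initial construction and destruction factors listed in the experimental study (Wc = 0.05, Wd = 0.1); the penalty ratios and the foul-event threshold are not modeled.

```python
def update_trust(trust: float, rating: float, wc: float = 0.05, wd: float = 0.1) -> float:
    """Move trust toward the latest rating, faster downward than upward."""
    weight = wc if rating >= trust else wd
    return trust + weight * (rating - trust)


def deceiving_belief(trust: float) -> float:
    """Belief in deceiving intention as the complement of trust belief."""
    return 1.0 - trust


# One good and one bad interaction starting from the same trust value.
after_good = update_trust(0.5, 1.0)
after_bad = update_trust(0.5, 0.0)
```

Starting from 0.5, a perfect rating raises trust by 0.025 while a zero rating lowers it by 0.05, so a single bad interaction undoes two good ones.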


Experimental Study

Goal:

Investigate DIP’s capability of discovering deceiving

intentions

Initial values for parameters:

Construction factor (Wc): 0.05

Destruction factor (Wd): 0.1

Penalty ratio for construction factor (r1): 0.9

Penalty ratio for destruction factor (r2): 0.1

Penalty ratio for supervision-period (r3): 2

Threshold for a foul event (fThreshold): 0.18


Discover Swindler with Uncovered

Deceiving Intention

Trust values are close

to the minimum rating

of interactions: 0.1

Deceiving intention

belief is high, around

0.9


Discover Swindler with Trapping

Intention

DIP responds quickly to

sharp drop in behavior

“goodness”

It takes 6 interactions

for DI-confidence to

increase from 0.2239 to

0.7592 after the sharp

drop


Discover Swindler with Illusive

Intention

DIP is able to catch this

smart swindler because

belief in deceiving

intention eventually

increases to about 0.9

The swindler's effort to mask his fraud with good behavior has less and less effect with each subsequent fraud


Conclusions for Fraud Detection

Define concepts relevant to frauds

conducted by swindlers

Model three deceiving intentions

Propose an approach for swindler detection

and an architecture realizing the approach

Develop a deceiving intention prediction

algorithm


Summary

Presented:

1. Vulnerabilities

2. Threats

3. Mechanisms to Reduce Vulnerabilities and Threats

3.1. Applying Reliability and Fault Tolerance Principles to Security

Research

3.2. Using Trust in Role-based Access Control

3.3. Privacy-preserving Data Dissemination

3.4. Fraud Countermeasure Mechanisms


Conclusions

Exciting area of research

20 years of research in Reliability can form a basis

for vulnerability and threat studies in Security

Need to quantify threats, risks, and potential impacts on distributed applications. Do not be terrorized or act out of fear

Adapt and use resources to deal with different

threat levels

Government, industry, and the public are

interested in progress in this research



The extended version of this presentation is available at:

www.cs.purdue.edu/people/bb#colloqia


Thank you!
