Class 15 Lecture: Crosstabs 1

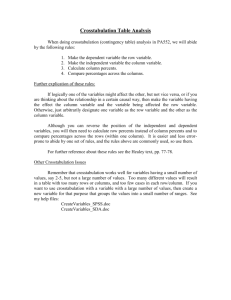

Lecture 15: Crosstabulation 1
Sociology 5811
Copyright © 2005 by Evan Schofer
Do not copy or distribute without permission

Announcements
• Final Project Assignment handed out
• Proposal due November 15
• Final Project due December 13
• Today’s class:
• New Topic: Crosstabulation
• Also called “crosstabs”
• Coming soon: correlation, regression

Crosstabulation: Introduction
• T-test and ANOVA look to see if groups differ on a continuous dependent variable
• Groups are actually a nominal variable
• Example: Do different ethnic groups vary in wages?
• A difference in means between two groups indicates a relationship between two variables
• Null hypothesis (means are the same) suggests that there is no relationship between variables
• Alternate hypothesis (means differ) is equivalent to saying that there is a relationship.

Crosstabulation: Introduction
• T-test and ANOVA determine whether there is a statistical relationship between a nominal variable and a continuous variable in your data
• But, we may be interested in two nominal variables
• Examples: Class and unemployment; gender and drug use
• Crosstabulation: used for nominal/ordinal variables
• Tools to descriptively examine variables
• Tools to identify whether there is a relationship between two variables.

Crosstabulation: Introduction
• What is bivariate crosstabulation?
• Start with two nominal variables in a dataset:
• Example: gender (Male/Female) and political party (Democrat, Republican)
• Crosstabulation is simply counting up the number of people in each combined category
• How many Democratic women? Democratic men? Republican women? Republican men?
• It is similar to computing frequencies
• But, for two variables jointly, rather than just one.

Crosstabulation: Introduction
• Example: Female = 1, Democrat = 1

| ID | Gender | Political Party |
|---|---|---|
| 1 | 0 | 1 |
| 2 | 1 | 0 |
| 3 | 1 | 1 |
| 4 | 0 | 0 |
| 5 | 0 | 0 |
| 6 | 1 | 0 |
| 7 | 1 | 1 |
| 8 | 0 | 1 |

Question: How many Republican women are in the dataset?
Answer: 2

Crosstabulation: Introduction
• Example: Dataset of 68 people
• Look and count up the number of people in each combined category
• Or, determine frequency along the first variable:
• Frequency: 43 women, 25 men
• Then break out groups by the second variable
• Of 43 women, 27 = Democrat, 16 = Republican
• Of 25 men, 10 = Democrat, 15 = Republican.

Crosstabulation: Introduction
• Crosstab: a table that presents joint frequencies
• Also called a “joint contingency table”
• Each box with a value is a “cell”
• Here, “Democrat” and “Republican” are table rows; “Women” and “Men” are table columns

|  | Women | Men |
|---|---|---|
| Democrat | 27 | 10 |
| Republican | 16 | 15 |

Crosstabulation: Introduction
• Tables may also have additional information:
• Row and column marginals (i.e., totals)
• Row marginals: 27 + 10 = 37 and 16 + 15 = 31
• Column marginals: 27 + 16 = 43 and 10 + 15 = 25
• The bottom-right value (68) is the total N

|  | Women | Men | Total |
|---|---|---|---|
| Dem | 27 | 10 | 37 |
| Rep | 16 | 15 | 31 |
| Total | 43 | 25 | 68 |

Crosstabulation: Introduction
• Tables can also reflect percentages
• Either of total N, or of row or column marginals
• This table shows percentage of total N:

|  | Women | Men | N |
|---|---|---|---|
| Dem | 27 (39.7%) | 10 (14.7%) | 37 |
| Rep | 16 (23.5%) | 15 (22.1%) | 31 |
| N | 43 | 25 | 68 |

• Just divide each cell value by the total N to get a proportion. Multiply by 100 for a percentage: (10/68)(100) = 14.7

Crosstabulation: Introduction
• In addition, you can calculate percentages with respect to either row or column marginals
• Here is an example of column percentages:

|  | Women | Men | N |
|---|---|---|---|
| Dem | 27 (62.8%) | 10 (40.0%) | 37 |
| Rep | 16 (37.2%) | 15 (60.0%) | 31 |
| N | 43 | 25 | 68 |

• Just divide each cell by the column marginal to get a proportion. Multiply by 100 for a percentage: (10/25)(100) = 40%

Crosstabulation: Independence
• Question: How can we tell if there is a relationship between the two variables?
• Answer: If a category on one variable appears to be linked to a category on the other:

|  | Women | Men | N |
|---|---|---|---|
| Dem | 43 | 0 | 43 |
| Rep | 0 | 25 | 25 |
| N | 43 | 25 | 68 |

Crosstabulation: Independence
• If there is no relationship between two variables, they are said to be “independent”
• Neither “depends” on the other
• If there is a relationship, the variables are said to be “associated” or to “covary”
• If individuals in one category also consistently fall in another (women = Dem, men = Rep), you may suspect that there is a relationship between the two variables
• Just as when the mean of a certain sub-group is much higher or lower than another (in T-test/ANOVA).

Crosstabulation: Independence
• Relationships aren’t always clearly visible
• Widely differing numbers of people in categories make comparisons difficult (e.g., if there were 200 men and only 15 women in the sample)
• And, large tables become more difficult to interpret (Example: Knoke, p. 157)
• Looking at row or column percentages can make visual interpretation a bit easier
• Calculate the percentages within the category you think is the “independent” variable
• If you think that political party affiliation depends on gender (column variable), look at column percentages.

Crosstabulation: Independence
• Here, column percentages highlight the relationship among variables:

|  | Women | Men | N |
|---|---|---|---|
| Dem | 62.8% | 40.0% | 37 |
| Rep | 37.2% | 60.0% | 31 |
| N | 43 | 25 | 68 |

• It appears as though women tend to be more Democratic, while men tend to be Republican

Chi-square Test of Independence
• In the sample, women appear to be more Democratic, men Republican
• How do we know if this difference is merely due to sampling variability? (That is, there is no relationship in the population?)
• Or, is it indicative of a relationship at the population level?
• Answer: A new kind of statistical test
• The chi-square (χ²) test
• Pronunciation: “chi” rhymes with “sky”
• Chi-square tests: Similar to T-tests, F-tests
• Another family of distributions with known properties.
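
Before moving on to the test itself, the joint frequencies and column percentages from the gender and party example can be reproduced in a few lines of code. The following is a minimal Python sketch, not part of the original lecture; the names `records`, `crosstab`, and `column_percentages` are illustrative assumptions, and the data are simply the 68 cases from the example rebuilt from the cell counts.

```python
from collections import Counter

# Rebuild the 68-person example as (gender, party) records.
# These counts come from the example crosstab: 27, 16, 10, 15.
records = (
    [("Women", "Dem")] * 27 + [("Women", "Rep")] * 16
    + [("Men", "Dem")] * 10 + [("Men", "Rep")] * 15
)


def crosstab(pairs):
    """Count joint frequencies, keyed by (gender, party)."""
    return Counter(pairs)


def column_percentages(cells):
    """Divide each cell by its column (gender) marginal and multiply by 100."""
    col_totals = Counter()
    for (gender, _party), n in cells.items():
        col_totals[gender] += n
    return {key: 100 * n / col_totals[key[0]] for key, n in cells.items()}


cells = crosstab(records)
col_pct = column_percentages(cells)

# Print one row per party, with the Women and Men column percentages.
for party in ("Dem", "Rep"):
    row = [f"{col_pct[(gender, party)]:.1f}%" for gender in ("Women", "Men")]
    print(party, *row)
```

Computing percentages within the gender columns follows the advice above to percentage within the variable treated as independent; swapping the roles of the two keys would give row percentages instead.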
Chi-square Test of Independence
• Chi-square test is a test of independence
• Asks “is there a relationship between variables or not?”
• Independence = no relationship
• ANOVA, T-test do this too (same means = independent)
• Null hypothesis: the two variables are statistically independent
• H0: Gender and political party are independent
• There is no relationship between them
• Alternate hypothesis: the variables are related, not independent of each other
• H1: Gender and political party are not independent.

Chi-square Test of Independence
• How does a chi-square test of independence work?
• It is based on comparing the observed cell values with the values you’d expect if there were no relationship between variables
• Definitions:
• Observed values = values in the crosstab cells based on your sample
• Expected values = crosstab cell values you would expect if your variables were unrelated.

Crosstabs: Notation
• The value in a cell is referred to as a frequency
– Math symbol = f
• Cells are referred to by row and column numbers
– Ex: women Republicans = 2nd row, 1st column
– In general, rows are numbered from 1 to i, columns are numbered from 1 to j
• Thus, the value in any cell of any table can be written as:
– $f_{ij}$

Expected Cell Values
• If two variables are independent, cell values will depend only on row & column marginals
– Marginals reflect frequencies… And, if frequency is high, all cells in that row (or column) should be high
• The formula for the expected value in a cell is:

$$\hat{f}_{ij} = \frac{(f_{i})(f_{j})}{N}$$

• $f_{i}$ and $f_{j}$ are the row and column marginals
• N is the total sample size

Expected Cell Values
• Expected cell values are easy to calculate
– Expected = row marginal * column marginal / N
– Ex: Democratic men: RowM * ColM / N = (25*37)/68 = 13.6

|  | Women | Men | N |
|---|---|---|---|
| Dem | 23.4 | 13.6 | 37 |
| Rep | 19.6 | 11.4 | 31 |
| N | 43 | 25 | 68 |

Expected Cell Values
• Question: What makes these values “expected”?
• A: They simply reflect percentages of marginals
• Look at column %’s based on expected values:

|  | Women | Men | N |
|---|---|---|---|
| Dem | 54% | 54% | 37 (54%) |
| Rep | 46% | 46% | 31 (46%) |
| N | 43 | 25 | 68 |

Expected Cell Values
• Expected values are “expected” because they mirror the properties of the sample.
• If the sample is 63% women, you’d expect:
– 63% of Democrats would be women and
– 63% of Republicans would be women
• If not, the variables (gender & political view) would not be “independent” of each other

Chi-Square Test of Independence
• The chi-square test is a comparison of expected and observed values
• For each cell, compute:

$$\frac{(\text{Expected} - \text{Observed})^2}{\text{Expected}}$$

• Then, sum this up for all cells
• If cells all deviate a lot from the expected values, then the sum is large
• Maybe we can reject H0

Chi-square Test of Independence
• The actual chi-square formula:

$$\chi^2 = \sum_{i=1}^{R} \sum_{j=1}^{C} \frac{(E_{ij} - O_{ij})^2}{E_{ij}}$$

• R = total number of rows in the table
• C = total number of columns in the table
• Eij = the expected frequency in row i, column j
• Oij = the observed frequency in row i, column j
• Question: Why square E – O ?

Chi-square Test of Independence
• Assumptions required for chi-square test:
• Only one: Sample size is large, N > 100
• Hypotheses
– H0: Variables are statistically independent
– H1: Variables are not statistically independent
• The critical value can be looked up in a chi-square table
– See Knoke, p. 509-510
– Calculate degrees of freedom: (#Rows-1)(#Col-1)

Chi-square Test of Independence
• Example: Gender and Political Views
– Let’s pretend that N of 68 is sufficient

|  | Women | Men |
|---|---|---|
| Democrat | O11: 27, E11: 23.4 | O12: 10, E12: 13.6 |
| Republican | O21: 16, E21: 19.6 | O22: 15, E22: 11.4 |

Chi-square Test of Independence
• Compute (E – O)²/E for each cell
• Note that the denominator is always the expected value, not the observed value

|  | Women | Men |
|---|---|---|
| Democrat | (23.4 – 27)²/23.4 = .55 | (13.6 – 10)²/13.6 = .95 |
| Republican | (19.6 – 16)²/19.6 = .66 | (11.4 – 15)²/11.4 = 1.14 |

Chi-Square Test of Independence
• Finally, sum up to compute the chi-square
• χ² = .55 + .95 + .66 + 1.14 = 3.30
• What is the critical value for α = .05?
• Degrees of freedom: (R-1)(C-1) = (2-1)(2-1) = 1
• According to Knoke, p. 509: Critical value is 3.84
• Question: Can we reject H0?
• No. χ² of 3.30 is less than the critical value
• We cannot conclude that there is a relationship between gender and political party affiliation.

Chi-square Test of Independence
• Weaknesses of chi-square tests:
• 1. If the sample is very large, we almost always reject H0.
• Even tiny covariations are statistically significant
• But, they may not be socially meaningful differences
• 2. It doesn’t tell us how strong the relationship is
• It doesn’t tell us if it is a large, meaningful difference or a very small one
• It is only a test of “independence” vs. “dependence”
• Measures of Association address this shortcoming.

Measures of Association
• Separate from the issue of independence, statisticians have created measures of association
– They are measures that tell us how strong the relationship is between two variables
• Weak Association

|  | Women | Men |
|---|---|---|
| Dem. | 51 | 49 |
| Rep. | 49 | 51 |

• Strong Association

|  | Women | Men |
|---|---|---|
| Dem. | 100 | 0 |
| Rep. | 0 | 100 |

Crosstab Association: Yule’s Q
• #1: Yule’s Q
– Appropriate only for 2x2 tables (2 rows, 2 columns)
• Label cell frequencies a through d:

|  | Column 1 | Column 2 |
|---|---|---|
| Row 1 | a | b |
| Row 2 | c | d |

• Formula:

$$Q = \frac{bc - ad}{bc + ad}$$

• Recall that extreme values along the “diagonal” (cells a & d) or the “off-diagonal” (b & c) indicate a strong relationship.
• Yule’s Q captures that in a measure
• 0 = no association. -1, +1 = strong association

Crosstab Association: Yule’s Q
• Rule of Thumb for interpreting Yule’s Q:
• Bohrnstedt & Knoke, p. 150

| Absolute value of Q | Strength of Association |
|---|---|
| 0 to .24 | “virtually no relationship” |
| .25 to .49 | “weak relationship” |
| .50 to .74 | “moderate relationship” |
| .75 to 1.0 | “strong relationship” |

Crosstab Association: Yule’s Q
• Example: Gender and Political Party Affiliation

|  | Women | Men |
|---|---|---|
| Dem | a = 27 | b = 10 |
| Rep | c = 16 | d = 15 |

• Calculate “bc”: bc = (10)(16) = 160
• Calculate “ad”: ad = (27)(15) = 405

$$Q = \frac{bc - ad}{bc + ad} = \frac{160 - 405}{160 + 405} = \frac{-245}{565} = -.43$$

• -.43 = “weak association”

Association: Other Measures
• Phi (φ)
• Very similar to Yule’s Q
• Only for 2x2 tables, ranges from –1 to 1, 0 = no assoc.
• Gamma (G)
• Based on a very different method of calculation
• Not limited to 2x2 tables
• Requires ordered variables
• Tau c (τc) and Somers’ d (dyx)
• Same basic principle as Gamma
• Several others discussed in Knoke, Norusis.
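
The expected values, chi-square statistic, and Yule’s Q for the gender and party example can be checked with a short script. This is a minimal Python sketch under stated assumptions, not the lecture’s own code: the function names are hypothetical, the table is laid out with party as rows and gender as columns, and the 3.84 critical value is the one quoted from Knoke for α = .05 with 1 degree of freedom.

```python
# Observed frequencies: rows = party (Dem, Rep), columns = gender (Women, Men).
observed = [[27, 10],
            [16, 15]]

# Critical value for alpha = .05, df = 1, as quoted in the lecture (assumption:
# taken from the text rather than computed from the chi-square distribution).
CRITICAL_05_DF1 = 3.84


def expected_values(obs):
    """Expected cell = row marginal * column marginal / N."""
    row_totals = [sum(row) for row in obs]
    col_totals = [sum(col) for col in zip(*obs)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def chi_square(obs):
    """Sum (E - O)^2 / E over every cell of the table."""
    exp = expected_values(obs)
    return sum(
        (e - o) ** 2 / e
        for exp_row, obs_row in zip(exp, obs)
        for e, o in zip(exp_row, obs_row)
    )


def yules_q(obs):
    """Yule's Q = (bc - ad) / (bc + ad); defined only for 2x2 tables."""
    (a, b), (c, d) = obs
    return (b * c - a * d) / (b * c + a * d)


chi2 = chi_square(observed)
df = (len(observed) - 1) * (len(observed[0]) - 1)

print("expected:", [[round(e, 1) for e in row] for row in expected_values(observed)])
print("chi-square:", round(chi2, 2), "df:", df)
print("reject H0 at .05:", chi2 > CRITICAL_05_DF1)
print("Yule's Q:", round(yules_q(observed), 2))
```

Run as written, the sketch reports a chi-square of about 3.31 on 1 degree of freedom, which is below 3.84, and a Yule’s Q of about -0.43. The chi-square is computed from unrounded expected values, so it can differ in the second decimal place from a hand calculation that rounds each expected value first.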