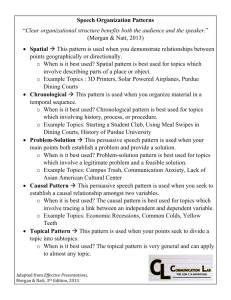

SLIDES: Types of Economic Data


TYPES OF ECONOMIC DATA
Omar Al-Ubaydli

Overview
- Causal effects
- Debates to be considered:
  - Experimental vs. non-experimental
  - Field vs. laboratory experiments
  - Structural vs. reduced form
- Key dimensions of the debate:
  - Generalizability
  - Control and feasibility
  - Ethics
  - Welfare and counterfactual analysis

Causal Effects
- Setup:
  - Y = dependent variable
  - X = explanatory variable of interest
  - Z = vector of all the other explanatory variables (assumed to be observable)
  - Y = f(X, Z)
- Definition of a causal effect: g(x, x’, z) = f(x’, z) − f(x, z)
  - (x, x’, z) is known as a causal triple
- The focus on causal effects distinguishes economics from statistics, because we are interested in policy interventions
  - For example, the statistical relationship between GDP and education
- When the remaining explanatory variables become unobservable (or an exhaustive list is unfeasible), we switch to their stochastic equivalent, average causal effects
  - While we can’t guarantee holding unobservables constant, we can guarantee that they don’t change on average
- For the time being, we will ignore average causal effects, sidestepping the endogeneity problem (covered in previous lectures)

Causal Effects (continued)
- Target space T is the set of causal triples that a researcher is interested in estimating
  - The researcher may be interested only in a property of the causal triples, e.g., whether they are positive, negative, or zero (Samuelson’s qualitative calculus)
  - The researcher has a prior about these causal effects
- Dataset D is the set of causal triples on which the researcher directly collects data
  - D and T may be disjoint, and each may be a singleton
- Results R is the set of causal effects that the researcher can estimate directly from the dataset D, without any parametric assumptions
  - Assume that g(x, x’, z) for each element of D is automatically estimated consistently
  - For the moment, we set aside the issues of small samples and endogeneity (i.e., the identification problem) to focus the debate on newer, more interesting aspects => no distinction between g(x, x’, z) and a direct empirical estimate of g(x, x’, z)
- After seeing the results R, the researcher updates priors to form posteriors

Generalizability
- Given results R, the generalizability set ∆(R) is the set of causal triples outside the dataset D whose posterior is updated as a consequence of learning the results
- When ∆(R) is non-empty, the results R are said to be generalizable
- The researcher is generalizing when the generalizability set ∆(R) and the target space T intersect
- Note that so far, the generalizability issue applies equally to all data types, experimental and natural
  - The issue is usually obscured in natural data by the need to tackle the more pressing problem of endogeneity
  - This does not mean that generalizability is, in principle, any less of an issue in natural data
- Types of generalizability:
  - Zero: the generalizability set ∆(R) is empty
    - Highly conservative
  - Local: ∆(R) contains points in an arbitrarily small neighborhood of points in the dataset D
    - Usually follows from assuming continuity of f(X, Z), as this permits local linearity and therefore local extrapolation
  - Global: ∆(R) contains points outside an arbitrarily small neighborhood of points in the dataset D
- Summary:
  - Paralyzing fear of interpolation/extrapolation/additive separability
  - In a nonparametric world, results can fail to generalize, generalize locally, or generalize globally
  - A sufficient condition for local generalizability is continuity of f(X, Z)
  - A sufficiently conservative researcher is unlikely to believe that her results generalize globally, because that requires a stronger assumption than continuity

Before comparing data types, we need to briefly define them.

Data Taxonomy
- Harrison and List (2004)
- Key dimension: how organic (loosely speaking) is the data generating process?
- Conventional laboratory experiment
  - Student subject pool
  - Abstract framing
  - Imposed set of rules
- Artefactual field experiment
  - Same as conventional laboratory experiment except non-standard subject pool
- Framed field experiment
  - Same as artefactual field experiment except field context in the commodity, task, or information
- Natural field experiment
  - Same as framed field experiment except subjects are unaware of their participation in an experiment
- Natural data
  - Same as natural field experiment except there is no intervention by an experimenter; data are completely organic

Experiments and randomized control are NOT the same thing
- Many experiments do not use randomized control
  - For example, market experiments
- Many natural data sets have randomized independent variables
  - For example, gambling data

Empirical Analysis Taxonomy
- Structural
  - Econometric specification derived from explicit modeling of the optimization problems faced by the decision-makers
  - Typically includes an equilibrium concept to reconcile the optimization problems
  - Deductive in spirit
- Reduced form
  - Econometric specification is the departure point rather than the end point of a series of optimization problems
  - Inductive in spirit

Main Questions
- What do laboratory experiments, field experiments, and natural data imply for:
  - Identification?
  - Generalizability?
- Should we use structural or reduced-form empirical analysis?
  - Knowing what you want to do with the data influences what kind of data you choose to collect in the first place

Generalizability and Data Types
- A causal effect g(x, x’, z) is investigation neutral if it is unaffected by the fact that it is being induced by a scientific investigator, ceteris paribus
- A causal triple (x, x’, z) is a natural setting if it can plausibly exist in the absence of academic, scientific investigation
- Laboratory experiments are not natural settings (Levitt & List, 2007):
  - Scrutiny
  - Stakes
  - Selection into the experiment/roles (punctual college students)
  - Artificial constraints on choice sets and time horizons
  - In fact, laboratory experiments are often not even close to being natural settings
- Key assumptions:
  - Investigation neutrality of causal effects
  - We are interested in estimating causal effects in natural settings
  - Unnatural settings are important only insofar as they generalize to natural settings

The following propositions are based on evaluating data with respect to generalizability only:
- Proposition 1: Under a liberal stance, neither field nor laboratory experiments are demonstrably superior to each other
  - Features that make this more likely:
    - Absence of moral concerns
    - Small computational demands
    - Experience is unimportant or quickly learned
    - Non-random participant selection is unimportant
  - For example, simple markets
- Proposition 2: Under a conservative stance (local generalizability), field experiments are more useful than laboratory experiments
  - The neighborhood of a natural setting is natural, and the neighborhood of an unnatural setting is unnatural
  - For example, charitable contributions
- Proposition 3: Under the most conservative stance (zero generalizability), field experiments are more useful than laboratory experiments, because they are at least performed in one natural setting

Generalizability and Data Types: Why Laboratory Experiments?
- Even if we care only about generalizability, there remain factors that can render laboratory experiments more attractive
- Cost: field experiments are typically (though not always) more expensive than laboratory experiments, because:
  - A more complex environment => have to “purchase” control of more factors
  - More extraneous sources of variation => noisier data => need more observations
  - Non-students are typically more expensive to recruit and compensate
  - Real stakes usually exceed laboratory stakes
  - Cost can be especially important for replication, both as a robustness check and as a guard against fraud
    - “One-time” field experiments are concerning in this regard
- Feasibility: some field experiments are simply unfeasible because the researcher cannot plausibly acquire the necessary control
  - For example, the causal effect of interest rate changes on inflation, or of rainfall on public demonstrations
- Ethics
  - For example, Roth et al.’s investigation of job-market signaling in the economics job market

Control: Common Misconceptions
- Unquestionably, laboratory experiments afford the researcher greater levels of control over many features of the environment
  - However, this is not a universal truth, and some of that additional control systematically reduces control over other features
- Key consideration: prospective experimental subjects can be active or passive decision-makers
  - Laboratory experiments require some passive decision-making, as the subject must submit to randomization
  - Active subjects may refuse to participate in an experiment, e.g., the Bertrand and Mullainathan (2004) labor market discrimination study
  - Experiments signal the value of a decision to prospective subjects, e.g., studying the effect of sunshine exposure on vitamin D absorption
- Principle 1: When the net benefits accruing to a subject of active decision-making are sufficiently high, laboratory experiments are impossible, and natural field experiments will be chosen by the experimenter if their cost is low enough and the research question is sufficiently valuable
- Principle 2: When the net benefits accruing to a subject of being active are sufficiently low, and the value of the research question is sufficiently high, researchers choose the cheaper of laboratory and natural field experiments
- Principle 3: When there is a positive and sufficiently strong relationship between the research value of an experiment and the net benefits accruing to a subject from being active, natural field experiments become the only viable option for researchers
- Bottom line: sometimes, the only way to get someone to participate in an experiment is to do so covertly
- Beware of the blanket statement: “laboratory experiments give you more control”

Why Natural Data?
- Sometimes doesn’t require the assumption of investigation neutrality
  - If the data are naturally collected for non-research purposes, e.g., traffic data, tax data
- Can be relatively cheap, especially if thousands or millions of observations are necessary
- Field experiments may be unfeasible
- Trade off the naturalness of natural data against the benefits of laboratory experiments, including randomization
- Randomization bias undermines the value of experiments

Structural vs. Reduced Form
- If you intend to do structural analysis:
  - You will need to think more carefully about the data that you intend to collect
  - You will probably need to collect more data:
    - A broader range of endogenous variables that allows you to drill deeper
    - Some more exogenous variables to improve precision
- Why structural?
  - Allows welfare analysis (a key distinction between economists and statisticians)
    - Can be critical for policy analysis
  - Allows for more sophisticated out-of-sample predictions, especially counterfactual analysis
- Why not?
  - If the requisite assumptions are a poor approximation of reality, but you treat them as accurate, then your conclusions can be wildly inaccurate
    - Can be dangerous for policy
  - Structural modeling is also more work and requires more skills:
    - The theoretical modeling
    - The statistical programming to estimate the typically non-linear models

Epilogue: Combining Data Types
- Today, important empirical questions would ideally be attacked using the entire toolbox:
  - Laboratory experiments + field experiments + natural data
  - Structural + reduced-form modeling
- Reasons:
  - Robustness
  - When the different methods yield different results, trying to reconcile them enhances our understanding
  - Horses for courses

Addendum: Useful Maxims
- Do not try to fool yourself or other economists about the choices that you made in your empirical investigation
  - Don’t pretend that you declined “Option X” because you thought it was worse, when the truth is that you don’t know how to do “Option X”
- Nobody has a complete toolkit; always work on improving yours, and focus on your weaknesses
  - If your grad school curriculum was weak in a certain area, don’t run away from that area for the rest of your career
  - Do not rely on others to teach you the material required to fill your gaps
  - The best economists are always teaching themselves new things
- Write the programming code for your empirical analysis before you start collecting any data
  - You will be surprised how many improvements to the design you will discover by forcing yourself to think carefully
  - It can help you avoid simple but massively costly errors
  - This is especially true of theory/structural modeling
- To the greatest extent possible, let the research agenda chronologically precede the design/data collection
  - Many studies fail to realize their potential because the researcher insists on letting the data/design dictate the research question
- Keep an open mind when analyzing your data
  - Very smart scientists have been made to look very silly for refusing to change their views in the face of mounting evidence
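The maxim about writing the analysis code before collecting any data can be made concrete with a small dry run. The Python sketch below is a minimal illustration under stated assumptions, not the author's procedure: it assumes a hypothetical two-arm randomized experiment with normally distributed outcomes, and the function names (`simulate_data`, `analyze`, `dry_run`), the baseline value, and the effect sizes are invented for the example. The planned analysis (a difference in means with its standard error) is run on fake data where the true effect is known. This checks that the code recovers that effect and gives a rough sense of how many observations the design would need.

```python
import math
import random
import statistics

# Hypothetical design (an assumption for illustration only): each subject is
# randomly assigned to treatment with probability 1/2, and the outcome is
# y = BASELINE + true_effect * treated + normal noise.
BASELINE = 10.0


def simulate_data(n, true_effect, noise_sd=1.0, seed=0):
    """Generate fake (treated, y) pairs from the hypothetical design."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        treated = rng.random() < 0.5  # randomized assignment
        y = BASELINE + true_effect * treated + rng.gauss(0.0, noise_sd)
        data.append((treated, y))
    return data


def analyze(data):
    """Planned analysis: difference in means and its standard error."""
    y1 = [y for treated, y in data if treated]
    y0 = [y for treated, y in data if not treated]
    if len(y1) < 2 or len(y0) < 2:
        raise ValueError("each arm needs at least two observations")
    estimate = statistics.mean(y1) - statistics.mean(y0)
    se = math.sqrt(statistics.variance(y1) / len(y1)
                   + statistics.variance(y0) / len(y0))
    return estimate, se


def dry_run(n, true_effect, reps=200):
    """Share of simulated experiments with |estimate / se| > 1.96, i.e. the
    approximate power of a two-sided 5% test at sample size n."""
    rejections = 0
    for rep in range(reps):
        estimate, se = analyze(simulate_data(n, true_effect, seed=rep))
        if abs(estimate / se) > 1.96:
            rejections += 1
    return rejections / reps


if __name__ == "__main__":
    estimate, se = analyze(simulate_data(n=400, true_effect=0.5, seed=1))
    print(f"estimate = {estimate:.3f}, standard error = {se:.3f}")
    for n in (50, 100, 400):
        print(f"n = {n}: simulated power = {dry_run(n, 0.5):.2f}")
```

Once the real data arrive, only the data-loading step should need to change. If the simulated power at the planned sample size turns out to be low, that is a design problem worth discovering before any data are collected.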