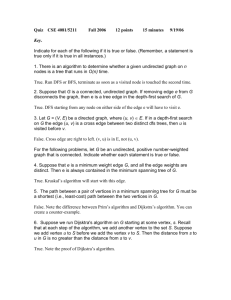

CS503: First Lecture, Fall 2008


CS503: Sixteenth Lecture, Fall 2008

Graph Algorithms

Michael Barnathan

Here’s what we’ll be learning:

• Data Structures:

– Graphs (Adjacency Matrix Representation).

• Theory:

– Dijkstra’s algorithm.

– Floyd’s algorithm.

– Traveling salesman problem (TSP).

– Dynamic programming.

• And then we’re done.

– With all of the basic topics covered in Algorithms I and II at Monmouth.

– With every algorithm that is discussed in the book (except red-black trees). You should recognize all of them by now.

– Of course, we still have a month left in the course.

– We’ll probably use it for programming.

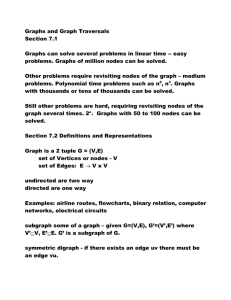

Traditional Graph Representation

[Figure: G = (V, E), with vertices V = {1, 2, 3, 4, 5} and the edges E drawn between them.]

Adjacency Matrices

• A graph G can also be represented as an adjacency matrix.

• Let v be the number of vertices in G.

• The adjacency matrix A is then a v × v binary matrix indicating the presence of edges between vertices:

– $A_{i,j} = 1$ if an edge exists between vertices i and j.

– $A_{i,j} = 0$ otherwise.

• If the graph is undirected, A will be symmetric ($A_{i,j} = A_{j,i}$).

– That is, an edge between vertices i and j means an edge also exists between vertices j and i.

• This is not necessarily true if the graph is directed.

Adjacency Matrix Example

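[Figure: graph G on vertices 1 to 5, with edges 1-2, 1-5, 2-4, 2-5, 3-5, and 4-5.]

$$
A = \begin{pmatrix}
0 & 1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 & 1 \\
0 & 0 & 0 & 0 & 1 \\
0 & 1 & 0 & 0 & 1 \\
1 & 1 & 1 & 1 & 0
\end{pmatrix}
$$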
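A minimal Java sketch of how such a matrix might be built from an edge list. The class and method names are hypothetical, and the edge list is read off the matrix above (vertex labels 1 to 5 are shifted to array indices 0 to 4). Each edge is written twice because the graph is undirected, which is exactly why A comes out symmetric.

```java
final class AdjacencyMatrixDemo {
    // Builds the v x v adjacency matrix of an undirected graph whose vertices are labeled 1..v.
    static boolean[][] buildMatrix(int v, int[][] edges) {
        boolean[][] a = new boolean[v][v]; // every entry starts as false (0)
        for (int[] e : edges) {
            int i = e[0] - 1, j = e[1] - 1; // convert 1-based labels to 0-based indices
            a[i][j] = true;
            a[j][i] = true; // undirected: record the edge in both directions
        }
        return a;
    }

    // True when a[i][j] == a[j][i] for every pair, as it must be for an undirected graph.
    static boolean isSymmetric(boolean[][] a) {
        for (int i = 0; i < a.length; i++)
            for (int j = 0; j < a.length; j++)
                if (a[i][j] != a[j][i]) return false;
        return true;
    }

    public static void main(String[] args) {
        int[][] edges = {{1, 2}, {1, 5}, {2, 4}, {2, 5}, {3, 5}, {4, 5}};
        boolean[][] a = buildMatrix(5, edges);
        for (boolean[] row : a) {
            StringBuilder sb = new StringBuilder();
            for (boolean bit : row) sb.append(bit ? "1 " : "0 ");
            System.out.println(sb.toString().trim());
        }
        System.out.println("symmetric: " + isSymmetric(a));
    }
}
```

Running main should print the five rows of A shown above, followed by `symmetric: true`.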

Adjacency Matrix Tradeoffs

• $O(v^2)$ space is required to store the matrix, but each entry can be represented with one bit.

– This is more space-efficient than adjacency lists for very dense graphs, but wasteful for sparse ones.

• More natural for use in certain algorithms.

• $O(v)$ time is required to retrieve all edges of a vertex, even if the vertex has only one edge.

– Because the matrix also stores the 0s, scanning a vertex’s edges requires scanning a whole row.

– The traditional (adjacency list) representation takes O(e) time, where e is the number of edges adjacent to the vertex you are examining. In sparse graphs, e is usually much smaller than v.
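To make the row-scan cost concrete, here is a small hypothetical Java comparison (the names are illustrative, and it uses ArrayList rather than a linked list for the adjacency lists). With a single edge among 1000 vertices, the matrix version still inspects all 1000 entries of the row, while the list version touches only the stored neighbor.

```java
import java.util.ArrayList;
import java.util.List;

final class NeighborScanDemo {
    // Matrix version: looks at every entry in row u, even if only one is true. O(v).
    static List<Integer> neighborsFromMatrix(boolean[][] adj, int u) {
        List<Integer> out = new ArrayList<>();
        for (int w = 0; w < adj.length; w++)
            if (adj[u][w]) out.add(w);
        return out;
    }

    // List version: the neighbors are stored directly, so the cost is proportional to u's degree.
    static List<Integer> neighborsFromList(List<List<Integer>> adj, int u) {
        return new ArrayList<>(adj.get(u));
    }

    public static void main(String[] args) {
        int v = 1000;
        boolean[][] matrix = new boolean[v][v];
        List<List<Integer>> lists = new ArrayList<>();
        for (int i = 0; i < v; i++) lists.add(new ArrayList<>());
        // A single edge 0-999: the matrix scan still visits all 1000 entries of row 0.
        matrix[0][999] = true;
        matrix[999][0] = true;
        lists.get(0).add(999);
        lists.get(999).add(0);
        System.out.println(neighborsFromMatrix(matrix, 0)); // [999]
        System.out.println(neighborsFromList(lists, 0));    // [999]
    }
}
```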

Adjacency Lists

• The traditional method of representing edges is called an adjacency list.

• Simple: every vertex contains a linked list of the edges incident to it.

• Each edge typically stores the vertices on both ends as well, so that traversing the edge takes constant time.

– If edges did not keep track of their vertices, traversing one would require linearly scanning all vertices for adjacency.
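One way the Vertex and Edge classes might look, as a sketch under assumptions: the lecture never shows these classes, so the field names, the ArrayList standing in for a linked list, and the getOtherVertex(Vertex from) signature are all hypothetical. Because each Edge stores both endpoints, crossing it from either side is a single constant-time comparison.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical classes illustrating the idea; not the lecture's actual Vertex/Edge.
final class Vertex {
    final String name;
    final List<Edge> edges = new ArrayList<>(); // stands in for the lecture's linked list
    Vertex(String name) { this.name = name; }
}

final class Edge {
    final Vertex a, b; // both endpoints are stored on the edge
    Edge(Vertex a, Vertex b) { this.a = a; this.b = b; }

    // Constant time: no scan over the vertex set is needed to find the far end.
    Vertex getOtherVertex(Vertex from) {
        return from == a ? b : a;
    }
}

final class AdjacencyListDemo {
    // Adds an undirected edge by linking the same Edge object into both vertices' lists.
    static void connect(Vertex x, Vertex y) {
        Edge e = new Edge(x, y);
        x.edges.add(e);
        y.edges.add(e);
    }

    public static void main(String[] args) {
        Vertex v1 = new Vertex("1"), v2 = new Vertex("2"), v5 = new Vertex("5");
        connect(v1, v2);
        connect(v1, v5);
        for (Edge e : v1.edges)
            System.out.println("1 -> " + e.getOtherVertex(v1).name);
    }
}
```

main should print `1 -> 2` and then `1 -> 5`.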

Converting

// Assumed API (not shown in the lecture): Vertex has a no-argument constructor and an edges()
// method returning a mutable list; Edge(from, to) records both endpoints; and
// getOtherVertexIndex() returns the array index of the endpoint that is not the owning vertex.
Vertex[] mat2list(boolean[][] adjmatrix) {
    Vertex[] ret = new Vertex[adjmatrix.length];
    // Initialize vertices.
    for (int vidx = 0; vidx < adjmatrix.length; vidx++)
        ret[vidx] = new Vertex();
    for (int vidx = 0; vidx < adjmatrix.length; vidx++)
        for (int eidx = 0; eidx < adjmatrix[vidx].length; eidx++)
            if (adjmatrix[vidx][eidx]) // If the matrix has a 1, add an edge to the list.
                ret[vidx].edges().add(new Edge(ret[vidx], ret[eidx]));
    return ret;
}

boolean[][] list2mat(Vertex[] adjlist) {
    // Default value is false.
    boolean[][] ret = new boolean[adjlist.length][adjlist.length];
    for (int vidx = 0; vidx < adjlist.length; vidx++) {
        for (Edge eadj : adjlist[vidx].edges())
            ret[vidx][eadj.getOtherVertexIndex()] = true; // Edges in the list are true.
    }
    return ret;
}

Weighted Adjacency Matrices

• Weights can be added to adjacency matrices as well.

• Rather than using 1s to represent edges, use the edge weights.

• Missing edges are represented by infinity. (Diagonal entries, the distance from a vertex to itself, are normally 0.)

– Infinity in Java is Double.POSITIVE_INFINITY.
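A minimal sketch of a weighted adjacency matrix in Java (the class name, the build helper, and the choice of 0 on the diagonal are assumptions, not from the lecture). Using Double.POSITIVE_INFINITY for missing edges is convenient because infinity plus any finite weight is still infinity, so comparisons such as dist[u] + w < dist[v] need no special case.

```java
import java.util.Arrays;

final class WeightedMatrixDemo {
    static final double INF = Double.POSITIVE_INFINITY;

    // Builds the weighted adjacency matrix of an undirected graph on vertices 0..v-1.
    // edges[k] = {i, j} has weight weights[k]; pairs with no edge stay at infinity.
    static double[][] build(int v, int[][] edges, double[] weights) {
        double[][] w = new double[v][v];
        for (int i = 0; i < v; i++) {
            Arrays.fill(w[i], INF); // no edge yet
            w[i][i] = 0;            // assumption: distance from a vertex to itself is 0
        }
        for (int k = 0; k < edges.length; k++) {
            int i = edges[k][0], j = edges[k][1];
            w[i][j] = weights[k];
            w[j][i] = weights[k]; // undirected: mirror the weight
        }
        return w;
    }

    public static void main(String[] args) {
        double[][] w = build(3, new int[][]{{0, 1}, {1, 2}}, new double[]{5.0, 2.5});
        System.out.println(w[0][1]);                // 5.0
        System.out.println(w[0][2]);                // Infinity (no edge between 0 and 2)
        System.out.println(w[0][2] + 3 == w[0][2]); // true: infinity absorbs finite additions
    }
}
```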

Shortest Path Problem

• You are working for a new software company called 10100, Inc.

• They just received access to a road database for their new product, 10100 Maps.

• You are asked to develop the algorithm that computes the fastest route from point A to point B.

• For example, from Monmouth University to Carnegie Hall.

Example Graph

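[Figure: road graph with travel times. Monmouth University to Freehold: 27 min.; Monmouth University to Old Bridge: 34 min.; Freehold to Old Bridge: 20 min.; Freehold to Newark: 54 min.; Old Bridge to Newark: 43 min.; Old Bridge to Jersey City: 44 min.; Newark to Jersey City: 12 min.; Newark to Carnegie Hall: 27 min.; Jersey City to Carnegie Hall: 24 min.; Monmouth University directly to Carnegie Hall: 10 years (“Practice, practice, practice”).]

What is the quickest way to Carnegie Hall?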

Dijkstra’s Algorithm

• Named after Edsger Dijkstra, who published it in 1959.

• Also called the shortest-path algorithm, which should tell you what it does.

• Of course, it finds the shortest path from one vertex to another (or to all others) in a graph.

• Key insight: once the shortest route to a vertex is known, detours cannot improve it. Going directly from Monmouth to Old Bridge (34 min.) is faster than going through Freehold (27 + 20 = 47 min.), so the Freehold-to-Old Bridge link never needs to be rechecked to reach Old Bridge.

– Caveat: this is not true if negative weights exist in the graph. In that case, going from Freehold to Old Bridge might save you time, so that link must still be checked!

– Dijkstra’s algorithm only works when all weights are non-negative.

Dijkstra’s Algorithm Overview:

1. Declare an array, dist, of shortest path lengths to each vertex. Initialize the distance of the start vertex to 0 and every other vertex to infinity.

2. Create a priority queue, ordered by dist, and fill it with all vertices in the graph.

3. While the queue is not empty:

   1. Remove the vertex u with the smallest distance from the start.

   2. For each neighboring vertex v, let md be the weight of the edge from u to v (the smallest such weight, if several edges join them).

   3. If dist[u] + md < dist[v] (we have found a shorter path to v than our current best):

      1. Set dist[v] = dist[u] + md.

      2. Set v’s “predecessor vertex” to u. This is used to retrace the path.

4. Trace the path back from the target to the source by following the predecessor of each vertex, starting at the target. Reverse it and you have the shortest path from source to target.

Dijkstra’s Algorithm – Starting State

[Figure: the example road graph in its starting state: dist = 0 at Monmouth University and ∞ at every other vertex.]

Dijkstra’s Algorithm – First Iteration

[Figure: after Monmouth University is processed, Freehold = 27, Old Bridge = 34, and Carnegie Hall = 10 years (via the direct edge); Newark and Jersey City are still ∞.]

So far practicing is winning. Maybe your piano teacher was right…

Dijkstra’s Algorithm – Second Iteration

[Figure: after Freehold and Old Bridge are processed, Newark = 77 and Jersey City = 78; Carnegie Hall is still 10 years.]

34 < 47 (27 + 20 through Freehold), so Old Bridge keeps its current predecessor.

77 (34 + 43 through Old Bridge) < 81 (27 + 54 through Freehold), so Newark’s predecessor is Old Bridge.

Dijkstra’s Algorithm – Third Iteration

[Figure: after Newark and Jersey City are processed, Carnegie Hall = 102.]

102 (78 + 24 through Jersey City) < 104 (77 + 27 through Newark) and 102 < 10 years, so Carnegie Hall changes its predecessor to Jersey City.

Dijkstra’s Algorithm – Results:

[Figure: the shortest route, Monmouth University (0) to Old Bridge (34) to Jersey City (78) to Carnegie Hall (102), using edges of 34, 44, and 24 min.]

The shortest time to Carnegie Hall is thus 102 minutes.

Starting at Carnegie Hall and traversing its predecessor list, we see that we passed through Jersey City and Old Bridge.

Therefore, the optimal route is Monmouth -> Old Bridge -> Jersey City -> Carnegie Hall.

Dijkstra’s Algorithm – Pseudocode:

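import java.util.PriorityQueue;

// Assumed API (not shown in the lecture): Vertex.getIndex() gives the vertex's position in graph,
// Vertex.edges() lists its incident edges, Edge.getOtherVertex() returns the endpoint that is
// not cur, and Edge.getWeight() returns the edge weight.
Vertex[] dijkstra(Vertex[] graph, int sourceidx) {
    double[] dist = new double[graph.length];
    Vertex[] predecessor = new Vertex[graph.length];
    for (int vidx = 0; vidx < graph.length; vidx++)
        dist[vidx] = Double.POSITIVE_INFINITY;
    dist[sourceidx] = 0;
    // Order the queue by current distance; fill it only after dist has been initialized.
    PriorityQueue<Vertex> vertq = new PriorityQueue<Vertex>(
        (a, b) -> Double.compare(dist[a.getIndex()], dist[b.getIndex()]));
    for (Vertex v : graph)
        vertq.add(v);
    while (!vertq.isEmpty()) {
        Vertex cur = vertq.poll(); // Closest vertex still in the queue.
        for (Edge adjedge : cur.edges()) {
            Vertex other = adjedge.getOtherVertex();
            double candidate = dist[cur.getIndex()] + adjedge.getWeight();
            if (candidate < dist[other.getIndex()]) {
                // PriorityQueue has no decrease-key, so remove, update, and re-insert.
                vertq.remove(other);
                dist[other.getIndex()] = candidate;
                predecessor[other.getIndex()] = cur;
                vertq.add(other);
            }
        }
    }
    return predecessor; // All shortest paths from the source node are contained here.
}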
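A self-contained, runnable Java sketch of the same idea applied to the road example, under stated assumptions: vertices are numbered 0 (Monmouth University) through 5 (Carnegie Hall), roads are treated as two-way, the edge list is read off the example figures, and 10 years is converted to minutes ignoring leap days. Instead of the remove-and-reinsert step above, it uses a different common technique, lazy deletion: every improvement pushes a new {distance, vertex} entry, and stale entries are skipped when polled. Class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.PriorityQueue;

final class DijkstraDemo {
    static final String[] NAMES = {"Monmouth University", "Freehold", "Old Bridge",
                                   "Newark", "Jersey City", "Carnegie Hall"};
    static final double TEN_YEARS = 10.0 * 365 * 24 * 60; // minutes, ignoring leap days

    // Weighted adjacency matrix of the example; {from, to, minutes}, roads treated as two-way.
    static double[][] roadGraph() {
        double[][] w = new double[6][6];
        for (double[] row : w) Arrays.fill(row, Double.POSITIVE_INFINITY);
        double[][] edges = {{0, 1, 27}, {0, 2, 34}, {0, 5, TEN_YEARS}, {1, 2, 20}, {1, 3, 54},
                            {2, 3, 43}, {2, 4, 44}, {3, 4, 12}, {3, 5, 27}, {4, 5, 24}};
        for (double[] e : edges) {
            w[(int) e[0]][(int) e[1]] = e[2];
            w[(int) e[1]][(int) e[0]] = e[2];
        }
        return w;
    }

    // Fills dist and pred (pred[v] == -1 means no predecessor); w[u][v] is infinite if no edge.
    static void dijkstra(double[][] w, int source, double[] dist, int[] pred) {
        int n = w.length;
        Arrays.fill(dist, Double.POSITIVE_INFINITY);
        Arrays.fill(pred, -1);
        dist[source] = 0;
        boolean[] done = new boolean[n];
        // Entries are {distance, vertex}; an entry is stale once its vertex has been finalized.
        PriorityQueue<double[]> pq = new PriorityQueue<>((a, b) -> Double.compare(a[0], b[0]));
        pq.add(new double[]{0, source});
        while (!pq.isEmpty()) {
            int u = (int) pq.poll()[1];
            if (done[u]) continue; // stale entry: skip it
            done[u] = true;
            for (int v = 0; v < n; v++) {
                if (done[v] || Double.isInfinite(w[u][v])) continue;
                double candidate = dist[u] + w[u][v];
                if (candidate < dist[v]) {
                    dist[v] = candidate;
                    pred[v] = u;
                    pq.add(new double[]{candidate, v});
                }
            }
        }
    }

    // Walks predecessors back from target, then reverses to get source-to-target order.
    static List<String> path(int[] pred, int target) {
        List<String> p = new ArrayList<>();
        for (int v = target; v != -1; v = pred[v]) p.add(NAMES[v]);
        Collections.reverse(p);
        return p;
    }

    public static void main(String[] args) {
        double[] dist = new double[6];
        int[] pred = new int[6];
        dijkstra(roadGraph(), 0, dist, pred);
        System.out.println(dist[5]);       // 102.0
        System.out.println(path(pred, 5)); // [Monmouth University, Old Bridge, Jersey City, Carnegie Hall]
    }
}
```

main prints 102.0 and the route through Old Bridge and Jersey City, matching the hand trace in the slides. Scanning a matrix row keeps the sketch short; with adjacency lists, the inner loop would visit only u's actual neighbors.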

Dijkstra’s Algorithm – Analysis:

• What is the time complexity of this algorithm?

– Assuming a linear search is performed on the priority queue when removing the element?

– Assuming the traditional heap implementation of a priority queue (which is tricky in this case because the distances change throughout the algorithm)?

– Hint: it will depend on both V and E.

• How much space is being used?

Dijkstra’s Algorithm – Discussion:

• This algorithm always chooses the path of shortest distance to record at each step.

• What did we call those algorithms again?

• When it finishes, the recorded paths will be the absolute shortest from the source.

• Dijkstra’s algorithm will fail if given a graph with negative weights. Use the Bellman-Ford algorithm (which we won’t discuss) for this.

Floyd’s Algorithm

• Also called the Floyd-Warshall algorithm.

• This algorithm reports the shortest path lengths between ALL pairs of vertices in the graph.

• Unlike Dijkstra’s algorithm, it works with negative edge weights, as long as the graph contains no negative-weight cycle.

• You could also run Dijkstra’s algorithm once from each vertex in the graph, but you would be repeating work: with a binary-heap priority queue, v runs cost $O(v(v + e)\log v)$.

• In the worst case, $e \approx v^2$, so this approach could cost $O(v^3 \log v)$.

• Floyd’s algorithm computes all pairs in $O(v^3)$ time with three simple nested loops.

• It uses a technique called dynamic programming to do this.

Floyd’s Algorithm

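// Weighted adjacency matrix representation: graph[i][j] is the edge weight,
// Double.POSITIVE_INFINITY if there is no edge, and 0 on the diagonal.
double[][] floyd(double[][] graph) {
    // graph.clone() would copy only the outer array and share the rows, so copy each row.
    double[][] pathlen = new double[graph.length][];
    for (int i = 0; i < graph.length; i++)
        pathlen[i] = graph[i].clone();
    // via (the intermediate vertex) must be the outermost loop.
    for (int via = 0; via < graph.length; via++)
        for (int start = 0; start < graph.length; start++)
            for (int end = 0; end < graph.length; end++)
                pathlen[start][end] = Math.min(pathlen[start][end],
                                               pathlen[start][via] + pathlen[via][end]);
    return pathlen;
}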
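A runnable version of the routine with a row-by-row copy, applied to the road graph from the Dijkstra example. The vertex numbering and class name are assumptions, and the 10-year direct edge is left out, which does not change any shortest path. The loop over via must be outermost: after iteration via, pathlen holds the shortest paths that use only vertices 0..via as intermediates.

```java
import java.util.Arrays;

final class FloydDemo {
    static final double INF = Double.POSITIVE_INFINITY;

    // All-pairs shortest path lengths; graph[i][j] is the edge weight (INF if absent, 0 on the diagonal).
    static double[][] floyd(double[][] graph) {
        int n = graph.length;
        double[][] pathlen = new double[n][];
        for (int i = 0; i < n; i++) pathlen[i] = graph[i].clone(); // copy rows; leave graph untouched
        for (int via = 0; via < n; via++)
            for (int start = 0; start < n; start++)
                for (int end = 0; end < n; end++)
                    pathlen[start][end] = Math.min(pathlen[start][end],
                                                   pathlen[start][via] + pathlen[via][end]);
        return pathlen;
    }

    public static void main(String[] args) {
        // 0 Monmouth University, 1 Freehold, 2 Old Bridge, 3 Newark, 4 Jersey City, 5 Carnegie Hall.
        double[][] g = new double[6][6];
        for (int i = 0; i < 6; i++) {
            Arrays.fill(g[i], INF);
            g[i][i] = 0;
        }
        int[][] roads = {{0, 1, 27}, {0, 2, 34}, {1, 2, 20}, {1, 3, 54}, {2, 3, 43},
                         {2, 4, 44}, {3, 4, 12}, {3, 5, 27}, {4, 5, 24}};
        for (int[] r : roads) {
            g[r[0]][r[1]] = r[2];
            g[r[1]][r[0]] = r[2];
        }
        double[][] d = floyd(g);
        System.out.println(d[0][5]); // 102.0
        System.out.println(d[1][4]); // 64.0
    }
}
```

main should print 102.0 (agreeing with Dijkstra’s algorithm) and 64.0 for Freehold to Jersey City, through Old Bridge (20 + 44).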

Recall: Divide and Conquer

• Divide and Conquer is an algorithm design paradigm that splits large problems up into smaller instances of the same problem, solves the smaller problems, then merges them to get a solution to the original problem.

• When a table of solutions to subproblems is kept to avoid redoing work, this is called memoization.

Dynamic Programming

• Floyd’s algorithm is a dynamic programming algorithm.

• The idea behind dynamic programming is similar to memoization.

• Whereas memoization begins with large problems and breaks them down (top-down), dynamic programming builds large solutions from smaller problems (bottom-up).

• Dynamic programming is used in problems with overlapping subproblems: when a problem can be split “horizontally” into overlapping subproblems, which can be merged back later:

– For example, computing path[1][5] would involve computing path[1][2] + path[2][5], path[1][3] + path[3][5], and path[1][4] + path[4][5].

– The problem can be divided into smaller instances of itself, and their solutions can be merged to solve the full problem.

• A table is still required to store the solutions to the subproblems.

• While memoization has a naturally recursive structure, dynamic programming algorithms often involve computations within a loop.
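The lecture’s own example is Floyd’s path table; as a smaller standard illustration (not from the lecture), here is Fibonacci computed both ways in Java, with hypothetical names. fibMemo works top-down, recursing from n and caching answers in a table; fibTable fills the same kind of table bottom-up in a loop.

```java
import java.util.HashMap;
import java.util.Map;

final class FibDemo {
    private static final Map<Integer, Long> memo = new HashMap<>();

    // Top-down (memoization): start from n, recurse, and cache each answer in a table.
    static long fibMemo(int n) {
        if (n < 2) return n;
        Long cached = memo.get(n);
        if (cached != null) return cached;
        long result = fibMemo(n - 1) + fibMemo(n - 2);
        memo.put(n, result);
        return result;
    }

    // Bottom-up (dynamic programming): fill the table from the smallest subproblems upward.
    static long fibTable(int n) {
        if (n < 2) return n;
        long[] table = new long[n + 1];
        table[1] = 1;
        for (int i = 2; i <= n; i++)
            table[i] = table[i - 1] + table[i - 2];
        return table[n];
    }

    public static void main(String[] args) {
        System.out.println(fibMemo(50));  // 12586269025
        System.out.println(fibTable(50)); // 12586269025
    }
}
```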

The Traveling Salesman Problem

• Let’s say you’re in charge of planning FedEx’s delivery route.

• You have packages to deliver in New York, Denver, Chicago, and Boston.

• Gas is expensive for the company, so you’d like to find the route with the shortest distance required to deliver all of the packages.

Graph Representation

[Figure: graph with vertices New York, Boston, Chicago, and Denver; edge lengths 215, 791, 982, 1001, and 1777 mi.]

Starting from New York, which route minimizes the total distance?

The Naïve Algorithm

• Enumerate every order in which the cities can be visited (every permutation of the vertices), sum the path lengths, and select the smallest.

• With the starting city fixed, there are $(v-1)!$ orders, so this takes roughly $O(v!)$ time.

• Dynamic programming (the Held-Karp algorithm) can get this down to $O(v^2 2^v)$, but it’s still exponential.

• The million dollar question: is there any way to solve this problem in polynomial time?

• Literally. Find one or prove one can’t exist and you’ll win $1 million.
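A sketch contrasting the two approaches, assuming the classic formulation in which the route returns to the starting city (city 0) and using a made-up 4-city distance matrix, not the distances in the figure. bruteForce tries every order of the remaining cities; heldKarp is the Held-Karp dynamic program, whose table best[S][j] holds the shortest path from city 0 through exactly the cities in bitmask S, ending at j. Names are hypothetical.

```java
import java.util.Arrays;

final class TspDemo {
    // Brute force: try every order of cities 1..n-1 after starting at city 0. Roughly O(n!) work.
    static double bruteForce(double[][] d) {
        int n = d.length;
        int[] perm = new int[n - 1];
        for (int i = 0; i < n - 1; i++) perm[i] = i + 1;
        return permute(d, perm, 0);
    }

    private static double permute(double[][] d, int[] perm, int k) {
        if (k == perm.length) {
            double len = 0;
            int prev = 0;
            for (int c : perm) { len += d[prev][c]; prev = c; }
            return len + d[prev][0]; // return home to close the tour
        }
        double best = Double.POSITIVE_INFINITY;
        for (int i = k; i < perm.length; i++) {
            swap(perm, k, i);
            best = Math.min(best, permute(d, perm, k + 1));
            swap(perm, k, i); // undo the swap before trying the next choice
        }
        return best;
    }

    private static void swap(int[] a, int i, int j) { int t = a[i]; a[i] = a[j]; a[j] = t; }

    // Held-Karp: best[s][j] = shortest path from city 0 visiting exactly the cities in bitmask s,
    // ending at city j. O(n^2 2^n) time, O(n 2^n) space.
    static double heldKarp(double[][] d) {
        int n = d.length;
        double[][] best = new double[1 << n][n];
        for (double[] row : best) Arrays.fill(row, Double.POSITIVE_INFINITY);
        best[1][0] = 0; // only city 0 visited, standing at city 0
        for (int s = 1; s < (1 << n); s += 2) // odd masks: every subset containing city 0
            for (int j = 0; j < n; j++) {
                if ((s & (1 << j)) == 0 || Double.isInfinite(best[s][j])) continue;
                for (int k = 1; k < n; k++) { // extend the path to an unvisited city k
                    if ((s & (1 << k)) != 0) continue;
                    int t = s | (1 << k);
                    best[t][k] = Math.min(best[t][k], best[s][j] + d[j][k]);
                }
            }
        double tour = Double.POSITIVE_INFINITY;
        int all = (1 << n) - 1;
        for (int j = 1; j < n; j++) tour = Math.min(tour, best[all][j] + d[j][0]);
        return tour;
    }

    public static void main(String[] args) {
        // Hypothetical symmetric distances between 4 cities (not taken from the lecture's figure).
        double[][] d = {
            {0, 10, 15, 20},
            {10, 0, 35, 25},
            {15, 35, 0, 30},
            {20, 25, 30, 0}
        };
        System.out.println(bruteForce(d)); // 80.0
        System.out.println(heldKarp(d));   // 80.0
    }
}
```

Both calls print 80.0, for example via the tour 0 -> 1 -> 3 -> 2 -> 0 (10 + 25 + 30 + 15). Held-Karp trades memory for time: its table grows as $n 2^n$, which is still exponential.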

NP Completeness

• TSP (in its decision form: is there a route of length at most k?) is an example of an NP-complete problem.

• These are problems whose solutions can be verified in polynomial time, but (probably) can’t be computed in polynomial time.

• All NP-complete problems can be reduced to each other in polynomial time; they form a complexity class.

• The open (million dollar) question is whether the complexity classes P and NP are equal.

– Finding a polynomial-time algorithm for even one of these problems, or proving that no such algorithm exists, is sufficient to prove P = NP or P ≠ NP, respectively.

Approximations

• So is FedEx out of luck?

• Not entirely… it turns out that there are many approximation algorithms or heuristics for NP-complete problems that run in polynomial time.

• Some of these give very good estimates. Certainly good enough when the question is one of driving distance.

• Continuing this discussion is likely outside of this course’s scope.
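One of the simplest heuristics is the nearest-neighbor rule, sketched below in Java (the names and distance data are hypothetical). It runs in $O(v^2)$ time but offers no guarantee of a near-optimal route; the made-up example places four cities on a straight road at positions 0, 1, -3, and 6, where the greedy tour is longer than the best one.

```java
final class NearestNeighborDemo {
    // Greedy heuristic: from the current city, always go to the closest unvisited city,
    // then return to the start. O(n^2) time; the tour is not guaranteed to be optimal.
    static double nearestNeighborTour(double[][] d, int start) {
        int n = d.length;
        boolean[] visited = new boolean[n];
        visited[start] = true;
        int cur = start;
        double total = 0;
        for (int step = 1; step < n; step++) {
            int next = -1;
            for (int c = 0; c < n; c++)
                if (!visited[c] && (next == -1 || d[cur][c] < d[cur][next])) next = c;
            visited[next] = true;
            total += d[cur][next];
            cur = next;
        }
        return total + d[cur][start]; // close the tour
    }

    public static void main(String[] args) {
        // Cities at positions 0, 1, -3, 6 on a line; d[i][j] = |pos_i - pos_j|.
        double[][] d = {
            {0, 1, 3, 6},
            {1, 0, 4, 5},
            {3, 4, 0, 9},
            {6, 5, 9, 0}
        };
        System.out.println(nearestNeighborTour(d, 0)); // 20.0
    }
}
```

The greedy tour visits positions 0 -> 1 -> -3 -> 6 -> 0 for 1 + 4 + 9 + 6 = 20, while the optimal tour (for example 0 -> 1 -> 6 -> -3 -> 0: 1 + 5 + 9 + 3) has length 18.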

Other Graph Problems

• There are many open problems in graph theory.

– Vertex covers.

– Cliques.

– Flow.

– Graph coloring.

– Knight’s tours.

• With the rise of social networks, this is becoming a more and more relevant field.

--xkcd

Not the Shortest Lecture

• We rounded out the topics usually taught in an algorithms course with Dijkstra’s and Floyd’s shortest-path algorithms and briefly discussed the notion of NP completeness in the Traveling Salesman Problem.

• The lesson:

– Slight variations on problems may not seem to make them harder, but may in fact make them intractable. It isn’t always apparent.