SEG 2106

SOFTWARE CONSTRUCTION

INSTRUCTOR: HUSSEIN AL OSMAN

THE COURSE MATERIAL IS BASED ON THE COURSE

CONSTRUCTED BY PROFS:

GREGOR V. BOCHMANN (HTTPS://WWW.SITE.UOTTAWA.CA/~BOCHMANN/)

JIYING ZHAO (HTTP://WWW.SITE.UOTTAWA.CA/~JYZHAO/)

COURSE SECTIONS

Section 0: Introduction

Section 1: Software development processes + Domain Analysis

Section 2: Requirements + Behavioral Modeling (Activity Diagrams)

Section 3: More Behavioral Modeling (State Machines)

Section 4: More Behavioral Modeling (Case Study)

Section 5: Petri Nets

Section 6: Introduction to Compilers

Section 7: Lexical Analysis

Section 8: Finite State Automata

Section 9: Practical Regular Expressions

Section 10: Introduction to Syntax Analysis

Section 11: LL(1) Parser

Section 12: More on LL Parsing (Error Recovery and non LL(1) Parsers)

Section 13: LR Parsing

Section 14: Introduction to Concurrency

Section 15: More on Concurrency

Section 16: Java Concurrency

Section 17: Process Scheduling

Section 18: Web Services

SECTION 0

SYLLABUS

WELCOME TO SEG2106

COURSE INFORMATION

Instructor: Hussein Al Osman

E-mail: halosman@uottawa.ca

Office: SITE4043

Office Hours: TBD

Web Site: Virtual Campus (https://maestro.uottawa.ca)

Lectures:

• Wednesday, 13:00 - 14:30, STE C0136

• Friday, 13:00 - 14:30, MRT 211

Labs:

• Monday, 17:30 - 20:30, STE 2052

• Tuesday, 19:00 - 22:00, STE 0131

EVALUATION SCHEME

Assignments (4): 25%

Labs (7): 15%

Midterm Exam: 20%

Final Exam: 40%

Late assignments are accepted for a maximum of 24 hours

and they will receive a 30% penalty.

LABS

Seven labs in total

Three formal labs (with a report)

• Worth between 3% and 4% each

The other labs are informal (without a report)

• 1% for each one

• You show your work to the TA at the end of the session

INFORMAL LABS

Your mark will be proportional to the number of tasks

successfully completed:

• All the tasks are completed: 1%

• More than half are completed: 0.75%

• Almost half are completed: 0.5%

• You at least tried (provided that you attended the whole session): 0.25%

MAJOR COURSE TOPICS

Chapter 1: Introduction and Behavioral Modeling

• Introduction to software development processes

• Waterfall model

• Iterative (or incremental) model

• Agile model

• Behavioral modeling

• UML use case models (seen previously)

• UML sequence diagrams (seen previously)

• UML activity diagrams (very useful to model concurrent behavior)

• UML state machines (model the behavior of a single object)

• Petri Nets

• SDL

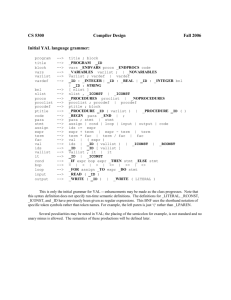

MAJOR COURSE TOPICS

Chapter 2: Compilers, formal languages and grammars

• Lexical analysis (convert a sequence of characters into a sequence of tokens)

• Formal languages

• Regular expressions (method to describe strings)

• Deterministic and Non-deterministic Finite Automata

• Syntax analysis

• Context-free grammar (describes the syntax of a programming language)

• Syntactic analysis

• Syntax trees

MAJOR COURSE TOPICS

Chapter 3: Concurrency

• Logical and physical concurrency

• Process scheduling

• Mutual exclusion for access to shared resources

• Concurrency and Java programming

• Design patterns and performance considerations

MAJOR COURSE TOPICS

Chapter 4: Cool topics! We will vote on one or more of these

topics to cover (provided that we have completed the material

described above, with some time to spare)

• Mobile programming (mostly Android)

• Web services

• J2EE major components

• Spring framework

• Agile programming (especially SCRUM)

• Other suggestions…

CLASS POLICIES

Late Assignments

• Late assignments are accepted for a maximum of 24 hours

and they will receive a 30% penalty.

CLASS POLICIES

Plagiarism

• Plagiarism is a serious academic offence that will not be tolerated.

• Note that the person providing solutions to be copied is also

committing an offence as they are an active participant in the

plagiarism.

• The person copying and the person copied from will be reprimanded

equally according to the regulations set by the University of Ottawa.

• Please refer to this link for more information:

www.uottawa.ca/academic/info/regist/crs/0305/home_5_ENG.htm

CLASS POLICIES

Attendance

• Class attendance is mandatory. As per academic regulations,

students who do not attend 80% of the class will not be

allowed to write the final examinations.

• All components of the course (i.e., laboratory reports,

assignments, etc.) must be fulfilled; otherwise students may

receive an INC as a final mark (equivalent to an F).

• Absence from a laboratory session or an examination

because of illness will be excused only if you provide a

certificate from Health Services (100 Marie Curie, 3rd Floor)

within the week following your absence.

SECTION 1

SOFTWARE

DEVELOPMENT

PROCESS AND

DOMAIN ANALYSIS

LECTURE TOPICS

This lecture will briefly touch on the

following topics:

• Software Development Process

• Domain Analysis

TOPIC 1

SOFTWARE

DEVELOPMENT

PROCESS

LIFE CYCLE

The life cycle of a software product

• From the inception of an idea for a product through:

• domain analysis

• requirements gathering

• architecture design and specification

• coding and testing

• delivery and deployment

• maintenance and evolution

• retirement

MODELS ARE NEEDED

Symptoms of inadequacy: the software crisis

• scheduled time and cost exceeded

• user expectations not met

• poor quality

The size and economic value of software

applications required appropriate "process models"

PROCESS AS A

"BLACK BOX"

[Diagram: Informal Requirements → Process → Product]

PROBLEMS

The assumption is that requirements can be fully

understood prior to development

Unfortunately the assumption almost never holds

Interaction with the customer occurs only at the

beginning (requirements) and end (after delivery)

PROCESS AS A

"WHITE BOX"

[Diagram: Informal Requirements → Process → Product, with feedback]

ADVANTAGES

Reduce risks by improving visibility

Allow project changes as the project progresses

• based on feedback from the customer

THE MAIN ACTIVITIES

They must be performed independently of

the model

The model simply affects the flow among

activities

WATERFALL MODELS

Invented in the late 1950s for large air defense

systems, popularized in the 1970s

They organize activities in a sequential flow

• Standardize the outputs of the various activities

(deliverables)

Exist in many variants, all sharing sequential flow

style

A WATERFALL MODEL

Domain analysis and feasibility study

Requirements

Design

Coding and module testing

Integration and system testing

Delivery, deployment, and maintenance

WATERFALL STRENGTHS

Easy to understand, easy to use

Provides structure to inexperienced staff

Milestones are well understood

Sets requirements stability

WATERFALL WEAKNESSES

All requirements must be known upfront

Deliverables created for each phase are considered frozen –

inhibits flexibility

Can give a false impression of progress

Does not reflect problem-solving nature of software

development – iterations of phases

Integration is one big bang at the end

Little opportunity for customer to preview the system (until it

may be too late)

WHEN TO USE WATERFALL

Requirements are very well known

Product definition is stable

Technology is very well understood

New version of an existing product (maybe!)

Porting an existing product to a new platform

• High risk for new systems because of specification and

design problems.

• Low risk for well-understood developments using familiar

technology.

WATERFALL – WITH

FEEDBACK

Domain analysis and feasibility study

Requirements

Design

Coding and module testing

Integration and system testing

Delivery, deployment, and maintenance

ITERATIVE DEVELOPMENT

PROCESS

Also referred to as incremental development process

Develop system through repeated cycle (iterations)

• Each cycle is responsible for the development of a small

portion of the solution (slice of functionality)

Contrast with waterfall:

• Waterfall is a special case of an iterative process with only one cycle

ITERATIVE DEVELOPMENT

PROCESS

[Diagram of the iteration cycle, showing the activities: Domain Analysis and Initial Planning, Iteration Planning, Requirements, Update Architecture and Design, Implementation, Testing, Evaluation (involving end user), Deployment]

AGILE METHODS

Dissatisfaction with the overheads involved in software

design methods of the 1980s and 1990s led to the creation of

agile methods. These methods:

• Focus on the code rather than the design

• Are based on an iterative approach to software development

• Are intended to deliver working software quickly and evolve

this quickly to meet changing requirements

The aim of agile methods is to reduce overheads in the

software process (e.g. by limiting documentation) and to be

able to respond quickly to changing requirements without

excessive rework.

AGILE MANIFESTO

We are uncovering better ways of developing software by

doing it and helping others do it. Through this work we have

come to value:

• Individuals and interactions over processes and tools

• Working software over comprehensive documentation

• Customer collaboration over contract negotiation

• Responding to change over following a plan

That is, while there is value in the items on the right, we value

the items on the left more.

THE PRINCIPLES OF AGILE

METHODS

• Customer involvement: Customers should be closely involved throughout the development process. Their role is to provide and prioritize new system requirements and to evaluate the iterations of the system.

• Incremental delivery: The software is developed in increments with the customer specifying the requirements to be included in each increment.

• People not process: The skills of the development team should be recognized and exploited. Team members should be left to develop their own ways of working without prescriptive processes.

• Embrace change: Expect the system requirements to change and so design the system to accommodate these changes.

• Maintain simplicity: Focus on simplicity in both the software being developed and in the development process. Wherever possible, actively work to eliminate complexity from the system.

SCRUM PROCESS

PROBLEMS WITH AGILE

METHODS

It can be difficult to keep the interest of customers

who are involved in the process

Team members may be unsuited to the intense

involvement that characterizes agile methods

Prioritizing changes can be difficult where there

are multiple stakeholders

Minimizing documentation: almost nothing is

captured, the code is the only authority

TOPIC 2

DOMAIN ANALYSIS

DOMAIN MODELING

The aim of domain analysis is to understand the problem

domain independently of the particular system we intend to

develop.

We do not try to draw the borderline between the system and

the environment.

We focus on the concepts and the terminology of the

application domain with a wider scope than the future

system.

ACTIVITIES AND RESULTS

OF DOMAIN ANALYSIS

1. A dictionary of terms defining the common terminology

and concepts of the problem domain;

2. Description of the problem domain from a conceptual

modeling viewpoint

• We normally use UML class diagrams (with as little detail as

possible)

• Remember, we are not designing, but just establishing the

relationship between entities

3. Briefly describe the main interactions between the user

and the system

EXAMPLE – PROBLEM

DEFINITION

We want to design the software for a simple Point of Sale

Terminal that operates as follows:

• Displays the amount of money to pay for the goods to be

purchased

• Asks the user to insert a financial card (debit or credit)

• If the user inserts a debit card, he or she is asked to

choose the account type

• Asks the user to enter a pin number

• Verifies the pin number against the one stored on the chip

• Contacts the bank associated with the card in order to

perform the transaction

EXAMPLE – DICTIONARY

OF TERMS (1)

Point of Sale Terminal: machine that allows a retail transaction

to be completed using a financial card

Credit card: payment card issued to users as a system of

payment. It allows the cardholder to pay for goods and services

based on the holder's promise to pay for them

Debit card: plastic payment card that provides the cardholder

electronic access to his or her bank account

EXAMPLE – DICTIONARY

OF TERMS (2)

Bank: financial institution that issues financial cards and where the user

has at least one account from which he or she can withdraw money or

into which he or she can deposit money

Bank Account: financial account between a user and a financial

institution…

User: client of a bank who possesses a debit card and benefits from the use of a

point of sale terminal

Pin number: personal identification number (PIN, pronounced "pin";

often erroneously PIN number) is a secret numeric password shared

between a user and a system that can be used to authenticate the user

to the system

EXAMPLE – PROBLEM

DOMAIN

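[Class diagram of the problem domain with the classes PinNumber, User, FinancialCard, DebitCard, CreditCard, BankAccount, Bank and PosTerminal, annotated with the multiplicities 1, *, 1..2 and 1..*]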

EXAMPLE – MAIN

INTERACTIONS

Inputs to POS Terminal: Insertion of Financial Card, Pin

Number, Specify Account, Confirm purchase…

Outputs from POS Terminal: Error Message (regarding pin or

funds), Confirmation of Purchase…

SECTION 2

REQUIREMENTS

BEHAVIORAL MODELING

TOPICS

Review of some notions regarding requirements

• Client requirements

• Functional requirements

• Non-functional requirements

Introduction to Behavioral Modeling

• Activity Diagrams

TOPIC 1

REQUIREMENTS

REQUIREMENTS

We will describe three types of requirements:

• Customer requirements (a.k.a. informal or business

requirements)

• Functional requirements

• Non-functional requirements

CUSTOMER

REQUIREMENTS

Having completed the domain analysis, we are ready to get

our hands dirty

We need to figure out exactly what the customer wants:

Customer Requirements

• This is where the expectations of the customer are captured

Composed typically of high level, non-technical statements

Example

• Requirement 1: “We need to develop an online customer

portal”

• Requirement 2: “The portal must list all our products”

•…

FUNCTIONAL

REQUIREMENTS

Capture the intended behavior of the system

• May be expressed as services, tasks or functions the

system performs

Use cases have quickly become a widespread practice for

capturing functional requirements

• This is especially true in the object-oriented community where

they originated

• Their applicability is not limited to object-oriented systems

USE CASES

A use case defines a goal-oriented set of interactions

between external actors and the system under consideration

Actors are parties outside the system that interact with the

system

• An actor may be a class of users or other systems

A use case is initiated by a user with a particular goal in

mind, and completes successfully when that goal is satisfied

It describes the sequence of interactions between actors and

the system necessary to deliver the service that satisfies the

goal

USE CASE DIAGRAMS

Include relationship: use case fragment that is duplicated in

multiple use cases

Extend relationship: use case conditionally adds steps to

another first class use case

• Example:

USE CASE – ATM EXAMPLE

Actors:

• ATM Customer

• ATM Operator

Use Cases:

• The customer can

• withdraw funds from a checking or savings account

• query the balance of the account

• transfer funds from one account to another

• The ATM operator can

• Shut down the ATM

• Replenish the ATM cash dispenser

• Start the ATM

USE CASE – ATM EXAMPLE

Validate PIN is an Inclusion Use Case

• It cannot be executed on its own

• Must be executed as part of a Concrete Use Case

On the other hand, a Concrete Use Case can be executed on its own

USE CASE – VALIDATE PIN

(1)

Use case name: Validate PIN

Summary: System validates customer PIN

Actor: ATM Customer

Precondition: ATM is idle, displaying a Welcome

message.

USE CASE – VALIDATE PIN

(2)

Main sequence:

1. Customer inserts the ATM card into the card reader.

2. If system recognizes the card, it reads the card number.

3. System prompts customer for PIN.

4. Customer enters PIN.

5. System checks the card's expiration date and whether the card has been reported as lost or stolen.

6. If card is valid, system then checks whether the user-entered PIN matches the card PIN maintained by the system.

7. If PIN numbers match, system checks what accounts are accessible with the ATM card.

8. System displays customer accounts and prompts customer for transaction type: withdrawal, query, or transfer.

USE CASE – VALIDATE PIN

(3)

Alternative sequences:

Step 2: If the system does not recognize the card, the system ejects

the card.

Step 5: If the system determines that the card date has expired, the

system confiscates the card.

Step 5: If the system determines that the card has been reported lost

or stolen, the system confiscates the card.

Step 7: If the customer-entered PIN does not match the PIN number

for this card, the system re-prompts for the PIN.

Step 7: If the customer enters the incorrect PIN three times, the

system confiscates the card.

Steps 4-8: If the customer enters Cancel, the system cancels the

transaction and ejects the card.

Postcondition: Customer PIN has been validated.
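The main and alternative sequences of Validate PIN can be sketched in Java. This is only an illustrative sketch: the names `Card`, `PinValidator` and `Outcome` are hypothetical, and the expiry and lost/stolen checks are reduced to boolean flags instead of a real query to the bank.

```java
// Hypothetical sketch of the Validate PIN use case; all names are illustrative.
enum Outcome { VALIDATED, CARD_EJECTED, CARD_CONFISCATED, RETRY_PIN }

// Simplified card data (assumption: checks are pre-computed flags).
final class Card {
    final boolean recognized;
    final boolean expired;
    final boolean reportedLostOrStolen;
    final String storedPin;

    Card(boolean recognized, boolean expired, boolean reportedLostOrStolen, String storedPin) {
        this.recognized = recognized;
        this.expired = expired;
        this.reportedLostOrStolen = reportedLostOrStolen;
        this.storedPin = storedPin;
    }
}

final class PinValidator {
    private static final int MAX_ATTEMPTS = 3;
    private int failedAttempts = 0;

    Outcome validate(Card card, String enteredPin) {
        if (!card.recognized) {
            return Outcome.CARD_EJECTED;          // alternative sequence for step 2
        }
        if (card.expired || card.reportedLostOrStolen) {
            return Outcome.CARD_CONFISCATED;      // alternative sequences for step 5
        }
        if (card.storedPin.equals(enteredPin)) {
            return Outcome.VALIDATED;             // main sequence: PINs match
        }
        failedAttempts++;                         // wrong PIN: re-prompt, confiscate on the third failure
        return failedAttempts >= MAX_ATTEMPTS ? Outcome.CARD_CONFISCATED : Outcome.RETRY_PIN;
    }
}
```

Each call to `validate` stands for one PIN entry; a caller would loop while the result is `RETRY_PIN`.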

USE CASE – WITHDRAW

FUNDS (1)

Use case name: Withdraw Funds

Summary: Customer withdraws a specific amount

of funds from a valid bank account.

Actor: ATM Customer

Dependency: Include Validate PIN use case.

Precondition: ATM is idle, displaying a Welcome

message.

USE CASE – WITHDRAW

FUNDS (2)

Main sequence:

1. Include Validate PIN use case.

2. Customer selects Withdrawal, enters the amount,

and selects the account number.

3. System checks whether customer has enough

funds in the account and whether the daily limit

will not be exceeded.

4. If all checks are successful, system authorizes

dispensing of cash.

5. System dispenses the cash amount.

6. System prints a receipt showing transaction

number, transaction type, amount withdrawn, and

account balance.

7. System ejects card.

8. System displays Welcome message.

USE CASE – WITHDRAW

FUNDS (3)

Alternative sequences:

Step 3: If the system determines that the account

number is invalid, then it displays an error message

and ejects the card.

Step 3: If the system determines that there are

insufficient funds in the customer's account, then it

displays an apology and ejects the card.

Step 3: If the system determines that the maximum

allowable daily withdrawal amount has been

exceeded, it displays an apology and ejects the card.

Step 5: If the ATM is out of funds, the system displays

an apology, ejects the card, and shuts down the ATM.

Postcondition: Customer funds have been withdrawn.
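The checks in step 3 of Withdraw Funds can be illustrated with a small Java sketch. The `Account` class, its fields and the use of cents are assumptions made for the example; the use case itself does not prescribe any of them.

```java
// Hypothetical sketch of the step 3 checks; names and units are illustrative.
final class Account {
    private long balanceCents;
    private long withdrawnTodayCents = 0;
    private final long dailyLimitCents;

    Account(long balanceCents, long dailyLimitCents) {
        this.balanceCents = balanceCents;
        this.dailyLimitCents = dailyLimitCents;
    }

    long balance() {
        return balanceCents;
    }

    // Returns true and debits the account only if every check passes.
    boolean withdraw(long amountCents) {
        if (amountCents <= 0) {
            return false;                                   // not a valid amount
        }
        if (amountCents > balanceCents) {
            return false;                                   // insufficient funds
        }
        if (withdrawnTodayCents + amountCents > dailyLimitCents) {
            return false;                                   // daily limit would be exceeded
        }
        balanceCents -= amountCents;
        withdrawnTodayCents += amountCents;
        return true;                                        // cash may be dispensed
    }
}
```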

NON-FUNCTIONAL

REQUIREMENTS

Functional requirements define what a system is supposed

to do

Non-functional requirements define how a system is

supposed to be

• Usually describe system attributes such as security, reliability,

maintainability, scalability, usability…

NON-FUNCTIONAL

REQUIREMENTS

Non-Functional requirements can be specified in a separate

section of the use case description

• In the previous example, for the Validate PIN use case, there

could be a security requirement that the card number and PIN

must be encrypted

Non-Functional requirements can be specified for a group of

use cases or the whole system

• Security requirement: System shall encrypt ATM card

number and PIN.

• Performance requirement: System shall respond to actor

inputs within 5 seconds.

TOPIC 2

BEHAVIORAL MODELING

SOFTWARE MODELING

UML 2.0 defines thirteen basic diagram types, divided into two

general sets:

• Structural Modeling

• Behavioral Modeling

Structural Models define the static architecture of a model

• They are used to model the “things” that make up a model –

the classes, objects, interfaces and physical components

• In addition they are used to model the relationships and

dependencies between elements

BEHAVIORAL MODELING

Behavior Models capture the dynamic behavior of a system

as it executes over time

They provide a view of a system in which control and

sequencing are considered

• Either within an object (by means of a finite state machine) or

between objects (by analysis of object interactions).

UML ACTIVITY DIAGRAMS

In UML an activity diagram is used to display the sequence of

actions

They show the workflow from start to finish

• Detail the many decision paths that exist in the progression of

events contained in the activity

Very useful when parallel processing may occur in the

execution of some activities

UML ACTIVITY DIAGRAMS

An example of an activity diagram is shown below

(We will come back to that diagram)

ACTIVITY

An activity is the specification of a parameterized sequence

of behavior

Shown as a round-cornered rectangle enclosing all the

actions and control flows

ACTIONS AND

CONSTRAINTS

An action represents a single step within an activity

Constraints can be attached to actions

CONTROL FLOW

Shows the flow of control from one action to the next

• Its notation is a line with an arrowhead.

Initial Node

Final Node, two types:

Activity Final Node

Flow Final Node

OBJECT FLOW

An object flow is a path along which objects or data can pass

• An object is shown as a rectangle

A shorthand for the above notation

DECISION AND MERGE

NODES

Decision nodes and merge nodes have the same notation: a

diamond shape

The control flows coming away from a decision node will

have guard conditions

FORK AND JOIN NODES

Forks and joins have the same notation: either a horizontal or

vertical bar

• They indicate the start and end of concurrent threads of

control

• Join synchronizes two inflows and produces a single outflow

• The outflow from a join cannot execute until all inflows have

been received
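The fork and join semantics can be mimicked in Java with `CompletableFuture`. This is a sketch under assumptions: the method name `prepareOrder` and the two actions ("packed", "invoiced") are invented for illustration.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical sketch: two concurrent actions followed by a join.
final class ForkJoinSketch {
    static String prepareOrder() {
        // Fork: both actions are started and may run concurrently.
        CompletableFuture<String> pack = CompletableFuture.supplyAsync(() -> "packed");
        CompletableFuture<String> invoice = CompletableFuture.supplyAsync(() -> "invoiced");

        // Join: the combining step cannot run until both inflows have completed.
        return pack.thenCombine(invoice, (p, i) -> p + " and " + i).join();
    }
}
```

Whichever action finishes first, the combined result is produced only after both are done, which matches the rule that the outflow of a join waits for all inflows.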

PARTITION

Shown as a horizontal or vertical swimlane

• Represents a group of actions that have some common

characteristic

UML ACTIVITY DIAGRAMS

Coming back to our initial example

ISSUE HANDLING IN

SOFTWARE PROJECTS

Courtesy of uml-diagrams.org

MORE ON ACTIVITY

DIAGRAMS

Interruptible Activity Regions

Expansion Regions

Exception Handlers

INTERRUPTIBLE ACTIVITY

REGION

Surrounds a group of actions that can be interrupted

Example below:

• “Process Order” action will execute until completion, when it

will pass control to the “Close Order” action, unless a “Cancel

Request” interrupt is received, which will pass control to the

“Cancel Order” action.

EXPANSION REGION

An expansion region is an activity region that executes

multiple times to consume all elements of an input collection

Example of books checkout at a library modeled using an

expansion region:

[Diagram: “Checkout Books” activity with the actions Find Books to Borrow, Checkout Book, Show Due Date and Place Books in Bags]
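In code, an expansion region behaves much like a loop over the input collection. The Java sketch below assumes that “Checkout Book” and “Show Due Date” are the actions inside the region; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the library checkout activity.
final class LibraryCheckout {
    static List<String> checkoutBooks(List<String> booksToBorrow) {
        List<String> log = new ArrayList<>();
        // Expansion region: the body executes once per element of the collection.
        for (String book : booksToBorrow) {
            log.add("checked out: " + book);
            log.add("due date shown: " + book);
        }
        // Executed once, after every element has been consumed.
        log.add("books placed in bags");
        return log;
    }
}
```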

EXPANSION REGION

Another example: Encoding Video

[Diagram: “Encode Video” activity with the actions Capture Video, Extract Audio from Frame, Encode Video Frame, Attach Audio to Frame and Save Encoded Video]

EXCEPTION HANDLERS

An exception handler is an element that specifies what to

execute in case the specified exception occurs during the

execution of the protected node

In Java

• “Try block” corresponds to “Protected Node”

• “Catch block” corresponds to the “Handler Body Node”
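A minimal Java sketch of this correspondence follows. The class name `ProtectedNodeSketch` and the method `parseNumber` are made up for the example; only `Integer.parseInt` and `NumberFormatException` come from the standard library.

```java
// Hypothetical sketch mapping activity diagram terms onto try/catch.
final class ProtectedNodeSketch {
    static String parseNumber(String text) {
        try {
            // Protected node: the actions during which the exception may occur.
            int value = Integer.parseInt(text);
            return "parsed " + value;
        } catch (NumberFormatException e) {
            // Handler body node: runs only if the specified exception occurs.
            return "invalid input";
        }
    }
}
```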

SECTION 3

BEHAVIORAL MODELING

TOPICS

We will continue with the subject of Behavioral Modeling

Introduce the various components of UML state machines

ACTIVITY DIAGRAMS VS

STATE MACHINES

In Activity Diagrams

• Vertices represent Actions

• Edges (arrows) represent the transition that occurs at the

completion of one action and before the start of another one

(control flow)

Vertex representing an Action

Arrow implying transition from one action to another

ACTIVITY DIAGRAMS VS

STATE MACHINES

In State Machines

• Vertices represent states of a process

• Edges (arrows) represent occurrences of events

Vertex representing a State

Arrow representing an event

UML STATE MACHINES

Used to model the dynamic behaviour of a process

• Can be used to model a high level behaviour of an entire

system

• Can be used to model the detailed behaviour of a single

object

• Any level of detail between these extremes is also possible

UML STATE MACHINE

EXAMPLE

Example of a garage door state machine

(We will come back to this example later)

STATES

Symbol for a state

A system in a state will remain in it until the occurrence of an

event that will cause it to transition to another one

• Being in a state means that a system will behave in a

predetermined way in response to a given event

Symbols for the initial and final states

STATES

Numerous types of events can cause the system to transition

from one state to another

In every state, the system behaves in a different manner

Names for states are usually chosen as:

• Adjectives: open, closed, ready…

• Present continuous verbs: opening, closing, waiting…

TRANSITIONS

Transitions are represented with arrows

TRANSITIONS

Transitions represent a change in a state in response to an

event

• Theoretically, it is supposed to occur in an instantaneous

manner (it does not take time to execute)

A transition can have:

• Trigger: causes the transition; can be an event or simply the

passage of time

• Guard: a condition that must evaluate to true for the transition

to occur

• Effect: an action that will be invoked directly on the system or

the object being modeled (if we are modeling an object, the

effect would correspond to a specific method)
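The three parts of a transition can be seen in a small Java sketch. The `Door` class, its `locked` flag and the `timesOpened` counter are hypothetical; they only serve to show where the trigger, guard and effect would appear in code.

```java
// Hypothetical sketch: one transition with a trigger, a guard and an effect.
final class Door {
    enum State { CLOSED, OPEN }

    private State state = State.CLOSED;
    private final boolean locked;
    private int timesOpened = 0;

    Door(boolean locked) {
        this.locked = locked;
    }

    // Trigger: the "open" event is delivered by calling this method.
    void open() {
        if (state == State.CLOSED && !locked) {   // guard: [not locked]
            timesOpened++;                        // effect: action invoked on the object
            state = State.OPEN;                   // the change of state itself
        }
        // If the guard is false, the event is ignored and the state is unchanged.
    }

    State state() {
        return state;
    }

    int timesOpened() {
        return timesOpened;
    }
}
```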

STATE ACTIONS

An effect can also be associated with a state

If a destination state is associated with numerous incident

transitions (transitions arriving at that state), and every

transition defines the same effect:

• The effect can therefore be associated with the state instead

of the transitions (avoid duplications)

• This can be achieved using an “On Entry” effect (we can have

multiple entry effects)

• We can also add one or more “On Exit” effects
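As a sketch of why entry and exit effects avoid duplication, consider the following Java class. The `Lamp` example, its events and the timer messages are assumptions for illustration: two different transitions lead to the ON state, and the shared effect is written once as an entry effect.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of "On Entry" and "On Exit" effects.
final class Lamp {
    enum State { OFF, ON }

    private State state = State.OFF;
    final List<String> trace = new ArrayList<>();

    private void changeTo(State next) {
        if (state == State.ON) {
            trace.add("exit ON: stop timer");     // "On Exit" effect of ON
        }
        state = next;
        if (next == State.ON) {
            trace.add("enter ON: start timer");   // "On Entry" effect of ON
        }
    }

    // Two incident transitions share the same entry effect.
    void switchOn() {
        if (state == State.OFF) changeTo(State.ON);
    }

    void motionDetected() {
        if (state == State.OFF) changeTo(State.ON);
    }

    void switchOff() {
        if (state == State.ON) changeTo(State.OFF);
    }
}
```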

SELF TRANSITION

States can also have self transitions

• These self transitions are more useful when they have an

effect associated with them

Timer events are commonly used with self transitions

Below is a typical example:

COMING BACK TO OUR

INITIAL EXAMPLE

Example of a garage door state machine

DECISIONS

Just like activity diagrams, we can use decision nodes

(although we usually call them decision pseudo-states)

Decision pseudo-states are represented with a diamond

• We always have one input transition and multiple outputs

• The branch of execution is decided by the guards associated

with the transitions coming out of the decision pseudo-state

DECISIONS

COMPOUND STATES

A state machine can include several sub-machines

Below is an example of a sub-machine included in the

compound state “Connected”

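[State machine diagram: compound state “Connected” containing the sub-states “Waiting” and “ProcessingByte”, plus the state “Disconnected”. Event labels: connect, disconnect, receiveByte, byteProcessed, closeSession]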
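One way to picture the “Connected” compound state in code is a state plus a sub-state. The Java sketch below is an assumption-laden reading of the example: it assumes `connect` enters “Connected” at “Waiting”, that `disconnect` leaves the compound state from any sub-state, and it leaves out `closeSession` because its source and target are not stated in the text.

```java
// Hypothetical sketch of a compound state with a nested sub-machine.
final class Connection {
    enum State { DISCONNECTED, CONNECTED }
    enum SubState { WAITING, PROCESSING_BYTE }

    private State state = State.DISCONNECTED;
    private SubState subState = null;   // only meaningful while CONNECTED

    void connect() {
        if (state == State.DISCONNECTED) {
            state = State.CONNECTED;
            subState = SubState.WAITING;            // sub-machine starts at its initial state
        }
    }

    void receiveByte() {
        if (state == State.CONNECTED && subState == SubState.WAITING) {
            subState = SubState.PROCESSING_BYTE;
        }
    }

    void byteProcessed() {
        if (state == State.CONNECTED && subState == SubState.PROCESSING_BYTE) {
            subState = SubState.WAITING;
        }
    }

    void disconnect() {
        if (state == State.CONNECTED) {             // applies to every sub-state
            state = State.DISCONNECTED;
            subState = null;
        }
    }

    String describe() {
        return state == State.CONNECTED ? "CONNECTED/" + subState : "DISCONNECTED";
    }
}
```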

COMPOUND STATES

EXAMPLE

Same example, with an alternative notation

• The link symbol in the “Check Pin” state indicates that the

details of the sub-machine associated with “Check Pin” are

specified in another state machine

ALTERNATIVE ENTRY

POINTS

Sometimes, in a sub-machine, we do not want to start the

execution from the initial state

• We want to start the execution from a “named alternative entry

point”

PerformActivity

ALTERNATIVE ENTRY

POINTS

Here’s the same system, from a higher level

• Transition from the “Not Already Initialized” state leads to the

standard initial state in the sub-machine

• Transition from the “Already Initialized” state is connected to

the named alternative entry point “Skip Initializing”

ALTERNATIVE EXIT

POINTS

It is also possible to have alternative exit points for a

compound state

• Transition from “Processing Instructions” state takes the

regular exit

• Transition from the “Reading Instructions” state takes a

named alternative exit point

ATM MACHINE EXAMPLE

Validate PIN:

ATM MACHINE EXAMPLE

Funds withdrawal:

SECTION 4

BEHAVIORAL MODELING

TOPICS

We will continue to talk about UML State Machines

We will go through a complete example of a simple software

construction case study with emphasis on UML State

Machines

End this section with some final words of wisdom!

LAST LECTURE

We have talked about UML State Machines

• States and transitions

• State effects

• Self Transition

• Decision pseudo-states

• Compound states

• Alternative entry and exit points

Today, we will tackle more advanced UML State Machines

Concepts

HISTORY STATES

A state machine describes the dynamic aspects of a process

whose current behavior depends on its past

A state machine in effect specifies the legal ordering of

states a process may go through during its lifetime

When a transition enters a compound state, the action of the

nested state machine starts over again at its initial state

• Unless an alternative entry point is specified

There are times you'd like to model a process so that it

remembers the last substate that was active prior to leaving

the compound state

HISTORY STATES

Simple washing machine state diagram:

• Power Cut event: transition to the “Power Off” state

• Restore Power event: transition to the active state before the

power was cut off to proceed in the cycle
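A history state can be approximated in code by simply not resetting the remembered sub-state. In the Java sketch below, the phases WASHING, RINSING and SPINNING are assumed for illustration, since the cycle's sub-states are not listed in the text.

```java
// Hypothetical sketch of a history state for the washing machine.
final class WashingMachine {
    enum Phase { WASHING, RINSING, SPINNING }

    private boolean powerOn = true;
    // Kept across a power cut, so it acts as the history of the running cycle.
    private Phase phase = Phase.WASHING;

    void nextPhase() {
        if (powerOn && phase != Phase.SPINNING) {
            phase = Phase.values()[phase.ordinal() + 1];
        }
    }

    void powerCut() {
        powerOn = false;    // transition to "Power Off"
    }

    void restorePower() {
        powerOn = true;     // resume in the remembered phase, not in WASHING
    }

    String describe() {
        return powerOn ? "RUNNING/" + phase : "POWER_OFF";
    }
}
```

Without the history state, `restorePower` would have to reset `phase` to the initial sub-state.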

CONCURRENT REGIONS

Sequential sub state machines are the most common kind of

sub machines

• In certain modeling situations, concurrent sub machines might

be needed (two or more sub state machines executing in

parallel)

Brakes example:

CONCURRENT REGIONS

Example of modeling system maintenance using concurrent

regions

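[State machine diagram: compound state “Maintenance” with two concurrent regions, “Testing” and “Commanding”. Labels recovered from the figure: Idle, maintain, Testing devices, Self diagnosing, testingCompleted, Waiting, command, Processing Command, commandProcessed, [continue], shutDown]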

ORTHOGONAL REGIONS

Concurrent Regions are also called Orthogonal Regions

These regions allow us to model a relationship of “And”

between states (as opposed to the default “or” relationship)

• This means that in a sub state machine, the system can be in

several states simultaneously

Let us analyse this phenomenon using an example of

computer keyboard state machine

KEYBOARD EXAMPLE (1)

Keyboard example without Orthogonal Regions

KEYBOARD EXAMPLE (2)

Keyboard example with Orthogonal Regions
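A minimal Java sketch of the idea, assuming (the slide diagrams are not reproduced here) that the keyboard has a main keypad region toggled by Caps Lock and a numeric keypad region toggled by Num Lock. The class, enum, and method names are illustrative. Each region is its own small state variable, so the keyboard is in one state of each region at the same time.

```java
// Hypothetical sketch of two orthogonal regions; names are assumptions.
class KeyboardSketch {
    enum MainKeypad { DEFAULT, CAPS_LOCKED }
    enum NumericKeypad { NUMBERS, ARROWS }

    // One variable per region: the overall state is the pair (an "and" relationship).
    private MainKeypad mainKeypad = MainKeypad.DEFAULT;
    private NumericKeypad numericKeypad = NumericKeypad.NUMBERS;

    // Caps Lock only affects the main keypad region.
    void capsLockPressed() {
        mainKeypad = (mainKeypad == MainKeypad.DEFAULT)
                ? MainKeypad.CAPS_LOCKED : MainKeypad.DEFAULT;
    }

    // Num Lock only affects the numeric keypad region.
    void numLockPressed() {
        numericKeypad = (numericKeypad == NumericKeypad.NUMBERS)
                ? NumericKeypad.ARROWS : NumericKeypad.NUMBERS;
    }

    String configuration() {
        return mainKeypad + " and " + numericKeypad;
    }
}
```

A flat design would need 2 x 2 = 4 states with transitions between all of them; with regions, 2 + 2 states suffice, and the saving grows with every region added.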

GARAGE DOOR – CASE

STUDY

Background

• Company DOORS inc. manufactures garage door

components

• Nonetheless, they have been struggling with the embedded

software running on their automated garage opener Motor

Unit that they developed in house

• This is causing them to lose business

• They decided to scrap the existing software and hire a

professional software company to deliver “bug free” software

CLIENT REQUIREMENTS

Client (informal) requirements:

• Requirement 1: When the garage door is closed, it must

open whenever the user presses on the button of the wall

mounted door control or the remote control

• Requirement 2: When the garage door is open, it must close

whenever the user presses on the button of the wall mounted

door control or the remote control

• Requirement 3: The garage door should not close on an

obstacle

• Requirement 4: There should be a way to leave the garage

door half open

• Requirement 5: System should run a self diagnosis test

before performing any command (open or close) to make sure

all components are functional

CLIENT REQUIREMENTS

Motor Unit

(includes a microcontroller

where the software will be

running)

Wall Mounted

Controller

(a remote controller

is also supported)

Sensor Unit(s)

(detects obstacles, when the door is

fully open and when it is fully closed)

USE CASE DIAGRAM

Use Case Diagram

Garage Door System

Open Door

Run

Diagnosis

Garage Door User

Close Door

RUN DIAGNOSIS USE CASE

Use Case Name: Run Diagnosis

Summary: The system runs a self diagnosis procedure

Actor: Garage door user

Pre-Condition: User has pressed the remote or wall mounted control button

Sequence:

1.

Check if the sensor is operating correctly

2.

Check if the motor unit is operating correctly

3.

If all checks are successful, system authorizes the command to be executed

Alternative Sequence:

Step 3: One of the checks fails and therefore the system does not authorize the

execution of the command

Postcondition: Self diagnosis ensured that the system is operational

OPEN DOOR USE CASE

Use Case Name: Open Door

Summary: Open the garage door

Actor: Garage door user

Dependency: Include Run Diagnosis use case

Pre-Condition: Garage door system is operational and ready to take a command

Sequence:

1.

User presses the remote or wall mounted control button

2.

Include Run Diagnosis use case

3.

If the door is currently closing or is already closed, system opens the door

Alternative Sequence:

Step 3: If the door is open, system closes door

Step 3: If the door is currently opening, system stops the door (leaving it half open)

Postcondition: Garage door is open

CLOSE DOOR USE CASE

Use Case Name: Close Door

Summary: Close the garage door

Actor: Garage door user

Dependency: Include Run Diagnosis use case

Pre-Condition: Garage door system is operational and ready to take a command

Sequence:

1.

User presses the remote or wall mounted control button

2.

Include Run Diagnosis use case

3.

If the door is currently open, system closes the door

Alternative Sequence:

Step 3: If the door is currently closing or is already closed, system opens the door

Step 3: If the door is currently opening, system stops the door (leaving it half open)

Postcondition: Garage door is closed

HIGH LEVEL BEHAVIORAL

MODELING

HIGH LEVEL STRUCTURAL

MODEL

«interface»

EventHandler

EventGenerator

*

Controller

*

Sensor

«interface»

ControllerEventHandler

«interface»

SensorEventHandler

WallMountedController

RemoteController

Motor

REFINED STRUCTURAL

MODEL

EventGenerator

-id : long

-eventHandlers: List

+EventGenerator(in id : long)

+getId() : long

+addEventHandler(in handler : EventHandler) : bool

#sendEvent(in eventId : int) : void

+run() : void

«interface»

EventHandler

*

*

«interface»

SensorEventHandler

+obstacleDetected() : void

+doorClosed() : void

+doorOpen() : void

Controller

Sensor

+Controller(in id : long)

+pressButton() : bool

+run() : void

#sendEvent(in eventId : int) : void

+Sensor(in id : long)

+run() : void

+isFunctioning() : bool

#sendEvent(in eventId : int) : void

WallMountedController

+isFunctioning() : bool

RemoteController

«interface»

ControllerEventHandler

+buttonPressed() : void

Motor

-eventGenerators: List

+buttonPressed() : void

+obstacleDetected() : void

+doorClosed() : void

+doorOpen() : void

+Motor()

-closeDoor() : bool

-openDoor() : bool

+getDoorState() : DoorState

+isFunctioning() : bool

+run() : void

1

1

«enumeration»

DoorState

+Open

+Opening

+Closed

+Closing

REFINE BEHAVIORAL

MODEL – MOTOR UNIT

Running

Open

buttonPressed()

[isFunctioning()]

Timer (180 s)

[! isFunctioning()]

doorOpen()

Closing

buttonPressed(),

[! isFunctioning()]

buttonPressed(),

obstacleDetected()

[isFunctioning()]

doorClosed()

Closed

Timer (180 s)

[isFunctioning()]

buttonPressed()

[isFunctioning()]

Opening

buttonPressed()

buttonPressed()

HalfOpen

WaitingForRepair

REFINE BEHAVIORAL

MODEL – SENSOR UNIT

CheckingForObstacles

[isObstacleDetected()]

SendingObstacleEvent

[!isObstacleDetected()]

CheckingIfDoorOpen

[isDoorOpen()]

SendingOpenDoorEvent

[!isDoorOpen()]

CheckingIfDoorClosed

[isDoorClosed()]

SendingDoorClosedEvent

[!isDoorClosed()]

Sleeping

Time (20 ms)

DO NOT FALL ASLEEP YET!

CODING

Whenever we are satisfied with the level of detail in our

behavioral models, we can proceed to coding

Some of the code can be generated directly by tools from the

behavioral model

• Some tweaking might be necessary (do not use the code

blindly)

• Humans are still the smartest programmers

EVENT GENERATOR CLASS

SENSOR CLASS

SENSOR CLASS

Sensor

State machine

Implementation

UMPLE ONLINE DEMO

UMPLE is a modeling tool to enable what we call Model-Oriented Programming

• This is what we do in this course

You can use it to create class diagrams (structural models)

and state machines (behavioral models)

The tool was developed at the University of Ottawa

• Online version can be found at:

http://cruise.eecs.uottawa.ca/umpleonline/

• There’s also an Eclipse plugin for the tool

UMPLE CODE FOR MOTOR

UNIT STATE MACHINE

class Motor {
  status {
    Running {
      Open { buttonPressed()[isFunctioning()] -> Closing; }
      Closing {
        buttonPressed()[isFunctioning()] -> Opening;
        obstacleDetected()[isFunctioning()] -> Opening;
        doorClosed() -> Closed;
      }
      Closed { buttonPressed()[isFunctioning()] -> Opening; }
      Opening {
        buttonPressed() -> HalfOpen;
        doorOpen() -> Open;
      }
      HalfOpen { buttonPressed() -> Opening; }
      buttonPressed()[!isFunctioning()] -> WaitingForRepair;
    }
    WaitingForRepair {
      timer()[!isFunctioning()] -> WaitingForRepair;
      timer()[isFunctioning()] -> Running;
    }
  }
}
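For comparison, the same state machine can be written by hand in Java. This is a sketch, not the code Umple generates: the class name `MotorSketch`, the `functioning` flag (standing in for `isFunctioning()`), the choice of `OPEN` as initial substate, and the decision to keep the door substate while waiting for repair are all assumptions made for illustration.

```java
// Hand-written sketch of the Motor state machine; not Umple output.
class MotorSketch {
    enum Status { RUNNING, WAITING_FOR_REPAIR }
    enum Door { OPEN, CLOSING, CLOSED, OPENING, HALF_OPEN }

    private Status status = Status.RUNNING;
    private Door door = Door.OPEN;        // assumed initial substate
    private boolean functioning = true;   // stand-in for isFunctioning()

    void setFunctioning(boolean value) { functioning = value; }
    Status getStatus() { return status; }
    Door getDoor() { return door; }

    void buttonPressed() {
        if (status != Status.RUNNING) return;
        // Unguarded transitions of the nested states come first.
        if (door == Door.OPENING) { door = Door.HALF_OPEN; return; }
        if (door == Door.HALF_OPEN) { door = Door.OPENING; return; }
        // Remaining substates are guarded; a failed guard falls back to Running's transition.
        if (!functioning) { status = Status.WAITING_FOR_REPAIR; return; }
        door = (door == Door.OPEN) ? Door.CLOSING : Door.OPENING;
    }

    void obstacleDetected() {
        if (status == Status.RUNNING && door == Door.CLOSING && functioning) door = Door.OPENING;
    }

    void doorClosed() {
        if (status == Status.RUNNING && door == Door.CLOSING) door = Door.CLOSED;
    }

    void doorOpen() {
        if (status == Status.RUNNING && door == Door.OPENING) door = Door.OPEN;
    }

    // Periodic timer event while waiting for repair.
    void timer() {
        if (status == Status.WAITING_FOR_REPAIR && functioning) status = Status.RUNNING;
    }
}
```

Each event becomes a method, and each state-dependent reaction becomes a branch on the current state, which is essentially what a code generator produces from the model.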

MOTOR CLASS SNIPPETS

Switching between

high level states

Switching between

nested states inside

the Running

compound state

WHEN TO USE STATE

MACHINES?

When an object or a system progresses through various

stages of execution (states)

• The behavior of the system differs from one stage to another

When you can identify clear events that change the status of

the system

They are ideal for event-driven programming (fewer loops and

branches, more events generated and exchanged)

• Lots of events are being exchanged between objects

When using event-driven programming

• Make sure you follow the Observable or Event Notifier patterns

• Both are pretty simple (similar to what we have done for the

garage door example)
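A bare-bones version of such an event notifier might look like the sketch below. The names `ButtonHandler` and `ButtonEventSource` are hypothetical; they loosely mirror the ControllerEventHandler and EventGenerator roles of the garage door model, without reproducing them.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical handler interface, in the spirit of ControllerEventHandler.
interface ButtonHandler {
    void buttonPressed();
}

// Hypothetical event source, in the spirit of EventGenerator.
class ButtonEventSource {
    private final List<ButtonHandler> handlers = new ArrayList<>();

    // Register an object that wants to be told about button presses.
    boolean addHandler(ButtonHandler handler) {
        return handlers.add(handler);
    }

    // Generate the event: notify every registered handler in turn.
    void press() {
        for (ButtonHandler handler : handlers) {
            handler.buttonPressed();
        }
    }
}
```

The source knows nothing about what the handlers do with the event, which keeps the state machine (the handler) decoupled from the hardware-facing code (the source).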

BEHAVIORAL OVERMODELING

Please model responsibly!!

Do not get carried away with modeling every single detail to

the point where you run behind schedule

• You sell code, not models…

BEHAVIORAL OVERMODELING

Now, be careful, you do not want to over-model

• Modern software development processes are all about

doing just enough modeling for a successful product

Therefore, start with a high level model of the behavior

• This model should give a clear overview of some (not

necessarily all) of the important functionality of the system

• This would be similar to the first garage door state machine

we created

BEHAVIORAL OVERMODELING

Identify potential complex areas that require further

understanding

• We minimize the risk if we understand these components well

before we start programming

• Model these complex areas in more detail until you are

satisfied that they are well understood

Use tools to generate code from your existing models

• Do not rely blindly on tools (at least not yet!)

DESIGNING CLASSES WITH

STATE DIAGRAMS

Keep the state diagram simple

• State diagrams can very quickly become extremely complex

and confusing

• At all times, you should follow the aesthetic rule: “Less is More”

If the state diagram gets too complex consider splitting it into

smaller classes

Think about compound states instead of a flat design

EXAMPLE OF A CD PLAYER

WITH A RADIO

On

Display Alarm

Displaying

Current Time

Displaying

Alarm Time

Timer (3 s)

Play CD

Playing

Radio

Playing CD

Play Radio

H

On

Off

On

off

MORE UML STATE

MACHINES EXAMPLES

Flight State Machine

MORE UML STATE

MACHINES EXAMPLES

Flight State Machine

Nested

SECTION 5

PETRI NETS

THESE SLIDES ARE BASED ON LECTURE NOTES

FROM:

DR. CHRIS LING (HTTP://WWW.CSSE.MONASH.EDU.AU/~SLING/)

SEG2106 – Winter 2014 – Hussein Al Osman


TOPICS

Today we will discuss another type of state machine:

• Petri nets (this will be just an introduction…)

This will be the last behavioral modeling topic we cover

• We will start the next section of the course next week


OK, LET’S START…


INTRODUCTION

First introduced by Carl Adam Petri in 1962.

A diagrammatic tool to model concurrency and

synchronization in systems

• They allow us to quickly simulate complex concurrent

behavior (which is faster than prototyping!)

Fairly similar to UML State machines that we have seen so far

• Used as a visual communication aid to model the system

behavior

Based on strong mathematical foundation


EXAMPLE: POS TERMINAL

(UML STATE MACHINE)

(POS= Point of Sale)

idle

1 digit

1 digit

d1

d2

1 digit

d3

1 digit

d4

OK

OK

OK

OK

OK

pressed

Rejected

Reject

Approved


EXAMPLE: POS

TERMINAL (PETRI NET)

1 digit

Initial

1 digit

d1

1 digit

d2

1 digit

d4

d3

OK

OK

OK

OK

OK

pressed

Rejected!

Reject

approve

approved


POS TERMINAL

Scenario 1: Normal

• Enter all 4 digits and press OK.

Scenario 2: Exceptional

• Enter only 3 digits and press OK.


EXAMPLE: POS SYSTEM

(TOKEN GAMES)

[Token game animation on the POS terminal Petri net shown earlier]

A PETRI NET

COMPONENTS

The terms are a bit different from those used for UML state machines

Petri nets consist of three types of components: places

(circles), transitions (rectangles) and arcs (arrows):

• Places represent possible states of the system

• Transitions are events or actions which cause the change of

state (be careful, transitions are no longer arrows here)

• Every arc simply connects a place with a transition or a

transition with a place.


CHANGE OF STATE

A change of state is denoted by a movement of token(s)

(black dots) from place(s) to place(s)

• Is caused by the firing of a transition.

The firing represents an occurrence of the event or an action

taken

The firing is subject to the input conditions, denoted by

token availability


CHANGE OF STATE

A transition is firable or enabled when there are sufficient

tokens in its input places.

After firing, tokens will be transferred from the input places

(old state) to the output places, denoting the new state
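The firing rule can be sketched in a few lines of Java. The class `SimplePetriNet` is a hypothetical illustration that assumes every arc carries exactly one token and that a transition lists each input place at most once; places are identified by their index in the marking array.

```java
// Minimal sketch of the firing rule; one token per arc is assumed.
class SimplePetriNet {
    // marking[p] is the number of tokens currently in place p.
    final int[] marking;

    SimplePetriNet(int... initialMarking) {
        marking = initialMarking.clone();
    }

    // A transition is enabled when every input place holds at least one token.
    boolean isEnabled(int[] inputPlaces) {
        for (int p : inputPlaces) {
            if (marking[p] < 1) return false;
        }
        return true;
    }

    // Firing removes a token from each input place and adds one to each output place.
    boolean fire(int[] inputPlaces, int[] outputPlaces) {
        if (!isEnabled(inputPlaces)) return false;
        for (int p : inputPlaces) marking[p]--;
        for (int p : outputPlaces) marking[p]++;
        return true;
    }
}
```

For example, with two places and the marking (1, 0), firing a transition from place 0 to place 1 yields (0, 1); firing it a second time fails because the input place is now empty.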


EXAMPLE: VENDING

MACHINE

The machine dispenses two kinds of snack bars – 20c and

15c

Only two types of coins can be used

• 10c coins and 5c coins (ah the old days!!)

The machine does not return any change


EXAMPLE: VENDING MACHINE

(UML STATE MACHINE)

Take 15c snack bar

5 cents

inserted

Deposit 10c

15 cents

inserted

0 cent

inserted

10 cents

inserted

Deposit 10c

20 cents

inserted

Take 20c snack bar


EXAMPLE: VENDING

MACHINE (A PETRI NET)

Take 15c bar

Deposit 10c

5c

15c

Deposit 5c

0c

Deposit

5c

Deposit

5c

Deposit 10c

10c

Deposit

5c

20c

Deposit 10c

Take 20c bar


EXAMPLE: VENDING MACHINE (3

SCENARIOS)

Scenario 1:

• Deposit 5c, deposit 5c, deposit 5c, deposit 5c, take 20c snack

bar.

Scenario 2:

• Deposit 10c, deposit 5c, take 15c snack bar.

Scenario 3:

• Deposit 5c, deposit 10c, deposit 5c, take 20c snack bar.


EXAMPLE: VENDING

MACHINE (TOKEN GAMES)

[Token game animation on the vending machine Petri net shown earlier]

MULTIPLE LOCAL STATES

In the real world, events happen at the same time

A system may have many local states to form a

global state.

There is a need to model concurrency and

synchronization


EXAMPLE: IN A RESTAURANT

(A PETRI NET)

[Petri net diagram. Places: Waiter free, Customer 1, Customer 2, wait (one per customer), Order taken, eating (one per customer). Transitions: Take order (one per customer), Tell kitchen, Serve food (one per customer)]

EXAMPLE: IN A RESTAURANT

(TWO SCENARIOS)

Scenario 1:

Waiter

1. Takes order from customer 1

2. Serves customer 1

3. Takes order from customer 2

4. Serves customer 2

Scenario 2:

Waiter

1. Takes order from customer 1

2. Takes order from customer 2

3. Serves customer 2

4. Serves customer 1


EXAMPLE: IN A

RESTAURANT (SCENARIO 2)

[Token game animation of scenario 2 on the restaurant Petri net shown earlier]

EXAMPLE: IN A

RESTAURANT (SCENARIO 1)

[Token game animation of scenario 1 on the restaurant Petri net shown earlier]

NET STRUCTURES

A sequence of events/actions:

e1

e2

e3

e2

e3

e4

e5

Concurrent executions:

e1


NET STRUCTURES

Non-deterministic events - conflict, choice

or decision: A choice of either e1, e2 … or

e3, e4 ...

e1

e2

e3

e4


NET STRUCTURES

Synchronization

e1


NET STRUCTURES

Synchronization and Concurrency

e1


ANOTHER EXAMPLE

A producer-consumer system, consisting of:

• One producer

• Two consumers

• One storage buffer

With the following conditions:

• The storage buffer may contain at most 5 items;

• The producer sends 3 items in each production;

• At most one consumer is able to access the storage buffer at

one time;

• Each consumer removes two items when accessing the

storage buffer


A PRODUCER-CONSUMER SYSTEM

[Petri net diagram. Labels: Producer (places ready and idle, k=1), Storage p3 (k=5), Consumers (places ready and accepted, k=2); transitions produce, send, accept, consume; arc weights 3 and 2; nodes p1 to p5 and t1 to t4]

A PRODUCER-CONSUMER

EXAMPLE

In this Petri net, every place has a capacity

and every arc has a weight.

This allows multiple tokens to reside in a

place to model more complex behavior.
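Capacities and arc weights change the enabling rule, as the following Java sketch shows. `CapacityNet` and its array-based representation are assumptions made for illustration: `consume[p]` is the weight of the arc from place p into the transition and `produce[p]` the weight of the arc from the transition into place p (0 when there is no arc).

```java
// Sketch of firing with arc weights and place capacities; representation is assumed.
class CapacityNet {
    // Enabled when inputs hold enough tokens and no place would exceed its capacity.
    static boolean isEnabled(int[] marking, int[] capacity, int[] consume, int[] produce) {
        for (int p = 0; p < marking.length; p++) {
            if (marking[p] < consume[p]) return false;
            if (marking[p] - consume[p] + produce[p] > capacity[p]) return false;
        }
        return true;
    }

    // Fire the transition in place; returns false (and changes nothing) if not enabled.
    static boolean fire(int[] marking, int[] capacity, int[] consume, int[] produce) {
        if (!isEnabled(marking, capacity, consume, produce)) return false;
        for (int p = 0; p < marking.length; p++) {
            marking[p] += produce[p] - consume[p];
        }
        return true;
    }
}
```

Looking only at the storage place (capacity 5): starting empty, a send with weight 3 succeeds and leaves 3 items; a second send is blocked because 6 would exceed the capacity; an accept with weight 2 leaves 1 item, after which the producer can send again.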


SHORT BREAK?

Are you here yet?


BEHAVIORAL PROPERTIES

Reachability

• “Can we reach one particular state from another?”

Boundedness

• “Will a storage place overflow?”

Liveness

• “Will the system die in a particular state?”


RECALLING THE VENDING

MACHINE (TOKEN GAME)

[Vending machine Petri net, as shown earlier]

A MARKING IS A STATE ...

[Petri net diagram of the vending machine: places p1 to p5, transitions t1 to t9]

M0 = (1,0,0,0,0)

M1 = (0,1,0,0,0)

M2 = (0,0,1,0,0)

M3 = (0,0,0,1,0)

M4 = (0,0,0,0,1)

Initial marking: M0

REACHABILITY

[Same Petri net and markings as on the previous slide, with the following firing sequence traced from the initial marking M0:]

M0 -t1-> M1 -t3-> M2 -t5-> M3 -t8-> M0 -t2-> M2 -t6-> M4

REACHABILITY

A firing or occurrence sequence:

M0 -t1-> M1 -t3-> M2 -t5-> M3 -t8-> M0 -t2-> M2 -t6-> M4

“M2 is reachable from M1 and M4 is reachable from M0.”

In fact, in the vending machine example, all markings are

reachable from every marking.
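Reachability can be checked mechanically by exploring all markings from M0. The Java sketch below does a breadth-first search; it assumes one token per arc and a bounded net (otherwise the search would not terminate). The `VENDING` table is an assumption: t1, t2, t3, t5, t6 and t8 follow from the firing sequence above, while t4, t7 and t9 are filled in from the vending machine description (places are 0-indexed, so p1 is index 0).

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;
import java.util.LinkedHashSet;
import java.util.Set;

// Sketch of reachability analysis by breadth-first search over markings.
class ReachabilitySketch {
    // Assumed transitions t1..t9 as {input place, output place}.
    static final int[][] VENDING = {
        {0, 1}, {0, 2}, {1, 2}, {1, 3}, {2, 3}, {2, 4}, {3, 4}, {3, 0}, {4, 0}
    };

    // Returns every marking reachable from m0, each rendered with Arrays.toString.
    static Set<String> reachable(int[] m0, int[][] transitions) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<int[]> queue = new ArrayDeque<>();
        seen.add(Arrays.toString(m0));
        queue.add(m0);
        while (!queue.isEmpty()) {
            int[] marking = queue.remove();
            for (int[] t : transitions) {
                if (marking[t[0]] < 1) continue;   // transition not enabled
                int[] next = marking.clone();
                next[t[0]]--;                      // take token from input place
                next[t[1]]++;                      // put token in output place
                if (seen.add(Arrays.toString(next))) queue.add(next);
            }
        }
        return seen;
    }
}
```

Calling `reachable(new int[]{1, 0, 0, 0, 0}, VENDING)` visits the five markings M0 to M4 and nothing else, which also shows that no place ever holds more than one token.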


BOUNDEDNESS

A Petri net is said to be k-bounded or simply bounded if the

number of tokens in each place does not exceed a finite

number k for any marking reachable from M0.

The Petri net for vending machine is 1-bounded.


LIVENESS

A Petri net with initial marking M0 is live if, no matter what

marking has been reached from M0, it is possible to

ultimately fire any transition by progressing through some

further firing sequence.

A live Petri net guarantees deadlock-free operation, no matter

what firing sequence is chosen.


LIVENESS

The vending machine is live and the producer-consumer

system is also live.

A transition is dead if it can never be fired in any firing

sequence.


AN EXAMPLE

t1

p3

p2

p1

t2

t3

t4

p4

M0 = (1,0,0,1)

M1 = (0,1,0,1)

M2 = (0,0,1,0)

M3 = (0,0,0,1)

A bounded but non-live Petri net


ANOTHER EXAMPLE

[Petri net diagram: places p1 to p5, transitions t1 to t4]

M0 = (1, 0, 0, 0, 0)

M1 = (0, 1, 1, 0, 0)

M2 = (0, 0, 0, 1, 1)

M3 = (1, 1, 0, 0, 0)

M4 = (0, 2, 1, 0, 0)

An unbounded but live Petri net

OTHER TYPES OF PETRI

NETS

Object-Oriented Petri nets

• Tokens can either be instances of classes, or states of

objects.

• Net structure models the inner behaviour of objects.


AN O-O PETRI NET

Producer

Consumer

accepted

ready

produce

send

Storage

accept

consume

ready

Producer

state: ProducerState

Consumer

state: ConsumerState

Item produce( )

accept( i: Item): void

send(i: Item): void

consume(i: Item) : void


PETRI NET REFERENCES

• Murata, T. (1989, April). Petri nets: properties, analysis and

applications. Proceedings of the IEEE, 77(4), 541-80.

• Peterson, J.L. (1981). Petri Net Theory and the Modeling of

Systems. Prentice-Hall.

• Reisig, W and G. Rozenberg (eds) (1998). Lectures on Petri

Nets 1: Basic Models. Springer-Verlag.

• The World of Petri nets:

http://www.daimi.au.dk/PetriNets/


SECTION 6

INTRODUCTION TO COMPILERS

TOPICS

Natural languages

• Lexemes or lexical entities

• Syntax and semantics

Computer languages

• Lexical analysis

• Syntax analysis

• Semantic analysis

Compilers

• Compiler’s basic requirements

• Compilation process

NATURAL LANGUAGES

BASICS

In a (natural) language:

• A sentence is a sequence of words

• A word (also called a lexeme or lexical unit) is a sequence of

characters (possibly a single one)

The set of characters used in a language is finite (known as

the alphabet)

The set of possible sentences in a language is infinite

A dictionary lists all the words (lexemes) of a language

• The words are classified into different lexical categories: verb,

noun, pronoun, preposition….

NATURAL LANGUAGES

BASICS

A grammar (also called the set of syntax rules) determines

which sequences of words are well formed

• Sequences must have a structure that obeys the grammatical

rules

Well-formed sentences usually have a meaning that humans

understand

• We are trying to teach our natural languages to machines,

with mixed results!!

ANALYSIS OF SENTENCES

Lexical Analysis: identification of words made up of

characters

• Words are classified into several categories: articles, nouns,

verbs, adjectives, prepositions, pronouns…

Syntax analysis: rules for combining words to form sentences

Analysis of meaning: difficult to formalize

• Easily done by humans

• Gives machines a hard time (although natural language

processing is evolving)

• Big research field for those interested in graduate studies…

COMPUTER LANGUAGE

PROCESSING

In computer (or programming) languages, one speaks about

a program (corresponding to a long sentence or paragraph)

• Sequence of lexical units or lexemes

• Lexical units are sequences of characters

Lexical rules of the language determine what the valid lexical

units of the language are

• There are various lexical categories: identifier, number,

character string, operator…

• Lexical categories are also known as tokens

COMPUTER LANGUAGE

PROCESSING

Syntax rules of the language determine what sequences of

lexemes are well-formed programs

Meaning of a well-formed program is also called its

semantics

• A program can be well-formed, but its statements are

nonsensical

• Example:

int x = 0;

x = 1;

x = 0;

• Syntactically, the above code is valid, but what does it

mean??

COMPUTER LANGUAGE

PROCESSING

Compilers should catch and complain about lexical and

syntax errors

Compilers might complain about common semantic errors:

public boolean test(int x) {
    boolean result;
    if (x > 100)
        result = true;
    return result;   // rejected: result is never assigned when x <= 100
}

Error message:

The local variable result may not have been initialized

Your coworkers or the client will complain about the rest!!

COMPILERS

What is a compiler?

• Program that translates an executable program in one

language into an executable program in another language

• We expect the program produced by the compiler to be better,

in some way, than the original

What is an interpreter?

• Program that reads an executable program and produces the

results of running that program

We will focus on compilers in this course (although many of

the concepts apply to both)

BASIC REQUIREMENTS

FOR COMPILERS

Must-Dos:

• Produce correct code (byte code in the case of Java)

• Run fast

• Output must run fast

• Achieve a compile time proportional to the size of the program

• Work well with debuggers (absolute must)

Must-Haves:

• Good diagnostics for lexical and syntax errors

• Support for cross language calls (check out the Java Native

Interface if you are interested)

ABSTRACT VIEW OF

COMPILERS

A compiler usually realizes the translation in several steps;

correspondingly, it contains several components.

Usually, a compiler includes (at least) separate components

for verifying the lexical and syntax rules:

COMPILATION PROCESS

Source Program

Lexical Analyser

Syntax Analyser

Semantic Analyser

Intermediate Code Generator

Code Optimizer

Code Generator

Machine Code

COMPILATION PROCESS

More than one course is required to cover the details of the

various phases

In this course, we will scratch the surface

• We will focus on lexical and syntax analysis

SOME IMPORTANT

DEFINITIONS

These definitions, although sleep inducing, are important in

order to understand the concepts that will be introduced in

the next lectures

So here we go…

ALPHABET

Recall from beginning of the lecture (or kindergarten): an

alphabet is the set of characters that can be used to form a

sentence

…

Since mathematicians love fancy Greek symbols, we will

refer to an alphabet as Σ

ALPHABET

Σ is an alphabet, or set of terminals

• Finite set and consists of all the input characters or

symbols that can be arranged to form sentences in

the language

English: A to Z, punctuation and space symbols

Programming language: usually some well-defined

computer set such as ASCII

STRINGS OF TERMINALS

IN AN ALPHABET

Σ = {a, b, c, d}

Possible strings of terminals from Σ include

• aaa

• aabbccdd

• d

• cba

• abab

• ccccccccccacccc

Although this is fun, I think you get the idea…

FORMAL LANGUAGES

Σ: alphabet, a finite set consisting of all input

characters or symbols

Σ*: closure of the alphabet, the set of all possible

strings over Σ, including the empty string

A (formal) language is some specified subset of Σ*

SECTION 7

LEXICAL ANALYSIS

TOPICS

The role of the lexical analyzer

Specification of tokens

Finite state machines

From regular expressions to an NFA

THE ROLE OF LEXICAL

ANALYZER

Lexical analyzer is the first phase of a

compiler

• Task: read input characters and produce a

sequence of tokens that the parser uses for

syntax analysis

• Remove white spaces

Source

Program

Lexical

Analyser

(scanner)

token

Get next token

Syntax

Analyser

(parser)

LEXICAL ANALYSIS

There are several reasons for separating

the analysis phase of compiling into lexical

analysis and syntax analysis (parsing):

• Simpler (layered) design

• Compiler efficiency

Specialized tools have been designed to

help automate the construction of both

separately

LEXEMES

Lexeme: sequence of characters in the source program that

is matched by the pattern for a token

• A lexeme is a basic lexical unit of a language

• Lexemes of a programming language include its

• Identifiers: names of variables, methods, classes, packages

and interfaces…

• Literals: fixed values (e.g. “1”, “17.56”, “0xFFE” …)

• Operators: for Maths, Boolean and logical operations (e.g.

“+”, “-”, “&&”, “|” …)

• Special words: keywords (e.g. “if”, “for”, “public” …)

TOKENS, PATTERNS,

LEXEMES

Token: category of lexemes

A pattern is a rule describing the set of

lexemes that can represent a particular

token in the source program

EXAMPLES OF TOKENS

double pi = 3.1416;

The substring pi is a lexeme for the token “identifier.”

Token             Sample Lexemes      Informal Description of Pattern
type              double              double
if                if                  if
boolean_operator  <, <=, ==, >, >=    < or <= or == or > or >=
id                pi, count, d2       Letter followed by letters and digits
literal           3.1416, “test”      Any alphanumeric string of characters

LEXEME AND TOKEN (MORE

DETAILED CATEGORIES)

Index = 2 * count +17;

Lexemes   Tokens
Index     variable
=         equal_sign
2         int_literal
*         multi_op
count     variable
+         plus_op
17        int_literal
;         semicolon
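A hand-written scanner that produces this classification could look like the sketch below. `TinyLexer` is a hypothetical illustration covering only the lexemes of this one statement; the token names are taken from the table above, and anything else is rejected.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical scanner for statements like "Index = 2 * count +17;".
class TinyLexer {
    // Returns entries of the form "token:lexeme", in source order.
    static List<String> tokenize(String source) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < source.length()) {
            char c = source.charAt(i);
            if (Character.isWhitespace(c)) { i++; continue; }   // white space is dropped
            int start = i;
            String token;
            if (Character.isLetter(c)) {
                // letter followed by letters and digits
                while (i < source.length() && Character.isLetterOrDigit(source.charAt(i))) i++;
                token = "variable";
            } else if (Character.isDigit(c)) {
                while (i < source.length() && Character.isDigit(source.charAt(i))) i++;
                token = "int_literal";
            } else {
                i++;
                switch (c) {
                    case '=': token = "equal_sign"; break;
                    case '*': token = "multi_op"; break;
                    case '+': token = "plus_op"; break;
                    case ';': token = "semicolon"; break;
                    default:
                        throw new IllegalArgumentException("Unexpected character: " + c);
                }
            }
            tokens.add(token + ":" + source.substring(start, i));
        }
        return tokens;
    }
}
```

Note that the scanner does not need spaces between lexemes: `+17` is split into `plus_op` and `int_literal` because the pattern for each token decides where the lexeme ends.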

LEXICAL ERRORS

Few errors are discernible at the lexical level alone

• Lexical analyzer has a very localized view of a source

program

Let some other phase of the compiler handle any error

SPECIFICATION OF

TOKENS

We need a powerful notation to specify the patterns for the

tokens

• Regular expressions to the rescue!!

In the process of studying regular expressions, we will discuss:

• Operation on languages

• Regular definitions

• Notational shorthands

RECALL: LANGUAGES

Σ: alphabet, a finite set consisting of all input

characters or symbols

Σ*: closure of the alphabet, the set of all possible

strings over Σ, including the empty string

A (formal) language is some specified subset of Σ*

OPERATIONS ON

LANGUAGES

OPERATIONS ON

LANGUAGES

Non-mathematical format:

• Union between languages L and M: the set of strings

that belong to at least one of the two languages

• Concatenation of languages L and M: the set of all

strings of the form st where s is a string from L and t is a

string from M

• Intersection between languages L and M: the set of

all strings which are contained in both languages

• Kleene closure (named after Stephen Kleene): the set

of all strings that are concatenations of 0 or more strings

from the original language

• Positive closure : the set of all strings that are

concatenations of 1 or more strings from the original

language
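For small finite languages, these operations can be tried out directly on sets of strings. The Java sketch below is illustrative only (`LanguageOps` is a made-up name). Because the Kleene closure is infinite for any language containing a non-empty string, `closureUpTo` approximates it by concatenating at most n strings.

```java
import java.util.Set;
import java.util.TreeSet;

// Sketch of language operations on finite sets of strings.
class LanguageOps {
    static Set<String> union(Set<String> l, Set<String> m) {
        Set<String> result = new TreeSet<>(l);
        result.addAll(m);
        return result;
    }

    static Set<String> intersection(Set<String> l, Set<String> m) {
        Set<String> result = new TreeSet<>(l);
        result.retainAll(m);
        return result;
    }

    // All strings st where s is from L and t is from M.
    static Set<String> concat(Set<String> l, Set<String> m) {
        Set<String> result = new TreeSet<>();
        for (String s : l) {
            for (String t : m) result.add(s + t);
        }
        return result;
    }

    // Finite approximation of the Kleene closure: concatenations of 0 to n strings of L.
    static Set<String> closureUpTo(Set<String> l, int n) {
        Set<String> result = new TreeSet<>();
        Set<String> power = new TreeSet<>();
        power.add("");                 // zero strings concatenated: the empty string
        result.addAll(power);
        for (int i = 1; i <= n; i++) {
            power = concat(power, l);  // exactly i strings concatenated
            result.addAll(power);
        }
        return result;
    }
}
```

With L = {a, b} and M = {b, c}, the union is {a, b, c}, the intersection is {b}, and the concatenation LM is {ab, ac, bb, bc}. Dropping the empty string from the closure result gives the corresponding approximation of the positive closure.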

REGULAR EXPRESSIONS

A regular expression is a compact notation for describing a set of

strings.

In Java, an identifier is a letter followed by zero or more letters

or digits: letter (letter | digit)*

|: or

*: zero or more instances of

RULES

ε is a regular expression that denotes {ε}, the set containing the

empty string

If a is a symbol in Σ, then a is a regular expression that

denotes {a}, the set containing the string a

Suppose r and s are regular expressions denoting the

languages L and M, then

• (r)|(s) is a regular expression denoting L ∪ M

• (r)(s) is a regular expression denoting LM

• (r)* is a regular expression denoting L*

PRECEDENCE

CONVENTIONS

The unary operator * has the highest precedence and is left

associative.

Concatenation has the second highest precedence and is left

associative.

| has the lowest precedence and is left associative.

(a)|((b)*(c)) is equivalent to a|b*c

EXAMPLE OF REGULAR

EXPRESSIONS

PROPERTIES OF REGULAR

EXPRESSION

REGULAR DEFINITIONS

If Σ is an alphabet of basic symbols, then a regular

definition is a sequence of definitions of the form:

d1 → r1

d2 → r2

...

dn → rn

Where each di is a distinct name, and each ri is a

regular expression over the symbols in

Σ ∪ {d1, d2, …, di-1},

• i.e., the basic symbols and the previously defined

names.

EXAMPLE OF REGULAR

DEFINITIONS

NOTATIONAL

SHORTHANDS

Certain constructs occur so frequently in regular expressions that it

is convenient to introduce notational shorthands for them

We have already seen some of these shorthands:

1. One or more instances: a+ denotes the set of all strings of one or more a’s

2. Zero or more instances: a* denotes the set of all strings of zero or more a’s

3. Character classes: the notation [abc], where a, b and c are alphabet symbols, denotes the regular expression a | b | c

4. Abbreviated character classes: the notation [a-z] denotes the regular expression a | b | … | z

NOTATIONAL

SHORTHANDS

Using character classes, we can describe identifiers as being

strings described by the following regular expression:

[A-Za-z][A-Za-z0-9]*
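This pattern can be tried directly with Java's `java.util.regex` package. The wrapper class `IdentifierPattern` below is just an illustration; note that real Java identifiers also allow characters such as `_` and `$`, which this simplified pattern deliberately leaves out.

```java
import java.util.regex.Pattern;

// Illustrative wrapper around the simplified identifier pattern.
class IdentifierPattern {
    // A letter followed by zero or more letters or digits.
    static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z][A-Za-z0-9]*");

    // matches() requires the whole string to fit the pattern, not just a part of it.
    static boolean isIdentifier(String lexeme) {
        return IDENTIFIER.matcher(lexeme).matches();
    }
}
```

Here `isIdentifier("d2")` is true, while `isIdentifier("2d")` and `isIdentifier("")` are false because the first character must be a letter.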

FINITE STATE AUTOMATA

Now that we have learned about regular expressions

• How can we tell if a string (or lexeme) follows a regular

expression pattern or not?

We will again use state machines!

• This time, they are not UML state machines or Petri nets

• We will call them: Finite Automata

The program that executes such state machines is called a

Recognizer

SHORT BREAK…

FINITE AUTOMATA

A recognizer for a language is a program that takes as input a

string x and answers

• “Yes” if x is a lexeme of the language

• “No” otherwise

We compile a regular expression into a recognizer by

constructing a generalized transition diagram called a finite

automaton

A finite automaton can be deterministic or nondeterministic

• Nondeterministic means that more than one transition out of a

state may be possible on the same input symbol

NONDETERMINISTIC

FINITE AUTOMATA (NFA)

A set of states S

A set of input symbols that belong to the alphabet Σ

A set of transitions that are triggered by the processing of a

character

A single state s0 that is distinguished as the start (initial)

state

A set of states F distinguished as accepting (final) states.

EXAMPLE OF AN NFA

The following regular expression

(a|b)*abb

Can be described using an NFA with the following diagram:

EXAMPLE OF AN NFA

The previous diagram can be described using the following

table as well

Remember the regular expression was:

(a|b)*abb
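An NFA can be simulated by tracking the set of all states it could be in after each input character. The Java sketch below assumes the usual textbook NFA for (a|b)*abb, with states 0 to 3, start state 0 and accepting state 3; since the slide's diagram and table are not reproduced here, the transition function in `move` is an assumption.

```java
import java.util.HashSet;
import java.util.Set;

// Sketch of NFA simulation for (a|b)*abb; the transition function is assumed.
class NfaAbb {
    // All states reachable from one state on one input character.
    static Set<Integer> move(int state, char c) {
        Set<Integer> next = new HashSet<>();
        if (state == 0 && c == 'a') { next.add(0); next.add(1); }  // the nondeterministic choice
        else if (state == 0 && c == 'b') next.add(0);
        else if (state == 1 && c == 'b') next.add(2);
        else if (state == 2 && c == 'b') next.add(3);
        return next;
    }

    // Accept if at least one possible run ends in the accepting state 3.
    static boolean accepts(String input) {
        Set<Integer> current = new HashSet<>();
        current.add(0);
        for (char c : input.toCharArray()) {
            Set<Integer> next = new HashSet<>();
            for (int state : current) next.addAll(move(state, c));
            current = next;
        }
        return current.contains(3);
    }
}
```

On input `aabb` the set of possible states evolves as {0}, {0,1}, {0,1}, {0,2}, {0,3}, so the string is accepted. Tracking sets of states like this is also the idea behind converting an NFA into a DFA.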

ANOTHER NFA EXAMPLE

NFA accepting the following regular expression:

aa*|bb*

DETERMINISTIC FINITE

AUTOMATA (DFA)

A DFA is a special case of an NFA in which

• No state has an ε-transition

• For each state s and input symbol a, there is at most one

edge labeled a leaving s

ANOTHER DFA EXAMPLE

For the same regular expression we have seen before

(a|b)*abb

NFA VS DFA

Always with the regular expression: (a|b)*abb

NFA:

DFA:

EXAMPLE OF A DFA

Recognizer for identifier:

TABLES FOR THE

RECOGNIZER

To change regular expression, we can simply change

tables…

CODE FOR THE RECOGNIZER
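A table-driven recognizer for identifiers might be sketched as follows. The state numbering, the three character classes and the class name `IdentifierRecognizer` are assumptions made for illustration; the slide's own tables and code are not reproduced here.

```java
// Sketch of a table-driven DFA recognizer for: letter (letter | digit)*
class IdentifierRecognizer {
    // Character classes (columns): 0 = letter, 1 = digit, 2 = anything else.
    // States (rows): 0 = start, 1 = in identifier (accepting), 2 = error.
    static final int[][] NEXT = {
        {1, 2, 2},
        {1, 1, 2},
        {2, 2, 2}
    };
    static final boolean[] ACCEPTING = {false, true, false};

    static int classOf(char c) {
        if ((c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z')) return 0;
        if (c >= '0' && c <= '9') return 1;
        return 2;
    }

    // Run the DFA: exactly one table lookup per input character.
    static boolean recognize(String input) {
        int state = 0;
        for (char c : input.toCharArray()) {
            state = NEXT[state][classOf(c)];
        }
        return ACCEPTING[state];
    }
}
```

The loop in `recognize` never changes; recognizing a different regular expression only requires different contents for `NEXT`, `ACCEPTING` and `classOf`.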

SECTION 8

FINITE STATE AUTOMATA

TOPICS

Algorithm to create NFAs from regular expressions

Algorithm to convert from NFA to DFA

Algorithm to minimize DFA

Many examples….

CREATING DETERMINISTIC

FINITE AUTOMATA (DFA)

In order to create a DFA, we have to perform the following:

• Create a Non-deterministic Finite Automaton (NFA) out of

the regular expression

• Convert the NFA into a DFA

NFA CREATION RULES

2

A

3

ε

A|B

1

6

ε

ε

4

AB

ε

1

A

5

B

B

2

3

ε

A*

1

ε

2

A

ε

3

ε

4

NFA CREATION EXAMPLES

x | yz

According to precedence rules, this is

equivalent to:

2

x | (yz)

A

3

ε

ε

This has the same form as A | B:

1

6

ε

ε

4

B

5

And B can be represented as:

y

1

2

z

2

3

ε

Putting all together:

3

x

ε

1

7

ε

ε

4

y

5

z

6

NFA CREATION EXAMPLES

ε

(x | y)*

We have seen A*:

ε

ε

A

ε

ε

Therefore, (x | y)*:

ε

1

ε

3

x

4

ε

2

7

ε

ε

5

6

y

ε

ε

8

NFA CREATION EXAMPLES

abb

NFA CREATION EXAMPLES

a*bb

ε

1

ε

2

a

ε

3

ε

4

b

5

b

6

NFA CREATION EXAMPLES

(a|b)*bc

ε

a

ε

0

ε

2

3

ε

1

6

ε

ε

4

5

b

ε

ε

7

b

8

c

9

CONVERSION OF AN NFA