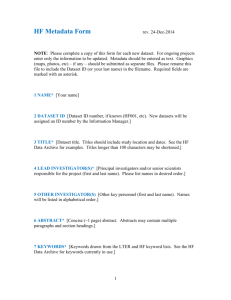

Data Files Description


Introduction to Metadata, the DDI and the Metadata Editor
Presentation to the SERPent project team by Margaret Ward, 3 March 2010

Overview
• Good practice in data documentation
• The DDI
• The Metadata Editor

A ‘good’ dataset
“From the archivist’s and the end user’s perspective a ‘good’ dataset is one that is easy to use. Its documentation is clear and easy to understand, the data contain no surprises, and users are able to access the dataset with relatively little startup time”
Extracted from the ‘Guide to Social Science Data Preparation and Archiving’ (ICPSR): http://www.icpsr.umich.edu/access/dataprep.pdf

Why document data?
The data documentation, or metadata, helps the researcher:
• Find the data they are interested in
• Understand how the data have been created
• Assess the quality of the data (e.g. standards used)
It also:
• Enables users to understand and interpret the data
• Ensures informed and correct use of the data
• Reduces the chance of incorrect use or misinterpretation

What should be provided?
• Explanatory material – information essential to the informed use of the dataset
• Contextual information – material about the context in which the data were collected and the uses to which the data were put
• Cataloguing information – used to create a formal catalogue record or study description for the study

Explanatory information
• Information about the data collection process and methods, e.g. instruments used, methods used and how they were developed, sampling design
• Information about the structure of the dataset, e.g. files, cases, relationships between files or records within a study
• Technical information, e.g. computer system used, software packages used to create files
• Variables and values, coding and classification schemes, e.g. full details of the variables and coding frames used
• Information about derived variables, e.g. full details on how these were created

Explanatory information (cont.)
• Weighting and grossing
• Data source, e.g. details about the source the data were derived from
• Confidentiality and anonymisation, e.g. whether the data contain confidential information on individuals
• Validation and other checks

Contextual information
• Description of the originating project, e.g. the aims and objectives of the project, who or what was being studied, geographical and temporal coverage
• Provenance of the dataset, e.g. the history of the data collection process, details of data errors, bibliographic references to reports or publications based on the study
• Serial and time-series datasets – it is useful to have details of changes in question text, variable labels etc. over time

Using Data Documentation

Using data documentation
Example: the UK Data Archive uses data documentation to create:
• Catalogue records for datasets
• User guides for datasets
• Data listings
• Nesstar datasets

UK Data Archive catalogue records
Information taken from:
• Study documentation
• Series information
• Data deposit forms – fields include title, principal investigator, sponsors, data collectors, dates of data collection, temporal and geographic coverage

Creating Survey Catalogue Records

Survey catalogue records
• Used for retrieval purposes: controlled vocabularies provide a means for consistent retrieval
• Information can be searched using a free-text search
• Catalogue records should give users enough information to decide whether the data are suitable for their needs
• Used for administrative purposes, e.g. they provide information on the provenance of a dataset

Catalogue records contain…
• A description of the data – abstract, geographical and temporal coverage, population, variable labels and values
• A list of subject keywords
• Bibliographic information – principal investigator, sponsor
• Information on how the data were collected – methodology
• How to reference the data – citation
• Who owns the data – copyright
• Who can use the data – access conditions
• Where to get the data – distributor

Catalogue records also contain…
• Information on how to use the data, e.g. weighting details
• Lists of publications by the principal investigators and publications resulting from secondary analysis
• Links to related datasets, publications, related web sites, documentation
• When the data are available – new editions, frequency of release

Catalogue records
The catalogue record should adhere to standards and rules to:
• Ensure consistency, accuracy and continuity
• Allow for consistent retrieval
• Enable interoperability between systems

Example: UK Data Archive controlled vocabularies (dynamic)
• Names authority lists – AACR2 (Anglo-American Cataloguing Rules, Second Edition, 1978); NCA (National Council on Archives) Rules for the Construction of Personal, Place and Corporate Names (1997)
• Subject keywords – HASSET (Humanities and Social Sciences Electronic Thesaurus) (British Standard Guide to establishment and development of monolingual thesauri – BS 5723, ISO 2788)

HASSET thesaurus
The HASSET thesaurus contains approximately:
• 4,500 subject terms
• 3,270 synonyms
• 28,00 relationships (BT, NT, TT, RT: Broader, Narrower, Top and Related Terms)
• 2,730 geographic terms

HASSET terms

Controlled vocabularies (fixed)
• Subject categories – UK Data Archive in-house schema
• Elements describing the methodology, e.g. method of data collection, sampling

International considerations
Standardisation at an international level:
• Controlled vocabularies for methodology fields – work in progress within the DDI group and CESSDA
• Subject categories – the UKDA scheme is mapped to the CESSDA Top Classification
• Thesaurus – ELSST (European Language Social Science Thesaurus) (3,209 terms)

What can we use to organise all the information we have?
DDI and the Metadata Editor

The DDI

Introduction to the DDI
• Development of the Data Documentation Initiative (DDI) was initially supported by ICPSR and then by a grant from the National Science Foundation (NSF)
• An international committee was set up, which produced a Document Type Definition (DTD) for the ‘mark-up’ of what were originally known as ‘social science codebooks’
• This DTD uses the eXtensible Markup Language (XML) and is used within the Nesstar system and the Metadata Editor

The DDI (versions 1 & 2)
There are five main sections of the DDI:
1. Document Description: items describing the marked-up document itself as well as its source documents
2. Study Description: items describing the overall data collection (e.g. title, citation, methodology, study scope, data access)
3. Data Files Description: items relating to the format, size and structure of the data files
4. Variables Description: items relating to the variables in the data collection
5. Other Study-Related Materials: other study-related material not included in the other sections (e.g. bibliography, separate questionnaire files)
Further information can be found at: http://www.ddialliance.org/

DDI XML example – stdyDscr
<stdyDscr>
  <citation>
    <titlStmt>
      <titl> Demo: Demonstration dataset </titl>
      <IDNo> demo </IDNo>
    </titlStmt>
    <rspStmt>
      <AuthEnty affiliation="UK Data Archive"> Ward, M. </AuthEnty>
      <AuthEnty affiliation="UK Data Archive"> Eastaugh, K. </AuthEnty>
    </rspStmt>

DDI XML example – variable
<var ID="V12" name="gender" files="F1" dcml="0" intrvl="discrete">
  <location width="1" RecSegNo="1"/>
  <labl> Gender </labl>
  <qstn>
    <qstnLit> Sex of respondent? </qstnLit>
    <ivuInstr> Record respondent’s sex </ivuInstr>
  </qstn>
  <catgry>
    <catValu> 1 </catValu>
    <catStat type="freq"> 235 </catStat>
    . .
  <varFormat type="numeric" schema="other"/>
</var>

DDI users
• Australian Social Science Data Archive
• Canadian Research Data Centres (CRDCs)
• CESSDA Data Portal
• The Dataverse Network
• European Social Survey (ESS)
• Gallup Europe
• ICPSR data catalogue
• MIDUS II – Midlife in the US: A national study of health and well-being
• The Tromsø Study – to determine the reasons for the high mortality rate in Norway
• International Household Survey Network
• Nesstar
Links available from: http://www.ddialliance.org/ddi-at-work/projects

The Metadata Editor

Metadata Editor standards
• DDI (http://www.ddialliance.org/): “Enables the effective, efficient and accurate use” of data resources
• Dublin Core (http://dublincore.org/), with fifteen elements: “A standard for cross-domain information resource description”

Metadata Editor templates
• Metadata are added using templates
• Use templates to create individual sets of DDI fields
• Controlled vocabulary lists and default text can be added
• Template fields can be renamed, e.g. to use familiar terms

Advantages of using templates
• Templates can be created to suit the individual needs of an organisation or a data series
• Use of standard templates ensures consistent use of metadata fields
• Helpful information about each field can be added to assist the data publisher

Import/Export metadata
Metadata can be imported and exported using the Metadata Editor’s ‘Documentation’ menu options:
• Import from Study: import the metadata from an existing ‘Nesstar’ file, selecting the fields to import
• Import from DDI: import from an existing XML file
• Export DDI: export metadata to a new XML file

Import/Export data
Various formats are available for both import and export, including:
• SPSS portable and .sav
• Stata
• Delimited text, e.g. csv, tab
• Nesstar/NSDstat

Study-level metadata
• Information about the study
• Basic information needed, e.g. title, unique ID, abstract
• Other information could include: primary investigator, distributor, version, copyright details
• Consider use of: keywords, topic classification
• Related information – related studies, related publications etc.
• Other materials – links to useful resources

Variable-level metadata
• Variable labels can easily be added/edited
• Category labels can easily be added/edited
• Identify ‘weight’ variables
• Add question text and variable notes:
  – to each variable separately
  – to a block of variables
• Variable notes, e.g. how the variable was derived

Data manipulation
• View the data as a matrix allowing direct data entry or editing
• Cut and paste data
• Add, insert and copy variables of different types, e.g. numeric, fixed string, dynamic string, date
• Insert/replace data – insert a data matrix from a dataset, or fixed-format text
• Delete variables
• Sort/delete cases
• Convert between variable types

Variable groups
• Used to organise data into specific categories, e.g. variables that relate to the same topic or theme
• A hierarchy of groups can be created, e.g. topics within a ‘Self-completion’ section
• Variables can belong to more than one group
• Groups are ‘virtual’ – variables are not moved within the file
• Groups can be arranged in any order
• Information about a group can be added, e.g. a group definition
Advantages:
• Make it easier for end users to navigate the dataset
• Reduce the load time of a dataset when published

Support for relational datasets
• Related, hierarchical datasets are supported
• Use the ‘Key Variables & Relations’ section within a dataset to describe the relationship between files
• Add the related dataset names
• Add the key variables used to link the files

External resources
• External resources include PDF files, ‘Word’ files, or the URL of an associated resource
• Within the Metadata Editor they can be described and published as ‘external’ resources
• Dublin Core fields are used for their metadata
• These ‘external’ resources can then be viewed alongside the survey data

Using the Metadata Editor
Creating a survey catalogue record:
• Import data file
• Add study-level metadata
• Add variable-level metadata
• Check data/labels
• Create variable groups
• Save file

Review
• Good metadata enables easy discovery of data
• Good data documentation leads to informed re-use of data
• Provide meaningful information (titles, descriptions, abstract, keywords) in the catalogue record

Metadata Editor demonstration
• Importing data
• Adding study metadata
• Adding variable metadata
• Creating variable groups
• Using the template editor – metadata fields

Further information
http://www.surveynetwork.org/ (follow the link to the Microdata Management Toolkit – Tools and guidelines)
http://www.ddialliance.org/ – DDI
http://www.data-archive.ac.uk/ – UK Data Archive
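The HASSET slide lists four kinds of thesaurus relationship: broader (BT), narrower (NT), top (TT) and related (RT) terms. The Python below is a minimal sketch of how those relationships fit together, assuming only BT links and RT pairs are stored: NT is then the inverse of BT, and TT is found by following BT links upwards. The terms are made-up illustrations, not HASSET content, and the function names are hypothetical.

```python
from collections import defaultdict

# Illustrative fragment only: these terms and links are NOT taken from HASSET.
# Each entry maps a term to its broader term (BT).
BROADER = {
    "PART-TIME EMPLOYMENT": "EMPLOYMENT",
    "FULL-TIME EMPLOYMENT": "EMPLOYMENT",
    "EMPLOYMENT": "LABOUR",
}
# Related-term (RT) pairs; RT is treated as symmetric.
RELATED = {("EMPLOYMENT", "UNEMPLOYMENT")}


def narrower_terms(broader):
    """NT is the inverse of BT: NT(x) is every term whose BT is x."""
    nt = defaultdict(set)
    for term, parent in broader.items():
        nt[parent].add(term)
    return nt


def top_term(term, broader):
    """TT: follow BT links upwards until a term with no broader term is reached."""
    seen = set()
    while term in broader:
        if term in seen:
            raise ValueError(f"cycle in BT links at {term!r}")
        seen.add(term)
        term = broader[term]
    return term


def related_terms(term, related):
    """Return RT links for a term, looking each pair up in both directions."""
    return {b for a, b in related if a == term} | {a for a, b in related if b == term}


if __name__ == "__main__":
    print(sorted(narrower_terms(BROADER)["EMPLOYMENT"]))
    print(top_term("PART-TIME EMPLOYMENT", BROADER))
    print(related_terms("UNEMPLOYMENT", RELATED))
```

Storing only one direction of each hierarchical link and deriving the other keeps BT and NT from drifting out of step when terms are edited.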
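To show how the study description (stdyDscr) example above can be read by software, here is a minimal sketch using Python's standard xml.etree.ElementTree module. It assumes the namespace-free fragment shown on the slide, closed off with </citation> and </stdyDscr> so that it parses. Real DDI 2.x files normally wrap stdyDscr in a codeBook element and may declare an XML namespace, in which case the search paths would need namespace qualifiers. The function name summarise_study is hypothetical.

```python
import xml.etree.ElementTree as ET

# The slide's stdyDscr fragment, closed off so that it is well-formed XML.
# Assumption: no XML namespace, exactly as shown on the slide.
STUDY_XML = """
<stdyDscr>
  <citation>
    <titlStmt>
      <titl>Demo: Demonstration dataset</titl>
      <IDNo>demo</IDNo>
    </titlStmt>
    <rspStmt>
      <AuthEnty affiliation="UK Data Archive">Ward, M.</AuthEnty>
      <AuthEnty affiliation="UK Data Archive">Eastaugh, K.</AuthEnty>
    </rspStmt>
  </citation>
</stdyDscr>
"""


def summarise_study(xml_text):
    """Return the title, study ID and (author, affiliation) pairs."""
    root = ET.fromstring(xml_text.strip())
    citation = root.find("citation")
    title = citation.findtext("titlStmt/titl", default="").strip()
    study_id = citation.findtext("titlStmt/IDNo", default="").strip()
    authors = [
        (entity.text.strip(), entity.get("affiliation", ""))
        for entity in citation.findall("rspStmt/AuthEnty")
    ]
    return {"title": title, "id": study_id, "authors": authors}


if __name__ == "__main__":
    print(summarise_study(STUDY_XML))
```

The same title, ID and author fields are the ones a catalogue record draws on, which is why marking them up consistently matters.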
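The variable example above carries the variable-level metadata discussed later in the deck: name, label, question text, interviewer instruction, category codes and frequencies. The sketch below pulls those out with xml.etree.ElementTree. The slide elides part of the category block, so here the catgry element is simply closed after its frequency and nothing else is added. The function name describe_variable is hypothetical.

```python
import xml.etree.ElementTree as ET

# The <var> example from the slide. The slide elides part of the category
# block, so <catgry> is closed right after its frequency statistic.
VAR_XML = """
<var ID="V12" name="gender" files="F1" dcml="0" intrvl="discrete">
  <location width="1" RecSegNo="1"/>
  <labl>Gender</labl>
  <qstn>
    <qstnLit>Sex of respondent?</qstnLit>
    <ivuInstr>Record respondent's sex</ivuInstr>
  </qstn>
  <catgry>
    <catValu>1</catValu>
    <catStat type="freq">235</catStat>
  </catgry>
  <varFormat type="numeric" schema="other"/>
</var>
"""


def describe_variable(xml_text):
    """Collect the variable-level metadata a codebook listing would show."""
    var = ET.fromstring(xml_text.strip())
    categories = []
    for cat in var.findall("catgry"):
        value = cat.findtext("catValu", default="").strip()
        # ElementTree supports simple [@attr='value'] predicates in paths.
        freq_el = cat.find("catStat[@type='freq']")
        freq = int(freq_el.text) if freq_el is not None else None
        categories.append((value, freq))
    return {
        "id": var.get("ID"),
        "name": var.get("name"),
        "label": var.findtext("labl", default="").strip(),
        "question": var.findtext("qstn/qstnLit", default="").strip(),
        "instruction": var.findtext("qstn/ivuInstr", default="").strip(),
        "width": int(var.find("location").get("width")),
        "format": var.find("varFormat").get("type"),
        "categories": categories,
    }


if __name__ == "__main__":
    info = describe_variable(VAR_XML)
    print(f"{info['name']} ({info['label']}): {info['question']}")
    for value, freq in info["categories"]:
        print(f"  code {value}: n = {freq}")
```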
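The relational-datasets slide says that key variables are recorded so that related files can be linked. The Python below sketches what such a key does when two hierarchical files are joined: each person record is attached to its household record through a shared key. This is a plain-Python illustration, not the Metadata Editor's own mechanism. The key name hhid, the toy rows and the function link_on_key are all hypothetical.

```python
def link_on_key(parent_rows, child_rows, key):
    """Attach each child record to its parent record via a shared key variable.

    Raises ValueError if the key is duplicated in the parent file, or if a
    child refers to a key value that the parent file does not contain.
    """
    parents = {}
    for row in parent_rows:
        if row[key] in parents:
            raise ValueError(f"duplicate key {row[key]!r} in parent file")
        parents[row[key]] = row

    linked = []
    for child in child_rows:
        parent = parents.get(child[key])
        if parent is None:
            raise ValueError(f"no parent record for key {child[key]!r}")
        # Child values win if both files happen to use the same variable name.
        linked.append({**parent, **child})
    return linked


# Hypothetical toy files: one household-level file and one person-level file.
households = [
    {"hhid": 1, "tenure": "owned"},
    {"hhid": 2, "tenure": "rented"},
]
persons = [
    {"hhid": 1, "pid": 1, "age": 34},
    {"hhid": 1, "pid": 2, "age": 36},
    {"hhid": 2, "pid": 1, "age": 71},
]

if __name__ == "__main__":
    for record in link_on_key(households, persons, "hhid"):
        print(record)
```

Raising on duplicate or orphaned keys, rather than silently dropping rows, surfaces exactly the kind of structural problem that documenting the relationship between files is meant to prevent.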
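The external-resources slide notes that documents such as PDF questionnaires are described with Dublin Core fields. As a rough sketch, the Python below builds a simple Dublin Core XML record using the Dublin Core element namespace (http://purl.org/dc/elements/1.1/) and rejects anything outside the fifteen elements. The wrapper element name resource, the function dublin_core_record and the example values are assumptions for illustration; this is not the format the Metadata Editor writes internally.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)

# The fifteen elements of the Dublin Core Metadata Element Set.
DC_ELEMENTS = {
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
}


def dublin_core_record(fields):
    """Build a simple Dublin Core XML record from {element name: value}."""
    root = ET.Element("resource")  # hypothetical wrapper element
    for name, value in fields.items():
        if name not in DC_ELEMENTS:
            raise ValueError(f"{name!r} is not one of the 15 Dublin Core elements")
        ET.SubElement(root, f"{{{DC_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical external resource attached to the demo dataset.
    print(dublin_core_record({
        "title": "Demo questionnaire",
        "format": "application/pdf",
        "type": "Text",
    }))
```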