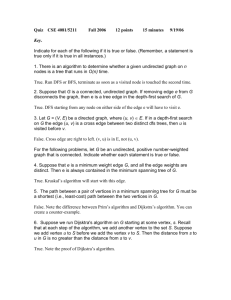

Ch. 9: Graphs - SIUE Computer Science


Chapter 9: Graph Algorithms

• Graph ADT

• Topological Sort

• Shortest Path

• Maximum Flow

• Minimum Spanning Tree

• Depth-First Search

• Undecidability

• NP-Completeness

CS 340


The Graph Abstract Data Type

A graph G = (V, E) consists of a set of vertices, V, and a set of edges, E, each of which is a pair of vertices. If the edges are ordered pairs of vertices, then the graph is directed.

[Figure: five example graphs: an undirected, unweighted, unconnected, loopless graph (length of longest simple path: 2); a directed, unweighted, acyclic, weakly connected, loopless graph (length of longest simple path: 6); an undirected, unweighted, connected graph with loops (length of longest simple path: 4); a directed, unweighted, cyclic, strongly connected, loopless graph (length of longest simple cycle: 7); and a directed, weighted, cyclic, weakly connected, loopless graph (weight of longest simple cycle: 27).]


Adjacency Matrix

[Figure: several of the example graphs shown with their adjacency matrices, with rows and columns labeled by vertex (A through H).]

The Problem: Most graphs are sparse (i.e., most vertex pairs are not edges), so the memory requirement is excessive: Θ(V²).
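As an illustration (a minimal sketch, not code from the slides), an adjacency matrix for vertices numbered 0 through V−1 can be stored as one V × V array of weights. The class and method names are hypothetical, and 0 is assumed to mean "no edge". However few edges the graph has, the array always holds V² entries.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical adjacency-matrix graph on vertices 0 .. n-1.
// Entry [u][v] holds the weight of edge (u, v); 0 is assumed to mean "no edge".
class AdjacencyMatrix {
public:
    explicit AdjacencyMatrix(std::size_t numVertices)
        : n_(numVertices), cells_(numVertices * numVertices, 0) {}

    // Adds directed edge (u, v); add (v, u) as well for an undirected graph.
    void addEdge(std::size_t u, std::size_t v, int weight = 1) {
        cells_[u * n_ + v] = weight;
    }

    bool hasEdge(std::size_t u, std::size_t v) const { return cells_[u * n_ + v] != 0; }
    int weight(std::size_t u, std::size_t v) const { return cells_[u * n_ + v]; }

    // Always V * V entries, no matter how many edges exist.
    std::size_t storedEntries() const { return cells_.size(); }

private:
    std::size_t n_;
    std::vector<int> cells_;  // row-major V x V array
};
```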


Adjacency Lists

[Figure: the example graphs represented with adjacency lists: each vertex heads a list of its adjacent vertices, with edge weights included for the weighted graph.]
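For comparison, here is a minimal adjacency-list sketch, assuming vertices numbered 0 through V−1 and using std::vector in place of linked lists; the type and method names are hypothetical. Memory grows in proportion to V + E rather than V², which suits sparse graphs.

```cpp
#include <cstddef>
#include <vector>

// One entry in a vertex's adjacency list (hypothetical record type).
struct Edge {
    std::size_t to;  // adjacent vertex
    int weight;      // edge weight (1 for unweighted graphs)
};

// Hypothetical adjacency-list graph: lists_[u] holds the edges leaving u.
class AdjacencyList {
public:
    explicit AdjacencyList(std::size_t numVertices) : lists_(numVertices) {}

    // Adds directed edge (u, v); add (v, u) as well for an undirected graph.
    void addEdge(std::size_t u, std::size_t v, int weight = 1) {
        lists_[u].push_back({v, weight});
    }

    const std::vector<Edge>& neighbors(std::size_t u) const { return lists_[u]; }
    std::size_t vertexCount() const { return lists_.size(); }

    // Total number of stored list entries, i.e., the number of directed edges.
    std::size_t edgeCount() const {
        std::size_t total = 0;
        for (const auto& list : lists_) total += list.size();
        return total;
    }

private:
    std::vector<std::vector<Edge>> lists_;
};
```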


Topological Sort

A topological sort of an acyclic directed graph orders the vertices so that if there is a path from vertex u to vertex v, then vertex v appears after vertex u in the ordering.

[Figure: a course prerequisite graph of CS, ECE, MATH, and STAT courses.]

One topological sort of the course prerequisite graph: CS 111, MATH 120, CS 140, MATH 125, CS 150, MATH 224, CS 234, CS 240, ECE 282, CS 312, CS 325, MATH 150, MATH 152, STAT 380, CS 321, MATH 250, MATH 321, CS 314, MATH 423, CS 340, CS 425, ECE 381, CS 434, ECE 482, CS 330, CS 382, CS 423, CS 438, CS 454, CS 447, CS 499, CS 482, CS 456, ECE 483.

Topological Sort Algorithm

Place all indegree-zero vertices in a list.

While the list is non-empty:
– Output an element v in the indegree-zero list.
– For each vertex w with (v, w) in the edge set E:
    Decrement the indegree of w by one.
    Place w in the indegree-zero list if its new indegree is 0.

[Figure: a directed acyclic graph with vertices A through I, traced step by step with a table of each vertex's current indegree and the output produced so far.]
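The algorithm above can be sketched in C++ as follows, assuming vertices numbered 0 through n−1 and a hypothetical adjacency-list vector adj. A std::queue plays the role of the indegree-zero list; since each vertex and edge is processed once, the sketch runs in O(E+V) time. If the graph has a cycle, some vertices never reach indegree 0, and this version signals that by returning an empty ordering (a convention chosen here, not taken from the slides).

```cpp
#include <queue>
#include <vector>

// Topological sort by the indegree-zero list method.
// adj[v] lists every w with (v, w) in E (hypothetical representation).
// Returns the vertices in topological order, or an empty vector if the
// graph has a cycle.
std::vector<int> topologicalSort(const std::vector<std::vector<int>>& adj) {
    const int n = static_cast<int>(adj.size());
    std::vector<int> indegree(n, 0);
    for (const auto& targets : adj)
        for (int w : targets) ++indegree[w];

    std::queue<int> zero;  // the indegree-zero list
    for (int v = 0; v < n; ++v)
        if (indegree[v] == 0) zero.push(v);

    std::vector<int> order;
    while (!zero.empty()) {
        int v = zero.front();
        zero.pop();
        order.push_back(v);          // output v
        for (int w : adj[v])
            if (--indegree[w] == 0)  // decrement, then check for a new zero
                zero.push(w);
    }
    if (static_cast<int>(order.size()) != n) return {};  // cycle detected
    return order;
}
```

Any vertex in the indegree-zero list may be output next, so a stack instead of a queue would produce a different, equally valid topological sort.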


Shortest Paths

The shortest path between two locations depends on the function that is used to measure a path’s “cost”.

Does the path “cost” less if the total distance traveled is minimized?

Does the path “cost” less if the amount of traffic encountered is minimized?

Does the path “cost” less if the amount of boring scenery is minimized?

In general, then, how do you find the shortest path from a specified vertex to every other vertex in a directed graph?


Shortest Path Algorithm: Unweighted Graphs

Use a breadth-first search, i.e., starting at the specified vertex, mark each adjacent vertex with its distance and its predecessor, then continue outward from the marked vertices in the order in which they were marked, until all reachable vertices are marked. Algorithm’s time complexity: O(E+V).

[Figure: step-by-step trace on a directed graph with vertices A through I, starting at A; each marked vertex is labeled (distance, predecessor), with A labeled (0,-).]
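A breadth-first sketch of the unweighted algorithm, assuming vertices numbered 0 through n−1 and a hypothetical adjacency-list input. It records the same (distance, predecessor) labels used in the trace, with −1 standing in for "-" and for vertices that are never marked.

```cpp
#include <queue>
#include <utility>
#include <vector>

// Unweighted single-source shortest paths by breadth-first search.
// Returns a (distance, predecessor) pair per vertex; unreachable vertices
// keep distance -1, and the start vertex has predecessor -1, like (0,-).
std::vector<std::pair<int, int>> bfsShortestPaths(
        const std::vector<std::vector<int>>& adj, int start) {
    std::vector<std::pair<int, int>> label(adj.size(), std::make_pair(-1, -1));
    std::queue<int> frontier;  // marked vertices whose neighbors are still unchecked
    label[start] = std::make_pair(0, -1);
    frontier.push(start);
    while (!frontier.empty()) {
        int v = frontier.front();
        frontier.pop();
        for (int w : adj[v]) {
            if (label[w].first == -1) {  // not yet marked
                label[w] = std::make_pair(label[v].first + 1, v);
                frontier.push(w);
            }
        }
    }
    return label;
}
```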

Shortest Path Algorithm: Weighted Graphs With No Negative Weights

Use Dijkstra’s Algorithm, i.e., starting at the specified vertex, finalize the unfinalized vertex whose current cost is minimal, and update each vertex adjacent to the finalized vertex with its (possibly revised) cost and predecessor, until all vertices are finalized. Algorithm’s time complexity: O(E+V²) = O(V²).

[Figure: step-by-step trace of Dijkstra’s Algorithm on a weighted directed graph with vertices A through I, starting at A; each vertex is labeled (cost, predecessor), with unreached vertices labeled (∞,-).]

Note that this algorithm would not work for graphs with negative weights, since a vertex cannot be finalized while some negative weight elsewhere in the graph might still reduce that vertex’s path cost.
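A sketch of the O(V²) version described above, assuming vertices numbered 0 through n−1, a hypothetical WeightedEdge list per vertex, and non-negative integer weights. Each round scans all vertices for the unfinalized one of minimal cost; INT_MAX stands in for ∞.

```cpp
#include <climits>
#include <vector>

struct WeightedEdge { int to; int weight; };  // hypothetical edge record

// Dijkstra's Algorithm, O(V^2) form. Requires non-negative weights.
// On return, dist[v] is INT_MAX for unreachable vertices, and pred[v] is -1
// for the start vertex and for unreachable vertices.
void dijkstra(const std::vector<std::vector<WeightedEdge>>& adj, int start,
              std::vector<int>& dist, std::vector<int>& pred) {
    const int n = static_cast<int>(adj.size());
    dist.assign(n, INT_MAX);
    pred.assign(n, -1);
    std::vector<bool> finalized(n, false);
    dist[start] = 0;
    for (int round = 0; round < n; ++round) {
        int v = -1;  // unfinalized vertex with minimal current cost
        for (int u = 0; u < n; ++u)
            if (!finalized[u] && dist[u] != INT_MAX && (v == -1 || dist[u] < dist[v]))
                v = u;
        if (v == -1) break;  // every remaining vertex is unreachable
        finalized[v] = true;
        for (const WeightedEdge& e : adj[v]) {
            if (!finalized[e.to] && dist[v] + e.weight < dist[e.to]) {
                dist[e.to] = dist[v] + e.weight;  // revised cost
                pred[e.to] = v;                   // revised predecessor
            }
        }
    }
}
```

Replacing the linear scan with a binary heap gives O(E log V) time, which is preferable for sparse graphs.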

Shortest Path Algorithm: Weighted Graphs With Negative Weights

Use a variation of Dijkstra’s Algorithm without the concept of vertex finalization, i.e., place the specified vertex in a queue; then repeatedly remove a vertex from the queue and update each vertex adjacent to it with its (possibly revised) cost and predecessor, placing each revised vertex in the queue. Continue until the queue is empty. Algorithm’s time complexity: O(EV).

[Figure: step-by-step trace on a weighted directed graph with vertices A through I, some of whose edge weights are negative, starting at A; each vertex is labeled (cost, predecessor).]

Note that this algorithm would not work for graphs with negative-cost cycles. For example, if edge IH in the above example had cost -5 instead of cost -3, then the algorithm would loop indefinitely.
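A sketch of the queue-based variation, assuming vertices numbered 0 through n−1, a hypothetical WeightedEdge list per vertex, and INT_MAX for ∞. Two details are additions not spelled out on the slide: a vertex already waiting in the queue is not added a second time, and the loop stops with false once any vertex has been dequeued more than n times (which can only happen when a negative-cost cycle is reachable) instead of looping indefinitely.

```cpp
#include <climits>
#include <queue>
#include <vector>

struct WeightedEdge { int to; int weight; };  // hypothetical edge record

// Shortest paths allowing negative weights; no vertex is ever finalized.
// Returns false if a reachable negative-cost cycle is detected.
bool shortestPathsNegative(const std::vector<std::vector<WeightedEdge>>& adj, int start,
                           std::vector<int>& dist, std::vector<int>& pred) {
    const int n = static_cast<int>(adj.size());
    dist.assign(n, INT_MAX);
    pred.assign(n, -1);
    std::vector<bool> inQueue(n, false);
    std::vector<int> dequeues(n, 0);
    std::queue<int> q;
    dist[start] = 0;
    q.push(start);
    inQueue[start] = true;
    while (!q.empty()) {
        int v = q.front();
        q.pop();
        inQueue[v] = false;
        if (++dequeues[v] > n) return false;  // negative-cost cycle
        for (const WeightedEdge& e : adj[v]) {
            if (dist[v] + e.weight < dist[e.to]) {
                dist[e.to] = dist[v] + e.weight;  // revised cost
                pred[e.to] = v;                   // revised predecessor
                if (!inQueue[e.to]) {             // queue each revised vertex once
                    q.push(e.to);
                    inQueue[e.to] = true;
                }
            }
        }
    }
    return true;
}
```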


Maximum Flow

Assume that the directed graph G = (V, E) has a capacity assigned to each edge, and that two vertices s and t have been designated the source and sink nodes, respectively.

We wish to maximize the “flow” from s to t by determining how much of each edge’s capacity can be used so that at each vertex other than s and t, the total incoming flow equals the total outgoing flow.

This problem relates to such practical applications as Internet routing and automobile traffic control.

[Figure: a directed graph with vertices A through I and a capacity on each edge.]

In this graph, for instance, the total of the incoming capacities for node C is 40, while the total of the outgoing capacities for node C is 35. The maximum flow for this graph will therefore have to “waste” some of the incoming capacity at node C.

Conversely, node B’s outgoing capacity exceeds its incoming capacity by 5, so some of its outgoing capacity will have to be “wasted”.

Maximum Flow Algorithm

To find a maximum flow, keep track of a flow graph and a residual graph, which together record which paths have been added to the flow.

Keep choosing paths from s to t in the residual graph which yield maximal increases to the flow; add these paths to the flow graph, subtract them from the residual graph, and add their reverse to the residual graph.

[Figure: the original graph, the flow graph, and the residual graph, shown after each augmenting path is added.]

Thus, the maximum flow for the graph is 76.
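The procedure can be sketched as follows, assuming vertices numbered 0 through V−1, integer capacities in a V × V matrix, s ≠ t, and a hypothetical function name. Each round finds the residual path whose smallest residual capacity is largest (a Dijkstra-like "widest path" scan), which is the path giving the maximal increase to the flow; it then subtracts that amount along the path and adds it to the reverse edges. For brevity, only the residual graph and the running flow total are kept, not a separate flow graph.

```cpp
#include <algorithm>
#include <climits>
#include <vector>

// Hypothetical maximum-flow sketch: cap is a V x V capacity matrix
// (cap[a][b] == 0 means no edge), copied and then used as the residual graph.
// Assumes s != t. Returns the value of the maximum flow from s to t.
int maxFlow(std::vector<std::vector<int>> cap, int s, int t) {
    const int n = static_cast<int>(cap.size());
    int total = 0;
    while (true) {
        // Widest-path scan: width[v] = largest bottleneck found so far on a
        // residual path from s to v; pred[v] = previous vertex on that path.
        std::vector<int> width(n, 0), pred(n, -1);
        std::vector<bool> done(n, false);
        width[s] = INT_MAX;
        while (true) {
            int v = -1;
            for (int u = 0; u < n; ++u)
                if (!done[u] && width[u] > 0 && (v == -1 || width[u] > width[v])) v = u;
            if (v == -1 || v == t) break;  // t's widest path is known, or t is unreachable
            done[v] = true;
            for (int w = 0; w < n; ++w) {
                int through = std::min(width[v], cap[v][w]);
                if (!done[w] && through > width[w]) { width[w] = through; pred[w] = v; }
            }
        }
        if (width[t] == 0) break;         // no augmenting path remains
        const int increase = width[t];    // maximal increase available this round
        for (int w = t; w != s; w = pred[w]) {
            cap[pred[w]][w] -= increase;  // subtract the path from the residual graph
            cap[w][pred[w]] += increase;  // add its reverse to the residual graph
        }
        total += increase;
    }
    return total;
}
```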


Minimum Spanning Tree: Kruskal’s Algorithm

When a large set of vertices must be interconnected (i.e., “spanning”) and there are several possible means of doing so, redundancy can be eliminated (i.e., “tree”) and costs can be reduced (i.e., “minimum”) by employing a minimum spanning tree.

Kruskal’s Algorithm accomplishes this by repeatedly selecting the minimum-cost remaining edge and keeping it as long as it does not form a cycle with the edges already selected.

[Figure: the original weighted undirected graph with vertices A through H, the step-by-step selection of edges by Kruskal’s Algorithm, and the resulting minimum spanning tree.]
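A minimal sketch of Kruskal’s Algorithm, assuming vertices numbered 0 through n−1 and a hypothetical Edge struct. Cycle detection uses a union-find (disjoint sets) structure, a standard technique the slides do not mention: an edge would form a cycle exactly when its endpoints are already in the same set. Sorting the edges takes O(E log E) = O(E log V) time.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

struct Edge { int u, v, cost; };  // hypothetical undirected edge record

// Union-find over vertices 0 .. n-1: find() returns a set's representative.
struct DisjointSets {
    std::vector<int> parent;
    explicit DisjointSets(int n) : parent(n) {
        std::iota(parent.begin(), parent.end(), 0);  // each vertex starts alone
    }
    int find(int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];  // path halving
            x = parent[x];
        }
        return x;
    }
    // Merges the sets containing a and b; returns false if already joined.
    bool unite(int a, int b) {
        a = find(a);
        b = find(b);
        if (a == b) return false;
        parent[a] = b;
        return true;
    }
};

// Kruskal's Algorithm: scan edges from cheapest to costliest, keeping each
// edge whose endpoints are not yet connected (so it cannot form a cycle).
std::vector<Edge> kruskal(int numVertices, std::vector<Edge> edges) {
    std::sort(edges.begin(), edges.end(),
              [](const Edge& a, const Edge& b) { return a.cost < b.cost; });
    DisjointSets sets(numVertices);
    std::vector<Edge> tree;
    for (const Edge& e : edges) {
        if (static_cast<int>(tree.size()) == numVertices - 1) break;  // tree complete
        if (sets.unite(e.u, e.v)) tree.push_back(e);
    }
    return tree;
}
```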


Minimum Spanning Tree: Prim’s Algorithm

An alternative to Kruskal’s Algorithm is Prim’s Algorithm, a variation of Dijkstra’s Algorithm that starts with a minimum-cost edge and builds a single tree by repeatedly adding the minimum-cost edge that connects a vertex in the tree to a vertex not yet in the tree (so no cycle can form). Like Kruskal’s Algorithm, Prim’s Algorithm is O(E log V) when implemented with a binary heap.

[Figure: the original weighted undirected graph with vertices A through H, the step-by-step growth of the tree by Prim’s Algorithm, and the resulting minimum spanning tree.]
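A sketch of Prim’s Algorithm with a binary heap (std::priority_queue), assuming a connected undirected graph given as hypothetical (neighbor, cost) adjacency lists on vertices 0 through n−1. Unlike the slide’s description, this version starts from vertex 0 rather than from a minimum-cost edge; either start yields a minimum spanning tree. Stale heap entries for vertices already in the tree are skipped when popped.

```cpp
#include <functional>
#include <queue>
#include <tuple>
#include <utility>
#include <vector>

// Prim's Algorithm, O(E log V). adj[v] holds (neighbor, cost) pairs.
// Returns pred[], where (pred[v], v) is a tree edge and pred[0] == -1;
// the tree's total cost is stored in totalCost.
std::vector<int> prim(const std::vector<std::vector<std::pair<int, int>>>& adj,
                      int& totalCost) {
    const int n = static_cast<int>(adj.size());
    std::vector<int> pred(n, -1);
    std::vector<bool> inTree(n, false);
    using Entry = std::tuple<int, int, int>;  // (edge cost, vertex, tree endpoint)
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> heap;
    totalCost = 0;
    heap.emplace(0, 0, -1);  // start the tree at vertex 0
    while (!heap.empty()) {
        auto [cost, v, from] = heap.top();
        heap.pop();
        if (inTree[v]) continue;  // stale entry: v was already added
        inTree[v] = true;         // cheapest edge from the tree to a new vertex
        pred[v] = from;
        totalCost += cost;
        for (const auto& [w, c] : adj[v])
            if (!inTree[w]) heap.emplace(c, w, v);
    }
    return pred;
}
```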

Depth-First Search

A convenient means to traverse a graph is to use a depth-first search, which recursively visits and marks the vertices until all of them have been traversed.

[Figure: an original graph with vertices A through H; its depth-first search (solid lines are part of the depth-first spanning tree; dashed lines are visits to previously marked vertices); and the resulting depth-first spanning tree.]

Such a traversal provides a means by which several significant features of a graph can be determined.
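A recursive depth-first search can be sketched as below, assuming vertices numbered 0 through n−1 and hypothetical adjacency lists. It records each vertex’s parent in the depth-first spanning tree and the order of visits, restarting from any still-unmarked vertex so that every vertex is traversed even if the graph is not connected.

```cpp
#include <vector>

// Visits v, marks it, then recursively visits each unmarked neighbor.
// Edges to unmarked neighbors become tree edges (parent[w] = v); edges to
// already-marked neighbors are visits to previously marked vertices.
void dfs(const std::vector<std::vector<int>>& adj, int v,
         std::vector<bool>& marked, std::vector<int>& parent,
         std::vector<int>& visitOrder) {
    marked[v] = true;
    visitOrder.push_back(v);
    for (int w : adj[v]) {
        if (!marked[w]) {
            parent[w] = v;  // tree edge
            dfs(adj, w, marked, parent, visitOrder);
        }
    }
}

// Traverses every vertex; parent[v] == -1 marks the root of each search tree.
std::vector<int> depthFirstTraversal(const std::vector<std::vector<int>>& adj,
                                     std::vector<int>& parent) {
    const int n = static_cast<int>(adj.size());
    std::vector<bool> marked(n, false);
    std::vector<int> visitOrder;
    parent.assign(n, -1);
    for (int v = 0; v < n; ++v)
        if (!marked[v]) dfs(adj, v, marked, parent, visitOrder);
    return visitOrder;
}
```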

Depth-First Search Application: Articulation Points

A vertex v in a graph G is called an articulation point if its removal from G would cause the graph to be disconnected.

Such vertices would be considered “critical” in applications like networks, where articulation points are the only means of communication between different portions of the network.

A depth-first search can be used to find all of a graph’s articulation points.

[Figure: the original graph with vertices A through J, and a depth-first search of it with each node labeled visit/low.]

In the depth-first search, nodes are numbered as they are visited. Each node is also marked with its “low” number: the lowest-numbered vertex reachable via zero or more tree edges, followed by at most one back edge.

The only articulation points are the root (if it has more than one child) and any other node v with a child whose “low” number is at least as large as v’s “visit” number. (In this example: nodes B, C, E, and G.)
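A sketch of the visit/low rule above for a connected undirected graph, assuming vertices numbered 0 through n−1 and a hypothetical ArticulationFinder class. After a child w’s subtree is searched, low[w] is folded into low[v]; a back edge to an already-visited vertex other than the parent lowers low[v] to that vertex’s visit number. The recursion depth can reach n, so very large graphs would need an iterative version.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical helper for a connected, undirected graph on vertices 0 .. n-1.
// visit[v]: order in which v is first reached (1, 2, 3, ...).
// low[v]:   lowest visit number reachable from v via zero or more tree edges
//           followed by at most one back edge.
class ArticulationFinder {
public:
    explicit ArticulationFinder(const std::vector<std::vector<int>>& adj)
        : adj_(adj), visit_(adj.size(), 0), low_(adj.size(), 0), isCut_(adj.size(), false) {}

    // Searches from root (call once per object); returns the articulation points.
    std::vector<int> find(int root) {
        visit_[root] = low_[root] = ++counter_;
        int rootChildren = 0;
        for (int w : adj_[root])
            if (visit_[w] == 0) { ++rootChildren; search(w, root); }
        isCut_[root] = rootChildren > 1;  // root rule: more than one child
        std::vector<int> points;
        for (int v = 0; v < static_cast<int>(adj_.size()); ++v)
            if (isCut_[v]) points.push_back(v);
        return points;
    }

private:
    void search(int v, int parent) {
        visit_[v] = low_[v] = ++counter_;
        for (int w : adj_[v]) {
            if (visit_[w] == 0) {                          // tree edge to child w
                search(w, v);
                low_[v] = std::min(low_[v], low_[w]);
                if (low_[w] >= visit_[v]) isCut_[v] = true;  // non-root rule
            } else if (w != parent) {                      // back edge
                low_[v] = std::min(low_[v], visit_[w]);
            }
        }
    }

    std::vector<std::vector<int>> adj_;
    std::vector<int> visit_, low_;
    std::vector<bool> isCut_;
    int counter_ = 0;
};
```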

Depth-First Search Application: Euler Circuits

An Euler circuit of a graph G is a cycle that visits every edge exactly once.

Such a cycle could be useful in applications like networks, where it could provide an efficient means of testing whether each network link is up.

A depth-first search can be used to find an Euler circuit of a graph, if one exists. (Note: An Euler circuit exists only if the graph is connected and every vertex has even degree.)

[Figure: the original graph with vertices A through J, and the graph remaining after removing each cycle found by successive depth-first searches: first BCDFB, then ABGHA, then FHIF, then FEJF.]

Splicing the first two cycles yields the cycle A(BCDFB)GHA.

Splicing this cycle with the third cycle yields ABCD(FHIF)BGHA.

Splicing this cycle with the fourth cycle yields ABCD(FEJF)HIFBGHA.

Note that this traversal takes O(E+V) time.
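A sketch of the cycle-splicing approach, assuming an undirected graph given as a hypothetical list of vertex pairs on vertices 0 through n−1. It uses the common stack-based formulation (often attributed to Hierholzer), which splices each newly found cycle into the circuit implicitly instead of recording the cycles separately; each edge is marked used once, so it runs in O(E+V) time. It assumes, without checking, that the graph is connected and every vertex has even degree.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Returns an Euler circuit as a vertex sequence that starts and ends at start.
std::vector<int> eulerCircuit(int numVertices, const std::vector<std::pair<int, int>>& edges,
                              int start) {
    std::vector<std::vector<std::pair<int, int>>> adj(numVertices);  // (neighbor, edge id)
    for (int id = 0; id < static_cast<int>(edges.size()); ++id) {
        adj[edges[id].first].push_back({edges[id].second, id});
        adj[edges[id].second].push_back({edges[id].first, id});
    }
    std::vector<bool> used(edges.size(), false);
    std::vector<std::size_t> next(numVertices, 0);  // next unexamined list entry
    std::vector<int> stack = {start}, circuit;
    while (!stack.empty()) {
        int v = stack.back();
        // Skip edges that already belong to some cycle.
        while (next[v] < adj[v].size() && used[adj[v][next[v]].second]) ++next[v];
        if (next[v] == adj[v].size()) {  // no unused edges left at v
            circuit.push_back(v);
            stack.pop_back();
        } else {                         // extend the current cycle
            auto [w, id] = adj[v][next[v]];
            used[id] = true;
            stack.push_back(w);
        }
    }
    std::reverse(circuit.begin(), circuit.end());
    return circuit;
}
```

For instance, numbering A through J as 0 through 9, the edges of the four cycles BCDFB, ABGHA, FHIF, and FEJF make up the example graph, and eulerCircuit(10, edges, 0) returns a 15-vertex sequence that starts and ends at A and uses each of those 14 edges exactly once (possibly in a different order from the spliced circuit above).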

Depth-First Search Application: Strong Components

A subgraph of a directed graph is a strong component of the graph if there is a path in the subgraph from every vertex in the subgraph to every other vertex in the subgraph, and no other vertex can be added while preserving this property (i.e., the subgraph is maximal).

In network applications, such a subgraph could be used to ensure intercommunication.

A depth-first search can be used to find the strong components of a graph.

[Figure: the original directed graph with vertices A through K.]

Number the vertices according to a depth-first postorder traversal, and reverse the edges.

Then perform a depth-first search of the revised graph, always starting each new search at the highest-numbered unvisited vertex.

[Figure: the reversed graph labeled with the postorder numbers 1 through 11, and the successive legs of the depth-first search.]

The trees in the final depth-first spanning forest form the set of strong components. In this example, the strong components are: { C }, { B, F, I, E }, { A }, { D, G, K, J }, and { H }.
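The steps above can be sketched as follows (this two-pass method is often called Kosaraju’s algorithm), assuming vertices numbered 0 through n−1 and hypothetical adjacency lists. Instead of storing postorder numbers explicitly, the sketch keeps the vertices in a list in postorder and walks that list backward, which visits them from highest number to lowest. The recursive lambdas need C++14 or later.

```cpp
#include <vector>

// Returns comp[v], the index of the strong component containing v.
std::vector<int> strongComponents(const std::vector<std::vector<int>>& adj) {
    const int n = static_cast<int>(adj.size());

    // Step 1: depth-first postorder; postorder[i] is the vertex numbered i + 1.
    std::vector<bool> seen(n, false);
    std::vector<int> postorder;
    auto number = [&](auto&& self, int v) -> void {
        seen[v] = true;
        for (int w : adj[v])
            if (!seen[w]) self(self, w);
        postorder.push_back(v);  // v is numbered after all its descendants
    };
    for (int v = 0; v < n; ++v)
        if (!seen[v]) number(number, v);

    // Step 2: reverse every edge.
    std::vector<std::vector<int>> rev(n);
    for (int v = 0; v < n; ++v)
        for (int w : adj[v]) rev[w].push_back(v);

    // Step 3: search the reversed graph, starting each tree at the
    // highest-numbered unvisited vertex; each tree is one strong component.
    std::vector<int> comp(n, -1);
    auto collect = [&](auto&& self, int v, int id) -> void {
        comp[v] = id;
        for (int w : rev[v])
            if (comp[w] == -1) self(self, w, id);
    };
    int id = 0;
    for (auto it = postorder.rbegin(); it != postorder.rend(); ++it)
        if (comp[*it] == -1) collect(collect, *it, id++);
    return comp;
}
```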

Undecidable Problems

Some problems are difficult to solve on a computer, but some are impossible!

Example: The Halting Problem

We’d like to build a program H that will test any other program P and any input I and answer “yes” if P will terminate normally on input I and “no” if P will get stuck in an infinite loop on input I.

[Figure: Program H takes program P and input I, and outputs YES (if P halts on I) or NO (if P loops forever on I).]

If this program H exists, then let’s make it a subroutine of program X, which takes any program P and runs it through subroutine H as both the program and the input. If the result from the subroutine is “yes”, program X enters an infinite loop; otherwise it halts.

[Figure: Program X passes program P to Program H; a YES answer makes X loop forever, and a NO answer makes X halt.]

Note that if we ran program X with itself as its input, then it would halt only if it loops forever, and it would loop forever only if it halts!?!?

This contradiction means that our assumption that we could build program H was false, i.e., that the halting problem is undecidable.

P and NP Problems

A problem is said to be a P problem if it can be solved with a deterministic, polynomial-time algorithm. (Deterministic algorithms have each step clearly specified.)

A problem is said to be an NP problem if it can be solved with a nondeterministic, polynomial-time algorithm. In essence, at a critical point in the algorithm, a decision must be made, and it is assumed that a magical “choice” function always chooses correctly.

Example: Satisfiability

We are given a set of n boolean variables b1, b2, …, bn and a boolean function f(b1, b2, …, bn).

Problem: Are there values that can be assigned to the variables so that the function would evaluate to TRUE?

To try every combination takes exponential time, but a nondeterministic solution is polynomial-time:

// choice() is the magical nondeterministic function that always chooses correctly
for (i=1; i<=n; i++)
    b[i] = choice(TRUE,FALSE);
if (f(b[1],b[2],…,b[n])==TRUE)
    cout << "SATISFIABLE";
else
    cout << "UNSATISFIABLE";

For example, is the function: (b1 OR b2) AND (b3 OR NOT …

So Satisfiability is an NP problem.
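Since no real machine has a magical choice() function, a deterministic program has to try the combinations itself. The sketch below (hypothetical function name; it assumes n < 64 so that every assignment fits in a 64-bit mask, and stores b1 … bn in b[0] … b[n−1]) does exactly that, and so takes exponential time in n, which is the contrast the slide draws.

```cpp
#include <functional>
#include <vector>

// Deterministic stand-in for the nondeterministic choice() loop:
// tries all 2^n assignments and reports whether any makes f TRUE.
bool satisfiable(int n, const std::function<bool(const std::vector<bool>&)>& f) {
    std::vector<bool> b(n);
    for (unsigned long long mask = 0; mask < (1ULL << n); ++mask) {
        for (int i = 0; i < n; ++i) b[i] = (mask >> i) & 1ULL;  // bit i gives b[i]
        if (f(b)) return true;  // found a satisfying assignment
    }
    return false;
}
```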


The Knapsack Problem

We are given a set of n valuable jewels J1, J2, …, Jn with respective weights w1, w2, …, wn and respective prices p1, p2, …, pn, as well as a knapsack capable of supporting a total weight of M.

Problem: Is there a way to pack at least T dollars’ worth of jewels without exceeding the weight capacity of the knapsack?

(It’s not as easy as it sounds; three lightweight $1000 jewels might be preferable to one heavy $2500 jewel, for instance.)

A nondeterministic polynomial-time solution:

totalWorth = 0;
totalWeight = 0;
for (i=1; i<=n; i++)
{
    b[i] = choice(TRUE,FALSE);  // nondeterministically take or leave jewel i
    if (b[i]==TRUE)
    {
        totalWorth += p[i];
        totalWeight += w[i];
    }
}
if ((totalWorth >= T) && (totalWeight <= M))
    cout << "YAHOO! I'M RICH!";
else
    cout << "@#$&%!";
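Likewise, a deterministic version must explore both choices for every jewel. This recursive sketch (hypothetical function and parameter names, with arrays indexed from 0 instead of 1) examines up to 2ⁿ selections, abandoning a branch as soon as its weight exceeds M.

```cpp
#include <cstddef>
#include <vector>

// Deterministic counterpart of the nondeterministic knapsack check:
// returns true if some selection has total price >= T and total weight <= M.
bool canPack(const std::vector<int>& w, const std::vector<int>& p, int M, int T,
             std::size_t i = 0, int totalWeight = 0, int totalWorth = 0) {
    if (totalWeight > M) return false;          // over capacity: prune this branch
    if (i == w.size()) return totalWorth >= T;  // every jewel has been decided
    return canPack(w, p, M, T, i + 1, totalWeight + w[i], totalWorth + p[i])  // take jewel i
        || canPack(w, p, M, T, i + 1, totalWeight, totalWorth);              // leave jewel i
}
```

With three $1000 jewels of weight 1 and one $2500 jewel of weight 3 (made-up numbers) and M = 3, canPack finds that T = 3000 is reachable with the three light jewels, while T = 3001 is not.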


NP-Complete Problems


Note that all P problems are automatically NP problems, but it’s unknown whether the reverse is true.

The hardest NP problems are the NP-complete problems.

An NP-complete problem is an NP problem to which every NP problem can be “polynomially reduced”. In other words, an instance of any NP problem can be transformed in polynomial time into an instance of the NP-complete problem in such a way that a solution to the first instance provides a solution to the second instance, and vice versa.

For example, consider the following two problems:

The Hamiltonian Circuit Problem: Given an undirected graph, does it contain a cycle that passes through every vertex in the graph exactly once?

The Spanning Tree Problem: Given an undirected graph and a positive integer k, does the graph have a spanning tree with exactly k leaf nodes?

Assume that the Hamiltonian Circuit Problem is known to be NP-complete. The Spanning Tree Problem is an NP problem (a nondeterministic algorithm can choose a set of edges and check in polynomial time whether it forms a spanning tree with exactly k leaf nodes), so to prove that it is NP-complete, we can just polynomially reduce the Hamiltonian Circuit Problem to it.

Let G = (V, E) be an instance of the Hamiltonian Circuit Problem.

Let x be some arbitrary vertex in V.

Define Ax = {y in V | (x, y) is in E}.

Define an instance G′ = (V′, E′) of the Spanning Tree Problem as follows:

Let V′ = V ∪ {u, v, w}, where u, v, and w are new vertices that are not in V.

Let E′ = E ∪ {(u, x)} ∪ {(y, v) | y is in Ax} ∪ {(v, w)}.

Finally, let k = 2.

If G has a Hamiltonian circuit, then G′ has a spanning tree with exactly k leaf nodes.

Proof: G’s Hamiltonian circuit can be written as (x, y1, z1, z2, …, zm, y2, x), where V = {x, y1, y2, z1, z2, …, zm} and Ax contains y1 and y2.

Thus, (u, x, y1, z1, z2, …, zm, y2, v, w) forms a Hamiltonian path (i.e., a spanning tree with exactly two leaf nodes) in G′.

Conversely, if G′ has a spanning tree with exactly two leaf nodes, then G has a Hamiltonian circuit.

Proof: The two-leaf spanning tree of G′ must have u and w as its leaf nodes (since each has degree one in G′, each must be a leaf of every spanning tree of G′).

Notice that the only vertex adjacent to w in G′ is v, and the only vertex adjacent to u in G′ is x.

Also, the only vertices adjacent to v in G′ are those in Ax ∪ {w}, and the only vertices adjacent to x in G′ are those in Ax ∪ {u}.

So the Hamiltonian path in G′ must take the form (u, x, y1, z1, z2, …, zm, y2, v, w), where V = {x, y1, y2, z1, z2, …, zm} and Ax contains y1 and y2.

Thus, since y2 is adjacent to x, (x, y1, z1, z2, …, zm, y2, x) must be a Hamiltonian circuit in G.
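To make the construction concrete, here is a sketch with hypothetical names that builds G′ from G (as adjacency matrices, with u, v, and w numbered after the original vertices) and, for small graphs only, checks by brute force that G has a Hamiltonian circuit exactly when G′ has a two-leaf spanning tree, i.e., a Hamiltonian path. The brute-force checks take exponential time and only illustrate the equivalence; the reduction itself is the polynomial-time step.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

using Graph = std::vector<std::vector<bool>>;  // undirected adjacency matrix

// Builds G' from G: new vertices u, v, w (numbered n, n+1, n+2), plus the
// edge (u, x), an edge (y, v) for every y in Ax, and the edge (v, w).
Graph reduceToSpanningTree(const Graph& g, int x) {
    const int n = static_cast<int>(g.size());
    const int u = n, v = n + 1, w = n + 2;
    Graph h(n + 3, std::vector<bool>(n + 3, false));
    auto link = [&](int a, int b) {
        h[a][b] = true;
        h[b][a] = true;
    };
    for (int a = 0; a < n; ++a)
        for (int b = 0; b < n; ++b)
            if (g[a][b]) link(a, b);  // every edge of E
    link(u, x);
    for (int y = 0; y < n; ++y)
        if (g[x][y]) link(y, v);      // y is in Ax
    link(v, w);
    return h;
}

// Brute force (small graphs, at least 3 vertices): is there a Hamiltonian circuit?
bool hasHamiltonianCircuit(const Graph& g) {
    const int n = static_cast<int>(g.size());
    std::vector<int> order(n);
    std::iota(order.begin(), order.end(), 0);
    do {  // vertex 0 stays first; try every ordering of the rest
        bool ok = true;
        for (int i = 0; i < n && ok; ++i) ok = g[order[i]][order[(i + 1) % n]];
        if (ok) return true;
    } while (std::next_permutation(order.begin() + 1, order.end()));
    return false;
}

// Brute force: is there a Hamiltonian path (a spanning tree with two leaves)?
bool hasHamiltonianPath(const Graph& g) {
    const int n = static_cast<int>(g.size());
    std::vector<int> order(n);
    std::iota(order.begin(), order.end(), 0);
    do {
        bool ok = true;
        for (int i = 0; i + 1 < n && ok; ++i) ok = g[order[i]][order[i + 1]];
        if (ok) return true;
    } while (std::next_permutation(order.begin(), order.end()));
    return false;
}
```

For a 4-cycle G with x = vertex 0, both checks return true; for a 4-vertex path G, both return false.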
