Final review and practice problems

advertisement

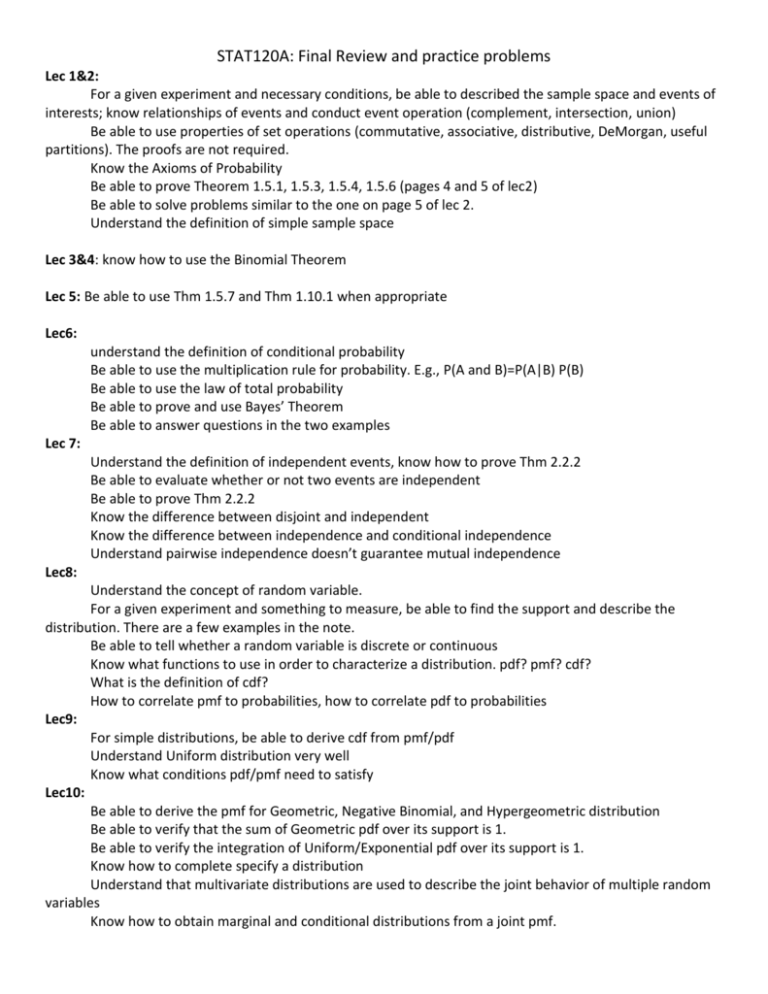

STAT120A: Final Review and practice problems

Lec 1&2:

For a given experiment and the necessary conditions, be able to describe the sample space and the events of interest; know the relationships between events and perform event operations (complement, intersection, union)

Be able to use properties of set operations (commutative, associative, and distributive laws, De Morgan's laws, useful partitions). The proofs are not required.

Know the Axioms of Probability

Be able to prove Theorems 1.5.1, 1.5.3, 1.5.4, and 1.5.6 (pages 4 and 5 of lec 2)

Be able to solve problems similar to the one on page 5 of lec 2.

Understand the definition of a simple sample space
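The sketch below illustrates the set identities and probability on a simple sample space (all outcomes equally likely). It uses Python sets for one roll of a fair die. The events A, B, and C are arbitrary choices for illustration, and `prob` and `complement` are hypothetical helper names.

```python
from fractions import Fraction

# Simple sample space: one roll of a fair six-sided die (all outcomes equally likely)
S = {1, 2, 3, 4, 5, 6}
A = {2, 4, 6}   # illustrative event: the roll is even
B = {4, 5, 6}   # illustrative event: the roll is at least 4
C = {1, 2}      # illustrative event: the roll is at most 2

def complement(E):
    """Complement of event E relative to the sample space S."""
    return S - E

def prob(E):
    """P(E) = |E| / |S| on a simple sample space."""
    return Fraction(len(E), len(S))

# De Morgan's laws
assert complement(A | B) == complement(A) & complement(B)
assert complement(A & B) == complement(A) | complement(B)

# Distributive law: A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C)
assert A & (B | C) == (A & B) | (A & C)

# Useful partition: A = (A ∩ B) ∪ (A ∩ B^c), and the two pieces are disjoint
assert (A & B) | (A & complement(B)) == A
assert (A & B).isdisjoint(A & complement(B))

# Complement rule and the addition rule for two events
assert prob(complement(A)) == 1 - prob(A)
assert prob(A | B) == prob(A) + prob(B) - prob(A & B)
print(prob(A | B))  # 2/3
```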

Lec 3&4: know how to use the Binomial Theorem
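The Binomial Theorem, (a+b)^n = sum_{k=0}^{n} C(n,k) a^k b^(n-k), can be checked exactly with integers. This sketch uses `math.comb` for the binomial coefficient. The test values and the helper name `binomial_expand` are illustrative.

```python
from math import comb

def binomial_expand(a, b, n):
    """Right-hand side of the Binomial Theorem: sum_{k=0}^{n} C(n,k) a^k b^(n-k)."""
    return sum(comb(n, k) * a**k * b**(n - k) for k in range(n + 1))

# Exact integer check for a few illustrative values of a, b, n
for a, b, n in [(2, 3, 5), (1, 1, 10), (-1, 4, 7)]:
    assert binomial_expand(a, b, n) == (a + b) ** n

# A common use: setting a = b = 1 gives sum_k C(n, k) = 2^n
print(binomial_expand(1, 1, 10))  # 1024
```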

Lec 5: Be able to use Thm 1.5.7 and Thm 1.10.1 when appropriate

Lec6:

Understand the definition of conditional probability

Be able to use the multiplication rule for probability. E.g., P(A and B)=P(A|B) P(B)

Be able to use the law of total probability

Be able to prove and use Bayes’ Theorem

Be able to answer questions in the two examples
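Here is a minimal sketch of the multiplication rule, the law of total probability, and Bayes' Theorem, using exact fractions. The partition B1, B2, B3 and all probabilities are hypothetical numbers chosen for illustration. They are not the two examples from lecture.

```python
from fractions import Fraction

# Hypothetical setup: B1, B2, B3 partition the sample space; A is an observed event.
prior = {"B1": Fraction(1, 2), "B2": Fraction(3, 10), "B3": Fraction(1, 5)}       # P(B_i)
likelihood = {"B1": Fraction(1, 10), "B2": Fraction(1, 5), "B3": Fraction(1, 2)}  # P(A | B_i)

assert sum(prior.values()) == 1  # the B_i must form a partition

# Multiplication rule: P(A and B_i) = P(A | B_i) P(B_i)
joint = {b: likelihood[b] * prior[b] for b in prior}

# Law of total probability: P(A) = sum_i P(A | B_i) P(B_i)
p_a = sum(joint.values())

# Bayes' Theorem: P(B_i | A) = P(A | B_i) P(B_i) / P(A)
posterior = {b: joint[b] / p_a for b in prior}

print(p_a)              # 21/100
print(posterior["B3"])  # 10/21
```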

Lec 7:

Understand the definition of independent events

Be able to evaluate whether or not two events are independent

Be able to prove Thm 2.2.2

Know the difference between disjoint and independent

Know the difference between independence and conditional independence

Understand pairwise independence doesn’t guarantee mutual independence
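A small enumeration makes the last three points concrete. Consider two fair coin tosses and three events: A (first toss heads), B (second toss heads), and C (the tosses match). These events are pairwise independent but not mutually independent. This is a standard textbook example, not necessarily the one from lecture. The last lines show that two disjoint events with positive probability are not independent.

```python
from fractions import Fraction
from itertools import product

# Two fair coin tosses: simple sample space with 4 equally likely outcomes
S = set(product("HT", repeat=2))

def prob(E):
    return Fraction(len(E), len(S))

def independent(E, F):
    """Two events are independent iff P(E ∩ F) = P(E) P(F)."""
    return prob(E & F) == prob(E) * prob(F)

A = {s for s in S if s[0] == "H"}   # first toss is heads
B = {s for s in S if s[1] == "H"}   # second toss is heads
C = {s for s in S if s[0] == s[1]}  # the two tosses match

# Every pair is independent ...
pairwise = independent(A, B) and independent(A, C) and independent(B, C)
# ... but P(A ∩ B ∩ C) = 1/4 differs from P(A) P(B) P(C) = 1/8
mutual = prob(A & B & C) == prob(A) * prob(B) * prob(C)
print(pairwise, mutual)  # True False

# Disjoint is not the same as independent: A and A^c are disjoint,
# yet P(A ∩ A^c) = 0 while P(A) P(A^c) = 1/4
A_c = S - A
print(A.isdisjoint(A_c), independent(A, A_c))  # True False
```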

Lec8:

Understand the concept of random variable.

For a given experiment and a quantity to measure, be able to find the support and describe the distribution. There are a few examples in the notes.

Be able to tell whether a random variable is discrete or continuous

Know which functions characterize a distribution: pmf, pdf, or cdf?

What is the definition of the cdf?

Know how a pmf relates to probabilities, and how a pdf relates to probabilities

Lec9:

For simple distributions, be able to derive cdf from pmf/pdf

Understand the Uniform distribution very well

Know what conditions a pdf/pmf must satisfy
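This sketch builds a cdf from a pmf by cumulative summation and writes the Uniform(a, b) cdf piecewise. It first checks the two pmf conditions: non-negativity and summing to 1. The pmf values are illustrative; they match the pmf used in practice problem 3. The helper names are hypothetical.

```python
from fractions import Fraction
from bisect import bisect_right

# Illustrative pmf on the support {0, 1, 2, 3}
support = [0, 1, 2, 3]
pmf = [Fraction(2, 10), Fraction(3, 10), Fraction(4, 10), Fraction(1, 10)]

# Conditions a pmf must satisfy: p(x) >= 0 and the values sum to 1 over the support
assert all(p >= 0 for p in pmf) and sum(pmf) == 1

# cdf F(x) = P(X <= x) = sum of p(k) over support points k <= x
cum = []
running = Fraction(0)
for p in pmf:
    running += p
    cum.append(running)

def cdf_discrete(x):
    i = bisect_right(support, x)  # number of support points <= x
    return Fraction(0) if i == 0 else cum[i - 1]

def cdf_uniform(x, a, b):
    """cdf of Uniform(a, b): 0 below a, (x - a)/(b - a) on [a, b], 1 above b."""
    if x < a:
        return 0.0
    if x > b:
        return 1.0
    return (x - a) / (b - a)

print(cdf_discrete(-1), cdf_discrete(1.5), cdf_discrete(3))  # 0 1/2 1
# Interval probability from a pdf via the cdf: P(c < X <= d) = F(d) - F(c)
print(cdf_uniform(0.75, 0, 2) - cdf_uniform(0.25, 0, 2))      # 0.25
```

The discrete cdf is a step function that jumps by p(x) at each support point. The Uniform cdf is continuous and linear on [a, b].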

Lec10:

Be able to derive the pmf of the Geometric, Negative Binomial, and Hypergeometric distributions

Be able to verify that the Geometric pmf sums to 1 over its support.

Be able to verify that the Uniform/Exponential pdf integrates to 1 over its support.

Know how to completely specify a distribution

Understand that multivariate distributions are used to describe the joint behavior of multiple random variables

Know how to obtain marginal and conditional distributions from a joint pmf.
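Here is a numerical sketch of the two verification items above. It assumes the Geometric pmf p(1-p)^x on {0, 1, 2, ...}; the other common convention shifts the support to {1, 2, ...} and sums to 1 in the same way. It also assumes an Exponential pdf with rate `lam`. The values of `p` and `lam` are hypothetical. The numbers only illustrate the result. The analytic argument is the geometric-series sum and the antiderivative -e^(-lam x).

```python
import math

p = 0.3    # hypothetical success probability
lam = 2.0  # hypothetical exponential rate

# Geometric pmf f(x) = p (1 - p)^x on {0, 1, 2, ...}.  The partial sum up to N
# equals 1 - (1 - p)^(N + 1), which tends to 1 as N grows.
def geometric_partial_sum(N):
    return sum(p * (1 - p) ** x for x in range(N + 1))

for N in (5, 20, 100):
    assert math.isclose(geometric_partial_sum(N), 1 - (1 - p) ** (N + 1))

# Exponential pdf f(x) = lam * exp(-lam x) on (0, inf).  The integral from 0 to b
# is 1 - exp(-lam b); a midpoint-rule approximation over [0, 20] is essentially 1.
def exp_integral_midpoint(b, steps=100_000):
    h = b / steps
    return h * sum(lam * math.exp(-lam * (i + 0.5) * h) for i in range(steps))

approx = exp_integral_midpoint(20.0)
print(round(geometric_partial_sum(100), 10), round(approx, 6))  # 1.0 1.0
```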

Lec11&12:

Know the definition of independence of random variables

For given joint pdf/pmf, be able to tell whether or not the random variables are independent

Be able to do all the calculations in the waiting time example

Know how to obtain marginal and conditional distributions from a joint distribution
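This sketch obtains marginal and conditional pmfs from a joint pmf. It then tests independence with the factorization p(x, y) = p_X(x) p_Y(y), which must hold for every pair (x, y). The joint table is hypothetical.

```python
from fractions import Fraction as Fr

# Hypothetical joint pmf p(x, y) for X in {0, 1} and Y in {0, 1, 2}
joint = {
    (0, 0): Fr(1, 10), (0, 1): Fr(2, 10), (0, 2): Fr(1, 10),
    (1, 0): Fr(2, 10), (1, 1): Fr(1, 10), (1, 2): Fr(3, 10),
}
assert sum(joint.values()) == 1

xs = sorted({x for x, _ in joint})
ys = sorted({y for _, y in joint})

# Marginal pmfs: sum the joint pmf over the other variable
p_x = {x: sum(joint[(x, y)] for y in ys) for x in xs}
p_y = {y: sum(joint[(x, y)] for x in xs) for y in ys}

# Conditional pmf of Y given X = x: p(y | x) = p(x, y) / p_X(x)
def cond_y_given_x(x):
    return {y: joint[(x, y)] / p_x[x] for y in ys}

# X and Y are independent iff p(x, y) = p_X(x) p_Y(y) for every (x, y)
independent = all(joint[(x, y)] == p_x[x] * p_y[y] for x in xs for y in ys)

print(p_x[0], p_y[2])        # 2/5 2/5
print(cond_y_given_x(0)[1])  # 1/2
print(independent)           # False
```

A single pair where the factorization fails is enough to show dependence. Here p(0, 0) = 1/10, but p_X(0) p_Y(0) = 2/5 * 3/10 = 3/25.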

Lec13 & 14:

For a given distribution, be able to calculate E(X)

The Cauchy distribution example can be ignored

Know and be able to use the properties of expectations

Be able to prove Thm 4.2.1, Thm 4.2.4, and Thm 4.2.6.

For a given function of a random variable, be able to calculate its expectation

For a given function of random variables, be able to calculate its expectation

Understand the definition and interpretation of variance

Be familiar with and be able to use properties of variance

Know how to prove Thm 4.3.1, Thm 4.3.4, and Thm 4.3.5 (for n=2)
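This sketch uses exact fractions to check several expectation and variance properties on two small hypothetical pmfs:
- E(aX+b) = aE(X)+b
- Var(aX+b) = a^2 Var(X)
- E(X+Y) = E(X)+E(Y)
- for independent X and Y, E(XY) = E(X)E(Y) and Var(X+Y) = Var(X)+Var(Y)

This does not claim which of these are the numbered theorems above. The pmfs and the constants a, b are arbitrary.

```python
from fractions import Fraction as Fr
from itertools import product

# Hypothetical pmfs for two independent discrete random variables
p_X = {1: Fr(1, 2), 2: Fr(1, 4), 4: Fr(1, 4)}
p_Y = {0: Fr(1, 3), 3: Fr(2, 3)}

def E(g, pmf):
    """E[g(X)] = sum over the support of g(x) p(x)."""
    return sum(g(x) * p for x, p in pmf.items())

def var(pmf):
    """Var(X) = E(X^2) - [E(X)]^2."""
    return E(lambda x: x * x, pmf) - E(lambda x: x, pmf) ** 2

# Joint pmf of (X, Y) under independence: p(x, y) = p_X(x) p_Y(y)
joint = {(x, y): px * py for (x, px), (y, py) in product(p_X.items(), p_Y.items())}

def E_joint(g):
    return sum(g(x, y) * p for (x, y), p in joint.items())

a, b = 3, -2  # arbitrary constants
EX, EY = E(lambda x: x, p_X), E(lambda y: y, p_Y)

# E(aX + b) = aE(X) + b and Var(aX + b) = a^2 Var(X)
assert E(lambda x: a * x + b, p_X) == a * EX + b
assert E(lambda x: (a * x + b - (a * EX + b)) ** 2, p_X) == a**2 * var(p_X)
# E(X + Y) = E(X) + E(Y)
assert E_joint(lambda x, y: x + y) == EX + EY
# Under independence: E(XY) = E(X)E(Y) and Var(X + Y) = Var(X) + Var(Y)
assert E_joint(lambda x, y: x * y) == EX * EY
assert E_joint(lambda x, y: (x + y - EX - EY) ** 2) == var(p_X) + var(p_Y)
print(EX, EY, var(p_X), var(p_Y))  # 2 2 3/2 2
```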

Lec 15&16:

Know the definitions and be able to calculate covariance and correlation

Be able to show that independence implies zero covariance/correlation

Understand that zero covariance/correlation doesn’t imply independence

Be able to prove the first three properties for covariance/correlation

Be able to conduct all the calculations in the examples

Understand the definition of conditional expectation and variance

Know how to use conditional expectation to ease some calculations

Bayesian modeling is not required, but the strategies we used for the calculations in that example are relevant to the course.
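The standard counterexample showing that zero covariance does not imply independence can be checked exactly. Take X uniform on {-1, 0, 1} and Y = X^2. This is a common textbook choice, not necessarily the example from lecture.

```python
from fractions import Fraction as Fr

# X uniform on {-1, 0, 1} and Y = X^2: Y is a function of X, so they are dependent
p_X = {-1: Fr(1, 3), 0: Fr(1, 3), 1: Fr(1, 3)}
joint = {(x, x * x): p for x, p in p_X.items()}  # joint pmf of (X, Y)

def E(g):
    return sum(g(x, y) * p for (x, y), p in joint.items())

EX, EY = E(lambda x, y: x), E(lambda x, y: y)
# Cov(X, Y) = E(XY) - E(X)E(Y); here E(XY) = E(X^3) = 0 and E(X) = 0
cov = E(lambda x, y: x * y) - EX * EY

# Marginal P(Y = 0), then test the factorization p(x, y) = p_X(x) p_Y(y) at (0, 0)
p_Y0 = sum(p for (x, y), p in joint.items() if y == 0)
p_00 = joint.get((0, 0), Fr(0))
print(cov)                  # 0
print(p_00, p_X[0] * p_Y0)  # 1/3 1/9
```

Because the covariance is 0, the correlation is 0 as well. Yet p(0, 0) differs from p_X(0) p_Y(0), so X and Y are not independent.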

Lec 17&18:

Know the definition of the moment generating function (mgf). Know how to calculate an mgf.

Understand that an mgf completely specifies a distribution (when the mgf is finite on an open interval containing t = 0)

Understand how to use the mgf to calculate moments and variances

Be able to prove and use Thm 4.4.4

Know the strategy used to calculate the mgf of a normal distribution

Know how to find probabilities for normal random variables

Understand the definition of N(0,1).

When the mgf of a normal r.v. is given, be able to find the mgf of a linear function of the r.v.

Know how to obtain the distribution of a linear function of independent normal r.v.s

Be able to derive the distribution of the sample mean of a random sample from a normal distribution
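This sketch covers three items from the list above, assuming hypothetical parameters mu = 10, sigma = 2, n = 25:
- normal probabilities by standardizing, with the standard normal cdf written through `math.erf`
- the mgf of a linear function aX + b, which equals e^(bt) M_X(at)
- a simulation check that the sample mean of n i.i.d. N(mu, sigma^2) draws has mean mu and standard deviation sigma/sqrt(n)

The simulation is only an illustration; the derivation itself uses mgfs.

```python
import math
import random
import statistics

def Phi(z):
    """Standard normal cdf via the error function: Phi(z) = (1 + erf(z / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def normal_prob(a, b, mu, sigma):
    """P(a < X < b) for X ~ N(mu, sigma^2), by standardizing Z = (X - mu) / sigma."""
    return Phi((b - mu) / sigma) - Phi((a - mu) / sigma)

def normal_mgf(t, mu, sigma):
    """mgf of N(mu, sigma^2): exp(mu t + sigma^2 t^2 / 2)."""
    return math.exp(mu * t + 0.5 * sigma**2 * t**2)

mu, sigma, n = 10.0, 2.0, 25  # hypothetical parameters

# mgf of aX + b is e^{bt} M_X(at), which equals the mgf of N(a mu + b, a^2 sigma^2)
a, b, t = 3.0, -1.0, 0.2
lhs = math.exp(b * t) * normal_mgf(a * t, mu, sigma)
rhs = normal_mgf(t, a * mu + b, abs(a) * sigma)
assert math.isclose(lhs, rhs)

# Sample mean of n i.i.d. N(mu, sigma^2) draws should behave like N(mu, sigma^2 / n)
random.seed(0)
means = [statistics.fmean([random.gauss(mu, sigma) for _ in range(n)]) for _ in range(10_000)]
mean_of_means = statistics.fmean(means)
sd_of_means = statistics.stdev(means)

print(round(normal_prob(-1.96, 1.96, 0.0, 1.0), 4))  # 0.95
print(mean_of_means, sd_of_means)  # close to mu = 10 and sigma / sqrt(n) = 0.4
```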

Practice Problems

1. True or false:

(a) If A and B are independent, then A^c and B^c are independent

(b) If A and B are disjoint, then A and B are independent

(c) Let X be a random variable that follows N(μ, σ^2). Its mgf is exp(μt + 0.5*t^2*σ^2). If the mgf of another r.v. Y is exp(2*t^2), then Y follows the normal distribution with mean 0 and variance 4.

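Answer: (a) True. See lec 7. (b) False in general: if P(A) > 0 and P(B) > 0, disjointness gives P(A ∩ B) = 0 ≠ P(A)P(B). See lec 7. (c) True: exp(2*t^2) = exp(0*t + 0.5*t^2*4) is the mgf of N(0, 4), and an mgf determines the distribution. See lec 17&18.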
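For (a), one way to write out the argument uses De Morgan's law, the addition rule, and the definition of independence:

$$
\begin{aligned}
P(A^c \cap B^c) &= 1 - P(A \cup B) && [\text{De Morgan: } A^c \cap B^c = (A \cup B)^c]\\
&= 1 - P(A) - P(B) + P(A \cap B)\\
&= 1 - P(A) - P(B) + P(A)P(B) && [\text{independence of } A \text{ and } B]\\
&= [1 - P(A)][1 - P(B)] = P(A^c)\,P(B^c).
\end{aligned}
$$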

2. (The answer is in the solution to hw7)

3.

Answer:

(a) E(X)=0*0.2 + 1*0.3 + 2*0.4 + 3*0.1 = 1.4

(b) E(X^2) = 0*0.2 + 1*0.3 + 4*0.4 + 9*0.1 = 2.8; Var(X) = E(X^2) - [E(X)]^2 = 2.8 - 1.4^2 = 0.84

SD(X) = [Var(X)]^{1/2} ≈ 0.92

(c) Let Y denote the annual cost. Based on the description of the annual cost, Y = 200 + 50X. In class we learned that E(aX+b) = aE(X) + b and Var(aX+b) = a^2 Var(X). Therefore, the expected annual cost is

E(Y) = 200 + 50*E(X) = 200 + 50*1.4 = 270

The variance of the annual cost is

Var(Y) = 50^2 * Var(X) = 2500*0.84 = 2100

The standard deviation of the annual cost is

SD(Y) = 50*(0.84)^{1/2} ≈ 45.8
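Here is a quick arithmetic check of (a) through (c). The pmf values come from the E(X) computation in (a), and Y = 200 + 50X is the relation given in (c). The problem statement itself is not reproduced here.

```python
import math

# pmf of X as used in the E(X) computation of part (a)
pmf = {0: 0.2, 1: 0.3, 2: 0.4, 3: 0.1}

EX = sum(x * p for x, p in pmf.items())
EX2 = sum(x**2 * p for x, p in pmf.items())
var_X = EX2 - EX**2
sd_X = math.sqrt(var_X)

# Y = 200 + 50X: E(Y) = 200 + 50 E(X) and Var(Y) = 50^2 Var(X)
EY = 200 + 50 * EX
var_Y = 50**2 * var_X
sd_Y = math.sqrt(var_Y)  # equals 50 * sd_X

print(round(EX, 2), round(var_X, 2), round(sd_X, 2))  # 1.4 0.84 0.92
print(round(EY, 2), round(var_Y, 2), round(sd_Y, 1))  # 270.0 2100.0 45.8
```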

4. Let X be a Binomial random variable with parameters n and p.

(a) The Binomial Theorem says $(a+b)^n = \sum_{k=0}^{n} \binom{n}{k} a^k b^{n-k}$. Use the Binomial Theorem to show that the mgf of X is $(1-p+pe^t)^n$.

(b) Use the mgf to verify that E[X]=np

(c) Use the mgf to verify Var[X]=np(1-p).

Answer:

(1)

$$M_X(t) = E(e^{tX}) = \sum_{x=0}^{n} e^{tx}\binom{n}{x}p^x(1-p)^{n-x} = \sum_{x=0}^{n}\binom{n}{x}(pe^t)^x(1-p)^{n-x} = (pe^t+1-p)^n \quad [\text{Binomial Theorem}]$$

(2)

$$E(X) = \frac{d}{dt}M_X(t)\Big|_{t=0} = n(pe^t+1-p)^{n-1}pe^t\Big|_{t=0} = np$$

(3)

$$E(X^2) = \frac{d^2}{dt^2}M_X(t)\Big|_{t=0} = \frac{d}{dt}\left[n(pe^t+1-p)^{n-1}pe^t\right]\Big|_{t=0} = \left[n(n-1)(pe^t+1-p)^{n-2}pe^t\cdot pe^t + n(pe^t+1-p)^{n-1}pe^t\right]\Big|_{t=0} = n(n-1)p^2 + np$$

$$\operatorname{Var}(X) = E(X^2) - [E(X)]^2 = n(n-1)p^2 + np - (np)^2 = np - np^2 = np(1-p)$$
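This is a numerical sanity check of (1) through (3), assuming the illustrative values n = 10 and p = 0.3. The mgf computed directly from the pmf should match the closed form. Central finite differences at t = 0 should approximate M'(0) = np and M''(0) - [M'(0)]^2 = np(1-p). Finite differences only approximate derivatives, so the printed comparison is rounded.

```python
import math

n, p = 10, 0.3  # hypothetical parameters

def mgf_sum(t):
    """M_X(t) = E(e^{tX}) computed directly from the Binomial(n, p) pmf."""
    return sum(math.exp(t * x) * math.comb(n, x) * p**x * (1 - p) ** (n - x)
               for x in range(n + 1))

def mgf_closed(t):
    """Closed form from part (a): (1 - p + p e^t)^n."""
    return (1 - p + p * math.exp(t)) ** n

for t in (-1.0, 0.0, 0.5, 1.0):
    assert math.isclose(mgf_sum(t), mgf_closed(t), rel_tol=1e-12)

# Central finite differences at t = 0 approximate M'(0) = E(X) and M''(0) = E(X^2)
h = 1e-4
m1 = (mgf_closed(h) - mgf_closed(-h)) / (2 * h)
m2 = (mgf_closed(h) - 2 * mgf_closed(0.0) + mgf_closed(-h)) / h**2
var = m2 - m1**2
print(round(m1, 4), round(n * p, 4))              # 3.0 3.0
print(round(var, 4), round(n * p * (1 - p), 4))   # 2.1 2.1
```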

5. (The answer is in the solution to hw7)

6. (The answer is in the solution to hw6)

7.

Answer:

(a) The support is [0,1]. Therefore, for any x in the support,

$$F(x) = \int_0^x f(t)\,dt = \int_0^x 4t^3\,dt = t^4\Big|_0^x = x^4$$

For x<0, the event {X<=x} cannot happen; therefore, F(x)=P(X<=x)=0; for x>1, {X<=x} is always true;

therefore F(x)=P(X<=x)=1.

(b) Omitted.

(c)

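$$
\begin{aligned}
P(0.2 < X < 0.5) &= P(0.2 < X \le 0.5) && [\text{because } X \text{ is continuous}]\\
&= P(X \le 0.5) - P(X \le 0.2) = 0.5^4 - 0.2^4 = 0.0625 - 0.0016\\
&= 0.0609
\end{aligned}
$$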

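(d) This is a conditional probability. Since {X > 0.7} ⊂ {X > 0.5}, the intersection of the two events is {X > 0.7}:

$$
\begin{aligned}
P(X > 0.7 \mid X > 0.5) &= \frac{P(X > 0.7,\ X > 0.5)}{P(X > 0.5)} && [\text{by the definition of conditional probability}]\\
&= \frac{P(X > 0.7)}{P(X > 0.5)} = \frac{1 - F(0.7)}{1 - F(0.5)} = \frac{1 - 0.7^4}{1 - 0.5^4}\\
&\approx 0.81
\end{aligned}
$$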
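The values in (c) and (d) can be recomputed directly from F(x) = x^4. The pdf 4x^3 on [0, 1] is taken from part (a), since the problem statement is not reproduced here. The sketch also cross-checks (c) against a midpoint-rule integral of the pdf. The helper names `F` and `integrate_pdf` are hypothetical.

```python
# pdf f(x) = 4x^3 on [0, 1] and its cdf F(x) = x^4, as in part (a)
def F(x):
    if x < 0:
        return 0.0
    if x > 1:
        return 1.0
    return x**4

def integrate_pdf(a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f(t) = 4t^3 from a to b."""
    h = (b - a) / steps
    return h * sum(4 * (a + (i + 0.5) * h) ** 3 for i in range(steps))

# (c) P(0.2 < X < 0.5) = F(0.5) - F(0.2)
p_c = F(0.5) - F(0.2)
assert abs(p_c - integrate_pdf(0.2, 0.5)) < 1e-8

# (d) P(X > 0.7 | X > 0.5) = (1 - F(0.7)) / (1 - F(0.5))
p_d = (1 - F(0.7)) / (1 - F(0.5))
print(round(p_c, 4), round(p_d, 4))  # 0.0609 0.8106
```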

8.

Answer: see the lecture notes.

9.

Answer:

(a) Because the cdf is a step function, the distribution is discrete.

(b) P(X>2)=1-P(X<=2)= 1-F(2)=1-0.85=0.15.

10. (The answer is in the solution to the practice midterm)

11. Prove Theorem 4.4.4 (lec 17)