CSCI2181 - Computer Logic and Design Lecture #1

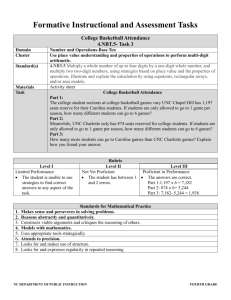

Neural Networks
Computer Science Dept, UNC Charlotte. Copyright 2002 Kayvan Najarian

• Outline
  – Introduction
  – From biological to artificial neurons
  – Self organizing maps
  – Backpropagation network
  – Radial basis functions
  – Associative memories
  – Hopfield networks
  – Other applications of neural networks

Introduction
• Why neural networks?
  – Algorithms developed over centuries do not fit the complexity of real-world problems
  – The human brain: the most sophisticated computer, suited to solving extremely complex problems
• Historical knowledge on the human brain
  – The Greeks thought that the brain was where the blood is cooled off!
  – Even until the late 19th century, not much was known about the brain, and it was assumed to be a continuum of non-structured cells
  – Phineas Gage's story
    • In a railroad construction accident, a metal bar was shot through the head of Mr. Phineas P. Gage at Cavendish, Vermont, Sept 13, 1848
      – The iron bar was 3 feet 7 inches long and weighed 13 1/4 pounds. It was 1 1/4 inches in diameter at one end

Introduction (continued)
• He survived the accident!
  – Originally he seemed to have fully recovered, with no clear effects
• After a few weeks, Phineas exhibited profound personality changes
  – This was the first time researchers had clear evidence that the brain is not a continuum of cell mass, but rather that each region has a relatively independent task

Introduction (continued)
• Biological neural networks
  – $10^{11}$ neurons (neural cells)
  – Only a small portion of these cells are used
  – Main features
    • distributed nature, parallel processing
    • each region of the brain controls specialized task(s)
    • no cell contains too much information: simple and small processors
    • information is saved mainly in the connections among neurons

Introduction (continued)
    • learning and generalization through examples
    • simple building block: neuron
      – Dendrites: collect signals from other neurons
      – Soma (cell body): spatial summation and processing
      – Axon: transmits signals to the dendrites of other cells

Introduction (continued)
    • biological neural networks: formation of neurons with different connection strengths

From biological to artificial neural nets
• Biological vs. artificial neurons
  – From biological neuron to schematic structure of artificial neuron
    • biological:
      – Inputs
      – Summation of inputs
      – Processing unit
      – Output
    • artificial: inputs $x_1, \dots, x_N$ with weights $w_1, \dots, w_N$ produce the output
      $y = f(w_1 x_1 + \dots + w_N x_N)$

From biological to artificial neural nets (continued)
  – Artificial neural nets:
    • Formation of artificial neurons
      [Figure: single-layer network; inputs $x_1, \dots, x_N$ connect to neurons 1 to $M$ through weights $w_{11}, \dots, w_{NM}$, giving outputs $y_1, \dots, y_M$]

From biological to artificial neural nets (continued)
  – Multi-layer neural nets:
    • Serial connection of single layers
      [Figure: layers connected in series, from inputs $x_1, \dots, x_N$ to outputs $y_1, \dots, y_M$]
  – Training: finding the best values of the weights $w_{ij}$
    • Training happens iteratively, by exposing the network to examples:
      $w_{ij}(\text{new}) = w_{ij}(\text{old}) + \Delta w_{ij}$

From biological to artificial neural nets (continued)
  – Activation functions:
    • Hard limiter (binary step):
      $f(x) = \begin{cases} 1 & \text{if } x > \theta \\ 0 & \text{if } -\theta \le x \le \theta \\ -1 & \text{if } x < -\theta \end{cases}$
      – Role of threshold
      – Biologically supported
      – Non-differentiable

From biological to artificial neural nets (continued)
    • Binary sigmoid (exponential sigmoid): $f(x) = \frac{1}{1 + e^{-\sigma x}}$
      – Differentiable
      – Biologically supported
      – Saturation curve is controlled by $\sigma$
      – In the limit $\sigma \to \infty$, the hard limiter is achieved
    • Bipolar sigmoid (atan): $f(x) = \tan^{-1}(\sigma x)$
      – As popular as the binary sigmoid

From biological to artificial neural nets (continued)
• Supervised vs. unsupervised learning
  – Supervised learning (classification)
    • Training data are labeled, i.e. the output class of every training example is given
    • Example: recognition of birds and insects
      – Classification query: sparrow → ?
      – Training set:
        eagle → bird, bee → insect, ant → insect, owl → bird
  – Unsupervised learning (clustering)
    • Training data are not labeled
    • Output classes must be generated during training
    • Similarity between the features of training examples creates different classes

From biological to artificial neural nets (continued)
• Example: types of companies
  – Features: number of employees (NOE) and rate of growth (ROG)
  – Training data create natural clusters
  – From graph:
    [Figure: companies plotted by Number of Employees (ticks at 100, 500, 1000) against rate of growth]
    • Class #1: small companies with a small rate of growth
    • Class #2: small companies with a large rate of growth
    • Class #3: medium-size companies with a medium rate of growth
    • Class #4: large companies with a small rate of growth
  – Classification: a company with NOE = 600 and ROG = 12% is mapped to Class #3

From biological to artificial neural nets (continued)
  – Artificial neural networks as classification tools:
    • If training data are labeled, supervised neural nets are used
    • Supervised learning normally results in better performance
    • Most successful types of supervised ANNs:
      – Multi-layer perceptrons
      – Radial basis function networks
  – Artificial neural networks as clustering tools:
    • If training data are not labeled, unsupervised neural nets are used
    • Most successful types of unsupervised ANNs:
      – Kohonen network
  – Some networks can be trained in both supervised and unsupervised modes

Perceptron
• A more advanced version of the simple neuron
• Structure (architecture):
  – Very similar to the simple neuron
  – The only difference: the activation function is a bipolar hard limiter
    [Figure: inputs $x_1, \dots, x_i, \dots, x_n$ feeding a single output unit $y$]
    $y\_in = b + \sum_{i=1}^{n} x_i w_i$
    $y = \begin{cases} 1 & \text{if } y\_in > \theta \\ 0 & \text{if } -\theta \le y\_in \le \theta \\ -1 & \text{if } y\_in < -\theta \end{cases}$

Competitive Self-Organizing Networks
• Biological procedure:
  – Each neuron (or group of neurons)
    stores a pattern
  – Neurons in a neighborhood store similar patterns
  – During classification, the similarity of the new pattern to the patterns stored in all neurons is calculated
  – The neurons (or neighborhoods) with the highest similarity are the winners
  – The new pattern is attributed to the class of the winning neighborhood
    [Figure: map of stored patterns: Rocking Chair, Study Chair, Trees, Forest, Books, Desk, Dining Table, Dishes, Food]
  – New pattern: Conference Table
  – Winner: Desk (and its neighborhood)

Kohonen Self-Organizing Maps
• Main idea: placing similar objects close to each other
  – Example: object classification using a one-dimensional array
    • Inputs: weight (0 to 1), texture (wood = 0, metal = 1), size (0 to 1), flies (1) or not (0)
    [Figure: the objects pencil, airplane, chair, book, wooden house, stapler, metal desk, truck, and kite arranged along a one-dimensional array of neurons]
  – New pattern: hovercraft
    • is mapped to the neighborhood of truck or airplane

Kohonen Self-Organizing Maps (continued)
• Two-dimensional arrays
  – Proximity exists on two dimensions
  – Better chance of positioning similar objects in the same vicinity
  – The most popular type of Kohonen network
  – How to define neighborhoods
    • Rectangular or hexagonal
    • Radius of neighborhood (R)
    • R = 0 means that each neighborhood has only one member

Kohonen Self-Organizing Maps (continued)
• Example: two-dimensional array
  – Classification of objects
    • Objective: clustering of a number of objects
    • 10 input features: number of lines, thickness of lines, angles, ...
    • Competitive layer is a 2D grid of neurons
    • Trained network clusters objects rather successfully
    • Proximity of some objects is not optimal

Backpropagation neural networks
• Idea: not as biologically supported as Kohonen
• Architecture:
  – Number of layers and number of neurons in each layer
  – Most popular structure
    [Figure: multi-layer network with inputs $x_1, \dots, x_N$, hidden units $z_j$ (weights $v_{ij}$), and outputs $y_1, \dots, y_M$ (weights $w_{jk}$)]
• Activation functions:
  – sigmoid

Backpropagation neural networks (continued)
• Updating weights:
  – Based on the Delta Rule
  – Function to be minimized: $E = 0.5 \sum_k (t_k - y_k)^2$
  – The best update of $w_{JK}$ is along the negative gradient of $E$
  – Error is calculated at the output layer and propagated back towards the input layer
  – As layers receive the backpropagated error, they adjust their weights in the negative direction of the gradient

Backpropagation neural networks (continued)
  – Define: $\delta_K = (t_K - y_K)\, f'(y\_in_K)$
  – Then: $\frac{\partial E}{\partial w_{JK}} = -\delta_K z_J$
    and, with learning rate $\alpha$: $\Delta w_{jk} = -\alpha \frac{\partial E}{\partial w_{jk}} = \alpha\, \delta_k z_j$
  – Now: $\frac{\partial E}{\partial v_{IJ}} = -\left( \sum_k \delta_k w_{Jk} \right) f'(z\_in_J)\, x_I$

Backpropagation neural networks (continued)
• Applications:
  – Time-series analysis and prediction
    • Problem statement (simplest case):
      – The future value of a signal depends on the previous values of the same signal, i.e.
        $y(t) = g\big(y(t-1), y(t-2), \dots, y(t-p)\big)$
    • Objective: to use a neural net to estimate the function $g$
    • Procedure:
      – Form a number of training points as:
        $\big(y(i-1), y(i-2), \dots, y(i-p)\big) \to y(i)$, for $i = p, p+1, \dots, n+p-1$
      – Train a backpropagation net to learn the input-output relation
    • Advanced cases:
      $y(t) = g\big(y(t-1), y(t-2), \dots, y(t-p), x(t-1), x(t-2), \dots, x(t-r)\big)$
      – Procedure is similar to the simple case

Backpropagation neural networks (continued)
• Feedforward neural time series models are used in many fields, including:
  – Stock market prediction
  – Weather prediction
  – Control
  – System identification
  – Signal and image processing
  – Signal and image compression
• Classification:
  – When a hard limiter is added to the output neurons (only during classification, not during the training phase), a backpropagation network can be used to classify complex data sets

Radial Basis Function networks (RBFNs)
• Architecture:
  – Input vector: $x = (x_1, x_2, \dots, x_n)$
  – Weights of the input layer are all "1"
  – Output: $y = \sum_{i=1}^{m} \lambda_i \phi_i(x)$
  – Based on the exact definition of the radial basis functions $\phi_i(\cdot)$, many different families of RBFNs are defined
    [Figure: inputs $x_1, \dots, x_n$ connected with unit weights to basis functions $\phi_1(x), \dots, \phi_m(x)$, whose weighted sum is the output $y$]
• Main property of all "radial" basis functions: being "radial"
  – Example: $\phi(x) = \frac{1}{\|x - x_C\|^2}$ is a radial function because the value of $\phi$ is the same for all points at equal distance from point $C$.

RBFNs (continued)
• Basis functions
  – Gaussian basis functions: $\phi_i(x) = \exp\left( -\frac{\|x - x_{C_i}\|^2}{\sigma_i^2} \right)$
    • Coordinates of center: $x_{C_i}$
    • Width parameter: $\sigma_i$
  – Reciprocal Multi-Quadratic (RMQ) functions: $\phi_i(x) = \frac{1}{\sqrt{1 + b_i \|x - x_{C_i}\|^2}}$
    • Width parameter: $b_i$
• Training: Least Mean Square techniques

RBFNs (continued)
• Example:
  – Trying to approximate a non-linear function using RMQ-RBFNs
  – Function to be estimated: $g(x) = \sin(x)\, \ln(1 + |x|)$
  – 135 training points
  – 19 basis functions, uniformly centered between -10 and 10
  – All basis functions have $b = 0.5$
  – Training method: batch
  – Estimation is rather successful
    [Figure: "RBFNs (batch method)": actual (red) and estimated (blue) output plotted against input from -10 to 10]

Associative Memories
• Concept:
  – Object or pattern A (input) reminds the network of object or pattern B (output)
• Heteroassociative vs. autoassociative memories
  – If A and B are different, the system is called a heteroassociative net
    • Example: you see a large lake (A) and that reminds you of the Pacific Ocean (B) you visited last year
  – If A and B are the same, the system is called an autoassociative net
    • Example: you see the Pacific Ocean for the second time (A) and that reminds you of the Pacific Ocean (B) you visited last year

Associative Memories (continued)
• Recognizing new or incomplete patterns
  – Recognizing patterns that are similar to one of the patterns stored in memory (generalization)
    • Example: recognizing a football player you haven't seen before from his clothes
  – Recognizing incomplete or noisy patterns whose complete (correct) forms were previously stored in memory
    • Example: recognizing somebody's face from a picture that is partially torn
• Unidirectional vs.
  bidirectional memories
  – Unidirectional: A reminds you of B
  – Bidirectional: A reminds you of B and B reminds you of A
• Many biological neural nets are associative memories

Hopfield Network
• Concept:
  – A more advanced type of autoassociative memory
  – Is almost fully connected
• Architecture
  – Symmetric weights: $w_{ij} = w_{ji}$
  – No feedback from a cell to itself: $w_{ii} = 0$
  – Notice the "feedback" in the network structure

Bidirectional Associative Memories (BAM)
• Concept:
  – Bidirectional memory: pattern A reminds you of pattern B and pattern B reminds you of pattern A
  – Is almost fully connected
• Architecture
  – Symmetric weights: $w_{ij} = w_{ji}$
  – No feedback from a cell to itself: $w_{ii} = 0$
  – Notice the "feedback" in the network structure
    [Figure: layer $X_1, \dots, X_i, \dots, X_n$ connected to layer $Y_1, \dots, Y_j, \dots, Y_m$ through weights $w_{11}, \dots, w_{ij}, \dots, w_{nm}$]

Other Applications of NNs
• Control
  – Structure: Desired behavior → Neurocontroller → Control decision → System → Actual behavior
  – Example: Robotic manipulation

Applications of NNs (continued)
• Finance and Marketing
  – Stock market prediction
  – Fraud detection
  – Loan approval
  – Product bundling
  – Strategic planning
• Signal and image processing
  – Signal prediction (e.g.
    weather prediction)
  – Adaptive noise cancellation
  – Satellite image analysis
  – Multimedia processing

Applications of NNs (continued)
• Bioinformatics
  – Functional classification of proteins
  – Functional classification of genes
  – Clustering of genes based on their expression (using DNA microarray data)
• Astronomy
  – Classification of objects (into stars, galaxies, and so on)
  – Compression of astronomical data
• Function estimation

Applications of NNs (continued)
• Biomedical engineering
  – Modeling and control of complex biological systems (e.g. modeling of the human respiratory system)
  – Automated drug delivery
  – Biomedical image processing and diagnostics
  – Treatment planning
• Clustering, classification, and recognition
  – Handwriting recognition
  – Speech recognition
  – Face and gesture recognition
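
Function estimation, listed among the applications above, is also what the RBFN example earlier in these slides does: 135 training points, 19 RMQ basis functions centered uniformly between -10 and 10, b = 0.5, batch training. The following is a minimal sketch of that setup, not the author's code. It rests on several assumptions: the target is taken to be g(x) = sin(x) ln(1 + |x|), the RMQ basis is taken to be 1 / sqrt(1 + b (x - c)^2), the training inputs are evenly spaced, and "batch" training is read as a single linear least-squares solve for the output weights. All function names are hypothetical.

```python
import numpy as np


def target(x):
    """Assumed test function: g(x) = sin(x) * ln(1 + |x|)."""
    return np.sin(x) * np.log1p(np.abs(x))


def rmq_design_matrix(x, centers, b):
    """Evaluate every RMQ basis function at every input.

    Assumed RMQ form: phi_i(x) = 1 / sqrt(1 + b * (x - c_i)^2).
    Returns an array of shape (len(x), len(centers)).
    """
    diff = x[:, None] - centers[None, :]
    return 1.0 / np.sqrt(1.0 + b * diff ** 2)


def fit_output_weights(x_train, y_train, centers, b):
    """Batch training: one linear least-squares solve for the output weights."""
    phi = rmq_design_matrix(x_train, centers, b)
    weights, *_ = np.linalg.lstsq(phi, y_train, rcond=None)
    return weights


def predict(x, centers, b, weights):
    """Network output y = sum_i weights[i] * phi_i(x)."""
    return rmq_design_matrix(x, centers, b) @ weights


# Settings taken from the example slide; even spacing of the training
# inputs is an assumption.
x_train = np.linspace(-10.0, 10.0, 135)  # 135 training points
centers = np.linspace(-10.0, 10.0, 19)   # 19 uniformly spaced centers
b = 0.5                                  # width parameter shared by all bases

y_train = target(x_train)
weights = fit_output_weights(x_train, y_train, centers, b)
y_hat = predict(x_train, centers, b, weights)

# Root-mean-square error of the fit on the training points.
rmse = float(np.sqrt(np.mean((y_hat - y_train) ** 2)))
print(f"training RMSE: {rmse:.4f}")
```

Only the output weights are trained here; the centers and the width stay fixed, which is what makes the fit a linear problem. Evaluating `predict` on a denser grid than `x_train` gives a rough check of how the estimate behaves between training points.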