Handling Surveys and Interviews in NVivo

REVIEWING SURVEYS, INTERVIEWS, AND FOCUS GROUPS

A Post-Event Review of “Handling Surveys and Interviews in NVivo 10”

Jan. 30, 2015

WHY THE POST-EVENT REVIEW…?

Survey and interview data are extracted from particular research designs and approaches…from particular contexts.

The way questions are asked affects the data and the structure of that data

The research approach affects the way coding of that data should be done

The research approach affects what is knowable

MAIN CONTENTS

Research methods

Generic information about…:

Surveys

Interviews

Focus Groups

NVivo 10 demos

Disclaimer: This slideshow is only a first rough-cut to set the stage. For most, this is a refresher. The main message is that various types of research procedures result in different types of information that may be analyzed in particular accepted ways, with limited particular assertions possible. The assertability of the data comes from theory and method, domain practices, and other elements, and not the data analytic tool (NVivo) per se.

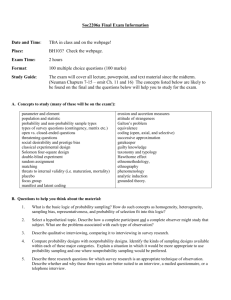

(GENERIC) RESEARCH DESIGN

Conceptualization: Research Objectives, Research Questions, Hypotheses

Review of the Literature (annotation and write-up)

Research Design (methodology)

Instrumentation (design and pilot-testing)

Sampling (selection of respondents)

Research

Data Collection (sometimes multi-method, mixed method; form of data affects analysis)

Follow-up (if needed)

Data Visualization

Data Analysis (quantitative and qualitative methods)

Discussion

Reporting Out

Future Research

SURVEYS

BASIC PURPOSES OF SURVEYS

Collect data about people’s experiences, situations, attitudes, beliefs, opinions, and other factors at a particular point-of-time, or over time

Complement various other types of research, including experimental research (random sampling, control group vs. experimental group)

May be used at any time in the research process for varying purposes

Identify trends over time for particular populations

Usually involve both qualitative and quantitative (mixed-methods) data analysis

THE SURVEY INSTRUMENT

Is designed for particular purposes

Is written in an understandable way (“standard language”); if in a foreign language, achieved by a professional translator or native speaker (not machine-translation, which is still pretty wretched)

Uses close-ended questions appropriately with a full range of choices (no false limits)

If scaled responses, proper scaling (like Likert-like scales and consistency of order; or forced-choice 4-point Likert scales with no fence-sitting neutrality); if scaled responses, proper consistency in terms of direction (highest-to-lowest for all questions; or lowest-to-highest for all questions)

Uses open-ended questions appropriately, with sufficient direction and space for a full textual response

Is accessible for all those with a range of special and other needs (transcriptions and timed text for videos, alt text for images, etc.)

THE SURVEY INSTRUMENT

(CONT.)

Aligns the questions with the appropriate data types [categorical, ordinal (rank order), numerical (discrete, continuous), text-based / audio-based / video-based, and others]

Includes informed consent at the beginning; enables opt-out at any time; no collection of excess information; no deception (unless approved by the Institutional Review Board / IRB)

Is informed by the research literature (explored to “saturation”)

Is strategically sequenced

Avoids forcing responses because of the participant opt-out issue per IRB guidelines (debatable)

THE SURVEY INSTRUMENT

(CONT.)

Avoids any biasing design or leading language

Is pilot-tested with both experts and with people who are similar to respondents, with changes made to ensure language clarity; comprehensiveness of the survey; clear transitions; accessibility; and corrections of all known errors (and continuing testing until no other errors are found)

Is tested for reliability (that it is dependable and consistent)

Is tested for validity (that it measures what it purports to measure)

Aligns with domain’s professional research standards and expectations

May be versioned for different groups, or may be branched for certain groups

Must maintain comparability if studied for trend data for longitudinal research

SURVEY RELIABILITY

Achieving the same results every time the instrument is used, such as through test-retest reliability with the same person or group (over time)

Reliability across different instruments or “equivalence reliability”

Internal consistency of measure [Cronbach’s alpha α / coefficient alpha; the complementarity of questions in relation to each other in measuring one dimension or a single construct (unidimensional); inter-correlations among the test items; not robust under conditions of missing data; variables and the degree to which they measure the same thing in an inter-item correlation way as expressed in a matrix and comparisons done by removing variables to see what changes occur in the measuring of the construct; a latent construct may affect the alpha; α < 1 ]
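
As a rough illustration of the internal-consistency measure above, here is a minimal sketch of coefficient alpha using the usual variance-based formula, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores). The function names and the response data are hypothetical, and complete (non-missing) data is assumed, since alpha is not robust to missing values.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """item_scores: one list per respondent, each holding k item scores."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(item_scores):
    """Recompute alpha with each item removed in turn (needs k >= 3)."""
    k = len(item_scores[0])
    return [
        cronbach_alpha([row[:i] + row[i + 1:] for row in item_scores])
        for i in range(k)
    ]


# Hypothetical responses: 5 respondents x 3 items on a 1-5 scale.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]
alpha = cronbach_alpha(responses)
dropped = alpha_if_item_deleted(responses)
```

Comparing `alpha` with the values in `dropped` mirrors the "removing variables to see what changes occur" step: an item whose removal raises alpha may not be measuring the same construct as the others.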

SURVEY VALIDITY

Accurate measurement of what it was designed to measure

Different types of validity:

Predictive validity

Concurrent validity (against an accepted measure)

Content validity (reasonable sample of related information and proper terms for what the survey wants to sample) (Fink, 2013, p. 67)

Construct validity (using the instrument on respondents who’ve been established by experts to rate a particular way on a particular scale on a particular construct to see if the target survey comes up with the same results)

THE CREDIBILITY OF SURVEY FINDINGS

“Reliability” and “validity” are developed to support the “measurement validity” and ultimately the credibility of survey findings

Also need to control for “error,” which comes from many sources:

Representative sampling, eligibility criteria of those taking the survey, low response rates, attrition of participants (particularly in longitudinal research)

Researcher effects: cognitive biases, incentives, weaknesses

Research design

Instrument design

Administration

Follow-on survey without sufficient passage of time (and the effect of the first survey’s results on the latter)

Exclusion / inclusion of outlier data points

Insufficient analysis and refinement

THE TIME FACTOR

Cross-sectional or slice-in-time surveying

Multiple-sequential surveying

Longitudinal (or periodic over-time surveying)

SAMPLING OF SURVEY RESPONDENTS

Random (and sufficient) sampling: the “gold standard” for generalizing to a population

Stratified random sampling to select members of particular groups as respondents

Simple random cluster sampling (convenience sampling, assumption of pre-defined clusters in the population)

Convenience sampling (like snowball sampling); non-random; gold standard as “representative” sampling for qualitative research

Systematic sampling (like every 5th person…; may have hidden, if unintentional, biases, with a common example being A-Z sampling, which draws fewer individuals with names in the W-Z range)
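
The random, stratified random, and systematic approaches above might be sketched as follows. This is an illustration only: the function names, the `stratum_of` callback, and the numbered population are hypothetical, and a real sampling frame would come from the study's eligibility criteria.

```python
import random


def simple_random_sample(population, n, seed=None):
    """Draw n members without replacement, each equally likely."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_random_sample(population, stratum_of, n_per_stratum, seed=None):
    """Group members by stratum, then sample randomly within each group."""
    rng = random.Random(seed)
    strata = {}
    for member in population:
        strata.setdefault(stratum_of(member), []).append(member)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, min(n_per_stratum, len(members))))
    return sample


def systematic_sample(population, step, seed=None):
    """Take every step-th member after a random starting offset."""
    rng = random.Random(seed)
    start = rng.randrange(step)
    return population[start::step]


# Hypothetical sampling frame of 100 numbered respondents.
frame = list(range(100))
srs = simple_random_sample(frame, 10, seed=1)
strat = stratified_random_sample(frame, lambda x: x % 2, 3, seed=1)
every_fifth = systematic_sample(frame, 5, seed=1)
```

Note that `systematic_sample` inherits whatever ordering the frame has, which is where the hidden biases mentioned above can creep in.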

SAMPLING OF SURVEY RESPONDENTS

(CONT.)

Open-call sampling with an online survey

Bias in terms of those who self-select or opt-in, have techno access and techno savvy

Potential difficulty in verifying identity

Potential broader geographic reach

Case control: case group (“extant” condition) and control group (absence of “extant” condition) for comparison and contrast and potential generalizing

ONLINE SURVEYS

Researcher needs to know and deploy the technology well

Must protect the data well to meet all legal guidelines (going with a trusted survey company)

Must protect participant privacy and confidentiality

Must de-identify data / anonymize before data ingestion into an analysis tool (or dataset sharing through repositories or “reproducible research” articles)

Must offer opt-out function at any time (for IRB standards)

Must anticipate potential harm and mitigate

Online survey must be fully comprehensible without survey taker intervention (designed to head off potential misinterpretation with additional opt-in data as needed)

Data usually a mix of quantitative and qualitative data

May be exported as .csv, .docx, .pdf, and other file types

May be partially exported in pre-made tables, charts, and graphs

DATA FORMS

.xlsx data tables (for quant data)

.csv text files, .doc and .docx text files

Some pre-extracted bar charts from the online survey systems

INTERVIEWS

BASIC PURPOSES OF INTERVIEWS

Targeted elicitation of information

Achieving specific insights

Applied for various practical purposes: awareness, research, decision-making, vetting, historical understandings, and others

TYPES OF INTERVIEWS

Structured, semi-structured, or unstructured (pre-written and non-changing…to play-it-by-ear)

Formal or informal

Individual or group

On-the-record, off-the-record (information usable but not publicly quotable or attributable back to the source)

May be conducted in the field; may be in a more controlled setting; may be conducted online

DATA FORMS

Videos, audios, and derived transcripts

Interviewee-created materials

Researcher notes, memos, and other recorded materials

…and others…

FOCUS GROUPS

BASIC PURPOSES OF FOCUS GROUPS

The elicitation of information from a homogeneous group (homogeneous based on a relevant dimension) targeted because of their access to particular types of information in a session or multiple sessions conducted by a non-directive interviewer (who uses sequenced questions, activities, and nuanced elicitation to acquire responses beyond intellectualized ones, such as emotions and unconscious behaviors)

Used for community outreach, brand testing, product testing, problem-solving

SEATING THE FOCUS GROUP

Usually 8 – 20 people, with preference towards fewer for manageability and coherence

Homogeneous group based on a particular dimension (such as access to experiences and / or categorical biographical features); convenience sampling; quasi-experimental

Focus on comfort level of members in speaking and sharing

May divide focus groups into different sexes, for full encouragement of information sharing

Seat individuals who will likely not see each other again

Avoid power differentials in the group (because of reactivity to power)

If cross-cultural context, many efforts for cultural sensitivity with information from informants and native speakers

May have to “over-sample” for wider geographic reach (Krueger & Casey, 2013, p. 27)

COMPLEX RESEARCHER ROLE

Researcher: “moderator, listener, observer, analyst” (Krueger & Casey, 2013, p. 7)

Encourages all to speak early on, so some do not lapse into silence for the session (Krueger & Casey, 2013, p. 39)

Does not share his or her opinion or any biasing expressions or body language

Must be able to handle high affect or emotions (if the research calls for stressors)

Must handle dominant personalities

Must encourage variant opinions

Must be comfortable with serendipity and interaction effects among the participants

Should “reality check” understandings with participants during the session (Krueger & Casey, 2013, p. 189)

INFORMATION ELICITATION METHODS

Must design test questions and elicitations (like prompts or activities) that result in usable data

Must generally include a catch-all question at the end to make sure nothing was missed

Must design a “questioning route” or sequence that makes sense to participants

Should ask questions answerable by the particular group

Should run positive questions first and negative ones later

Should run simpler questions first and more difficult ones later

Must design activities that get at more elusive information (such as emotions or unconscious ideas)

Should pilot-test draft questions and dry-run processes (and pacing) with individuals most similar to those who would likely take part in the actual focus groups

DATA COLLECTION METHODS

Face-to-Face (F2F)

Video-recording (may be unobtrusive and through one-way mirrors), audio recording, human coders, participant writing

May be captured by computers if used in the session

Researcher observations (and journaling or memo-ing)

Online

Synchronous and live (facilitated); asynchronous (may be facilitated or non-facilitated)

Web session recording

Transcription (full or abridged)

DATA ISSUES

“Verbal exchange coding” may be one approach to coding the data (Saldaña, 2013, pp. 136 – 141)

Researchers cannot assert beyond where the information will go.

They…

cannot generalize from the findings.

cannot stereotype participants to “stand in” for others of the same racial background, ethnic background, demographic group, social class, etc.

should describe the research in sufficient detail so that it is theoretically and practically replicable.

should avoid any potential mis-use of the results or findings.

should use the research to benefit participants.

SOME LIMITS

Difficulty in finding individuals who fit a particular requirement (characteristic or experience)

Quasi-experimental study does not result in generalizable results

Challenges in setting up incentive structures to encourage participation

Need to protect participant privacy

Must run focus groups as many times as needed to get to the desired data (to the point of “saturation,” p. 21)

Costs of hosting focus groups

If working with community partners, need to ensure that they also follow IRB guidelines and other policies and laws at K-State

DATA FORMS

Transcripts

Videos

Audio files

Notes

Participant-created artifacts

Participant performance

THE DATA AND NVIVO 10

PARAMETRIC DATA

Assumption of a Gaussian “bell curve” as the underlying data structure

Need for statistical significance

Ability to reject the null hypothesis

Univariate data: descriptive statistical measures (of a population based on findings from a random or stratified random sample), such as arithmetic mean (central tendency), median, standard deviation (statistical dispersion), variance, min-max (range), confidence interval (for single or repeated sampling), and others

Inferential or inductive statistics
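
The univariate measures listed above can be sketched with Python's standard `statistics` module. The `describe` function and the ratings are hypothetical, and the confidence interval here uses a normal (z) approximation as a simplifying assumption; small samples would normally call for a t critical value instead.

```python
from statistics import NormalDist, mean, median, stdev, variance


def describe(sample, confidence=0.95):
    """Summarize one numeric variable (univariate data)."""
    n = len(sample)
    m = mean(sample)
    s = stdev(sample)  # sample standard deviation (n - 1 denominator)
    # Normal-approximation interval for the mean (assumption: z, not t).
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * s / n ** 0.5
    return {
        "n": n,
        "mean": m,
        "median": median(sample),
        "stdev": s,
        "variance": variance(sample),
        "min": min(sample),
        "max": max(sample),
        "ci_low": m - half_width,
        "ci_high": m + half_width,
    }


ratings = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical scaled responses
summary = describe(ratings)
```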

PARAMETRIC DATA

(CONT.)

Bivariate data: If conducting a linear regression to compare an independent variable (IV) and a potential association with a dependent variable (DV), you’ll need paired data with the IV on the x-axis and the DV on the y-axis, the paired data plotted, and a line drawn based on the least-squares method…and a study of the “scatter” of the plotted data, whether a line exists, whether a line is linear or curvilinear, whether the line slope is positive or negative, and so on

Pearson product-moment correlation (Pearson’s r): analysis of associations between two variables (based on SDs from the expected mean); co-variance of two variables divided by the product of their standard deviations; measures linear dependence between two variables; “the standardizing of covariance between -1 and 1”
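
A minimal sketch of the two bivariate calculations above: the least-squares line and Pearson's r (covariance divided by the product of the standard deviations, written here with the shared n - 1 terms cancelled). The function names and the paired data are hypothetical.

```python
from statistics import mean


def least_squares_line(x, y):
    """Return (slope, intercept) of the least-squares line of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(x, y):
    """Pearson product-moment correlation, bounded between -1 and 1."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical paired data: IV on x, DV on y.
iv = [1, 2, 3, 4]
dv = [3, 5, 7, 9]
slope, intercept = least_squares_line(iv, dv)
r = pearson_r(iv, dv)
```

The sign of `slope` gives the direction of the line; `r` only measures linear dependence, so a curvilinear pattern in the scatter can produce a weak `r` even when the variables are strongly related.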

PARAMETRIC DATA

(CONT.)

Multivariate data: If conducting a factor analysis (from survey data), reduce the number of factors with a principal components analysis (a form of data reduction) and identify potential multi-collinearity

ANOVAs (analyses of variance)

MANOVAs (multivariate analyses of variance), and others
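
For the ANOVA item above, a one-way analysis of variance compares between-group variation to within-group variation. The sketch below computes only the F statistic and its degrees of freedom; turning F into a p-value needs an F distribution table or a statistics package, which is left out here. The function name and group data are hypothetical.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([v for g in groups for v in g])
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores from three respondent groups.
groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f_stat, df_b, df_w = one_way_anova_f(groups)
```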

NON-PARAMETRIC DATA

Assumption of discrete data range and expected average as the underlying data structure

Ability to assert an observable effect beyond chance

Chi-square test (table) and the calculation of the expected values if there is no effect

(or the likelihood of the categorical data spread based on chance alone, or the probability based on just

If the sample size is too small for a t-test, the Mann-Whitney U test (Wilcoxon rank-sum test), with data compared against what one would find based on alternative and competing hypotheses
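
The two tests on this slide can be sketched as follows: the chi-square calculation of the values expected if there is no effect, and the Mann-Whitney U statistic. Both functions stop at the test statistic; looking up significance requires the relevant tables or a statistics package. Function names and counts are hypothetical.

```python
def chi_square(observed):
    """observed: contingency table as a list of rows of counts.

    Returns (expected table, chi-square statistic, degrees of freedom).
    """
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand = sum(row_totals)
    # Counts expected by chance alone if rows and columns are independent.
    expected = [[rt * ct / grand for ct in col_totals] for rt in row_totals]
    stat = sum(
        (o - e) ** 2 / e
        for o_row, e_row in zip(observed, expected)
        for o, e in zip(o_row, e_row)
    )
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return expected, stat, dof


def mann_whitney_u(a, b):
    """Return the smaller U for two independent samples (ties count 0.5)."""
    u_a = sum(
        1.0 if x > y else 0.5 if x == y else 0.0
        for x in a
        for y in b
    )
    u_b = len(a) * len(b) - u_a
    return min(u_a, u_b)


# Hypothetical 2 x 2 table: group (rows) by yes/no answer (columns).
table = [[10, 20], [20, 10]]
expected, chi2, dof = chi_square(table)
u = mann_whitney_u([1, 2, 3], [4, 5, 6])
```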

NON-PARAMETRIC DATA

(CONT.)

Spearman’s rho (rank order correlation) for categorical data that is set up on an ordinal (rank) scale (two sets of non-parametric ranks)

Pearson’s r “bootstrap” for qualitative data:

“Non-parametric statistics.”
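
A sketch of the two ideas on this slide, under one reading of it: Spearman's rho is Pearson's r computed on ranks (tied values share an average rank), and a bootstrap resamples the paired data with replacement to see how much a correlation estimate varies. The percentile interval used here is an assumption, one of several bootstrap variants, and all names and data are hypothetical.

```python
import random
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson_r(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x, y):
    """Rank-order correlation: Pearson's r applied to the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


def bootstrap_ci(x, y, stat, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap interval for a paired statistic."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            estimates.append(stat([x[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # resample had no variation; skip it
    estimates.sort()
    low = estimates[int((1 - confidence) / 2 * len(estimates))]
    high = estimates[int((1 + confidence) / 2 * len(estimates)) - 1]
    return low, high


# Hypothetical ordinal ratings from the same respondents on two questions.
q1 = [1, 2, 2, 3, 4, 4, 5, 5]
q2 = [1, 1, 2, 3, 3, 5, 4, 5]
rho = spearman_rho(q1, q2)
low, high = bootstrap_ci(q1, q2, spearman_rho)
```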

CONTENT ANALYSIS (FOR TEXTUAL DATA)

Extant themes*

Use of language (“in vivo” coding by using the language of the respondents for codes)

Direction and strength of sentiment; “emotion coding”; attitudes and beliefs

Causal attribution analysis*

Comparisons across categories of cases / individuals (for theoretical generalizability)

Quotable insights (to use in the “reporting out” phase)*

* Common “generic” elements that are extracted

CONTENT ANALYSIS (FOR TEXTUAL DATA)

(CONT.)

Semantic analysis: strict inclusion; spatial; cause-effect; rationale; location for action; function; means-end; sequence; attribution (Spradley, 1979, as cited in Miles, Huberman, & Saldaña, 2014, p. 179)

Display-based analyses: matrix analyses, network analyses

FIRST AND SECOND CYCLE CODING

First Cycle (Initial)

(aka the coding nodes)

Descriptive

In vivo coding

Process coding

Emotion coding

Values coding

Evaluation coding

Dramaturgical coding

Holistic coding

Second Cycle (Follow-on)

(aka “Pattern Codes,” the organization of the coded nodes)

Categories or themes

Causes / explanations

Relationships among people

Theoretical constructs (Miles, Huberman, & Saldaña, 2014, p. 87)

FIRST AND SECOND CYCLE CODING

(CONT.)

First Cycle (Initial)

Provisional coding

Hypothesis coding

Protocol coding

Causation coding

Attribute coding

Magnitude coding

Subcoding

Simultaneous coding (Miles, Huberman, & Saldaña, 2014, pp. 74 – 81)

Second Cycle (Follow-on)

REPORTING OUT: PROPER DATA REPRESENTATION

Correct data representations (text, visualizations, videos, and interactive pieces)

Clear labels on all visualizations

Explanatory legends

Lead-up and lead-away texts explaining the data visualizations (efforts to head off negative learning or misunderstandings from the data; controlling for false inferences)

“Triangulation” of data from various sources

Considering competing explanations for the observed data

Proper qualifications of the data

Higher standards for assertions of predictivity

Acknowledged point-of-view (POV) of the researcher

“SURVEYS AND INTERVIEWS IN NVIVO”

DEMOS

DEMO: PRE-INGESTION INTO NVIVO

Transcription of audio and video files

Machine-based transcription with human review and double-check; manual coding with review and double-check

Keeping original voice, verbiage, syntax, and grammatical mistakes (verbatim); acknowledging incoherence of the audio or video (full or abridged transcription)

Multilingual challenges

Annotation of imagery

Cleaning up of memos, notes, and research journal entries

Consistent file naming protocols across all files

Within-file annotations and labeling

DEMO: PRE-INGESTION INTO NVIVO

(CONT.)

Data cleaning

Aligning units of measure, units of time, and any other relevant units

Data disambiguation

Content moving for different file types (.docx, .xl, .csv, and others)

Deletion of unreconcilable information

Data structuring / semi-structuring

Early matrix or table or categorization work

Early ideas for coding (based on theory)

Data log

Planning also for potential data ingestion into other data-analytic software tools and applications in addition to NVivo

DEMO: PRE-INGESTION INTO NVIVO

(CONT.)

De-identifying data and then ingesting the data into NVivo (for participant privacy protections)

Not before the identified data has been fully exploited (not “lossiness” with usable data)

Not in a re-identifiable (reverse-engineering from summary data) way

Separate file for “encrypting” of coded identities (not in the .nvp file), so the researcher can re-identify the data if needed

Erasure of metadata riding on digital files: videos, images, Word files, and others

Data management

Access only to those who should have access (“principle of least privilege”)

Proper data storage for the length of promised time (three years?)

Offline storage and encryption (very slow) if highly sensitive

Proper handling if published as part of “reproducible research” or released / published datasets
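
The de-identification step above, with coded identities kept in a separate file, might look like the following sketch. It happens outside NVivo, before ingestion. The function and field names are hypothetical; the returned key would need to be stored apart from the project file and protected, and free-text answers may still contain identifying details that this does not catch.

```python
import secrets


def pseudonymize(rows, id_field, code_field="participant_code"):
    """Replace an identifying field with a random code.

    Returns (de-identified rows, key mapping identity -> code).
    Keep the key in a separate, protected file, not with the data.
    """
    key = {}
    used = set()
    cleaned = []
    for row in rows:
        identity = row[id_field]
        if identity not in key:
            code = "P" + secrets.token_hex(4)
            while code in used:  # regenerate on the rare collision
                code = "P" + secrets.token_hex(4)
            used.add(code)
            key[identity] = code
        new_row = {k: v for k, v in row.items() if k != id_field}
        new_row[code_field] = key[identity]
        cleaned.append(new_row)
    return cleaned, key


# Hypothetical survey rows; the same person answered twice.
rows = [
    {"name": "Respondent A", "answer": "x"},
    {"name": "Respondent B", "answer": "y"},
    {"name": "Respondent A", "answer": "z"},
]
cleaned, key = pseudonymize(rows, "name")
```

Random codes are used rather than hashes of the names so that the codes cannot be reversed by guessing likely names; re-identification is possible only with the key.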

DEMO: INGESTION INTO NVIVO

(CONT.)

In NVivo

One comprehensive project or many smaller (related) ones

Coherent folder structures

Setup for group coding (if applicable)

Autocoding by text style (tagging)

Creating question nodes for summary-by-questions and then manual copying of contents into the respective nodes

Creating respondent nodes for matrix coding queries

Manual coding

Creating demographic groupings through Person Node Classifications (and pre-set and / or customized descriptive attributes) for cross-group comparisons

DEMO: DATA QUERIES AND PROCESSING IN NVIVO

(CONT.)

Matrix coding

Based on nodes

Based on Person Node Classifications

Data queries

Text frequency counts and word clustering (and related data visualizations)

Text searches and related word trees (and related data visualizations)

… and others …
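
To make the idea of a text frequency count concrete, here is a minimal sketch of counting words in open-ended responses. It is not NVivo's implementation; the stop-word list, the minimum word length, and the sample text are all assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately short stop-word list.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that", "was"}


def word_frequencies(text, min_length=3, stop_words=STOP_WORDS):
    """Count lower-cased words, skipping short words and stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(
        w for w in words if len(w) >= min_length and w not in stop_words
    )


response = "The survey was long. The survey questions were clear."
counts = word_frequencies(response)
top = counts.most_common(3)
```

Counts like these are the raw material for word clouds and similar visualizations; the choice of stop words and minimum length changes what rises to the top, so those settings should be reported with the results.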

DEMO: OUTPUTS FROM NVIVO

(CONT.)

Data visualizations

Word clouds, graphs, and cluster diagrams (2D, 3D) (.jpg, .jpeg; .bmp, .gif, and .pdf)

Data tables (.xlsx)

Data matrices (.xlsx)

Formatted reports (.nvr as a transferable format between NVivo projects; .txt, .docx, .rtf, .pdf, .xlsx, .xls, and .htm / .html)

…and easy transcoding from Excel workbook or worksheet to .csv, .xml, .txt, .pdf, and others; visual formats to .png, .tif, and others

REFERENCES

Fink, A. (2013). How to Conduct Surveys: A Step-by-Step Guide. Los Angeles: SAGE Publications.

Krueger, R.A. & Casey, M.A. (2013). Focus Groups: A Practical Guide for Applied Research. (4th ed.) Los Angeles: SAGE Publications.

Miles, M.B., Huberman, A.M., & Saldaña, J. (2014). Qualitative Data Analysis: A Methods Sourcebook. (3rd ed.) Los Angeles: SAGE Publications.

Saldaña, J. (2013). The Coding Manual for Qualitative Researchers. (2nd ed.) London: SAGE Publications.

Note: These texts will be available for perusal after the presentation.

CONCLUSION AND CONTACT INFO

Dr. Shalin Hai-Jew

Instructional Designer, iTAC

K-State

212 Hale Library

785-532-5262 shalin@k-state.edu

(Note: Thanks to Alice Anderson for patiently critiquing an early version of this. A few changes were made, and I decided to make this a post-presentation handout instead of a pre-event refresher.)

Resource

Using NVivo: An Unofficial and Unauthorized Primer
