Illuminative evaluation

advertisement

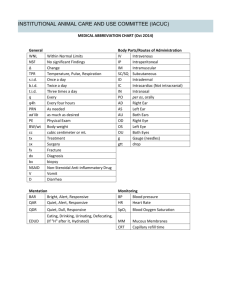

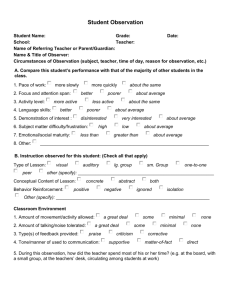

GALA 14th Conference, 14-16 Dec. 2007, Thessaloniki

A responsive/illuminative approach to evaluation of innovatory foreign language programs

Dr Angeliki Deligianni
EFL State School Advisor, Thessaloniki
HOU Tutor
Former Education Attaché, Embassy of Greece, London
Email: ade@gecon.gr

Evaluating a Learning Support Program (LSP) in English as a foreign language (EFL)

LSP in EFL is a component of the Educational Reform 2525/97, funded by the EU. It was intended to provide for students with knowledge gaps. In implementing this reform, the Greek Ministry of Education responded to the demand for lifelong learning and the autonomous learner, as guided by the EU. Because LSP is a component of this reform, it focuses on the development of autonomous learning. Therefore, in evaluating this program I aimed to investigate the extent to which features of autonomous learning were fostered. The conceptual and procedural framework that I constructed was grounded in recent developments in educational evaluation. It was hoped that this would serve as an instrument for evaluating innovatory language programs and that it would contribute to the developing field of educational evaluation in Greece.

Absence of any evaluation practices in education in Greece and opposition to evaluation

Since 1980 there has been an absence of any kind of evaluation practice in the Greek educational system, with the exception of the regular assessment of students. There has been a great deal of opposition from teachers and teacher unions every time a political decision for any type of evaluation was announced. The responsive/illuminative approach followed in this study provides evidence that this kind of participatory evaluation model, within the context of formative evaluation, can be seen as a means of achieving improvement rather than of numerically assessing the performance of those involved. In this model the evaluand shares the same degree of responsibility as the evaluator.
This is achieved through the reflection and review stages, which foster self-evaluation. It is expected that a sense of “ownership”, a term coined by Kennedy (1988), of the program/innovation could be developed in the stakeholders and unjustified fears dispelled. It was also hoped that by developing and introducing this participatory model, teachers and unionists would become less opposed to evaluation in education.

The rationale of this evaluation study and the choice of the interpretive/naturalistic paradigm

The first attempts to evaluate the program sought quantitative data. School advisors in charge of the program were asked to collect and send back to the Ministry mainly quantitative data, such as the number of students attending, the number of students satisfied, the number of teaching hours, etc. Quantifications and statistical generalisations were then dispatched to EU funding centres to show that EU funds were wisely distributed. Holding a different view, I decided to design an instrument to explore perspectives and shared meanings and to develop insights into the particular situation of the LSP in EFL classroom. I decided that the interpretive paradigm would best suit my situation. Within this tradition, emphasis is placed on unravelling the individual’s point of view. I also adopted formative evaluation techniques, which are responsive to the needs of stakeholders and provide information that illuminates the claims, concerns and issues raised by stakeholding audiences.

Aims of the research study

• To determine the strengths and weaknesses of LSP in EFL
• To investigate factors influencing the effectiveness of LSP in EFL
• To produce suggestions for improvement of LSP in EFL

Responsive/illuminative approach

My choice of this duet is grounded in the principles of responsive-illuminative evaluation in the broader context of formative evaluation. It seeks to interpret information in order to facilitate the remedy of problematic areas.
It is also flexible in responding to a range of contextual constraints. This flexibility is assisted by two facts: a) it takes as its organisers the claims, concerns, and issues of the stakeholders, illuminating issues of importance to implementation and decision making as they emerge, and b) it takes place within the naturalistic or anthropological paradigm, using mainly qualitative methods.

Brief historical review of the literature on educational evaluation: the evolution of the field through its various stages up to the present

Tyler (1950) reshapes the measurement-oriented approach into an objectives-oriented approach. Tyler’s contribution to the field is considered to be of great importance. During the 1930s and 1940s Tyler separated measurement from evaluation, making it clear that the former is a tool serving the latter.

Cronbach (1963) calls for a shift from objectives to decisions as organisers of evaluation, foreshadowing formative evaluation. He argues that if evaluation is to be of maximum utility to course developers and innovation planners, it needs to focus on ways in which refinements and improvements can occur while the course is in the process of development.

Scriven (1967) distinguishes between formative and summative evaluation, between mere assessment of goal achievement and evaluation, and between intrinsic (process) evaluation and payoff (outcome) evaluation, and argues for the utility of comparative evaluation.

Stufflebeam (1968, 1988) also calls for decisions as organisers (CIPP model, popular after 1971). He proposes four decision types, which are serviced by the four evaluation stages in his model (Context, Input, Process, Product).

Scriven (1974) defines effects as the organiser of evaluation and revolutionises thinking about evaluation. He argues that evaluation should be goal-free and should evaluate actual effects against a profile of demonstrated needs in education, rather than against goals and decisions.
Responsive evaluation

Stake (1983) first uses the term responsive. He takes as organisers the concerns and issues of stakeholders. He emphasises the distinction between a pre-ordinate and a responsive approach. Many evaluation plans are pre-ordinate, emphasising statements of goals and using objective tests. In responsive evaluation the evaluator should first observe the program and only then determine what to look for. The claims, concerns and issues about the evaluand that arise in conversations with stakeholders (people and groups in and around the program) constitute the organisers of responsive evaluation. Guba and Lincoln (1981) provide useful definitions of these organisers:

• Claims: Assertions that a stakeholder may introduce that are favourable to the evaluand.
• Concerns: Assertions that a stakeholder may introduce that are unfavourable to the evaluand.
• Issues: States of affairs about which reasonable persons may disagree.

It follows that natural rather than formal communication is needed in order to address the above organisers in evaluation. In this sense Stake argues that responsive evaluation is an old alternative, as it is based on what people do naturally to evaluate things: they observe and react. He identifies three ways in which an evaluation can be responsive:

• If it orients more directly to program activities than to program intents
• If it responds to audience requirements for information
• If the different value perspectives of the people at hand are referred to in reporting the success and failure of the program.
Highlighting the recycling nature of this type of evaluation, which has no natural end point, Guba and Lincoln state that “responsive evaluation is truly a continuous and interactive process” (1981:27).

Illuminative evaluation

In responding to the need for an alternative approach to evaluation, Parlett and Hamilton (1988) advocated a new approach to educational evaluation which they termed “illuminative evaluation”. As its title suggests, the aim of this form of evaluation is to illuminate problems, issues and significant program features, particularly when an innovatory program in education is implemented. This model is concerned with description and interpretation, not measurement and prediction.

Illuminative evaluation: Change

The value I found in illuminative evaluation is the empowerment of all participants through interpretation of shared findings. This contributes to awareness of what is going on externally and to self-awareness of what is going on in the inner world of the participants, which can result in their own decision making and in acceptance of the need to change internally as individuals. This will ultimately bring about change in the educational environment. As personal change is pursued throughout all stages of the evaluation process, the illuminative approach has much in common with consulting. Yet, unlike consulting, illuminative evaluation does not aim to proffer prescriptions, recommendations, or judgments as such. Rather, it provides information and comment that can serve to promote discussion among those concerned with decisions concerning the system studied (Parlett, 1981:221). Put simply, this approach to evaluation aims to illuminate whatever might be hidden, thus revealing the real reasons for failure, and ultimately to serve decision-making for improvement.

Illuminative evaluation: The role of the evaluator
“The role of the illuminative evaluator joins a diverse group of specialists such as the psychiatrists, social anthropologists and historians and in each of these fields the research worker has to weigh and sift a complex array of human evidence and draw conclusions from it.” (Parlett & Hamilton, 1988:69)

By sharing his/her findings with the stakeholders, the illuminative evaluator facilitates the process of self-awareness of all the participants. Self-awareness is pursued through illuminative evaluation and, as in psychiatry and counselling, it is through this stage that an individual becomes willing to change and decides of his/her own free will to take remedial action (Parlett & Hamilton, 1988; Kennedy, 1988).

Illuminative evaluation: Major working assumptions (Parlett, 1981)

• A system cannot be understood if viewed in isolation from its wider contexts. Similarly, an innovation is not examined in isolation but in the school context of the “learning milieu”. The investigator needs to probe beyond the surface in order to obtain a broad picture. The “learning milieu”, a term coined by Parlett (1981), is defined as the social-psychological and material environment in which students and teachers work together. Its particular characteristics have a considerable impact on the implementation of any educational program.
• The individual biography of the settings being examined needs to be discovered.
• There is no one absolute and agreed-upon reality that has an objective truth. This implies that the investigator needs to consult widely from a position of “neutral outsider”.
• Attention to what is done in practice is crucial, since there can be no reliance on what people say.

Illuminative-responsive evaluation

The functional structure of both responsive and illuminative evaluation takes us to the consideration of formative versus summative evaluation.
“The aim of formative evaluation is refinement and improvement while summative evaluation aims to determine impact or outcomes” (Guba and Lincoln, 1981:49).

“formative evaluation does not simply evaluate the outcome of the program but on an ongoing evaluating process, from the very beginning, it seeks to form, improve, and direct the innovative program” (Williams & Burden, 1994:22).

“what is needed is a form of evaluation that will guide the project and help decision-making throughout the duration of the innovation. For this reason formative evaluation is often used where the very process of evaluation helps to shape the nature of the project itself and therefore increases the likelihood of its successful implementation” (Williams & Burden, 1994:22).

Figure 1. Illuminative/responsive evaluation of innovatory remedial program. Columns: Conceptual Framework; Procedural/Operational Framework.

Step 1: Preparing the ground

A. Teachers
• Raising awareness of the problematic situation
• Identifying training needs to cope with specific requirements
• Introducing them to “cause for concern” forms
• Positive attitude, positive self-image
• Interviews

B. Heads of schools, LSP teachers, LSP coordinators, parents
• Informing them about project guidelines and regulations
• Discussing claims, concerns, issues

C.
Students’ problem-solving framework
• Identification of students’ own problem
• Raising students’ metacognitive awareness
• Goal setting (assisted by teacher)
• Identification of appropriate tactics/strategies (assisted by teacher)
• Self-evaluation (assisted by teacher)
• Group discussions
• Investigating perceptions questionnaire (Parts A, B, C, D: perceptions towards EFL and themselves as EFL learners)
• Individual advisory session (or Language Advising Interview) of students with evaluator (monitored, supported and assisted by teachers)

Figure 2. Illuminative/responsive evaluation of innovatory remedial program.

Step 2: Identifying the setting

Conceptual framework:
• Understanding perceptions, problems, issues
• Nature of the school reality or “learning milieu” within which the program is implemented

Procedural/operational framework:
• Students’ questionnaires (Parts E, F: reasons for attending, parental support)
• Teachers’ interviews (claims, concerns, issues)
• Students’ interviews
• Group discussions (Heads, project coordinators)
• Review of students’ personal information: “cause for concern” forms (documents and progress files)

Figure 3. Illuminative/responsive evaluation of innovatory remedial program.

Step 3: SOS (sharing, observing, seeking) recycling technique

Conceptual framework:
• Sharing information gained
• Observing
• Seeking more specific information

Procedural/operational framework:
• Group discussions (Heads, project coordinators, teachers, parents)
• Observing classes, episodes, incidents
• Students’ questionnaire (Parts G, H, I: perceptions towards LSP, LSP teacher, LSP environment)
• Students’ interviews
• Review of teaching material files

Figure 4. Illuminative/responsive evaluation of innovatory remedial program.
Step 4: The 3 Rs (reviewing, reflecting, remedying) technique

Conceptual framework:
• Reviewing information gained so far
• Reflecting on action by answering “what, why” questions with regard to desirable outcomes
• Remedying problematic situations or “illness” through a collaboratively elaborated action plan

Procedural/operational framework:
• Teachers’ interviews (reviewing and reporting on students’ self-evaluation checklists and “cause for concern” forms)
• Students’ interviews (suggestions)
• Group discussions (Heads, project coordinators, teachers, parents)

Illuminative-responsive evaluation: Its contribution to autonomy

Through their active participation in program evaluation (critical reflection, decision making, self-evaluation) students developed an awareness of their progress. This enhanced their self-confidence, enabling them to take control of their own learning in the EFL classroom and to develop as autonomous learners in other school subjects as well.

Implications for using this evaluation model in the field of education

This conceptual duet of responsive and illuminative evaluation aspires to make its own contribution to the field of educational evaluation. The underlying theory of the conceptual and operational framework hopefully holds significant potential for the evaluation of innovatory/remedial language learning programs and of educational programs in general. The involvement of all participants at all stages can be very promising for the planning and implementation of educational programs which aim to follow a “bottom-up” process. The use of the responsive-illuminative approach to evaluation serves the purpose of remedying the possible complications caused by a “top-down” process of implementation of educational programs. In this sense it is also expected to develop a sense of “ownership” (Kennedy, 1988) in the stakeholders, and this is expected to result in program effectiveness.

Sources

• Council of Europe. (2000). Working Paper.
Directorate General for Education and Culture of the European Commission. Implementing lifelong learning for active citizenship in a Europe of knowledge: Consortium of Institutions for Development and Research in Education in Europe (CIDREE). Lisbon Launch Conference.
• Council of Europe. (2000). Presidency Conclusions, Lisbon Conference, 23 and 24 March 2000, para. 5, 13, 17, 24, 26, 29, 33, 37, 38. Brussels.
• MoE (Ministry of Education). (1997). Reform Act 2525/1997. Athens.

References

• Cronbach, L. J. (1963). Course improvement through evaluation. Teachers College Record, 64, 672-683.
• Guba, E. G. & Lincoln, Y. S. (1981). Effective evaluation: improving the usefulness of evaluation results through responsive and naturalistic approaches. San Francisco: Jossey-Bass Publishers.
• Parlett, M. (1981). ‘Illuminative evaluation.’ In Reason, P. & Rowan, J. (eds.). Human Inquiry. Chichester: Wiley Ltd.
• Parlett, M. & Hamilton, D. (1988). ‘Evaluation as illumination: a new approach to the study of innovatory programmes.’ In Murphy, R. & Torrance, H. (eds.). Evaluating education: issues and methods. London: Paul Chapman Publishing Ltd.
• Scriven, M. (1967). ‘The methodology of evaluation.’ In Stake, R. E. (ed.). AERA Monograph Series on Curriculum Evaluation. Chicago: Rand McNally.
• Scriven, M. (1974). ‘Goal-free evaluation.’ In House, E. R. (ed.). School evaluation. Berkeley, CA: McCutchan.
• Stake, R. E. (1983). ‘Program evaluation, particularly responsive evaluation.’ In Madaus, G. F., Scriven, M. F. & Stufflebeam, D. L. (eds.). Evaluation models: viewpoints on educational and human services evaluation. Boston: Kluwer-Nijhoff.
• Stufflebeam, D. L. (1968). Towards a science of educational evaluation. Educational Technology, 8 (14), 5-12.
• Stufflebeam, D. L. (1988). ‘The CIPP model for program evaluation.’ In Madaus, G. F., Scriven, M. F. & Stufflebeam, D. L. (eds.). Evaluation models: viewpoints on educational and human services evaluation. Boston: Kluwer-Nijhoff.
• Tyler, R. W. (1950). Basic principles of curriculum and instruction. Chicago: University of Chicago Press.
• Williams, M. & Burden, R. L. (1994). The role of evaluation in ELT project design. ELT Journal, 48 (1), 22-27.