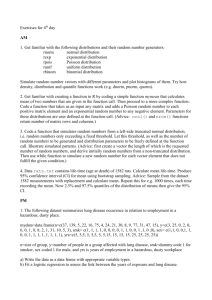

Stat 502 Topic #4


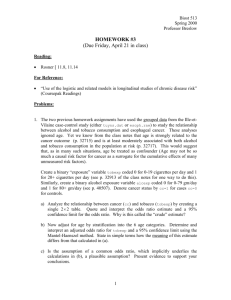

Topic 4 – Extra Sums of Squares and the General Linear Test

Using Partial F Tests in Multiple Regression Analysis (Chapter 9)

Recall: Types of Tests

1. ANOVA F Test: Does the group of predictor variables explain a significant percentage of the variation in the response?
2. Variable Added Last T-tests: Does a given variable explain a significant part of the variation remaining after all other variables have been included in the model?
3. Partial F Tests: Does a group of variables explain significant variation in the response over and above that already explained by another group of variables already in the model?

Hypotheses

Consider the model:

$$Y = \beta_0 + \beta_{smk} X_{smk} + \beta_{age} X_{age} + \beta_{size} X_{size} + \varepsilon$$

Suppose we want to test whether Age and Size should be removed. The hypotheses are:

$$H_0: \beta_{age} = \beta_{size} = 0$$

$$H_a: \beta_{age} \neq 0 \ \text{or} \ \beta_{size} \neq 0$$

Hypotheses Using Models

We can view such hypotheses as comparing two models. The partial F-test helps decide which of the two models is better supported by the data.

Corresponding example:

REDUCED: $Y = \beta_0 + \beta_{smk} X_{smk} + \varepsilon$

FULL: $Y = \beta_0 + \beta_{smk} X_{smk} + \beta_{age} X_{age} + \beta_{size} X_{size} + \varepsilon$

Hypotheses Using Models (2)

The null hypothesis is the "reduced model": what we get when the group of predictors being tested is not present. In the example, setting $H_0: \beta_{age} = \beta_{size} = 0$ leaves:

$$H_0: Y_i = \beta_0 + \beta_{smk} X_{smk} + \varepsilon_i$$

The alternative hypothesis is the "full model".

- "Full" does NOT mean "all variables".
- "Full model" means the biggest model currently under consideration.

$$H_a: Y_i = \beta_0 + \beta_{smk} X_{smk} + \beta_{age} X_{age} + \beta_{size} X_{size} + \varepsilon_i$$

Hypotheses in "English"

Our null hypothesis is that the "additional group of variables" is not useful in explaining the "remaining variation" in the response.

- "Additional Group of Variables" refers to the variables we are testing for removal.
- "Remaining Variation" refers to variation not already explained by the other variables in the model.

The alternative is that at least one of the "additional variables" is important.

Adding or removing variables?
Are we starting with the full model and trying to remove the variables age and size? Or are we starting with smoking by itself and trying to add age and size? It doesn't matter: the hypotheses for both situations are identical, and the partial F test helps decide between the same two models either way.

REDUCED: $Y = \beta_0 + \beta_{smk} X_{smk} + \varepsilon$

FULL: $Y = \beta_0 + \beta_{smk} X_{smk} + \beta_{age} X_{age} + \beta_{size} X_{size} + \varepsilon$

Extra Sums of Squares

In order to perform these hypothesis tests, we need to further partition the model sums of squares into pieces.

Review: Sums of Squares

- Total sums of squares represents the variation in the response variable that has a chance to be explained by predictors.
- Model (or regression) SS represents the variation that is explained.
- Error SS represents the variation left unexplained.

And Remember!

$$SSTOT = SSR + SSE$$

Extra Sums of Squares (ESS)

Extra sums of squares break down the model/regression sums of squares into pieces corresponding to each variable. Because of collinearity, the ESS are order dependent: they depend on the order in which the variables appear in (or are added to) the model!

ESS: Two types

Type I ESS is the sequential sums of squares. The sequential SS for each variable is the additional part of SSTOT that it explains at the point at which it is added to the model. Think: variables added in order.

Type II / Type III is the marginal sums of squares. The marginal SS for each variable is the additional part of SSTOT that is explained when the variable is the last to be added to the model. Think: variables added last.

SBP Example (Type I)

The three-variable model had SSR = 4890. If the variable order is QUET (i.e., SIZE), AGE, SMK, then the Type I ESS turn out to be:

- SS(Size) = 3538
- SS(Age|Size) = 583
- SS(Smk|Size,Age) = 769

Important note: the sum of the Type I SS will always be SSR.

SBP Example (Type I)

If we order the variables differently, say SMK, AGE, SIZE, we get different ESS:

- SS(Smk) = 393
- SS(Age|Smk) = 4297
- SS(Size|Age,Smk) = 200

Again, the sum will be 4890.
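As a quick arithmetic check of the note that the Type I SS always sum to SSR, the two orderings above can be added up directly (values as reported on the slides, rounded to whole numbers):

```latex
\begin{align*}
\text{Order SIZE, AGE, SMK:}\quad & 3538 + 583 + 769 = 4890 = SSR \\
\text{Order SMK, AGE, SIZE:}\quad & 393 + 4297 + 200 = 4890 = SSR
\end{align*}
```

Only the split among the variables changes with the ordering; the total explained by the three-variable model does not.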
SBP Example (Type I)

Some interesting observations:

- SIZE added first explained 3538; added last, it explained only 200. WHY?
- AGE explained 583 in the first model and 4297 in the second. WHY the big difference?
- SMK explained 769 when added last, but alone (added first) it explained only 393. This is NOT at all intuitive. WHY?

Pictorial Representation

[Figure: diagram of Total SS with regions labeled SS(age), SS(size|age), and SS(smk|size,age).]

Strange behavior among variables

Strange things can happen between variables in MLR models.

- In our problem there is a high correlation between AGE and SIZE (about 0.8). This creates some of the havoc we are seeing, but there are other things going on as well.
- Smoking is a better predictor with other variables in the model. This is NOT collinearity (or multicollinearity).

In Our Example...

Consider AGE and SMK without SIZE in the model (suggested by the high correlation of age with size). Now, depending on the ordering of the variables in the model, age explains either about 3862 (added first) or about 4297 (added after SMK). Each of the two variables still (strangely) explains more as the second variable to be added, so in some way these two variables are "cooperating" with each other.

The Other Extreme

If we have a "balanced" design, then all correlations among the predictor variables will be zero. In this case, what will happen with the extra sums of squares? What would the picture look like?

SS: Notation

Consider the case of three independent variables X1, X2, X3. We can consider the regression SS for several models:

- SS(X1): SS explained using X1 by itself
- SS(X1,X2): SS explained using just X1 and X2
- SS(X1,X2,X3): SS explained using X1, X2, and X3

If there is any correlation among the predictors (and there almost always is), then

$$SS(X_1, X_2) \neq SS(X_1) + SS(X_2)$$

ESS: Notation

But if we use Extra Sums of Squares notation, we can get the pieces to add up. The extra sums of squares are:

- SS(X2|X1): additional SS explained by including X2 in a model already containing X1.
- SS(X3|X1,X2): additional SS explained by including X3 in a model already containing X1 and X2.
Regardless of correlation,

$$SS(X_1, X_2) = SS(X_1) + SS(X_2 \mid X_1)$$

$$SS(X_1, X_2, X_3) = SS(X_1, X_2) + SS(X_3 \mid X_1, X_2)$$

Pictorial Example (4 variables)

[Figure: diagram of SSTOT with regions labeled SS(X1,X4) and SS(X2,X3|X1,X4).]

Obtaining ESS in SAS

Remember: Type I is variables added in order; Type II/III is variables added last.

In PROC REG, adding the options "/ ss1 ss2" to the MODEL statement prints both the Type I and Type II SS.

    proc reg;
      model sbp = size age smk / ss1 ss2;
      model sbp = smk age size / ss1 ss2;
    run;

Model #1

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| | Type I SS | Type II SS |
|---|---|---|---|---|---|---|---|
| Intercept | 1 | 45.10 | 10.76 | 4.19 | 0.0003 | 668457 | 963.1 |
| Size | 1 | 8.59 | 4.49 | 1.91 | 0.0664 | 3538 | 200.1 |
| Age | 1 | 1.21 | 0.32 | 3.75 | 0.0008 | 583 | 769.5 |
| Smk | 1 | 9.94 | 2.65 | 3.74 | 0.0008 | 769 | 769.2 |

Model #2

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| | Type I SS | Type II SS |
|---|---|---|---|---|---|---|---|
| Intercept | 1 | 45.10 | 10.76 | 4.19 | 0.0003 | 668457 | 963.1 |
| Smk | 1 | 9.95 | 2.66 | 3.74 | 0.0008 | 393 | 769.2 |
| Age | 1 | 1.21 | 0.32 | 3.75 | 0.0008 | 4297 | 769.5 |
| Size | 1 | 8.59 | 4.50 | 1.91 | 0.0664 | 200 | 200.1 |

Comparison of Models

- Estimates, SEs, and T-tests are all the same.
- Type II tests are also identical.
- Type I tests are order dependent.

| Variable | DF | Type I SS | Type II SS |
|---|---|---|---|
| Intercept | 1 | 668457 | 963.1 |
| Size | 1 | 3538 | 200.1 |
| Age | 1 | 583 | 769.5 |
| Smk | 1 | 769 | 769.2 |

| Variable | DF | Type I SS | Type II SS |
|---|---|---|---|
| Intercept | 1 | 668457 | 963.1 |
| Smk | 1 | 393 | 769.2 |
| Age | 1 | 4297 | 769.5 |
| Size | 1 | 200 | 200.1 |

Obtaining ESS in SAS

Another way is to use PROC GLM. GLM produces the Type I and Type II SS automatically; however, they will be labeled Type I and Type III. For regression, Types II and III are equivalent.

    proc glm;
      model sbp = size age smk;
    run;

Output from GLM

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Size | 1 | 3538 | 3538 | 64.49 | <.0001 |
| Age | 1 | 583 | 583 | 10.62 | 0.0029 |
| Smk | 1 | 769 | 769 | 14.02 | 0.0008 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Size | 1 | 200 | 200 | 3.65 | 0.0664 |
| Age | 1 | 769 | 769 | 14.03 | 0.0008 |
| Smk | 1 | 769 | 769 | 14.02 | 0.0008 |

Remember: For regression, Type II/III are equivalent.
The F-tests that GLM provides for the Type II/III SS are equivalent to the T-tests (Why?)

SAS Coding

Available online in the file 04SBP.sas. You need the corresponding data file from the previous lecture (SBP Example).

CLG Activity #1

In your groups, please attempt the first set of questions. These involve computing various extra sums of squares.

General Linear Test

All of the hypothesis tests that are important to us can be thought of in terms of Extra Sums of Squares and constructed using the idea of a general linear test.

Uses for ESS

By getting the appropriate Extra Sums of Squares, you can test for the "usefulness" of any subset of the variables in your model. The basic idea is to use something called a General Linear Test.

General Linear Test

The formula for the General Linear Test is not pretty, and can be difficult to understand at first. But if you understand the concept of ESS, I think things become much easier (and as an added bonus you will find memorizing formulas unnecessary).

General Linear Test: Models

The test compares two models:

- Full Model: all variables under consideration.
- Reduced Model: a subset of the variables from the full model (the null hypothesis is that the coefficients of the others are zero).

Example: 4 variables, $H_0: \beta_2 = \beta_3 = 0$

REDUCED: $Y = \beta_0 + \beta_1 X_1 + \beta_4 X_4 + \varepsilon$

FULL: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \varepsilon$

General Linear Test: Hypothesis

We've already seen these!

- NULL: represents the reduced model.
- ALTERNATIVE: represents the full model.

Concluding the alternative means that at least one of the variables in our group of additional variables (those in the full model but not in the reduced model) is important. Failing to reject (with appropriate power) suggests the added variables are unimportant.

General Linear Test Statistic

The test is performed by comparing variances between the models, using an F-statistic based on the SS:

$$F = \frac{\left[ SSE(\text{reduced}) - SSE(\text{full}) \right] / k}{MSE(\text{full})}$$

where k is the number of variables added to the model.
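As a rough illustration of the statistic just defined, the DATA step below computes F by hand. It is a sketch under stated assumptions, not part of the course code: the variable names (`ss_diff`, `mse_full`, `df_error`, `f_crit`) are made up for illustration, and the numbers are the rounded SBP values quoted in these notes for testing SIZE and SMK given AGE (full model with 28 error DF).

```sas
/* Sketch only: general linear test computed by hand.               */
/* Inputs are rounded values quoted in these notes for the SBP      */
/* example (H0: beta_size = beta_smk = 0); names are illustrative.  */
data _null_;
  ss_diff  = 1028;    /* SSE(reduced) - SSE(full)                   */
  k        = 2;       /* number of variables being tested           */
  mse_full = 54.86;   /* MSE from the full model                    */
  df_error = 28;      /* error DF of the full model                 */

  f      = (ss_diff / k) / mse_full;     /* partial F statistic     */
  p      = 1 - probf(f, k, df_error);    /* upper-tail p-value      */
  f_crit = finv(0.95, k, df_error);      /* 5% critical value       */

  put f= p= f_crit=;  /* writes the three values to the SAS log     */
run;
```

With these inputs F works out to about 9.37, which should agree with the OnlyAge test reported in the PROC REG output later in these notes. Entering the difference in SSE directly, rather than the two SSE values, is only a convenience here because the notes quote the extra sums of squares rather than the individual SSEs.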
SSE(reduced) is never smaller than SSE(full), because the model with more variables (full) can never explain less of the variation.

Another (better) Way of Thinking

What is SSE(reduced) − SSE(full)? Suppose we want to test:

REDUCED: $Y = \beta_0 + \beta_1 X_1 + \beta_4 X_4 + \varepsilon$

FULL: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \varepsilon$

The question is: what are we gaining (or losing) from X2 and X3? Let's look again.

Pictorial Example (4 variables)

[Figure: diagram of SSTOT with regions labeled SS(X1,X4) and SS(X2,X3|X1,X4).]

Another (better) Way of Thinking

SSE(reduced) − SSE(full) is actually just this extra sums of squares piece:

$$SSE(\text{reduced}) - SSE(\text{full}) = SSE(X_1, X_4) - SSE(X_1, X_2, X_3, X_4) = SS(X_2, X_3 \mid X_1, X_4)$$

So the formula becomes:

$$F = \frac{SS(X_2, X_3 \mid X_1, X_4) / k}{MSE(\text{full})}$$

Alternative Way of Thinking

We can think of the numerator in terms of SSRs too:

$$SSE(\text{reduced}) - SSE(\text{full}) = SSR(\text{full}) - SSR(\text{reduced}) = SS(\text{extra Xs} \mid \text{reduced Xs})$$

General Linear Test simplified

$$F = \frac{SS(X_2, X_3 \mid X_1, X_4) / k}{MSE(\text{full})}$$

- k is just the number of variables being added (or removed).
- MSE(full) is taken from the full model.

For example, with an extra sum of squares of 1600, k = 2, and MSE(full) = 140:

$$F = \frac{1600 / 2}{140} \approx 5.71$$

General Linear Test Results

- When the "added" variables don't do anything, SSE(R) and SSE(F) will be about the same and we will get a small F value.
- If F is large, then we may say that the "added" group of variables is important in addition to those already in the model: the added variables explain a significant portion of the remaining sums of squares.
- Compare F to a critical value from the F distribution with k and DF_Error (from the full model) degrees of freedom.

Example (2)

The question being asked here is the following: "Do X2 and X3 as a group contribute significantly to explaining variation in Y when the model already contains X1 and X4?" To answer this, we look at the F-statistic; if it is large (bigger than an F critical value on 2 and n − 5 DF), then we may say that they do.

We can do them in SAS!
Suppose we want to carry out two separate partial F tests. Given the model:

$$Y_i = \beta_0 + \beta_{smk} X_{smk} + \beta_{age} X_{age} + \beta_{size} X_{size} + \varepsilon_i$$

NoSize: $H_0: \beta_{size} = 0$

OnlyAge: $H_0: \beta_{size} = \beta_{smk} = 0$

Proc REG: TEST statement

PROC REG can be set up to do these tests for you. Each TEST statement lists the equations to be tested jointly, separated by commas.

    proc reg;
      model sbp = age smk size / ss1 ss2;
      NoSize:  test size = 0;
      OnlyAge: test size = 0, smk = 0;
    run;

Test Statement Output

Test NoSize Results for Dependent Variable SBP

| Source | DF | Mean Square | F Value | Pr > F |
|---|---|---|---|---|
| Numerator | 1 | 200.14147 | 3.65 | 0.0664 |
| Denominator | 28 | 54.86225 | | |

Test OnlyAge Results for Dependent Variable SBP

| Source | DF | Mean Square | F Value | Pr > F |
|---|---|---|---|---|
| Numerator | 2 | 514.09766 | 9.37 | 0.0008 |
| Denominator | 28 | 54.86225 | | |

By hand

MSE = 54.86 on 28 DF.

| Variable | DF | Type I SS | Type II SS |
|---|---|---|---|
| Intercept | 1 | 668457 | 963 |
| Age | 1 | 3862 | 769 |
| Smk | 1 | 828 | 769 |
| Size | 1 | 200 | 200 |

ESS(Size,Smoking|Age) = 828 + 200 = 1028

$$F = \frac{1028 / 2}{54.86} = 9.37$$

SAS does these tests automatically

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Size | 1 | 3538 | 3538 | 64.49 | <.0001 |
| Age | 1 | 583 | 583 | 10.62 | 0.0029 |
| Smk | 1 | 769 | 769 | 14.02 | 0.0008 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Size | 1 | 200 | 200 | 3.65 | 0.0664 |
| Age | 1 | 769 | 769 | 14.03 | 0.0008 |
| Smk | 1 | 769 | 769 | 14.02 | 0.0008 |

Remember: For regression, Type II/III are equivalent. The F-tests that GLM provides for the Type II/III SS are equivalent to the T-tests (Why?)

Some Comments on MSE

If you look at the F tests for the Type I SS in SAS, you will notice that they are all calculated using the same MSE, the one from the full model (as opposed to re-computing the MSE at each step). Two things to consider:

- Generally, the results will be the same either way you do it.
- In cases where they are not, the re-computed MSE will overestimate $\sigma^2$ because some important predictors are not yet in the model.

Thus, the calculations in SAS seem appropriate.

CLG Activity #2

This activity will have you use the ESS you computed in CLG Activity #1 to practice constructing test statistics and conducting the hypothesis tests.

Questions?

Upcoming in Topic 5...

- Partial Correlations (Chapter 10)
- MLR Diagnostics & Remedial Measures (Chapter 14)