Non-coding Neutral Sequences vs. Regulatory Modules


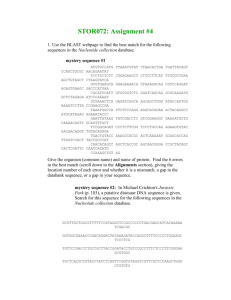

SVM: Non-coding Neutral Sequences vs. Regulatory Modules

Ying Zhang, BMB, Penn State; Ritendra Datta, CSE, Penn State. Bioinformatics I, Fall 2005.

Outline

Background: Machine Learning & Bioinformatics; Data Collection and Encoding; Distinguishing sequences using SVM; Results; Discussion.

Regulation: A Recurring Challenge

Gene expression is under regulation: the right protein, at the right time, in the right amount, at the right location. Regulation involves cis-elements and trans-elements. A cis-element is a non-coding functional sequence; trans-elements are proteins that interact with cis-elements.

Predicting cis-regulatory elements remains a challenge. Significant effort has been put into it in the past; current trends include TFBS clusters and pattern analysis.

Alignments and Sequences: The Data

Information in the sequence: genetic information is encoded in the DNA sequence. Typical information includes codons and binding sites. Codons: ATG (Met), CGT (Arg), … Binding sites: A/TGATAA/G (Gata1), …

Evolutionary information in aligned sequences: similarity between species, where conservation ~ function. For example:

H: TCCTTATCAGCCATTACC

Mouse: TCCTTATCAGCCACCACC

Problem

Given the genome sequence information, is it possible to use machine learning to automatically distinguish regulatory regions from other non-coding neutral genomic sequences?

Machine Learning: The Tool

Machine learning is a sub-field of A.I. in which computer programs “learn” from experience, i.e. by analyzing data and the corresponding behavior. It lies at the confluence of statistics, mathematical logic and numerical optimization, and is applied in information retrieval, financial analysis, computer vision, speech recognition, robotics, bioinformatics, etc.

(Figure: M.L. at the intersection of Statistics, Optimization and Logic, with applications such as predicting genes, analyzing stocks and personalized WWW search.)

Machine Learning: Types of Learning

Supervised learning: learning statistical models from past sample-label data pairs, e.g. classification. Unsupervised learning: building models that capture the inherent organization in data, e.g. clustering. Reinforcement learning: building models from interactive feedback on how well the current model is doing, e.g. robotic learning.

Machine Learning and Bioinformatics: The Confluence

Learning problems in bioinformatics [ICML ’03]: protein folding and protein structure prediction; inference of genetic and molecular networks; gene-protein interactions; data mining from microarrays; functional and comparative genomics, etc.

Machine Learning and Bioinformatics: Sample Publications

Identification of DNaseI hypersensitive sites in the human genome, which may disclose the location of cis-regulatory sequences: W. S. Noble et al., “Predicting the in vivo signature of human gene regulatory sequences,” Bioinformatics, 2005.

Functionally classifying genes based on gene expression data from DNA microarray hybridization experiments using SVMs: M. P. S. Brown et al., “Knowledge-based analysis of microarray gene expression data by using support vector machines,” PNAS, 2000.

Using log-odds ratios from Markov models to identify regulatory regions in DNA sequences: L. Elnitski et al., “Distinguishing Regulatory DNA From Neutral Sites,” Genome Research, 2003.

Selection of informative genes using an SVM-based feature selection algorithm: I. Guyon et al., “Gene selection for cancer classification using support vector machines,” Machine Learning, 2002.

Machine Learning and Bioinformatics: Books

Support Vector Machines: A Powerful Statistical Learning Technique

Which of the linear separators is optimal? Choose the one that maximizes the margin between the classes. (Figure: maximum-margin separator; ξi marks the slack of points that violate the margin.)

The classes in some datasets separate linearly with ease; what about datasets where they do not? (Figure: one-dimensional points on an x axis through 0, in separable and non-separable arrangements.) Solution: the kernel trick!
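The kernel-trick idea can be made concrete with a tiny one-dimensional example. The Python sketch below uses made-up points (hypothetical, not the slides' data): one class sits near the origin and the other on both sides, so no single threshold on x separates them, but after the explicit map φ(x) = (x, x²) the second coordinate alone does. It also checks that k(x, z) = xz + (xz)² equals the inner product of the mapped points, which is what lets an SVM work in the lifted space without computing φ. This map is chosen only for illustration; the experiments themselves use an RBF kernel.

```python
# Illustrative 1-D toy data (hypothetical, not from the slides):
# label +1 near the origin, label -1 on both sides.
xs = [-3.0, -2.5, -0.5, 0.0, 0.4, 2.2, 3.1]
ys = [-1, -1, 1, 1, 1, -1, -1]


def phi(x):
    """Explicit feature map x -> (x, x^2)."""
    return (x, x * x)


def kernel(x, z):
    """<phi(x), phi(z)> computed directly from x and z, without building phi."""
    return x * z + (x * z) ** 2


def separable_by_threshold(values, labels):
    """True if one threshold puts every +1 on one side and every -1 on the other."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == -1]
    return max(pos) < min(neg) or max(neg) < min(pos)


print(separable_by_threshold(xs, ys))                       # False: classes interleave on x
print(separable_by_threshold([phi(x)[1] for x in xs], ys))  # True: x^2 separates them

# The kernel agrees with the explicit inner product for every pair of points.
for x in xs:
    for z in xs:
        px, pz = phi(x), phi(z)
        assert abs(kernel(x, z) - (px[0] * pz[0] + px[1] * pz[1])) < 1e-9
```

In the lifted space the separator is the horizontal line x² = constant, which is linear in (x, x²) even though it corresponds to two thresholds on the original x axis.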
(Figure: one-dimensional data mapped from x to (x, x²), where the classes become linearly separable.)

Experiments: Overview

Classification in question: regulatory regions (REG) vs. ancestral repeats (AR).

Two types of experiments: nucleotide sequences (ATCG), and alignments encoded in a reduced 5-symbol alphabet, SWVIG (S: match involving G or C; W: match involving A or T; G: gap; V: transversion; I: transition).

Two datasets: the Elnitski et al. dataset, and a dataset from the Penn State CCGB.

Mapping sequences/alignments → real numbers: frequencies of short K-mers (K = 1, 2, 3). Normalizing factor: sequence length (ambiguous for K > 1, since a sequence of length L contains only L − K + 1 overlapping K-mers). Stability of variance: equal-length sequences (whenever possible).

Experiments: Feature Selection

The total number of features is relatively high: sequences, 4 + 4² + 4³ = 84; alignments, 5 + 5² + 5³ = 155. Curse of dimensionality: convergence of estimators is very slow. Over-fitting: poor generalization performance. Solutions: dimension reduction (e.g., PCA) or feature selection (e.g., forward selection, backward elimination).

Experiments: Training and Validation

Training set (Elnitski et al. dataset): sequences, 300 samples of 100 bp from each class (REG and AR); alignments, 300 samples of length 100 from each class.

SVM setup: RBF kernel $k(x_1, x_2) = \exp(-\delta \lVert x_1 - x_2 \rVert^2)$. Implementation: LibSVM (http://www.csie.ntu.edu.tw/~cjlin/libsvm/).

Validation: N-fold cross-validation, used in feature selection, parameter tuning, and testing.

Results: The Elnitski et al. Dataset

Parameter selection: SVM parameters δ and C. Feature selection: assessing feature importance; G-C normalization; sequences, 10 out of 84 features; symbols, 10 out of 155 features. Accuracy scores: overall, ancestral repeats (AR), regulatory regions (Reg).

Results: SVM Parameter Selection

Iterative selection procedure: coarse selection to find an initial neighborhood, then fine-grained brute-force selection, using a validation set drawn from the data and within-loop CV. Chosen parameters: δ = 1.6, C = 1.5.

Results: Feature Selection - Sequence

(Figure: distribution of nucleotide frequencies of the top 9 most significant k-mers, chosen by one-dimensional SVMs.)

Results: Feature Selection - Symbol

(Figure: distribution of 5-symbol frequencies of the top 9 most significant k-mers, chosen by one-dimensional SVMs.)

Results: Feature Selection

Procedure: greedy forward selection + backward elimination. Chosen features:

Sequence: [5 68 3 20 63 4 16 10 1 22] (0 = A, 1 = T, 2 = G, 3 = C, 4 = AA, 5 = AT, etc.), i.e. [AT, CAA, C, AAA, GGC, AA, CA, TG, T, AAG]

Symbol: [3 5 4 18 24 124 17 143 19 95 103] (0 = G, 1 = V, 2 = W, 3 = S, 4 = I, 5 = GG, 6 = GV, etc.), i.e. [S, GG, I, WS, SI, SIG, WW, IWI, WI, WSV, WII]

Results: Accuracy Scores

| Experiment type | Features | Overall accuracy | Reg precision | AR precision |
|---|---|---|---|---|
| Elnitski et al. | 5-symbol | ≈ 74.7% | 78.49% | 73% |
| Elnitski et al. | Hexamers | ≈ 75% | 81.4% | 72.5% |
| Sequences only | 1-mers | 78.33% | 76.54% | 80.54% |
| Sequences only | 2-mers | 77.67% | 72.84% | 82.97% |
| Sequences only | 3-mers | 80.17% | 83.67% | 77.21% |
| Sequences only | Selection | 80.33% | 80.87% | 79.63% |
| Symbols only | 1-mers | 84.33% | 79.39% | 90.03% |
| Symbols only | 2-mers | 84.33% | 77.53% | 90.96% |
| Symbols only | 3-mers | 85.17% | 78.83% | 92.42% |
| Symbols only | Selection | 86.00% | 80.58% | 91.54% |

Results: Laboratory Data

Training: SVM models built using the Elnitski et al. data, with the same parameters and the same selected features.

Data: 9 candidate cis-regulatory regions predicted by RP score, plus 1 negative control based on the definition.
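Reusing the Elnitski-trained models with the same selected features means every candidate region has to be encoded exactly as the training data were. The Python sketch below shows one plausible version of that encoding: K-mer frequencies over the nucleotide order implied by the index legend (0 = A, 1 = T, 2 = G, 3 = C, 4 = AA, 5 = AT, …), and the 5-symbol SWVIG mapping of a pairwise alignment. The helper names, the per-K normalization by L − K + 1, and the handling of gaps and ambiguous bases are assumptions, not the authors' code. With this ordering, the listed sequence indices decode to exactly the k-mers shown for the selected sequence features.

```python
from itertools import product

NUCLEOTIDES = "ATGC"  # index order from the slides: 0 = A, 1 = T, 2 = G, 3 = C
SYMBOLS = "GVWSI"     # index order from the slides: 0 = G, 1 = V, 2 = W, 3 = S, 4 = I
PURINES, PYRIMIDINES = set("AG"), set("CT")


def kmer_names(alphabet, ks=(1, 2, 3)):
    """All k-mers for k in ks: 1-mers first, then 2-mers, then 3-mers,
    each block in lexicographic order of the given alphabet."""
    return ["".join(p) for k in ks for p in product(alphabet, repeat=k)]


def kmer_frequencies(seq, alphabet, ks=(1, 2, 3)):
    """Overlapping k-mer frequencies. Each k is normalized by its own number of
    windows, len(seq) - k + 1 (an assumed way to resolve the K > 1 ambiguity)."""
    names = kmer_names(alphabet, ks)
    index = {name: i for i, name in enumerate(names)}
    vec = [0.0] * len(names)
    for k in ks:
        windows = len(seq) - k + 1
        for i in range(max(windows, 0)):
            word = seq[i:i + k]
            if word in index:  # windows with N or other symbols are skipped
                vec[index[word]] += 1.0 / windows
    return vec


def encode_alignment(top, bottom):
    """Map a pairwise alignment, column by column, to the 5-symbol alphabet.
    Assumes the rows contain only A, C, G, T and '-'."""
    out = []
    for a, b in zip(top.upper(), bottom.upper()):
        if a == "-" or b == "-":
            out.append("G")  # gap
        elif a == b:
            out.append("S" if a in "GC" else "W")  # G/C match vs. A/T match
        elif {a, b} <= PURINES or {a, b} <= PYRIMIDINES:
            out.append("I")  # transition (purine<->purine, pyrimidine<->pyrimidine)
        else:
            out.append("V")  # transversion
    return "".join(out)


# Usage: decode the selected sequence features and encode the human/mouse example.
selected = [5, 68, 3, 20, 63, 4, 16, 10, 1, 22]
names = kmer_names(NUCLEOTIDES)
print(len(names), len(kmer_names(SYMBOLS)))  # 84 155
print([names[i] for i in selected])
# ['AT', 'CAA', 'C', 'AAA', 'GGC', 'AA', 'CA', 'TG', 'T', 'AAG']

human, mouse = "TCCTTATCAGCCATTACC", "TCCTTATCAGCCACCACC"
print(encode_alignment(human, mouse))  # WSSWWWWSWSSSWIIWSS
reduced = [kmer_frequencies(human, NUCLEOTIDES)[i] for i in selected]
```

The reduced vector keeps only the ten selected coordinates, in the order listed for the selected sequence features; the same `kmer_frequencies` call with `SYMBOLS` would produce the 155-dimensional alignment features from an encoded string.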
Of the 9 candidates, 5 passed current biological testing (positive).

Accuracy. Classification result for sequences (1-, 2-, 3-mers): 1 negative control; 4 out of 5 positive elements + 3 out of 4 “negative” elements. Classification result for alignments (1-, 2-, 3-mers): 1 negative control; 9 original candidates.

Discussion

High validation rate for ancestral repeats: the selected training set is not very diverse, and ancestral repeats (LINEs, SINEs, etc.) tend to be AT-rich.

SVM performs a little better than RP scores on the training set and is statistically more powerful. RP: a Markov model for pattern recognition. SVM: a hyper-plane in a high-dimensional feature space. Feature selection using a wrapper method is possible.

Discussion (cont’d)

Performance degrades on the lab data: SVM classification shows no improvement over the RP score. Features identified from the Elnitski et al. data may be biased; other features may be more informative on the lab data.

Sequence classification vs. alignment (accuracy table): SVM yields higher overall cross-validation accuracy for aligned symbol sequences than for nucleotide sequences, but the gain is driven by ancestral repeats.

No improvement for aligned symbol sequences: in the lab data classification, sequence classification does better than aligned symbol classification. Possible explanations: no information is gained from evolutionary history; the alphabet reduction is not optimal; the assumption is wrong!

Summary

Generally, SVM is a powerful tool for classification; it answers a “yes or no” question. It performs better than RP in distinguishing the AR training set from the Reg training set. RP is a probabilistic method that can generate quantitative measurements genome-wide; SVM results can be extended using probabilistic forms of SVM. SVM can reveal potentially interesting biological features, e.g. the transcription regulation scheme.

Future Directions: Possible Extensions

Explore more complex features; refine models for neutral non-coding genomic segments; use multi-species alignments for the classification; combine sequence and alignment information to build more robust multi-classifiers (“Committee of Experts”); use pattern recognition for more accurate prediction.

Questions and recommendations?

Use the original alignment features (20 columns). Test SVM performance on other lab data, avoiding the possible bias of RP preselection.

References

L. Elnitski et al., “Distinguishing Regulatory DNA From Neutral Sites,” Genome Research, 2003.

Machine Learning Group, University of Texas at Austin, “Support Vector Machines,” http://www.cs.utexas.edu/~ml/.

N. Cristianini, “Support Vector and Kernel Methods for Pattern Recognition,” http://www.support-vector.net/tutorial.html.

Acknowledgement

Dr. Webb Miller, Dr. Francesca Chiaromonte, David King.