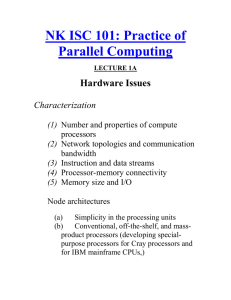

Week 1 Power Point Slides

advertisement

Why Parallel Computing?

• Annual performance improvements drops

from 50% per year to 20% per year

• Manufacturers focusing on multi-core

systems, not single-core

• Why?

– Smaller transistors = faster processors.

– Faster processors = increased power

– Increased power = increased heat.

– Increased heat = unreliable processors

Parallel Programming Examples

• Embarrassingly Parallel Applications

– Google searches employ > 1,000,000 processors

• Applications with unacceptable sequential run times

• Grand Challenge Problems

– 1991 High Performance Computing Act (Law 102-94)

“Fundamental Grand Challenge science and

engineering problems with broad economic and/or

scientific impact and whose solution can be advanced

by applying high performance computing techniques

and resources.”

• Promotes terascale level computation over high bandwidth

wide area computational grids

Grand Challenge Problems

• Require more processing power than available

on single processing systems

• Solutions would significantly benefit society

• There are not complete solutions for these,

which are commercially available

• There is a significant potential for progress with

today’s technology

• Examples: Climate modeling, Gene discovery,

energy research, semantic web-based

applications

Grand

Challenge

Problem

Examples

• Associations

– Computer Research

Association (CRA)

– National Science

Foundation (NSF)

Partial Grand Challenge Problem List

1. Predict contaminant seepage

2. Predict airborne contaminant affects

3. Gene sequence discovery

4. Short term weather forecasts

5. Predict long term global warming

6. Predict earthquakes and volcanoes,

7. Predict hurricanes and tornados

8. Automate natural language understanding

9. Computer vision

10. Nanotechnology

11. Computerized reasoning

12. Protein mechanisms

13. Predict asteroid collisions

14. Sub-atomic particle interactions

15. Model biomechanical processes

16. Manufacture new materials

17. Fundamental nature of matter

18. Transportation patterns

19. Computational fluid dynamics

Global Weather Forecasting Example

• Suppose whole global atmosphere divided into cells of size 1 mile 1

mile 1 mile to a height of 10 miles (10 cells high) - about 5 108 cells.

• Suppose each calculation requires 200 floating point operations. In one

time step, 1011 floating point operations necessary.

• To forecast the weather over 7 days using 1-minute intervals, a

computer operating at 1Gflops (109 floating point operations/s) takes

106 seconds or over 10 days.

• To perform calculation in 5 minutes requires computer operating at 3.4

Tflops (3.4 1012 floating point operations/sec).

1.5

Modeling Motion of Astronomical Bodies

• Astronomical bodies attracted to each other by

gravity; the force on each determines movement

• Required calculations: O(n2) or at best, O(n lg n)

• At each time step, calculate new position

• A galaxy might have1011 stars

• If each calculation requires 1 ms, one iteration

requires 109 years using the N2 algorithm and

almost a year using an efficient N lg N algorithm

Types of Parallelism

• Fine grain

– Vector Processors

• Matrix operations in single instructions

– High performance optimizing compilers

• Reorder instructions

• Loop partitioning

• Low level synchronizations

• Coarse grain

– Threads, critical sections, mutual exclusion

– Message passing

Von Neumann Bottleneck

CPU speed exceeds than memory access time

Modifications (Transparent to software)

• Device Controllers

• Cache: Fast memory to hold blocks of recently

used consecutive memory locations

• Pipelines to breakup an instruction into pieces

and execute the pieces in parallel

• Multiple issue replicates functional units to

enable executing instructions in parallel

• Fine grained multithreading after each

instruction

Parallel Systems

• Shared Memory Systems

– All cores see the same memory

– Coordination: Critical sections and mutual exclusion

• Distributed Memory Systems

– Beowulf Clusters; Clusters of Workstations

– Each core has access to local memory

– Coordination: message passing

• Hybrid (Distributed memory presented as shared)

– Uniform Memory Access (UMA)

– Cache Only Memory Access (COMA)

– Non-uniform Memory Access (NUMA)

• Computational Grids

– Heterogeneous and Geographically Separated

Hardware Configurations

• Flynn Categories

–

–

–

–

SISD (Single Core)

MIMD (*our focus*)

SIMD (Vector Processors)

MISD

• Within MIMD

– SPMD (*our focus*)

– MPMD

P0

P1

P2

P3

Memory

Shared Memory Multiprocessor

P0

P1

P2

P3

M0

M1

M2

M3

• Multiprocessor Systems

– Threads, Critical Sections

• Multi-computer Systems

– Message Passing

– Sockets, RMI

Distributed Memory Multi-computer

Hardware Configurations

• Shared Memory Systems

– Do not scale to high numbers of processors

– Considerations

• Enforcing critical sections through mutual exclusion

• Forks and joins of threads

• Distributed Memory Systems

– Topology: The graph that defines the network

• More connections means higher cost

• Latency: time to establish connections

• Bandwidth: width of the pipe

– Considerations

• Appropriate message passing framework

• Redesign algorithms to those that are less natural

Shared Memory Problems

• Memory contention

– Single bus inexpensive, sequential access

– Crossbar switches, parallel access but

expensive

• Cache Coherence

– Cache doesn’t match memory

• Write through writes all changes immediately

• Write back writes dirty line when expelled

– Processor cache requires broadcast of

changes and complex coherence algorithms

Distributed Memory

• Possible to use commodity systems

• Relatively inexpensive interconnects

• Requires message passing, which

programmers tend to find difficult

• Must deal with network, topology, and

security issues

• Hybrid systems are distributed, but

present a system to programmers as

shared, but with performance loss

Network Terminology

•

•

•

•

•

•

Latency – Time to send “null”, zero length message

Bandwidth – Maximum transmission rate (bits/sec)

Total edges – Total number of network connections

Degree – Maximum connections per network node

Connectivity – Minimum connections to disconnect

Bisection width – Number of connections to cut the

network into equal two parts

• Diameter – Maximum hops connecting two nodes

• Dilation – Number of extra hops needed to map one

topology to another

Web-based Networks

•

•

•

•

•

•

•

•

•

•

•

Generally uses TCP/IP protocol

The number of hops between nodes is not constant

Communication incurs high latencies

Nodes scattered over large geographical distances

High Bandwidths possible after connection established

The slowest link along the path limits speed

Resources are highly heterogeneous

Security becomes a major concern; proxies often used

Encryption algorithms can require significant overhead.

Subject to local policies at each node

Example: www.globus.org

Routing Techniques

• Packet Switching

– Message packets routed separately; assembled at the sink

• Deadlock free

– Guarantees sufficient resources to complete transmission

• Store and Forward

– Messages stored at node before transmission continues

• Cut Through

– Entire path of transmission established before transmission

• Wormhole Routing

– Flits (a couple of bits) held at each node; the “worm” of

flits move when the next node becomes available

Myranet

•

•

•

•

•

•

•

Proprietary technology (http://www.myri.com)

Point-to-point, full-duplex switch based technology

Custom chip settings for parallel topologies

Lightweight transparent cut-through routing protocol

Thousands of processors without TCP/IP limitations

Can embed TCP/IP messages to maximize flexibility

Gateway for wide area heterogeneous networks

Rectangles: Processors, Circles: Switches

Type

Bandwidth

Latency

Ethernet

10 MB/sec

10-15 ms

GB Ethernet

10 GB/sec

150 us

Myranet

10 GB/sec

2us

Parallel Techniques

• Peer-to-peer

– Independent systems coordinate to run a single

application

– Mechanisms: Threads, Message Passing

• Client-server

– Server responds to many clients running many

applications

– Mechanisms: Remote Method Invocation, Sockets

The focus is this class is peer-to-peer applications

Popular Network Topologies

•

•

•

•

•

•

•

Fully Connected and Star

Line and Ring

Tree and Fat Tree

Mesh and Torus

Hypercube

Hybrids: Pyramid

Multi-stage: Myrinet

Fully Connected and Star

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Line and Ring

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Tree and Fat Tree

Edges Connecting Node at level k to k-1 are twice the

number of edges connecting a Node from level k-2 to k-1

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Mesh and Torus

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Hypercube

•A Hypercube of degree zero is a single node

•A Hypercube of degree d is two hypercubes of degree d-1

With edges connecting the corresponding nodes

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Pyramid

A Hybrid Network Combining a mesh and a Tree

Degree?

Connectivity?

Total edges?

Bisection width?

Diameter?

Multistage Interconnection Network

Example: Omega network

switch elements with

straight-through or

crossover connections

000

001

010

011

Inputs

1.26

000

001

010

011

Outputs

100

101

100

101

110

111

110

111

Distributed Shared Memory

Making main memory of group of interconnected

computers look as though a single memory with single

address space. Then can use shared memory

programming techniques.

Interconnection

network

Messages

Processor

Shared

memory

Computers

1.27

Parallel Performance Metrics

• Complexity (Big Oh Notation)

– f(n) = O(g(n)) if for constants z, c>0 f(n)≤c g(n) when n>z

• Speed up: s(p) = t1/tp

• Cost: C(p) = tp*p

• Efficiency: E(p) = t1/C(p)

• Scalability

– Imprecise term to measure impact of adding processors

– We might say an application scales to 256 processors

– Can refer to hardware, software, or both

Parallel Run Time

• Sequential execution time: t1

– t1 = Computation time of best sequential algorithm

• Communication overhead: Tcomm = m(tstartup + ntdata)

–

–

–

–

tstartup = latency (time to send a message with no data)

tdata = time to send one data element

n = number of data elements

m = number of messages

• Computation overhead: tcomp=f (n, p))

• Parallel execution time: tp = tcomp + tcomm

– Tp = reflects the worst case execution time over all processors

Estimating Scalability

Parallel Visualization Tools

Observe using a space-time diagram (or process-time diagram)

Process 1

Process 2

Process 3

Computing

Time

Waiting

Message-passing system routine

Message

Superlinear speed-up (s(p)>p)

Reasons for:

1. Non-optimal sequential algorithm

a. Solution: Compare to an optimal sequential algorithm

b. Parallel versions are often different from sequential versions

2. Specialized hardware on certain processors

a. Processor has fast graphics but computes slow

b. NASA superlinear distributed grid application

3. Average case doesn’t match single run

a. Consider a search application

b. What are the speed-up possibilities?

Speed-up Potential

• Amdahl’s “pessimistic” law

– Fraction of Sequential processing (f) is fixed

– S(p) = t1/ (f * t1 + (1-f)t1/p) → 1/f as p →∞

• Gustafson’s “optimistic” law

– Greater data implies parallel portion grows

– Assumes more capability leads to more data

– S(p) = f + (1-f)*p

• For each law

– What is the best speed-up if f=.25?

– What is the speed-up for 16 processors?

• Which assumption if valid?

Challenges

• Running multiple instances of a sequential

program won’t make effective use of

parallel resources

• Without programmer optimizations,

additional processors will not improve

overall system performance

• Connect networks of systems together in a

peer-to-peer manner

Solutions

• Rewrite and parallel existing programs

– Algorithms for parallel systems are drastically

different than those that execute sequentially

• Translation programs that automatically

parallelize serial programs.

– This is very difficult to do.

– Success has been limited.

• Operating system thread/process allocation

– Some benefit, but not a general solution

Compute and merge results

• Serial Algorithm:

result = 0;

for (int i=0; i<N; i++) { sum += merge(compute(i)); }

• Parallel Algorithm (first try) :

– Each processor, P, performs N/P computations

– IF P>0 THEN Send partial results to master (P=0)

– ELSE receive and merge partial results

Is this the best we can do?

How many compute calls? How many merge calls?

How is work distributed among the processors?

Multiple cores forming a global

sum

Copyright © 2010,

Elsevier Inc. All rights

•How many merges must the master do?

•Suppose 1024 processors. Then how many

merges would the master do?

•Note the difference from the first approach

Parallel Algorithms

• Problem: Three helpers must mow, weed

eat, and pull weeds on a large field

• Task Level

– Each helper perform one of the tasks over the

entire large field

• Data Level

– Each helper do all three tasks over one third

of the field

Case Study

• Millions of doubles

• Thousands of bins

• We want to create a histogram of the

number of values present in each bin

• How would we program this sequentially?

• What parallel algorithm would we use?

– Using a shared memory system

– Using a distributed memory system

In This Class We

• Investigate converting sequential

programs to make use of parallel facilities

• Devise algorithms that are parallel in

nature

• Use C with Industry Standard Extensions

– Message-Passing Interface (MPI) via mpich

– Posix Threads (Pthreads)

– OpenMP

Parallel Program Development

• Cautions

– Parallel program programming is harder than sequential programming

– Some algorithms don’t lend themselves to running in parallel

• Advised Steps of Development

–

–

–

–

–

–

Step 1: Program and test as much as possible sequentially

Step 2: Code the Parallel version

Step 3: Run in parallel; one processor with few threads

Step 4: Add more threads as confidence grows

Step 5: Run in parallel with a small number of processors

Step 6: Add more processes as confidence grows

• Tools

–

–

–

–

There are parallel debuggers that can help

Insert assertion error checks within the code

Instrument the code (add print statements)

Timing: time(), gettimeofday(), clock(), MPI_Wtime()