Lecture 3: Deconvolution and FRET

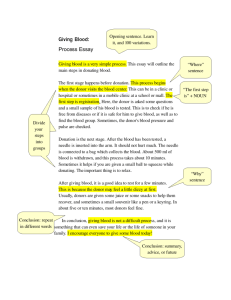

Today’s topic (Lec 3, prep for Labs 1 & 2): 3-D imaging, i.e. how to get a nice 2-D image when your samples are 3-D (deconvolution, with point scanning or with wide-field imaging), and getting distances out with FRET: donor quenching, sensitized acceptor emission, and orientation effects. You should have read the book chapter on microscopy AND either the Lab 1 or the Lab 2 handout.

Paul Selvin

(Last time) Confocal (pinhole) microscopy
A way to improve the z-resolution by collecting less out-of-focus fluorescence, via a pinhole and scanning excitation with focused light. Great especially for thick samples, but it takes time, needs complicated optics, and requires fluorophores that are very stable. (Why?)
[Figure: focused light creates fluorescence; in-focus light gets to the detector, out-of-focus light mostly gets rejected.]
Confocal microscopy prevents out-of-focus blur from being detected by placing a pinhole aperture between the objective and the detector, through which only in-focus light rays can pass.
http://micro.magnet.fsu.edu/primer/digitalimaging/deconvolution/deconintro.html

[Figure panels: fluorescence (dark-field) imaging, point-spread, deconvolution imaging.]
http://www.microscopyu.com/articles/confocal/confocalintrobasics.html

What if you let all the light in (no pinhole) and don’t scan, i.e. use wide-field excitation? Can you mathematically discriminate against out-of-focus light?

Deconvolution
For each focal plane in the specimen, a corresponding image plane is recorded by the detector and subsequently stored in a data analysis computer. During deconvolution analysis, the entire series of optical sections is analyzed to create a three-dimensional montage. By knowing the (mathematical) transfer function, can you do better? These are called deconvolution techniques. Common technique: take a series of images along the z-axis (a z-stack) and then unscramble them.
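To make "knowing the transfer function" concrete, here is a minimal sketch, assuming NumPy and a 1-D profile along z. It models wide-field image formation as the object convolved with the PSF (equivalently, the object's spectrum multiplied by the optical transfer function) and then inverts it with a standard Wiener filter. The Gaussian PSF, the periodic boundary, the constant `k`, and the names `gaussian_psf`, `blur`, and `wiener_deconvolve` are all illustrative assumptions, not the software used in lab.

```python
import numpy as np

def gaussian_psf(n, sigma):
    """Hypothetical Gaussian PSF on an n-point periodic grid, centered at index 0."""
    z = np.fft.fftfreq(n) * n                 # offsets 0, 1, ..., -1 (wrap-around order)
    psf = np.exp(-0.5 * (z / sigma) ** 2)
    return psf / psf.sum()                    # normalized: blurring conserves total light

def blur(obj, psf):
    """Forward model: object convolved with the PSF = its spectrum times the transfer function."""
    return np.real(np.fft.ifft(np.fft.fft(obj) * np.fft.fft(psf)))

def wiener_deconvolve(image, psf, k=1e-3):
    """Wiener inverse filter; k is a hypothetical noise-to-signal constant that stops
    frequencies the optics barely transmit from being amplified without limit."""
    otf = np.fft.fft(psf)
    g = np.conj(otf) / (np.abs(otf) ** 2 + k)
    return np.real(np.fft.ifft(np.fft.fft(image) * g))

# Two fluorescent planes along z, blurred by the optics and then restored.
obj = np.zeros(64)
obj[20], obj[40] = 1.0, 0.5
psf = gaussian_psf(64, sigma=2.0)
img = blur(obj, psf)
restored = wiener_deconvolve(img, psf)
print(np.linalg.norm(img - obj), np.linalg.norm(restored - obj))  # restoration error is smaller
```

Dividing by the transfer function alone (`k = 0`) would blow up wherever the optics pass almost nothing; the constant `k` is the simplest way to keep that under control.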
http://micro.magnet.fsu.edu/primer/digitalimaging/deconvolution/deconintro.html

Wide-field deconvolution imaging
There are many ways of doing this. You will use this one on the Nikon: http://www.meyerinst.com/imagingsoftware/autoquant/index.htm
How do you figure out what out-of-focus light gets through? Simplest way: make a 2-D sample, scan through it in z, and then back-calculate.

Deconvolution: 2 techniques, deblurring and image restoration
Deblurring algorithms are fundamentally two-dimensional: they subtract away the average of the nearest neighbors in a 3-D stack. For example, the nearest-neighbor algorithm operates on plane z by blurring the neighboring planes (z + 1 and z - 1, using a digital blurring filter), then subtracting the blurred planes from plane z.
+ Computationally simple.
- Adds noise and reduces signal; sometimes distorts the image.
In contrast, image restoration algorithms are properly termed "three-dimensional" because they operate simultaneously on every pixel in a three-dimensional image stack. Instead of subtracting blur, they attempt to reassign blurred light to the proper in-focus location.
http://micro.magnet.fsu.edu/primer/digitalimaging/deconvolution/deconalgorithms.html

Deconvolution: image restoration
Instead of subtracting blur (as deblurring methods do), image restoration algorithms attempt to reassign blurred light to the proper in-focus location. This is performed by reversing the convolution operation inherent in the imaging system. If the imaging system is modeled as a convolution of the object with the point spread function (PSF), then a deconvolution of the raw image should restore the object. However, you never know the PSF perfectly: it varies from point to point, especially as a function of z and of color. You guess it or take some average.
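The nearest-neighbor deblurring step described above can be sketched as follows, assuming NumPy and a stack shaped `(nz, ny, nx)`. The 3x3 mean filter stands in for "a digital blurring filter", the weight `c = 0.45` is a hypothetical choice, and skipping neighbors that fall outside the stack is an assumed edge rule; `box_blur` and `nearest_neighbor_deblur` are illustrative names, not AutoQuant functions.

```python
import numpy as np

def box_blur(plane):
    """3x3 mean filter with edge padding; a stand-in for the 'digital blurring filter'."""
    h, w = plane.shape
    padded = np.pad(plane, 1, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0

def nearest_neighbor_deblur(stack, c=0.45):
    """Deblur each plane z of a (nz, ny, nx) stack by subtracting
    c * (blur(plane z-1) + blur(plane z+1)). c is a hypothetical weight;
    neighbors that fall outside the stack are simply skipped."""
    stack = np.asarray(stack, dtype=float)
    nz = stack.shape[0]
    out = stack.copy()
    for z in range(nz):
        for nb in (z - 1, z + 1):
            if 0 <= nb < nz:
                out[z] -= c * box_blur(stack[nb])
    # Negative intensities are unphysical; clipping them is one way signal gets lost.
    return np.clip(out, 0.0, None)

# Hypothetical 3-plane stack: one bright spot in the middle plane, uniform haze in the others.
stack = np.zeros((3, 5, 5))
stack[1, 2, 2] = 9.0
stack[0] = stack[2] = 0.2
deblurred = nearest_neighbor_deblur(stack)
print(deblurred[1, 2, 2], deblurred[0, 2, 2])
```

Each plane needs only two 2-D filter passes, which is why the method is cheap; the subtraction and clipping also remove some genuine signal, which matches the minus column above.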
(De-)Convolution: get crisp images

Blind deconvolution
An image reconstruction technique in which both the object and the PSF are estimated. Another family of iterative algorithms uses probabilistic error criteria borrowed from statistical theory: likelihood, a reverse variation of probability, is employed in maximum likelihood estimation (MLE). Blind deconvolution was developed by altering the MLE procedure so that not only the object but also the point spread function is estimated. Using this approach, an initial estimate of the object is made, and the estimate is then convolved with a theoretical point spread function calculated from the optical parameters of the imaging system. The resulting blurred estimate is compared with the raw image, a correction is computed, and this correction is employed to generate a new estimate. The same correction is also applied to the point spread function, generating a new point spread function estimate. In further iterations, the point spread function estimate and the object estimate are updated together.
[Figure: not-deconvolved vs. blind-deconvolved images. Images: AutoQuant (Briggs, Biophotonics 2004).]

Different deconvolution algorithms
The original (raw) image is illustrated in Figure 3(a). The results of deblurring by a nearest-neighbor algorithm appear in Figure 3(b), with processing parameters set for 95 percent haze removal. The same image slice is illustrated after deconvolution by an inverse (Wiener) filter (Figure 3(c)) and by iterative blind deconvolution (Figure 3(d)), incorporating an adaptive point spread function method.
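The "estimate, re-blur, compare with the raw image, correct" loop described above can be sketched with the Richardson-Lucy algorithm, a classic MLE deconvolution for photon-counting (Poisson) noise. This is a swapped-in textbook example, not necessarily what AutoQuant implements. It assumes NumPy, a 1-D signal, and a known, fixed, odd-length PSF; a blind variant would also apply an analogous multiplicative correction to the PSF on every iteration.

```python
import numpy as np

def richardson_lucy(image, psf, n_iter=100, eps=1e-12):
    """Richardson-Lucy deconvolution of a 1-D signal with a known, odd-length PSF."""
    image = np.asarray(image, dtype=float)
    psf = np.asarray(psf, dtype=float)
    psf = psf / psf.sum()                          # PSF conserves total light
    psf_mirror = psf[::-1]                         # convolving with the mirrored PSF applies the adjoint
    estimate = np.full_like(image, image.mean())   # flat, positive initial guess
    for _ in range(n_iter):
        reblurred = np.convolve(estimate, psf, mode="same")       # blur the current estimate
        ratio = image / np.maximum(reblurred, eps)                # compare with the raw image
        estimate *= np.convolve(ratio, psf_mirror, mode="same")   # multiplicative correction
    return estimate

# Two point sources 10 samples apart, blurred by a hypothetical Gaussian PSF.
z = np.arange(-6, 7)
psf = np.exp(-0.5 * (z / 2.0) ** 2)
psf /= psf.sum()
obj = np.zeros(41)
obj[[15, 25]] = 1.0
raw = np.convolve(obj, psf, mode="same")
restored = richardson_lucy(raw, psf)
print(raw.max(), restored.max())  # the restored peaks are taller (sharper) than the raw ones
```

Because every update is a multiplication by a non-negative ratio, the estimate never goes negative, unlike the subtraction used in deblurring.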
http://micro.magnet.fsu.edu/primer/digitalimaging/deconvolution/deconalgorithms.html

Fluorescence Resonance Energy Transfer (FRET)
A spectroscopic ruler for measuring nm-scale distances and binding.
$$E = \frac{1}{1 + (R/R_0)^6}$$
[Figure: energy-transfer efficiency E (0 to 1.0) vs. donor-acceptor distance R (0 to 100 Å) for $R_0$ = 50 Å.]
[Figure: donor (D) and acceptor (A) emission vs. time; dipole-dipole, distance-dependent energy transfer.] Look at the relative amounts of green (donor) and red (acceptor) emission.

FRET works: first shown in 1967 (Haugland and Stryer, PNAS).

How to measure energy transfer (E.T.)
1. Donor intensity decrease;
2. Donor lifetime decrease;
3. Acceptor increase.

E.T. by changes in the donor: need to compare two samples, D-only and D-A.
$$E = 1 - \frac{I_{DA}}{I_D} = 1 - \frac{\tau_{DA}}{\tau_D}$$
where $I_{DA}$ and $\tau_{DA}$ are the donor’s intensity and excited-state lifetime in the presence of acceptor, and $I_D$ and $\tau_D$ are the same but without the acceptor.

E.T. by increase in the acceptor: measure the acceptor fluorescence and compare it to the residual donor emission. Need to compare one sample at two wavelengths (λ) and also measure the donor and acceptor quantum yields.

[Figure: D and A emission vs. time, with a measurable E.T. signal.] E.T. leads to a decrease in donor emission and an increase in acceptor emission.
http://mekentosj.com/science/fret/

Example of FRET: fluorescein (donor) and rhodamine (acceptor).

Example of FRET via acceptor emission (excitation = 488 nm, fluorescein-rhodamine)
From the "extracted acceptor emission": you can determine how much direct acceptor fluorescence there is by shining a second wavelength at the acceptor where the donor doesn’t absorb. (For the Fl-Rh pair, go to the red, about 550 nm.) Then multiply this by the relative absorbance of Rh at 488/550 nm!

Terms in $R_0$
$$E = \frac{1}{1 + (R/R_0)^6}, \qquad R_0 = 0.21\,(J\,q_D\,n^{-4}\,\kappa^2)^{1/6} \ \text{(in Å)}$$
• $J$ is the normalized spectral overlap of the donor emission ($f_D$) and acceptor absorption ($\varepsilon_A$). Does the donor emit where the acceptor absorbs?
• $q_D$ is the quantum efficiency (or quantum yield) for donor emission in the absence of acceptor ($q_D$ = number of photons emitted divided by number of photons absorbed).
• $n$ is the index of refraction (1.33 for water; 1.29 for many organic molecules).
• $\kappa^2$ is a geometric factor related to the relative orientation of the transition dipoles of the donor and acceptor and their relative orientation in space. Very important; often set to 2/3.

[Figure: spectral overlap between donor (CFP) and acceptor (YFP); $R_0$ ≈ 49-52 Å.]

$\kappa^2$: orientation factor
The spatial relationship between the DONOR emission dipole moment and the ACCEPTOR absorption dipole moment:
$$\kappa^2 = (\cos\theta_{DA} - 3\cos\theta_D\cos\theta_A)^2, \qquad 0 \le \kappa^2 \le 4, \ \text{often } \kappa^2 = 2/3$$
[Figure: donor (D) and acceptor (A) dipoles on x, y, z axes, joined by the vector R, with angles $\theta_D$, $\theta_A$, $\theta_{DA}$.]
where $\theta_{DA}$ is the angle between the donor and acceptor transition dipole moments, and $\theta_D$ ($\theta_A$) is the angle between the donor (acceptor) transition dipole moment and the vector R joining the two dyes. $\kappa^2$ ranges from 0 if all angles are 90° to 4 if all angles are 0°, and equals 2/3 if the donor and acceptor rapidly and completely rotate during the donor excited-state lifetime.
$\kappa^2$ is usually not known and is assumed to have a value of 2/3 (randomized distribution). This assumes that the D and A probes exhibit a high degree of rotational motion. You can measure whether the donor and acceptor randomize by looking at polarization.
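The FRET relations on these slides reduce to a few lines of arithmetic. The sketch below assumes NumPy, and every function name is a hypothetical helper rather than a library API. `forster_r0` uses the coefficient 0.211 (the slide rounds it to 0.21), which assumes $J$ is given in M⁻¹ cm⁻¹ nm⁴. `sensitized_acceptor` assumes equal excitation intensity at 488 and 550 nm and no donor absorption at 550 nm. The $\kappa^2$ check averages $\kappa^2$ itself over uniformly random, independent dipole orientations, which is the fast-rotation limit behind the 2/3 value.

```python
import numpy as np

# Hypothetical helpers illustrating the slide formulas; names are not from any library.

def fret_efficiency(r, r0):
    """E = 1 / (1 + (R/R0)^6); r and r0 in the same units (e.g. angstroms)."""
    return 1.0 / (1.0 + (r / r0) ** 6)

def distance_from_efficiency(e, r0):
    """Invert E(R): R = R0 * (1/E - 1)^(1/6), valid only for 0 < E < 1."""
    if not 0.0 < e < 1.0:
        raise ValueError("E must lie strictly between 0 and 1")
    return r0 * (1.0 / e - 1.0) ** (1.0 / 6.0)

def efficiency_from_donor(with_acceptor, donor_only):
    """E = 1 - I_DA/I_D, or equally 1 - tau_DA/tau_D for lifetimes."""
    return 1.0 - with_acceptor / donor_only

def sensitized_acceptor(acc_ex488, acc_ex550, abs_488, abs_550):
    """Acceptor emission under 488 nm excitation minus its directly excited part.

    Direct part = acceptor emission under 550 nm excitation (donor does not absorb),
    scaled by the acceptor absorbance ratio 488/550. Assumes equal excitation intensity.
    """
    return acc_ex488 - acc_ex550 * (abs_488 / abs_550)

def forster_r0(j, q_d, n, kappa2=2.0 / 3.0):
    """R0 in angstroms, assuming J in M^-1 cm^-1 nm^4 (coefficient 0.211)."""
    return 0.211 * (j * q_d * n ** -4 * kappa2) ** (1.0 / 6.0)

def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def kappa_squared(d, a, r):
    """(cos theta_DA - 3 cos theta_D cos theta_A)^2 for donor dipole d, acceptor
    dipole a, and separation vector r; accepts single vectors or (N, 3) arrays."""
    d, a, r = _unit(d), _unit(a), _unit(r)
    cos_da = np.sum(d * a, axis=-1)
    cos_d = np.sum(d * r, axis=-1)
    cos_a = np.sum(a * r, axis=-1)
    return (cos_da - 3.0 * cos_d * cos_a) ** 2

def isotropic_kappa2(n_samples=200_000, seed=0):
    """Monte Carlo average of kappa^2 over uniformly random, independent orientations."""
    rng = np.random.default_rng(seed)
    d = rng.normal(size=(n_samples, 3))  # normalized Gaussian vectors are uniform on the sphere
    a = rng.normal(size=(n_samples, 3))
    return float(np.mean(kappa_squared(d, a, [0.0, 0.0, 1.0])))

print(fret_efficiency(50.0, 50.0))       # 0.5 at R = R0
print(efficiency_from_donor(2.0, 4.0))   # donor lifetime halved -> 0.5
z = [0.0, 0.0, 1.0]
print(kappa_squared(z, z, z))                                 # all angles 0 degrees -> 4.0
print(kappa_squared([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], z))     # all angles 90 degrees -> 0.0
print(isotropic_kappa2())                                     # Monte Carlo estimate near 2/3
```

Because $R_0$ depends on the sixth root of $\kappa^2$, even a large error in the assumed orientation factor changes $R_0$ only modestly. For example, a 64-fold change in $J\,q_D\,n^{-4}\,\kappa^2$ only doubles $R_0$.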