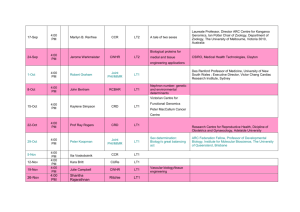

06-04-18 Wayne State..

Rapidly Constructing

Integrated Applications from

Online Sources

Craig A. Knoblock

Information Science Institute

University of Southern California

Motivating Example

BiddingForTravel.com

Priceline

?

Map

Orbitz

Outline

Extracting data from unstructured and

ungrammatical sources

Automatically discovering models of sources

Dynamically building integration plans

Efficiently executing the integration plans

Ungrammatical & Unstructured Text

For simplicity “posts”

Goal:

<price>$25</price> <hotelName>holiday inn sel.</hotelName> <hotelArea>univ. ctr.</hotelArea>

Wrapper based IE does not apply (e.g. Stalker, RoadRunner)

NLP based IE does not apply (e.g. Rapier)

Reference Sets

IE infused with outside knowledge

“Reference Sets”

Collections of known entities and the associated

attributes

Online (offline) set of docs

CIA World Fact Book

Online (offline) database

Comics Price Guide, Edmunds, etc.

Algorithm Overview – Use of Ref Sets

Post:

$25 winning bid at

holiday inn sel. univ. ctr.

Reference Set:

Holiday Inn Select

Hyatt Regency

Ref_hotelName

University Center

Downtown

Ref_hotelArea

Record Linkage

$25 winning bid at

holiday inn sel. univ. ctr.

Holiday Inn Select University Center

“$25”, “winning”, “bid”, …

Extraction

$25 winning bid … <price> $25 </price> <hotelName> holiday inn sel. </hotelName> <hotelArea> univ. ctr. </hotelArea>

<Ref_hotelName> Holiday Inn Select </Ref_hotelName>

<Ref_hotelArea> University Center </Ref_hotelArea>

Our Record Linkage Problem

Posts are not yet decomposed into attributes.

Extra tokens that match nothing in Ref Set.

Post:

“$25 winning bid at holiday inn sel. univ. ctr.”

Reference Set:

| hotel name | hotel area |
|---|---|
| Holiday Inn | Greentree |
| Holiday Inn Select | University Center |
| Hyatt Regency | Downtown |

Our Record Linkage Solution

P = “$25 winning bid at holiday inn sel. univ. ctr.”

Record Level Similarity + Field Level Similarities

VRL = < RL_scores(P, “Hyatt Regency Downtown”),

RL_scores(P, “Hyatt Regency”),

RL_scores(P, “Downtown”)>

Binary Rescoring

Best matching member of the reference set for the post
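The scoring idea on this slide can be sketched in a few lines. This is a minimal illustration, not the Phoebus implementation: `token_score` is a hypothetical prefix-overlap measure standing in for the slide's `RL_scores`, and summing the vector stands in for the learned binary rescoring step.

```python
import re

def tokens(text):
    """Lowercase alphanumeric tokens (a leading '$' is kept so prices stay intact)."""
    return re.findall(r"[a-z0-9$]+", text.lower())

def token_score(post_tokens, ref_text):
    """Hypothetical score: fraction of reference tokens that some post token prefixes."""
    ref_tokens = tokens(ref_text)
    hits = sum(any(r.startswith(p) for p in post_tokens) for r in ref_tokens)
    return hits / len(ref_tokens)

def rl_vector(post, record):
    """V_RL: one record-level score followed by one score per field."""
    p = tokens(post)
    return [token_score(p, " ".join(record))] + [token_score(p, f) for f in record]

def best_match(post, reference_set):
    """Assumption: rank by the sum of the vector instead of a trained classifier."""
    return max(reference_set, key=lambda rec: sum(rl_vector(post, rec)))

POST = "$25 winning bid at holiday inn sel. univ. ctr."
REFERENCE_SET = [
    ("Holiday Inn", "Greentree"),
    ("Holiday Inn Select", "University Center"),
    ("Hyatt Regency", "Downtown"),
]

print(best_match(POST, REFERENCE_SET))
```

The field-level scores are what separate “Holiday Inn / Greentree” from “Holiday Inn Select / University Center”: both match the name tokens, but only the second also matches an area token.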

Extraction Algorithm

Post:

$25 winning bid at holiday inn sel. univ. ctr.

Generate VIE

VIE = <common_scores(token), IE_scores(token, attr1), IE_scores(token, attr2), …>

Multiclass SVM

Clean Whole Attribute

Result:

price: $25

hotel name: holiday inn sel.

hotel area: univ. ctr.
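A rough sketch of the token-labeling step, under loud assumptions: a first-letter-plus-subsequence test (`abbreviates`, a hypothetical helper) replaces the `IE_scores` features, a fixed rule replaces the multiclass SVM, and the "Clean Whole Attribute" step is omitted. The attribute names follow the tags used earlier in the slides.

```python
import re

def is_subsequence(short, long):
    """True if the characters of `short` appear in `long` in order."""
    it = iter(long)
    return all(ch in it for ch in short)

def abbreviates(tok, word):
    """Hypothetical test: same first letter and tok is a subsequence of word."""
    return tok[:1] == word[:1] and is_subsequence(tok, word)

def label_tokens(post, ref_record):
    """Label each token with an attribute of the matched reference record, or None."""
    labels = []
    for tok in post.split():
        if re.fullmatch(r"\$\d+(\.\d\d)?", tok):   # stand-in for common_scores
            labels.append((tok, "price"))
            continue
        bare = tok.lower().strip(".,")
        label = None
        for attr, value in ref_record.items():     # stand-in for IE_scores + SVM
            if any(abbreviates(bare, w) for w in value.lower().split()):
                label = attr
                break
        labels.append((tok, label))
    return labels

def extract(post, ref_record):
    """Group labeled tokens into attribute values; unlabeled tokens are dropped."""
    fields = {}
    for tok, label in label_tokens(post, ref_record):
        if label:
            fields.setdefault(label, []).append(tok)
    return {attr: " ".join(toks) for attr, toks in fields.items()}

POST = "$25 winning bid at holiday inn sel. univ. ctr."
REF = {"hotelName": "Holiday Inn Select", "hotelArea": "University Center"}
print(extract(POST, REF))
```

Because the reference record is already known from record linkage, each token only has to be compared against a handful of attribute values rather than the whole reference set.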

Experimental Data Sets

Hotels

Posts

1125 posts from www.biddingfortravel.com

Pittsburgh, Sacramento, San Diego

Star rating, hotel area, hotel name, price, date booked

Reference Set

132 records

Special posts on BFT site.

Per area – list any hotels ever bid on in that area

Star rating, hotel area, hotel name

Comparison to Existing Systems

Record Linkage

WHIRL

RL allows non-decomposed attributes

Information Extraction

Simple Tagger (CRF)

State-of-the-art IE

Amilcare

NLP based IE

Record linkage results

| Hotel | Prec. | Recall | F-Measure |
|---|---|---|---|
| Phoebus | 93.60 | 91.79 | 92.68 |
| WHIRL | 83.52 | 83.61 | 83.13 |

10 trials – 30% train, 70% test

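Token level Extraction results: Hotel domain

| Attribute | System | Prec. | Recall | F-Measure | Freq |
|---|---|---|---|---|---|
| Area | Phoebus | 89.25 | 87.50 | 88.28 | 809.7 |
| Area | Simple Tagger | 92.28 | 81.24 | 86.39 | |
| Area | Amilcare | 74.2 | 78.16 | 76.04 | |
| Date | Phoebus | 87.45 | 90.62 | 88.99 | 751.9 |
| Date | Simple Tagger | 70.23 | 81.58 | 75.47 | |
| Date | Amilcare | 93.27 | 81.74 | 86.94 | |
| Name | Phoebus | 94.23 | 91.85 | 93.02 | 1873.9 |
| Name | Simple Tagger | 93.28 | 93.82 | 93.54 | |
| Name | Amilcare | 83.61 | 90.49 | 86.90 | |
| Price | Phoebus | 98.68 | 92.58 | 95.53 | 850.1 |
| Price | Simple Tagger | 75.93 | 85.93 | 80.61 | |
| Price | Amilcare | 89.66 | 82.68 | 85.86 | |
| Star | Phoebus | 97.94 | 96.61 | 97.84 | 766.4 |
| Star | Simple Tagger | 97.16 | 97.52 | 97.34 | |
| Star | Amilcare | 96.50 | 92.26 | 94.27 | |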

Not Significant

Outline

Extracting data from unstructured and

ungrammatical sources

Automatically discovering models of sources

Dynamically building integration plans

Efficiently executing the integration plans

Discovering Models of Sources

Required for Integration

Provide uniform access to heterogeneous sources

Source definitions are used to reformulate queries

New service, no source model, no integration!

Can we discover models automatically?

Query (posed to the Mediator):

SELECT MIN(price)
FROM flight
WHERE depart="MXP"
AND arrive="PIT"

Source Definitions (used by the Mediator):

- United

- Lufthansa

- Qantas

Reformulated Query (sent to the Web Services):

calcPrice("MXP","PIT","economy")

Web Services: United, Lufthansa, Qantas

New service: Alitalia

Inducing Source Definitions:

A Simple Example

Step 1: use metadata to classify input types

Step 2: invoke service and classify output types

Semantic Types:

currency {USD, EUR, AUD}

rate {1936.2, 1.3058, 0.53177}

Predicates:

exchange(currency,currency,rate)

Known source (in the Mediator):

LatestRates($country1,$country2,rate) :- exchange(country1,country2,rate)

New source:

RateFinder($fromCountry,$toCountry,val) :- ?

Inputs classified as currency, output classified as rate, from sample tuples:

{<EUR,USD,1.30799>,<USD,EUR,0.764526>,…}

Inducing Source Definitions:

A Simple Example

Step 3: generate plausible source definitions

Step 4: reformulate in terms of other sources

Step 5: invoke service and compare output

New source:

RateFinder($fromCountry,$toCountry,val) :- ?

Predicates:

exchange(currency,currency,rate)

Plausible definitions:

def_1($from, $to, val) :- exchange(from,to,val)

def_2($from, $to, val) :- exchange(to,from,val)

Reformulated in terms of the known source (via the Mediator):

def_1($from, $to, val) :- LatestRates(from,to,val)

def_2($from, $to, val) :- LatestRates(to,from,val)

| Input | RateFinder | Def_1 | Def_2 |
|---|---|---|---|
| <EUR,USD> | 1.30799 | 1.30772 | 0.764692 |
| <USD,EUR> | 0.764526 | 0.764692 | 1.30772 |
| <EUR,AUD> | 1.68665 | 1.68979 | 0.591789 |

match: Def_1
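Step 5 can be sketched as a small scoring loop. The rate values are the ones shown on the slide; the 1% relative tolerance and the names `LATEST_RATES`, `OBSERVED`, and `score` are assumptions made for this illustration, not part of the described system.

```python
# Values the known source LatestRates returns (taken from the slide).
LATEST_RATES = {
    ("EUR", "USD"): 1.30772,
    ("USD", "EUR"): 0.764692,
    ("EUR", "AUD"): 1.68979,
    ("AUD", "EUR"): 0.591789,
}

# Values observed when invoking the new source RateFinder (from the slide).
OBSERVED = {
    ("EUR", "USD"): 1.30799,
    ("USD", "EUR"): 0.764526,
    ("EUR", "AUD"): 1.68665,
}

# Candidate definitions, reformulated in terms of the known source.
CANDIDATES = {
    "def_1": lambda frm, to: LATEST_RATES[(frm, to)],
    "def_2": lambda frm, to: LATEST_RATES[(to, frm)],
}

def score(candidate, observed, tolerance=0.01):
    """Fraction of observed tuples the candidate reproduces within a relative tolerance."""
    hits = 0
    for (frm, to), actual in observed.items():
        predicted = candidate(frm, to)
        if abs(predicted - actual) / actual <= tolerance:
            hits += 1
    return hits / len(observed)

scores = {name: score(fn, OBSERVED) for name, fn in CANDIDATES.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

An approximate comparison is needed because the two services quote slightly different rates for the same currency pair; exact equality would reject the correct definition.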

The Framework

Intuition: Services often have similar semantics, so we

should be able to use what we know to induce that

which we don’t

Two phase algorithm

For each operation provided by the new service:

1. Classify its input/output data types

Classify inputs based on metadata similarity

Invoke operation & classify outputs based on data

2. Induce a source definition

Generate candidates via Inductive Logic Programming

Test individual candidates by reformulating them

Use Case: Zip Code Data

Single real zip-code service with multiple operations

The first operation is defined as:

getDistanceBetweenZipCodes($zip1, $zip2, distance) :-

centroid(zip1, lat1, long1),

centroid(zip2, lat2, long2),

distanceInMiles(lat1, long1, lat2, long2, distance).

Goal is to induce definition for a second operation:

getZipCodesWithin($zip1, $distance1, zip2, distance2) :-

centroid(zip1, lat1, long1),

centroid(zip2, lat2, long2),

distanceInMiles(lat1, long1, lat2, long2, distance2),

(distance2 ≤ distance1),

(distance1 ≤ 300).

Same service so no need to classify inputs/outputs or match

constants!
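Read operationally, the target definition for getZipCodesWithin amounts to the following sketch. The centroid table holds made-up placeholder zip codes and coordinates, and `distance_in_miles` assumes a great-circle (haversine) distance; the slides do not say how the real service computes it.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical centroid(zip, lat, long) facts, invented for illustration only.
CENTROIDS = {
    "zipA": (33.98, -118.45),
    "zipB": (34.02, -118.29),
    "zipC": (42.37, -83.08),
}
MAX_RADIUS = 300  # the (distance1 <= 300) condition in the definition

def distance_in_miles(lat1, long1, lat2, long2):
    """Assumed great-circle distance, with an Earth radius of 3958.8 miles."""
    dlat = radians(lat2 - lat1)
    dlong = radians(long2 - long1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlong / 2) ** 2
    return 2 * 3958.8 * asin(sqrt(a))

def get_zip_codes_within(zip1, distance1):
    """Return (zip2, distance2) pairs with distance2 <= distance1 <= MAX_RADIUS."""
    if distance1 > MAX_RADIUS:
        return []
    lat1, long1 = CENTROIDS[zip1]
    results = []
    for zip2, (lat2, long2) in CENTROIDS.items():
        distance2 = distance_in_miles(lat1, long1, lat2, long2)
        if distance2 <= distance1:
            results.append((zip2, distance2))
    return results

print(get_zip_codes_within("zipA", 20))
```

As in the datalog definition, nothing excludes zip1 itself, so the input zip code appears in its own result with distance 0.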

Generating definitions: ILP

Want to induce source definition for:

getZipCodesWithin($zip1, $distance1, zip2, distance2)

Predicates available for generating definitions:

{centroid, distanceInMiles, ≤, =}

New type signature contains that of known source

Use known definition as starting point for local search:

getDistanceBetweenZipCodes($zip1, $zip2, distance) :-

centroid(zip1, lat1, long1),

centroid(zip2, lat2, long2),

distanceInMiles(lat1, long1, lat2, long2, distance).

Plausible Source Definitions (cen = centroid, dIM = distanceInMiles):

1. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d1), (d2 = d1)

2. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d1), (d2 ≤ d1) [INVALID: d2 unbound!]

3. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d2), (d2 ≤ d1)

4. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d2), (d1 ≤ d2)

5. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d2), (d1 ≤ #d) [#d is a constant]

6. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d2), (lt1 ≤ d1) [UNCHECKABLE: lt1 inaccessible!]

…

n. cen(z1,lt1,lg1), cen(z2,lt2,lg2), dIM(lt1,lg1,lt2,lg2,d2), (d2 ≤ d1), (d1 ≤ #d)

Further slide annotation: contained in defs 2 & 4

Preliminary Results

Settings:

Number of zip code constants initially available: 6

Number of samples performed per trial: 20

Number of candidate definitions in search space: 5

Results:

Converged on “almost correct” definition!!!

getZipCodesWithin($zip1, $distance1, zip2, distance2) :-

centroid(zip1, lat1, long1),

centroid(zip2, lat2, long2),

distanceInMiles(lat1, long1, lat2, long2, distance2),

(distance2 ≤ distance1),

(distance1 ≤ 243).

Number of iterations to convergence: 12

Related Work

Classifying Web Services

(Hess & Kushmerick 2003), (Johnston & Kushmerick 2004)

Classify input/output/services using metadata/data

We learn semantic relationships between inputs & outputs

Category Translation

(Perkowitz & Etzioni 1995)

Learn functions describing operations available on internet

We concentrate on a relational modeling of services

CLIO

(Yan et al. 2001)

Helps users define complex mappings between schemas

They do not automate the process of discovering mappings

iMAP

(Dhamankar et al. 2004)

Automates discovery of certain complex mappings

Our approach is more general (ILP) & tailored to web sources

We must deal with problem of generating valid input tuples

Outline

Extracting data from unstructured and

ungrammatical sources

Automatically discovering models of sources

Dynamically building integration plans

Efficiently executing the integration plans

Dynamically Building

Integration Plans

Traditional Data Integration

Techniques

(1). SwissProtein: P36246

(2). GeneBank: AAS60665.1

………

Find information about all

proteins that participate in

Transcription process

Mediator

Dynamically Building

Integration Plans (Cont’d)

Problem Solved Here

Create a web service that

accepts a name of a biological

process, <bname>, and

returns information about

proteins that participate in it

New web service

Mediator

Problem Statement (Cont’d)

Assumption

Information-producing web service

operations

Applicability

Biological data web services

Geospatial services (WMS, WFS)

Other applications that do not focus on

transactions

Query-based Web Service

Composition

Query-based approach

View web service operations as source relations with

binding restrictions

Create domain ontology

Describe source relations in terms of domain relations

Can be inferred from WSDL

Combined Global-as-View / Local-as-View approach

Use data integration system to answer user queries

Template-based Web Service

Composition

Our goal is to compose new web services

We need to answer template queries, not specific

queries

Template-based Query Approach

Generate plans that take general parameter values into account

Easy to generate a universal plan

i.e. Universal Plan [Schoppers, et al.]

Plans that answer a template query as opposed to a specific query

But, plans can be very inefficient

Need to generate optimized “universal integration plans”

Example Scenario

Sources

HSProtein($id, name, location, function, seq, pubmedid)

MMProtein($id, name, location, function, seq, pubmedid)

Protein

TranducerProtein($id, name, location, taxonid, seq, pubmedid)

MembraneProtein($id, name, location, taxonid, seq, pubmedid)

DipProtein($id, name, location, taxonid, function)

Protein-Protein

Interactions

MMProteinInteractions($fromid, toid, source, verified)

HSProteinInteractions($fromid, toid, source, verified)

Example Rules and Query

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
HSProteinInteractions(fromid, toid, source, verified), (taxonid=9606)

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
MMProteinInteractions(fromid, toid, source, verified), (taxonid=10090)

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
ProteinProteinInteractions(fromid, itoid, taxonid, source, verified),
ProteinProteinInteractions(itoid, toid, taxonid, source, verified)

Q(fromid, toid, taxonid, source, verified) :-
fromid = !fromid,
taxonid = !taxonid,
ProteinProteinInteractions(fromid, toid, taxonid, source, verified)
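To see what these rules compute, here is a naive bottom-up evaluation as a sketch. The interaction facts in `HS` and `MM` are invented for illustration, and the fixpoint loop is a textbook evaluation strategy, not the mediator's planner.

```python
# Hypothetical source facts: (fromid, toid, source, verified).
HS = {("P1", "P2", "DIP", True), ("P2", "P3", "DIP", True), ("P3", "P4", "BIND", True)}
MM = {("M1", "M2", "DIP", False)}

def ppi_closure(hs, mm):
    """Derive all ProteinProteinInteractions facts from the three rules."""
    # Rules 1 and 2: each source implies a fixed taxonid.
    facts = {(f, t, 9606, s, v) for f, t, s, v in hs}
    facts |= {(f, t, 10090, s, v) for f, t, s, v in mm}
    # Rule 3: chain two facts sharing the intermediate id, taxonid, source, verified.
    while True:
        new = {
            (f, t, tax, s, v)
            for (f, i, tax, s, v) in facts
            for (i2, t, tax2, s2, v2) in facts
            if i == i2 and (tax, s, v) == (tax2, s2, v2)
        } - facts
        if not new:            # fixpoint reached: nothing further is derivable
            return facts
        facts |= new

def query(facts, fromid, taxonid):
    """Template query Q with its two parameters bound."""
    return sorted(fact for fact in facts if fact[0] == fromid and fact[2] == taxonid)

answers = query(ppi_closure(HS, MM), "P1", 9606)
print(answers)
```

Note that the recursive rule, as written, only chains interactions that agree on source and verified, so P1 reaches P3 through the two DIP facts but not P4 through the BIND fact.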

Unoptimized Plan

Optimized Plan

Exploit constraints in source description to

filter queries to sources

Example Scenario

Q1(fromid, fromname, fromseq, frompubid, toid, toname, toseq, topubid) :-
fromid = !fromproteinid,

Protein(fromid, fromname, loc1, f1, fromseq, frompubid, taxonid1),

ProteinProteinInteractions(fromid, toid, taxonid, source, verified),

Protein(toid, toname, loc2, f2, toseq, topubid, taxonid2)

[Figure: ComposedPlan. Input: Fromproteinid. Operators: Protein, Protein-Protein Interactions, Protein, Join. Intermediate results: (Fromproteinid, fromseq); (Fromproteinid, Toproteinid); (Fromproteinid, Toproteinid, toseq). Output: Fromproteinid, fromseq, Toproteinid, toseq.]

Example Integration Plan

Adding Sensing Operations for

Tuple-level Filtering

Compute original plan for a template query

For each constraint on the sources

Introduce constraint into the query

Rerun inverse rules algorithm

Compare cost of new plan to original plan

Save plan with lowest cost
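The loop above can be sketched as follows. Everything here is a stand-in: `toy_make_plan` replaces the inverse rules algorithm with a hard-coded lookup, cost is simply the number of source calls, and each constraint is tried on its own against the original query, which is one reading of the steps on the slide.

```python
def optimize_plan(query, constraints, make_plan, cost):
    """Try each source constraint in turn and keep the cheapest resulting plan."""
    best = make_plan(query)                   # original plan for the template query
    for constraint in constraints:
        candidate = make_plan(query | {constraint})   # re-plan with the constraint added
        if cost(candidate) < cost(best):
            best = candidate
    return best

def toy_make_plan(query):
    """Hypothetical planner: a taxonid constraint rules out the other source."""
    if "taxonid=9606" in query:
        return ["HSProteinInteractions"]
    if "taxonid=10090" in query:
        return ["MMProteinInteractions"]
    return ["HSProteinInteractions", "MMProteinInteractions"]

template = frozenset({"fromid=!fromid"})
plan = optimize_plan(template, ["taxonid=9606"], toy_make_plan, cost=len)
print(plan)
```

The cost comparison matters because a sensing operation is itself a source call; it only pays off when it prunes more work than it adds.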

Optimized Universal Integration Plan

Outline

Extracting data from unstructured and

ungrammatical sources

Automatically discovering models of sources

Dynamically building integration plans

Efficiently executing the integration plans

Dataflow-style, Streaming

Execution

Map datalog plans into streaming, dataflow execution

system (e.g., network query engine)

We use the Theseus execution system since it

supports recursion

Key challenges

Mapping non-recursive plans

Mapping recursive plans

Data processing

Loop detection

Query results update

Termination check

Recursive callback
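The recursive-plan challenges listed above map naturally onto a streaming loop. This sketch uses a Python generator and a work queue rather than Theseus itself; `fetch_interactions` is a hypothetical callable standing in for a web service call.

```python
from collections import deque

def stream_reachable(start, fetch_interactions):
    """Yield (start, toid) pairs as soon as they are discovered."""
    seen = {start}                  # loop detection: ids already expanded or queued
    queue = deque([start])
    while queue:                    # termination check: stop when no work is left
        current = queue.popleft()
        for toid in fetch_interactions(current):   # data processing: call the source
            if toid in seen:
                continue            # already handled, do not loop forever
            seen.add(toid)
            yield (start, toid)     # query results update: emit without waiting
            queue.append(toid)      # recursive callback: feed the result back in

# Hypothetical interaction graph containing a cycle P1 -> P2 -> P1.
GRAPH = {"P1": ["P2"], "P2": ["P3", "P1"], "P3": []}
results = list(stream_reachable("P1", lambda pid: GRAPH.get(pid, [])))
print(results)
```

Because results are yielded as they arrive, a consumer can start work on the first interaction while later source calls are still pending. Unlike a full datalog evaluation, this sketch never emits the starting id as its own target, even when a cycle leads back to it.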

Example Translation

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
HSProteinInteractions(fromid, toid, source, verified), (taxonid=9606)

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
MMProteinInteractions(fromid, toid, source, verified), (taxonid=10090)

ProteinProteinInteractions(fromid, toid, taxonid, source, verified) :-
ProteinProteinInteractions(fromid, itoid, taxonid, source, verified),
ProteinProteinInteractions(itoid, toid, taxonid, source, verified)

Q(fromid, toid, taxonid, source, verified) :-
ProteinProteinInteractions(fromid, toid, taxonid, source, verified),
(fromid = !fromproteinid),
(taxonid = !taxonid)

Example Theseus Plan

Bio-informatics Domain Results

Experiments in Bio-informatics domain where we have 60 real

web services provided by NCI

We varied the number of domain relations in a query from 1 to 30 and

report composition time along with execution time

[Chart: Execution Time and Composition Time, in milliseconds (y-axis, 0 to 16000), versus # of Relations in Query (x-axis, 1 to 8).]

Tuple-level Filtering

Tuple-level filtering can improve the execution time of the

generated integration plan by up to 53.8%

Improvement due to Theseus

Theseus can improve the execution time of the generated web

service with complex plans by up to 33.6%

Discussion

Huge number of sources available

Need tools and systems that support the dynamic

integration of these sources

In this talk, I described techniques for:

Extracting data from unstructured and ungrammatical

sources

Discovering models of online sources required for

integration

Dynamic and efficient integration of web sources

Efficient execution of integration plans

Much work still left to be done…

More information…

http://www.isi.edu/~knoblock

Matthew Michelson and Craig A. Knoblock.

Semantic Annotation of Unstructured and Ungrammatical Text

In Proceedings of the 19th International Joint Conference on Artificial

Intelligence (IJCAI), Edinburgh, Scotland, 2005

Mark James Carman and Craig A. Knoblock.

Inducing source descriptions for automated web service composition,

In Proceedings of the AAAI 2005 Workshop on Exploring Planning and

Scheduling for Web Services, Grid, and Autonomic Computing, 2005.

Snehal Thakkar, Jose Luis Ambite, and Craig A. Knoblock.

Composing, optimizing, and executing plans for bioinformatics web

services,

VLDB Journal, Special Issue on Data Management, Analysis and Mining

for Life Sciences, 14(3):330--353, Sep 2005.