Chapter questions - Department of Computer Science and Electrical

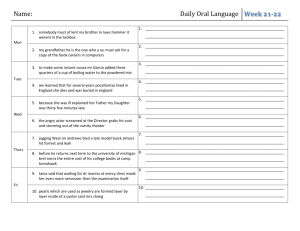

HONR 300/CMSC 491: Computation, Complexity, and Emergence Spring 2010 – Chapter Questions Last revised March 10, 2016 These questions are “food for thought” that you can use to guide your reading and understanding as you work your way through the book. They are not meant as a study guide or as required questions for the class journal assignments, but you should be able to answer (at least) these questions – and you may use them to structure your journal entries, especially if you’re having trouble deciding what to write about. In your journal entries, you should also feel free to suggest other reading questions that you think would have been useful – I will add them to next year’s question list! These are just the questions that I generated as I read through the textbook, to try to enhance your thinking and understanding (and my own). Not all of them have a single correct answer. For all I know, some of them don’t have answers at all! The point is not to know the right answer to every question. The point is to use the questions to help you think about the topics and readings in the class. You should also ask your own questions, and you should be willing to speculate on possible answers – or speculate on ways that you might be able to go about finding an answer, even if you’re not sure what the answer is. C1: Wed 1/26: First class – no reading! C2: Mon 1/31: Complexity game: Preface, Chapter 1 What should you do if you don’t understand an equation as you’re reading the book? What is reductionism? Why aren’t we all physicists? What are some examples of agents and their interactions at different levels of scale or abstraction? Why does Flake say, “[R]eductionism fails when we try to use it in a reverse direction” (p. 2)? Explain the concepts of self-similarity, parallelism, self-organization, iteration, recursion, and adaptation, and give examples of each. What is the difference between adaptation and evolution? 
C3: Wed 2/2: Number system basics: Chapter 2

Would Zeno’s paradox still seem paradoxical if Achilles were three times as fast as the tortoise, instead of twice as fast? The book analyzes this problem and shows that Achilles will catch up to the tortoise in exactly twice as long as it takes him to run the distance of the tortoise’s head start.

Figure 2.1 shows graphically that $\sum_{i=1}^{\infty} \frac{1}{2^i} = 1$. Can you create a similar graphical depiction for $\sum_{i=1}^{\infty} \frac{1}{3^i}$? What is the result of this summation? CMSC 491: You should be able to answer this type of summation in the general case, and give an inductive proof that your answer is correct.

Are the following sets finite, countably infinite, or uncountable?
(1) The integers between -∞ and +∞.
(2) The real numbers between 0.1 and 0.2.
(3) The atoms in the universe.
(4) The number of integers that have a finite number of digits.
(5) The number of strings (character sequences) that can be created using only the characters “a” and “b.”
(6) The number of prime numbers.
(7) The number of real numbers that are rational numbers.
(8) The number of real numbers that are not rational numbers.
(9) The number of different ways in which the atoms in the universe could be combined into different groups.

C4: Mon 2/7: Algorithms: Wikiversity articles (no reading journal)

C5: Wed 2/9: The Game of Life
Meet in CASTLE, 10-10:50

C6: Mon 2/14: Fractals: Chapter 5

(Note: CMSC 491 students should generally be able to derive precise mathematical answers to the questions in this chapter. HONR 300 students will find it useful to try to analyze these questions precisely as well, but on the midterm, will not be expected to provide mathematical answers to such questions.)

What is self-similarity? What would the result be if the Cantor set construction process were applied to the [0, 1] line segment by removing the middle half of each line segment at each iteration?
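One way to explore the “middle half” question is to carry out the construction exactly for a few iterations. The following is a minimal sketch, not something taken from the book: the function names and the `removed` parameter (the fraction cut from the middle of each segment) are assumptions made for illustration. It uses exact fractions so that the segment endpoints can be inspected without rounding.

```python
from fractions import Fraction

def cantor_intervals(iterations, removed=Fraction(1, 3)):
    """Return the closed intervals left after `iterations` rounds of
    removing the middle `removed` fraction of every segment of [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(iterations):
        next_intervals = []
        for lo, hi in intervals:
            # Each segment keeps two equal end pieces of this length.
            keep = (hi - lo) * (1 - removed) / 2
            next_intervals.append((lo, lo + keep))
            next_intervals.append((hi - keep, hi))
        intervals = next_intervals
    return intervals

def total_length(intervals):
    """Sum of the lengths of the remaining segments."""
    return sum(hi - lo for lo, hi in intervals)

# Classic middle-third construction versus the "middle half" variant.
thirds = cantor_intervals(3)
halves = cantor_intervals(3, removed=Fraction(1, 2))
print(len(thirds), total_length(thirds))
print(len(halves), total_length(halves))
```

Printing the endpoints for two or three iterations and writing them in base 3 (or base 4 for the “middle half” variant) is a reasonable starting point for the digit-pattern question about the Cantor set.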
The book shows that the Cantor set consists of exactly those points that can be written in ternary (base 3) notation without using any 1s. Can you come up with an analogous rule for the “middle half” Cantor set? Does it make intuitive sense to you that the Cantor set has width zero but still has an uncountably infinite number of points? (This is an opinion question, not a factual question!) Express the Koch curve’s length at iteration i as a mathematical summation. (Hmm, I wrote this, but don’t find it to have an interesting or satisfying answer…) What is the length of the “middle half” Koch curve at iteration i if we use a construction analogous to the “middle half” Cantor set? What would happen if we applied a “middle half” construction to try to generate a Peano curve? Can you think of other real-world examples where the “coastline measuring” phenomenon applies? (that is, in which measuring something precisely depends on the “yardstick” used) What is the fractional dimension of a “middle half” Cantor set? A “middle half” Koch curve? A “middle half” Peano curve? Think of some fractal-like objects that you may have seen in nature. (Ferns, mountains, coastlines…) What are the underlying processes that cause these fractal objects to be created? Are they similar to the mathematical constructions introduced in this chapter for the mathematical fractals, or are they quite different? C7: Wed 2/16: Fractals lab: Chapter 6 Meet in ECS 021/021A What intuition does Flake provide for why we see fractal curves in nature? What does “L” stand for in “L-system?” Why does one need to specify a depth value when applying an L-system like the one in Table 6.1? C8: Mon 2/21: Mandelbrot and Julia sets: Chapter 8 We skipped Chapter 3, so here is a very brief summary of RE and CO-RE sets: o Recursively enumerable (RE) sets are sets of numbers that can be computed (i.e., for which a program exists that will halt and output “yes” if it is given a member of the set as input). 
You can think of these sets as corresponding to partially computable functions.
o CO-RE sets, or “complement recursively enumerable” sets, are sets of the form “S’ = all numbers that are not in some RE set S” (so there is a CO-RE set S’ for each RE set S). You can think of these sets as a special kind of uncomputable function (i.e., one for which there exists a program that will halt and output “no” if it is given a number that is not in the set as input).
o Recursive sets are both RE and CO-RE: that is, they are fully computable, in the sense that a program exists that will always halt for any input, outputting “yes” if the input is a member of the set and “no” if the input is not a member of the set.
o Finally, the really tricky type of sets, which the book calls “algorithmically indescribable objects,” are all sets that are neither RE nor CO-RE. That is, there is no program that will consistently halt and recognize whether or not a number is a member of the set: for some inputs, any program that tries to recognize the input will simply never halt.

You may want to refresh your memory on imaginary numbers, but really all you need to know for this section is that a complex number c = a + bi can be represented by the coefficient pair (a, b).

Minor correction: In the algorithms for computing the Mandelbrot set (Table 8.1, p. 114) and Julia set (Table 8.2, p. 121), instead of “xt = xt + 1,” it should say “xt = xt-1 + 1.”

Referring to the function $x_t^2 + c$, Flake says (p. 114), “If $a^2 + b^2$ is greater than 4, then… the sequence will diverge.” Why is this the case?
o The Mandelbrot set is the set of all complex constants c such that the Mandelbrot iteration $x_{t+1} = x_t^2 + c$ does not diverge as t goes to infinity when $x_0 = 0$.
o A Julia set $J_c$ is defined for a given constant c, and (in its “filled” form) is the set of all starting points $x_0$ for which the same iteration does not diverge as t goes to infinity.
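The escape test quoted from Flake ($a^2 + b^2 > 4$) can be turned into a short membership check. The following is a minimal sketch rather than a transcription of Table 8.1 or 8.2: the function names and the iteration budget `max_iter` are assumptions, and it follows the usual convention that a set contains the values whose sequences do not diverge. A finite budget can only approximate “never diverges,” which is worth keeping in mind when asking whether a picture of the M-set really shows the M-set.

```python
def escape_time(c, x0=0j, max_iter=200):
    """Iterate x -> x*x + c from x0.

    Returns the first step t at which a^2 + b^2 > 4 for x_t = a + bi,
    or None if no escape is seen within max_iter steps.
    """
    x = x0
    for t in range(max_iter):
        if x.real * x.real + x.imag * x.imag > 4.0:
            return t
        x = x * x + c
    return None

def looks_like_mandelbrot_member(c, max_iter=200):
    """c is treated as a member if the sequence started at 0 never escapes."""
    return escape_time(c, 0j, max_iter) is None

def looks_like_filled_julia_member(x0, c, max_iter=200):
    """x0 is treated as a member of the filled Julia set for this c."""
    return escape_time(c, x0, max_iter) is None

print(looks_like_mandelbrot_member(-1 + 0j))   # sequence cycles 0, -1, 0, ...
print(looks_like_mandelbrot_member(1 + 0j))    # sequence 0, 1, 2, 5, ... escapes
```

Python’s built-in `complex` type stands in for the coefficient pair (a, b); an explicit pair of floats would work just as well.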
In Figure 8.2 (the first picture of the Mandelbrot set), what does the (x,y) location of each point correspond to? What is the description of the set of points contained within the black region? Is Figure 8.2 actually a picture of the Mandelbrot set? If not, why not? Do you have any intuition for why Jc for values of c in the Mandelbrot set is a connected set, but Jc for values of c outside the Mandelbrot set (i.e., values that lead to divergence when x0 = 0) is disconnected? (I have to admit that I don’t!) Do you have any intuition for why the number of iterations that it takes for the “neck of the M-set” to converge is an approximation to $\pi/\epsilon$? Me either! How can such a complex structure possibly result from such a trivial function?

C9: Wed 2/23: Complexity and simplicity: Chapter 9; Murray Gell-Mann, “Effective Complexity”

What does it mean for a number to be “random”? Information as complexity is a common theme in computer science – Chaitin’s notion of “information theory” as the number of bits that are needed to encode a string is at the center of much of AI and knowledge management. In information theory, randomness is “complex,” which seems counterintuitive. I thought the quote, “it may very well be that [fractals] offer the greatest amount of functionality for the amount of underlying complexity consumed” captured what I was thinking of when I asked this. Randomness is “complex” in the sense that it’s hard to precisely replicate – but it’s not very useful. Fractals are “complex” and also store a lot of potentially useful information. Hence the idea of effective complexity.
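The claim that randomness is “complex” in the bits-to-encode sense can be made concrete with an ordinary compressor. This is only a rough sketch under a stated assumption: the length of a zlib-compressed string is used as a crude stand-in for the number of bits needed to encode it, which is an upper bound at best and not the measure Chaitin defines. The variable names and the two sample strings are made up for illustration.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of data."""
    return len(zlib.compress(data, 9))

# Seeded pseudo-random bytes, so the experiment is repeatable.
rng = random.Random(0)
random_bytes = bytes(rng.getrandbits(8) for _ in range(1000))

# A highly regular string of the same length.
repetitive_bytes = b"ab" * 500

print("random:    ", compressed_size(random_bytes))
print("repetitive:", compressed_size(repetitive_bytes))
```

The regular string shrinks to a small fraction of its length, while the random-looking one barely compresses at all. Note the irony that the “random” bytes here come from a short deterministic program and a seed, so their true description is short even though the compressor cannot find it.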
C10: Mon 2/28: Fractals in nature: Articles and video posted on website Due: Fractals Exploration Returning to Flake, recall that he explains the appearance of fractal curves in nature as an optimization for “packing efficiency.” Identify some fractals that are seen in nature, and discuss the tradeoffs that might have led an optimization (evolutionary) process to generate those fractals. C11: Wed 3/2: Chaos/nonlinear dynamics: Chapter 10 Chaotic systems are a great example of the distinction we make in AI between “stochastic” or “nondeterministic” environments and “incomplete” environments. “Stochastic” means that there are (at least apparently) probabilistic effects of actions. “Incomplete” means that actions may have different outcomes depending on conditions of which we have insufficient knowledge. The interesting idea revealed by this chapter is that a sufficiently complex (but incomplete) deterministic system can appear stochastic – for example, when we roll a set of dice, is the outcome probabilistic or deterministic? Maybe if we could completely specify the conditions (starting location, angle and speed of the throw, surface properties of the dice and table, air pressure) with complete precision, down to the molecular level, we would be able to characterize the system as deterministic. Notice that modeling dynamical systems, as with the discussion of “effective complexity,” requires us to decide what properties of a dynamical system matter. For a chemical reaction, the chapter indicates that “the ratio of reactants to reagents” matters – but what if the distribution of these materials over the space in which the reaction occurs also matters? Four types of motion: fixed point (stable or convergent, unvarying behavior); limit cycle (periodic motion into which a system stabilizes), quasiperiodic motion (periodic motion within some “envelope”), chaotic (predictable but only if the starting point is known with infinite precision). 
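Some of the types of motion listed above can be seen by iterating a simple one-dimensional map. The sketch below uses the logistic map written as $x_{t+1} = 4 r x_t (1 - x_t)$; that parameterization, the function name, and the particular values of r are assumptions chosen for illustration. It discards a long transient and then prints a few successive values, so a fixed point shows up as one repeated number and a limit cycle as a short repeating pattern.

```python
def logistic_orbit(r, x0=0.2, transient=1000, keep=8):
    """Iterate x -> 4*r*x*(1 - x) and return `keep` values after a transient."""
    x = x0
    for _ in range(transient):
        x = 4.0 * r * x * (1.0 - x)
    tail = []
    for _ in range(keep):
        tail.append(x)
        x = 4.0 * r * x * (1.0 - x)
    return tail

for r in (0.5, 0.8, 1.0):
    print(r, [round(v, 4) for v in logistic_orbit(r)])
```

With r = 0.5 the values settle on a single number, with r = 0.8 they alternate between two numbers, and with r = 1.0 no short pattern appears. Changing `x0` by a tiny amount and rerunning the r = 1.0 case is a quick way to see sensitivity to initial conditions.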
Will a particular state in a chaotic system go in one direction or another? Is a particular point in the Mandelbrot set? These are somehow mathematically similar questions, in the sense that as you increase the precision of the representation of the state, your answer may flip, then flip again, then flip again. The logistic map equation looks kind of like the Mandelbrot equation, doesn’t it?

Make sure you understand the visualization of the state space in Figure 10.2(b) – this is just showing the logistic map function (parabola), which can be used to plot how a value at time t (on the x axis) projects to a value at time t+1 (on the y axis). You can see that the system converges to a fixed point where the parabola intersects the identity line – because that value will always map back to itself, and any nearby value will map to a closer value. This picture may remind you a bit of Newton’s method. It still seems surprising to me that shifting the parabola in these visualizations can so dramatically change the behavior (fixed point vs. k-point limit cycle vs. chaotic behavior).

Bifurcation = qualitative change in state space behavior that results in a doubling of the number of points in the limit cycle.

Chaos = “infinite-period limit cycle” – a regime in which so many bifurcations have occurred that…

Check out the self-similarity of the bifurcation diagram in Figure 10.7! The Feigenbaum analysis, which lets one predict when chaos will occur based on the first few bifurcations, is pretty cool…

In the middle of Section 10.4, Flake alludes to what we talked about in class: no matter how much precision you give to your digital computer, there are some functions that simply can’t be computed.

Key properties of chaos:
o Determinism: The system behavior is completely defined by the previous state.
o Sensitivity: The system is very sensitive to initial conditions, so any measurement error can cause arbitrarily large divergence over a period of time.
o Ergodicity: This just means that over time, all regions of the state space that are reachable will be revisited with regularity. This property lets us understand the likelihood that a region of the state space will be visited, even if we can’t say when that region will be visited.

C12: Mon 3/7: Producer-consumer dynamics: Chapter 12 (Lab)
Meet in ECS 021/021A
Hand out producer-consumer lab, “Chaos Exploration” assignment
Due: Participation Portfolio I

With respect to our discussion about whether it’s possible/reasonable/accurate to model real-world systems, it’s interesting that the equation-based and agent-based modeling approaches for predator-prey systems can lead to such similar global behaviors. Predator and prey interact with each other, leading to mutual recursion in the system dynamics. You should be sure to understand the Lotka-Volterra system dynamics model, and what the different parameters are intended to represent. The set of fixed-point behaviors in these systems is quite different from the logistic map, and depends critically on the initial sizes of the two populations. Yet there is a similarity in the shape of the limit cycle; it’s just “scaled” in the state space.

Thought question: In Figure 12.1, what do you think happens at the boundaries or near the “corners” of the state space (e.g., when the fish population is very close to 3.5 and the shark population is very close to 0)?

Some people have the ability to easily view a stereogram (side-by-side dual pictures) like the images shown in Figure 12.2. I can’t. Can you? If so, check out some of the stereograms you can find online: for example, http://www.magiceye.com/3dfun/stwkdisp.shtml .

If you don’t have the mathematical inclination, don’t worry too much about the matrix-based representation towards the end of 12.4 for capturing the system dynamics of multi-species populations.

In our lab, we’ll play around with a NetLogo agent-based predator-prey model (i.e., a cellular automaton model).
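To get a feel for what the Lotka-Volterra parameters do before the lab, it can help to step the equations by hand. The following is a minimal sketch that assumes the classic two-species form, dF/dt = F(a - bS) and dS/dt = S(-c + dF), which may differ in detail from the version in Chapter 12. The parameter names, the default values, and the simple Euler step are all assumptions for illustration; Euler steps drift slowly, so this is not a careful numerical integration.

```python
def lotka_volterra_step(fish, sharks, a, b, c, d, dt):
    """Advance the two populations by one Euler step of size dt."""
    d_fish = fish * (a - b * sharks)        # prey grow, and are eaten by sharks
    d_sharks = sharks * (-c + d * fish)     # predators starve, and grow by eating
    return fish + dt * d_fish, sharks + dt * d_sharks

def simulate(fish, sharks, a=1.0, b=0.5, c=1.0, d=0.5, dt=0.001, steps=20000):
    """Return the list of (fish, sharks) states visited, including the start."""
    history = [(fish, sharks)]
    for _ in range(steps):
        fish, sharks = lotka_volterra_step(fish, sharks, a, b, c, d, dt)
        history.append((fish, sharks))
    return history

# Two different starting populations trace out differently sized loops.
for start in ((3.0, 1.0), (2.5, 1.5)):
    run = simulate(*start)
    fish_values = [f for f, _ in run]
    print(start, round(min(fish_values), 3), round(max(fish_values), 3))
```

With these assumed defaults, starting exactly at fish = c/d and sharks = a/b leaves both populations unchanged, which is the fixed point of this form of the model. Starting anywhere else produces a loop around that point whose size depends on the initial populations.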
C13: Wed 3/9: Chaos: strange attractors: Chapter 11, 14 Kathleen Hoffman – guest lecturer! Chapter 11: o “If you are less inclined to dive into the mathematics, feel free to skip the details” (Flake p. 159). What he said. o The chaotic systems in this chapter, like all chaotic systems, are represented by nonlinear iterated functional systems (IFSs). o Note that because yt+1 is always set to xt, the Henon map can also be seen as a single time series in which each value is dependent on the previous two values. For those of you who have seen Markov chains before, this is just a history-two Markov chain. o The idea of an “unstable fixed point” that was mentioned in Chapter 10 becomes clearer in Figure 11.1, where we see that the chaotic series passes close to an unstable fixed point. Obviously it doesn’t ever exactly reach that value (if it did, it would stay there forever, since that is the definition of a fixed point). o Now we start to see what the idea of “embeddedness” refers to: Every chaotic system has an infinite number of embedded unstable periodic orbits. When we are in the chaotic region, the system will approach but never exactly enter those periodic orbits. They are embedded because they occur within the region of the strange attractor. They are unstable because there is no “basin of attraction” that causes nearby values to fall into the periodic orbit, so the system can get arbitrarily close to them but never stabilize. o A strange attractor is just a region of the state space that the chaotic system “circles” around without ever actually reaching. Interestingly, strange attractors actually have a fractal dimension! This fractal behavior can be seen in Figure 11.2, which shows that the state space behavior of the system never converges but also does not entirely fill the space or “envelope” of the orbit cycle. 
(It can’t entirely fill that space, because of the infinite number of embedded unstable periodic orbits within the strange attractor region – the existence of those embedded orbits tells us that there is an infinite number of points in the state space that are not visited in the chaotic regime.)
o Notice in Figure 11.3 that we see exactly the same kind of bifurcation behavior in the Henon map as we saw in the logistic map. The localized regions of non-chaotic behavior (the white “gaps” between the black regions of chaos) are even more obvious. These are ranges of a for which (when b=0.3) the system has a periodic limit cycle, although these limit-cycle ranges for a are “bounded” by chaos on either side. Interestingly, you can clearly see a period-7 limit cycle around a=1.3, so that answers our question about limit cycles: they don’t have to be powers of two. There are still a lot of things I don’t know:
Does the Henon map, after the period-7 limit cycle, descend into chaos through a series of bifurcations (period-14, period-28, etc.)?
If it does bifurcate after this “embedded limit cycle,” does the Feigenbaum constant apply to the bifurcations that follow these regions?
At the left-hand side of the period-7 region, does the system also “emerge” from chaos through a rapid series of Feigenbaum-consistent “unbifurcations” (pairwise mergings seen left-to-right)?
o It’s really cool that Flake mentions this unproven conjecture about the Lorenz attractor: “for any finite sequence of integers that are not too large, say 7, 3, 12, 4… there exists a trajectory on the Lorenz attractor that loops around one half of the attractor for the first number of times, switches to the other half for the second number of loops, and so on.” Most of us have this idea about mathematics that it is fixed and immutable, and things are either known or not known.
But really, mathematics is sort of fractal: There are infinitely many more things that are true and provable but not yet proven, than there are that are proven. Similarly, there are infinitely many more things that are true but not provable than are true and provable. This view of mathematics is related to the idea of “algorithmically indescribable objects” that we read about earlier in the book. Someday, this conjecture about the Lorenz attractor may be proven. Interestingly, it may be digital computing that permits us to prove the conjecture. The Four-Color Map Theorem [1] was proven in 1976 with the help of extensive computer calculation. This proof was accomplished by exhaustively enumerating all possible “equivalence classes” of map configurations, and proving that each of these classes is solvable.

[1] The Four-Color Map Theorem says that any planar (two-dimensional) map can be colored with four colors, such that no two neighboring regions have the same color. (Regions that touch only at a single corner are not considered to be neighboring.) Try it yourself – some maps can be colored with only three colors; can you come up with any useful ways to characterize the class of three-colorable maps? Can you come up with a non-planar counterexample to prove that non-planar maps can’t always be colored with four colors?

Chapter 14:
o The one thought that struck me again as I read this postscript was the comment by Tom T. in the last discussion that he “didn’t believe in chaos.” In fact, the chaotic behavior of the tent map emerges only for non-rational numbers. So there is a philosophical question: Can this kind of chaotic behavior actually be induced in reality? It seems that it can do so only if reality has infinite precision – for example, if a particle can be located at any possible distance (including an irrational distance) from another particle.
Maybe there is some aspect of our universe (some kind of quantum behavior) that means that the universe is, in fact, a discrete world – but with extremely high resolution. That could mean that chaos can’t really exist after all, because chaos depends on infinite precision. So maybe chaos is really just a mathematical abstraction in the end. (Then again, chaos may in fact be a useful mathematical abstraction – it could be “true” for all intents and purposes: given the life span of the universe, perhaps the limit cycles of real phenomena are so very long that we can’t reach the end of them before the universe ends.)
o I love the mathematically oxymoronic sentence, “the smallest number that cannot be expressed in fewer than thirteen words.” Wow.

C14: MIDTERM

C15: Wed 3/16: Cellular automata: Chapter 15, Kurzweil

Chapter 15:
o Most of the cellular automata that we see here are binary (two possible states per cell), but notice the k parameter that defines the number of states and the r parameter that defines the number of neighbors that affect each cell. For a one-dimensional CA with neighborhood radius r, the rule table has $k^{2r+1}$ entries, so its size grows as a power of k, with r determining the exponent of this growth.
o The four classes of cellular automata should seem reminiscent of ideas we’ve seen before: Class I cellular automata reach a fixed point; Class II CAs enter a limit cycle; Class III CAs are effectively random (one might think of them as having low “effective complexity”); and Class IV CAs seem like hybrid chaotic systems – unpredictable, but with patterns and attractors.
o The idea that CAs can be (somewhat) characterized by the simple parameter λ (which represents the fraction of rules or table entries that map to a state other than “state zero,” the quiescent or “off” state) is a bit surprising. Of course, it’s never that simple…
o You will probably want to just skim most of section 15.4, about how the Game of Life can act as a universal computer.
The link I’ve provided for the Monday 3/28 reading assignment gives a slightly different spin on this topic. It’s interesting but more like a mind game than something that seems useful in practice… in a way, it’s like the idea of NP-completeness – we have this class of systems (“complex systems”) that are in some sense equivalent to each other, because they can all be used to model “universal computation.”
o Hopefully you can see how useful CAs are for modeling real-world phenomena: Flake gives a number of examples in section 15.5, and in a way, the whole rest of the class can be seen as different types of cellular automata.

Kurzweil, “Reflections on Stephen Wolfram’s ‘A New Kind of Science’”:
o Flake mentions Wolfram’s extensive research on cellular automata; after Flake’s book was published, Wolfram wrote the book, A New Kind of Science, which as you can see from Kurzweil’s review is a sort of polemic that extols cellular automata as a universal explanation of complexity.
o If you’ve ever read anything by Ray Kurzweil (who believes that the Singularity is coming and that he is its prophet), you will undoubtedly find it as amusing as I do that he accuses Wolfram of “hubris.”
o I do have to go along with Kurzweil in his “hubris” interpretation when I read the quote by Wolfram that refers to his “discovery that simple programs can produce great complexity.” As Kurzweil points out, Rule 110 is yet another instance of a phenomenon we’ve seen repeatedly in this class, where a very simple, deterministic rule leads to unexpected and unpredictable complexity.
o I haven’t read Wolfram’s book (I believe it’s around 1000 pages long), but at least as Kurzweil summarizes his argument (and as I have seen other reviewers summarize it), Wolfram seems to be claiming that “Cellular automata can produce complexity; the world is complex; therefore, the world is a cellular automaton.” This is definitely not a supportable logical argument!
o In particular, I think that Kurzweil’s point that systems in the world are adaptive is at the heart of what makes cellular automata inadequate to capture many phenomena in the real world. At a minimum, modeling the world requires some storage devices. I particularly resonated with the quote, “It is the complexity of the software that runs on a universal computer that is precisely the issue.” o Shortly after that, Kurzweil shares his own hubris and shortsightedness (in my opinion): “To build strong AI, we will short circuit this process, however, by reverse engineering the human brain, a project well under way,…” o We talked in class about the question that Wolfram, Kurzweil, and others have all raised: “whether the ultimate nature of reality is analog or digital.” Maybe we will never be able to answer this question. Still, it does seem to me that while it may be philosophically interesting to speculate on this question, it is perhaps not very useful to imagine the world as being discrete – because for all practical purposes (given our own computational limitations), it seems to behave like a continuous system. C16: Mon 3/28: Finite state automata: Turing Machine article, Game of Life TM Due: Chaos Exploration Turing Machine article (Stanford Encyclopedia of Philosophy): o A Turing Machine is just a slightly more general kind of finite state machine (aka finite state automaton) – it’s a FSA plus memory (i.e., it can write stuff down to remember it for later). 
o A TM consists of a finite set of possible “states” (think of the “state” as a single memory location that the TM can write a single symbol into); an infinite one-dimensional tape with discrete locations or “cells” that can be written with 1s and 0s; a read-write head that can be positioned at any location at the tape, and can write a 0, write a 1, move one step left, or move one step right; and a set of rules (transition table) that tells the machine what to do, given its current state (symbol in the “state” memory location) and the contents of the current cell (where the read-write head is positioned). o The Church-Turing Thesis states (rather informally) that for any intuitively computable task, a Turing Machine can compute the solution. It’s not exactly provable, but nobody has ever disproved it (by describing a computable task that can’t be computed by a TM). o A Universal Turing Machine (UTM) is a state table that can read a Turing Machine specification written on its tape and “execute” that Turing Machine. Basically, it’s a programmable Turing Machine that can do anything that any Turing Machine can do. Wow. o Because this very simple TM can emulate a much more complex Turing machine (more memory, more symbols, more tapes, even a “nondeterministic” machine that can explore multiple transitions simultaneously), every digital computer (even a 1,024-cell supercomputer) can be shown to be Turing-equivalent. Also wow. o Will a particular TM halt? Who knows – that’s the Halting Problem! (Approximation of the proof that we can’t, in general, know: If we run this machine on itself, it won’t halt.) Game of Life TM article: o I have to confess that I didn’t completely understand the details of the Game of Life Turing Machine, but it’s interesting to think about the idea that you can “design” the complexity of a Game of Life simulation to carry out a specific computation. 
o The Universal Turing Machine on this website reminds me of the RNA transcription process, which is basically a little biological “machine” that walks along a genome, turning the DNA encoding into “implemented” proteins. C17: Wed 3/30: NetLogo Lab (no reading) Meet in ECS 021/021A C18: Mon 4/4: Self-organization: Chapter 16; Strogatz article Chapter 16: o Self-organization is just another name for “parallelism that leads to interesting group behaviors.” o Before I started studying self-organization and multi-agent systems, I hadn’t seen the termite model. It still surprises me that these simple rules lead to such interesting patterns. It does make some sense – termites pick up wood chips anywhere, but only drop wood chips near other wood chips, so the wood chips are going to tend to gather. o The ant simulation in Figure 16.3 has the feeling of the Game of Life, with a “trajectory” of the simulation that extends itself spatially. In fact, the ant rules are a bit like Game of Life rules, except that the ant can only be in one location at a time (so only one location at a time can change). o Figure 16.5 just blows my mind – the patterns are so rich yet so unpredictable. o This chapter doesn’t talk about real ants very much, but I know that there are computational biologists who are developing agent-based computational models of ants, to try to simulate their colony behaviors in silico. o Changing the relative weights of the different rules (avoidance, copying, centering, clearing view, and momentum) in the “boid” flocking model gives remarkably different sorts of behaviors. I had a Ph.D. student (Don Miner) who completed a whole Ph.D. dissertation studying how to predict the emergent behavior of boids (and other agent-based models) from the values of the low-level parameters (i.e., weights on the different rules). Strogatz article: o Terminology: graphs (or networks) consist of nodes or vertices, connected by edges. 
The degree of a node is the number of edges that it has. The density of a graph is the average degree. The transitivity is the probability that two neighbors of a randomly selected node will also be connected to each other. (This is closely related to the number of triangles in the graph.)
o Mathematicians have their own version of “Six Degrees of Kevin Bacon”: the “Erdős Number.” Paul Erdős was an incredibly prolific mathematician who published with a huge number of co-authors. His work, combined with recent research on social networks, has led to the exploration of “co-authorship chains” that lead to Erdős. My own Erdős number is 4: I have co-authored with a former student (Matt Gaston) who has a paper co-authored with Miro Kraetzl, who was a co-author with Erdős.
o For a long time, people primarily studied regular networks (lattices or fully connected graphs) or random networks (where any given edge has an equal probability of being in the graph). These networks aren’t reflective of most real-world network topologies, so recently there has been a lot of interest in other types of network structures, like small-world graphs and scale-free graphs.
o Small-world graphs are “almost-lattices” where there are some “shortcut” links. These graphs have the interesting property that the diameter of the graph (the longest of the shortest paths between any pair of nodes) becomes very small (compared to a full lattice).
o Scale-free networks are modeled by a “growth pattern” where new nodes are more likely to be connected to highly connected nodes in the graph. This makes a lot of sense as a growth model of many real-world networks: when you move to a new town, you’re more likely to meet people with a lot of friends; when a new node is added to the Internet, it’s more likely to be connected to a hub node. Scale-free networks have a power-law degree distribution (in contrast to random graphs, which have a Poisson (bell-curve-like) degree distribution).
That is, there are a few very highly connected nodes (orders of magnitude higher than average). This pattern is sometimes referred to as “heavy tailed” (i.e., with individuals that are much further out on the “tail” of the degree distribution than one would expect).

Color Trends / Pantone Articles
o I included these articles because I had read about color trends in the newspaper and thought it was an interesting example of a complex system in which the agents are consciously trying to control the system’s trajectory.
o “You might think that this has elements of a self-fulfilling prophecy….” – that’s exactly what makes the color “market” different from, say, the stock market. (Though of course in the stock market, the agents also have a conscious desire to control the system’s trajectory, so maybe they are more similar than not?)
o The Pantone “color of the year” just cracks me up (not being a fashionista) – the idea that there’s this company that just decides what the hot color will be in two years makes the whole fashion thing seem, well, a bit silly to me. But perhaps not quite as silly as the quote from the executive director of Pantone in the NYT article: “Blue Iris brings together the dependable aspects of blue, underscored by a strong, soul-searching purple cast. Emotionally, it is anchoring and meditative with a touch of magic.” Wow. And here I thought it was blue.
o Also amusing (to me) is the director of a “branding and design” firm who also thinks that Pantone’s prediction is a bit silly – but would take it entirely seriously if it had come from a designer instead. Also adorable: “forecasts are for the mass market” – whereas, obviously, high fashion is much more dynamic and unpredictable.

C19: Wed 4/6: Multi-Agent Game Day (no reading)
Due: Participation Portfolio II

C20: Mon 4/11: Competition and cooperation: Chapter 17
I didn’t know that slime mold cells are usually independent but sometimes self-organize into a single organism – so weird!
Game theory is the “economics of games” – i.e., the study of what a “rational” player should do in the context of an interaction with other players, where each player’s payoff depends on what the other players do.
The policy for taking actions in this setting is called a “strategy.” In most games, the strategy is just a single action. In some games, a player can have a “mixed strategy” (where they pick different actions with some probability). In general, the player has to pick their action without knowing what the other player will choose (though they may have some information about the other player’s previous actions).
The payoff matrix defines what the reward for each player will be, given that player’s actions and the actions of the other player(s). (Most games studied in game theory are two-player games, but in principle, there can be any number of players.)
Social welfare is said to be maximized when the sum of the payoffs of all of the players is maximized.
A Nash equilibrium is a set of player strategies where no player can improve their own payoff by changing their strategy alone, given the other players’ strategies.
The reason that life is difficult is that the set of strategies that maximizes social welfare is quite often not the Nash equilibrium. In fact, the Nash equilibrium can be the minimum social welfare strategy set! This situation can lead to a “race to the bottom” with self-interested agents. The “tragedy of the commons” is a classic example of this scenario.
In the iterated Prisoner’s Dilemma, Tit-for-Tat does well against almost all comers. As Flake points out, though, there is no such thing as a “best strategy.” (Things do get more complicated when there is “noise” – i.e., when players sometimes try to cooperate but unintentionally defect, or vice versa.) The IPD is an interesting model for the evolution of cooperation.
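The definitions above (payoff matrix, social welfare, Nash equilibrium, and the iterated Prisoner’s Dilemma) can be made concrete with a small Python sketch. This is only an illustration: the payoff values (3, 0, 5, 1) are assumed here and are not taken from Flake’s text, and all of the function names are hypothetical helpers written for this example.

```python
from itertools import product

# Actions: "C" = cooperate, "D" = defect.
ACTIONS = ("C", "D")

# Assumed, illustrative Prisoner's Dilemma payoffs (higher is better).
# PAYOFFS[(row_action, col_action)] = (row_payoff, col_payoff)
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def social_welfare(profile):
    """Sum of both players' payoffs for one pair of actions."""
    return sum(PAYOFFS[profile])


def is_nash(profile):
    """True if neither player can gain by switching actions on their own."""
    a, b = profile
    pay_a, pay_b = PAYOFFS[profile]
    row_ok = all(PAYOFFS[(alt, b)][0] <= pay_a for alt in ACTIONS)
    col_ok = all(PAYOFFS[(a, alt)][1] <= pay_b for alt in ACTIONS)
    return row_ok and col_ok


def pure_nash_equilibria():
    """All pure-strategy (single-action) Nash equilibria of the game."""
    return [p for p in product(ACTIONS, repeat=2) if is_nash(p)]


def best_welfare_profile():
    """The pair of actions that maximizes social welfare."""
    return max(product(ACTIONS, repeat=2), key=social_welfare)


# Iterated game: a strategy maps the opponent's past moves to an action.
def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    return "D"


def play_iterated(strategy_a, strategy_b, rounds=10):
    """Play a noise-free iterated game and return both total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(hist_b)
        move_b = strategy_b(hist_a)
        pay_a, pay_b = PAYOFFS[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print("Nash equilibria:", pure_nash_equilibria())
    print("Best social welfare:", best_welfare_profile())
    print("TFT vs TFT:", play_iterated(tit_for_tat, tit_for_tat))
    print("TFT vs AllD:", play_iterated(tit_for_tat, always_defect))
```

With these assumed payoffs, the only pure Nash equilibrium is mutual defection, which is also the lowest-welfare outcome, while mutual cooperation gives the highest welfare. In the iterated sketch, Tit-for-Tat never outscores its opponent within a single match; two Tit-for-Tat players simply accumulate a high total together, which is one way to see why it can do well across many opponents.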

C21: Wed 4/13: Phase transitions: Chapter 18 / Tipping Point / Egypt / Tulips
DUE: Outline and model design

Chapter 18:
o The tendency of systems to “optimize” themselves by reaching a minimum-energy state is the inspiration for many computational optimization mechanisms. Graph layout, for example, is most commonly performed using a “spring-embedding” algorithm that searches for a minimum-energy state by optimizing the lengths of the edges in the graph.
o “Optimization” can be thought of as a process of finding a state, or set of parameter assignments, that minimizes (or sometimes maximizes) the value of an “objective function” (i.e., some function of the state or parameter assignments).
o “Combinatorial optimization” just means optimization of an objective function that is defined on a set of assignments or choices, where the assignments may have various constraints between them.
o HONR students will probably want to skip most of Sections 18.1-18.4. Don’t worry about the math. The main ideas are:
   Section 18.1 – Computational models of neurons can be “trained” to learn (optimize) various predictive functions.
   Section 18.2, 18.3 – Neural models can be trained to “remember” various sets of patterns, using mathematical feedback rules.
   Section 18.4 – Hopfield networks are a more sophisticated model for neural nets that can learn complex functions and solve difficult constrained optimization problems.

Tipping Point:
o It’s fascinating how Gladwell connects such disparate phenomena as the Hush Puppies trend, the drop in crime in Brooklyn, and the spread of disease using the “tipping point” idea.
o Can you think of other “tipping points” and how they might have been generated, using the three characteristics Gladwell identifies – contagiousness, small causes leading to large effects, and change happening suddenly? Can you identify any Mavens, Connectors, or Salespeople in these tipping point phenomena?
Is there a Law of the Few, a Stickiness Factor, or a Powerful Context that applies?
o If you don’t smoke but know people who do, or don’t do drugs but know people who do, or don’t engage in other risky behaviors but know people who do, it’s easy from the outside to just dismiss these as individual choices. But understanding why people engage in these behaviors in the context of the society in which they live is the key to developing interventions that might halt or slow these “epidemics.”

Egypt article:
o As I’ve mentioned in class, the popular uprising in Egypt strikes me as a fascinating example of a social “tipping point.” What was the trigger that caused the populace to shift from unexpressed frustration with the system to vocal frustration with the system? Can you connect the “tipping point” concepts of contagion, small causes, and sudden change to this social movement?
o This article doesn’t really address the sources of the tipping point, but it does hint at an intriguing thought – on the other side of any tipping point, we enter into a new regime that we don’t yet understand and can’t predict based on pre-tipping point system behavior.

Tulips article:
o “Tulip mania” is a great example of groupthink leading to an unsustainable positive feedback loop.
o “At the peak of tulip mania, in February 1637, some single tulip bulbs sold for more than 10 times the annual income of a skilled craftsman.” – This sentence reminds me a bit of our discussions about Gucci bags and Jimmy Choo sandals, though it’s a different phenomenon, really – Gucci and Jimmy Choo are seen as having “value” of some sort, whereas the tulip prices during tulip mania were based on their perceived value as an investment.
Luxury pricing seems to be more sustainable than speculation – they are both equally “false” in that neither of them is based on intrinsic reality-based value, but the latter relies on having a market in which to resell the products – and when that market collapses, all of the value dissipates.
o Housing bubbles, subprime mortgage repackaging – we have seen many speculative bubbles recently, so it’s not as though “we” collectively have learned much from tulip mania…

C22: Mon 4/18: Optimization and search: Reading TBA
C23: Wed 4/20: Genetics and evolution: Chapter 20 / Dawkins article
C24/25: Mon 4/25 / Wed 4/27: NetLogo project presentations
DUE: NetLogo project
C26: Mon 5/2: Classifier systems: Chapter 21
C27: Wed 5/4: Additional topics TBA
C28: Mon 5/9: Additional topics TBA
C29: Wed 5/11: Student presentations