SPDG Year 2 Grant Performance Report

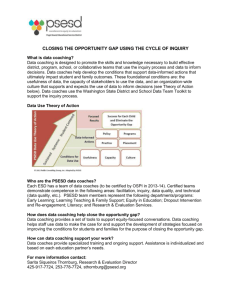
