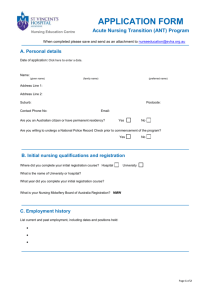

qnr
