Project book

The Technion Electrical Engineering Software Lab

Design Document for Project

Content-Based Spam Web Pages Detector

Last Revision Date: 10.1.2006

Team members:

Avishay Livne avishay.livne@gmail.com 066494576

Itzik Ben Basat sizik1@t2.technion.ac.il 033950734

Instructor Name:

Maxim Gurevich

Index

0 Abstract
1 Introduction
2 Design Goals and Requirements
3 Design Decisions, Principles and Considerations
4 System Architecture
5 Component Design
6 Data Design
7 Results
8 Summary
9 Future Work
10 Definitions, Acronyms, and Abbreviations
11 User Interface
12 Reference Material

Abstract

This is the design document for the Content-Based Spam Web Pages Detector project, carried out by Avishay Livne and Itzik Ben Basat in the software lab. It is an educational project with no commercial intentions.

The project implements an application that classifies HTML web pages as spam or legitimate pages. Classification is based purely on the content of each page's HTML source; the classifier needs no external data, such as links between pages.

Briefly, the classification process consists of the following steps:

1. Parsing the pages into a format the application can handle and evaluating each page's attributes.
2. Constructing a decision tree from a manually tagged data set of pages, in which each page carries a tag marking it as spam or legitimate.
3. Running each page to be classified through the decision tree and deciding whether it is spam.

The theory behind this classification method is described in the paper "Detecting Spam Web Pages through Content Analysis" by Ntoulas et al., which served as the theoretical background for the project.

After implementing the classifier we tested its performance on different data sets and compared the results with those in the paper. To investigate the differences, we produced histograms that describe the distribution of each attribute.

1 Introduction

Recently, spam has invaded the Web as it earlier invaded e-mail. A web spammer's goal is to artificially improve the ranking of a specific web page within a search engine. Numerous bogus web sites are created and clog the Web as e-mail spam clogs inboxes. Search engines direct more and more resources to fighting spam, and many struggle to provide high-quality search results in its presence.

A recent study by Ntoulas et al. proposes an efficient technique for detecting spam web pages. It analyzes certain features of a web page, such as the fraction of visible content and compressibility, and then employs machine learning to build a page classifier based on these features.

The rest of the document describes the goals of the project, the requirements for the application, the major design decisions, the system architecture, the system's components in more detail, and some of the data structures we used.

2 Design Goals and Requirements

This part describes the goals of the project and gives a detailed list of the application's requirements.

The purpose of the project is to implement a web spam classifier which, given a web page, analyzes its features and tries to determine whether the page is spam. The performance of the classifier will be compared to the results of Ntoulas et al.

The main purposes of the project are:

1. Learning algorithms and methods that classify web pages as spam by analyzing only the pages themselves.
2. Implementing these algorithms and methods to create a classifier that detects spam web pages.
3. Avoiding methods that track links and construct graphs that model the web; this project does not use them.
4. Testing the classifier's performance on data sets of web pages once it is implemented. The pages are tagged manually, so we can get a good measure of the quality of our classifier.

Other goals are:

1. Getting familiar with a hot topic in the field of search engines.
2. Getting familiar with HTML parsing.
3. Getting familiar with machine learning and decision trees.
4. Having fun.

3 Design Decisions, Principles and Considerations

The classification of a web page will be done according to the following

attributes:

1. Domain name (if the URL is given)

2. Number of words in page

3. Number of words in the page’s title

4. Average length of words

5. Amount of anchor text

6. Fraction of visible content

7. Compressibility

8. Fraction of page drawn from globally popular words

9. Fraction of globally popular words

To train our classifier we shall use free data sets of manually tagged HTML pages that we found on the internet.

We shall implement our parser using HTMLParser, a popular HTML parsing library (more information can be found at http://htmlparser.sourceforge.net/).

The decision-making process will follow the C4.5 algorithm. We shall use jaDTi, an open-source decision tree library implemented in Java, to build our decision tree.
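As a rough illustration of the split criterion behind C4.5, the sketch below computes the entropy of a node and the gain ratio of a binary split for a two-class (spam/normal) problem. The class and method names are hypothetical, the third tag value (undecided) is ignored for brevity, and jaDTi's own scoring may differ from this textbook form.

```java
/** Textbook entropy and gain-ratio helpers; hypothetical names, not jaDTi's API. */
class SplitScoreSketch {

    static double log2(double x) {
        return Math.log(x) / Math.log(2.0);
    }

    /** Shannon entropy, in bits, of a group holding the two given counts. */
    static double entropy(long first, long second) {
        long total = first + second;
        if (total == 0) {
            return 0.0;
        }
        double h = 0.0;
        for (long count : new long[] {first, second}) {
            if (count > 0) {
                double p = (double) count / total;
                h -= p * log2(p);
            }
        }
        return h;
    }

    /** Gain ratio of a binary split: information gain divided by split information. */
    static double gainRatio(long leftSpam, long leftNormal, long rightSpam, long rightNormal) {
        long left = leftSpam + leftNormal;
        long right = rightSpam + rightNormal;
        long total = left + right;
        if (left == 0 || right == 0) {
            return 0.0; // a split that leaves one side empty carries no information
        }
        double parent = entropy(leftSpam + rightSpam, leftNormal + rightNormal);
        double children = ((double) left / total) * entropy(leftSpam, leftNormal)
                + ((double) right / total) * entropy(rightSpam, rightNormal);
        double gain = parent - children;
        double splitInfo = entropy(left, right); // entropy of the two partition sizes
        return gain / splitInfo;
    }

    public static void main(String[] args) {
        // A threshold that separates 5 spam pages from 5 normal pages perfectly.
        System.out.println(gainRatio(5, 0, 0, 5));
    }
}
```

A split that separates the classes well scores close to 1, while a split that leaves both sides as mixed as the parent scores close to 0.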

4 System Architecture

The architecture we designed is composed of two major components, each of which uses two major tools.

The major components are the Trainer and the Classifier. Both use the Parser and the DecisionTree.

The Trainer's input is a set of HTML pages manually tagged as spam or not-spam. It creates a new DecisionTree and trains it on this data set. Once training is complete, the Trainer saves the DecisionTree to a file for later use by the Classifier.

The Classifier's input is a set of HTML pages. Its goal is to correctly tag each page as spam or not-spam. To decide whether a page is spam, the Classifier uses the DecisionTree that was built by the Trainer.

Both the Trainer and the Classifier use the Parser to gather the required statistics for every HTML page. The Parser's input is an HTML page and its output is a list of attributes and their values.

We considered combining the Trainer and the Classifier into one component, but decided to split them for clearer encapsulation and a better-organized division of responsibilities.

The class diagram of the project:

5 Component Design

5.1 The Parser:

The Parser component is responsible for extracting the required statistics from each HTML page. To implement the Parser we use an external library, HTMLParser.

The Parser iterates a few times over the HTML source of the page and calculates the following statistics for each page: number of words in the page, number of words in the title, average word length, amount of anchor text, fraction of visible content, fraction of the page drawn from globally popular words, and fraction of globally popular words. In addition, the Parser compresses the page in order to calculate its compressibility.
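A minimal sketch of two of these statistics, assuming the page text has already been extracted from the HTML (the HTMLParser calls are left out). The class and method names are hypothetical, words are taken to be whitespace-separated tokens, and compressibility follows the definition given in section 6.2 (gzip-compressed size divided by original size); the values above 1 in the sample data file suggest the stored value may be the inverse ratio.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

/** Hypothetical helpers for word statistics and compressibility. */
class PageStatsSketch {

    /** Splits text on runs of whitespace; the real tokenizer may differ. */
    static String[] words(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? new String[0] : trimmed.split("\\s+");
    }

    /** Average number of characters per word, or 0 for empty text. */
    static double averageWordLength(String text) {
        String[] tokens = words(text);
        if (tokens.length == 0) {
            return 0.0;
        }
        long total = 0;
        for (String token : tokens) {
            total += token.length();
        }
        return (double) total / tokens.length;
    }

    /** gzip-compressed size divided by original size, or 0 for empty input. */
    static double compressibility(byte[] raw) {
        if (raw.length == 0) {
            return 0.0;
        }
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // The stream is closed at this point, so the buffer holds the full output.
        return (double) buffer.size() / raw.length;
    }

    public static void main(String[] args) {
        String text = "cheap tickets cheap tickets cheap tickets cheap tickets";
        System.out.println(words(text).length);
        System.out.println(averageWordLength(text));
        System.out.println(compressibility(text.getBytes(StandardCharsets.UTF_8)));
    }
}
```

Pages that repeat the same keywords many times compress unusually well, which is why this ratio is useful as a spam signal.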

5.2 The DecisionTree:

The DecisionTree component is responsible for deciding whether a page is web spam or a legitimate web page. To build a new DecisionTree we supply it with a set of manually tagged pages. Each page in the data set was tagged by a person as "spam", "normal" or "undecided". To the DecisionTree, each page is represented as a map of attributes and values, according to which it decides whether the page is spam.

Once a DecisionTree is instantiated and built, it can be used for classifying untagged webpages.

The DecisionTree's output is the result of the classification algorithm, that is, the value that represents how "spammy" the page is.

5.3 The Trainer:

The Trainer component is responsible for training the decision tree. It uses the Parser to read a set of manually tagged HTML pages, instantiates a decision tree, and trains it on this data set to create a well-trained decision tree.

5.4 The Classifier:

The Classifier component is responsible for classifying untagged HTML webpages. The final goal of this project is to use this component, together with the other components, to investigate its performance (mainly the rate of successful classification) and, hopefully, to improve the experience of finding information on the internet and the quality of search engines' results.

6 Data Design

This section describes the formats of the files used in the project, and a few data

structures that were used in the project.

6.1 File Formats:

The system uses the following files: parsed data-set file, decision tree file, and

HTML webpages.

The parsed data-set file contains a list of the pages and their attributes. This file is produced by the Parser and is used by the DecisionTree.

Below is the format of this file:

<Data set name>

<Attributes' names and types>

<Page's address and its attributes' values>

Example of a parsed data-set file (the "line#:" marks don't appear in the real file and are only for clarity):

line1: Dataset1
line2: object name wordsInPage numerical wordsInTitle numerical averageWordLength numerical anchorText numerical fractionOfVisibleContent numerical compressibility numerical fractionDrownFromPopularKeywords numerical fractionOfPopularKeywords numerical spam symbolic
line3: http://2bmail.co.uk 371 5 4.0 0.0 0.0 2.0 0.0 0.0 undecided
line4: http://4road.co.uk 278 18 5.0 0.0 0.0 7.0 0.0 0.0 spam
line5: http://amazon.co.uk 780 11 4.0 0.0 0.0 5.0 0.0 0.0 normal
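To make the layout of the data lines in the example above concrete, here is a small sketch that reads one such line into its parts. ParsedPageLine is a hypothetical class, not part of the project or of jaDTi; it assumes whitespace-separated fields, an address without spaces, eight numeric attributes, and an optional trailing tag (absent in files produced for classification).

```java
/** Hypothetical reader for one data line of the parsed data-set file. */
class ParsedPageLine {
    static final int NUMERIC_FIELDS = 8;

    final String name;      // the page's address
    final double[] values;  // the eight numeric attributes, in file order
    final String tag;       // normal, undecided or spam; null when the line is untagged

    ParsedPageLine(String name, double[] values, String tag) {
        this.name = name;
        this.values = values;
        this.tag = tag;
    }

    static ParsedPageLine parse(String line) {
        String[] parts = line.trim().split("\\s+");
        boolean tagged = parts.length == NUMERIC_FIELDS + 2;
        if (!tagged && parts.length != NUMERIC_FIELDS + 1) {
            throw new IllegalArgumentException("Unexpected field count: " + parts.length);
        }
        double[] values = new double[NUMERIC_FIELDS];
        for (int i = 0; i < NUMERIC_FIELDS; i++) {
            values[i] = Double.parseDouble(parts[i + 1]);
        }
        String tag = tagged ? parts[parts.length - 1] : null;
        return new ParsedPageLine(parts[0], values, tag);
    }

    public static void main(String[] args) {
        ParsedPageLine page = parse("http://4road.co.uk 278 18 5.0 0.0 0.0 7.0 0.0 0.0 spam");
        System.out.println(page.name + " has " + (long) page.values[0] + " words, tag " + page.tag);
    }
}
```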

Implementation issues:

We had to modify the jaDTi code so that its file reader can read HTTP addresses (the original code can't handle names containing characters such as '.', '/', '-' and '_').

In addition to the parsed data-set file, the system uses a file to store the DecisionTree that the Trainer is responsible for building. We save the DecisionTree to disk using the ObjectOutputStream class. To make this possible we had to modify the jaDTi code so that all the relevant classes implement the Serializable interface.
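The save-and-restore cycle can be sketched with the standard Java serialization classes. StubTree below is a hypothetical stand-in for the modified jaDTi tree, and the class name, method names and file name are assumptions made for illustration only.

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

/** Sketch of writing a serializable tree to disk and reading it back. */
class TreeStoreSketch {

    /** Hypothetical stand-in for a decision tree that implements Serializable. */
    static class StubTree implements Serializable {
        private static final long serialVersionUID = 1L;
        final String label;

        StubTree(String label) {
            this.label = label;
        }
    }

    /** What the Trainer does after training: write the tree to a file. */
    static void save(Serializable tree, File file) {
        try (ObjectOutputStream out = new ObjectOutputStream(new FileOutputStream(file))) {
            out.writeObject(tree);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** What the Classifier does before classifying: read the tree back. */
    static Object load(File file) {
        try (ObjectInputStream in = new ObjectInputStream(new FileInputStream(file))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        File file = new File(System.getProperty("java.io.tmpdir"), "sketch_tree.dt");
        save(new StubTree("spam"), file);
        StubTree restored = (StubTree) load(file);
        System.out.println(restored.label);
        file.delete();
    }
}
```

Every object reachable from the saved tree must also be serializable, which is why all the relevant jaDTi classes had to be changed rather than only the top-level one.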

6.2 Data structure – PageAttributes class:

The PageAttributes data structure is a representation of an HTML webpage that contains the information needed by the system's components. Each field holds the value of a different attribute of the webpage. The fields are:

Name – String. The HTTP address of the webpage.
WordsInPage – long. The number of words in the webpage's text.
WordsInTitle – long. The number of words in the webpage's title.
AverageWordLength – double. The average number of letters per word in the text.
AnchorText – long. The number of words in the anchor text.
FractionOfVisibleContent – double. The ratio (number of words in invisible text / (number of words in invisible text + number of words in visible text)). Invisible text is hidden text, which usually appears in HTML elements such as META tags or in ALT attributes.
Compressibility – double. The ratio (gzip-compressed file size / original file size).
fractionDrownFromPopularKeywords – double. The ratio (number of popular keywords that appear in the text / number of words in the text).
fractionOfPopularKeywords – double. The ratio (number of popular keywords that appear in the text / number of words in the popular keywords list).
Tag – String. Possible values = {normal, undecided, spam}. In training sets this holds the manually assigned tag of the page, which tells the DecisionTree whether the page is spam or a legitimate webpage.
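The two keyword ratios are easy to confuse, so the sketch below computes both under one possible reading of the definitions: the first counts every occurrence of a popular keyword in the page, the second counts each popular keyword at most once. The class and method names are hypothetical, matching is case-insensitive, and the popular-keyword set is assumed to be stored in lower case.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical helpers for the two popular-keyword attributes. */
class KeywordFractionsSketch {

    /** Share of the page's words (repeats included) that are popular keywords. */
    static double fractionDrawnFromPopular(List<String> pageWords, Set<String> popular) {
        if (pageWords.isEmpty()) {
            return 0.0;
        }
        long hits = 0;
        for (String word : pageWords) {
            if (popular.contains(word.toLowerCase(Locale.ROOT))) {
                hits++;
            }
        }
        return (double) hits / pageWords.size();
    }

    /** Share of the popular-keyword list that occurs at least once in the page. */
    static double fractionOfPopular(List<String> pageWords, Set<String> popular) {
        if (popular.isEmpty()) {
            return 0.0;
        }
        Set<String> seen = new HashSet<>();
        for (String word : pageWords) {
            String lower = word.toLowerCase(Locale.ROOT);
            if (popular.contains(lower)) {
                seen.add(lower);
            }
        }
        return (double) seen.size() / popular.size();
    }

    public static void main(String[] args) {
        List<String> page = Arrays.asList("Cheap", "cheap", "tickets", "today");
        Set<String> popular = new HashSet<>(Arrays.asList("cheap", "hotel"));
        System.out.println(fractionDrawnFromPopular(page, popular));
        System.out.println(fractionOfPopular(page, popular));
    }
}
```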

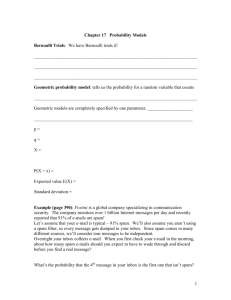

7 Results

This chapter summarizes the results of the experiments we ran in the project.

In our experiments we constructed several decision trees from the given data set. We divided the data set into several chunks, each containing an equal number of pages. For each chunk we built a decision tree and tested it on the rest of the chunks.
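The chunking scheme can be sketched as follows. The class and method names are hypothetical, chunks are taken as consecutive runs of pages, and pages left over after an even division are simply dropped; how the project actually assigned pages to chunks is not stated here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Sketch of splitting a data set into equal chunks: train on one, test on the rest. */
class ChunkSplitSketch {

    /** Splits items into k consecutive chunks of equal size; leftover items are dropped. */
    static <T> List<List<T>> split(List<T> items, int k) {
        if (k <= 0) {
            throw new IllegalArgumentException("k must be positive");
        }
        int size = items.size() / k;
        List<List<T>> chunks = new ArrayList<>();
        for (int i = 0; i < k; i++) {
            chunks.add(new ArrayList<>(items.subList(i * size, (i + 1) * size)));
        }
        return chunks;
    }

    /** Returns every page that is not in the training chunk. */
    static <T> List<T> testSetFor(List<List<T>> chunks, int trainIndex) {
        List<T> rest = new ArrayList<>();
        for (int i = 0; i < chunks.size(); i++) {
            if (i != trainIndex) {
                rest.addAll(chunks.get(i));
            }
        }
        return rest;
    }

    public static void main(String[] args) {
        List<List<Integer>> chunks = split(Arrays.asList(1, 2, 3, 4, 5, 6, 7), 3);
        for (int i = 0; i < chunks.size(); i++) {
            System.out.println("train on " + chunks.get(i) + ", test on " + testSetFor(chunks, i));
        }
    }
}
```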

We measured the performance of each decision tree (DT) by calculating the following values:

Match Rate (MR): the number of pages the DT marked correctly, out of the total number of observed pages.
Spam Precision Rate (SPR): the number of spam pages that the DT marked as spam, out of the number of pages that the DT marked as spam.
Spam Recall Rate (SRR): the number of spam pages that the DT marked as spam, out of the number of real spam pages.
Non-spam Precision Rate (NPR): the number of non-spam pages that the DT marked as non-spam, out of the number of pages that the DT marked as non-spam.
Non-spam Recall Rate (NRR): the number of non-spam pages that the DT marked as non-spam, out of the number of pages that are really non-spam.
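The five measures above follow directly from the four counts of a confusion matrix with spam as the positive class. The sketch below shows the arithmetic; the class name and the example counts are made up for illustration and are not the project's measurements.

```java
/** Sketch of the five rates computed from confusion-matrix counts (spam = positive). */
class SpamMetricsSketch {
    final long tp; // spam pages marked as spam
    final long fp; // non-spam pages marked as spam
    final long tn; // non-spam pages marked as non-spam
    final long fn; // spam pages marked as non-spam

    SpamMetricsSketch(long tp, long fp, long tn, long fn) {
        this.tp = tp;
        this.fp = fp;
        this.tn = tn;
        this.fn = fn;
    }

    private static double ratio(long numerator, long denominator) {
        return denominator == 0 ? 0.0 : (double) numerator / denominator;
    }

    double matchRate()        { return ratio(tp + tn, tp + fp + tn + fn); }
    double spamPrecision()    { return ratio(tp, tp + fp); }
    double spamRecall()       { return ratio(tp, tp + fn); }
    double nonSpamPrecision() { return ratio(tn, tn + fn); }
    double nonSpamRecall()    { return ratio(tn, tn + fp); }

    public static void main(String[] args) {
        // Made-up counts, chosen only to exercise the formulas.
        SpamMetricsSketch m = new SpamMetricsSketch(40, 10, 940, 10);
        System.out.println("MR  = " + m.matchRate());
        System.out.println("SPR = " + m.spamPrecision());
        System.out.println("SRR = " + m.spamRecall());
        System.out.println("NPR = " + m.nonSpamPrecision());
        System.out.println("NRR = " + m.nonSpamRecall());
    }
}
```

Because spam pages are a small minority of the data set, MR can be high even when SPR and SRR are modest, which is why all five values are reported.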

The best results we managed to reach are summarized in the following list:

MR = 92.7%

SPR = 60.6%

SRR = 71.5%

NPR = 96.9%

NRR = 95.3%

These results aren't as good as those in the paper, but they are encouraging.

To investigate what hurt the performance, we produced histograms for each attribute.

Experimental setup:

The data set we used is taken from http://www.yr-bcn.es/webspam/datasets and contains a large set of .uk pages downloaded in May 2006. A group of volunteers manually classified the whole data set with normal/undecided/spam tags.

The data set contains 8415 pages, of which 656 (7.8%) were dead or not responding.

Of the 7759 remaining pages:
7024 (90.5%) were tagged as normal (not spam).
582 (7.5%) were tagged as spam.
153 (2%) were tagged as undecided.

After filtering out very small pages (fewer than 20 words of text), which we consider noise, the data set contained 6875 pages (about 11% of the pages were filtered out).

The distribution was the following:
6183 (90%) were tagged as normal.
545 (8%) were tagged as spam.
147 (2%) were tagged as undecided.

We reached our best results by constructing a decision tree from half of the data set. The decision tree was built using entropy threshold = test score threshold = 0.

[Figures: histograms comparing the distribution of each attribute for spam and non-spam pages, with the vertical axis in percent. Panels: number of words; number of words in title; average word length; fraction of anchor text; fraction of visible content; compression ratio; fraction of popular keywords; fraction of text drawn from popular keywords.]
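Histograms of this kind can be produced by binning the values of one attribute separately for spam and non-spam pages and normalizing each bin by the number of pages in its class. The sketch below shows one way to do the binning; the class name, the equal-width bins and the clamping of out-of-range values into the edge bins are assumptions, not a description of the tool we used.

```java
import java.util.Arrays;

/** Sketch of a normalized, equal-width histogram for one attribute. */
class AttributeHistogramSketch {

    /** Returns, for each bin over [min, max), the fraction of values that fall in it. */
    static double[] normalized(double[] values, double min, double max, int bins) {
        if (bins <= 0 || max <= min) {
            throw new IllegalArgumentException("need bins > 0 and max > min");
        }
        long[] counts = new long[bins];
        double width = (max - min) / bins;
        for (double value : values) {
            int bin = (int) Math.floor((value - min) / width);
            bin = Math.max(0, Math.min(bins - 1, bin)); // clamp outliers into the edge bins
            counts[bin]++;
        }
        double[] fractions = new double[bins];
        if (values.length > 0) {
            for (int i = 0; i < bins; i++) {
                fractions[i] = (double) counts[i] / values.length;
            }
        }
        return fractions;
    }

    public static void main(String[] args) {
        // Made-up attribute values for two classes, binned over the same range.
        double[] spam = {0.1, 0.2, 0.6, 0.9};
        double[] nonSpam = {0.05, 0.1, 0.3, 0.45};
        System.out.println(Arrays.toString(normalized(spam, 0.0, 1.0, 2)));
        System.out.println(Arrays.toString(normalized(nonSpam, 0.0, 1.0, 2)));
    }
}
```

Normalizing per class makes the two curves comparable even though non-spam pages far outnumber spam pages.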

8 Summary

In this project we had the opportunity to explore a few hot topics in the field of search engines. One can appreciate the importance of this field by observing the impact that search engines like Google and Yahoo have on humanity. We focused on the topic of web spam; fighting it is one of the cornerstones of a good search engine. The growing size of the internet advertising market leads many people to exploit various flaws in order to improve their website's rank. Many books have already been written about Search Engine Optimization (SEO), introducing a broad variety of techniques, some of them legitimate (white hat), some less so (grey hat) and some illegitimate (black hat).

Search engine developers are in constant competition with the developers of SEO techniques. Our project investigated a narrow part of the methods used against SEO.

We started by reading some theoretical background, and then designed and implemented a classifier that marks web pages as spam or non-spam. After the design and implementation phase we constructed a few decision trees using different data sets of manually tagged pages. Using the decision trees we classified the rest of the pages and compared the results to the original tags. We measured the results of the classifier and produced histograms for each attribute. The histograms helped us investigate why the performance wasn't as good as in the paper. We conclude that the size of the data set affected the accuracy of the decision tree, and that many small pages added noise to the process of constructing the decision trees.

9 Future work

Our suggestions for those who wish to contribute to this project are:

Observing more attributes: The paper describes a few more page attributes that our parser doesn't observe. A classifier that analyzes each page using more attributes might perform better.

Using an alternative decision tree algorithm: In our project we used the jaDTi package to construct a decision tree. Implementing an alternative decision tree constructor might lead to better results.

Using the classifier in a search engine: The classifier could be used to filter unwanted pages in a real search engine. It can be combined with other filtering tools that use different techniques to filter spam pages.

10 Definitions, Acronyms, and Abbreviations

HTML - In computing, HyperText Markup Language (HTML) is the predominant

markup language for the creation of web pages. It provides a means to describe the

structure of text-based information in a document — by denoting certain text as headings,

paragraphs, lists, and so on — and to supplement that text with interactive forms,

embedded images, and other objects. HTML can also describe, to some degree, the

appearance and semantics of a document, and can include embedded scripting language

code which can affect the behavior of web browsers and other HTML processors. [From

Wikipedia, the free encyclopedia]

Spam - Spamming is the abuse of electronic messaging systems to send unsolicited,

undesired bulk messages. While the most widely recognized form of spam is email spam,

the term is applied to similar abuses in other media: instant messaging spam, Usenet

newsgroup spam, Web search engine spam, spam in blogs, and mobile phone messaging

spam.

Spamming is economically viable because advertisers have no operating costs beyond the

management of their mailing lists, and it is difficult to hold senders accountable for their

mass mailings. Because the barrier to entry is so low, spammers are numerous, and the

volume of unsolicited mail has become very high. The costs, such as lost productivity and

fraud, are borne by the public and by Internet service providers, which have been forced

to add extra capacity to cope with the deluge. Spamming is widely reviled, and has been

the subject of legislation in many jurisdictions. [From Wikipedia, the free encyclopedia]

Web Spam, Spamdexing - Search engines use a variety of algorithms to determine

relevancy ranking. Some of these include determining whether the search term appears in

the META keywords tag, others whether the search term appears in the body text or URL

of a web page. A variety of techniques are used to spamdex (see below). Many search

engines check for instances of spamdexing and will remove suspect pages from their

indexes. [From Wikipedia, the free encyclopedia]

The rise of spamdexing in the mid-1990s made the leading search engines of the time less

useful, and the success of Google at both producing better search results and combating

keyword spamming, through its reputation-based PageRank link analysis system, helped

it become the dominant search site late in the decade, where it remains. While it has not

been rendered useless by spamdexing, Google has not been immune to more

sophisticated methods either. Google bombing is another form of search engine result

manipulation, which involves placing hyperlinks that directly affect the rank of other

sites.

Common spamdexing techniques can be classified into two broad classes: content spam

and link spam. [From Wikipedia, the free encyclopedia]

Decision Tree - Decision tree is a predictive model; that is, a mapping of observations

about an item to conclusions about the item's target value. More descriptive names for

such tree models are classification tree or regression tree. In these tree structures, leaves

represent classifications and branches represent conjunctions of features that lead to those

classifications. The machine learning technique for inducing a decision tree from data is

called decision tree learning, or (colloquially) decision trees. [From Wikipedia, the free

encyclopedia]

Anchor Text – The visible text of a hyperlink, which is supposed to give additional information about the links in the webpage. Some search engines use this text to rank the relevance of a webpage to different keywords. Therefore some web spammers use it as a place to implant popular keywords in order to mislead the search engine.

Popular keywords – We use this term to describe a list of N keywords that are very popular. A keyword is considered popular if it appears in many searches performed by end users; the more searches it appears in, the more popular it is.
Web spammers tend to implant popular keywords in their webpages, hoping this will make the search engine rank the page higher. Therefore the appearance of many popular keywords in a webpage could hint that it is a spam webpage.
Our list contains 1000 popular keywords, and we shall run experiments with different values of N to see the impact of the size of this list on the classifier's performance.

11 User Interface

This section describes how to run every module in the project.

DestinedParser:

Command line format:

DestinedParser <list_of_pages> <db_name> <train/classify>

Command line example:

DestinedParser full_list.txt full_list train

DestinedParser full_list.txt full_list classify

The first argument is a file that contains a list of the pages to be parsed.
The second argument is the name of the output db.
The last argument tells the parser whether the output should be in train format or in classify format.
In train format the output file contains the tag of each webpage at the end of its line.
In classify format the output file doesn't contain tags.
The output file will be named <db_name>.db

Trainer:

Command line format:

Trainer <db_filename>

Command line example:

Trainer full_list.db

The only argument is the name of the database from which the decision tree will be constructed.
The resulting decision tree will be saved in the file <db_filename>.dt

Classifier:

Command line format:

Classifier <dataset_file> <decision_tree_name> <output_file>

Command line example:

Classifier list1.db list1.db tree1_list1.txt

The first argument is a file that contains the parsed webpages that we want to classify.

The second argument is the name of the decision tree. The classifier assumes there's a file

named <decision_tree_name>.dt which contains the decision tree.

The last argument is the filename that will contain the output.

The output will contain the same line for each of the webpages, with the decided tag at the end of the line.

12 Reference Material

12.1 The free data set of manually tagged HTML pages we used to train our classifier.

http://www.yr-bcn.es/webspam/datasets/webspam-uk2006-1.1.tar.gz

12.2 HTMLParser's homepage

http://htmlparser.sourceforge.net/

12.3 Description of the C4.5 algorithm

http://en.wikipedia.org/wiki/C4.5_algorithm

12.4 jaDTi's homepage

http://www.run.montefiore.ulg.ac.be/~francois/software/jaDTi/
