EntropyDerivation


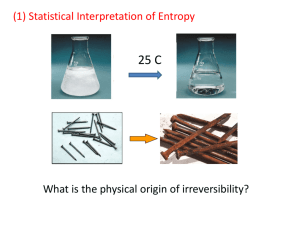

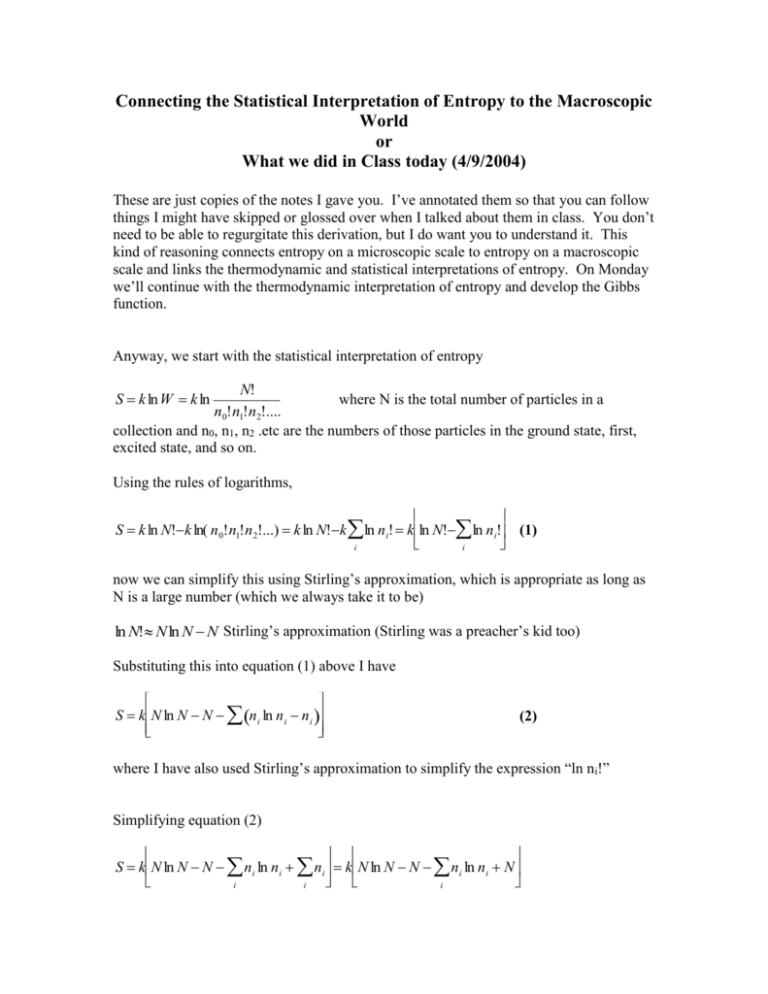

Connecting the Statistical Interpretation of Entropy to the Macroscopic World, or What We Did in Class Today (4/9/2004)

These are just copies of the notes I gave you. I’ve annotated them so that you can follow things I might have skipped or glossed over when I talked about them in class. You don’t need to be able to regurgitate this derivation, but I do want you to understand it. This kind of reasoning connects entropy on a microscopic scale to entropy on a macroscopic scale and links the thermodynamic and statistical interpretations of entropy. On Monday we’ll continue with the thermodynamic interpretation of entropy and develop the Gibbs function.

Anyway, we start with the statistical interpretation of entropy

$$S = k \ln W = k \ln \frac{N!}{n_0!\, n_1!\, n_2! \cdots}$$

where $N$ is the total number of particles in a collection and $n_0$, $n_1$, $n_2$, etc. are the numbers of those particles in the ground state, first excited state, and so on.

Using the rules of logarithms,

$$S = k \ln N! - k \ln\left(n_0!\, n_1!\, n_2! \cdots\right) = k \ln N! - k \sum_i \ln n_i! = k\left[\ln N! - \sum_i \ln n_i!\right] \qquad (1)$$

Now we can simplify this using Stirling’s approximation, which is appropriate as long as $N$ is a large number (which we always take it to be):

$$\ln N! \approx N \ln N - N$$

(Stirling was a preacher’s kid too.)

Substituting this into equation (1) above, I have

$$S = k\left[N \ln N - N - \sum_i \left(n_i \ln n_i - n_i\right)\right] \qquad (2)$$

where I have also used Stirling’s approximation to simplify the expression “$\ln n_i!$”.

Simplifying equation (2),

$$S = k\left[N \ln N - N - \sum_i n_i \ln n_i + \sum_i n_i\right] = k\left[N \ln N - N - \sum_i n_i \ln n_i + N\right] = k\left[N \ln N - \sum_i n_i \ln n_i\right] \qquad (3)$$

Okay, now this next part is tricky. Notice that the sum of the numbers of particles in each state, $n_i$, must equal the total number of particles in the collection, $N$.
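A brief numerical aside before continuing: if you want to convince yourself that dropping the factorials is harmless for large $N$, the following is a minimal sketch that compares the exact $\ln W$ with equation (3) divided by $k$. The occupation numbers are made up purely for illustration, and the function names are my own, not anything from the text.

```python
import math

def ln_W_exact(occupations):
    """Exact ln W = ln N! - sum_i ln n_i!, using lgamma(n + 1) = ln n!."""
    N = sum(occupations)
    return math.lgamma(N + 1) - sum(math.lgamma(n + 1) for n in occupations)

def ln_W_stirling(occupations):
    """Equation (3) divided by k: N ln N - sum_i n_i ln n_i."""
    N = sum(occupations)
    return N * math.log(N) - sum(n * math.log(n) for n in occupations if n > 0)

# Hypothetical occupation numbers, chosen only for illustration.
small = [6, 3, 1]
large = [6_000_000, 3_000_000, 1_000_000]

for occ in (small, large):
    exact = ln_W_exact(occ)
    approx = ln_W_stirling(occ)
    rel_err = abs(approx - exact) / exact
    print(f"N = {sum(occ):>10d}  exact = {exact:.6g}  "
          f"Stirling = {approx:.6g}  rel. error = {rel_err:.2e}")
```

The relative error is large for ten particles and becomes negligible for ten million, which is why the approximation is safe for anything approaching a mole. Both functions rely on the occupation numbers summing to $N$, the same fact used in the next step.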
Therefore we can re-express equation (3) as

$$S = k\left[\sum_i n_i \ln N - \sum_i n_i \ln n_i\right] \qquad (4)$$

We can do this because $\ln N$ is a constant independent of the sum, so it doesn’t matter if we put it inside the sum or not. Anyway, using the rules of logarithms, equation (4) can be rewritten

$$S = k \sum_i n_i \ln \frac{N}{n_i} = -k \sum_i n_i \ln \frac{n_i}{N} \qquad (5)$$

Now, $n_i/N$ is simply the probability that a molecule in a collection of $N$ molecules will be in the $i$th state. Written another way using Boltzmann statistics,

$$p_i = \frac{n_i}{N} = \frac{e^{-\varepsilon_i/kT}}{q}; \qquad n_i = p_i N \qquad (6)$$

Substituting this into equation (5) gives

$$S = -kN \sum_i p_i \ln p_i \qquad (7)$$

Equation (6) also allows us to express $\ln p_i$ in terms of the partition function, $q$:

$$\ln p_i = \ln \frac{e^{-\varepsilon_i/kT}}{q} = \ln e^{-\varepsilon_i/kT} - \ln q = -\frac{\varepsilon_i}{kT} - \ln q$$

Using this in equation (7),

$$S = -kN \sum_i p_i \left(-\frac{\varepsilon_i}{kT} - \ln q\right) = kN \sum_i \left(\frac{p_i \varepsilon_i}{kT} + p_i \ln q\right) = \frac{kN}{kT} \sum_i p_i \varepsilon_i + Nk \ln q \sum_i p_i$$

This reduces to

$$S = \frac{N}{T} \sum_i p_i \varepsilon_i + Nk \ln q \cdot 1 = \frac{N \langle \varepsilon \rangle}{T} + Nk \ln q$$

where the second equality holds because the sum of the probabilities, $p_i$, has to equal 1 and because the sum of the energies of the states, $\varepsilon_i$, weighted by their probabilities, $p_i$, equals the average energy, $\langle \varepsilon \rangle$, of particles in the collection.

Finally, the number of particles $N$ multiplied by the average energy of those particles gives the total energy of the particles in the sample, relative to the zero-point energy, which we have taken as the bottom of our energy scale (see earlier notes on the derivations of the partition functions, or see me if this last part doesn’t make sense). So

$$N \langle \varepsilon \rangle = E - E(0)$$

Finally,

$$S = \frac{E - E(0)}{T} + Nk \ln q \qquad (8)$$

and we now have an expression relating the statistical interpretation of entropy to the thermodynamic quantity.

We can apply this to a collection of $N$ identical harmonic oscillators, which has the partition function

$$q_{vib} = \frac{1}{1 - e^{-h\nu_0/kT}}$$

where $\nu_0$ is the fundamental vibrational frequency of the molecules in question. (We showed why this is the vibrational partition function in an earlier lecture; see your notes for details.)
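Before going on, here is a quick numerical check that equations (7) and (8) really do agree for the harmonic oscillator. This is only a sketch under stated assumptions: the levels are taken as $\varepsilon_i = i\,h\nu_0$ measured from the zero-point level, the infinite ladder is truncated at a finite number of levels, and the helper name `entropy_two_ways` and the sample values of $h\nu_0/kT$ are hypothetical choices of mine.

```python
import math

def entropy_two_ways(x, n_levels=2000):
    """Return S/(Nk) computed from eq. (7) and from eq. (8).

    x = h*nu0 / (k*T) is dimensionless. Levels are eps_i = i*h*nu0,
    measured from the zero-point level, truncated at n_levels.
    """
    q = 1.0 / (1.0 - math.exp(-x))                        # q_vib
    p = [math.exp(-i * x) / q for i in range(n_levels)]   # eq. (6)
    # Eq. (7): S/(Nk) = -sum_i p_i ln p_i (skip levels that underflow to 0).
    s_eq7 = -sum(pi * math.log(pi) for pi in p if pi > 0.0)
    # Eq. (8): S/(Nk) = <eps>/(kT) + ln q, with <eps>/(kT) = sum_i p_i * i * x.
    mean_eps_over_kT = sum(pi * i * x for i, pi in enumerate(p))
    s_eq8 = mean_eps_over_kT + math.log(q)
    return s_eq7, s_eq8

# Hypothetical values of h*nu0/(k*T), chosen only for illustration.
for x in (0.5, 1.0, 5.0):
    s7, s8 = entropy_two_ways(x)
    print(f"x = {x:4.1f}  eq.(7): {s7:.10f}  eq.(8): {s8:.10f}")
```

The two columns should match to within rounding, since equation (8) is just equation (7) with $\ln p_i$ written out.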
Using the expression for thermodynamic energy that we derived earlier for the energy above the zero-point energy,

$$E - E(0) = -\frac{N}{q} \frac{\partial q}{\partial (1/kT)}$$

we can derive the following expression for the vibrational entropy of a collection of harmonic oscillators:

$$S = Nk\left[\frac{\varepsilon/kT}{e^{\varepsilon/kT} - 1} - \ln\left(1 - e^{-\varepsilon/kT}\right)\right]$$

where $\varepsilon$ is just shorthand for $h\nu_0$.

Note, for those of you in class on Friday: I made a sign error in the formula I gave you in class. The exponent in the denominator, $e^{\varepsilon/kT} - 1$, should be positive, not negative.

Homework:

1. Using the formulas I have given you, try to derive the expression given above for the entropy of a collection of oscillators.
2. Calculate the vibrational entropy of 1 mole of CO molecules at 100 K. The fundamental frequency of CO is 2143 cm$^{-1}$. (You’ll have to convert this to $\nu_0$ using the fact that $E = h\nu_0 = hc\tilde{\nu}_0$, with $c = 2.998 \times 10^{10}$ cm/s.)
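If you want to check your arithmetic on the unit conversion, a sketch like the following may help. It deliberately uses a made-up oscillator (1000 cm$^{-1}$ at 300 K) rather than the CO values, so the problem is still yours to work. The function names are hypothetical, the constants $h$, $k$, and $N_A$ are the standard SI values, and $c$ is the value quoted in problem 2.

```python
import math

H = 6.62607015e-34    # Planck constant, J s
K_B = 1.380649e-23    # Boltzmann constant, J/K
C_CM = 2.998e10       # speed of light in cm/s, as quoted in the homework
N_A = 6.02214076e23   # Avogadro constant, 1/mol

def reduced_energy(wavenumber_cm, temperature):
    """Return eps/(kT) = h*c*nu_tilde/(k*T), a dimensionless number."""
    return H * C_CM * wavenumber_cm / (K_B * temperature)

def vib_entropy_per_mole(x):
    """Molar vibrational entropy in J/(mol K) for x = eps/(kT).

    Implements S = Nk [ x/(e^x - 1) - ln(1 - e^(-x)) ] with N = N_A.
    """
    return N_A * K_B * (x / math.expm1(x) - math.log1p(-math.exp(-x)))

# Hypothetical oscillator: 1000 cm^-1 at 300 K (not the CO values).
x = reduced_energy(1000.0, 300.0)
print(f"eps/kT = {x:.4f}, S = {vib_entropy_per_mole(x):.4f} J/(mol K)")
```

A useful sanity check is the high-temperature limit: when $\varepsilon/kT$ is small, the formula should approach $S \approx Nk\,[1 - \ln(\varepsilon/kT)]$.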