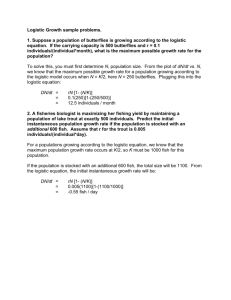

Data Analysis with SPSS


Statistics – Spring 2008
Lab #5 – Logistic

Defined: A model for predicting one variable from other variable(s).
Variables: IVs are continuous/categorical; DV is dichotomous.
Relationship: Prediction of group membership.
Example: Can we predict bar passage (yes, no) from LSAT score?
Assumptions: Multicollinearity (not Normality or Linearity).

1. Graphing - Scatterplot

The first step of any statistical analysis is to plot the data graphically. In the last lab about linear regression, if you wanted to see the relationship between two continuous variables, you would create a scatterplot. However, if you want to look at the relationship between a continuous and a dichotomous variable, a scatterplot does not display useful information. For example, try creating a scatterplot of “age” and “sex”.

FYI – See “Lab3 – Correlation” for how to conduct a scatterplot.

The scatterplot for “age” and “sex” is displayed below. Notice how it is uninterpretable.

2. Dichotomous variables

Logistic regression involves dichotomous outcome variables. For our dataset, we are going to create a dichotomous outcome variable from Question “errors1a”, which asks:

Every legal system is bound to have the following two types of error: first, a certain amount of truly guilty people do not get convicted. Second, a certain amount of truly innocent people get convicted for crimes they did not commit. The two types of error are related to each other: in harsh legal systems, fewer truly guilty people escape punishment but more truly innocent people get convicted, whereas in lenient systems, more truly guilty people escape punishment but fewer innocent people get convicted.

a. What, in your opinion, is the most appropriate ratio of the two types of error?
1 Better to acquit 30 or more guilty people than to convict one innocent person
2 Better to acquit 20 people than to convict one innocent person
3 Better to acquit 10 guilty people than to convict one innocent person
4 Better to acquit 5 guilty people than to convict one innocent person
5 Better to acquit 3 guilty people than to convict one innocent person
6 Better to acquit 1 guilty person than to convict one innocent person
7 The errors are equivalent
8 Better to convict 1 innocent person than to acquit one guilty person
9 Better to convict 3 innocent people than to acquit one guilty person
10 Better to convict 5 innocent people than to acquit one guilty person
11 Better to convict 10 innocent people than to acquit one guilty person
12 Better to convict 20 innocent people than to acquit one guilty person
13 Better to convict 30 or more innocent people than to acquit one guilty person

We are going to dichotomize “errors1a” so that:
a. 0 = people who think it is better to acquit guilty people than convict innocent people (1 through 6)
b. 1 = people who think it is better to convict innocent people than acquit guilty people (8 through 13)

You dichotomize according to the instructions in “Lab2 – Descriptives” for “Transforming continuous variables into categorical variables”:
1. Select Transform --> Recode into different variables
2. Move “errors1a” into the “Input Window”
3. Type a name for the new variable, such as “errors1a_di”
4. Click “Change”
5. Click “Old and New Values”
6. Click “Range”, enter the range of values of the “old” variable, and assign a number for the new variable (e.g., 1-6 becomes “0”, and 8-13 becomes “1”)
7. Click Continue
8. Click OK.

VERY IMPORTANT: I highly suggest you label the new categories, such as 0=better acquit guilty, 1=better convict innocent:
1. Go to “Variable view”
2. Scroll to the bottom where it says “errors1a_di”
3. Click on “Values”
4. First, put a “0” for Value and “better acquit guilty” for Label, and click “Add”
5. Second, put a “1” for Value and “better convict innocent” for Label, and click “Add”
6. Click OK.

3. Assumptions: Multicollinearity

SPSS does not have multicollinearity output for logistic tests. Instead, you use the “Linear Regression” tests we learned in “Lab4 – Regression” to conduct the multicollinearity analysis for your logistic analysis.

For example, later in this document we will conduct multiple logistic analysis to predict “errors1a_di” using three variables: “age”, “threshold2”, and “defendant4”, using Analyze --> Regression --> Binary Logistic. However, to test multicollinearity for our logistic analysis, we use Analyze --> Regression --> Linear, and insert our logistic variables into the linear analysis:
1. Select Analyze --> Regression --> Linear
2. Move “errors1a_di” into the DV box; move into the IV box: “age”, “threshold2”, and “defendant4”
3. Click “Statistics” and click “collinearity diagnostics”
4. Click OK.

Output below is the multicollinearity analysis. Multicollinearity exists when Tolerance is below .1, and when VIF is greater than 10 or the average VIF is much greater than 1. In this case, there is no multicollinearity.

4. “Empty cells”

Logistic analysis in SPSS will sometimes not produce output if there are no data in one or more “cells” in the study.

Categorical IV and Dichotomous DV -- For example, we are analyzing the relationship between “errors1a_di” and other variables. Imagine one of those other variables was gender: male, female. What if, for category “0” in “errors1a_di”, there are no subjects who are also male? In this hypothetical example there are only four combinations (“0” in “errors1a_di” and male or female; “1” in “errors1a_di” and male or female), so not having data in ¼ of the combinations may stop the logistic analysis. Those four combinations are called “cells”.

Continuous IV and Dichotomous DV -- Now, imagine the predictor for “errors1a_di” was “age”. Having no data in one or more of the combinations (cells) of these two variables is less of a problem than when you have a categorical predictor, because there are so many more combinations (e.g., “0” in “errors1a_di” and age 18, 19, 20, 21, 22, etc.).

I have conducted logistic analysis without data in one or more cells and not encountered a problem, but I wanted to present this issue to you in case you do. The easiest way to identify whether data are missing from cells is to conduct “crosstabs”:
1. Select Analyze --> Descriptive Statistics --> Crosstabs
2. Move 1 variable into the “row” and 1 variable into the “column”
3. Click “display clustered bar charts”
4. Click OK.

Output below is for “errors1a_di” and “defendant4”. Notice there are data in all combinations.

Output below is for “errors1a_di” and “threshold2”. Notice there are not data in all combinations, and yet you can still conduct a logistic analysis with these variables, I suspect because one of the variables is continuous, so there are enough data to analyze.

5. Bivariate Logistic

Bivariate logistic tests involve 1 IV and 1 DV. In our dataset we are going to predict a dichotomous DV, “errors1a_di”, using 1 continuous predictor, “age”.

Since “errors1a_di” was coded such that 0=better acquit guilty and 1=better convict innocent, then
a. LOWER on the dichotomous scale means you favor acquitting guilty people, and
b. HIGHER means you favor convicting innocent people.

“Age” is a continuous variable, such that
a. LOWER means younger, and
b. HIGHER means older.

What could be the predicted relationship between these two variables? For example, is the relationship positive or negative? I would predict a negative relationship, such that older people are more likely to favor acquitting guilty people (as compared to convicting innocent people). In other words, as you go HIGHER on “age”, you go LOWER on “errors1a_di”.

Here is how to conduct logistic analysis:
1. Select Analyze --> Regression --> Binary Logistic
2. Move “errors1a_di” into the DV box; move into the covariates box: “age”
3. Click OK.

The output is produced in three parts:
a. two boxes of descriptive analysis,
b. block 0, which is the baseline condition without predictors, and
c. block 1, which shows the results when the predictor is entered into the analysis.

Descriptive analysis: Notice that the first box tells you there are many missing cases. When we dichotomized “errors1a”, we omitted the middle response, which said “The errors are equivalent”. Thus, there were missing values for “errors1a_di”. Logistic tests use “listwise deletion”, which means that any subject who did not have a value for “errors1a_di” or “age” was omitted from the analysis. The second box tells you how the dichotomous outcome variable was coded.

Block 0 shows the baseline condition when no predictors are entered into the analysis.
a. Classification Table – Since we are doing logistic analysis in which there are only two categories (0, 1) and we are interested in the “odds ratio”, the baseline condition is chosen by the category with the highest “odds”, which is the category with the most cases. The baseline condition is then analyzed for how well it fits the data. In this case, there are more subjects in the “better acquit guilty” category, so the baseline condition looks at how well that model fits the data. In other words, the baseline condition is a model that predicts all subjects are in the “0” condition, so how well does that fit the actual data, where we have subjects in both conditions? The first box below tells you that 176 subjects were in condition=0 and 31 in condition=1; thus, this model would classify 85% of the subjects correctly.
b. Variables not in the Equation – This box tells you that “age” would be a significant predictor (p = .015) if it were added to the model. In other words, this is the contribution of age in predicting “errors1a_di”.
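As a rough check on the Block 0 Classification Table, the baseline accuracy can be recomputed by hand from the two counts reported above (176 and 31). The sketch below uses Python rather than SPSS, and the function name `baseline_summary` is a made-up helper for illustration, not something SPSS provides.

```python
def baseline_summary(n_zero, n_one):
    """Summarize the no-predictor (Block 0) model from the two category counts.

    The baseline model assigns every subject to the larger category, so its
    accuracy is simply the share of subjects who fall in that category.
    """
    total = n_zero + n_one
    predicted = 0 if n_zero >= n_one else 1
    n_majority = max(n_zero, n_one)
    n_minority = min(n_zero, n_one)
    # Odds of the larger category relative to the smaller one
    # (infinite if the smaller category is empty).
    odds = n_majority / n_minority if n_minority > 0 else float("inf")
    return {
        "predicted_category": predicted,
        "percent_correct": 100.0 * n_majority / total,
        "odds_of_majority": odds,
    }


# Counts taken from the Block 0 Classification Table described above.
summary = baseline_summary(n_zero=176, n_one=31)
print(summary["predicted_category"], round(summary["percent_correct"], 1))
```

With these counts the baseline model predicts category “0” for everyone and is correct for 176 of 207 subjects, which is the roughly 85% figure quoted above.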
Block 1 shows the results when we enter the predictors into the analysis.
a. Omnibus Tests – This box tells you whether the overall model is significant. This box is more important for multiple logistic tests because if there is a significant overall effect, then typically at least 1 of the individual predictors in the multiple logistic analysis will be significant.
b. Model Summary – This box tells you the “variance explained” by the model. FYI – see the PowerPoint slide about why this is not true “variance explained.” The last two numbers are called “pseudo” R2. Both can be reported when writing up the analysis. “Cox and Snell” is usually an underestimate.
c. Classification Table – Notice that including age in the analysis did not change the Classification Table found in block 0. Thus, including “age” in the model did not improve the predictive rate.
d. Variables in the Equation – This box tells you the unique contribution of the variables. In this case, age was significant, p = .017. Notice that the “B” was negative. This means the relationship is negative. Notice that the “Exp(B)” is below 1. This is another way of saying the relationship is negative. For example, the “B” tells us that for a one-unit increase in age, the log odds of being a “1” go down by .041 (i.e., move toward “0”). The “Exp(B)” tells us that for a one-unit increase in age, the odds of being a “1” are multiplied by .960 (i.e., they decrease by about 4%). Exp(B) is simply e raised to the power of B: exp(-.041) ≈ .960.

6. Comparing bivariate logistic and bivariate linear regression

We dichotomized “errors1a” to create a dichotomous variable. Since “errors1a” is continuous, we can compute a linear regression analysis between “errors1a” and “age”, and thus compare and contrast it to the bivariate logistic output from above.
1. Select Analyze --> Regression --> Linear
2. Move “errors1a” into the DV box; move into the IV box: “age”
3. Click OK.

As you can see from the output below, the linear regression analysis is analogous to the logistic from above.
a. Overall variance explained was .022
b. Unique contribution of age in predicting “errors1a” was -.148, p = .007

7. Multiple Logistic

Now we are going to conduct multiple logistic analysis to predict “errors1a_di” using three variables: “age”, “threshold2”, and “defendant4”.

I am including “threshold2” because it may be interesting to see whether your threshold level for what is “beyond a reasonable doubt” predicts your favoring to acquit guilty people. In other words, maybe having a higher threshold to convict predicts your favoring not to convict.

I am including “defendant4” because it may be interesting to see if your feelings toward defendants predict your favoring to convict. In other words, if you dislike defendants, maybe you favor convicting even if this means you are convicting innocent people. FYI – to be precise, I would actually want to composite all five of the “defendant” questions together because that composite would be more indicative of your feelings toward defendants, but I am using “defendant4” as a proxy for your general feelings toward defendants even though it measures only one small aspect of those feelings.

age: Age: ____ years
threshold2: In criminal trials, jurors are instructed to vote for conviction only if they find the defendant guilty “beyond a reasonable doubt.” As you understand this instruction, to convict the defendant of a crime, jurors should feel that it is at least _____% likely that the defendant is guilty of the crime.
defendant4: To what extent do you agree with the following statement: defendants who decide not to testify at trial do so because they are in fact guilty. [1-11 point scale: “strong disagreement” – “strong agreement”]

How to conduct multiple logistic:
1. Select Analyze --> Regression --> Binary Logistic
2. Move “errors1a_di” into the DV box; move into the covariates box: “age”, “threshold2”, and “defendant4”
3. Click OK.

Descriptive Analysis:

Block 0
a. Classification Table – The baseline model would classify 84.7% of the subjects correctly. Notice this is different from the baseline model for the bivariate logistic test (even though both baseline models predict that all subjects are in the “0” condition) because the sample size is different. When you include different variables in the analysis (in this case, we have three predictors instead of 1), any subject with missing values on any of the variables is excluded from the analysis.
b. Variables not in the Equation – Notice that all three variables are significant predictors of the outcome variable when you don’t control for the other variables in the analysis. In other words, this output shows you the contributions of each variable, whereas in Block 1 below you see the UNIQUE contributions of each variable while controlling for the others.

Block 1
a. Omnibus – The overall model is significant, which means that typically at least 1 of the individual predictors will be significant.
b. Model Summary – This box tells you the “variance explained” by the model. The last two numbers are called “pseudo” R2. Both can be reported when writing up the analysis. “Cox and Snell” is usually an underestimate.
c. Classification Table – This model would classify 84.2% of the subjects correctly.
d. Variables in the Equation – This box tells you the unique contribution of the variables. In this case, age is not significant, p = .072. Notice that age was significant when not controlling for the other variables (see Block 0 above), but is now non-significant when controlling for the other variables. Notice also that “threshold2” is negative, whereas “defendant4” is positive. This is consistent with our hypothesis that being HIGHER on “threshold2” means LOWER on “errors1a_di”, and HIGHER on “defendant4” means HIGHER on “errors1a_di”. Notice also that when the “B” is negative, the “Exp(B)” is below 1, whereas when “B” is positive, the “Exp(B)” is higher than 1.

WRITE-UP
a. Multiple logistic analysis was conducted to predict whether subjects favored acquitting guilty defendants as compared to convicting innocent defendants. Three predictors were entered simultaneously into the analysis. The first predictor was age. The second predictor was the percentage likelihood that a defendant is guilty that is needed to surpass the “reasonable doubt” standard. The third predictor was the extent to which subjects believed defendants who decide not to testify at trial do so because they are in fact guilty. The overall model was significant, X2 = 18.038, df = 3, p < .001. The Cox and Snell R2 was .085, and Nagelkerke R2 was .148. Age was not significant, p = .072, with an odds ratio of .968. The second variable was significant, p = .009, with an odds ratio of .969. The third variable was significant, p = .021, with an odds ratio of 1.184.

EVALUATION
a. You evaluate multiple logistic analysis by first looking at the overall model and variance explained.
b. You then evaluate each predictor separately. You evaluate the p-value just as you would for correlation and bivariate regression, except that with multiple logistic the outcome for each predictor is the UNIQUE effect while controlling for the other variables. You also evaluate the odds ratio. Notice that you evaluate the odds ratio differently than you would the “b” effect size in linear regression. In linear regression, the “b” is evaluated similarly to a correlation effect size. However, the “odds ratio” is interpreted based upon a 1-unit change in the predictor variable.

8. Comparing multiple logistic and multiple linear regression

We dichotomized “errors1a” to create a dichotomous variable. Since “errors1a” is continuous, we can compute a multiple linear regression analysis between “errors1a” and the three predictors, and thus compare and contrast it to the multiple logistic output from above.
1. Select Analyze --> Regression --> Linear
2. Move “errors1a” into the DV box; move the three predictors into the IV box.
3. Click OK.

FYI – To keep the number of subjects in the analysis comparable between this multiple linear analysis and the multiple logistic analysis from above, I ONLY INCLUDED SUBJECTS IN THE ANALYSIS IF THEY HAD A VALUE ON “errors1a_di”. Remember, we lost subjects when we dichotomized “errors1a”, so those same subjects are excluded from this analysis. Later in this document I repeat the multiple linear analysis and include all subjects. I am conducting the multiple linear analysis both ways (with and without those subjects) because I want to show you how they both compare to the multiple logistic analysis.

As you can see from the output below, the multiple linear regression analysis is NOT analogous to the multiple logistic from above. For example, age is significant here, whereas it was not significant with logistic. Also, threshold2 is not significant here, whereas it was significant with logistic. WHY? When you move from a continuous outcome variable to a dichotomous outcome variable, you lose data and explanatory power. In other words, this is a concrete example of why you typically want continuous variables rather than dichotomous variables.
a. Overall variance explained was .080
b. Unique contribution of age in predicting “errors1a” was -.132, p = .016
   Unique contribution of threshold2 in predicting “errors1a” was -.089, p = .104
   Unique contribution of defendant4 in predicting “errors1a” was .216, p < .001

NOW, I REPEATED THE SAME MULTIPLE LINEAR ANALYSIS, BUT THIS TIME I INCLUDED ALL SUBJECTS, EVEN IF THEY DID NOT HAVE A SCORE ON “errors1a_di”. In other words, I wanted to show you what happens when you have larger sample sizes.

As you can see from the output below, the multiple linear regression when including more subjects produces a result where the overall variance explained is bigger, all three predictors are significant, and the effect sizes for age and threshold2 are larger (defendant4 is essentially unchanged). This is a concrete example of why you want larger sample sizes.
a. Overall variance explained was .113
b. Unique contribution of age in predicting “errors1a” was -.158, p = .023
   Unique contribution of threshold2 in predicting “errors1a” was -.142, p = .038
   Unique contribution of defendant4 in predicting “errors1a” was .215, p = .002

9. Dummy coding

Unlike linear regression, where you need to manually dummy code categorical predictors, logistic analysis in SPSS dummy codes the variables for you. Here is how to dummy code: within Analyze --> Regression --> Binary Logistic, after you move your categorical variable(s) into the covariates box, click on “Categorical” and move ONLY the categorical variables into the box on the right. Notice you have the option of making the baseline category (against which all the other categories are compared) either the first category or the last category. For example, if you are analyzing “deathopinion”, then you would want the “last” category to be the baseline category, because the last category in the “deathopinion” variable is “no opinion”, whereas the other five categories in that variable are opinions about the death penalty.
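To make the idea of a baseline category concrete, here is a minimal Python sketch of indicator (dummy) coding with the last category as the reference. It only illustrates the general coding scheme; it is not SPSS's own implementation. The function name `dummy_code` and the category labels are hypothetical, and the labels are not the actual response options of “deathopinion”.

```python
def dummy_code(values, categories, reference="last"):
    """Indicator-code a categorical variable.

    Returns the names of the kept (non-reference) categories and one row
    per value. Each row has one 0/1 entry per kept category; the reference
    category is coded as all zeros.
    """
    if reference not in ("first", "last"):
        raise ValueError("reference must be 'first' or 'last'")
    kept = categories[:-1] if reference == "last" else categories[1:]
    rows = []
    for value in values:
        if value not in categories:
            raise ValueError(f"unknown category: {value!r}")
        rows.append([1 if value == category else 0 for category in kept])
    return kept, rows


# Hypothetical labels: three opinions plus a final "no opinion" category.
categories = ["favor", "neutral", "oppose", "no opinion"]
columns, coded = dummy_code(["oppose", "no opinion", "favor"], categories)
print(columns)
for row in coded:
    print(row)
```

With the last category as the baseline, a subject answering “no opinion” is coded 0 on every column, so each coefficient compares one opinion category against “no opinion”.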