Chapter 10

advertisement

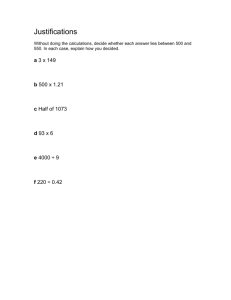

Chapter 10.2 Research methods: their design, applicability and reliability

Gail Marshall
Gail Marshall Associates, Walnut Creek, USA
gailandtom@compuserve.com

Margaret Cox
King's College London, London, UK
MJ.cox@kcl.ac.uk

Abstract: A review of research and evaluation studies into IT in education reveals both strengths and weaknesses. Both quantitative and qualitative studies conducted in the past have often had inadequate designs, with limited discussion of settings or of measurement development and analysis. Limited consideration of different educational settings and the small sample sizes of many studies make their results difficult to generalize. Few studies have been linked to specific learning activities using specific IT tools, which further compromises generalisability, and factors affecting innovation and implementation have seldom been addressed, making studies less useful to a wider audience. Failure to acknowledge that some research questions (those about Logo use, for example) are best answered by qualitative methods has limited our understanding of IT’s impact on pupils’ learning. Similarly, the basic differences in epistemological theory, and the consequent differences in research design and analysis, have seldom been addressed by the research community. Suggestions are offered for improving the quality and applicability of future IT research studies.

Keywords: Research; reliability; research methods; theories; research goals; standards

10.2.1 Introduction

This chapter reviews the strengths and weaknesses of different research methods used to measure the impact of IT in education. Drawing on previous research evidence, it reviews research designs and approaches, and the relevance and applicability of quantitative and qualitative methods.
Many previous research studies have relied upon methods designed to measure changes in education due to innovations, and in many cases those methods fitted the research goals poorly. Such studies could have been linked more specifically to the learning activities promoted by specific IT tools, and could have given appropriate weight to the different factors influencing the integration of IT in education. A basic premise of this chapter, and of research into IT in education, is that important questions should be generated and that appropriate, rigorous strategies should be deployed to answer those questions as unambiguously as possible. A major goal of research and evaluation is to advance knowledge.

“Evaluation” is often considered a more pragmatic aspect of educational research than the techniques employed in “classic” research studies. Evaluation has usually been conducted to answer questions of interest to funding agencies, education authorities and other stakeholders who demand answers to the questions “How?”, “Why?” and “Of what influence?” For better or for worse, evaluation results have often shaped policy makers’ judgements about the effectiveness of IT in teaching and learning. We therefore discuss evaluation designs and approaches, and their results, in addition to the often more rigorous research techniques that have been used. Design and evaluation of IT tools are discussed in more detail by Reeves (2008) in this Handbook.

10.2.2 Research Goals

Before considering the effectiveness and relevance of the different methods used to research IT in education, we review the different goals of researchers. These goals have been influenced by technological developments, national priorities, the expectations of educators, the types of IT tools available and so on.
The major research goals have changed with the growth and uptake of IT in education, as discussed by Cox (2008) in this Handbook. The research goals reported on most extensively are listed in Table 2.1, together with the research methods most often used to address them.

Table 2.1: Research goals and relevant research methods

| Research goal | Quantitative methods | Qualitative methods |
|---|---|---|
| To measure the impact of IT on pupils’ learning | Large experimental and control groups; pre- and post-tests of learning gains; meta-analyses of quantitative data; large-scale (online) surveys | Small groups of learners using specific IT tools, either as an intervention or as part of natural class activities; observations, specific task performance, focus groups; interviews of learners; pre- and post-tests |
| Uptake of IT by schools and teachers | Large-scale surveys; questionnaires of many teachers and schools | Questionnaire surveys of teachers in schools; observations of classes |
| Effects of IT on learning strategies and processes | Pre- and post-tests of specific subject processes | Assessment of prescribed tasks; observations of pupil-computer interactions |
| Effects of IT on collaboration, contextual effects, etc. | | Class observations, teacher interviews, questionnaires, documentation |
| Attitudes towards computers in education | Attitude tests of pupils and teachers | Interviews and focus group discussions |
| Effects of IT on pedagogies and practices of the teachers | Large-scale surveys of frequency of use, types of IT use, etc. | Class observations, teacher interviews, questionnaires, documentation |
| Computer use by girls versus boys | Large-scale questionnaire surveys of computer use | Class observations, pupil interviews, questionnaires |
| Contribution of IT to enhancing access and learning for special needs | Large-scale surveys; questionnaires of many teachers and schools | Small groups of learners using specific IT tools, either as an intervention or as part of natural class activities |
| Total operating costs and cost effectiveness | Online surveys of all school staff; large-scale surveys | Teacher interviews, school records, staff questionnaires |

As explained by Cox (2008), in the late 1970s and 1980s the main emphasis of research into IT in education was on investigating the specific impacts which an IT intervention might have on students’ learning outcomes, their motivation and their understanding of specific concepts and skills. The methods used were mostly forms of subject-based assessment that had previously been used to measure the impacts of other educational innovations and interventions. This focus on the impact of IT on learning changed as more factors were identified. The types of interventions depended on the priorities of the educational establishment, the way the curriculum was delivered and the types of IT tools developed at the time. The changing goals shown in Table 2.1 and discussed below arose as a consequence of the growth of IT in education and of the outcomes of previous research studies.

10.2.3 To measure the impact of IT on learning

Since the mid-1960s one of the major goals of research into IT in education has been to examine an intervention (“What was the treatment?”) and its impact (“What was the impact?”). Explaining the variance between what occurred (or did not occur) and the consequent impact should be part of any educational research project. As discussed by Pilkington (2008) and Reeves (2008), the original design for measuring the effects of an intervention was to expose a selected group of pupils to IT tools specifically designed for educational purposes, sometimes in a school setting and sometimes in a laboratory, and to measure improvements in learning through pre- and post-testing. For examples of this type of research see the early volumes of Computers & Education and similar journals.
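As an illustration of how the pre- and post-testing design described above is often analysed, the following is a minimal sketch, assuming each pupil in an IT group and a control group sat the same pre-test and post-test. It computes each pupil’s gain score and then compares the two groups with a standardised mean difference (Cohen’s d with a pooled standard deviation). The chapter does not prescribe this statistic; it is one common choice. The function names and all scores are hypothetical and invented for illustration.

```python
from statistics import mean, stdev

def gain_scores(pre, post):
    """Per-pupil gain: post-test score minus pre-test score (hypothetical helper)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must hold one score per pupil")
    return [after - before for before, after in zip(pre, post)]

def cohens_d(treatment, control):
    """Standardised mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = (((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)) ** 0.5
    return (mean(treatment) - mean(control)) / pooled_sd

# Hypothetical test scores (out of 20) for five pupils per group.
it_pre, it_post = [8, 10, 9, 12, 7], [13, 14, 12, 16, 11]
ctl_pre, ctl_post = [9, 11, 8, 12, 7], [11, 12, 9, 14, 8]

it_gain = gain_scores(it_pre, it_post)
ctl_gain = gain_scores(ctl_pre, ctl_post)
print("mean gain, IT group:", mean(it_gain))
print("mean gain, control:", mean(ctl_gain))
print("effect size d on gains:", round(cohens_d(it_gain, ctl_gain), 2))
```

A sketch like this reports how large the difference between groups is, but not why it arose. That is precisely the limitation of the “treatment and impact” design discussed in this chapter.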
One limitation of this research design was that researchers often assumed the tests could be based on those which measured traditional learning gains. In addition, the intervention was often an extra added to the pupils’ learning programme, so the results could not be generalized to other schools and settings.

10.2.4 Uptake of IT by schools and teachers

Once IT resources became cheap enough to be bought in large numbers, schools purchased networks of computers, clusters of stand-alone machines and computers for administrative purposes. This increase in provision led governments and local districts to want to know how far individual schools and teachers were taking up IT. Many governments commissioned large-scale surveys of their schools to find out how IT resources were being used. A good example of the range of research instruments used for national surveys is given by Pelgrum and Plomp (1993, 2008), who coordinated several international surveys of the uptake of IT by schools, teachers, head-teachers and others. Many large-scale studies since the 1980s have focused on measuring this level of resource use (e.g. Watson, 1993; Harrison et al., 2002).

10.2.5 Effects of IT on learning strategies and processes

Once there were sufficient IT resources in many schools and classrooms, research projects increasingly measured in more detail how specific IT uses affected learning strategies and processes. This required different research methods, as shown in Table 2.1, including audio-visual recordings of pupils’ IT use, analysis of human-computer interactions, and specially devised tasks which pupils had to complete in relation to specific IT uses. As explained later, the most reliable evidence of a positive impact of IT tools on learning strategies and processes came from this research approach, in which pupils were assessed in great depth and detail on specific, identified uses of IT (Cox & Abbott, 2004).
10.2.6 Effects of IT on collaboration and the learning context

As a result of the large number of studies of pupils using computers in different class settings, and researchers’ growing awareness of the importance of teamwork amongst pupils, research in IT in education expanded to measure what effects pupils’ collaboration had on their learning and teamwork skills when using IT, and conversely what effects IT environments had on those collaborative skills. With the rapid growth of online learning, more sophisticated research tools have been developed, including online monitoring of pupils’ computer use and online assessment techniques, and researchers have had to take account of the different knowledge representations which such complex environments provide (Cheng, Lowe & Scaife, 2001).

10.2.7 Attitudes towards computers in education

In spite of a large increase in IT resources in schools and informal educational settings, research into uptake by teachers and pupils still showed that it was disappointingly low. It became apparent that the attitudes of teachers and learners significantly affected their willingness and ability to use IT tools, and thereby the level of benefit that could be achieved. As a consequence, there are now many research studies of attitudinal and personality factors relating to IT in education (Katz & Offir, 1988, 1993; Sakamoto, Zhao & Sakamoto, 1993; Gardner, Dukes & Discenza, 1993; Koutromanos, 2004). In these studies, attitude tests consisting of many questions about fear of computers, liking of technology, liking using computers in school and so on have shown strong links between pupils’ and teachers’ attitudes and their IT use and learning (see Knezek and Christensen, 2008 for more details about this research).
Many researchers have also claimed to measure pupils’ attitudes simply by asking a few questions about whether they liked using computers. This strategy does provide some useful evidence, but it is less robust than using tried and tested attitude tests that try to measure underlying feelings about IT.

10.2.8 Effects of IT on pedagogies and practices of the teachers

Although many countries reported a gradual growth in IT use by teachers and pupils, the research evidence showed that the ways in which IT was used depended heavily on the teachers themselves: what they believed to be important, how they selected IT tools for their curriculum, how they organized lessons and so on. Many researchers therefore set out to measure these factors, mostly through questionnaire surveys, which obtained evidence only about the level and types of IT use in different curriculum subjects (see for example Pilkington, 2008; Pelgrum & Plomp, 2008). To understand the effects of teachers’ beliefs on their practices, however, it was necessary to interview individual teachers, observe their behaviour in lessons and follow their practices over a period of time (Somekh, 1995; Castillo, 2006; Cox & Webb, 2004).

10.2.9 Computer use by girls versus boys

In the early days of IT in schools, many researchers reported that many more boys than girls were making regular use of IT, especially where IT or Computer Science was taught as a subject. This led to a range of studies of girls’ and boys’ access to IT, the types of IT they used and so on. The studies involved both large-scale questionnaire surveys and class observations, pupil interviews and focused questionnaires. The more detailed methods can reveal whether girls use IT differently from boys and how the design and development of IT tools should take these differences into account (Hoyles, 1989).
See also Meelissen (2008) in this Handbook.

10.2.10 Contribution of IT to enhancing access and learning for special needs

One important goal, sometimes overlooked by governments, is to measure the contribution which IT use can make for pupils with special needs (Abbott, 1999, 2002). This is a very complex research area because IT can contribute in very different ways to the learning of both physically and mentally disadvantaged pupils. Some IT devices are hand-held manipulative toys which provide sound feedback when a task is performed correctly; others provide sound output for blind pupils typing email messages on a computer; still others provide safe IT environments for pupils who find it emotionally difficult to relate directly to other people. Measuring the effects of such IT tools on pupils’ learning requires specifically designed measures that take account of the characteristics of each device and of how the device contributes to changes in skills and competencies.

10.2.11 Total operating costs and cost effectiveness

A final goal, which dominates many large-scale nationally funded research projects, is to answer two questions: “Does IT provide a more cost-effective solution for improving teachers’ and pupils’ performance?” and “What are the total operating costs of IT in schools?” It is very difficult to give clear, irrefutable answers to the first question, although some projects have tried to do so by extrapolating financial benefits from improvements in pupils’ learning (e.g. Watson, 1993). National bodies and researchers are developing tools to answer the second question, but these tools have to rely on teacher interviews, the accuracy of school records and questionnaire surveys of all staff (Becta/KPMG, 2006a, 2006b). See also Moyle (2008) in this Handbook.
Estimating the total operating costs of IT systems in educational establishments is notoriously difficult. Account must be taken not only of purchases of IT tools and systems, repairs, upgrades, online subscriptions and so on, but also of the costs of staff training, misuse and inappropriate use of IT tools, while the IT environment and its role in education are constantly changing.

10.2.12 Epistemological Theories and Research Design

Since computers were first introduced into schools in the late 1960s and early 1970s, a fundamental influence on the research designs used to measure the impact of IT in education has been the beliefs of researchers, educators and policy makers about how pupils learn (epistemologies). The Structure of Scientific Revolutions (Kuhn, 1970) contributed to the acceptance of different beliefs about how knowledge is organized and how investigations into the nature of all manner of things can be conducted. Theorists in a wide range of disciplines consequently began to re-examine traditional theories and methods. For example, Reese and Overton (1970) argued that the epistemological foundations of researchers in the field of child development (i.e. their views of how learning occurs and is organized) result in different and mutually exclusive strategies for research design and analysis. Dede (2008) in this Handbook elaborates on how different theoretical perspectives influence the use of IT in teaching and learning.

Briefly stated, behaviourists believe that knowledge is a copy of reality, that learning occurs from the outside in and in incremental bits, and that it is facilitated by repetition and reward. According to this philosophy, instructional design, including design using IT, should be organized in pre-ordained steps. Researchers working in the tradition of Comenius (Kalas & Blaho, 1998), Piaget (Sigel & Hooper, 1968), Bruner (1966) and Papert (1980) believe that pupils do not photocopy reality.
Instead, a mismatch between pupils’ levels of mental maturity and the instruction they receive will result in “deformations” of what has been taught. Their research is also based on the belief that pupils’ interactions, whether physical or mental, are crucial components of learning and in turn result in a partial or total reorganization of knowledge. Intrinsic needs, not reward and repetition, drive learning, and instruction should proceed in “spirals” (Bruner, 1966) or through the presentation of fully organized blocks of material. Serendipity (Duckworth, 1972), capitalising on a spontaneous event that engages pupils’ interest and motivation, is also recognised as a viable foundation for instruction.

These different beliefs about learning have had major influences on the educational design of IT environments and on research into IT in education. Some IT researchers design and conduct experiments based on a behaviourist epistemology. The early work of Bork (1980, 1985), Nishinosono (1989) and Katz and Offir (1993), and many of the studies reviewed in the large-scale meta-analysis by Niemiec and Walberg (1992), for example, report investigations into the impact of IT on specific tasks through the analysis of test performance. Descriptions of such research may include the resources used, including the costs associated with a “treatment” (Moonen, 2001), and the types of IT tools used, but pedagogical methods are often described only very briefly. Pre- and post-tests are used to assess the extent to which changes (in the number of items answered correctly, for example), if any, occurred. Time, that is how much time pupils need to solve specific questions, is often an important variable. The methodology ignores questions about the conditions promoting changes in cognitive structuring because the researchers worked within a “the mind is a black box” framework.
An example of this approach is the early work with pupils conducted by Katz and Offir (1988, 1993). The problem with this type of investigation is that we do not know whether the pupils understood all or part of the material used or participated in the instructional activities, nor do we know whether they understood the directions or had the mental maturity needed to engage fully with the task. We do not know whether the teachers’ instructional practices were geared to all pupils, nor whether the time allowed for instruction was sufficient for all pupils to understand the tasks. Finally, we do not know what cognitive processes pupils used to solve the problems. Did all pupils use the same strategies? Did some pupils understand the problems but make simple mistakes? Could some pupils have solved the problems if more time had been available? Many of these questions could be answered if researchers working within a behaviourist framework adhered to standards for conducting and reporting educational research. So while research conducted in a behaviourist tradition answers important questions, it leaves other questions unanswered, and these are equally important if we are to answer the question “What impact does IT make, and does the impact make a difference in terms of cost, effort and aims?”

Equally important for the reliability of research into IT in education is the need for researchers to understand the theories which might underpin the relationships between factors in the learning environment and the wider context. Schoenfeld (2004) comments on the “sterility” of experimental/quantitative research. Often such research has not been based on any theoretical framework, as was the case with studies of human problem solving, an important topic in mathematics.
But models exist for conducting experimental and quasi-experimental research that allow the examination of complex thinking and of the impacts of competing theories about educational practices, attitudes, personalities and contexts on pupils’ learning (e.g. see Webb & Cox, 2004). Stallings’ (1975) research on the effects of Follow Through, a U.S. federally funded program based on several different theories of learning, was a rich and robust attempt to demonstrate the differential impacts of models based on different theories. An educational research programme comparing the effects of different learning theories and instructional programmes (curricula) involving writing, mathematical problem solving, computing skills, etc. could provide much-needed answers to perplexing questions about the depth and breadth of IT’s impact on a wide spectrum of pupils.

Research conducted within a “constructivist” epistemological perspective examines changes in the way learning takes place, or how knowledge and practice are reorganized in the mind as a result of an intervention. Research in that theoretical tradition asks “What was learned?” and “What does the learning tell us about teaching methods?”, and pupils’ answers are viewed as indices of the effectiveness of the curricular design as an instructional tool and/or of the impact of that design on learners.

A classic example of the problem of failing to understand how learners think is a body of studies examining the impact of Logo on young children (Cox & Marshall, 2007). Many of these studies were designed and conducted within a social reality framework which assumed that children were essentially passive receptors who could be manipulated by external agents and events (Pea & Kurland, 1994). Their research was based on the hypothesis that young children’s ability to plan could be enhanced by instruction in Logo.
But earlier research summarized by Ginsburg and Opper (1979) has shown that young children’s information-processing systems differ from those of adults. Hence a lack of improvement in children’s planning skills after using Logo need not be an indictment of Logo; it may instead reflect developmental issues, which should frame hypotheses and be considered in the design of studies. The work of Bottino and Furinghetti (1994, 1995) on teachers’ understandings and actions, as well as studies of children and classrooms conducted by Cox and Nikolopoulou (1997), Yokochi (1996), Yokochi, Moriya and Kuroda (1997) and Chen and Zhang (2000), provide examples of research based on the theory that changes in learning occur as the result of planned interventions.

Traditional educational research methods rely on interviews with pupils or teachers, analysis of video-taped or audio-taped sessions, or direct observation of behaviour. While inferences about the level of understanding can be made from responses, we do not know whether all pupils share the same understandings. The research strategy depends on the extent to which the instructor (or the software used) presents problems in a way that can be understood without providing all the scaffolding needed to solve them, since providing all the scaffolding results in trivial learning. Carefully designed investigations, in which measurements provide data on how pupils perform and what instructional strategies were employed, especially across a wide range of settings, would provide more definitive answers than are currently available to consumers of research.

If we are working in IT settings, we must be especially conscious of the fact that IT is not the only independent variable (e.g. see Katz & Offir, 1988; Kalas & Blaho, 1998). If we want to know whether using computers improves writing skills, an analysis of pupils’ writing via computer is necessary but not sufficient.
We must also know the structure of the writing curriculum, the ways in which the curriculum is implemented in classrooms, how easily the IT tool can be used, the attitudes and skills that pupils bring to the tasks, and their understandings and intentions as they engage in writing. Interviews with pupils before and after a course of IT-based writing are therefore necessary, but it is also essential for the researcher to describe as many components of the design and delivery as possible, so that the answer to the question “What was learned?” can be tied, directly or indirectly, to pupils’ learning, teaching methods, instructional design and even the IT tool used. Researchers working in a “constructivist” tradition bear no less responsibility for following standards for acceptable and unambiguous research.

A further complication, as elaborated by Pilkington (2008), is that the nature of the IT tool or environment itself will influence the effectiveness of different research designs and measures (Laurillard, 1978, 1992; Sakonidis, 1994; Triona & Klahr, 2005). The growth and range of IT resources has led to an expanding range of representational systems and modes of human-computer interface, which has extended the knowledge and knowledge domains that learners now meet in education (Klahr & Dunbar, 1988; Merrill, 1994; Mellar, Bliss, Boohan, Ogborn & Tompsett, 1994; Klahr & Chen, 2003). Research into pupils’ understanding of the different representations produced by IT environments has shown that learners may understand the metaphors and symbolism presented on a computer screen differently from what was intended, and consequently interpret the subject knowledge in the learning task differently from the way the researchers expected (Mellar et al., 1994; Cheng et al., 2001; Cox & Marshall, 2007).
Research into the way in which new technologies have changed the representation and codifying of knowledge, and how this relates to learners’ mental models, has shown that learners develop new ways of reasoning and hypothesizing about their own knowledge and new knowledge (Bliss, 1994; Cox, 2005). Measuring the effect of IT on pupils’ learning therefore needs to address pupils’ literacy in the IT medium as well as the specific learning outcomes relating to the aims of the teacher or the IT designer. How learners think about the problem or task will be influenced by their familiarity with the IT medium and with the type of IT environment in which they are working. For example, online IT environments for teaching and learning introduce contexts in which the learner is immersed in a virtual reality, which will affect the learner’s cognitive development according to the learner’s ability to move between different states of reality (Sakonidis, 1994; Kim & Shin, 2001). Furthermore, research by Ijsselstein, Freeman and de Ridder (2001) showed that the extent to which the learner moves between these different states of reality changes continuously. Research into the impact of IT on pupils’ learning in different learning contexts therefore needs to measure the transitions between reality states in order to examine the potential impact of IT on learning more deeply.

Theories from many other areas of educational research are relevant to the goals discussed earlier, such as attitudinal and pedagogical theories about teachers’ pedagogical beliefs (Webb & Cox, 2004), sociological theories about educational change and institutional innovation (e.g.
Fullan, 1991), systems theories relating to IT in schools such as activity theory (Engeström, 1999), and psychological theories relating to human-computer interaction and knowledge representation (Cheng et al., 2001). All of these can underpin educational research studies and provide more reliable and robust methods of investigation.

10.2.13 Standards for research

The American Educational Research Association (2006) provides a set of standards for educational research, which are widely used to judge the acceptability of research and evaluation studies. The Standards rest on two principles: (1) “adequate evidence should be provided to justify the results and conclusions” and (2) “the logic of inquiry”, from the choice of topic or problem through all subsequent data analysis and reporting, should be understandable to readers. Problem formulation should be clear and descriptive, and should make a contribution to knowledge. The design of the study should be shaped by the intellectual traditions of the authors. Reports should give clear descriptions of the site characteristics, the number of participants, the roles of the researchers, the methods for obtaining participant consent, data on key actors (teachers, etc.), when the research was conducted, what “treatment” was used, the data collection and analysis procedures, and the types of data collection instruments. The Standards also call for complete descriptions of measure development, of any classification scheme used, and of coding schemes and procedures; a complete description of the statistics (including data on the reliability and validity of instruments); and a statement of the relevance of the measures used and the rationale for each data collection procedure, especially as those procedures relate to the site or participants.
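To make “data on the reliability … of instruments” concrete for the multi-item attitude tests discussed in Section 10.2.7, the following is a minimal sketch of one widely used internal-consistency index, Cronbach’s alpha. The Standards do not name a particular index; choosing alpha here is an assumption. The function name and the Likert responses are hypothetical, invented for illustration.

```python
from statistics import pvariance

def cronbach_alpha(responses):
    """Cronbach's alpha for a multi-item scale (hypothetical helper).

    responses: one list per respondent, holding that respondent's item
    scores in the same item order for everyone.
    """
    k = len(responses[0])  # number of items in the scale
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two items and a complete row per respondent")
    # Variance of each item across respondents. Population variance is used
    # throughout; the n/(n-1) correction would cancel in the ratio anyway.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of respondents' total scale scores.
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical responses of five pupils to four 1-5 Likert attitude items.
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
print("Cronbach's alpha:", round(cronbach_alpha(responses), 2))
```

A high alpha shows only that the items vary together. It says nothing about whether the scale measures the underlying feelings about IT that it is meant to measure, which is why the Standards ask for evidence of validity as well as reliability.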
Analysis and interpretation of the data are regarded as essential, and “disconfirming evidence, counter examples, or viable alternative explanations” should be addressed. The Standards further call for discussion of any significant intended or unintended consequences, and for statements of how claims and interpretations “support, elaborate, or challenge conclusions in earlier scholarship.”

The AERA Standards recognize two types of research design: quantitative and qualitative. Research conducted within a behaviourist perspective will typically use quantitative methods and many surveys, designed to provide evidence, at a point in time, of programme practices, features and outcomes. Examples of such work can be found among the studies analysed by Niemiec and Walberg (1992), many of which rely primarily on statistics. Large-scale international studies, which collect data on many variables in order to make comparisons, follow the standards described above so that their results can be generalised (see Pelgrum & Plomp, 1993, 2008; Malkeen, 2003). Similarly, meta-analyses (see also Liao & Hao, 2008) use methods whose results can also be generalised. They do not always take account, however, of how the results should be interpreted in specific national contexts, of the many local variables in the individual studies forming part of the meta-analysis, or of the assumptions many research studies make about the standardization of knowledge representations, as explained above. In all cases, the statistical analyses, data collection and analysis, and reporting of the results must follow the logic of the question, i.e. is the statistical variance in the evidence caused by the variables being investigated, or have other local and contextual factors caused this variation?
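The question of whether variation in the evidence reflects the variables studied or local and contextual factors has a quantitative counterpart in meta-analysis. The following is a minimal sketch, assuming each study reports an effect size and its sampling variance. It pools the studies with inverse-variance (fixed-effect) weights and computes Cochran’s Q and I² as indicators of between-study heterogeneity. These are standard techniques, not the procedures of Niemiec and Walberg (1992) or Liao and Hao (2008), whose methods are not described here. A random-effects model is a common alternative when heterogeneity is expected. The function name and all numbers are hypothetical.

```python
import math

def fixed_effect_meta(effects, variances):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I^2 (hypothetical helper)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies, each with a sampling variance")
    weights = [1.0 / v for v in variances]  # more precise studies weigh more
    total_w = sum(weights)
    pooled = sum(w * d for w, d in zip(weights, effects)) / total_w
    se = math.sqrt(1.0 / total_w)  # standard error of the pooled effect
    # Cochran's Q: weighted squared deviations of each study from the pooled effect.
    q = sum(w * (d - pooled) ** 2 for w, d in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of between-study variation beyond what sampling error would produce.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, q, i2

# Hypothetical effect sizes and sampling variances from three invented studies.
effects = [0.2, 0.5, 0.8]
variances = [0.04, 0.04, 0.04]
pooled, se, q, i2 = fixed_effect_meta(effects, variances)
print(f"pooled d = {pooled:.2f} (SE {se:.2f}), Q = {q:.2f}, I^2 = {i2:.0%}")
```

A large I² indicates that the studies disagree more than chance would allow. That may point to the kind of local and contextual factors the chapter warns about, but the statistic cannot identify which factors are responsible.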
For example, some of the earlier large-scale studies which showed a statistically significant positive effect of IT on pupils’ learning, e.g. the Impact1 (Watson, 1993) and Impact2 (Harrison et al., 2002) studies, were only able to determine the impact of specific IT tools by conducting mini-studies and qualitative analyses of IT use in specific educational settings. In many cases it has not been possible to identify the actual types of IT use that contributed to these learning gains. Therefore, although such research may conclude that IT has had a positive effect on pupils’ learning, large-scale studies of this kind cannot tell whether the effect was due, for example, to using simulations, to problem-solving software or to accessing additional relevant information over the Internet. The very complex nature of IT makes it more difficult to research than more narrowly defined educational innovations. So, as Fendler (2006) observes, and as the discussion above suggests, “More recent literature on research theory is indicating the emergence of scientific approaches to studies that are not focused on generalisability” and “one of the effects of an overweening belief in the generalisability of research findings has been to narrow the scope of intellectual and scientific inquiry” (p. 447).

To address such questions, many investigations conducted from a constructivist perspective use qualitative methods: observations of local education authorities, schools, classrooms or individual pupils, interviews, etc. The Standards for qualitative research are no less rigorous than those for quantitative studies. In fact, the plethora and complexity of qualitative data may place greater burdens on researchers if the Standards are followed. For example, “… it is important that researchers fully characterize the processes used so others can trace their logic of inquiry” (AERA, 2006).
Researchers must look for alternative explanations of their data, seek confirming and disconfirming evidence, provide direct evidence supporting their claims, and ask participants to corroborate claims, especially where participants do not share the researchers’ evidence and conclusions. Many qualitative studies in the IT field, however, fail to follow the Standards established for qualitative research. For example, there is evidence that teachers’ ideas, beliefs and values may influence their uses of IT (Fang, 1996; Moseley et al., 1999; Webb & Cox, 2004). Yet research papers that claim to measure teachers’ pedagogical practices through interviews and observation of their classroom IT use sometimes fail to measure the teachers’ beliefs, even though those beliefs might be the main influence on pupils’ IT-related educational experiences, and therefore a more significant variable than the range of IT tools that might contribute to pupils’ knowledge. Published reports of the impact of IT on pupils’ learning frequently do not even specify which IT tools were used. The research conducted by Noss and Hoyles (1992), however, provides a useful model for conducting large-scale studies of how and why children learn. A literature review of IT and attainment by Cox and Abbott (2004) showed that the most robust evidence of IT use enhancing pupils’ learning came from studies that focused on specific uses of IT. Where studies investigated the effects of IT on attainment without clearly identifying the range and type of IT use, the results were unclear, making it difficult to establish any repeatable impact of a particular type of IT use on pupils’ learning.
Also missing from much previous research are methodologically robust studies that are based on large and varied samples, are conducted over several years, and provide unambiguous answers to questions such as: “What impact have specific IT uses had on pupils?” “Does the way IT is implemented have a major/minor impact on pupils’ learning?” “Does the impact affect the surface or deep structure of pupils’ thinking and acting?” This gap raises an issue for educational research. Although research into specific IT uses provides less ambiguous results, as explained above, the evidence discussed above also shows that adhering to standards would improve the quality of research into IT in education, and to date these standards have been addressed more rigorously in large-scale quantitative studies, as explained in Chapters 4 and 6 of this Section, than in the large number of small-scale qualitative studies.
10.2.14 Formative and Summative Studies
Formative and summative studies are typically conducted at a meta-level, that is, as an analysis of what occurred in several classrooms, schools or education authorities. Each type of research plays a major role in answering questions about the impact of IT effectively, and the formative stage is no less important than the summative stage. In fact, without formative studies (“What were the initial conditions?” and “What critical factors delayed, impeded or deformed the intended plan?”), data collected in a summative study will miss key factors in the transformation of programmes of instruction that resulted in a project’s success or contributed to its failure.
Furthermore, formative studies play a very important part in the development and evaluation of IT tools: trials with pupils in schools provide feedback on the effectiveness of such tools for pupils’ learning, on the design of the IT environment, and on how it can be used in a range of curriculum settings (Reeves, 2008). The formative phase often involves a set of pilot studies to refine instruments and ensure validity and reliability (Cox, 1989; Squires & McDougall, 1994; Provus, 1971; Fendler, 2006). Collecting many different types of baseline data (type of school organization, roles of staff, teacher credentials, types of equipment, etc.) is essential so that changes over time can be assessed. Key steps in the formative process include refining methods, ensuring the right questions are asked, providing information on problematic or promising situations, analysing variables that may later be used to explain the variance in outcomes, and choosing appropriate measures, or designing instruments where no suitable measures exist, so as to yield unambiguous data (Walberg, 1974). Evaluations designed within a behaviourist framework and intended to assess impacts on pupils may not reflect the types of questions and measures needed to answer questions raised in the “constructivist” tradition. Resources (Wolf, 1991; Ridgway & Passey, 1993; Ridgway, 1998) are available to help evaluators choose or design rich and robust measures that provide insights into pupils’ thinking. Researchers often use their pilot tests to refine and edit tests, interview questions and survey questions but do not report the results, even though these are an important part of the formative stage of the research.
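The pilot phase described above is where instrument reliability can first be checked and reported. As a minimal sketch, assuming a questionnaire whose items are scored numerically, the Python function below computes Cronbach's alpha, one common index of internal consistency; the choice of alpha is ours for illustration, not a measure named by the authors cited above, and the function name `cronbach_alpha` and the sample scores are hypothetical. Agreement between observers coding the same classroom data would call for a different statistic, such as Cohen's kappa.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Estimate Cronbach's alpha for a set of questionnaire items.

    Illustrative sketch only; the function name and data are hypothetical.
    responses -- one list per respondent, each holding that respondent's
                 numeric scores on the same k items, in the same order.
    """
    if not responses:
        raise ValueError("no responses supplied")
    k = len(responses[0])
    if k < 2 or any(len(r) != k for r in responses):
        raise ValueError("need at least two items and complete responses")

    # Variance of each item's scores across respondents.
    item_vars = [pvariance([r[i] for r in responses]) for i in range(k)]
    # Variance of the respondents' total scores.
    total_var = pvariance([sum(r) for r in responses])
    if total_var == 0:
        raise ValueError("total scores do not vary, so alpha is undefined")

    # alpha = k/(k-1) * (1 - sum of item variances / total-score variance).
    # Population variances are used throughout; the n vs n-1 choice
    # cancels in the ratio.
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)
```

For instance, three hypothetical pilot respondents scoring two items as (1, 2), (2, 3) and (3, 3) give an alpha of 6/7, about 0.86. Values near 1 indicate that the items vary together; a threshold of around 0.7 is often quoted as acceptable for research instruments, though it is a convention rather than a rule.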
One example is a large study by the United Nations Educational, Scientific and Cultural Organization (UNESCO, 2005) of the indicators of IT application in secondary education in South-Eastern European countries, which devised a large questionnaire to gather evidence about the resourcing and use of IT in schools. The evidence was derived from 68 indicators, such as the total number of schools with computer classrooms and whether IT was taught as a separate subject or across the curriculum, with the purpose of developing recommendations to stimulate national educational policies and strategies and their implementation. However, there was no explanation of how the final range of questions was selected, or of whether any preliminary research was conducted at a formative stage to determine which types of indicators would provide the most reliable comparative data. IT in education journals contain many other examples in which the formative stages of research go unreported. In other cases, however, formative data are provided and serve as “guideposts” for evaluators working with experimental curriculum projects. For example, the evaluation of the Comprehensive School Mathematics Program (CSMP) included a formative phase for each level of curriculum development and teacher professional development activities (Marshall & Herbert, 1983). The formative period allowed evaluators to construct and re-tool measures of impact that were biased towards neither the CSMP nor the “traditional” mathematics curricula, and allowed for rigorous reliability and validity checks over the course of several years. Formative evaluation reports that describe only a few variables fail to address issues such as the impact of the planned programme on staff, pupils and school/community culture, as in the case of the evaluations of the first few years of the Star Schools programme (Star Schools, 2005).
The evaluators of this programme failed to provide data that could inform the wider IT community about problems and prospects. To the degree that evaluation reports document each stage of the programme change process and describe their measures, the reliability and validity of those measures, and the results, they broaden the knowledge base of the IT community. A major reason to conduct formative evaluation is to avoid what Charters and Jones (1973) call “the risk of appraising non-events” when reviewing the impact of a planned educational change. If insufficient data have been collected during the formative stages, evaluators run the risk of attributing changes to planned activities or implementation steps that did not actually occur during the course of the project. Ridgway (1997) comments on the same problem when he discusses the differences between the intended, implemented and attained curricula. The summative phase of evaluation is designed to collect and analyse data at the conclusion of an intervention. Several early primers offering guidelines and suggestions for conducting summative evaluation are worth a second look (Wittrock & Wiley, 1970; Guba & Lincoln, 1981). Since then, IT researchers have conducted studies on the impact of a variety of IT-based approaches to instruction. For example, Azinian (2001) describes changes in two schools’ cultures resulting from IT use, and Midoro (2001) describes collaborative teacher development conducted online. Both studies are abbreviated descriptions of actual conditions, events, ongoing practices and comprehensive change, but they highlight key factors that other researchers may reflect on and compare with their own experiences. Lee (2001) reports that in building capacity for IT use in the developing countries of Asia, human capacity development is critical, a facet of IT-based change that is often neglected in the change process.
The IT community more often pays attention to hardware and to the tools and procedures of IT than to the questions of who can or should use IT and how its use can best be introduced and utilised. More extensive research reports (e.g. Somekh, 1995; Scrimshaw, 2004; Watson, 1993; Collis et al., 1996) elaborate to a greater or lesser degree on conditions, events and outcomes in efforts to transform classrooms and schools through IT. Many of these are discussed elsewhere in this Handbook and show a growing understanding of the many key factors that need to be taken into account when measuring the impact of IT in education. Questions such as “What changes occurred?” and the less frequently asked “What contributed to the change?” therefore require more complex and in-depth measurement than the earlier, superficial reporting of the IT tools used and of changes in test performance before and after the intervention. More troubling is the criticism Berman and McLaughlin (1974) made in their discussion of “change” studies during the period of educational ferment in the 1950s, 1960s and 1970s. Few, if any, theories of implementation and institutionalisation of change processes guide the design and execution of summative studies. Of the many studies of school, local education authority or national plans for IT-based change, few start from questions based on one or more theories of implementation, or on the mix of components referred to above: conditions, critical elements and facilitating factors. The result is a melange of descriptions of what occurred in one or more places at one or more times, in situations that may or may not mirror conditions in other places. More recently, action research (Ziegler, 2001; Somekh, 1995) has gained currency as an evaluation strategy for documenting changes in practice within and across schools.
Originally envisioned as a way both to collect information about a social system and, simultaneously, to work to change that system, action research is viewed as a way for educators to study what they do and how they can effect change, and to look for the impact on themselves and their pupils (Calhoun, 2002). Action research often ignores many of the input/output and cognitive change methods employed in summative studies. Instead, it documents the activities associated with implementation, usually from multiple points of view and with multiple foci on “reality”, and those data form the basis for discussing IT-based initiatives within or across classrooms and schools. While action research studies play an important role in describing conditions in a classroom, school or local education authority, few attempts have been made to analyse the data in order to explain the systematic conditions that produce variation in implementation and impact. Perhaps the greatest challenge for action researchers is fulfilling the Standard of “searching for confirming or disconfirming evidence, and (trying) out alternative interpretations” (AERA, 2006, p. 38). Most summative evaluations, whatever evaluation strategy is used, fail to provide a complete and coherent description of the practices, measures, changes and interpretations of results that would generate a fuller understanding of the challenges posed by the introduction of IT into schools. Passey (1999) has written an exposition of the importance of systematic studies and has analysed the differences in context between two schools and the resulting differences in implementation outcomes. Future action research studies would do well to consider the issues and models provided by Passey.
10.2.15 Critical Factors
Two hallmarks of rigorous research into IT in education are attention to reliability (“How likely are we to obtain the same result over several iterations?”) and to validity (“How important/universal/long-term is the phenomenon?” and “Does the subject or another researcher/observer interpret the questions in the same way?”). Unfortunately, few educational research studies of the impact of IT report on the reliability and validity of their instruments, and even fewer attain the high standards set by the Follow Through and CSMP evaluations. Meta-analyses attempt to use systematic selection and analysis procedures and can address reliability, but it is much more difficult for them to reach a robust standard of validity, because all the factors discussed in this chapter are constantly changing as IT continues to emerge.
10.2.16 Conclusions
Compared with disciplines such as physics and medicine, educational research is still in a great state of flux, and research into IT in education most of all. As we have shown in this chapter, the research methods in common use have many limitations, and many previous research studies lack an underpinning theory. The expanding goals of research into IT in education, and the pressure from politicians, IT companies and educators alike for answers to questions about the impact of IT in education, highlight the need for more systematic and rigorous research across the different educational settings in which pupils use IT. To achieve greater reliability of research findings that can inform important decisions about the role and place of IT in education, we need the following:
- Realistic goals that take account of the complexity of IT environments and the multiplicity of factors that influence their impact;
- More experimental and quasi-experimental research that adheres to AERA and other Standards, i.e. that provides complete data on what was done;
- More appropriate measures with convincing reliability and validity, especially with larger and more varied samples;
- Qualitative research that also adheres more closely to the AERA Standards, encompasses larger and more representative samples, and provides data on measure development, reliability and validity;
- Systematic use of appropriate theories and models to underpin research investigations;
- More information on the intended, implemented and evaluated curricula;
- More information on alternative explanations (lack of a “ceiling” in measures, for example);
- Recognition of the effects of IT environments on knowledge representation, and therefore on the design of research instruments to capture such knowledge and understanding;
- More funding targeted at long-term studies in which time and money can be allocated to the proper development of measures that adequately assess what IT-based programmes intend to accomplish;
- More teams of researchers/evaluators with different theoretical/practical foci conducting research in the mode of Stallings (1975);
- And finally, more information on the “goodness of fit” of various uses of tools, etc., within and across countries.
Although many reliable research studies already comply with many of these recommendations, international standards that both researchers and funders recognize and follow could help us achieve much greater commonality of research reliability and outcomes across the international field of IT in education, something that has so far been elusive.
References
Abbott, C. (1999). Web publishing by young people. In J. Sefton-Green (Ed.), Young people, creativity and the new technologies (pp. 111-121). London: Routledge.
Abbott, C. (Ed.) (2002). Special educational needs and the internet: Issues in inclusive education. London: Routledge-Falmer.
American Educational Research Association (2006). Standards for reporting on empirical social science research in AERA publications. Educational Researcher, 35, 6, 33-40.
Azinian, H. (2001). Dissemination of information and communication technology and changes in school culture. In H. Taylor & P. Hogenbirk (Eds.), Information and Communication Technologies in education: The school of the future (pp. 35-41). Boston: Kluwer.
Becta (2006a). Thin Client Technology in Schools: Case Study Analysis. Coventry: Becta. Retrieved August 10, 2007 from http://partners.becta.org.uk/index.php?section=rh&catcode=_re_rp_ap_03&rid=11414&client=
Becta (2006b). Thin Client Technology in Schools: Literature and Project Review. Coventry: Becta. Retrieved August 10, 2007 from http://partners.becta.org.uk/index.php?section=rh&catcode=_re_rp_ap_03&rid=11414&client=
Berman, P. & McLaughlin, M. W. (1974). Federal programs supporting educational change (Vol. 1): A model of educational change. Santa Monica, CA: Rand Corporation.
Bliss, J. (1994). Causality and common sense reasoning. In H. Mellar, J. Bliss, R. Boohan, J. Ogborn & C. Tompsett (Eds.), Learning with artificial worlds: Computer based modelling in the curriculum (pp. 117-127). London: The Falmer Press.
Bork, A. (1980). Interactive learning. In R. Taylor (Ed.), The computer in school: Tutor, tool, tutee (pp. 53-66). New York: Teachers College Press.
Bork, A. (1985). Personal computers for education. New York: Harper & Row.
Bottino, R. M. & Furinghetti, F. (1994). Teaching mathematics and using computers: Links between teacher’s beliefs in two different domains. In J. P. Ponte & J. F. Matos (Eds.), Proceedings of PME XVIII, Lisbon, 2, 112-119.
Bottino, R. M. & Furinghetti, F. (1995). Teacher training, problems in mathematics teaching and the use of software tools. In D. Watson & D. Tinsley (Eds.), Integrating Information Technology into education (pp. 267-270). London: Chapman & Hall.
Bruner, J. S. (1966). Toward a theory of instruction. Cambridge, MA: The Belknap Press.
Calhoun, E. (2002). Action research for school. Educational Leadership, 59, 6, 18-24.
Castillo, N. (2006). The implementation of Information and Communication Technology (ICT): An investigation into the level of use and integration of ICT by secondary school teachers in Chile. Unpublished doctoral thesis, King's College London, University of London.
Charters, W. W. & Jones, J. (1973). On the risk of appraising non-events in program evaluation. Educational Researcher, 2, 11, 5-7.
Chen, Q. & Zhang, J. (2000). Using ICT to support constructive learning. In D. Watson & T. Downes (Eds.), Communications and networking in education: Learning in a networked society (pp. 231-241). Boston: Kluwer Academic Publishers.
Cheng, P. C-H., Lowe, R. K. & Scaife, M. (2001). Cognitive science approaches to diagrammatic representations. Artificial Intelligence Review, 15, 1/2, 79-94.
Collis, B. A., Knezek, G. A., Lai, K-L., Myashita, K. T., Pelgrum, W. J., Plomp, T., & Sakamoto, T. (1996). Children and computers in school. Mahwah, NJ: Lawrence Erlbaum.
Cox, M. J. (1989). The impact of evaluation through classroom trials on the design and development of educational software. Education and Computing, 5, 1/2, 35-41.
Cox, M. J. (2005). Educational conflict: The problems in institutionalizing new technologies in education. In G. Kouzelis, M. Pournari, M. Stoeppler & V. Tselfes (Eds.), Knowledge in the new technologies (pp. 139-165). Frankfurt: Peter Lang.
Cox, M. (2008). Researching IT in education. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Cox, M. J. & Abbott, C. (2004). ICT and attainment: A review of the research literature. Coventry and London: British Educational Communications and Technology Agency / Department for Education and Skills.
Cox, M. J. & Nikolopoulou, K. (1997). What information handling skills are promoted by the use of data analysis software? Education and Information Technologies, 2, 105-120.
Cox, M. J. & Marshall, G. (2007). Effects of ICT: Do we know what we should know? Education and Information Technologies, 12, 2, 59-70.
Cox, M. J. & Webb, M. E. (2004). ICT and pedagogy: A review of the research literature. Coventry and London: British Educational Communications and Technology Agency / Department for Education and Skills.
Dede, C. (2008). Theoretical perspectives influencing the use of Information Technology in teaching and learning. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Duckworth, E. (1972). The having of wonderful ideas. Harvard Educational Review, 42, 217-231.
Engestrom, Y. (1999). Activity theory and individual and social transformation. In Y. Engestrom, R. Miettinen, & R.-L. Punamaki (Eds.), Perspectives on activity theory (pp. 19-38). Cambridge, UK: Cambridge University Press.
Fang, Z. (1996). A review of research on teacher beliefs and practices. Educational Research, 38, 1, 47-65.
Fendler, L. (2006). Why generalisability is not generalisable. Journal of Philosophy of Education, 40, 4, 438-449.
Fullan, M. G. (1991). The new meaning of educational change. London: Cassell.
Gardner, D. G., Dukes, R. L. & Discenza, R. (1993). Computer use, self-confidence, and attitudes: A causal analysis. Computers in Human Behavior, 9, 427-440.
Ginsburg, H. & Opper, S. (1979). Piaget’s theory of intellectual development: An introduction. Englewood Cliffs, NJ: Prentice-Hall.
Guba, E. G. & Lincoln, Y. S. (1981). Effective evaluation: Improving the usefulness of evaluation results through responsive and naturalistic approaches. San Francisco, CA: Jossey-Bass.
Harrison, C., Comber, C., Fisher, T., Haw, K., Lewin, C., Linzer, E., McFarlane, A., Mavers, D., Scrimshaw, P., Somekh, B., & Watling, R. (2002). ImpaCT2: The impact of information and communication technologies on pupil learning and attainment. Coventry: British Educational Communications and Technology Agency.
Hoyles, C. (Ed.) (1989). Girls and computers. Bedford Way Papers. London: Institute of Education, University of London.
IJsselsteijn, W. A., Freeman, J., & de Ridder, H. (2001). Presence: Where are we? Cyberpsychology and Behavior, 4, 2, 179-182.
Kalas, I. & Blaho, A. (1998). Young students and future teachers as passengers on the Logo engine. In D. Tinsley & D. C. Johnson (Eds.), Information and Communication Technologies in school mathematics (pp. 41-52). London: Chapman & Hall.
Katz, Y. J. & Offir, B. (1988). Computer oriented attitudes as a function of age in an elementary school population. In F. Louis & E. D. Tagg (Eds.), Computers in education (pp. 371-373). Amsterdam: Elsevier Science Publishers.
Katz, Y. J. & Offir, B. (1993). Achievement level, affective attributes and computer-related attitudes: Factors that contribute to more efficient end-use. In A. Knierzinger & M. Moser (Eds.), Informatics and changes in learning (pp. 13-15). Linz, Austria: IST Press.
Kim, G. & Shin, W. (2001). Tuning the level of presence (LOP). Paper presented at the Fourth International Workshop on Presence, Temple, PA.
Klahr, D. & Chen, Z. (2003). Overcoming the positive-capture strategy in young children: Learning about indeterminacy. Child Development, 74, 5, 1256-1277.
Klahr, D. & Dunbar, K. (1988). Dual space search during scientific reasoning. Cognitive Science, 12, 1, 1-48.
Knezek, G. & Christensen, R. (2008). The importance of Information Technology attitudes and competencies in primary and secondary education. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Koutromanos, G. (2004). The effects of head teachers, head officers and school counsellors on the uptake of Information Technology in Greek schools. Unpublished doctoral dissertation, Department of Education and Professional Studies, University of London.
Kuhn, T. (1970). The structure of scientific revolutions. Chicago, IL: University of Chicago Press.
Laurillard, D. M. (1978). Evaluation of student learning in CAL. Computers and Education, 2, 259-263.
Laurillard, D. (1992). Phenomenographic research and the design of diagnostic strategies for adaptive tutoring systems. In M. Jones & P. Winne (Eds.), Adaptive learning environments: Foundations and frontiers (pp. 233-248). Berlin: Springer-Verlag.
Lee, J. (2001). Education for technology readiness: Prospects for developing countries. Journal of Human Development, 2, 1, 115-151.
Liao, Y-K. C. & Hao, Y. (2008). Large scale studies and quantitative methods. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Malkeen, A. (2003). What can policy makers do to encourage the use of Information and Communications Technology? Evidence from the Irish school system. Technology, Pedagogy and Education, 12, 2, 277-293.
Marshall, G. & Herbert, M. (1983). Comprehensive school mathematics project. Denver, CO: McRel.
Meelissen, M. (2008). Computer attitudes and competencies among primary and secondary school students. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Mellar, H., Bliss, J., Boohan, R., Ogborn, J., & Tompsett, C. (Eds.) (1994). Learning with artificial worlds: Computer based modelling in the curriculum. London: The Falmer Press.
Merrill, M. D. (1994). Instructional design theory. Englewood Cliffs, NJ: Educational Technology Publications.
Midoro, V. (2001). How teachers and teacher training are changing. In H. Taylor & P. Hogenbirk (Eds.), Information and Communication Technologies in education: The school of the future (pp. 83-94). Boston: Kluwer.
Moonen, J. (2001). Institutional perspectives for online learning: Policy and return on investment. In H. Taylor & P. Hogenbirk (Eds.), Information and Communication Technologies in education: The school of the future (pp. 193-210). Boston: Kluwer.
Moseley, D., Higgins, S., Bramald, R., Harman, F., Miller, J., Mroz, M., Harrison, T., Newton, D., Thompson, I., Williamson, J., Halligan, J., Bramald, S., Newton, L., Tymms, P., Henderson, B. & Stout, J. (1999). Ways forward with ICT: Effective pedagogy using Information and Communications Technology for literacy and numeracy in primary schools. Newcastle: University of Newcastle-upon-Tyne.
Moyle, K. (2008). Total cost of ownership & total value of ownership. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Niemiec, R. P. & Walberg, H. J. (1992). The effects of computers on learning. International Journal of Educational Research, 17, 1, 99-107.
Nishinosono, H. (1989). The use of new technology in education: Evaluating the experience of Japan and other Asian countries. In X. Greffe & H. Nishinosono (Eds.), New educational technologies. Paris: United Nations Educational, Scientific and Cultural Organization.
Noss, R. & Hoyles, C. (1992). Looking back and looking forward. In C. Hoyles & R. Noss (Eds.), Learning Logo and mathematics (pp. 431-468). Cambridge, MA: MIT Press.
Papert, S. (1980). Mindstorms: Children, computers and powerful ideas. New York: Basic Books.
Passey, D. (1999). Strategic evaluation of the impacts of learning of educational technologies: Exploring some of the issues for evaluators and future evaluation audiences. Education and Information Technologies, 4, 223-250.
Pea, R. D. & Kurland, D. M. (1994). Logo programming and the development of planning skills. Technical Report No. 11. New York: Bank Street College of Education.
Pelgrum, W. & Plomp, T. (1993). The use of computers in education in 18 countries. Studies in Educational Evaluation, 19, 101-125.
Pelgrum, W. J. & Plomp, T. (2008). Methods for large scale international studies. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Pilkington, R. (2008). Measuring the impact of IT on students’ learning. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Provus, M. (1971). Discrepancy evaluation for educational program improvement and assessment. Berkeley, CA: McCutchan.
Reese, H. W. & Overton, W. F. (1970). Models of development and theories of development. In L. R. Goulet & P. B. Baltes (Eds.), Lifespan developmental psychology: Research and theory (pp. 65-145). New York: Academic Press.
Reeves, T. C. (2008). Evaluation of the design and development of IT tools in education. In J. Voogt & G. Knezek (Eds.), International handbook of information technology in primary and secondary education (pp. xxx-xxx). New York: Springer.
Ridgway, J. (1997). Vygotsky, informatics capability, and professional development. In D. Passey & B. Samways (Eds.), Information Technology: Supporting change through teacher education (pp. 3-19). London: Chapman & Hall.
Ridgway, J. (1998). From barrier to lever: Revising roles for assessment in mathematics education. National Institute for Science Education Brief, 8.
Ridgway, J. & Passey, D. (1993). An international view of mathematics assessment: Through a glass, darkly. In M. Niss (Ed.), Investigations into assessment in mathematics education: An ICMI study (pp. 57-72). Dordrecht: Kluwer.
Sakamoto, T., Zhao, L. J., & Sakamoto, A. (1993). Psychological IMPACT of computers on children. The ITEC Project: Information Technology in Education of Children. Final report of Phase 1. Paris: United Nations Educational, Scientific and Cultural Organisation.
Sakonidis, H. (1994). Representations and representation systems. In H. Mellar, J. Bliss, R. Boohan, J. Ogborn & C. Tompsett (Eds.), Learning with artificial worlds: Computer based modelling in the curriculum (pp. 39-46). London: Falmer Press.
Schoenfeld, A. (2004). Instructional research and practice. Draft report for the MacArthur Research Network on Teaching and Learning.
Scrimshaw, P. (2004). Enabling teachers to make successful use of ICT. Coventry: British Educational Communications and Technology Agency.
Sigel, I. & Hooper, F. (Eds.) (1968). Logical thinking in children: Research based on Piaget’s theory. New York: Holt, Rinehart and Winston.
Somekh, B. (1995). The contribution of action research to development in social endeavours: A position paper on action research methodology. British Educational Research Journal, 21, 3, 339-355.
Squires, D. & McDougall, A. (1994). Choosing and using educational software. London: Falmer Press.
Stallings, J. (1975). Implementation and child effects of teaching practices in Follow Through classrooms. Monographs of the Society for Research in Child Development, 40, 7/8, 1-133.
Star Schools (2005). Office of Innovation and Improvement. Washington, DC: United States Department of Education. Retrieved August 10, 2007 from http://www.ed.gov/programs/starschools/eval.html
Triona, L. & Klahr, D. (2005). A new framework for understanding how young children create external representations for puzzles and problems. In E. Teubal, J. Dockrell, & L. Tolchinsky (Eds.), Notational knowledge: Developmental and historical perspectives (pp. 159-178). Rotterdam: Sense Publishers.
United Nations Educational, Scientific and Cultural Organization (UNESCO) (2005). Indicators of information and communication technologies (ICT) application in secondary education of South-East European countries. Moscow: United Nations Educational, Scientific and Cultural Organization, Institute for Information Technologies in Education.
Walberg, H. J. (Ed.) (1974). Evaluating educational performance: A sourcebook of methods, instruments and examples. Berkeley, CA: McCutchan.
Watson, D. M. (Ed.) (1993). The ImpacT report: An evaluation of the impact of information technology on children’s achievement in primary and secondary schools. London: King’s College London.
Webb, M. E. & Cox, M. J. (2004). A review of pedagogy related to ICT. Technology, Pedagogy and Education, 13, 3, 235-286.
Wittrock, M. C. & Wiley, D. E. (Eds.) (1970). The evaluation of instruction: Issues and problems. New York: Holt, Rinehart and Winston.
Wolf, K. (1991). Teaching portfolios. San Francisco: Far West Regional Laboratory for Educational Research and Development.
Yokochi, K. (Ed.) (1996). Report of the first stage experiment by CCV education system. Mitsubishi Electric Company.
Yokochi, K., Moriya, S. & Kuroda, Y. (1997). Experiment of DL using ISDN with 128kbps. New Education and Computer, 6, 58-83.
Ziegler, M. (2001). Improving practice through action research. Adult Learning, 12, 1, 3-4.
