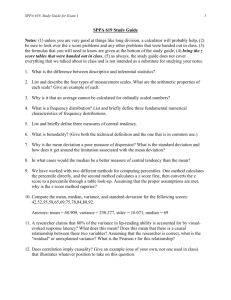

Section 1 & 2 - People Pages
