Probability Distributions for Reliability

advertisement

Probability Distributions for Reliability

1

Module PE.PAS.U10.3

Probability Distributions for Reliability

Primary Author:

Email Address:

Co-author:

Email Address:

Last Update:

Reviews:

Prerequisite Competencies:

James D. McCalley, Iowa State University

jdm@iastate.edu

None

None

7/12/02

None

1. Define random variables and distinguish between discrete

(module U6) and continuous (module U7) random

variables.

2. Relate probability density functions and distributions for

discrete and continuous random variables (module U7).

3. Compute first and second moments (module U9).

Module Objectives:

1. Distinguish between different distributions used in

reliability in terms of their attributes and applications.

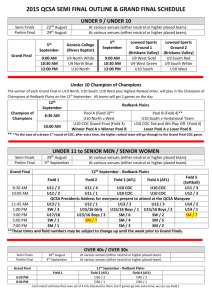

U10.1

Introduction

I

n this module, we review some basic types of probability distributions that are important within the

domain of reliability analysis. This includes two discrete distributions: the binomial and the Poisson; and

three continuous distributions: the exponential, Weibull, normal, and lognormal.

U10.2

The Binomial Distribution

C

onsider a random trial having only two possible outcomes. Such a trial is referred to as a Bernoulli

trial. We refer to one of the outcomes as “success” and the other as “failure.” The probability of

success is denoted as p, and the probability of failure is denoted as q, so that p+q=1.0. An experiment is a

series of n Bernoulli trials, with the outcome of each trial independent from the outcomes of all other trials

before it. Denote the random variable X as the total number of successes out of the n trials, where r is used

to denote the values that the random variable may take, and therefore the number of failures is n-r. Since r

must be nonnegative, the sample space is S={0, 1, 2, …, n}. The probability of getting r successes is p r, and

the probability of getting n-r failures is (1-p)(n-r). So what is the probability that we will observe X=r

consecutive successes followed by n-r consecutive failures? It is pr(1-p)(n-r). If we now want the probability

of exactly X=r successes out of n trials, then p r(1-p)(n-r) must be multiplied by the number of ways these r

successes can occur, which is the number of combinations of n distinguishable elements taken r at a time,

n!

. Therefore, the probability distribution function for the random variable X is

r!n r !

n!

Pr[ X r , n, p]

p r (1 p) ( nr )

(U10.1)

r!(n r )!

given by C r

n

PX r

Probability Distributions for Reliability

2

Example U10.1

An electric power system is supplied from a total of 150 generating units. Calculate the probability of

having (a) 2, (b) 5, and (c) 10 units being out of service simultaneously, given that the outage rate of each

unit is 6%, 3%, and 1%.

(a) The probabilities of having r=2 units out of service, for the various outage rates, is:

150!

0.06 2 (0.94) (148) 0.00424

2!(148)!

150!

Pr[ X 2,150,0.03]

0.032 (0.97) (148) 0.11084

2!(148)!

150!

Pr[ X 2,150,0.01]

0.012 (0.99) (148) 0.25250

2!(148)!

Pr[ X 2,150,0.06]

Note that the probability of having 2 units out is highest for the lowest outage rate of 1%..

(b) The probabilities of having r=5 units out of service, for the various outage rates, is:

150!

0.06 5 (0.94) (145) 0.05839

5!(145)!

150!

Pr[ X 5,150,0.03]

0.035 (0.97) (145) 0.17359

5!(145)!

150!

Pr[ X 5,150,0.01]

0.015 (0.99) (145) 0.01378

5!(145)!

Pr[ X 5,150,0.06]

Note that the probability of having 5 units out is highest for the intermediate outage rate of 3%.

(c) The probabilities of having r=10 units out of service, for the various outage rates, is:

150!

0.0610 (0.94) (140) 0.12230

10!(140)!

150!

Pr[ X 10,150,0.03]

0.0310 (0.97) (140) 0.00971

10!(140)!

150!

Pr[ X 10,150,0.01]

0.0110 (0.99) (140) 0.00000

10!(140)!

Pr[ X 10,150,0.06]

Note that the probability of having 10 units out is highest for the highest outage rate of 6%.

Comparing the illustrations (a), (b), and (c), we observe the intuitively pleasing feature that the probability

of having a small number of units out is much greater when the outage rate is low, and the probability of

having a large number of units out is much greater when the outage rate is high.

One should note several requirements on situations amenable to use of the binomial distribution. They are:

There must be a fixed number of components

Each component must be characterized by exactly two states: success and failure

All components must have the same probability of success and the same probability of failure

All components must behave independently

Probability Distributions for Reliability

3

Finally, the first and second moments of a random variable X having a binomial distribution is given by

E ( X ) np

(U10.2)

2 ( X ) npq

(U10.3)

U10.3

The Poisson Distribution

Consider a random variable X characterized by the binomial distribution, where, as before, n is the number

of trials, and p is the probability of success. But now let’s consider the special case that the probability, p,

of a success in a single trial approaches zero as the number of trials, n, approaches infinity during a certain

time period t, such that the product n*p is constant. In addition, let be the number of successes per unit

time. Then we see that n*p=t. Noting that p=t/n, we have from (U10.1)

n!

n! t t

Pr[ X r , n, p]

p r (1 p) ( n r )

1

r!(n r )!

r!(n r )! n

n

r

PX r

( nr )

(U10.4)

Now there are two simplifications we can make at this point.

First, when n is very large, then n>>r, and (n-r)n such that

(1-t/n)(n-r) = (1-t/n)n e-t

(U10.5)

Second, we have that

n!

n(n 1)(n 2)...( n r 1)(n r )(n r 1)...( 2)(1) n(n 1)(n 2)...( n r 1) n r

r!(n r )!

r!(n r )( n r 1)...( 2)(1)

r!

r!

(U10.6)

Substitution of (U10.5) and (U10.6) into (U10.4) yields:

n r t

(t ) r t

Pr[ X r , n, p ] e t

e

r! n

r!

r

PX r

(U10.7)

The parameter is called the intensity of occurrence of Poisson events. More loosely, it is called the

transition rate, and if the random variable X represents the number of failures, then is the failure rate. The

units of are transitions per unit time (or failures per unit time). It provides the mean number of failures

expected per unit of time.

The previous development indicates that the Poisson distribution is an approximation to the binomial

distribution under the given conditions of large n and constant np. However, one should note that the

Poisson distribution is more than just a useful approximation; in fact, it is an important distribution in its

own right, in that it effectively characterizes a process through time in which we count occurrences of a

certain type of random event. The fact that we count only event occurrences, but not event nonoccurrences, distinguishes the Poisson distribution from the binomial distribution. Again, let X represent

the random variable corresponding to the number of occurrences. We will now denote its distribution as a

function of time, PX=r(t), and, for convenience, drop the subscripted notation “X=” so that Pr(t) is “the

probability that the random variable X equals r during the time interval (0,t).” In addition, a Poisson

counting process must satisfy the following requirements:

The numbers of events counted in non-overlapping intervals of time are independent.

The intensity is constant.

Consider that the interval of time (0,t) is divided into n very small intervals of increment t such that more

than one occurrence is impossible in t. Then the expected number of occurrences in (0,t) is given by

E(X)=n*Pr(occurrence in t)

But if is the mean rate of occurrence, then E(X)=t. Thus,

t=n*Pr(occurrence in t) Pr(occurrence in t)= t/n

Probability Distributions for Reliability

4

However, t=t/n so that

Pr(occurrence in t)= t

Thus, the probability of having exactly one occurrence in (t, t+dt) is given by dt, and the probability of

having zero occurrences in (t, t+dt) is 1-dt.

We now express the probability of having zero occurrences in (0,t), denoted by P 0(t). Our approach is to let

dt represent a very small amount of time such that it is not possible to have more than one transition, and

then express P0(t+dt). In expressing P0(t+dt), we note that 0 events may occur during (0, t+dt) in only one

way:

0 events occur in (0,t) and 0 events occur in (t, t+dt)

Recalling that events in nonoverlapping intervals are independent, the probability P 0(t+dt) may be

expressed as a product of the probabilities of the component events:

P0 (t dt ) P0 (t ) * (1 dt )

P0 (t dt ) P0 (t )

P0 (t )

dt

(U10.8)

In the limit as dt goes to zero, the numerator goes to dPX(t)(0) so that

dP0 (t )

P0 (t )

dt

(U10.9)

Integrating, we have:

ln P0 (t ) t C

(U10.10)

If at t=0, we assume that no event has occurred (i.e., the device is operable), then P 0(0)=1, which forces

C=0, so that

P0 (t ) e t

(U10.11)

Equation (U10.11) provides the probability of having zero occurrences during (0,t), and 1-P0(t) provides the

probability of having more than one occurrence in (0,t).

But what if we want the probability of having r failures during (0,t)? This is denoted as P r(t). Our approach

is to, again, let dt represent a very small amount of time such that it is not possible to have more than one

transition, and then express Pr(t+dt). In expressing Pr(t+dt), we note that r events may occur during (0, t+dt)

in only two different ways:

r events occur in (0,t) and 0 events occur in (t, t+dt), or

r-1 events occur in (0,t) and 1 event occurs in (t, t+dt)

Recalling that events in nonoverlapping intervals are independent, the probabilities of the above two ways

may be expressed as a product of the probabilities of the component events:

Pr (t dt ) Pr (t ) * (1 dt ) Pr 1 (t ) * dt

Pr (t dt ) Pr (t ) Pr (t ) * dt Pr 1 (t ) * dt Pr (t ) dt Pr (t ) Pr 1 (t )

Manipulating,

Pr (t dt ) Pr (t )

dP (t )

Pr 1 (t ) Pr (t ) r

Pr 1 (t ) Pr (t )

dt

dt

(U10.12)

Combining (U10.9) and (U10.12), we have

dP0 (t )

P0 (t )

dt

dPr (t )

Pr 1 (t ) Pr (t )

dt

(U10.13)

Solving (U10.13) for r=1, with initial conditions P 0(0)=P1(0)=0 results in

P1 (t ) te t

And similar effort for r=2, …, results in the general solution of

(U10.14)

Probability Distributions for Reliability

Pr (t )

(t ) r t

e

r!

5

(U10.15)

which is the same as (U10.7).

It is of interest to recall that e-x = 1 – x + x2/2! - x3/3! + …, and for x very small, e-x 1 – x. Thus, when t

is very small (either because is very small or t is very small, or both), then e -t 1 – t, and (U10.15)

becomes

Pr (t )

(t ) r

(1 t )

r!

(U10.16)

Thus, for r=0 we have that

P0 (t ) 1 t

from which we see that the probability of having 1 or more occurrences in (0,t) is

Pr 0 (t ) 1 P0 (t ) 1 (1 t ) t

(U10.17)

From (U10.16), the case of r=1 is

P1 (t ) t (1 t ) t (t ) 2 t

(U10.18)

Comparison of (U10.17) with (U10.18) shows that

Pr 0 (t ) P1 (t )

implying that, when t is small, the probability of having more than one occurrence in (0,t) is the same as

the probability of having exactly one occurrence in (0,t). The conclusion is that, when t is small, the

probability of having 2 or more occurrences in (0,t) is zero.

Example U10.2

A power plant has a constant forced outage rate of once every two years. What is the probability that over a

period of 3 years, (a) no outage will occur (b) at least three outages will occur?

(a)

0.5 occurrences/year P0 (t ) e t P0 (3) e ( 0.5)(3) e 1.5 0.2231

(t ) 2 t

Prr 2 (t ) 1 P0 (t ) P1 (t ) P2 (t ) 1 e t tet

e

2

1.5

(1.5) 2 1.5

Prr 2 (3) 1 P0 (3) P1 (3) P2 (3) 1 e

1.5e 1.5

e 0.1913

2

(b)

Example U10.3

Draw the (a) 1 year and (b) 10 year probability distributions (for r=0,…,20) for a power plant of Example

U10.2 having constant forced outage rate of 0.5/year.

(a) The distribution at 1 year is shown below.

Probability Distributions for Reliability

6

Distribution at 1 year

Probability

0.8

0.6

0.4

Series1

0.2

0

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

0

Number of outages

(b) The distribution at 10 years is shown below. Compare the probability of having 0 outages in 1 year to

the probability of having 0 outages in 10 years. Compare the probability of having 4 outages in 1 year to

the probability of having 4 outages in 10 years.

Distribution at 10 years

Probability

0.2

0.15

0.1

Series1

0.05

0

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

0

Number of outages

The mean and variance of a random variable X characterized by a Poisson distribution

Pr (t )

(t ) r t

e is:

r!

E ( X ) t

(U10.19)

2 ( X ) t

(U10.20)

We mentioned that the Poisson distribution is distinguished from the binomial distribution in that the

random variable characterized by the Poisson distribution only counts event occurrences but not event nonoccurrences. When the event is failure, as it so often is in power systems, the implication is that the Poisson

distribution does not model repairs. This implication is not of much concern when computing the

probability of having zero or one failure. But when we use the Poisson to obtain the probability of two or

more failures, one must be aware of implicitly assuming that the repair time is zero, i.e., we are able to

instantly replace any failed component. This assumption is quite reasonable if the average repair time is

quite short when compared to the average time to failure. If this assumption is not valid, then the process

should be modeled as a Markov process [1].

Finally, one should note that the counting process characterized by the Poisson distribution is a random

process, which means that it is a sequence of random events occurring through time. Specifically, we may

call our random process a Poisson process. The fact that we require constant intensity means that it is a

Probability Distributions for Reliability

7

homogeneous Poisson Process (HPP). Allowing variable intensity would result in a non-homogeneous

Poisson process (NPP).

Example U10.4

This example is obtained from [2] and allows comparison of the binomial and Poisson distributions. A

large static var system is protected by digital devices having self-checking capability to determine whether

they are functioning properly. Three such devices are specified for the protective system under study and

two different implementation schemes have been suggested.

The first scheme places the three devices in parallel, with any one of the three then being able to

identify a fault and take appropriate action.

The second scheme places only one device in service, with the other two devices switched off on the

theory that the non-energized device will have a lower failure rate. One of the standby devices will be

switched into service immediately if the active device fails its self-check test.

Which arrangement has the higher probability of failure? The probability of failure of one device in one

time period is 0.1.

The first scheme is one for which each device may be considered to represent a Bernoulli trial having the

two distinct outcomes of success, with probability p, or failure, with probability 1-p. Here, 1-p=0.1 so

p=0.9. The probability of system success occurs if we have 3, 2, or 1 component success. Thus, we have:

Pr(system success | scheme 1) Pr[ X 3,3,0.9] Pr[ X 2,3,0.9] Pr[ X 1,3,0.9]

3!

3!

3!

0.9 3 (0.1) ( 0)

0.9 2 (0.1) (1)

0.91 (0.1) ( 2) 0.729 .243 .027 0.999

3!(0)!

2!(1)!

1!(2)!

For the second scheme, the binomial distribution does not apply because the probability of success depends

on the failure of the preceding unit. Yet we may represent this as a Poisson process where we may have up

to two failures before failing the system. But what is the failure rate ? To get this, we note that the

probability of no failure of one device in one time period (which is 0.9) is the same as P 0(1), which is given

by e-. Thus, e-=0.9 =0.10536. So:

Pr(system success | scheme 2) P0 (1) P1 (1) P2 (1) e e

( ) 2 e

2

0.9 0.0948 0.005 0.999824

It is easier to compare the failure probabilities, computed as 1-0.999=0.00100 for scheme 1 and 10.999824=0.00018 for scheme 2. Hence, scheme 1, with parallel operation, is about 5 times as likely to fail

as scheme 2, with redundant backups.

U10.4

The Exponential Distribution

Consider again example U10.2 and U10.3, where we used the Poisson distribution to characterize the

probability distribution of a random variable X(t) representing the number of failures occurring in the time

interval (0,t). Let’s denote [3] by T 1 the time of the first failure, T 2, the time between the first failure and

the second failure, …, and T n as the time between the failure n-1 and failure n. For example, if T 1=3 years

and T2=4 years, then the first event would occur at year 3 and the second event at year 7. What is the

distribution of any one of the T i, i=1,…,n?

Note first that the event {T 1>t} takes place if and only if no events of the Poisson process occur in the

interval (0,t), and therefore:

Probability Distributions for Reliability

Pr(T1 t ) P0 (t ) e t

8

(U10.21)

The equation (U10.2) is a probability distribution function for the random variable T 1. It appears to be the

same distribution as that of the Poisson distribution for the case r=0 per (U10.11). However, there is a

subtle difference. In the case of (U10.11), the random variable is X with values it can take denoted by r;

here, the random variable is T1, with values it can take denoted by t. Whereas the Poisson distribution

characterizes a discrete random variable - the number of occurrences in (0,t), (U10.21) characterizes a

continuous random variable - the time interval until the first occurrence.

Equation (U10.21) is called an exponential distribution. It has some important properties that can be

observed by considering the probability distribution for the random variable T 2. This would be Pr(T 2>t).

However, in considering T2, it is implicit that the first failure has occurred (and, since the process is

Poisson, the repair time is instantaneous). We denote the time at which this first failure occurs as s.

Therefore,

Pr(T2 t ) Pr(T2 t | T1 s)

(U10.22)

The event {T2>t} takes place if and only if no events of the Poisson process occur in the interval (s, s+t).

This means that

Pr(T2 t ) Pr(0 events in (s, s t ) | T1 s)

(U10.23)

Yet, if the process is indeed Poisson, then it is required that the numbers of events counted in nonoverlapping intervals of time are independent. Therefore, what happens after s is independent from what

happens before s. Thus, the event {0 events in (s,s+t)} is independent of the event {T 1=s}, and (U10.23)

becomes

Pr(T2 t ) Pr(0 events in (s, s t ))

(U10.24)

The Poisson process also requires that the intensity be constant. Thus, (U10.24) becomes

Pr(T2 t ) e t

(U10.25)

Repeating the same arguments for T 3,…,Tn results in observation of a fundamental quality associated with

exponentially distributed random variables: the process from any time onwards is independent of all that

has previously occurred (by independent non-overlapping time intervals), and also the process has the same

distribution as the original process (because of constant ). In other words, the process is said to have no

memory. If an occurrence is a failure, then we would say that the reliability is constant for equal operating

periods throughout the useful life of the component, i.e., the probability of failure depends only on the

exposure time [1]. Thus, an old component that still works is just as good as a new one! The exponential

distribution is the only continuous distribution with this property.

Equation (U10.21) gives the probability of the event not occurring in the time period (0,t). Thus, the

probability that the event does occur in the time period (0,t) is

Pr(T1 t ) 1 e t

(U10.26)

Equation (U10.26) is clearly a cumulative distribution function. Then the probability density function,

denoted by fT(t), is given by its derivative:

f T (t ) e t

(U10.27)

Equation (U10.27) is the probability density function for an exponentially distributed random variable; its

graph is illustrated in Figure U10.1.

Probability Distributions for Reliability

9

Exponential density function

0.6

density

0.5

0.4

0.3

Series1

0.2

0.1

20

18

16

14

12

10

8

6

4

2

0

0

tim e

Fig. U10.1: Exponential density function for =1

Example U10.5

An electronic switch has a constant failure rate of 0.0001 failures per hour. What is the probability that it

will fail within the year (8760 hours)?

Pr(T1 8760) 1 e ( 0.0001)(8760) 1 0.4164 0.58355

This is not a very reliable switch.

The mean and variance of a random variable X characterized by an exponential distribution with density

function

E (T )

f T (t ) e t is:

1

(U10.28)

2 (T )

1

(U10.29)

2

and we see that the expectation and the standard deviation of the exponential distribution are equal.

U10.5

The Weibull Distribution

The Weibull distribution is a continuous distribution heavily used for fatigue and breaking strength of

materials and also for failure-time distributions and is therefore of particular interest within the field of

reliability evaluation. A random variable T is characterized by the Weibull distribution if it obeys the

probability density function:

Probability Distributions for Reliability

10

t B 1

t

exp , t , , 0

f T (t )

0, otherwise

(U10.30)

The parameter is called the shape parameter because it determines the shape of the Weibull probability

density function. The parameter is called the scale parameter because it determines the scale. The scale

parameter has the same units as the random variable, but the shape parameter is dimensionless. Figure

U10.2 illustrates that by appropriately varying the shape and scale parameters, the Weibull probability

density function can take on many different kinds of shapes; it is this fact that makes it so useful in that it

can be used to characterize many kinds of data.

Weibull Distribution for alpha=10

density

0.2

0.15

Beta=0.5

0.1

Beta=1

0.05

Beta=4

19

17

15

13

11

9

7

5

3

1

0

Tim e

Fig. U10.2

One notes from Fig. U10.2 that, when =1, the Weibull distribution reduces to the exponential distribution

with =1/, so the exponential distribution may be considered to be a special case of the Weibull. For

values of >1, the Weibull becomes bell-shaped with some skew.

U10.6

The Rayleigh distribution

The Rayleigh distribution is a special case of the Weibull where, =2.

U10.7

The Normal Distribution

The most well known distribution is the normal distribution, also known as the Gaussian distribution. A

normally distributed random variable has a probability density function of:

f T (t )

(t ) 2

, - t ,- , 0

exp

2

2

2

1

(U10.29)

Here, and are parameters of the distribution and represent the mean (first moment about the origin) and

standard deviation (second moment about the mean), respectively. The integral of (U10.29) cannot be

evaluated in closed form,

Example U10.6

Probability Distributions for Reliability

11

A manufacturer states that an electronic switch has mean life of 10000 hours and a standard deviation of

1000 hours. Assume that the lifetimes are normally distributed, and calculate the probability that a given

light bulb will last less than 8000 hours.

8000

Pr( T 8000)

1

f T (t )dt

1000 2

8000

(t 10000) 2

dt

exp

2

2 *1000

One problem here that we face is that the above integral cannot be evaluated in closed form, so we must use

numerical integration. Alternatively, we may use tables that have been prepared based on such numerical

integration. However, it would be impractical to provide a separate table for every conceivable value of

and [4]. To avoid this difficulty, we transform the normal density function to the so-called standard

normal density function. The standard normal density has =0 and =1. The transformation occurs by

defining a new random variable Z such that it is transformed from the old random variable T through

Z

T

(U10.31)

Thus, in this example, we need to find the transformed values of t=- and t=8000 with =10000 and

=1000:

z

10000

1000

z

8000 10000

2

1000

Thus, the evaluation of the desired probability is given by

2

1

Pr( Z 2) f Z ( z )dt

1 2

( z 0) 2

exp

2 *12 dt

2

From Table 3 of [5], we have that this probability is given as 0.9772-0.5=0.4722.

There are two important facts worth mentioning in regards to the normal distribution.

If T is normally distributed with parameters and , then Y=aT+b is also normally distributed, but its

parameters are a+b and a.

If X1, …, Xn are identical independently distributed (IID) random variables, then the distribution of

their sum is normal as n , whatever the original distribution. This is a simple statement of the

central limit theorem and is proved in many textbooks, among which are [5] and [6].

For purposes of modeling lifetime distributions, the normal distribution has the disadvantage that it allows

negative time. This disadvantage can be made negligible by selecting the mean and variance appropriately.

The other disadvantage of using the normal distribution for lifetime distributions stems from the fact that

most lifetime distributions tend to be asymmetric with a long right-hand tail. In contrast, the normal

distribution is symmetric.

U10.8

The Lognormal Distribution

The lognormal distribution is reported to be used in modeling failure times for some semiconductor

devices, such as LEDs, diodes, and lasers [7], and also component repair times [8]. It has also been used in

modeling the growth of fatigue cracks. Its probability density function is given by:

f T (t )

(ln t ) 2

, 0 t ,- , 0

exp

2

t 2

2

1

(U10.32)

Probability Distributions for Reliability

12

This distribution is an exponential transformation of the normal distribution [9]. If lifetimes have a

lognormal distribution, then the log of the lifetime has a normal distribution. Note that the parameters µ and

σ2 of the lognormal distribution are the mean and variance of the log lifetime distribution. One important

attribute of the lognormal distribution is that it allows the random variable to assume only positive values,

an important attribute in modeling lifetime distributions.

U10.9

The Gamma distribution

The gamma distribution has a probability density function given by

f (t )

(t ) 1 e t

,

( )

t 0

where the function Γ is given by

( ) x 1e x dx

0

A special case of Γ(α) arises when α is a positive integer [9]. Then Γ(α)=(α-1)!. In all cases, Γ(α+1)=α Γ(α).

The parameter α is the shape parameter, and it controls the behavior of the hazard function in the following

way: 0<α<1 gives a decreasing hazard rate and α>1 gives an increasing rate [9]. The case α=1 is the

exponential distribution. The parameter λ is the scale parameter.

The gamma distribution has a particular application as the model arising for the sum of identical

independent distributed (IID) exponential variables, but its practical usefulness is inhibited by the fact that

its survivor and hazard function cannot in general be expressed in closed form.

U10.10

The Gumbel distribution

U10.11

The Pareto distribution

Problems

Problem 1

Probability Distributions for Reliability

13

Problem 2

References

[1]

[2]

[3]

[4]

[5]

[6]

[7]

[8]

[9]

“Power System Reliability Evaluation,” IEEE Tutorial Course 82 EHO 195-8-PWR, 1982, IEEE.

P. Anderson, “Power System Protection,” 1996 unpublished review copy.

S. Ross, “Introduction to Probability Models,” Academic Press, New York, 1972.

M. Modarres, M. Kaminskiy, and V. Krivsov, “Reliability Engineering and Risk Analysis, A Practical

Guide,” Marcel Dekker, Inc. New York, 1999.

L. Bain and M. Engelhardt, “Introduction to Probability and Mathematical Statistics,” Duxbury Press,

Belmont, California, 1992.

A. Papoulis, “Probability, Random Variables, and Stochastic Processes,” McGraw-Hill, New York,

1984.

W. Blischke and D. Murthy, “Reliability: Modeling, Prediction, and Optimization,” John Wiley, New

York, 2000.

R. Billinton and R. Allan, “Reliability Evaluation of Engineering Systems,” Plenum Press, New York,

1992.

L. Wolstenholme, “Reliability Modeling, A Statistical Approach,” Chapman and Hall, New York,

1999.