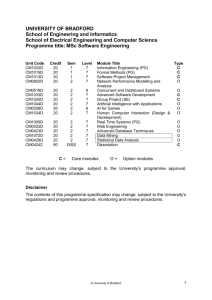

AVA 2006, Vision in Perception and Cognition, Bradford


AVA Annual Meeting 2006
Vision in Perception and Cognition
Tuesday, 4th April 2006
University of Bradford, John Stanley Bell Lecture Theatre

Abstracts for oral presentations (in session order):

Session 1

Colour and shape of faces and their influence on attributions
David Perrett (School of Psychology, University of St Andrews, St. Mary's College, St. Andrews, KY16 9JP. e-mail: dp@st-andrews.ac.uk)
We are highly sensitive to the shape and colour cues to faces. These visual attributes control our aesthetic judgments and influence psychological attributions about face owners (do we trust them, are they healthy?). I will discuss data and theory concerning the basis of such visual influences, focusing on the underlying biology of face owners and perceivers. Co-authors: Miriam Law Smith, Ben Jones, Lisa deBruine, Jamie Lawson, Michael Stirrat, Vinet Coetzee, Peter Henzi.

Contrasting the disruptive effects of view changes in shape discrimination to the disruptive effects of shape changes in view discrimination
Rebecca Lawson1 and Heinrich H. Bülthoff2 (1School of Psychology, University of Liverpool, Liverpool, L69 7ZA; 2Max Planck Institut für biologische Kybernetik, Spemannstraße 38, 72076 Tübingen, Germany. e-mail: r.lawson@liverpool.ac.uk)
A series of three sequential picture-picture matching studies compared the effects of a view change on our ability to detect a shape change (Experiments 1 and 2) and the effects of a shape change on our ability to detect a view change (Experiment 3). Relative to no-change conditions, both view changes (30° or 150° depth rotations) and shape changes (small or large object morphing) increased both reaction times and error rates on match and mismatch trials in each study. However, shape changes disrupted matching performance more than view changes for the shape-change detection task ("did the first and second pictures show the same shape?").
Conversely, view changes were more disruptive than shape changes when the task was to detect view changes ("did the first and second pictures show an object from the same view?"). Participants could thus often discriminate between the effects of shape changes and view changes. The influence on performance of task-irrelevant manipulations (view changes in the first two studies; shape changes in the final study) does not support Stankiewicz's (2002; Journal of Experimental Psychology: Human Perception & Performance, 28, 913-932) claim that information about viewpoint and about shape can be estimated independently by human observers. However, the greater effect of variation in the task-relevant than the task-irrelevant dimension indicates that observers were moderately successful at disregarding irrelevant changes.

Detection of convexity and concavity: no evidence for a concavity advantage
Marco Bertamini and Amy O'Neill (School of Psychology, University of Liverpool, Bedford Street South, Liverpool L69 3BX. e-mail: M.Bertamini@liverpool.ac.uk)
We compared sensitivity to shape changes, and in particular detection of convexity and concavity changes. The available evidence in the literature is inconclusive. We report three experiments that used a temporal 2AFC change-detection task in which observers viewed two polygonal contours. They were either identical, or one vertex had been removed (or added). The polygonal stimuli were presented as random dot stereograms to ensure unambiguous figure-ground segmentation. We report evidence that any change of sign of curvature along the contour is salient, leading to high sensitivity to change (d') (experiment 1, also in Bertamini & Farrant, 2005, Acta Psych, 120, 35-54). This in itself is not surprising.
However, in experiments 2 and 3 we failed to find any evidence that changes to concave vertices, changes within concave regions, or the introduction of a new concave vertex are more salient than, respectively, a change to a convex vertex, a change within a convex region, or the introduction of a new convex vertex. We conclude that performance in detection for shape changes is good when there is a change of sign, which in turn may signal a change in part structure. Performance was also higher for changes nearer the centre of the object. This factor can explain why a concavity advantage has been previously reported (cf. Cohen, Barenholtz, Singh & Feldman, 2005, Journal of Vision, 5, 313-321).

Modelling the spatial tuning of the Hermann grid illusion
Michael J. Cox, Jose B. Ares-Gomez, Ian E. Pacey, Jim M. Gilchrist, Ganesh T. Mahalingam (Vision Science Research Group, School of Life Sciences, University of Bradford, Bradford. e-mail: M.Cox@bradford.ac.uk)
Purpose: To test the ability of a physiologically plausible model of the retinal ganglion cell (RGC) receptive field (RF) to predict the spatial tuning properties of the Hermann Grid Illusion (HGI). Methods: The spatial tuning of a single intersection HGI was measured psychophysically in normal subjects using a nulling technique at different vertical cross line luminances. We used a model based upon a standard RGC RF, balanced to produce zero response under uniform illumination, to predict the response of the model cell to the equivalent range of stimulus conditions when placed in the 'street' of the stimulus cross, or its intersection. We determined the equivalent of the nulling luminance required to balance these responses and minimise the HGI. Results: The model and the psychophysics demonstrated broad spatial tuning with similarly shaped tuning profiles and similar strengths of illusion.
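The balanced receptive-field model described in the Methods above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the RF is a difference of two unit-volume Gaussians (so its response to uniform illumination is zero), that the cross has two infinitely long limbs of equal width and equal luminance, and that the 'street' position is far along one limb. The function names and the values of `sigma_c` and `sigma_s` are hypothetical and are not taken from the abstract.

```python
import math

def strip_mass(width, sigma, offset=0.0):
    """Fraction of a unit-area 1D Gaussian (s.d. sigma, centred at offset)
    that falls inside a strip of the given width centred on zero."""
    hi = (width / 2 - offset) / (sigma * math.sqrt(2))
    lo = (-width / 2 - offset) / (sigma * math.sqrt(2))
    return 0.5 * (math.erf(hi) - math.erf(lo))

def cross_mass(width, sigma, x0, y0):
    """Fraction of a 2D Gaussian centred at (x0, y0) that is covered by a
    cross made of one vertical and one horizontal limb (inclusion-exclusion)."""
    px = strip_mass(width, sigma, x0)  # vertical limb
    py = strip_mass(width, sigma, y0)  # horizontal limb
    return px + py - px * py

def dog_response(width, x0, y0, sigma_c=1.0, sigma_s=3.0,
                 l_cross=1.0, l_back=0.0):
    """Centre-minus-surround response of the balanced model cell.
    sigma_c and sigma_s are assumed values, in arbitrary spatial units."""
    centre = cross_mass(width, sigma_c, x0, y0)
    surround = cross_mass(width, sigma_s, x0, y0)
    return (l_cross - l_back) * (centre - surround)

if __name__ == "__main__":
    far = 1e3  # far enough along a limb to ignore the other limb
    for width in (1.0, 2.0, 4.0, 8.0):
        street = dog_response(width, 0.0, far)
        intersection = dog_response(width, 0.0, 0.0)
        print(width, round(street, 4), round(intersection, 4))
```

Under these assumptions, a lower response at the intersection than in the street corresponds to the darker appearance of the intersection; a nulling luminance could then be found by adjusting the luminance at the intersection until the two responses match.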
The limb width at the peak of the model tuning function was around twice the RGC RF centre size, whilst psychophysically the peak of the spatial tuning function was at least twice the size of RF centres expected from physiological evidence. In the model and psychophysically the strength of the illusion varied with the luminance of the vertical line of the cross when expressed as a Michelson contrast, but not when expressed as a luminance. Conclusions: The shape, width, height and position of the spatial tuning function of the HGI can be well modelled by a single-size RGC RF based model. The broad tuning of these functions does not appear to require a broad range of different cell sizes either in the retina or later in the visual pathway.

Fused video assessment using scanpaths
Timothy D. Dixon1, Stavri G. Nikolov2, Jian Li1, John Lewis2, Eduardo Fernandez Canga2, Jan M. Noyes1, Tom Troscianko1, Dave R. Bull2, C. Nishan Canagarajah2 (1Department of Experimental Psychology, 8 Woodland Rd, Bristol, BS8 1TN; 2Centre for Communications Research, Merchant Venturer’s Build, Woodland Road, Bristol BS8 1UB. e-mail: Timothy.Dixon@bris.ac.uk)
There are many methods of combining two or more images of differing modalities, such as infrared and visible light radiation. Recent literature has addressed the problem of how to assess fused images using objective human methods (Dixon et al, 2005, Percep 34, Supp, 142; accepted, ACM Trans Applied Percep). As these have consistently shown that participants perform differently in objective and subjective assessment tasks, it is essential that a reliable assessment method is used. The current study extends this work, considering scanpaths of individuals involved in a tracking task.
Participants were shown two video sequences of a human walking down a path amongst foliage, each containing the original visible light and infrared input images, as well as fused ‘averaged’, discrete wavelet transformed (DWT), and dual-tree complex wavelet transformed (DT-CWT) videos. Each participant was shown the sequences on three separate occasions, and was asked to track visually the figure, as well as performing a key-press reaction task, whilst an eye-tracker recorded fixation data. The two sequences were similar in content, although the second had lower luminance levels. Accuracy scores were obtained by calculating the amount of time participants looked at the target as a ratio of the total time they looked at the screen. Results showed that the average and DWT methods resulted in better performance on the high luminance sequence, whilst the visible light was poorer than all others on the low luminance sequence. Secondary task results were also analysed, although only small variations were found in these data. The current findings suggest that this new method of fused image assessment could have wide-reaching further potential for differentiating between fusion methods.

Session 2

Aversion to contemporary art
Dominic Fernandez, Arnold J. Wilkins and Debbie Ayles (Department of Psychology, University of Essex, Colchester CO4 3SQ. e-mail: dfernaa@essex.ac.uk)
Discomfort when viewing square-wave luminance gratings can be predicted from their spatial frequency (Wilkins et al., 1984, Brain, 107, 989-1017). Gratings with spatial frequencies close to 3 cycles per degree tend to evoke the greatest discomfort and the largest number of visual illusions/distortions. We asked people to rate the discomfort they experienced when viewing images of non-representational art in order to examine the effect of the luminance energy at different spatial frequencies in more complex images. To do this we calculated the radial Fourier amplitude spectrum of image luminance.
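A radial Fourier amplitude spectrum of the kind mentioned above can be sketched as follows. This is an illustrative version only, not the authors' procedure: it assumes a small square luminance image given as a list of lists, uses a naive discrete Fourier transform from the standard library (in practice a fast transform such as `numpy.fft.fft2` would normally be used), and reports frequency in cycles per image. Converting to cycles per degree would need the viewing geometry, which is not given here. The function names are hypothetical.

```python
import cmath
import math

def dft1(seq):
    """Naive 1D discrete Fourier transform (adequate for small inputs)."""
    n = len(seq)
    return [sum(seq[k] * cmath.exp(-2j * math.pi * u * k / n) for k in range(n))
            for u in range(n)]

def dft2(image):
    """2D DFT of a square image, computed along rows and then columns."""
    n = len(image)
    rows = [dft1(row) for row in image]
    return [dft1([rows[y][u] for y in range(n)]) for u in range(n)]

def radial_amplitude_spectrum(image):
    """Mean Fourier amplitude at each integer radial frequency
    (cycles per image), from 0 up to half the image size."""
    n = len(image)
    spectrum = dft2(image)
    sums = [0.0] * (n // 2 + 1)
    counts = [0] * (n // 2 + 1)
    for u in range(n):
        fu = u if u <= n // 2 else u - n  # signed horizontal frequency
        for v in range(n):
            fv = v if v <= n // 2 else v - n  # signed vertical frequency
            r = int(round(math.hypot(fu, fv)))
            if r <= n // 2:
                sums[r] += abs(spectrum[u][v])
                counts[r] += 1
    return [s / c for s, c in zip(sums, counts)]

if __name__ == "__main__":
    n = 16
    # Test pattern: a sinusoidal grating with 3 cycles across the image.
    grating = [[0.5 + 0.5 * math.cos(2 * math.pi * 3 * x / n) for x in range(n)]
               for _ in range(n)]
    for radius, amplitude in enumerate(radial_amplitude_spectrum(grating)):
        print(radius, round(amplitude, 3))
```

Plotting the logarithm of amplitude against the logarithm of radial frequency gives the kind of regression that can be compared across image sets.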
Images rated as more comfortable showed a regression of luminance amplitude against spatial frequency similar to that found for natural images (Field, D.J., 1987, Journal of the Optical Society of America A, 4, 2379-2394), whereas more uncomfortable images had a greater proportion of energy at spatial frequencies close to 3 cycles per degree. Luminance amplitude at this spatial frequency explained between 7% and 51% of the variance in the discomfort reported when viewing art. The more uncomfortable images were rated as having lower artistic merit. The Fourier amplitude spectra of photographs of urban and rural scenes, which had been classified as pleasant or unpleasant, were also examined and exhibited a similar pattern, with the energy at 3 cycles per degree accounting for 6% of the variance. This suggests that predictions of subjective discomfort when viewing complex and real world images can be based on the distribution of luminance energy across spatial frequencies.

Dependencies between gradient directions at multiple locations are determined by the power spectra of the image
Alex J. Nasrallah and Lewis D. Griffin (Department of Computer Science, UCL, Malet Place, London, WC1E 6BT. e-mail: a.nasrallah@cs.ucl.ac.uk)
A well-known statistical regularity of natural image ensembles is that their average power spectra exhibit a power-law dependency on the modulus of spatial frequency. This result does not describe the correlation between gradient directions but instead describes the correlation between pairs of pixel intensities. In this study, we have used information-theoretic measures to compute the amount of dependency that exists between two gradient directions at separate locations; we classify this result as 2-point statistics. This is then extended to measure the dependencies of gradient directions at three separate locations, which we classify as 3-point statistics.
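One way to make the 2-point measure concrete is the sketch below, which estimates the mutual information between quantised gradient directions at two pixels separated by a fixed offset. The abstract does not say which information-theoretic measure, gradient operator or number of direction bins was used, so mutual information, central differences and `n_bins=8` are all assumptions made for illustration, and the function names are hypothetical.

```python
import math
from collections import Counter

def gradient_direction_bins(image, n_bins=8):
    """Quantised gradient direction at each interior pixel, using central
    differences. Pixels with zero gradient are skipped (direction undefined)."""
    height, width = len(image), len(image[0])
    bins = {}
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            if gx == 0 and gy == 0:
                continue
            angle = math.atan2(gy, gx) % (2 * math.pi)
            bins[(x, y)] = int(angle / (2 * math.pi) * n_bins) % n_bins
    return bins

def mutual_information(pairs):
    """Plug-in estimate of mutual information (in bits) from a list of
    (a, b) label pairs. This estimator is biased for small samples."""
    n = len(pairs)
    joint = Counter(pairs)
    left = Counter(a for a, _ in pairs)
    right = Counter(b for _, b in pairs)
    return sum((c / n) * math.log2(c * n / (left[a] * right[b]))
               for (a, b), c in joint.items())

def two_point_dependency(image, dx, dy, n_bins=8):
    """Mutual information between gradient directions at (x, y) and
    (x + dx, y + dy), pooled over all valid positions in the image."""
    bins = gradient_direction_bins(image, n_bins)
    pairs = [(d, bins[(x + dx, y + dy)])
             for (x, y), d in bins.items() if (x + dx, y + dy) in bins]
    return mutual_information(pairs)
```

A 3-point version would replace the pairs with triples and use a multivariate dependency measure; that extension is not shown here.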
To assess the influence of the power spectrum on the interactions of gradient directions, we collected statistics from four different image classes: A - natural images, B - phase-randomized natural images, C - whitened natural images and D - Gaussian noise images. The image classes A and B had the same power spectra, as did C and D. The main result was that the dependencies between gradient directions at multiple locations are determined by the power spectra of images. This is based on image classes A and B, as well as C and D, having the same amount of 2-point and 3-point gradient direction dependencies. Further, we have studied other image classes with different forms of power spectra, and these experiments did not invalidate the main result.

Visual search with random stimuli
William McIlhagga (Department of Optometry, University of Bradford, Bradford, BD7 1DP. email: W.H.Mcilhagga@Bradford.ac.uk)
Visual search experiments are usually conducted with "designed" stimuli, which possess, lack, or conjoin one or more obvious features chosen by the experimenter. In this study, visual search was conducted with randomly generated stimuli, to see what - if any - new results might be uncovered using them. Target stimuli were generated by randomly colouring half the squares in a 5-by-5 grid black, the other half white. Each random target was paired with a distractor, obtained by flipping 1, 2, or 3 of the target's white squares for black, and black squares for white. Stimuli were displayed on a white background. As usual, set size was the independent variable. Using these stimuli, search times when the target was present varied from 2 to 50 ms per distractor. The search times were usually unrelated to the number of squares flipped between target and distractor. They were also unrelated to the discrimination threshold for target vs. distractor obtained in a separate experiment.
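The stimulus construction described above can be sketched as follows. Two details are assumptions rather than facts from the abstract: a 5-by-5 grid has 25 squares, so "half" is taken here as 12 black and 13 white, and the flip is read as swapping k white squares to black and k black squares to white, which keeps the number of black squares constant. The function names are hypothetical.

```python
import random

def make_target(rng, size=5, n_black=12):
    """Random target as a flat list of size*size cells (1 = black, 0 = white).
    n_black=12 is an assumed reading of 'half the squares'."""
    cells = [1] * n_black + [0] * (size * size - n_black)
    rng.shuffle(cells)
    return cells

def make_distractor(rng, target, k):
    """Distractor made by turning k black squares white and k white squares
    black, so that 2*k cells differ from the target."""
    black = [i for i, cell in enumerate(target) if cell == 1]
    white = [i for i, cell in enumerate(target) if cell == 0]
    distractor = list(target)
    for i in rng.sample(black, k) + rng.sample(white, k):
        distractor[i] = 1 - distractor[i]
    return distractor

if __name__ == "__main__":
    rng = random.Random(2006)  # fixed seed so the example is repeatable
    target = make_target(rng)
    distractor = make_distractor(rng, target, k=2)
    for row in range(5):
        print(target[row * 5:(row + 1) * 5], distractor[row * 5:(row + 1) * 5])
```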
The search rates when the target was absent were on average 3 times slower than when the target was present, rather than the more usual 2 times slower. In particular, some searches that were essentially "parallel" when the target was present, became "serial" searches (~30 ms/item) when the target was absent. This result is discussed in terms of memoryless search and a tradeoff between miss rate and search effort.

The perception of chromatic stimuli in the peripheral human retina
Declan J. McKeefry1, Neil R. A. Parry2 and Ian J. Murray3 (1Department of Optometry, University of Bradford, Bradford BD7 1DP; 2Vision Science Centre, Manchester Royal Eye Hospital, Manchester; 3Faculty of Life Sciences, University of Manchester, Manchester. email: D.McKeefry@Bradford.ac.uk)
Perceived shifts in hue and saturation that occur in chromatic stimuli with increasing retinal eccentricity were measured in 9 human observers using a colour matching paradigm for a range of colour stimuli which spanned colour space. The results indicate that hue and saturation changes are dissociable from one another in the respect that: i) they exhibit different patterns of variation across colour space, and ii) they possess different dependencies on stimulus size, perceived shifts in hue being relatively immune to increases in stimulus size whilst the desaturation effects are eliminated by increases in size of peripherally presented stimuli. This dissociation implies that different physiological mechanisms are involved in the generation of perceived changes in the hue and saturation of chromatic stimuli that occur in the retinal periphery. Furthermore, when we model the magnitude of activation produced in the L/M and S-cone opponent systems by the peripheral chromatic stimuli we find that the variations in perceived saturation seem to be largely mediated by changes in output from the L/M opponent system, with the S-cone opponent system affected to a much lesser degree.
Perception of colour contrast stimuli in the presence of scattering
Maris Ozolinsh1, Gatis Ikaunieks1, Sergejs Fomins1, Michèle Colomb2 and Jussi Parkkinen3 (1Dept. of Optometry, University of Latvia, Latvia; 2Laboratoire Régional des Ponts et Chaussées de Clermont-Ferrand, France; 3Color Research Laboratory, University of Joensuu, Finland. e-mail: ozoma@latnet.lv)
Visual acuity and contrast sensitivity were studied in real fog conditions (fog chamber in Clermont-Ferrand) and in the presence of light scattering induced by light scattering eye occluders. Blue (shortest wavelength) light is scattered in fog to the greatest extent, causing deterioration of vision quality especially for the monochromatic blue stimuli. However, for colour stimuli on a white background, visual acuity in fog for blue Landolt-C optotypes was higher than for red and green optotypes. The luminance of colour Landolt-C optotypes presented on a screen was chosen corresponding to the blue, green and red colour contributions in achromatic white stimuli. This results in the greatest luminance contrast for the white-blue stimuli, thus improving their acuity. Besides, such blue stimuli on the white background have no spatial modulation of the blue component of screen emission. It follows that scattering, which has the greatest effect on the blue component of screen luminance, has the least effect on the perception of white-blue stimuli compared with white-red and, especially, white-green stimuli. Visual search experiments were carried out with simultaneous eye saccade detection. Red, green and blue Landolt-C stimuli were blurred using a Gaussian filter to simulate fog, and were shown together with distractors. Studies revealed two different search strategies: (1) for low scattering, long saccades with short total search times; (2) for high scattering, shorter saccades and long search times.
Results of all experiments show better recognition of white-blue compared with white-green colour contrast stimuli in the presence of light scattering.

Session 3

Models of binocular contrast discrimination predict binocular contrast matching
Daniel H. Baker, Tim S. Meese and Mark A. Georgeson (School of Life & Health Sciences, Aston University, Birmingham B4 7ET. e-mail: bakerdh@aston.ac.uk)
Our recent work on binocular combination does not support a scheme in which the nonlinear contrast response of each eye is summed before contrast gain control (Legge, 1984, Vis Res, 24, 385-394). Much more successful were two new models in which the initial contrast response was almost linear (Meese, Georgeson & Baker, J Vis, submitted). Here we extend that work by: (i) exploring the two-dimensional stimulus space (defined by left- and right-eye contrasts) more thoroughly, and (ii) performing contrast discrimination and contrast matching tasks for the same stimuli. Twenty-five base-stimuli (patches of sine-wave grating) were defined by the factorial combination of 5 contrasts for the left eye (0.3-32%) with five contrasts for the right eye (0.3-32%). Stimuli in the two eyes were otherwise identical (1 c/deg horizontal gratings, 200 ms duration). In a 2AFC discrimination task, the base-stimuli were masks (pedestals) where the contrast increment was presented to one eye only. In the matching task, the base-stimuli were standards to which observers matched the contrast of either a monocular or binocular test grating. In both models discrimination depends on the local gradient of the observer's internal contrast-response function, while matching equates the magnitude (rather than gradient) of response to the test and standard. Both models produced very good fits to the discrimination data. With no remaining free parameters, both also made excellent predictions for the matching data.
These results do not distinguish between the models but, crucially, they show that performance measures and perception (contrast discrimination vs contrast matching) can be understood in the same theoretical framework.

Binocular combination: the summation of signals in separate ON and OFF channels
Mark A. Georgeson and Tim S. Meese (School of Life & Health Sciences, Aston University, Birmingham B4 7ET, UK. e-mail: m.a.georgeson@aston.ac.uk)
How does the brain combine visual signals from the two eyes? We quantified binocular summation as the improvement in contrast sensitivity observed for two eyes compared with one, and asked whether the summation process preserves the sign of signals in each eye. If it did so, then gratings out-of-phase in the 2 eyes might cancel each other and show lower sensitivity than one eye. Contrast sensitivity was measured for horizontal, flickering gratings (0.25 or 1 c/deg, 1 to 30 Hz, 0.5 s duration), using a 2AFC staircase method. Gratings in-phase for the 2 eyes showed sensitivity that was up to 1.9 times better than monocular viewing, suggesting nearly linear summation of contrasts in the 2 eyes. The binocular advantage was greater at 1 c/deg than 0.25 c/deg, and decreased to about 1.5 at high temporal frequencies. Dichoptic, anti-phase gratings showed a very small binocular advantage, a factor of 1.1 to 1.2, but with no evidence of cancellation. We present a nonlinear filtering model that accounts well for these effects. Its main components are an early linear temporal filter, followed by half-wave rectification that creates separate ON and OFF channels for luminance increments and decrements. Binocular summation occurs separately within each channel, thus explaining the phase-specific binocular advantage. The model accounts well for earlier results with brief flashes (eg.
Green & Blake, 1981, Vis Res, 21, 365-372) and nicely predicts the finding that dichoptic anti-phase flicker is seen as frequency-doubled (Cavonius et al, 1992, Ophthal. Physiol. Opt, 12, 153-156).

The effect of induced motion on pointing in depth
Julie M Harris1 and Katie J German2 (1School of Psychology, University of St. Andrews, St. Mary’s College, South St., St. Andrews, KY16 9JP, Scotland, UK; 2Psychology, School of Biology, University of Newcastle upon Tyne, Henry Wellcome Building, Framlington Place, Newcastle NE2 4HH, UK. E-mail: jh81@st-andrews.ac.uk)
An object appears to move if, although stationary, it is flanked by another moving object or frame (termed induced motion, IM). As well as affecting relative motion perception, IM can bias an observer's ability to point to the absolute locations of objects. It has been suggested that IM may cause pointing errors due to mislocalisation of the body midline. Here we studied observers' responses to objects that underwent IM in depth where there were no changes in ego-centric direction. Observers viewed a stationary target dot flanked vertically by a pair of inducing dots oscillating in depth (9.6 arcmin/s on each retina, amplitude 7.7 arcmin), at a viewing distance of 50 cm. The CRT was located on a raised platform so that observers could point to locations beneath it. Observers pointed (open-loop) to directly below the start-location, then the end-location of the motion. We computed the IM as the difference between start and end locations. This was compared with the difference between locations pointed to for a target undergoing real motion in depth. We compared the proportion of IM with that found in an IM nulling task, where observers were asked to adjust a target's motion until it appeared stationary. There was a strong effect of IM on the pointed-to locations in depth, similar to that for the IM-nulling perceptual task. Thus, IM can impact on open-loop pointing for motion in depth.
IM effects on pointing cannot be fully accounted for by mislocalisation of the body midline.

How we judge the perceived depth of a dot cloud
David Keeble1, Julie Harris2 and Ian Pacey1 (1Department of Optometry, University of Bradford, Bradford, BD7 1DP; 2School of Psychology, University of St Andrews, St Andrews, KY16 9JU. e-mail: D.R.T.Keeble@Bradford.ac.uk)
Some visual properties such as position and texture orientation (Whitaker, et al, 1996, Vis. Res., 36, 2957-2970) seem to be encoded as the centroid (ie mean) of the distribution of the relevant variable. Although the perception of stereoscopic depth has been extensively investigated, little is known about how separate estimates of disparity are combined to produce an overall perceived depth. We employed base-in prisms mounted on a trial frame and half-images presented on a computer screen, to produce stereoscopic images of dot clouds (eg as in Harris & Parker, 1995, Nature, 374, 808-811) with skewed (ie asymmetrical) distributions of disparity. Subjects were to judge in a 2AFC task whether the dot cloud was in front of or behind an adjacent flat plane of dots. Psychometric functions were generated and the point of subjective equality was calculated. Three subjects were employed. For dot clouds of thickness up to about 6 arc min disparity the perceived depth was close to the centroid for all three subjects, regardless of skew. For thicker clouds (we tested thicknesses up to 25 arc min), the perceived depth was slightly closer to the subject than the centroid, regardless of skew. The human visual system integrates depth information veridically for thin surfaces. For thick surfaces, the dots closest to the observer have a higher weighting, possibly due to their greater salience.

Spatial uncertainty governs the extent of illusory interaction between 1st- and 2nd-order vision
David Whitaker1, James Heron1 and Paul V.
McGraw2 (1Department of Optometry, University of Bradford, Bradford BD7 1DP; 2Department of Psychology, University of Nottingham, Nottingham NG7 2RD. e-mail: d.j.whitaker@Bradford.ac.uk)
Illusory interactions between 1st-order (luminance-defined) and 2nd-order (texture-defined) vision have been reported in the domains of orientation, position and motion. Here we investigate one type of such illusion within a Bayesian framework that has not only proven successful in explaining the perceptual combination of visual cues, but also the combination of stimuli from different senses (e.g. ‘sound and vision’ or ‘touch and vision’). In short, the perceptual outcome is considered to be a weighted combination of the sensory inputs, with the weights being determined by the relative reliability of each component. We adopt the ‘Stationary Moving Gabor’ illusion introduced by DeValois & DeValois (Vision Research, 31, 1619-1626, 1991) in which a sinusoidal luminance grating (1st-order) drifting within a static contrast-defined Gaussian envelope (2nd-order) causes the perceived position of the envelope to be offset in the direction of motion. A vernier task was used to quantify the extent of the illusion, and several manipulations of the stimuli were used to vary the spatial uncertainty associated with the task. These included the spread (size) and spatial profile of the envelope and the separation of the elements in the vernier stimulus. The 1st-order characteristics were held constant. Results clearly demonstrate that the magnitude of the illusion increases in line with the spatial uncertainty of the 2nd-order envelope. As judgment of its spatial position is made less reliable, so it becomes increasingly susceptible to an illusory effect.
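The weighted combination described in this abstract is commonly formalised as inverse-variance weighting of independent Gaussian cues, and a minimal sketch under that assumption is given below. The abstract does not give its exact formulation, so treating the envelope position and a motion-shifted signal as two independent cues, the numerical values and the function name are all illustrative.

```python
import math

def combine_cues(estimates, sigmas):
    """Reliability-weighted combination of independent Gaussian cues.
    Each weight is proportional to 1 / sigma**2 (the cue's reliability).
    Returns the combined estimate, its standard deviation and the weights."""
    reliabilities = [1.0 / sigma ** 2 for sigma in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * x for w, x in zip(weights, estimates))
    return combined, math.sqrt(1.0 / total), weights

if __name__ == "__main__":
    envelope_position = 0.0  # true position of the 2nd-order envelope
    motion_signal = 1.0      # hypothetical position signalled by the carrier
    for envelope_sigma in (0.5, 1.0, 2.0, 4.0):
        shift, _, _ = combine_cues([envelope_position, motion_signal],
                                   [envelope_sigma, 1.0])
        print(envelope_sigma, round(shift, 3))
```

In this sketch the predicted offset grows as the envelope cue becomes less reliable, which is the qualitative pattern the abstract reports.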
Abstracts for Posters (in alphabetical order):

Motion-induced localization bias in an action task
Bettina Friedrich1, Franck Caniard2, Ian M Thornton3, Astros Chatziastros2 and Pascal Mamassian4 (1Department of Psychology, University of Glasgow, 58 Hillhead Street, Glasgow G12 8QB; 2Max Planck Institute for Biological Cybernetics, Spemannstraße 38, 72076 Tübingen, Germany; 3Department of Psychology, University of Wales Swansea, Singleton Park, Swansea, SA2 8PP; 4CNRS & Université Paris 5, France. e-mail: Bettina@psy.gla.ac.uk)
DeValois and DeValois (1991, Vis Research, 31, 1619-1626) have shown that a moving carrier behind a stationary window can cause a perceptual misplacement of this envelope in the direction of motion. The authors also found that the bias increased with increasing carrier speed and eccentricity. Yamagishi et al. (2001, Proceedings of the Royal Society, 268, 973-977) showed that this effect can also be found in visuo-motor tasks. To see whether variables such as eccentricity and grating speed increase the motion-induced perceptual shift of a motion field also in an action task, a motor-control experiment was created in which these variables were manipulated (eccentricity values: 0 deg, 8.4 deg and 16.8 deg; speed values: 1.78 deg/sec, 4.45 deg/sec and 7.1 deg/sec). Participants had to keep a downward-sliding path aligned with a motion field (stationary Gaussian and horizontally moving carrier) by manipulating the path with a joystick. The perceptual bias can be measured by comparing the average difference between correct and actual path position. Both speed and eccentricity had a significant impact on the bias size. Similarly to the recognition task, the bias size increased with increasing carrier speed. Contrary to DeValois and DeValois’ finding, here the perceptual shift decreased with increasing eccentricity. There was no interaction of the variables.
If we assume an ecological reason for the existence of a motion-induced bias, it might be plausible to see why the bias is smaller in an unnatural task such as actively manipulating an object that is in an eccentric position in the visual field (hence the decrease of bias magnitude in the periphery). Contrary to this, recognition tasks carried out in the periphery of the visual field are far more common and therefore might “benefit” from the existence of a motion-induced localization bias. As expected, task difficulty increased with increasing speed and eccentricity. It seems interesting to further compare action and perception tasks in terms of factors influencing the localization bias in these different task types.

Attending to more than one sense - on the dissociation between reaction time and perceived temporal order
James Hanson1, James Heron1, Paul V. McGraw2 and David Whitaker1 (1Department of Optometry, University of Bradford, Bradford BD7 1DP; 2Department of Psychology, University of Nottingham, Nottingham NG7 2RD. e-mail: j.v.m.hanson@Bradford.ac.uk)
Simple logic predicts that sensory stimuli to which we exhibit faster reaction times would be perceived to lead other stimuli in a temporal order judgment task (‘which one came first?’). This logic has received little empirical support. There is general agreement that reaction times to auditory stimuli are considerably shorter than to visual. Despite this, temporal order judgments involving the two types of stimuli usually require a small temporal lead of the auditory stimulus in order to be perceived as simultaneous. We replicate these findings, but argue that whilst simple reaction times are measured to one sensory modality in isolation, temporal order judgments, by definition, require two modalities to be attended simultaneously. We therefore measure reaction times to auditory and visual stimuli randomly interleaved within the same experimental run.
This requires subjects to simultaneously monitor both senses in an attempt to optimize their reaction times. Results show that reaction times to both senses are significantly increased relative to uni-modal estimates, but auditory reaction times suffer more (20-30% increase) than visual (6-10% increase). Nevertheless, whilst this goes some way towards accounting for the dissociation between reaction time and temporal order judgments, there still remains an unexplained visual lead in the perceptual judgment of which sense appeared first.

Famous face recognition in the absence of individual features
Lisa Hill and Mark Scase (Division of Psychology, De Montfort University, The Gateway, Leicester, LE1 9BH. e-mail: lhill@dmu.ac.uk)
The perceptual operations that enable face recognition require a synthesis of precision and synchronicity. Insight into this process may be provided by exploring the timings and sequence in which individual features are perceived. Previous studies have masked off individual features along with the surrounding area (Lewis and Edmonds, 2003, Perception, 32, 903-920) and explored the feature by feature composition of faces from memory (Ellis, Shepherd & Davies, 1975, Brit J Psychol, 66, 29-37). We investigated the effects of removing individual features from famous faces, as opposed to masking areas of the face, in order to examine whether the absence of any feature in particular significantly affected reaction times for face recognition. Participants viewed faces either without eyes, nose, mouth or external features (hair and ears). Images were presented for 160 ms and reaction times for face identification were recorded.
A significant increase in reaction times was observed where faces were viewed without hair and ears compared to control conditions, implying that during the initial stages of face perception, external features (lower spatial frequencies) may act as the primary catalyst towards face recognition, before the visual system is able to process the internal features of the face (higher spatial frequencies). This result has potential implications for the revision of a pre-established test for prosopagnosia: the Benton Facial Recognition Test (Benton et al, 1983, Contributions to neuropsychological assessment. NY: OUP) as during testing, other cues such as hairline and clothing may be available to participants in order to aid recognition, failing to provide an accurate account of actual face recognition abilities (Duchaine & Weidenfeld, 2002, Neuropsychologia, 41, 713-720). Further, these results help us understand the prominence of each feature in the face recognition process, and the points at which perceptual abilities become fragmented