Chapter 1 Introduction

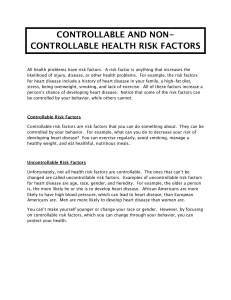


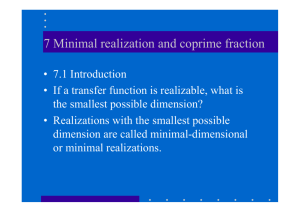

Empirical methods ---> Analytic ones.

1. Empirical methods:

Adjust some parameters, add a new compensator, … Strongly depends on experience. An art.

For masters.

Trial and error (low efficiency)

When the complexity is high, trial and error either does not work or is too dangerous or too expensive (e.g., aircraft).

2. Analytic methods (for ordinary people)

Basic knowledge (common sense) + mature theoretical/practical results (common sense) → good job.

There are 5 steps

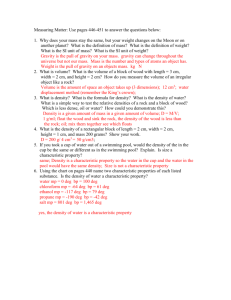

Modelling. We are only interested in certain properties, and ignore others.

We should model properly.

Mathematical description.

Physical laws → mathematical equations. Tradeoff: precision vs. complexity.

This mathematical model is frequently referred to as “model”, “system”.

Analysis based on the mathematical model (equations): quantitative and qualitative

(1) Model verification: Under the same inputs, does the model yield the same outputs as the real system? If not, the model is wrong and must be redone. This check should be done first.

(2) Under a “trustable” model, “predict” the system’s properties, including the qualitative ones, like stability, and the quantitative ones, such as the rise time. If the predicted properties are not acceptable, we have to do something (synthesis).

Synthesis based on the mathematical model.

Modify some parameters of the (mathematical) “system”. Insert some compensators. We want the “system” to satisfy some requirements (stability, robustness) and to optimize certain performance measures.

Implement the obtained results into the real system.

(1) Good: The qualitative properties may still be preserved. Quantitative properties may

keep the trend (e.g., the new design could yield some performance improvement).

The exact improvement value may not be preserved. But this is already good

enough. Don’t forget that the mathematical model is only an approximation of the

real system.

(2) Bad: The expected qualitative properties are broken (e.g., loss of stability). No quantitative improvement due to the new design is seen. Some possible reasons for this failure:

1) Computation errors. Redo the synthesis.

2) Model mismatching. Search for more precise parameters of the system or find

a more general model.

In our class, we focus on the analysis and synthesis of the (mathematical) “system” (model).

Moreover, we only consider linear models. By system, we mean the set of relevant equations.

Chapter 2. Mathematical Descriptions of Systems

2.1 Introduction

A system is a mapping from the input to the output:

$u(t)$ / $u[k]$ (input) → System → $y(t)$ / $y[k]$ (output)

1. It is a deterministic mapping (existence and uniqueness of the output).

2. SISO, MIMO, SIMO: $\dim(u) = 1$? $\dim(y) = 1$?

2.1.1 Causality and lumpedness

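1. Memory vs. memoryless (systems)

(1) A memoryless system

[Figure: circuit with an element of value 1, input $u(t)$, output $y(t)$.]

$y(t) = u(t)$: the current output depends only on the current input.

(2) A system with memory

[Figure: circuit with a 1 F capacitor, input $u(t)$, output $y(t)$.]

$y(t) = \int_{-\infty}^{t} u(\tau)\,d\tau$. Both the current input and the past inputs determine the current output.

The history makes a difference.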

2. Causal / non-anticipatory

A causal system: the current output $y(t)$ is determined only by the current input $u(t)$ and the past inputs $u(\tau)$, $\tau < t$. The future inputs $u(\tau)$, $\tau > t$, have nothing to do with the current output $y(t)$, i.e., we cannot anticipate the future input from the current output.

Physical systems are all causal.

Some artificial systems can be non-causal (when the role of time is played by another variable).

The output is selected as the electric field $E(t)$.

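3. State (of a causal system)

$y(t)$, $t \ge t_0$, is determined by the past inputs $\{u(\tau), \tau < t_0\}$ plus the inputs $\{u(\tau), t_0 \le \tau \le t\}$.

Define a state $x_u(t_0) = \{u(\tau), \tau < t_0\}$. Then

$$\left.\begin{array}{l} x_u(t_0) \\ u(\tau),\ t_0 \le \tau \le t \end{array}\right\} \rightarrow y(t), \quad \forall t \ge t_0.$$

The state plus the future inputs determines all future outputs.

$\dim x_u(t_0) = \infty$. Merely defining a new variable in this way does not help. Have a look at an example.

[Figure: circuit with a 1 F capacitor, input $u(t)$, output $y(t)$.]

$$y(t) = \int_{-\infty}^{t} u(\tau)\,d\tau = \int_{-\infty}^{t_0} u(\tau)\,d\tau + \int_{t_0}^{t} u(\tau)\,d\tau$$

Let $x(t_0) = \int_{-\infty}^{t_0} u(\tau)\,d\tau$. Then

$$\left.\begin{array}{l} x(t_0) \\ u(\tau),\ t_0 \le \tau \le t \end{array}\right\} \rightarrow y(t), \quad \forall t \ge t_0.$$

So $x(t_0)$ is a state, and $\dim x(t_0) = 1$.

Try your best to find a state of as low a dimension as possible.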

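4. Lumpedness

A state $x(t_0)$:

$$\left.\begin{array}{l} x(t_0) \\ u(\tau),\ t_0 \le \tau \le t \end{array}\right\} \rightarrow y(t), \quad \forall t \ge t_0.$$

$\dim x(t_0)$ is finite $\Leftrightarrow$ the system is lumped.

Example: Is the system $y(t) = u(t-1)$ lumped?

Suppose it were lumped, with a state $x(t_0)$ as above. For any $\theta \in [0, 1)$, $y(t_0+\theta) = u(t_0+\theta-1)$. It is obvious that

$$u(t_0+\theta-1) \notin \{u(\tau),\ t_0 \le \tau \le t\} \ \Rightarrow\ u(t_0+\theta-1) \in x(t_0),$$

$$\{u(t_0+\theta-1),\ \theta \in [0,1)\} \subset x(t_0) \ \Rightarrow\ \dim x(t_0) = \infty.$$

It is not lumped.

Not lumped = distributed.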

2.2 Linear systems

1. Linearity

$u_1(t)$ → System → $y_1(t)$, and $u_2(t)$ → System → $y_2(t)$

(1) Additivity

$u_3(t) = u_1(t) + u_2(t)$ → System → $y_3(t)$

Additivity: $y_3(t) = y_1(t) + y_2(t)$

(2) Homogeneity

$u_4(t) = \alpha u_1(t)$ → System → $y_4(t)$

Homogeneity: $y_4(t) = \alpha y_1(t)$

Linearity = additivity + homogeneity

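(3) Linearity defined as “state + partial inputs”

$\{u(t), -\infty < t < \infty\}$ → System → $y(t)$

$\{u(t), t < t_0\}$ and $\{u(t), t \ge t_0\}$ → System → $y(t)$

$x(t_0)$ and $\{u(t), t \ge t_0\}$ → System → $y(t)$

$x(t_0) = f(u(t), t < t_0)$ ($f$ is actually a linear function).

$$\left.\begin{array}{l} \alpha_1 x_1(t_0) + \alpha_2 x_2(t_0) \\ \alpha_1 u_1(t) + \alpha_2 u_2(t),\ t \ge t_0 \end{array}\right\} \rightarrow y(t) = \alpha_1 y_1(t) + \alpha_2 y_2(t)$$

Example: Is $y(t) = \int_{-\infty}^{t} u(\tau - 1)\,d\tau$ linear? Lumped?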

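2. Input-output description

Let $\delta_\Delta(t - t_i)$ be a pulse of width $\Delta$ and unit area starting at $t_i$, and let $g_\Delta(t, t_i)$ be the corresponding output:

$\delta_\Delta(t - t_i)$ → System → $y_i(t) = g_\Delta(t, t_i)$

(1) Homogeneity

$u(t_i)\,\delta_\Delta(t - t_i)\,\Delta$ → System → $u(t_i)\,g_\Delta(t, t_i)\,\Delta$, $i = 1, 2, 3, \ldots$

(2) Additivity

$\sum_i u(t_i)\,\delta_\Delta(t - t_i)\,\Delta$ → System → $\sum_i u(t_i)\,g_\Delta(t, t_i)\,\Delta$, $i = \ldots, -3, -2, -1, 0, 1, 2, 3, \ldots$

(3) Limit

$$\lim_{\Delta \to 0} \delta_\Delta(t - t_i) = \delta(t - t_i), \quad \lim_{\Delta \to 0} \sum_i u(t_i)\,\delta_\Delta(t - t_i)\,\Delta = u(t), \quad \lim_{\Delta \to 0} g_\Delta(t, t_i) = g(t, t_i)$$

$$\lim_{\Delta \to 0} \sum_i u(t_i)\,g_\Delta(t, t_i)\,\Delta = y(t) = \int_{-\infty}^{\infty} u(\tau)\,g(t, \tau)\,d\tau$$

For a causal system, $y(t)$ does not depend on the future inputs. So

$$g(t, \tau) = 0,\ \forall \tau > t, \qquad y(t) = \int_{-\infty}^{t} u(\tau)\,g(t, \tau)\,d\tau$$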

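3. MIMO systems (DIDO example)

$(u_1(t), u_2(t))$ → System → $(y_1(t), y_2(t))$

(1) Consider only $y_1(t)$: $(u_1(t), u_2(t))$ → System → $y_1(t)$

(2) Linear combinations of two fundamental systems

$(\delta(t - t_i), 0)$ → System → $g_{11}(t, t_i)$, so $(u_1(t), 0)$ → System → $y_{11}(t) = \int_{-\infty}^{\infty} g_{11}(t, \tau)\,u_1(\tau)\,d\tau$

$(0, \delta(t - t_i))$ → System → $g_{12}(t, t_i)$, so $(0, u_2(t))$ → System → $y_{12}(t) = \int_{-\infty}^{\infty} g_{12}(t, \tau)\,u_2(\tau)\,d\tau$

$y_1(t) = y_{11}(t) + y_{12}(t)$

Then we get the impulse response matrix (eq. 2.5).

4. State space model

Linear + lumped $\Rightarrow$ there exists a state space model.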

2.3 Linear Time-Invariant (LTI) Systems

1. (i) Two examples

[Figure: an input $u_1(t)$ with its output $y_1(t)$, and an input $u_2(t)$ with its output $y_2(t)$, plotted against $t$.]

We observe that $u_2(t) = u_1(t-2)$ and $y_2(t) = y_1(t-2)$.

LTI: Both $u(t)$ and $y(t)$ are shifted by the same amount of time (2).

(ii) In the general case, a linear system is LTI if

$u(t)$ → System → $y(t)$ implies $u'(t) = u(t-T)$ → System → $y'(t) = y(t-T)$.

Both $u(t)$ and $y(t)$ are shifted by the same amount of time $T$.

When the parameters of a system change slowly enough, it can be treated as LTI.

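2. Impulse response of an LTI system

$$y(t) = \int_{-\infty}^{\infty} u(\tau)\,g(t, \tau)\,d\tau, \qquad y'(t) = \int_{-\infty}^{\infty} u'(\tau)\,g(t, \tau)\,d\tau$$

We know that $u'(t) = u(t-T)$ and $y'(t) = y(t-T)$.

(1) $y'(t) = \int u'(\tau)\,g(t, \tau)\,d\tau = \int u(\tau - T)\,g(t, \tau)\,d\tau = \int u(\tau)\,g(t, \tau + T)\,d\tau$

(2) $y'(t) = y(t-T) = \int u(\tau)\,g(t-T, \tau)\,d\tau$

So $g(t, \tau + T) = g(t - T, \tau)$, $\forall t, \tau, T$.

Let $T = -\tau$. Then

$$g(t, 0) = g(t + \tau, \tau),\ \forall t, \tau \quad\Rightarrow\quad g(t, \tau) = g(t - \tau, 0) =: g(t - \tau)$$

$$y(t) = \int_{-\infty}^{\infty} u(\tau)\,g(t - \tau)\,d\tau$$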

3. Laplace transformation (defined only for LTI systems)

$y(t) = \mathcal{L}^{-1}[\hat{y}(s)]$

pp. 39, Problem 2.9

Definitions: proper, strictly proper, biproper, improper.

4. State-space equation of a lumped LTI system

Op-amp circuit implementation.

2.4 Linearization

A nonlinear system - first order approximation

2.5 Examples.


Chapter 3 Linear Algebra

3.1 Introduction

Block operations of matrices

3.2 Linear space

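1. Vector $x \in \mathbb{R}^n$

2. Some definitions

Given a set of vectors $\{x_1, x_2, \ldots, x_m\}$:

(i) Linear independence. If $c_1 x_1 + c_2 x_2 + \cdots + c_m x_m = 0 \Rightarrow c_1 = c_2 = \cdots = c_m = 0$, then $\{x_1, x_2, \ldots, x_m\}$ is linearly independent. For every $i$, $x_i$ cannot be represented as a linear combination of the other vectors, i.e., every $x_i$ provides new information.

(ii) Linear dependence. Some vector is a linear combination of the others, e.g., $x_1 = \alpha_2 x_2 + \cdots + \alpha_m x_m$: no innovation.

(iii) Linear space: A set $S \subseteq \mathbb{R}^n$. $S$ is a linear space if $x, y \in S \Rightarrow \alpha x + \beta y \in S$, $\forall \alpha, \beta \in \mathbb{R}$.

3. Search for the maximum subset of linearly independent vectors among $\{v_1, v_2, \ldots, v_m\}$.

$v_1 \ne 0$ → $\{v_1, v_2\}$ is linearly independent → $\cdots$ → $\{v_1, v_2, \ldots, v_r\}$ is linearly independent → no more.

$\{v_1, v_2, \ldots, v_r, v_{r+1}\}$ is NOT linearly independent:

$$\alpha_1 v_1 + \alpha_2 v_2 + \cdots + \alpha_r v_r + \beta v_{r+1} = 0,\quad [\alpha_1, \alpha_2, \ldots, \alpha_r, \beta] \ne 0 \ \Rightarrow\ \beta \ne 0$$

$$v_{r+1} = [v_1, v_2, \ldots, v_r] \begin{bmatrix} -\alpha_1/\beta \\ -\alpha_2/\beta \\ \vdots \\ -\alpha_r/\beta \end{bmatrix}$$

$\{v_1, v_2, \ldots, v_r\}$ is a basis. The column vector above gives the coordinates of $v_{r+1}$ in this basis.

We can verify that $\{v_1, v_2 + v_1, \ldots, v_r\}$ is also a basis.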

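There exists more than one basis.

Do all bases have the same number of vectors?

Suppose NOT. We have two bases, $\{v_1, v_2, \ldots, v_r\}$ and $\{z_1, z_2, \ldots, z_m\}$, with $r > m$.

(1) $[v_1, v_2, \ldots, v_r] \begin{bmatrix} \alpha_1 \\ \vdots \\ \alpha_r \end{bmatrix} = 0 \ \Rightarrow\ \alpha_1 = \cdots = \alpha_r = 0$

(2) $v_1 = [z_1, z_2, \ldots, z_m] \begin{bmatrix} q_{11} \\ q_{21} \\ \vdots \\ q_{m1} \end{bmatrix}, \ \ldots, \ v_r = [z_1, z_2, \ldots, z_m] \begin{bmatrix} q_{1r} \\ q_{2r} \\ \vdots \\ q_{mr} \end{bmatrix}$, so

$$[v_1, v_2, \ldots, v_r] = [z_1, z_2, \ldots, z_m]\,Q, \qquad Q = \begin{bmatrix} q_{11} & \cdots & q_{1r} \\ \vdots & & \vdots \\ q_{m1} & \cdots & q_{mr} \end{bmatrix}$$

We get $[z_1, z_2, \ldots, z_m]\,Q \begin{bmatrix} \alpha_1 \\ \vdots \\ \alpha_r \end{bmatrix} = 0$. Since $Q$ is $m \times r$, $\operatorname{rank}(Q) \le m < r$, so $Q\,[\alpha_1, \ldots, \alpha_r]^T = 0$ has a solution $[\alpha_1, \alpha_2, \ldots, \alpha_r] \ne 0$. This contradicts (1). So $r \le m$.

Similarly, we can get $m \le r$. Then $r = m$.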

4. Norm of vectors.

Page. 46.

5. Correlation of vectors

$\langle x, y \rangle = \sum_{i=1}^{n} x_i y_i$.

$x \perp y \Leftrightarrow \langle x, y \rangle = 0$.

A group of nonzero orthogonal vectors $v_1, v_2, \ldots, v_r$ ($v_i \perp v_j$, $i \ne j$) is linearly independent.

If, furthermore, $\langle v_i, v_i \rangle = 1$, then $v_1, v_2, \ldots, v_r$ is an orthonormal basis.

Any basis can be transformed into an orthonormal basis by the Schmidt orthonormalization procedure.
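The orthonormalization step can be illustrated numerically. The following is a minimal sketch, assuming NumPy is available; the function name `gram_schmidt` and the example basis `V` are illustrative choices, not taken from the notes. It uses the "modified" variant, which subtracts each projection from the running vector.

```python
import numpy as np

def gram_schmidt(vectors, tol=1e-12):
    """Orthonormalize the columns of `vectors` (assumed linearly independent)."""
    V = np.asarray(vectors, dtype=float)
    basis = []
    for j in range(V.shape[1]):
        w = V[:, j].copy()
        # Remove the components along the orthonormal vectors found so far.
        for q in basis:
            w -= np.dot(q, w) * q
        norm = np.linalg.norm(w)
        if norm < tol:
            raise ValueError("columns are linearly dependent")
        basis.append(w / norm)
    return np.column_stack(basis)

# Hypothetical basis of R^3, stored as columns.
V = np.array([[1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])
Q = gram_schmidt(V)
```

With these inputs, `Q.T @ Q` should be the identity up to rounding, which is the orthonormality condition $\langle v_i, v_j \rangle = \delta_{ij}$.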

3.3 Linear Algebraic Equations

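1. Equation $Ax = y$

$$A_{m \times n} = [a_1, a_2, \ldots, a_n], \qquad Ax = [a_1, a_2, \ldots, a_n] \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} = x_1 a_1 + x_2 a_2 + \cdots + x_n a_n.$$

So $Ax$ is a linear combination of $a_1, a_2, \ldots, a_n$.

(1) Range space of $A$: $\operatorname{Range}(A) = \{Ax = x_1 a_1 + x_2 a_2 + \cdots + x_n a_n,\ x_i \in \mathbb{R}\} \subseteq \mathbb{R}^m$.

Existence of a solution: $y \in \operatorname{Range}(A) \Leftrightarrow \operatorname{rank}(A) = \operatorname{rank}([A\ \ y])$.

(2) Null space of $A$: $\operatorname{Null}(A) = \{x \mid Ax = 0\} \subseteq \mathbb{R}^n$.

(3) Solution set of $Ax = y$: $x_p + \operatorname{Null}(A)$, where $x_p$ is a particular solution of $Ax = y$.

Unique solution condition: $\operatorname{Null}(A) = \{0\} \Leftrightarrow \{a_1, a_2, \ldots, a_n\}$ is linearly independent.

(4) Square matrices: $A^{-1} = \dfrac{\operatorname{Adj}(A)}{\det A} = \dfrac{1}{\det A}[c_{ij}]'$; non-singular, full rank.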

3.4 Similarity transformation

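Suppose there are two bases for $\mathbb{R}^n$, $\{q_1, q_2, \ldots, q_n\}$ and $\{\bar{q}_1, \bar{q}_2, \ldots, \bar{q}_n\}$.

For any vector $v \in \mathbb{R}^n$, we have two “different” representations.

$$v = \sum_{i=1}^{n} x_i q_i = x_1 q_1 + x_2 q_2 + \cdots + x_n q_n = Qx, \qquad Q = [q_1, q_2, \ldots, q_n]$$

$$v = \sum_{i=1}^{n} \bar{x}_i \bar{q}_i = \bar{x}_1 \bar{q}_1 + \bar{x}_2 \bar{q}_2 + \cdots + \bar{x}_n \bar{q}_n = \bar{Q}\bar{x}, \qquad \bar{Q} = [\bar{q}_1, \bar{q}_2, \ldots, \bar{q}_n]$$

$Q$ and $\bar{Q}$ are non-singular.

$Qx = \bar{Q}\bar{x} \ \Rightarrow\ x = Q^{-1}\bar{Q}\bar{x} = P\bar{x}$, where $P = Q^{-1}\bar{Q}$ is non-singular.

Original system: $\dot{x} = Ax + Bu$. Substituting $x = P\bar{x}$:

$$P\dot{\bar{x}} = AP\bar{x} + Bu \ \Rightarrow\ \dot{\bar{x}} = P^{-1}AP\bar{x} + P^{-1}Bu = \bar{A}\bar{x} + \bar{B}u$$

$\bar{A} = P^{-1}AP$: similarity transformation. It is actually a coordinate transformation.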

3.5 Diagonal Form and Jordan Form

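1. Eigenvalue $\lambda$, eigenvector $v \ne 0$

$$Av = \lambda v \ \Leftrightarrow\ (A - \lambda I)v = 0 \ \Rightarrow\ |A - \lambda I| = 0$$

$$|A - \lambda I| = 0 \ \Rightarrow\ \exists v \ne 0,\ (A - \lambda I)v = 0, \quad \text{i.e., } \lambda \Rightarrow v$$

2. Algebraic multiplicity

$$\Delta(\lambda) = |A - \lambda I| = (\lambda_1 - \lambda)^{m_1} \cdots (\lambda_r - \lambda)^{m_r},$$

$m_i$: the algebraic multiplicity of $\lambda_i$.

3. $m_i = 1$, $i = 1, \ldots, n$: All distinct eigenvalues.

We can get $n$ eigenvectors, which are all linearly independent. These eigenvectors can form a transformation matrix, $P = [v_1, v_2, \ldots, v_n]$.

$$AP = [\lambda_1 v_1, \lambda_2 v_2, \ldots, \lambda_n v_n] = P \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_n \end{bmatrix}, \qquad J = P^{-1}AP = \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_n \end{bmatrix}$$

4. $m_i > 1$:

(1) For each $\lambda_i$, we can find $m_i$ linearly independent eigenvectors. Then we can still get a $P$ by combining all eigenvectors. $J = P^{-1}AP$ is still a diagonal matrix.

(2) For some $\lambda_i$, we cannot find $m_i$ linearly independent eigenvectors, say, only $n_i$ of them. Then we have to derive $m_i - n_i$ generalized eigenvectors. All the eigenvectors and generalized eigenvectors together form $P$.

$$J = P^{-1}AP = \begin{bmatrix} J_1 & 0 & \cdots & 0 \\ 0 & J_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_r \end{bmatrix}, \qquad J_i = \begin{bmatrix} J_{i,1} & 0 & \cdots & 0 \\ 0 & J_{i,2} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_{i,n_i} \end{bmatrix}, \qquad J_{i,p} = \begin{bmatrix} \lambda_i & 1 & \cdots & 0 & 0 \\ 0 & \lambda_i & \ddots & 0 & 0 \\ \vdots & & \ddots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_i & 1 \\ 0 & 0 & \cdots & 0 & \lambda_i \end{bmatrix}$$

$J_i$: $m_i \times m_i$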

3.6 Functions of a Square Matrix

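1. $A = PJP^{-1}$, $J$: Jordan form

$$A^k = PJP^{-1} \cdot PJP^{-1} \cdots PJP^{-1} = PJ^kP^{-1}$$

$J^k = ?$

Example: $J = \begin{bmatrix} \lambda & 1 & 0 \\ 0 & \lambda & 1 \\ 0 & 0 & \lambda \end{bmatrix} = \lambda I + T$, $\quad T = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}$

We see that $T^2 = \begin{bmatrix} 0 & 0 & 1 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$, $T^3 = 0$, $T^c = 0$ for $c \ge 3$; and $T(\lambda I) = (\lambda I)T$.

$$J^k = (\lambda I + T)^k = \lambda^k I + C_k^1 \lambda^{k-1} T + C_k^2 \lambda^{k-2} T^2 = \begin{bmatrix} \lambda^k & k\lambda^{k-1} & \frac{k(k-1)}{2}\lambda^{k-2} \\ 0 & \lambda^k & k\lambda^{k-1} \\ 0 & 0 & \lambda^k \end{bmatrix}$$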
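The closed form for the power of a $3 \times 3$ Jordan block can be checked against direct matrix multiplication. This is only a sanity-check sketch; the values of `lam` and `k` are arbitrary illustrative choices.

```python
import numpy as np
from math import comb

lam, k = 0.5, 7  # hypothetical eigenvalue and power
J = np.array([[lam, 1.0, 0.0],
              [0.0, lam, 1.0],
              [0.0, 0.0, lam]])

# Closed form from the binomial expansion of (lam*I + T)^k with T^3 = 0.
Jk_formula = np.array([
    [lam**k, comb(k, 1) * lam**(k - 1), comb(k, 2) * lam**(k - 2)],
    [0.0,    lam**k,                    comb(k, 1) * lam**(k - 1)],
    [0.0,    0.0,                       lam**k],
])

# Reference value: multiply J by itself k times.
Jk_direct = np.linalg.matrix_power(J, k)
```

The two arrays should agree to rounding error, because the binomial expansion is exact once $T$ and $\lambda I$ commute.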

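2. Polynomials of a square matrix

(1) A simple example:

$$f(x) = a_0 + a_1 x + a_2 x^2 + a_3 x^3, \qquad A = PJP^{-1}, \quad J = \begin{bmatrix} \lambda & 1 & 0 \\ 0 & \lambda & 1 \\ 0 & 0 & \lambda \end{bmatrix}$$

$$f(A) = a_0 I + a_1 A + a_2 A^2 + a_3 A^3 = P\left(a_0 I + a_1 J + a_2 J^2 + a_3 J^3\right)P^{-1}$$

$$= P \begin{bmatrix} a_0 + a_1\lambda + a_2\lambda^2 + a_3\lambda^3 & a_1 + 2a_2\lambda + 3a_3\lambda^2 & a_2 + 3a_3\lambda \\ 0 & a_0 + a_1\lambda + a_2\lambda^2 + a_3\lambda^3 & a_1 + 2a_2\lambda + 3a_3\lambda^2 \\ 0 & 0 & a_0 + a_1\lambda + a_2\lambda^2 + a_3\lambda^3 \end{bmatrix} P^{-1}$$

$$= P \begin{bmatrix} f(\lambda) & \dfrac{d}{d\lambda}f(\lambda) & \dfrac{1}{2!}\dfrac{d^2}{d\lambda^2}f(\lambda) \\ 0 & f(\lambda) & \dfrac{d}{d\lambda}f(\lambda) \\ 0 & 0 & f(\lambda) \end{bmatrix} P^{-1}$$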

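(2) A general case

For a general polynomial $g(x) = a_0 + a_1 x + a_2 x^2 + a_3 x^3 + a_4 x^4 + \cdots$ and a single Jordan block $J$ with eigenvalue $\lambda$, $A = PJP^{-1}$:

$$g(A) = a_0 I + a_1 A + a_2 A^2 + a_3 A^3 + a_4 A^4 + \cdots = P\left(a_0 I + a_1 J + a_2 J^2 + a_3 J^3 + a_4 J^4 + \cdots\right)P^{-1} = P\,g(J)\,P^{-1},$$

where $g(J)$ is upper triangular with first row

$$g(\lambda),\ \frac{1}{1!}\frac{d}{d\lambda}g(\lambda),\ \frac{1}{2!}\frac{d^2}{d\lambda^2}g(\lambda),\ \frac{1}{3!}\frac{d^3}{d\lambda^3}g(\lambda),\ \frac{1}{4!}\frac{d^4}{d\lambda^4}g(\lambda),\ \ldots$$

and each following row is the previous one shifted right by one position.

For general $J$:

$$J = \begin{bmatrix} J_1 & 0 & \cdots & 0 \\ 0 & J_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_r \end{bmatrix}, \qquad J^k = \begin{bmatrix} J_1^k & 0 & \cdots & 0 \\ 0 & J_2^k & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_r^k \end{bmatrix}$$

$$g(A) = P\,g(J)\,P^{-1}, \qquad g(J) = \begin{bmatrix} g(J_1) & 0 & \cdots & 0 \\ 0 & g(J_2) & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & g(J_r) \end{bmatrix}$$

For $J_i = \operatorname{diag}(J_{i,1}, J_{i,2}, \ldots, J_{i,n_i})$, $\ g(J_i) = \operatorname{diag}\left(g(J_{i,1}), g(J_{i,2}), \ldots, g(J_{i,n_i})\right)$.

$\dim(J_i) = \dim(J_{i,1}) + \dim(J_{i,2}) + \cdots + \dim(J_{i,n_i})$. Let $\bar{n}_i = \max_{1 \le j \le n_i} \dim(J_{i,j})$.

So $g(J_i)$ is composed of $g(\lambda_i),\ \dfrac{1}{1!}\dfrac{d}{d\lambda}g(\lambda_i),\ \ldots,\ \dfrac{1}{(\bar{n}_i - 1)!}\dfrac{d^{\bar{n}_i - 1}}{d\lambda^{\bar{n}_i - 1}}g(\lambda_i)$.

$n_i$: geometric multiplicity. $\bar{n}_i \le m_i$ (algebraic multiplicity).

For each $\lambda_i$, if

$$g(\lambda_i) = 0,\quad \frac{d}{d\lambda}g(\lambda_i) = 0,\quad \ldots,\quad \frac{1}{(m_i - 1)!}\frac{d^{m_i - 1}}{d\lambda^{m_i - 1}}g(\lambda_i) = 0,$$

then $g(J_i) = 0$. If this holds for all $i$, then $g(J) = 0 \Rightarrow g(A) = 0$.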

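(3) Characteristic equation.

Consider a particular polynomial, $g(\lambda) = |A - \lambda I| = (\lambda_1 - \lambda)^{m_1} \cdots (\lambda_r - \lambda)^{m_r}$. We can easily get $g(A) = 0$ (Theorem 3.4, pp. 63).

After normalizing the leading coefficient, $g(A) = A^n + \alpha_1 A^{n-1} + \alpha_2 A^{n-2} + \cdots + \alpha_n I = 0$. So

$$A^n = -\alpha_1 A^{n-1} - \alpha_2 A^{n-2} - \cdots - \alpha_n I$$

and

$$A^k = \beta_{k,1} A^{n-1} + \beta_{k,2} A^{n-2} + \cdots + \beta_{k,n} I, \qquad k \ge n.$$

Any polynomial of $A$, $f(A)$, is equal to a polynomial of $A$ of degree at most $n - 1$.

Another way to get the above result:

$$f(\lambda) = q(\lambda)\,g(\lambda) + h(\lambda), \qquad \deg h < \deg g = n$$

$$f(A) = q(A)\,g(A) + h(A) = q(A) \cdot 0 + h(A) = h(A).$$

What is $h(\lambda)$? $h(\lambda) = \beta_0 + \beta_1 \lambda + \cdots + \beta_{n-1}\lambda^{n-1}$. What are $\beta_0, \beta_1, \ldots, \beta_{n-1}$?

For $\lambda_i$, we get the following $m_i$ equations (all terms containing $g$ vanish at $\lambda_i$ up to the $(m_i - 1)$-th derivative):

$$f(\lambda_i) = q(\lambda_i)\,g(\lambda_i) + h(\lambda_i) = h(\lambda_i)$$

$$\frac{d}{d\lambda}f(\lambda_i) = \frac{d}{d\lambda}\left[q(\lambda)\,g(\lambda)\right]_{\lambda_i} + \frac{d}{d\lambda}h(\lambda_i) = \frac{d}{d\lambda}h(\lambda_i)$$

$$\vdots$$

$$\frac{d^{m_i - 1}}{d\lambda^{m_i - 1}}f(\lambda_i) = \frac{d^{m_i - 1}}{d\lambda^{m_i - 1}}\left[q(\lambda)\,g(\lambda)\right]_{\lambda_i} + \frac{d^{m_i - 1}}{d\lambda^{m_i - 1}}h(\lambda_i) = \frac{d^{m_i - 1}}{d\lambda^{m_i - 1}}h(\lambda_i)$$

For all $\lambda_i$ ($i = 1, \ldots, r$), we have $m_1 + m_2 + \cdots + m_r = n$ equations, which are enough to determine $\beta_0, \beta_1, \ldots, \beta_{n-1}$. Then it is easy to see $f(A) = h(A)$.

Any analytic function $f$ can be expanded as a power series by Taylor's series, so $f(A)$ can be computed accordingly by $h(A)$.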

Example 3.8, pp. 65.
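The interpolation idea ($f(A) = h(A)$ with $h$ matched to $f$ on the eigenvalues) can be tried on a small matrix. This is a minimal sketch for the distinct-eigenvalue case only, with $f(\lambda) = e^{\lambda}$; the matrix `A` is a hypothetical example and the diagonalization-based reference value is used purely as a cross-check.

```python
import numpy as np

A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])   # hypothetical example, eigenvalues -1 and -2
n = A.shape[0]
eigvals, P = np.linalg.eig(A)

# Solve h(lambda_i) = f(lambda_i) for beta_0..beta_{n-1}, with f = exp.
V = np.vander(eigvals, N=n, increasing=True)  # rows: [1, lambda_i, ...]
beta = np.linalg.solve(V, np.exp(eigvals))

# h(A) = beta_0 I + beta_1 A + ... + beta_{n-1} A^{n-1}
hA = sum(b * np.linalg.matrix_power(A, i) for i, b in enumerate(beta))

# Reference value via diagonalization: f(A) = P f(J) P^{-1}.
fA = P @ np.diag(np.exp(eigvals)) @ np.linalg.inv(P)
```

For repeated eigenvalues the Vandermonde system becomes singular, and the derivative conditions listed above would have to be added instead.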

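3. Minimal polynomial

$$J_i = \begin{bmatrix} J_{i,1} & 0 & \cdots & 0 \\ 0 & J_{i,2} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_{i,n_i} \end{bmatrix}, \qquad g(J_i) = \begin{bmatrix} g(J_{i,1}) & 0 & \cdots & 0 \\ 0 & g(J_{i,2}) & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & g(J_{i,n_i}) \end{bmatrix}$$

Let $\bar{n}_i$ denote the size of the largest Jordan block of $\lambda_i$.

$g(J_i) = 0 \Rightarrow g(\lambda)$ must be divisible by $(\lambda - \lambda_i)^{\bar{n}_i}$.

$g(J) = 0 \Rightarrow g(\lambda)$ must be divisible by $p(\lambda) = \prod_i (\lambda - \lambda_i)^{\bar{n}_i}$.

$p$ is the lowest-order polynomial that guarantees $p(A) = 0$.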

3.7 Lyapunov Equation

$AM + MB = C$

All entries of $AM$ and $MB$ are linear functions of the entries of $M$. So we have a linear equation. Rewrite it into the form $Ax = y$ and find its solution.

A more systematic way is to utilize the Kronecker product so that the linear matrix equation is transformed into a common vector equation.
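The Kronecker-product route can be sketched as follows, assuming NumPy and the column-stacking convention for $\operatorname{vec}(\cdot)$. The function name `solve_sylvester_kron` and the matrices are hypothetical illustrations; the identities used are $\operatorname{vec}(AM) = (I \otimes A)\operatorname{vec}(M)$ and $\operatorname{vec}(MB) = (B^T \otimes I)\operatorname{vec}(M)$.

```python
import numpy as np

def solve_sylvester_kron(A, B, C):
    """Solve A M + M B = C through vec(M), using column-stacking vec."""
    n, m = A.shape[0], B.shape[0]
    # vec(A M) = (I_m kron A) vec(M),  vec(M B) = (B^T kron I_n) vec(M)
    K = np.kron(np.eye(m), A) + np.kron(B.T, np.eye(n))
    vec_M = np.linalg.solve(K, C.flatten(order="F"))
    return vec_M.reshape((n, m), order="F")

# Hypothetical data; A has eigenvalues 1, 3 and B has eigenvalues 4, 5,
# so no eigenvalue of A is the negative of an eigenvalue of B.
A = np.array([[1.0, 2.0], [0.0, 3.0]])
B = np.array([[4.0, 0.0], [1.0, 5.0]])
C = np.array([[1.0, 0.0], [0.0, 1.0]])
M = solve_sylvester_kron(A, B, C)
```

The linear system has size $nm \times nm$, so this approach is meant for small examples rather than large-scale use.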

3.8 Some Useful Formulas

$\operatorname{rank}(AB) \le \min(\operatorname{rank}(A), \operatorname{rank}(B))$

3.9 Quadratic Form and Positive Definiteness

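$M = M^T$: the eigenvalues $\lambda(M)$ are all real and simple (no generalized eigenvector is needed).

(1) Proof: Suppose $Mx = \lambda x$, $x \ne 0$, where $\lambda$ is complex. Then

$$x^* M x = \lambda x^* x.$$

Taking the conjugate transpose of the above equation yields

$$\bar{\lambda} x^* x = x^* M^* x = x^* M x = \lambda x^* x.$$

Then $(\lambda - \bar{\lambda})\,x^* x = 0$, so $\lambda = \bar{\lambda}$, i.e., $\lambda$ is real.

$M > 0 \Leftrightarrow \lambda_i(M) > 0$ for all $i$.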

(2) No generalized eigenvector: 3.67-3.68, pp. 73.

3.10 SVD

pp 76-77.

3.11 Norms of Matrices

Induced norms.

Homework: 3.1, 3.3, 3.13, 3.14, 3.17, 3.18, 3.21, 3.31, 3.32, 3.33, 3.38


Chapter 4 State-Space Solutions and Realizations

4.1 Introduction

We have a causal linear system

$$y(t) = \int_{t_0}^{t} g(t, \tau)\,u(\tau)\,d\tau \quad (\text{relaxed at } t_0)$$

Distributed or lumped.

Under a given input $u(t)$, what is the output $y(t)$?

1. Discretization

$$y(k\Delta) = \sum_{m=k_0}^{k} g(k\Delta, m\Delta)\,u(m\Delta)\,\Delta$$

: conflict between computational complexity and precision

2. Laplace method (only for LTI systems)

$$\hat{y}(s) = \hat{g}(s)\,\hat{u}(s), \qquad \hat{g}(s) = \mathcal{L}[g(t)], \qquad y(t) = \mathcal{L}^{-1}[\hat{y}(s)]$$

It requires computing poles and carrying out partial fraction expansion, and it is sensitive to small changes in data.

3. State-space method (only for lumped systems)

Integration → differentiation

$$\dot{x}(t) = A(t)x(t) + B(t)u(t), \qquad y(t) = C(t)x(t) + D(t)u(t) \qquad \text{(ODE, ordinary differential equation)}$$

The existence and uniqueness of the solution of an ODE:

$$\dot{x}(t) = h(x(t), t), \qquad x(t_0) = x_0 \in \mathbb{R}^n$$

If $\left\| \dfrac{\partial h(x, t)}{\partial x} \right\| \le B$ for $t \in [t_1, t_2]$ ($t_0 \in [t_1, t_2]$), then the ODE has a unique solution $x(t)$ ($t \in [t_1, t_2]$).

It is possible that $t_0 > t_1$, i.e., the equation can be solved backwards.

4.2 Solution of LTI state equations

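$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

1. Some formulas regarding $e^{At}$

$$e^{At} = I + \sum_{k=1}^{\infty} \frac{1}{k!}(At)^k = I + \sum_{k=1}^{\infty} \frac{t^k}{k!}A^k$$

(1) $\dfrac{d}{dt}e^{At}$:

$$\frac{d}{dt}e^{At} = 0 + \sum_{k=1}^{\infty} \frac{t^{k-1}}{(k-1)!}A^k = \sum_{l=0}^{\infty} \frac{t^l}{l!}A^{l+1} \quad (l = k - 1)$$

$$= A\sum_{l=0}^{\infty} \frac{t^l}{l!}A^l = Ae^{At}, \qquad = \left(\sum_{l=0}^{\infty} \frac{t^l}{l!}A^l\right)A = e^{At}A$$

$$\frac{d}{dt}e^{At} = Ae^{At} = e^{At}A$$

(2) $\mathcal{L}[e^{At}]$:

$$\mathcal{L}[e^{At}] = \mathcal{L}\left[I + \sum_{k=1}^{\infty} \frac{t^k}{k!}A^k\right] = I\,\mathcal{L}[1] + \sum_{k=1}^{\infty} \frac{A^k}{k!}\mathcal{L}[t^k] = \frac{1}{s}I + \sum_{k=1}^{\infty} \frac{A^k}{k!}\cdot\frac{k!}{s^{k+1}} = \frac{1}{s}\left(I + \sum_{k=1}^{\infty} \left(\frac{A}{s}\right)^k\right)$$

Let $Q = I + \sum_{k=1}^{\infty} \left(\dfrac{A}{s}\right)^k$. Then

$$\frac{A}{s}Q = \frac{A}{s} + \left(\frac{A}{s}\right)^2 + \cdots = Q - I \ \Rightarrow\ \left(I - \frac{A}{s}\right)Q = I \ \Rightarrow\ Q = \left(I - \frac{A}{s}\right)^{-1} = s(sI - A)^{-1}$$

So $\mathcal{L}[e^{At}] = \dfrac{1}{s}Q = (sI - A)^{-1}$, and $e^{At} = \mathcal{L}^{-1}\left[(sI - A)^{-1}\right]$.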

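(3) $e^{At}$:

$$A = QJQ^{-1}, \qquad J = \begin{bmatrix} \lambda & 1 & 0 \\ 0 & \lambda & 1 \\ 0 & 0 & \lambda \end{bmatrix} = \lambda I + T, \qquad T^k = 0\ (k \ge 3)$$

$$e^{At} = Qe^{Jt}Q^{-1}$$

Since $(\lambda I)T = T(\lambda I)$,

$$e^{Jt} = e^{\lambda I t}e^{Tt} = e^{\lambda t}I\left(I + tT + \frac{1}{2!}t^2T^2\right) = e^{\lambda t}\begin{bmatrix} 1 & t & \frac{1}{2!}t^2 \\ 0 & 1 & t \\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} e^{\lambda t} & te^{\lambda t} & \frac{1}{2!}t^2e^{\lambda t} \\ 0 & e^{\lambda t} & te^{\lambda t} \\ 0 & 0 & e^{\lambda t} \end{bmatrix}$$

Another two ways to compute $e^{Jt}$:

(i) $e^{Jt} = c_0(t)I + c_1(t)J + c_2(t)J^2$, where $c_0(t), c_1(t), c_2(t)$ are obtained from $e^{xt} = c_0(t) + c_1(t)x + c_2(t)x^2$, matched together with its first two derivatives in $x$ at $x = \lambda$.

(ii) $e^{Jt} = \mathcal{L}^{-1}\left[(sI - J)^{-1}\right]$, $\ (sI - J)^{-1} = ?$

$(sI - J)^{-1} = \beta_0(s)I + \beta_1(s)J + \beta_2(s)J^2$, obtained in the same way from $(s - x)^{-1} = \beta_0(s) + \beta_1(s)x + \beta_2(s)x^2$.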

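2. Solution of $\dot{x}(t) = Ax(t) + Bu(t)$, $y(t) = Cx(t) + Du(t)$, $x(0) = x_0$

(1) Verify that its solution is

$$x(t) = e^{At}x_0 + \int_0^t e^{A(t-\tau)}Bu(\tau)\,d\tau.$$

First, $e^{A(t-\tau)} = e^{At}e^{-A\tau}$: each of $e^{At}$, $e^{-A\tau}$ and $e^{A(t-\tau)}$ is a polynomial in $A$ of degree at most $n - 1$ whose coefficients are fixed by the corresponding scalar function on the spectrum of $A$, so the scalar identity $e^{x(t-\tau)} = e^{xt}e^{-x\tau}$ carries over to matrices. Hence

$$x(t) = e^{At}x_0 + e^{At}\int_0^t e^{-A\tau}Bu(\tau)\,d\tau$$

$$\frac{d}{dt}x(t) = \left(\frac{d}{dt}e^{At}\right)x_0 + \left(\frac{d}{dt}e^{At}\right)\int_0^t e^{-A\tau}Bu(\tau)\,d\tau + e^{At}\frac{d}{dt}\int_0^t e^{-A\tau}Bu(\tau)\,d\tau$$

$$= Ae^{At}x_0 + Ae^{At}\int_0^t e^{-A\tau}Bu(\tau)\,d\tau + e^{At}e^{-At}Bu(t)$$

$$= A\left[e^{At}x_0 + e^{At}\int_0^t e^{-A\tau}Bu(\tau)\,d\tau\right] + Bu(t) = Ax(t) + Bu(t)$$

(2) Laplace transform

$$\mathcal{L}[\dot{x}(t)] = \mathcal{L}[Ax(t) + Bu(t)] \ \Rightarrow\ s\hat{x}(s) - x_0 = A\hat{x}(s) + B\hat{u}(s)$$

$$\hat{x}(s) = (sI - A)^{-1}x_0 + (sI - A)^{-1}B\hat{u}(s)$$

$$x(t) = \mathcal{L}^{-1}[\hat{x}(s)] = \mathcal{L}^{-1}\left[(sI - A)^{-1}\right]x_0 + \mathcal{L}^{-1}\left[(sI - A)^{-1}B\hat{u}(s)\right]$$

$$= e^{At}x_0 + \mathcal{L}^{-1}\left[(sI - A)^{-1}\right] * \mathcal{L}^{-1}\left[B\hat{u}(s)\right] = e^{At}x_0 + \int_0^t e^{A(t-\tau)}Bu(\tau)\,d\tau$$

where $*$ denotes convolution.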

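(3) Zero-input response ($u(t) \equiv 0$)

$$x(t) = e^{At}x_0$$

When $A = QJQ^{-1}$, $J = \begin{bmatrix} \lambda & 1 & 0 \\ 0 & \lambda & 1 \\ 0 & 0 & \lambda \end{bmatrix}$,

$$x(t) = Qe^{Jt}Q^{-1}x_0 = Q\begin{bmatrix} e^{\lambda t} & te^{\lambda t} & \frac{1}{2!}t^2e^{\lambda t} \\ 0 & e^{\lambda t} & te^{\lambda t} \\ 0 & 0 & e^{\lambda t} \end{bmatrix}Q^{-1}x_0$$

So $x(t)$ is a linear combination of $e^{\lambda t}$, $te^{\lambda t}$, $t^2e^{\lambda t}$ (modes).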

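3. Discretization: $x(kT) = ?$

(1) $\dot{x}(t) \approx \dfrac{x((k+1)T) - x(kT)}{T}$, which gives

$$x((k+1)T) = (I + TA)\,x(kT) + TB\,u(kT)$$

(2) $x(t) = e^{At}x_0 + \int_0^t e^{A(t-\tau)}Bu(\tau)\,d\tau$, evaluated at $t = (k+1)T$:

$$x((k+1)T) = e^{A(k+1)T}x_0 + \int_0^{(k+1)T} e^{A((k+1)T-\tau)}Bu(\tau)\,d\tau$$

$$= e^{A(k+1)T}x_0 + \int_0^{kT} e^{A((k+1)T-\tau)}Bu(\tau)\,d\tau + \int_{kT}^{(k+1)T} e^{A((k+1)T-\tau)}Bu(\tau)\,d\tau$$

$$= e^{AT}\left[e^{AkT}x_0 + \int_0^{kT} e^{A(kT-\tau)}Bu(\tau)\,d\tau\right] + \int_{kT}^{(k+1)T} e^{A((k+1)T-\tau)}Bu(\tau)\,d\tau$$

$$= e^{AT}x(kT) + \int_{kT}^{(k+1)T} e^{A((k+1)T-\tau)}Bu(\tau)\,d\tau = e^{AT}x(kT) + \int_0^T e^{A(T-z)}Bu(z + kT)\,dz \quad (z = \tau - kT)$$

When $u(t) = u[k]$, $t \in [kT, (k+1)T)$,

$$x((k+1)T) = e^{AT}x(kT) + \left(\int_0^T e^{A(T-\tau)}\,d\tau\right)B\,u[k]$$

$\int_0^T e^{A(T-\tau)}\,d\tau = ?$ Let $f(x) = \int_0^T e^{x(T-\tau)}\,d\tau$; then $\int_0^T e^{A(T-\tau)}\,d\tau = f(A)$.

4. Discrete-time systems

pp. 92-93. Modes: $\lambda^k$, $k\lambda^{k-1}$, $k(k-1)\lambda^{k-2}$, $\ldots$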
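The two discretization schemes can be compared numerically. The sketch below is an illustration under stated assumptions, not the method of the notes: it evaluates $e^{AT}$ with a truncated Taylor series (helper `expm_taylor`, a hypothetical name) and obtains $\int_0^T e^{A(T-\tau)}d\tau\,B$ from the exponential of an augmented matrix, a standard trick swapped in here in place of evaluating $f(A)$.

```python
import numpy as np

def expm_taylor(M, terms=30):
    """Truncated Taylor series of the matrix exponential (fine for small ||M||)."""
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ M / k
        result = result + term
    return result

def discretize_zoh(A, B, T):
    """Return (Ad, Bd) with Ad = e^{AT} and Bd = (int_0^T e^{A s} ds) B."""
    n, p = B.shape
    # exp([[A, B], [0, 0]] T) = [[Ad, Bd], [0, I]]
    aug = np.zeros((n + p, n + p))
    aug[:n, :n] = A
    aug[:n, n:] = B
    E = expm_taylor(aug * T)
    return E[:n, :n], E[:n, n:]

# Hypothetical double integrator, sampled with T = 0.1.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
Ad, Bd = discretize_zoh(A, B, 0.1)
Ad_euler, Bd_euler = np.eye(2) + 0.1 * A, 0.1 * B   # method (1)
```

For this example the matrix `Ad` coincides with the Euler matrix $I + TA$ because $A^2 = 0$, while `Bd` picks up the extra $T^2/2$ term that method (1) drops.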

4.3 Equivalent state equations

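$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

1. (i) Similarity transformation:

New state $\bar{x} = Px \Rightarrow x = P^{-1}\bar{x}$

$$P^{-1}\dot{\bar{x}} = AP^{-1}\bar{x} + Bu \ \Rightarrow\ \dot{\bar{x}} = PAP^{-1}\bar{x} + PBu = \bar{A}\bar{x} + \bar{B}u$$

$$y = CP^{-1}\bar{x} + Du = \bar{C}\bar{x} + \bar{D}u$$

$$\bar{A} = PAP^{-1}, \quad \bar{B} = PB, \quad \bar{C} = CP^{-1}, \quad \bar{D} = D$$

Transfer functions: $G(s) = C(sI - A)^{-1}B + D$, $\ \bar{G}(s) = \bar{C}(sI - \bar{A})^{-1}\bar{B} + \bar{D}$

We can verify that $G(s) = \bar{G}(s)$, i.e., $(A, B, C, D) \sim (\bar{A}, \bar{B}, \bar{C}, \bar{D})$.

Why do we need the similarity transformation?

Simplification. Simpler $A$. Canonical forms in 4.3.1, pp. 97.

Acceptable signal magnitude (Example 4.5, pp. 99).

(ii) What does $G(s) = \bar{G}(s)$ mean?

$CA^mB = \bar{C}\bar{A}^m\bar{B}$, $m = 0, 1, 2, \ldots$ (Theorem 4.1)

$A$ and $\bar{A}$ may have different dimensions (Example 4.4, pp. 96).

To reduce cost, we prefer the lower dimension.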

4.4 Realizations

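$$y(t) = \int_0^t g(t - \tau)\,u(\tau)\,d\tau \quad \text{(linear, causal, time-invariant)} \qquad \Leftrightarrow \qquad \hat{y}(s) = \hat{G}(s)\,\hat{u}(s)$$

If, in addition, the system is lumped:

$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

Theorem 4.2 $\hat{G}(s)$ is realizable $\Leftrightarrow$ $\hat{G}(s)$ is a proper rational matrix

Proof: (i) Realizable $\Rightarrow$ proper rational: $\hat{G}(s) = C(sI - A)^{-1}B + D$.

(ii) $\hat{G}(s)$ is a proper rational matrix $\Rightarrow$ realizable?

$\hat{G}(s) = \hat{G}(\infty) + \hat{G}_{sp}(s)$, where $\hat{G}_{sp}(s)$ is strictly proper.

$$\hat{G}_{sp}(s) = \frac{1}{d(s)}N(s) = \frac{1}{d(s)}\left[N_1 s^{r-1} + N_2 s^{r-2} + \cdots + N_r\right], \qquad d(s) = s^r + \alpha_1 s^{r-1} + \cdots + \alpha_r$$

Define a state space equation

$$\dot{x} = \begin{bmatrix} -\alpha_1 I_p & -\alpha_2 I_p & \cdots & -\alpha_{r-1} I_p & -\alpha_r I_p \\ I_p & 0 & \cdots & 0 & 0 \\ 0 & I_p & \cdots & 0 & 0 \\ \vdots & & \ddots & & \vdots \\ 0 & 0 & \cdots & I_p & 0 \end{bmatrix} x + \begin{bmatrix} I_p \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix} u$$

$$y = \begin{bmatrix} N_1 & N_2 & \cdots & N_{r-1} & N_r \end{bmatrix} x + \hat{G}(\infty)\,u$$

$C(sI - A)^{-1}B = ?$

Let $Z = \begin{bmatrix} Z_1 \\ Z_2 \\ \vdots \\ Z_r \end{bmatrix} = (sI - A)^{-1}B$, i.e., $(sI - A)Z = B$:

$$\begin{bmatrix} (s + \alpha_1) I_p & \alpha_2 I_p & \cdots & \alpha_{r-1} I_p & \alpha_r I_p \\ -I_p & sI_p & \cdots & 0 & 0 \\ 0 & -I_p & \cdots & 0 & 0 \\ \vdots & & \ddots & & \vdots \\ 0 & 0 & \cdots & -I_p & sI_p \end{bmatrix} \begin{bmatrix} Z_1 \\ Z_2 \\ Z_3 \\ \vdots \\ Z_r \end{bmatrix} = \begin{bmatrix} I_p \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$

The second line: $Z_1 = sZ_2 = s^2 Z_3 = \cdots = s^{r-1} Z_r$

The third line: $Z_2 = sZ_3 = \cdots = s^{r-2} Z_r$

The last line: $Z_{r-1} = sZ_r$

The first line: $(s + \alpha_1)Z_1 + \alpha_2 Z_2 + \cdots + \alpha_r Z_r = I_p \ \Rightarrow\ \left(s^r + \alpha_1 s^{r-1} + \cdots + \alpha_r\right) Z_r = I_p$

$$Z_r = \frac{1}{d(s)} I_p, \quad Z_{r-1} = sZ_r = \frac{s}{d(s)} I_p, \quad \ldots, \quad Z_1 = \frac{s^{r-1}}{d(s)} I_p$$

$$C(sI - A)^{-1}B + D = \begin{bmatrix} N_1 & N_2 & \cdots & N_r \end{bmatrix} \begin{bmatrix} Z_1 \\ Z_2 \\ \vdots \\ Z_r \end{bmatrix} + \hat{G}(\infty) = \frac{1}{d(s)}\left[N_1 s^{r-1} + N_2 s^{r-2} + \cdots + N_r\right] + \hat{G}(\infty) = \hat{G}(s)$$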

Example 4.6 v.s. Example 4.7 pp. 103-105

We may get realizations with different dimensions.
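The construction in the proof can be tried out for the scalar case $p = 1$. This is a minimal sketch with a hypothetical helper `realize_siso` and a made-up transfer function; it only checks numerically, at one arbitrary test point, that $C(sI - A)^{-1}B + D$ reproduces the given rational function.

```python
import numpy as np

def realize_siso(num, den, d_inf=0.0):
    """Controllable-form realization of d_inf + num(s)/den(s).

    num = [N1, ..., Nr], den = [1, alpha1, ..., alphar] (monic denominator).
    """
    alpha = np.asarray(den[1:], dtype=float)
    r = len(alpha)
    A = np.zeros((r, r))
    A[0, :] = -alpha               # first row: -alpha1 ... -alphar
    A[1:, :-1] = np.eye(r - 1)     # shifted identity below it
    B = np.zeros((r, 1))
    B[0, 0] = 1.0
    C = np.asarray(num, dtype=float).reshape(1, r)
    D = np.array([[d_inf]])
    return A, B, C, D

# Hypothetical example: g(s) = 2 + (s + 3) / (s^2 + 3 s + 2)
A, B, C, D = realize_siso([1.0, 3.0], [1.0, 3.0, 2.0], d_inf=2.0)
s = 1.0 + 2.0j   # arbitrary test point
g_state = (C @ np.linalg.solve(s * np.eye(2) - A, B) + D)[0, 0]
g_direct = 2.0 + (s + 3.0) / (s**2 + 3.0 * s + 2.0)
```

The realization obtained this way has dimension $r$; as the examples cited above indicate, other realizations of the same transfer function can have a different dimension.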

4.5 Solution of linear time-varying (LTV) equations

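$$\dot{x} = A(t)x + B(t)u, \qquad y = C(t)x + D(t)u, \qquad x(0) = x_0$$

1. Model:

Time-varying parameters $A(t)$, $B(t)$, $C(t)$, $D(t)$.

What are its solutions?

(i) LTI: $A(t) = A$, $B(t) = B$, $C(t) = C$, $D(t) = D$.

$$x(t) = e^{At}x_0 + \int_0^t e^{A(t-\tau)}Bu(\tau)\,d\tau$$

(ii) LTV:

$$x(t) \ne e^{\int_0^t A(\tau)\,d\tau}x_0 + \int_0^t e^{\int_\tau^t A(s)\,ds}B(\tau)u(\tau)\,d\tau \quad \text{in most cases.}$$

Why? Take $u(t) \equiv 0$. Suppose the equality holds. Then $x(t) = e^{\int_0^t A(\tau)\,d\tau}x_0$.

$$e^{\int_0^t A(\tau)\,d\tau} = I + \int_0^t A(\tau)\,d\tau + \frac{1}{2!}\left(\int_0^t A(\tau)\,d\tau\right)\left(\int_0^t A(\tau)\,d\tau\right) + \cdots$$

$$\frac{d}{dt}e^{\int_0^t A(\tau)\,d\tau} = 0 + A(t) + \frac{1}{2!}A(t)\int_0^t A(\tau)\,d\tau + \frac{1}{2!}\left(\int_0^t A(\tau)\,d\tau\right)A(t) + \cdots$$

$A(t)\int_0^t A(\tau)\,d\tau \ne \left(\int_0^t A(\tau)\,d\tau\right)A(t)$ in most cases.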


0

0 0 t 0 0

1 2

,

d

2

2

2 t

t t 0

E.g. At

0 0 0

t

1 2

At 0 A d

2

t

t

2 t

0

A d At 1 t 2

2

0 0

1 3 1 4

t

t

3 2

0

1 5

t

3

0 0 0 0

1 3

1

t t t 2 t 4

3

3

0

1 5

t

3

t

0

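So $A(t)\int_0^t A(\tau)\,d\tau \ne \left(\int_0^t A(\tau)\,d\tau\right)A(t)$ and

$$\frac{d}{dt}e^{\int_0^t A(\tau)\,d\tau} \ne A(t)\,e^{\int_0^t A(\tau)\,d\tau}.$$

$x(t) = e^{\int_0^t A(\tau)\,d\tau}x_0$ is not a solution to the T-V equation.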

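2. Zero-input solutions of a T-V equation.

$$\dot{x} = A(t)x, \qquad x(t_0) = x_0 \in \mathbb{R}^n$$

$x(t_0) = x_0$ → Linear System → $x(t)$

Consider $\{x_0^{(1)}, \ldots, x_0^{(n)}\}$: a set of linearly independent vectors, a basis.

$x(t_0) = x_0^{(i)}$ → Linear System → $x(t) = x^{(i)}(t)$

Linearity? Verify it.

$x(t_0) = \alpha_i x_0^{(i)} + \alpha_j x_0^{(j)}$ → Linear System → $x(t) = \alpha_i x^{(i)}(t) + \alpha_j x^{(j)}(t)$

$x(t_0) = 0$ → Linear System → $x(t) \equiv 0$

More generally, if $x(t_0) = \left[x_0^{(1)}, \ldots, x_0^{(n)}\right]\begin{bmatrix} \alpha_1 \\ \vdots \\ \alpha_n \end{bmatrix}$, then

$$x(t) = \left[x^{(1)}(t), \ldots, x^{(n)}(t)\right]\begin{bmatrix} \alpha_1 \\ \vdots \\ \alpha_n \end{bmatrix} = X(t)\begin{bmatrix} \alpha_1 \\ \vdots \\ \alpha_n \end{bmatrix}$$

Fundamental matrix: $X(t) = \left[x^{(1)}(t), \ldots, x^{(n)}(t)\right]$

$|X(t)| \ne 0$, $\forall t$. Why?

(i) $t = t_0$: $|X(t_0)| = \left|\left[x_0^{(1)}, \ldots, x_0^{(n)}\right]\right| \ne 0$.

(ii) $t > t_0$: $|X(t)| \ne 0$?

Suppose $|X(t_1)| = 0$ for some $t_1 > t_0$, with $X(t_1) = \left[x^{(1)}(t_1), \ldots, x^{(n)}(t_1)\right]$. Then there exists $\alpha = [\alpha_1, \ldots, \alpha_n]^T \ne 0$ such that $X(t_1)\alpha = 0$.

By linearity of the ODE system,

$x(t_0) = X(t_0)\alpha$ → Linear System → $x(t_1) = X(t_1)\alpha$

But in this case $x(t_1) = X(t_1)\alpha = 0$. Solving backwards from $t_1$, the unique solution is zero, so $x(t_0) = 0$, i.e., $\left[x_0^{(1)}, \ldots, x_0^{(n)}\right]\alpha = 0$ with $\alpha \ne 0$.

So $|X(t_0)| = \left|\left[x_0^{(1)}, \ldots, x_0^{(n)}\right]\right| = 0$. Contradiction.

(iii) $t < t_0$: $|X(t)| \ne 0$?

Note that an ODE can be solved backwards.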

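3. State transition matrix

When $|X(t_0)| \ne 0$, $|X(t)| \ne 0$, $\forall t$.

So we can define a state transition matrix $\Phi(t, t_0) = X(t)X^{-1}(t_0)$. It is possible that $t < t_0$.

(1) $\Phi(t, t_1)\,\Phi(t_1, t_0) = X(t)X^{-1}(t_1)\,X(t_1)X^{-1}(t_0) = X(t)X^{-1}(t_0) = \Phi(t, t_0)$; $t$, $t_1$, $t_0$ can have arbitrary relationships.

(2) $\Phi(t, t) = X(t)X^{-1}(t) = I$

(3) $\dfrac{\partial}{\partial t}\Phi(t, t_0) = ?$

$$\frac{d}{dt}X(t) = \left[\frac{d}{dt}x^{(1)}(t), \ldots, \frac{d}{dt}x^{(n)}(t)\right] = \left[A(t)x^{(1)}(t), \ldots, A(t)x^{(n)}(t)\right] = A(t)\left[x^{(1)}(t), \ldots, x^{(n)}(t)\right] = A(t)X(t)$$

$$\frac{\partial}{\partial t}\Phi(t, t_0) = \frac{\partial}{\partial t}\left[X(t)X^{-1}(t_0)\right] = \dot{X}(t)X^{-1}(t_0) = A(t)X(t)X^{-1}(t_0) = A(t)\,\Phi(t, t_0)$$

Example: $\dot{x}(t) = \begin{bmatrix} 0 & 0 \\ t & 0 \end{bmatrix}x(t)$

$$\dot{x}_1 = 0 \ \Rightarrow\ x_1(t) = x_1(t_0)$$

$$\dot{x}_2 = t\,x_1(t) = t\,x_1(t_0) \ \Rightarrow\ x_2(t) = x_2(t_0) + \frac{1}{2}\left(t^2 - t_0^2\right)x_1(t_0)$$

(i) $x^{(1)}(t_0) = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \Rightarrow x^{(1)}(t) = \begin{bmatrix} 1 \\ \frac{1}{2}(t^2 - t_0^2) \end{bmatrix}$

(ii) $x^{(2)}(t_0) = \begin{bmatrix} 0 \\ 1 \end{bmatrix} \Rightarrow x^{(2)}(t) = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$

$$X(t) = \begin{bmatrix} 1 & 0 \\ \frac{1}{2}(t^2 - t_0^2) & 1 \end{bmatrix}, \qquad X(t_0) = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

$$\Phi(t, t_0) = X(t)X^{-1}(t_0) = \begin{bmatrix} 1 & 0 \\ \frac{1}{2}(t^2 - t_0^2) & 1 \end{bmatrix}$$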
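The closed-form transition matrix of this example can be cross-checked by integrating $\dot{X} = A(t)X$, $X(t_0) = I$ numerically. The sketch below uses a hand-written classical Runge-Kutta (RK4) loop; the time instants and the step count are arbitrary illustrative choices.

```python
import numpy as np

def A_of_t(t):
    """Time-varying system matrix of the example, A(t) = [[0, 0], [t, 0]]."""
    return np.array([[0.0, 0.0],
                     [t,   0.0]])

def transition_matrix(t0, t1, steps=1000):
    """Integrate dX/dt = A(t) X from X(t0) = I with classical RK4."""
    X = np.eye(2)
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = A_of_t(t) @ X
        k2 = A_of_t(t + h / 2) @ (X + h / 2 * k1)
        k3 = A_of_t(t + h / 2) @ (X + h / 2 * k2)
        k4 = A_of_t(t + h) @ (X + h * k3)
        X = X + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return X

t0, t1 = 1.0, 3.0   # hypothetical time instants
Phi_numeric = transition_matrix(t0, t1)
Phi_closed = np.array([[1.0, 0.0],
                       [0.5 * (t1**2 - t0**2), 1.0]])
```

Since $X(t_0) = I$ here, the integrated $X(t_1)$ is $\Phi(t_1, t_0)$ itself, and swapping `t0` and `t1` gives the backward transition matrix.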

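4. Solution of an LTV equation.

$$\dot{x} = A(t)x + B(t)u, \qquad y = C(t)x + D(t)u, \qquad x(t_0) = x_0$$

$$x(t) = \Phi(t, t_0)x_0 + \int_{t_0}^{t} \Phi(t, \tau)B(\tau)u(\tau)\,d\tau. \quad \text{Verify it.}$$

(i) $x(t_0) = \Phi(t_0, t_0)x_0 + \int_{t_0}^{t_0} \Phi(t_0, \tau)B(\tau)u(\tau)\,d\tau = Ix_0 + 0 = x_0$

(ii)

$$\frac{d}{dt}x(t) = \frac{\partial}{\partial t}\Phi(t, t_0)x_0 + \int_{t_0}^{t} \frac{\partial}{\partial t}\Phi(t, \tau)B(\tau)u(\tau)\,d\tau + \Phi(t, t)B(t)u(t)$$

$$= A(t)\Phi(t, t_0)x_0 + \int_{t_0}^{t} A(t)\Phi(t, \tau)B(\tau)u(\tau)\,d\tau + B(t)u(t)$$

$$= A(t)\left[\Phi(t, t_0)x_0 + \int_{t_0}^{t} \Phi(t, \tau)B(\tau)u(\tau)\,d\tau\right] + B(t)u(t) = A(t)x(t) + B(t)u(t)$$

$$y(t) = C(t)\Phi(t, t_0)x_0 + C(t)\int_{t_0}^{t} \Phi(t, \tau)B(\tau)u(\tau)\,d\tau + D(t)u(t)$$

With $x(t_0) = 0$:

$$y(t) = \int_{t_0}^{t} \left[C(t)\Phi(t, \tau)B(\tau) + D(t)\delta(t - \tau)\right]u(\tau)\,d\tau = \int_{t_0}^{t} G(t, \tau)u(\tau)\,d\tau$$

$$G(t, \tau) = C(t)\Phi(t, \tau)B(\tau) + D(t)\delta(t - \tau)$$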

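4.5.1 Discrete-time case

$$x[k+1] = A[k]x[k] + B[k]u[k], \qquad y[k] = C[k]x[k] + D[k]u[k]$$

State transition matrix:

$$\Phi[k, k_0] = A[k-1]A[k-2]\cdots A[k_0],\ k > k_0, \qquad \Phi[k, k] = I$$

Solution:

$$x[k] = \Phi[k, k_0]x[k_0] + \sum_{m=k_0}^{k-1} \Phi[k, m+1]B[m]u[m]$$

$\Phi[k, k_0]$ could be singular.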

4.6. Equivalent time-varying equations

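1. T-V transformation:

$$\dot{x} = A(t)x + B(t)u, \qquad y = C(t)x + D(t)u$$

Let $\bar{x}(t) = P(t)x(t)$, $|P(t)| \ne 0$, $\forall t$. Then $x(t) = P^{-1}(t)\bar{x}(t)$.

(i) $\dfrac{d}{dt}P^{-1}(t) = ?$

$$P(t)P^{-1}(t) = I \ \Rightarrow\ \frac{d}{dt}\left[P(t)P^{-1}(t)\right] = 0 \ \Rightarrow\ \left[\frac{d}{dt}P(t)\right]P^{-1}(t) + P(t)\frac{d}{dt}P^{-1}(t) = 0$$

$$\frac{d}{dt}P^{-1}(t) = -P^{-1}(t)\left[\frac{d}{dt}P(t)\right]P^{-1}(t)$$

(ii) $\dot{\bar{x}} = \dot{P}(t)x + P(t)\dot{x} = \dot{P}(t)x + P(t)\left[A(t)x + B(t)u\right]$

$$= \dot{P}(t)P^{-1}(t)\bar{x} + P(t)A(t)P^{-1}(t)\bar{x} + P(t)B(t)u = \left[\dot{P}(t)P^{-1}(t) + P(t)A(t)P^{-1}(t)\right]\bar{x} + P(t)B(t)u$$

This gives $\bar{A}(t) = \left[\dot{P}(t) + P(t)A(t)\right]P^{-1}(t)$, $\bar{B}(t) = P(t)B(t)$, $\bar{C}(t) = C(t)P^{-1}(t)$, $\bar{D}(t) = D(t)$.

2. Bounded $\bar{x}$ $\Rightarrow$ bounded $x$? Not necessarily.

$$\dot{x}(t) = x(t) \ \Rightarrow\ x(t) = e^{t}x_0 \ \Rightarrow\ \lim_{t \to \infty} |x(t)| = \infty$$

$$\bar{x}(t) = e^{-2t}x(t) = e^{-t}x_0 \ \Rightarrow\ \lim_{t \to \infty} \bar{x}(t) = 0$$

Source of the problem: unbounded $P(t) = e^{2t}$ (here $x(t) = P(t)\bar{x}(t)$).

3. Lyapunov transformation: Both $P(t)$ and $P^{-1}(t)$ are bounded for any $t$.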

4.7. Time-varying realizations

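$$y(t) = \int_{t_0}^{t} G(t, \tau)u(\tau)\,d\tau$$

Can it be realized by a time-varying state equation?

Theorem 4.5 $G(t, \tau)$ is realizable $\Leftrightarrow$ $G(t, \tau) = M(t)N(\tau) + D(t)\delta(t - \tau)$

Proof: (i) $G(t, \tau)$ is realizable. Then

$$\dot{x} = A(t)x + B(t)u, \qquad y = C(t)x + D(t)u$$

$$y(t) = \int_{t_0}^{t} G(t, \tau)u(\tau)\,d\tau, \qquad G(t, \tau) = C(t)\Phi(t, \tau)B(\tau) + D(t)\delta(t - \tau), \qquad \Phi(t, \tau) = X(t)X^{-1}(\tau).$$

So $G(t, \tau) = C(t)X(t)\,X^{-1}(\tau)B(\tau) + D(t)\delta(t - \tau)$, i.e., $M(t) = C(t)X(t)$ and $N(\tau) = X^{-1}(\tau)B(\tau)$.

(ii) $G(t, \tau) = M(t)N(\tau) + D(t)\delta(t - \tau)$. Then take

$$\dot{x} = N(t)u(t), \qquad y = M(t)x(t) + D(t)u(t)$$

Here $A(t) = 0$, so $\Phi(t, \tau) = I$ and

$$x(t) = \int_{t_0}^{t} N(\tau)u(\tau)\,d\tau$$

$$y(t) = M(t)\int_{t_0}^{t} N(\tau)u(\tau)\,d\tau + D(t)u(t) = \int_{t_0}^{t} \left[M(t)N(\tau) + D(t)\delta(t - \tau)\right]u(\tau)\,d\tau$$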

Homework: 4.2, 4.5, 4.8, 4.12, 4.15, 4.17, 4.19, 4.20, 4.22, 4.26


Chapter 5 Stability

5.1 Introduction

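$\{x_0,\ u(t), t \ge 0\}$ → System → $y(t)$

Zero-input response: $\{x_0,\ u(t) \equiv 0, t \ge 0\}$ → System → $y(t)$

Zero-state response: $\{x_0 = 0,\ u(t), t \ge 0\}$ → System → $y(t)$

$$\begin{bmatrix} x_0 \\ u(t) \end{bmatrix} = \begin{bmatrix} x_0 \\ 0 \end{bmatrix} + \begin{bmatrix} 0 \\ u(t) \end{bmatrix}$$

Response = zero-input response + zero-state response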

5.2 Input-Output stability of LTI systems (zero-state response)

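1. Definitions

(1) Model: $y(t) = \int_0^t u(\tau)\,g(t - \tau)\,d\tau = \int_0^t u(t - \tau)\,g(\tau)\,d\tau$ (causal)

(2) Boundedness:

Bounded input: $|u(t)| \le u_m < \infty$

Bounded output: $|y(t)| \le y_m < \infty$

(3) BIBO stability (scalar case, Theorem 5.1, pp. 122)

Bounded inputs yield bounded outputs $\Leftrightarrow$ $\int_0^\infty |g(t)|\,dt \le M < \infty$

Proof: (i) $\int_0^\infty |g(t)|\,dt \le M < \infty \Rightarrow$ BIBO stable?

$$|y(t)| = \left|\int_0^t u(t - \tau)\,g(\tau)\,d\tau\right| \le \int_0^t |u(t - \tau)|\,|g(\tau)|\,d\tau \le u_m \int_0^t |g(\tau)|\,d\tau \le M u_m =: y_m$$

(ii) BIBO stable $\Rightarrow \int_0^\infty |g(t)|\,dt \le M < \infty$?

BIBO stability gives $|y(t)| \le y_m$ for every input with $|u(t)| \le 1$. Suppose $\int_0^\infty |g(t)|\,dt = \infty$. Then

$$\exists T,\ \int_0^T |g(t)|\,dt \ge 2y_m.$$

Let $u(t) = \begin{cases} \operatorname{sgn}(g(T - t)), & 0 \le t \le T \\ 0, & \text{otherwise.} \end{cases}$ Then

$$y(T) = \int_0^T u(T - \tau)\,g(\tau)\,d\tau = \int_0^T |g(\tau)|\,d\tau \ge 2y_m > y_m.$$

We get a contradiction. So $\int_0^\infty |g(t)|\,dt \le M < \infty$.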

2. The outputs under several special inputs (Theorem 5.2)

$u(t)$ → $g(t)$ → $y(t)$

(1) Step input, the output converges to a constant function.

(2) Sinusoidal input, the output converges to a sinusoidal function.

(3) Any input not converging to 0, the output will converge to the “unstable” input mode.

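3. Proper rational $\hat{g}(s)$

Theorem 5.3 (pp. 124) BIBO stable $\Leftrightarrow$ all poles are in the open left half plane

$$\hat{g}(s) = \hat{g}(\infty) + \hat{g}_{sp}(s) = \hat{g}(\infty) + \frac{k_{1,1}}{s - p_1} + \cdots + \frac{k_{1,n_1}}{(s - p_1)^{n_1}} + \cdots$$

$$g(t) = \mathcal{L}^{-1}[\hat{g}(s)] = \hat{g}(\infty)\,\delta(t) + k_{1,1}e^{p_1 t} + \cdots + \frac{k_{1,n_1}}{(n_1 - 1)!}\,t^{n_1 - 1}e^{p_1 t} + \cdots$$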

4. BIBO stability of MIMO systems

Theorem 5.M1 (pp. 125) $G(t) = [g_{ij}(t)]$ is BIBO stable $\Leftrightarrow$ all $g_{ij}(t)$ are BIBO stable

Proof: (1) $G(t) = [g_{ij}(t)]$ is BIBO stable $\Rightarrow$ all $g_{ij}(t)$ are BIBO stable?

Consider only $y_i(t)$, with $u_l(t) \equiv 0$, $l \ne j$. We get a SISO system with the input $u_j(t)$ and the output $y_i(t)$. So $g_{ij}(t)$ must be BIBO stable.

(2) All $g_{ij}(t)$ are BIBO stable $\Rightarrow$ $G(t)$ is BIBO stable?

$$y_i(t) = \int_0^t g_{i1}(\tau)\,u_1(t - \tau)\,d\tau + \cdots + \int_0^t g_{im}(\tau)\,u_m(t - \tau)\,d\tau,$$

where each term is the output of a SISO system.

So the boundedness of the outputs of all SISO systems guarantees that of the overall system.

It is not easy to check all $g_{ij}(t)$. We can check whether

$$\int_0^\infty \|G(t)\|\,dt \le M < \infty,$$

where $\|\cdot\|$ represents any norm (due to the equivalence of all norms).

5. BIBO stability of a state space equation

$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

$$G(s) = C(sI - A)^{-1}B + D = [g_{ij}(s)] \ \text{ is rational proper.}$$

$$G(s) = \frac{1}{|sI - A|}\,C\operatorname{Adj}(sI - A)\,B + D$$

All poles of $g_{ij}(s)$ are roots of $|sI - A| = 0$, i.e., eigenvalues of $A$.

If $A$ is stable, the system is BIBO stable. Due to possible pole-zero cancellation, BIBO stable systems may not have a stable $A$. Example 5.2 (pp. 126).

5.2.1

Discrete-time case

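1. Model: $y[k] = \sum_{m=0}^{k} g[k - m]\,u[m] = \sum_{m=0}^{k} g[m]\,u[k - m]$

BIBO stability: bounded input, bounded output.

$$\text{BIBO stable} \ \Leftrightarrow\ \sum_{k=0}^{\infty} |g[k]| \le M < \infty$$

For a proper rational $\hat{g}(z)$: all poles of $\hat{g}(z)$ have magnitude strictly less than 1.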


5.3 Internal stability

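1. Model: zero-input response

$$\dot{x} = Ax, \qquad x(0) = x_0$$

$x(t)$, the state, is treated as the response.

$$x(t) = e^{At}x_0$$

Equilibrium: 0 is the desired working point.

2. Marginal stability or stability in the sense of Lyapunov

(1) Definition:

Every finite $x_0$ excites a bounded state response $x(t)$:

$$\forall x_0,\ \exists M > 0:\ \|x(t)\| \le M,\ \forall t \ge 0$$

(2) A necessary and sufficient condition: for every eigenvalue $\lambda_i$ ($i = 1, \ldots, n$) of $A$,

$$\operatorname{Re}(\lambda_i) < 0, \quad \text{or} \quad \operatorname{Re}(\lambda_i) = 0 \text{ and the Jordan block of } \lambda_i \text{ is diagonal.}$$

Proof: The Jordan form of $A$:

$$J = P^{-1}AP = \begin{bmatrix} J_1 & 0 & \cdots & 0 \\ 0 & J_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_r \end{bmatrix}, \qquad J_i = \begin{bmatrix} J_{i,1} & 0 & \cdots & 0 \\ 0 & J_{i,2} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & J_{i,n_i} \end{bmatrix}, \qquad J_{i,p} = \begin{bmatrix} \lambda_i & 1 & \cdots & 0 & 0 \\ 0 & \lambda_i & \ddots & 0 & 0 \\ \vdots & & \ddots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_i & 1 \\ 0 & 0 & \cdots & 0 & \lambda_i \end{bmatrix}$$

$J_i$: $m_i \times m_i$; $J_{i,p}$: $n_{i,p} \times n_{i,p}$

A similarity transformation: $x = P\bar{x}$, so that

$$\dot{\bar{x}} = J\bar{x}, \qquad \bar{x}(0) = P^{-1}x_0, \qquad \bar{x}(t) = e^{Jt}\bar{x}(0)$$

$x(t)$ is bounded $\Leftrightarrow$ $\bar{x}(t)$ is bounded.

$$e^{Jt} = \begin{bmatrix} e^{J_1 t} & 0 & \cdots & 0 \\ 0 & e^{J_2 t} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & e^{J_r t} \end{bmatrix}, \qquad e^{J_i t} = \begin{bmatrix} e^{J_{i,1} t} & 0 & \cdots & 0 \\ 0 & e^{J_{i,2} t} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & e^{J_{i,n_i} t} \end{bmatrix}$$

$$e^{J_{i,p} t} = \begin{bmatrix} e^{\lambda_i t} & te^{\lambda_i t} & \frac{1}{2}t^2 e^{\lambda_i t} & \cdots & \frac{1}{(n_{i,p} - 1)!}t^{n_{i,p} - 1}e^{\lambda_i t} \\ 0 & e^{\lambda_i t} & te^{\lambda_i t} & \cdots & \frac{1}{(n_{i,p} - 2)!}t^{n_{i,p} - 2}e^{\lambda_i t} \\ 0 & 0 & e^{\lambda_i t} & \cdots & \frac{1}{(n_{i,p} - 3)!}t^{n_{i,p} - 3}e^{\lambda_i t} \\ \vdots & & & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & e^{\lambda_i t} \end{bmatrix}$$

(i) When $\operatorname{Re}(\lambda_i) < 0$, $\lim_{t \to \infty} t^m e^{\lambda_i t} = 0$, $\forall m$.

(ii) When $\operatorname{Re}(\lambda_i) = 0$, $|e^{\lambda_i t}| = 1$, $\forall t \ge 0$; $t^m e^{\lambda_i t}$ is unbounded for $m \ge 1$, $t \ge 0$.

(1) (i) + (ii) $\Rightarrow$ marginal stability: all entries of $e^{Jt}$ are bounded, so under any initial state $\bar{x}(0)$, $\bar{x}(t)$ is always bounded.

(2) Marginal stability $\Rightarrow$ (i) + (ii): Choose $\bar{x}(0) = e_i$ (the $i$-th entry is 1 and the others are 0). We get that all columns of $e^{Jt}$ are bounded, and these are composed of $e^{\lambda t}$, $te^{\lambda t}$, $\ldots$. So we get (i) and (ii).

3. Asymptotic stability

(1) Definition:

Stable + $\lim_{t \to \infty} x(t) = 0$

$$\lim_{t \to \infty} x(t) = 0 \ \Leftrightarrow\ \lim_{t \to \infty} \bar{x}(t) = 0$$

(2) A necessary and sufficient condition: $\operatorname{Re}(\lambda_i) < 0$ for all $i$.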
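The eigenvalue conditions above can be turned into a rough numerical test. This is only a sketch under stated assumptions: the function name `classify_stability` and the tolerances are hypothetical, and deciding whether an eigenvalue lies exactly on the imaginary axis (or whether its Jordan blocks are $1 \times 1$) is numerically delicate, so the result should be read as indicative. The "Jordan block is diagonal" condition is checked as geometric multiplicity equal to algebraic multiplicity.

```python
import numpy as np

def classify_stability(A, tol=1e-9):
    """Classify x' = A x as 'asymptotically stable', 'marginally stable' or 'unstable'."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    eigvals = np.linalg.eigvals(A)
    if np.all(eigvals.real < -tol):
        return "asymptotically stable"
    if np.any(eigvals.real > tol):
        return "unstable"
    # Eigenvalues on the imaginary axis: Jordan blocks must all be 1x1, i.e.
    # geometric multiplicity (n - rank(A - lam I)) equals algebraic multiplicity.
    for lam in eigvals[np.abs(eigvals.real) <= tol]:
        algebraic = np.sum(np.abs(eigvals - lam) <= 1e-6)
        geometric = n - np.linalg.matrix_rank(A - lam * np.eye(n), tol=1e-6)
        if geometric < algebraic:
            return "unstable"
    return "marginally stable"

# Hypothetical test matrices.
result_decay = classify_stability([[-1.0, 0.0], [0.0, -2.0]])
result_oscillator = classify_stability([[0.0, 1.0], [-1.0, 0.0]])
result_double_integrator = classify_stability([[0.0, 1.0], [0.0, 0.0]])
```

The double integrator has the eigenvalue 0 with a $2 \times 2$ Jordan block, so its response contains the unbounded mode $t$; the oscillator has simple eigenvalues $\pm j$ and stays bounded.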

5.3.1 D-T case

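$$x[k+1] = Ax[k], \qquad x[0] = x_0, \qquad x[k] = A^k x_0$$

With the Jordan form

$$J = P^{-1}AP = \operatorname{diag}(J_1, J_2, \ldots, J_r), \qquad J_i = \operatorname{diag}(J_{i,1}, J_{i,2}, \ldots, J_{i,n_i}), \qquad J_i: m_i \times m_i,$$

where each $J_{i,p}$ is an $n_{i,p} \times n_{i,p}$ Jordan block with $\lambda_i$ on the diagonal and 1 on the superdiagonal,

$$J_{i,p}^k = \begin{bmatrix} \lambda_i^k & k\lambda_i^{k-1} & \frac{k(k-1)}{2!}\lambda_i^{k-2} & \cdots & \frac{k(k-1)\cdots(k - n_{i,p} + 2)}{(n_{i,p} - 1)!}\lambda_i^{k - n_{i,p} + 1} \\ 0 & \lambda_i^k & k\lambda_i^{k-1} & \cdots & \frac{k(k-1)\cdots(k - n_{i,p} + 3)}{(n_{i,p} - 2)!}\lambda_i^{k - n_{i,p} + 2} \\ \vdots & & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & \lambda_i^k \end{bmatrix}$$

So stability is related to $|\lambda_i|$.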

5.4 Lyapunov theorem

1. Motivations:

(1) In the previous sections, we determined stability of LTI systems by analytically solving an ODE (ordinary differential equation) and then judging stability from the obtained solution. For general nonlinear systems, it is difficult, or impossible, to get the desired analytic solution. Then how can stability be determined?

(2) Lyapunov (direct) method (“direct” means that we do not need to solve the ODE first; we just “directly” determine stability from the ODE).

$$\dot{x}(t) = f(x(t), t)$$

(i) Lyapunov function $V(x, t)$:

$$V(x, t) \ge 0; \qquad V(x, t) = 0 \Leftrightarrow x = 0$$

$$K_1(\|x\|) \le V(x, t) \le K_2(\|x\|)$$

$K_1(\|x\|)$, $K_2(\|x\|)$ are monotonically increasing and positive functions.

(ii) $\dfrac{d}{dt}V(x, t) \le 0 \Rightarrow$ marginally stable

$\dfrac{d}{dt}V(x, t) < 0\ (x \ne 0) \Rightarrow$ asymptotically stable, or

$\dfrac{d}{dt}V(x, t) \le 0$ and $\left(\dfrac{d}{dt}V(x, t) \equiv 0 \Rightarrow x(t) \equiv 0\right) \Rightarrow$ asymptotically stable

2. Apply the Lyapunov method to a linear system:

$$\dot{x} = Ax$$

(1) Choose $P > 0$, $V(x) = x^T P x$

(2) $\dfrac{d}{dt}V(x) = x^T\left(PA + A^T P\right)x$

Let $Q = -\left(PA + A^T P\right)$.

If $Q > 0$, asymptotically stable.

If $Q \ge 0$, marginally stable.

The key is the Lyapunov equation

$$A^T P + PA = -Q$$

(3) Conversely, suppose that for a given $Q$ the Lyapunov equation has a positive definite solution $P$. Then

If $Q > 0$, asymptotically stable.

If $Q \ge 0$, marginally stable.

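3. Lyapunov equation

$$A^T M + MA = -N$$

Theorem 5.5 (pp. 132).

$\operatorname{Re}\lambda_i(A) < 0$ ($i = 1, \ldots, n$) $\Leftrightarrow$ $\forall N > 0$, $M$ must exist and is unique, $M > 0$

Proof: (i) $\operatorname{Re}\lambda_i(A) < 0$ ($i = 1, \ldots, n$) $\Rightarrow$ ?

$$M = \int_0^\infty e^{A^T t} N e^{At}\,dt > 0 \quad \text{(why?)}$$

$$A^T M + MA = \int_0^\infty \left[A^T e^{A^T t} N e^{At} + e^{A^T t} N e^{At} A\right]dt = \int_0^\infty \frac{d}{dt}\left(e^{A^T t} N e^{At}\right)dt = \left. e^{A^T t} N e^{At} \right|_0^\infty = 0 - N$$

(using $e^{At} \to 0$ as $t \to \infty$ and $e^{At} = I$ at $t = 0$). So $M$ is a solution.

Under $\operatorname{Re}\lambda_i(A) < 0$ ($i = 1, \ldots, n$), the Lyapunov equation has a unique solution. An alternative proof is shown in Theorem 5.6 (pp. 134).

(ii) $\forall N > 0$, $M$ must exist and is unique, $M > 0$ $\Rightarrow$ ?

Suppose $Av = \lambda v$ ($\lambda$, $v$ can be complex, $v \ne 0$). Then $v^* A^T = \lambda^* v^*$.

Left-multiplying $A^T M + MA = -N$ by $v^*$ and right-multiplying it by $v$, we get

$$v^* A^T M v + v^* M A v = -v^* N v$$

$$\lambda^* v^* M v + \lambda\, v^* M v = -v^* N v$$

$$(\lambda + \lambda^*)\, v^* M v = -v^* N v$$

$$M > 0,\ N > 0 \ \Rightarrow\ 2\operatorname{Re}(\lambda) = -\frac{v^* N v}{v^* M v} < 0$$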
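The theorem suggests a simple numerical stability test: pick $N = I$, solve the Lyapunov equation, and check whether $M$ is positive definite. The sketch below solves the equation through the Kronecker product with column-stacking $\operatorname{vec}(\cdot)$; the function name `lyapunov_solve` and the matrix `A` are hypothetical illustrations.

```python
import numpy as np

def lyapunov_solve(A, N):
    """Solve A^T M + M A = -N via the Kronecker product (column-stacking vec)."""
    n = A.shape[0]
    # vec(A^T M) = (I kron A^T) vec(M),  vec(M A) = (A^T kron I) vec(M)
    K = np.kron(np.eye(n), A.T) + np.kron(A.T, np.eye(n))
    vec_M = np.linalg.solve(K, -N.flatten(order="F"))
    return vec_M.reshape((n, n), order="F")

A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])   # hypothetical example, eigenvalues -1 and -2
N = np.eye(2)
M = lyapunov_solve(A, N)

# M is symmetric here, so eigvalsh applies; M > 0 iff all eigenvalues are positive.
M_is_positive_definite = bool(np.all(np.linalg.eigvalsh(M) > 0))
```

For this `A`, solving the three scalar equations by hand gives $M = \begin{bmatrix} 1.25 & 0.25 \\ 0.25 & 0.25 \end{bmatrix}$, which is positive definite, consistent with both eigenvalues of $A$ having negative real parts.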

5.4.1 D-T case

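We again need a Lyapunov function

$$V(x[k], k) \ge 0; \qquad V(x[k], k) = 0 \Leftrightarrow x = 0$$

$$K_1(\|x\|) \le V(x, k) \le K_2(\|x\|)$$

$$\Delta V[k] = V(x[k+1], k+1) - V(x[k], k)$$

$\Delta V[k] \le 0 \Rightarrow$ marginally stable

$\Delta V[k] < 0\ (x \ne 0) \Rightarrow$ asymptotically stable, or

$\Delta V[k] \le 0$ and $\left(\Delta V[k] \equiv 0 \Rightarrow x[k] \equiv 0\right) \Rightarrow$ asymptotically stable

For $x[k+1] = Ax[k]$, take

$$V(x) = x^T M x$$

We can get Theorems 5.D5, 5.D6.

Note that the solution of $M - A^T M A = N$ is

$$M = \sum_{k=0}^{\infty} \left(A^T\right)^k N A^k$$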

5.5 Stability of LTV systems

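1. Model (input-output)

$$y(t) = \int_{t_0}^{t} g(t, \tau)\,u(\tau)\,d\tau$$

2. BIBO stability conditions:

(1) SISO BIBO stability: $\int_{t_0}^{t} |g(t, \tau)|\,d\tau \le M < \infty$, $\forall t, t_0$

(2) MIMO BIBO stability: $\int_{t_0}^{t} \|G(t, \tau)\|\,d\tau \le M < \infty$, $\forall t, t_0$

(3) State space model:

$$\dot{x} = A(t)x + B(t)u, \qquad y = C(t)x + D(t)u$$

The zero-state response is

$$y(t) = \int_{t_0}^{t} G(t, \tau)\,u(\tau)\,d\tau, \qquad G(t, \tau) = C(t)\Phi(t, \tau)B(\tau) + D(t)\delta(t - \tau)$$

BIBO stability $\Leftrightarrow$ $\|D(t)\| \le M_1 < \infty$ and $\int_{t_0}^{t} \|C(t)\Phi(t, \tau)B(\tau)\|\,d\tau \le M_2 < \infty$, $\forall t, t_0$

3. Internal stability

(1) Zero-input response

$$\dot{x} = A(t)x, \qquad x(t_0) = x_0$$

Response: $x(t) = \Phi(t, t_0)x(t_0)$

(2) Marginally stable: $\|\Phi(t, t_0)\| \le M < \infty$, $\forall t \ge t_0$, $\forall t_0$

Asymptotically stable: $\|\Phi(t, t_0)\| \le M < \infty$, $\forall t \ge t_0$, $\forall t_0$, and $\lim_{t \to \infty} \|\Phi(t, t_0)\| = 0$, $\forall t_0$

Eigenvalues cannot be used to determine the stability of LTV systems.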

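Example 5.5 (pp. 139)

$$\dot{x} = \begin{bmatrix} -1 & e^{2t} \\ 0 & -1 \end{bmatrix} x, \qquad \lambda_1(t) = \lambda_2(t) = -1,\ \forall t$$

$$\Phi(t, 0) = \begin{bmatrix} e^{-t} & 0.5\left( e^{t} - e^{-t} \right) \\ 0 & e^{-t} \end{bmatrix}$$

Both eigenvalues equal $-1$ for every $t$, yet the $(1,2)$ entry of $\Phi(t, 0)$ grows without bound.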
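Example 5.5 can be checked numerically. The sketch below assumes SciPy's `solve_ivp` and integrates $\dot{\Phi} = A(t)\Phi$ from $\Phi(0,0) = I$; the horizon `t_end = 3` is an arbitrary choice.

```python
import numpy as np
from scipy.integrate import solve_ivp

def A(t):
    # Time-varying system matrix of Example 5.5.
    return np.array([[-1.0, np.exp(2.0 * t)], [0.0, -1.0]])

def phi_closed_form(t):
    # State transition matrix Phi(t, 0) stated in the example.
    return np.array([[np.exp(-t), 0.5 * (np.exp(t) - np.exp(-t))],
                     [0.0, np.exp(-t)]])

def rhs(t, phi_flat):
    # d/dt Phi = A(t) Phi, with Phi stored as a flat vector.
    return (A(t) @ phi_flat.reshape(2, 2)).ravel()

t_end = 3.0
sol = solve_ivp(rhs, (0.0, t_end), np.eye(2).ravel(), rtol=1e-10, atol=1e-12)
phi_num = sol.y[:, -1].reshape(2, 2)

frozen_eigs = np.linalg.eigvals(A(t_end))   # both are -1
print(frozen_eigs, phi_num[0, 1])           # the (1,2) entry is large and growing
```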

(3). Marginal stability and asymptotic stability are invariant under any Lyapunov transformation.

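$\dot{x} = A(t)x$, $\bar{x}(t) = P(t)x(t)$,

$\bar{x}(t) = \bar{\Phi}(t, t_0)\, \bar{x}(t_0)$

$\bar{\Phi}(t, \tau) = P(t)\Phi(t, \tau)P^{-1}(\tau)$, $\Phi(t, \tau) = P^{-1}(t)\bar{\Phi}(t, \tau)P(\tau)$

$\|P(t)\| \le L$, $\|P^{-1}(t)\| \le L'$, $\forall t$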

Homework: 5.6, 5.11, 5.13, 5.14, 5.17, 5.20, 5.21, 5.22, 5.23


Chapter 6 Controllability and observability

6.1 Introduction

Input $u$ $\rightarrow$ State $x$ $\rightarrow$ Output $y$

Controllability, reachability: $u \rightarrow x$

Observability: $x \rightarrow y$

6.2 Controllability

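1. Model:

$\dot{x}(t) = Ax(t) + Bu(t)$, $x \in R^n$, $u \in R^p$

$$x(t) = e^{At}x(0) + \int_0^t e^{A(t - \tau)} B u(\tau)\, d\tau$$

2. Reachability:

(i) Require: $x(0) = 0$

$$x(t) = \int_0^t e^{A(t - \tau)} B u(\tau)\, d\tau$$

(ii). Reachable state $x_1$:

$\exists$ finite $t_1$ and $u(t)$, $0 \le t \le t_1$, such that $u(t)$ drives $x(0) = 0$ to $x(t_1) = x_1$.

(iii). Reachable set: the set of all reachable states

$$S_R = \left\{ x_1 \;\middle|\; x_1 = \int_0^t e^{A(t - \tau)} B u(\tau)\, d\tau,\ \forall t,\ \forall u(\tau),\ 0 \le \tau \le t \right\}$$

$S_R$ is a linear space. Why?

The system is reachable iff $S_R = R^n$.

Structure of $S_R$:

$$e^{At} = \sum_{l=0}^{n-1} c_l(t) A^l \quad \text{(Cayley-Hamilton Theorem)}$$

$$\int_0^t e^{A(t - \tau)} B u(\tau)\, d\tau = \int_0^t \sum_{l=0}^{n-1} c_l(t - \tau) A^l B u(\tau)\, d\tau = \sum_{l=0}^{n-1} A^l B \int_0^t c_l(t - \tau) u(\tau)\, d\tau$$

$$S_R \subseteq \mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right] = \mathrm{span}(\mathrm{CTRB})$$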

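(a) It is necessary for reachability ($S_R = R^n$) that

$$\mathrm{span}(\mathrm{CTRB}) = R^n$$

(b) What is a sufficient condition for reachability?

$\mathrm{span}(\mathrm{CTRB}) = R^n \;\Rightarrow$ ? reachability

Claim: $\mathrm{span}(\mathrm{CTRB}) = R^n \;\Rightarrow\; \forall t_1 > 0,\ W_c(t_1) > 0$, where

$$W_c(t_1) = \int_0^{t_1} e^{A(t_1 - \tau)} B B^T e^{A^T(t_1 - \tau)}\, d\tau$$

Proof: Suppose $\exists t_1$, $W_c(t_1)$ is singular. Then there must exist a non-zero vector $v$ such that

$$W_c(t_1) v = 0 \;\Rightarrow\; v^T W_c(t_1) v = 0$$

$$\int_0^{t_1} v^T e^{A(t_1 - \tau)} B B^T e^{A^T(t_1 - \tau)} v\, d\tau = \int_0^{t_1} \left\| v^T e^{A(t_1 - \tau)} B \right\|^2 d\tau = 0$$

$$f(t) = v^T e^{At} B = 0, \quad \forall t \in [0, t_1]$$

$$\frac{d^l}{dt^l} f(t) = 0, \quad l = 0, 1, \dots, n-1;\ \forall t \in [0, t_1]$$

$$v^T e^{At} A^l B = 0, \quad \forall t \in [0, t_1]$$

$$v_1^T \left[ B, AB, A^2B, \dots, A^{n-1}B \right] = 0, \quad v_1^T = v^T e^{At} \ne 0 \ (v^T \ne 0,\ e^{At} \text{ non-singular})$$

$$\mathrm{rank}\left[ B, AB, A^2B, \dots, A^{n-1}B \right] < n, \quad \dim \mathrm{span}(\mathrm{CTRB}) < n$$

Contradiction!

When $\mathrm{span}(\mathrm{CTRB}) = R^n$, take any $t_1 > 0$ and any target state $x_1$, and let

$$u(t) = B^T e^{A^T(t_1 - t)} W_c^{-1}(t_1)\, x_1, \quad t \in [0, t_1]$$

We get

$$x(t_1) = \int_0^{t_1} e^{A(t_1 - \tau)} B B^T e^{A^T(t_1 - \tau)} W_c^{-1}(t_1)\, x_1\, d\tau = W_c(t_1) W_c^{-1}(t_1)\, x_1 = x_1$$

So $\mathrm{span}(\mathrm{CTRB}) = R^n \;\Rightarrow\;$ reachability.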
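The steering input built from $W_c(t_1)$ can be simulated. This is a minimal sketch, assuming SciPy's `quad_vec` and `solve_ivp`; the pair `(A, B)`, the horizon `t1` and the target `x1` are illustrative values, not taken from the notes.

```python
import numpy as np
from scipy.linalg import expm
from scipy.integrate import quad_vec, solve_ivp

# Illustrative reachable pair; not from the notes.
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
B = np.array([[0.0], [1.0]])
n = A.shape[0]
t1 = 1.0
x1 = np.array([1.0, -1.0])   # target state

def gramian_integrand(tau):
    E = expm(A * (t1 - tau)) @ B
    return E @ E.T

# W_c(t1) = int_0^t1 e^{A(t1-tau)} B B^T e^{A^T(t1-tau)} dtau
Wc, _ = quad_vec(gramian_integrand, 0.0, t1)
w = np.linalg.solve(Wc, x1)  # W_c^{-1}(t1) x1

def u(t):
    # u(t) = B^T e^{A^T(t1-t)} W_c^{-1}(t1) x1
    return B.T @ expm(A.T * (t1 - t)) @ w

sol = solve_ivp(lambda t, x: A @ x + B @ u(t), (0.0, t1), np.zeros(n),
                rtol=1e-9, atol=1e-12)
x_final = sol.y[:, -1]
print(x_final)   # should be close to x1
```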

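(c). Other equivalent reachability conditions

$$\mathrm{rank}\left[ B, AB, A^2B, \dots, A^{n-1}B \right] = n \;\Leftrightarrow\; \mathrm{rank}\left[ \lambda I - A,\ B \right] = n,\ \forall \lambda$$

Proof: (i) $\mathrm{rank}\left[ B, AB, \dots, A^{n-1}B \right] = n \;\Rightarrow$ ? $\mathrm{rank}\left[ \lambda I - A,\ B \right] = n$.

When $\lambda$ is not an eigenvalue of $A$, $\det(\lambda I - A) \ne 0$.

When $\lambda$ is an eigenvalue of $A$: Suppose $\mathrm{rank}\left[ \lambda I - A,\ B \right] < n$. Then there exists $v \ne 0$ such that

$$v^T \left[ \lambda I - A,\ B \right] = 0 \;\Rightarrow\; v^T B = 0,\ \lambda v^T = v^T A$$

$$v^T A B = \lambda v^T B = 0, \ \dots$$

$$v^T \left[ B, AB, A^2B, \dots, A^{n-1}B \right] = 0 \;\Rightarrow\; \mathrm{rank}\left[ B, AB, A^2B, \dots, A^{n-1}B \right] < n. \ \text{Contradiction!}$$

(ii) $\mathrm{rank}\left[ \lambda I - A,\ B \right] = n,\ \forall \lambda \;\Rightarrow$ ? $\mathrm{rank}\left[ B, AB, \dots, A^{n-1}B \right] = n$.

Suppose $\mathrm{rank}\left[ B, AB, A^2B, \dots, A^{n-1}B \right] < n$. Then there exists $v \ne 0$ such that

$$v^T \left[ B, AB, A^2B, \dots, A^{n-1}B \right] = 0 \;\Rightarrow\; v^T B = 0, \dots, v^T A^{n-1} B = 0$$

$$v^T A^l B = 0, \quad \forall l \ge n \quad \text{(Cayley-Hamilton Theorem)}$$

$V = \left\{ v \mid v^T A^l B = 0,\ \forall l \in N \right\}$ is a linear space (it is closed under the operations of addition and scalar multiplication).

$V$ contains a non-zero vector. $V$ has a basis $v_1, v_2, \dots, v_s$, $V = \mathrm{span}\{ v_1, v_2, \dots, v_s \}$.

Any vector $v \in V$ is a linear combination

$$v = \sum_{j=1}^{s} y_j v_j = \left[ v_1, v_2, \dots, v_s \right] y, \quad y = \begin{bmatrix} y_1 \\ \vdots \\ y_s \end{bmatrix}$$

We can verify that for any $v \in V$, $A^T v \in V$:

$$\left( A^T v \right)^T A^l B = v^T A^{l+1} B = 0, \quad \forall l \in N$$

So $A^T v_j \in V$, $j = 1, \dots, s$:

$$A^T v_1 = \left[ v_1, v_2, \dots, v_s \right] \begin{bmatrix} z_{11} \\ z_{21} \\ \vdots \\ z_{s1} \end{bmatrix}, \ \dots, \ A^T v_s = \left[ v_1, v_2, \dots, v_s \right] \begin{bmatrix} z_{1s} \\ z_{2s} \\ \vdots \\ z_{ss} \end{bmatrix}$$

$$A^T \left[ v_1, v_2, \dots, v_s \right] = \left[ v_1, v_2, \dots, v_s \right] Z, \quad Z = \begin{bmatrix} z_{11} & \cdots & z_{1s} \\ \vdots & & \vdots \\ z_{s1} & \cdots & z_{ss} \end{bmatrix}$$

$Z$ has at least one eigenvalue $\lambda$ and a non-zero eigenvector $z$,

$$Zz = \lambda z, \quad z \ne 0$$

Define $v = \left[ v_1, v_2, \dots, v_s \right] z$, $v \ne 0$.

$$A^T v = A^T \left[ v_1, v_2, \dots, v_s \right] z = \left[ v_1, v_2, \dots, v_s \right] Z z = \lambda \left[ v_1, v_2, \dots, v_s \right] z = \lambda v \;\Rightarrow\; v^T A = \lambda v^T$$

$$v \in V \;\Rightarrow\; v^T B = 0$$

$$v^T \left[ \lambda I - A,\ B \right] = 0,\ v \ne 0 \;\Rightarrow\; \mathrm{rank}\left[ \lambda I - A,\ B \right] < n. \ \text{Contradiction!}$$

So

$$\mathrm{rank}\left[ B, AB, A^2B, \dots, A^{n-1}B \right] = n \;\Leftrightarrow\; \mathrm{rank}\left[ \lambda I - A,\ B \right] = n,\ \forall \lambda$$

3. Controllability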

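(1) Controllable state $x_1$:

For a given state $x_1 \in R^n$, $\exists$ finite $t_1$ and $u(t)$, $0 \le t \le t_1$, such that $u(t)$ drives $x(0) = x_1$ to $x(t_1) = 0$.

The controllable set $S_c = \left\{ x_1 \mid \exists t_1,\ u(t),\ 0 \le t \le t_1,\ x(0) = x_1,\ x(t_1) = 0 \right\}$

The system is controllable $\Leftrightarrow$ $S_c = R^n$

(2) Controllability conditions:

$$0 = x(t_1) = e^{At_1} x_1 + \int_0^{t_1} e^{A(t_1 - \tau)} B u(\tau)\, d\tau = e^{At_1} x_1 + e^{At_1} \int_0^{t_1} e^{-A\tau} B u(\tau)\, d\tau$$

So

$$x_1 = -\int_0^{t_1} e^{-A\tau} B u(\tau)\, d\tau$$

Let $\bar{A} = -A$, $\bar{u}(t_1 - \tau) = -u(\tau)$. Then

$$x_1 = \int_0^{t_1} e^{\bar{A}\tau} B \bar{u}(t_1 - \tau)\, d\tau = \int_0^{t_1} e^{\bar{A}(t_1 - \tau)} B \bar{u}(\tau)\, d\tau$$

$x_1$ is reachable for the following system

$$\dot{\bar{x}} = \bar{A}\bar{x} + B\bar{u}$$

$(A, B)$ Controllable $\Leftrightarrow$ $(\bar{A}, B)$ Reachable $\Leftrightarrow$ $\mathrm{rank}(\overline{\mathrm{CTRB}}) = n$

$$\overline{\mathrm{CTRB}} = \left[ B, \bar{A}B, \dots, \bar{A}^{n-1}B \right] = \left[ B, -AB, \dots, (-1)^{n-1}A^{n-1}B \right] = \left[ B, AB, \dots, A^{n-1}B \right] \begin{bmatrix} I & 0 & \cdots & 0 \\ 0 & -I & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & (-1)^{n-1}I \end{bmatrix}$$

$$\mathrm{rank}(\overline{\mathrm{CTRB}}) = \mathrm{rank}(\mathrm{CTRB})$$

For a continuous-time system $\dot{x} = Ax + Bu$, Controllability $\Leftrightarrow$ Reachability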
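Since the two notions coincide in continuous time, either rank condition decides both. The sketch below implements the CTRB rank test and the $[\lambda I - A,\ B]$ test with NumPy; the function names `ctrb`, `rank_test`, `pbh_test` and the example matrices are assumptions made for illustration.

```python
import numpy as np

def ctrb(A, B):
    # [B, AB, ..., A^{n-1}B]
    n = A.shape[0]
    return np.hstack([np.linalg.matrix_power(A, l) @ B for l in range(n)])

def rank_test(A, B):
    return bool(np.linalg.matrix_rank(ctrb(A, B)) == A.shape[0])

def pbh_test(A, B, tol=1e-9):
    # rank[lambda*I - A, B] can only drop at eigenvalues of A,
    # so it is enough to check those values of lambda.
    n = A.shape[0]
    return all(
        np.linalg.matrix_rank(np.hstack([lam * np.eye(n) - A, B]), tol=tol) == n
        for lam in np.linalg.eigvals(A)
    )

A = np.array([[0.0, 1.0], [-2.0, -3.0]])   # eigenvalues -1, -2
B_good = np.array([[0.0], [1.0]])
B_bad = np.array([[1.0], [-1.0]])          # eigenvector of A, so AB is parallel to B

print(rank_test(A, B_good), pbh_test(A, B_good))
print(rank_test(A, B_bad), pbh_test(A, B_bad))
```

Both tests should agree on every pair, which is the content of the equivalence proved in (c).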

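For D-T systems, controllability and reachability are NOT equivalent.

(i) A controllable but non-reachable example:

$$x[k+1] = 0 \cdot x[k] + 0 \cdot u[k]$$

(ii) Reachability $\Rightarrow$ Controllability

With $x[0] = 0$:

$$x[k] = \sum_{m=0}^{k-1} A^m B u[k - m - 1] \in \mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right]$$

$$\mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right] = R^n \;\Leftrightarrow\; \text{Reachability}$$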

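6.2.1 Controllability indices

1. Definition:

$B = \left[ b_1, b_2, \dots, b_m \right] \in R^{n \times m}$, $\mathrm{rank}(B) = m$

$$\mathrm{CTRB} = \left[ B, AB, \dots, A^{n-1}B \right] = \left[ b_1, b_2, \dots, b_m \mid Ab_1, Ab_2, \dots, Ab_m \mid \cdots \mid A^{n-1}b_1, A^{n-1}b_2, \dots, A^{n-1}b_m \right]$$

How many linearly independent columns does $b_i$ contribute? This number is $\mu_i$.

An example: $n = 4$, $B = \left[ b_1, b_2 \right]$,

$$\mathrm{CTRB} = \left[ b_1, b_2 \mid Ab_1, Ab_2 \mid A^2b_1, A^2b_2 \mid A^3b_1, A^3b_2 \right]$$

[Matrix entries of $A$, $B$ and CTRB for this example]

$$\mu_1 = 3, \quad \mu_2 = 1, \quad \mu_1 + \mu_2 = 4 = n$$

$$\mathrm{rank}(\mathrm{CTRB}) = n \;\Leftrightarrow\; \sum_{i=1}^{m} \mu_i = n$$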
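The indices $\mu_i$ can be found by scanning the columns of CTRB from left to right and keeping each column that is independent of those already kept. The following is a sketch under that reading; the function name `controllability_indices` and the example pair are hypothetical, chosen so that $\mu_1 = 3$, $\mu_2 = 1$ as in the example above.

```python
import numpy as np

def controllability_indices(A, B, tol=1e-9):
    n, m = B.shape
    mu = [0] * m
    active = [True] * m            # once A^k b_i is dependent, higher powers are too
    basis = np.zeros((n, 0))       # independent columns collected so far
    for power in range(n):
        AkB = np.linalg.matrix_power(A, power) @ B
        for i in range(m):
            if not active[i]:
                continue
            candidate = np.hstack([basis, AkB[:, [i]]])
            if np.linalg.matrix_rank(candidate, tol=tol) > basis.shape[1]:
                basis = candidate
                mu[i] += 1
            else:
                active[i] = False
    return mu

# Hypothetical example: A e3 = e2, A e2 = e1, A e1 = 0, A e4 = 0.
A = np.zeros((4, 4))
A[0, 1] = 1.0
A[1, 2] = 1.0
B = np.zeros((4, 2))
B[2, 0] = 1.0   # b1 = e3
B[3, 1] = 1.0   # b2 = e4

mu = controllability_indices(A, B)
print(mu, sum(mu))
```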

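2. Under a similarity transformation, the controllability indices do not change.

$\dot{x} = Ax + Bu$, $B = \left[ b_1, \dots, b_m \right]$

$\bar{x} = Px$

$\dot{\bar{x}} = \bar{A}\bar{x} + \bar{B}u$, $\bar{B} = \left[ \bar{b}_1, \dots, \bar{b}_m \right]$

$\bar{A} = PAP^{-1}$, $\bar{B} = PB$, $\bar{b}_i = Pb_i$

(i) $\bar{A}^k \bar{B} = \left( PAP^{-1} \right)^k PB = PA^kB$

$$\left[ \bar{B}, \bar{A}\bar{B}, \dots, \bar{A}^{n-1}\bar{B} \right] = P \left[ B, AB, \dots, A^{n-1}B \right]$$

$(A, B)$ is controllable $\Leftrightarrow$ $(\bar{A}, \bar{B})$ is controllable.

(ii).

$$\left[ \bar{b}_1, \dots, \bar{b}_m \mid \bar{A}\bar{b}_1, \dots, \bar{A}\bar{b}_m \mid \cdots \mid \bar{A}^{n-1}\bar{b}_1, \dots, \bar{A}^{n-1}\bar{b}_m \right] = P \left[ b_1, \dots, b_m \mid Ab_1, \dots, Ab_m \mid \cdots \mid A^{n-1}b_1, \dots, A^{n-1}b_m \right]$$

$$\bar{\mu}_i = \mu_i$$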

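3. Decomposition based on controllability

When the system $\dot{x} = Ax + Bu$ is not controllable, then

(i). $\mathrm{rank}\left[ B, AB, \dots, A^{n-1}B \right] = n_1 < n$.

(ii). $v \in \mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right] \;\Rightarrow\; Av \in \mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right]$

Find $n_1$ linearly independent vectors from $\left[ B, AB, \dots, A^{n-1}B \right]$, which are denoted as $v_1, v_2, \dots, v_{n_1}$.

Let $P_1 = \left[ v_1, v_2, \dots, v_{n_1} \right]$. It is obvious that

$$\mathrm{span}\left[ B, AB, \dots, A^{n-1}B \right] = \mathrm{span}(P_1), \qquad v \in \mathrm{span}(P_1) \Rightarrow Av \in \mathrm{span}(P_1)$$

$$Av_i = \left[ v_1, v_2, \dots, v_{n_1} \right] \begin{bmatrix} \alpha_{1,i} \\ \alpha_{2,i} \\ \vdots \\ \alpha_{n_1,i} \end{bmatrix}$$

$$AP_1 = A\left[ v_1, v_2, \dots, v_{n_1} \right] = \left[ v_1, v_2, \dots, v_{n_1} \right] \begin{bmatrix} \alpha_{1,1} & \alpha_{1,2} & \cdots & \alpha_{1,n_1} \\ \alpha_{2,1} & \alpha_{2,2} & \cdots & \alpha_{2,n_1} \\ \vdots & & & \vdots \\ \alpha_{n_1,1} & \alpha_{n_1,2} & \cdots & \alpha_{n_1,n_1} \end{bmatrix} = P_1 \bar{A}_1, \quad \bar{A}_1 \in R^{n_1 \times n_1}$$

(iii). Find another $n - n_1$ vectors, $v_{n_1+1}, v_{n_1+2}, \dots, v_n$, such that $v_1, v_2, \dots, v_{n_1}, v_{n_1+1}, v_{n_1+2}, \dots, v_n$ are linearly independent.

$P_2 = \left[ v_{n_1+1}, v_{n_1+2}, \dots, v_n \right]$, $P = \left[ P_1, P_2 \right]$

(a). $AP_1 = P_1 \bar{A}_1 = \left[ P_1, P_2 \right] \begin{bmatrix} \bar{A}_1 \\ 0 \end{bmatrix}$, $AP_2 = \left[ P_1, P_2 \right] \begin{bmatrix} \bar{A}_{12} \\ \bar{A}_{22} \end{bmatrix}$

$$A\left[ P_1, P_2 \right] = \left[ P_1, P_2 \right] \begin{bmatrix} \bar{A}_1 & \bar{A}_{12} \\ 0 & \bar{A}_{22} \end{bmatrix}, \qquad \bar{A} = P^{-1}AP = \begin{bmatrix} \bar{A}_1 & \bar{A}_{12} \\ 0 & \bar{A}_{22} \end{bmatrix}$$

(b). $B \in \mathrm{span}(P_1)$:

$$B = P_1 \bar{B}_1 = \left[ P_1, P_2 \right] \begin{bmatrix} \bar{B}_1 \\ 0 \end{bmatrix}$$

(c). $x = P\bar{x}$,

$$\dot{\bar{x}} = \begin{bmatrix} \bar{A}_1 & \bar{A}_{12} \\ 0 & \bar{A}_{22} \end{bmatrix} \bar{x} + \begin{bmatrix} \bar{B}_1 \\ 0 \end{bmatrix} u, \qquad \bar{x} = \begin{bmatrix} \bar{x}_1 \\ \bar{x}_2 \end{bmatrix}$$

(iv)

$$\dot{\bar{x}}_1 = \bar{A}_1 \bar{x}_1 + \bar{A}_{12} \bar{x}_2 + \bar{B}_1 u$$

$$\dot{\bar{x}}_2 = \bar{A}_{22} \bar{x}_2$$

(a). When $\bar{x}_2(0) = 0$, $\dot{\bar{x}}_1 = \bar{A}_1 \bar{x}_1 + \bar{B}_1 u$. Is it still controllable?

$$\bar{B} = P^{-1}B = \begin{bmatrix} \bar{B}_1 \\ 0 \end{bmatrix}, \qquad \left[ \bar{B}, \bar{A}\bar{B}, \dots, \bar{A}^{n-1}\bar{B} \right] = P^{-1} \left[ B, AB, \dots, A^{n-1}B \right]$$

$$\bar{A}^l \bar{B} = \begin{bmatrix} \bar{A}_1 & \bar{A}_{12} \\ 0 & \bar{A}_{22} \end{bmatrix}^l \begin{bmatrix} \bar{B}_1 \\ 0 \end{bmatrix} = \begin{bmatrix} \bar{A}_1^l & * \\ 0 & \bar{A}_{22}^l \end{bmatrix} \begin{bmatrix} \bar{B}_1 \\ 0 \end{bmatrix} = \begin{bmatrix} \bar{A}_1^l \bar{B}_1 \\ 0 \end{bmatrix}$$

$$\left[ \bar{B}, \bar{A}\bar{B}, \dots, \bar{A}^{n-1}\bar{B} \right] = \begin{bmatrix} \bar{B}_1 & \bar{A}_1 \bar{B}_1 & \cdots & \bar{A}_1^{n-1} \bar{B}_1 \\ 0 & 0 & \cdots & 0 \end{bmatrix}$$

$$n_1 = \mathrm{rank}\left[ \bar{B}, \bar{A}\bar{B}, \dots, \bar{A}^{n-1}\bar{B} \right] = \mathrm{rank}\left[ \bar{B}_1, \bar{A}_1 \bar{B}_1, \dots, \bar{A}_1^{n-1} \bar{B}_1 \right] = \mathrm{rank}\left[ \bar{B}_1, \bar{A}_1 \bar{B}_1, \dots, \bar{A}_1^{n_1 - 1} \bar{B}_1 \right]$$

So the $\bar{x}_1$ subsystem is controllable and reachable.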
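The decomposition can be carried out numerically. The sketch below uses the SVD of CTRB to obtain an orthonormal basis: the first $n_1$ columns of `U` play the role of $P_1$ and the remaining columns the role of $P_2$. Using an orthonormal basis is an implementation choice, not the construction in the notes, and the uncontrollable pair `(A, B)` is illustrative.

```python
import numpy as np

# Illustrative pair with rank(CTRB) = 2 < n = 3.
A = np.array([[1.0, 1.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 1.0, 1.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])
n = A.shape[0]

ctrb = np.hstack([np.linalg.matrix_power(A, l) @ B for l in range(n)])
n1 = np.linalg.matrix_rank(ctrb)

# Columns of U: first n1 span range(CTRB) (P1), the rest complete the basis (P2).
U, _, _ = np.linalg.svd(ctrb)
P = U
A_bar = P.T @ A @ P      # P is orthogonal, so P^{-1} = P^T
B_bar = P.T @ B

A1, B1 = A_bar[:n1, :n1], B_bar[:n1, :]
ctrb1 = np.hstack([np.linalg.matrix_power(A1, l) @ B1 for l in range(n1)])

print(np.round(A_bar, 6))
print(np.round(B_bar, 6))
```

The lower-left block of `A_bar` and the lower block of `B_bar` should be numerically zero, and `(A1, B1)` should pass the rank test.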

6.3 Observability

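1. Unobservable state

$\dot{x} = Ax$

$y = Cx$

$x_0$ is unobservable if

$$x(0) = x_0 \;\Rightarrow\; y(t) = 0,\ \forall t \ge 0$$

$$y(t) = Ce^{At}x(0)$$

$x_0$ is unobservable $\Leftrightarrow$ $Ce^{At}x_0 = 0$, $\forall t$

$x(0) = 0 \;\Rightarrow\; Ce^{At} \cdot 0 = 0$, $\forall t$

We cannot distinguish between an unobservable state $x(0) = x_0$ and $x(0) = 0$.

The set of all unobservable states

$$S_{\bar{o}} = \left\{ x_0 \mid y(t) = 0,\ \forall t \ge 0,\ x(0) = x_0 \right\}$$

$S_{\bar{o}}$ is a linear space. Why?

The system is observable $\Leftrightarrow$ $S_{\bar{o}} = \{0\}$.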


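2. Observable condition (Theorem 6.4)

Observable $\Leftrightarrow$ $W_o(t)$ is non-singular for any $t > 0$, where

$$W_o(t) = \int_0^t e^{A^T\tau} C^T C e^{A\tau}\, d\tau$$

Proof: (1) $W_o(t)$ is non-singular for any $t > 0$ $\Rightarrow$ ? Observable

$$y(t) = Ce^{At}x(0) \;\Rightarrow\; e^{A^T t} C^T y(t) = e^{A^T t} C^T C e^{At} x(0)$$

$$\int_0^t e^{A^T\tau} C^T y(\tau)\, d\tau = \left( \int_0^t e^{A^T\tau} C^T C e^{A\tau}\, d\tau \right) x(0) = W_o(t)\, x(0)$$

$$x(0) = W_o^{-1}(t) \int_0^t e^{A^T\tau} C^T y(\tau)\, d\tau$$

So $y(t) \equiv 0 \Rightarrow x(0) = 0$, i.e., $S_{\bar{o}} = \{0\}$ and the system is observable.

(2) The system is observable $\Rightarrow$ ? $W_o(t)$ is non-singular for any $t > 0$

Suppose $\exists t_1$, $W_o(t_1)$ is singular. We can find $v \ne 0$ such that

$$W_o(t_1) v = 0 \;\Rightarrow\; v^T W_o(t_1) v = 0$$

$$\int_0^{t_1} v^T e^{A^T\tau} C^T C e^{A\tau} v\, d\tau = \int_0^{t_1} \left\| Ce^{A\tau} v \right\|_2^2 d\tau = 0$$

$$Ce^{At}v = 0, \quad \forall t \in [0, t_1]$$

$$\left. \frac{d^m}{dt^m} Ce^{At}v \right|_{t = t_2} = 0, \quad m = 0, 1, 2, \dots$$

Take $t_2 \in (0, t_1)$. We know that

$$Ce^{At_2}v = 0, \quad CAe^{At_2}v = 0, \quad \dots, \quad CA^{n-1}e^{At_2}v = 0$$

For any $t$, we know

$$y(t) = Ce^{At}v = Ce^{A(t - t_2)} e^{At_2} v = C \sum_{l=0}^{n-1} c_l(t - t_2) A^l e^{At_2} v = \sum_{l=0}^{n-1} c_l(t - t_2)\, CA^l e^{At_2} v = \sum_{l=0}^{n-1} c_l(t - t_2) \cdot 0 = 0$$

Then $y(t) = 0$, $\forall t$, with $x(0) = v \ne 0$.

$x(0) = v$ is not observable. So the system is not observable.

Contradiction! So $W_o(t)$ is non-singular for any $t > 0$.

Why are we so interested in $x(0)$?

The system is governed by $\dot{x} = Ax + Bu$. $u(t)$ is computed by us and is known. If we know $x(0)$, then by

$$x(t) = e^{At}x(0) + \int_0^t e^{A(t - \tau)} B u(\tau)\, d\tau$$

we can know $x(t)$, $\forall t$.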
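The reconstruction formula $x(0) = W_o^{-1}(t) \int_0^t e^{A^T\tau} C^T y(\tau)\, d\tau$ from part (1) of the proof can be tried on simulated output data. This is a sketch assuming SciPy's `quad_vec`; the observable pair `(A, C)`, the horizon and the "true" initial state are illustrative.

```python
import numpy as np
from scipy.linalg import expm
from scipy.integrate import quad_vec

# Illustrative observable pair; not from the notes.
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])
x0_true = np.array([0.5, -1.0])
t = 1.0

def y(tau):
    # Zero-input output y(tau) = C e^{A tau} x(0); here it stands in for measured data.
    return C @ expm(A * tau) @ x0_true

Wo, _ = quad_vec(lambda tau: expm(A.T * tau) @ C.T @ C @ expm(A * tau), 0.0, t)
rhs, _ = quad_vec(lambda tau: expm(A.T * tau) @ C.T @ y(tau), 0.0, t)

x0_hat = np.linalg.solve(Wo, rhs)
print(x0_hat)   # should be close to x0_true
```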

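3. Duality of observability and controllability.

(i) $\dot{x} = Ax + Bu$. Controllable $\Leftrightarrow$ Reachable $\Leftrightarrow$

$$W_r(t) = \int_0^t e^{A\tau} B B^T e^{A^T\tau}\, d\tau \ \text{is non-singular for any } t.$$

(ii) $\dot{x} = A^T x$, $y = B^T x$, observability?

$$W_o(t) = \int_0^t e^{\bar{A}^T\tau} \bar{C}^T \bar{C} e^{\bar{A}\tau}\, d\tau = \int_0^t e^{(A^T)^T\tau} (B^T)^T B^T e^{A^T\tau}\, d\tau = \int_0^t e^{A\tau} B B^T e^{A^T\tau}\, d\tau = W_r(t)$$

with $\bar{A} = A^T$, $\bar{C} = B^T$.

$(A, B)$ is reachable/controllable $\Leftrightarrow$ $(B^T, A^T)$ is observable.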

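4. Equivalent observability conditions

(1) $W_o(t) = \int_0^t e^{A^T\tau} C^T C e^{A\tau}\, d\tau$ is non-singular for any $t$.

(2) $O = \begin{bmatrix} C \\ CA \\ \vdots \\ CA^{n-1} \end{bmatrix}$ has full column rank.

(3) $\begin{bmatrix} A - \lambda I \\ C \end{bmatrix}$ has full column rank for any $\lambda$.

5. Observability indices

$$C = \begin{bmatrix} C_1 \\ \vdots \\ C_q \end{bmatrix}, \qquad O = \begin{bmatrix} C_1 \\ \vdots \\ C_q \\ C_1 A \\ \vdots \\ C_q A \\ \vdots \\ C_1 A^{n-1} \\ \vdots \\ C_q A^{n-1} \end{bmatrix}$$

$\nu_i$: The # of linearly independent rows contributed by $C_i$

Observability indices: $\nu_1, \dots, \nu_q$

Observability index: $\max_i \nu_i$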

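6. Unobservable space:

$$S_{\bar{o}} = \left\{ x_0 \mid Ce^{At}x_0 = 0,\ \forall t \ge 0 \right\}$$

(i) $Ce^{At}x_0 = C \sum_{l=0}^{n-1} c_l(t) A^l x_0 = \sum_{l=0}^{n-1} c_l(t)\, CA^l x_0$

$$x_0 \in \mathrm{Null}(O) \;\Rightarrow\; CA^l x_0 = 0,\ l = 0, 1, \dots, n-1 \;\Rightarrow\; Ce^{At}x_0 = 0,\ \forall t \;\Rightarrow\; x_0 \in S_{\bar{o}}$$

$$\mathrm{Null}(O) \subseteq S_{\bar{o}}$$

(ii) $Ce^{At}x_0 = 0,\ \forall t \;\Rightarrow\; \frac{d^l}{dt^l} Ce^{At}x_0 = 0,\ \forall t,\ l = 0, 1, 2, \dots$

Setting $t = 0$:

$$CA^l x_0 = 0,\ l = 0, 1, \dots \;\Rightarrow\; x_0 \in \mathrm{Null}(O)$$

$$S_{\bar{o}} \subseteq \mathrm{Null}(O)$$

So $\mathrm{Null}(O) = S_{\bar{o}}$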
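The identity $\mathrm{Null}(O) = S_{\bar{o}}$ gives a direct way to compute the unobservable space. The sketch below uses `scipy.linalg.null_space`; the pair `(A, C)` is an illustrative unobservable example, not one from the notes.

```python
import numpy as np
from scipy.linalg import null_space, expm

# Illustrative pair: C annihilates the eigenvector [1, -1] of A (eigenvalue -1).
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 1.0]])
n = A.shape[0]

# Observability matrix O = [C; CA; ...; CA^{n-1}]
O = np.vstack([C @ np.linalg.matrix_power(A, l) for l in range(n)])
basis = null_space(O)          # orthonormal basis of Null(O), one column per dimension

# Every basis vector should give y(t) = C e^{At} x0 = 0 for all t.
outputs = [np.abs(C @ expm(A * t) @ basis).max() for t in (0.0, 0.5, 1.0, 2.0)]
print(basis.shape[1], max(outputs))
```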

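7. Observability decomposition:

$\mathrm{rank}(O) = n_2$, $\dim \mathrm{Null}(O) = \dim S_{\bar{o}} = n - n_2$

Select a basis for $S_{\bar{o}}$: $v_{n_2+1}, \dots, v_n$, and let $\left[ v_{n_2+1}, \dots, v_n \right] = P_2$

$$AP_2 = A\left[ v_{n_2+1}, \dots, v_n \right] = \left[ v_{n_2+1}, \dots, v_n \right] \bar{A}_{\bar{o}}, \quad \bar{A}_{\bar{o}} \in R^{(n - n_2) \times (n - n_2)}$$

due to

$$x \in S_{\bar{o}} \;\Rightarrow\; Ax \in S_{\bar{o}} \quad \left( CA^l x = 0,\ l = 0, 1, \dots, n-1 \;\Rightarrow\; CA^{l+1} x = 0 \right)$$

Find another $n_2$ complementary vectors $v_1, \dots, v_{n_2}$, and let $\left[ v_1, \dots, v_{n_2} \right] = P_1$

$$AP_1 = A\left[ v_1, \dots, v_{n_2} \right] = \left[ v_1, \dots, v_{n_2} \mid v_{n_2+1}, \dots, v_n \right] \begin{bmatrix} \bar{A}_o \\ \bar{A}_{21} \end{bmatrix}$$

$$A\left[ P_1, P_2 \right] = \left[ P_1, P_2 \right] \begin{bmatrix} \bar{A}_o & 0 \\ \bar{A}_{21} & \bar{A}_{\bar{o}} \end{bmatrix}$$

$$C\left[ P_1, P_2 \right] = \left[ CP_1, CP_2 \right]$$

Because $\mathrm{span}(P_2) = \mathrm{Null}(O)$, $CP_2 = 0$:

$$C\left[ P_1, P_2 \right] = \left[ CP_1, 0 \right]$$

With $x = P\bar{x}$, $P = \left[ P_1, P_2 \right]$:

$$\dot{x} = Ax \;\Rightarrow\; \dot{\bar{x}} = P^{-1}AP\,\bar{x} = \begin{bmatrix} \bar{A}_o & 0 \\ \bar{A}_{21} & \bar{A}_{\bar{o}} \end{bmatrix} \bar{x}$$

$$y = Cx \;\Rightarrow\; y = \begin{bmatrix} \bar{C}_o & 0 \end{bmatrix} \bar{x}$$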

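8. Controllability-observability based decomposition

Controllable sub-space: $S_c$ ($AS_c \subseteq S_c$)

Unobservable sub-space: $S_{\bar{o}}$ ($AS_{\bar{o}} \subseteq S_{\bar{o}}$)

(1) Decompose $S_c$

$S_c \cap S_{\bar{o}}$: $(n_1 - n_{1,1})$-D, $\left[ q_{n_{1,1}+1}, \dots, q_{n_1} \right] = P_{c\bar{o}}$

$S_c$: $n_1$-D

$S_c \setminus S_{\bar{o}}$: $n_{1,1}$-D, $\left[ q_1, \dots, q_{n_{1,1}} \right] = P_{co}$

(2) Decompose $S_{\bar{c}} = R^n \setminus S_c$

$S_{\bar{c}} \cap S_{\bar{o}}$: $(n - n_1 - n_{2,2})$-D, $P_{\bar{c}\bar{o}}$

$S_{\bar{c}}$: $(n - n_1)$-D

$S_{\bar{c}} \setminus S_{\bar{o}}$: $n_{2,2}$-D, $P_{\bar{c}o}$

$$P = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix}$$

(i) $P_c = \begin{bmatrix} P_{co} & P_{c\bar{o}} \end{bmatrix}$, $AP_{co} \in \mathrm{span}(P_c)$:

$$AP_{co} = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} * \\ * \\ 0 \\ 0 \end{bmatrix}$$

(ii) $P_{\bar{o}} = \begin{bmatrix} P_{c\bar{o}} & P_{\bar{c}\bar{o}} \end{bmatrix}$, $AP_{\bar{c}\bar{o}} \in \mathrm{span}(P_{\bar{o}})$:

$$AP_{\bar{c}\bar{o}} = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} 0 \\ * \\ 0 \\ * \end{bmatrix}$$

(iii) $AP_{c\bar{o}} \in \mathrm{span}(P_c)$, $AP_{c\bar{o}} \in \mathrm{span}(P_{\bar{o}})$. So $AP_{c\bar{o}} \in \mathrm{span}(P_{c\bar{o}})$:

$$AP_{c\bar{o}} = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} 0 \\ * \\ 0 \\ 0 \end{bmatrix}$$

(iv)

$$AP_{\bar{c}o} = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} * \\ * \\ * \\ * \end{bmatrix}$$

(v)

$$A \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} * & 0 & * & 0 \\ * & * & * & * \\ 0 & 0 & * & 0 \\ 0 & 0 & * & * \end{bmatrix}$$

(vi) $B \in \mathrm{span}\begin{bmatrix} P_{co} & P_{c\bar{o}} \end{bmatrix}$:

$$B = \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} \begin{bmatrix} * \\ * \\ 0 \\ 0 \end{bmatrix}, \qquad \bar{B} = P^{-1}B = \begin{bmatrix} * \\ * \\ 0 \\ 0 \end{bmatrix}$$

(vii)

$$\bar{C} = C \begin{bmatrix} P_{co} & P_{c\bar{o}} & P_{\bar{c}o} & P_{\bar{c}\bar{o}} \end{bmatrix} = \begin{bmatrix} CP_{co} & CP_{c\bar{o}} & CP_{\bar{c}o} & CP_{\bar{c}\bar{o}} \end{bmatrix} = \begin{bmatrix} \bar{C}_{co} & 0 & \bar{C}_{\bar{c}o} & 0 \end{bmatrix}$$

Therefore we get Theorem 6.7 (page 163).

(viii) $\hat{G}(s) = \bar{C}\left( sI - \bar{A} \right)^{-1} \bar{B}$ ? We first compute $z = \left( sI - \bar{A} \right)^{-1} \bar{B}$ through

$$\left( sI - \bar{A} \right) z = \bar{B}$$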

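6.5 Conditions in Jordan-form equations

$\dot{x} = Jx + Bu$, $y = Cx$

$$J = \begin{bmatrix} J_1 & 0 \\ 0 & J_2 \end{bmatrix} = \begin{bmatrix} J_{1,1} & 0 & 0 \\ 0 & J_{1,2} & 0 \\ 0 & 0 & J_2 \end{bmatrix} = \begin{bmatrix} \lambda_1 & 1 & 0 & 0 \\ 0 & \lambda_1 & 0 & 0 \\ 0 & 0 & \lambda_1 & 0 \\ 0 & 0 & 0 & \lambda_2 \end{bmatrix}$$

$$B = \begin{bmatrix} B_1 \\ B_2 \end{bmatrix} = \begin{bmatrix} B_{1,1} \\ B_{1,2} \\ B_2 \end{bmatrix} = \begin{bmatrix} b_{1,1,1} \\ b_{1,1,2} \\ b_{1,2,1} \\ b_{2,1,1} \end{bmatrix}$$

We want to verify the controllability condition in Theorem 6.8 (page 165)

Controllable $\Leftrightarrow$ $v^T \left[ J - \lambda I,\ B \right] = 0$ has only the solution $v = 0$ for any $\lambda$.

(i) When $\lambda \ne \lambda_1$ and $\lambda \ne \lambda_2$, $J - \lambda I$ is non-singular. So we can easily get $v = 0$.

(ii) When $\lambda = \lambda_2$,

$$v^T \left[ J - \lambda_2 I,\ B \right] = \begin{bmatrix} v_1 & v_2 & v_3 & v_4 \end{bmatrix} \begin{bmatrix} \lambda_1 - \lambda_2 & 1 & 0 & 0 & b_{1,1,1} \\ 0 & \lambda_1 - \lambda_2 & 0 & 0 & b_{1,1,2} \\ 0 & 0 & \lambda_1 - \lambda_2 & 0 & b_{1,2,1} \\ 0 & 0 & 0 & 0 & b_{2,1,1} \end{bmatrix} = 0$$

Because $\lambda_1 - \lambda_2 \ne 0$, we get $v_1 = v_2 = v_3 = 0$.

$$v_4 b_{2,1,1} = 0 \Rightarrow v_4 = 0 \quad \Leftrightarrow \quad b_{2,1,1} \ne 0$$

(iii) When $\lambda = \lambda_1$,

$$v^T \left[ J - \lambda_1 I,\ B \right] = \begin{bmatrix} v_1 & v_2 & v_3 & v_4 \end{bmatrix} \begin{bmatrix} 0 & 1 & 0 & 0 & b_{1,1,1} \\ 0 & 0 & 0 & 0 & b_{1,1,2} \\ 0 & 0 & 0 & 0 & b_{1,2,1} \\ 0 & 0 & 0 & \lambda_2 - \lambda_1 & b_{2,1,1} \end{bmatrix} = 0$$

We first get $v_1 = 0$.

Second, $\lambda_2 - \lambda_1 \ne 0 \Rightarrow v_4 = 0$.

$$v^T B = 0 \;\Rightarrow\; v_2 b_{1,1,2} + v_3 b_{1,2,1} = 0$$

$v_2 = v_3 = 0$ is the only solution $\Leftrightarrow$ $b_{1,1,2}$, $b_{1,2,1}$ (the rows of $B$ matching the last rows of the Jordan blocks of $\lambda_1$) are linearly independent.

Fig 6.9 (page 167): $b_{1,1,2}$ can affect both $x_1$ and $x_2$.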

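6.6 Discrete-time state equations

Model:

$$x[k+1] = Ax[k] + Bu[k]$$

$$y[k] = Cx[k]$$

1. Controllable $\ne$ reachable

$$x[k+1] = 0 \cdot x[k] + 0 \cdot u[k]$$

It is controllable, but not reachable: $S_R \ne R^n$.

2. Reachability condition

$$S_R = \mathrm{span}\begin{bmatrix} B & AB & \cdots & A^{n-1}B \end{bmatrix}$$

Reachability $\Rightarrow$ Controllability

3. Controllability condition

$$\mathrm{span}(A^n) \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix} \;\Leftrightarrow\; \text{controllable.}$$

(1) $\mathrm{span}(A^n) \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$ means: after $n$ steps, any initial state can be moved into 0.

(2) controllable $\Rightarrow$ ? $\mathrm{span}(A^n) \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$

Suppose $A = PJP^{-1}$,

$$J = \begin{bmatrix} J_1 & 0 & 0 \\ 0 & J_2 & 0 \\ 0 & 0 & J_3 \end{bmatrix}, \quad J_1 = \begin{bmatrix} \lambda_1 & 1 & 0 \\ 0 & \lambda_1 & 1 \\ 0 & 0 & \lambda_1 \end{bmatrix}, \quad J_2 = \begin{bmatrix} \lambda_2 & 1 \\ 0 & \lambda_2 \end{bmatrix}, \quad J_3 = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}, \quad \lambda_3 = 0$$

$P = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix}$, $P_1 = \begin{bmatrix} q_{1,1} & q_{1,2} & q_{1,3} \end{bmatrix}$, $P_2 = \begin{bmatrix} q_{2,1} & q_{2,2} \end{bmatrix}$, $P_3 = \begin{bmatrix} q_{3,1} & q_{3,2} & q_{3,3} \end{bmatrix}$

$$A^l = PJ^lP^{-1} = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^l & 0 & 0 \\ 0 & J_2^l & 0 \\ 0 & 0 & J_3^l \end{bmatrix} P^{-1} = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^l & 0 & 0 \\ 0 & J_2^l & 0 \\ 0 & 0 & 0 \end{bmatrix} P^{-1}, \quad l \ge n$$

with $\det J_1^l \ne 0$, $\det J_2^l \ne 0$. So

$$\mathrm{span}(A^l) = \mathrm{span}\begin{bmatrix} P_1 & P_2 \end{bmatrix} = \mathrm{span}(A^n), \quad l \ge n$$

(i) Let $x[0] = q_{1,1}$. Due to controllability, we know there exists $N_1$ such that

$$A^{N_1} q_{1,1} = -\sum_{l=0}^{N_1 - 1} A^{N_1 - 1 - l} B u[l]$$

$$A^{N_1} q_{1,1} = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^{N_1} & 0 & 0 \\ 0 & J_2^{N_1} & 0 \\ 0 & 0 & 0 \end{bmatrix} P^{-1} q_{1,1} = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^{N_1} & 0 & 0 \\ 0 & J_2^{N_1} & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} v_1 \\ 0 \\ 0 \end{bmatrix} = \lambda_1^{N_1} q_{1,1}, \quad v_1 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$$

$$\lambda_1^{N_1} q_{1,1} = -\sum_{l=0}^{N_1 - 1} A^{N_1 - 1 - l} B u[l] \in \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$$

So $q_{1,1} \in \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$

(ii) Let $x[0] = q_{1,2}$. Due to controllability, we know there exists $N_2$ such that

$$A^{N_2} q_{1,2} = -\sum_{l=0}^{N_2 - 1} A^{N_2 - 1 - l} B u[l]$$

$$A^{N_2} q_{1,2} = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^{N_2} & 0 & 0 \\ 0 & J_2^{N_2} & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} v_2 \\ 0 \\ 0 \end{bmatrix} = \lambda_1^{N_2} q_{1,2} + N_2 \lambda_1^{N_2 - 1} q_{1,1}, \quad v_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$$

$$\lambda_1^{N_2} q_{1,2} = -N_2 \lambda_1^{N_2 - 1} q_{1,1} - \sum_{l=0}^{N_2 - 1} A^{N_2 - 1 - l} B u[l] \in \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$$

So $q_{1,2} \in \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$

(iii) Similarly we can prove that $q_{1,3}, q_{2,1}, q_{2,2} \in \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$. So

$$\mathrm{span}\begin{bmatrix} P_1 & P_2 \end{bmatrix} \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$$

$$A^n = \begin{bmatrix} P_1 & P_2 & P_3 \end{bmatrix} \begin{bmatrix} J_1^n & 0 & 0 \\ 0 & J_2^n & 0 \\ 0 & 0 & 0 \end{bmatrix} P^{-1}, \qquad \mathrm{span}(A^n) = \mathrm{span}\begin{bmatrix} P_1 & P_2 \end{bmatrix} \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$$

Therefore $\mathrm{span}(A^n) \subseteq \mathrm{span}\begin{bmatrix} B & \cdots & A^{n-1}B \end{bmatrix}$.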
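The two discrete-time conditions can be checked side by side. This is a sketch with NumPy; the function names and the two example pairs are hypothetical. The inclusion $\mathrm{span}(A^n) \subseteq \mathrm{span}[B \cdots A^{n-1}B]$ is tested by checking that appending $A^n$ to CTRB does not raise the rank.

```python
import numpy as np

def ctrb(A, B):
    n = A.shape[0]
    return np.hstack([np.linalg.matrix_power(A, l) @ B for l in range(n)])

def is_reachable_dt(A, B):
    return bool(np.linalg.matrix_rank(ctrb(A, B)) == A.shape[0])

def is_controllable_dt(A, B):
    # span(A^n) inside span(CTRB)  <=>  rank[CTRB, A^n] == rank(CTRB)
    n = A.shape[0]
    R = ctrb(A, B)
    An = np.linalg.matrix_power(A, n)
    return bool(np.linalg.matrix_rank(np.hstack([R, An])) == np.linalg.matrix_rank(R))

# Nilpotent A: every state reaches 0 after n steps even with u = 0.
A_nil = np.array([[0.0, 1.0], [0.0, 0.0]])
B_nil = np.array([[1.0], [0.0]])

# The mode with eigenvalue 1 is untouched by the input.
A_fix = np.array([[1.0, 0.0], [0.0, 0.0]])
B_fix = np.array([[0.0], [1.0]])

print(is_reachable_dt(A_nil, B_nil), is_controllable_dt(A_nil, B_nil))
print(is_reachable_dt(A_fix, B_fix), is_controllable_dt(A_fix, B_fix))
```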

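6.7 Controllability after sampling

We want to verify Theorem 6.9 (page 173) through an example.

$$\dot{x} = Ax + Bu, \quad A = \begin{bmatrix} \lambda_1 & 1 & 0 & 0 & 0 \\ 0 & \lambda_1 & 1 & 0 & 0 \\ 0 & 0 & \lambda_1 & 0 & 0 \\ 0 & 0 & 0 & \lambda_2 & 1 \\ 0 & 0 & 0 & 0 & \lambda_2 \end{bmatrix}, \quad B = \begin{bmatrix} * \\ * \\ B_{1,3} \\ * \\ B_{2,2} \end{bmatrix}$$

$x[k] = x(kT)$, $u(t) = u[k]$, $kT \le t < (k+1)T$

$$x[k+1] = A_d x[k] + B_d u[k]$$

$$A_d = \begin{bmatrix} e^{\lambda_1 T} & a_{12} & a_{13} & 0 & 0 \\ 0 & e^{\lambda_1 T} & a_{23} & 0 & 0 \\ 0 & 0 & e^{\lambda_1 T} & 0 & 0 \\ 0 & 0 & 0 & e^{\lambda_2 T} & a_{45} \\ 0 & 0 & 0 & 0 & e^{\lambda_2 T} \end{bmatrix}, \quad a_{12} \ne 0,\ a_{13} \ne 0,\ a_{23} \ne 0,\ a_{45} \ne 0$$

$$B_d = \int_0^T e^{A\tau}\, d\tau\, B$$

$\mathrm{eig}(A_d) = \left\{ e^{\lambda_1 T}, e^{\lambda_2 T} \right\}$. Under the condition of Theorem 6.9, $e^{\lambda_1 T} \ne e^{\lambda_2 T}$.

$$v^T \left[ A_d - e^{\lambda_1 T} I,\ B_d \right] = 0 \;\Rightarrow\; v = 0 \ ?$$

$$v^T \left( A_d - e^{\lambda_1 T} I \right) = \begin{bmatrix} v_1 & v_2 & v_3 & v_4 & v_5 \end{bmatrix} \begin{bmatrix} 0 & a_{12} & a_{13} & 0 & 0 \\ 0 & 0 & a_{23} & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & e^{\lambda_2 T} - e^{\lambda_1 T} & a_{45} \\ 0 & 0 & 0 & 0 & e^{\lambda_2 T} - e^{\lambda_1 T} \end{bmatrix} = 0$$

So

$v_1 a_{12} = 0 \Rightarrow v_1 = 0$,

$v_1 a_{13} + v_2 a_{23} = v_2 a_{23} = 0 \Rightarrow v_2 = 0$,

$v_4 \left( e^{\lambda_2 T} - e^{\lambda_1 T} \right) = 0 \Rightarrow v_4 = 0$,

$v_4 a_{45} + v_5 \left( e^{\lambda_2 T} - e^{\lambda_1 T} \right) = v_5 \left( e^{\lambda_2 T} - e^{\lambda_1 T} \right) = 0 \Rightarrow v_5 = 0$,

$v_3 = $ ?

$$v^T B_d = \begin{bmatrix} 0 & 0 & v_3 & 0 & 0 \end{bmatrix} \int_0^T e^{A\tau}\, d\tau\, B = \int_0^T \begin{bmatrix} 0 & 0 & v_3 e^{\lambda_1 \tau} & 0 & 0 \end{bmatrix} d\tau \begin{bmatrix} * \\ * \\ B_{1,3} \\ * \\ B_{2,2} \end{bmatrix} = v_3 \int_0^T e^{\lambda_1 \tau}\, d\tau\, B_{1,3}$$

$\int_0^T e^{\lambda_1 \tau}\, d\tau \ne 0$, and $B_{1,3} \ne 0$ by controllability of $(A, B)$.

So $v^T B_d = 0 \Rightarrow v_3 = 0$.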
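The role of the sampling period can be seen on a small example. The sketch below assumes `scipy.signal.cont2discrete` with zero-order hold; the harmonic oscillator `(A, B)` is an illustrative choice, not the example above. Its eigenvalues are $\pm j$, so for $T = \pi$ both map to $e^{\pm j\pi} = -1$ and the distinctness requirement used in the argument fails.

```python
import numpy as np
from scipy.signal import cont2discrete

# Illustrative controllable continuous-time pair with eigenvalues +j and -j.
A = np.array([[0.0, 1.0], [-1.0, 0.0]])
B = np.array([[0.0], [1.0]])
C = np.eye(2)
D = np.zeros((2, 1))

def sampled_ctrb_rank(T):
    # Zero-order-hold discretization: Ad = e^{AT}, Bd = int_0^T e^{A tau} dtau B
    Ad, Bd, *_ = cont2discrete((A, B, C, D), T, method="zoh")
    R = np.hstack([Bd, Ad @ Bd])
    return int(np.linalg.matrix_rank(R, tol=1e-9))

rank_generic = sampled_ctrb_rank(1.0)       # generic sampling period
rank_critical = sampled_ctrb_rank(np.pi)    # e^{jT} = e^{-jT}: eigenvalues collide
print(rank_generic, rank_critical)
```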

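6.8 LTV state equations

1. Model

$$\dot{x} = A(t)x + B(t)u$$

$$y = C(t)x$$

$$x(t) = \Phi(t, t_0)\, x(t_0) + \int_{t_0}^{t} \Phi(t, \tau) B(\tau) u(\tau)\, d\tau$$

2. Controllability:

$$x(t_0) = x_0 \;\rightarrow\; x(t) = \Phi(t, t_0)\, x_0 + \int_{t_0}^{t} \Phi(t, \tau) B(\tau) u(\tau)\, d\tau = 0$$

$\Phi^{-1}(t, t_0) = \Phi(t_0, t)$, $\Phi(t_0, t)\Phi(t, \tau) = \Phi(t_0, \tau)$,

$$x_0 = -\int_{t_0}^{t} \Phi(t_0, \tau) B(\tau) u(\tau)\, d\tau$$

Controllability matrix:

$$W_c(t_0, t) = \int_{t_0}^{t} \Phi(t_0, \tau) B(\tau) B^T(\tau) \Phi^T(t_0, \tau)\, d\tau \ge 0$$

Theorem 6.11 (page 176)

The system is controllable at time $t_0$ $\Leftrightarrow$ $\exists t$, $W_c(t_0, t)$ is non-singular $\Leftrightarrow$ $W_c(t_0, \infty)$ is non-singular.

Proof: (1) $\exists t_1$, $W_c(t_0, t_1)$ is non-singular.

$\forall x_0$, let $u(\tau) = -B^T(\tau) \Phi^T(t_0, \tau) W_c^{-1}(t_0, t_1)\, x_0$.

Then $x(t_1) = 0$.

(2) The system is controllable at time $t_0$ $\Rightarrow$ ?

Suppose $W_c(t_0, t)$ is singular for any $t$.

$$t_1 \le t_2 \;\Rightarrow\; W_c(t_0, t_1) \le W_c(t_0, t_2)$$

$$v^T W_c(t_0, t_1) v = 0 \;\Leftarrow\; v^T W_c(t_0, t_2) v = 0$$

So $\exists v \ne 0$ with $v^T W_c(t_0, \infty) v = 0$, and

$$v^T W_c(t_0, t) v = \int_{t_0}^{t} v^T \Phi(t_0, \tau) B(\tau) B^T(\tau) \Phi^T(t_0, \tau) v\, d\tau = \int_{t_0}^{t} \left\| v^T \Phi(t_0, \tau) B(\tau) \right\|_2^2 d\tau = 0, \quad \forall t$$

$$v^T \Phi(t_0, \tau) B(\tau) = 0, \quad \forall \tau$$

Let $x_0 = v \ne 0$. The system is controllable, then $\exists t_1, u(\tau)$,

$$v = -\int_{t_0}^{t_1} \Phi(t_0, \tau) B(\tau) u(\tau)\, d\tau$$

$$v^T v = -\int_{t_0}^{t_1} v^T \Phi(t_0, \tau) B(\tau) u(\tau)\, d\tau = 0$$

$v = 0$. Contradiction!

2. Theorem 6.12 (teach yourselves).

3. Example

(1) $\dot{x} = \begin{bmatrix} 1 & 0 \\ 0 & 2 \end{bmatrix} x + \begin{bmatrix} 1 \\ 1 \end{bmatrix} u$ is controllable

(2) $\dot{x} = \begin{bmatrix} 1 & 0 \\ 0 & 2 \end{bmatrix} x + \begin{bmatrix} e^{t} \\ e^{2t} \end{bmatrix} u$

$$\Phi(t, \tau) = e^{A(t - \tau)} = \begin{bmatrix} e^{t - \tau} & 0 \\ 0 & e^{2(t - \tau)} \end{bmatrix}, \qquad \Phi(t_0, \tau) B(\tau) = \begin{bmatrix} e^{t_0} \\ e^{2t_0} \end{bmatrix}$$

$$W_c(t_0, t) = \int_{t_0}^{t} \Phi(t_0, \tau) B(\tau) B^T(\tau) \Phi^T(t_0, \tau)\, d\tau = \int_{t_0}^{t} \begin{bmatrix} e^{t_0} \\ e^{2t_0} \end{bmatrix} \begin{bmatrix} e^{t_0} & e^{2t_0} \end{bmatrix} d\tau = (t - t_0) \begin{bmatrix} e^{2t_0} & e^{3t_0} \\ e^{3t_0} & e^{4t_0} \end{bmatrix}$$

For any $t$, $W_c(t_0, t)$ is singular.

Actually, $\begin{bmatrix} e^{t_0} & -1 \end{bmatrix} W_c(t_0, t) = 0$. So the system is NOT controllable.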
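Example (2) can be confirmed by numerical integration. This is a sketch assuming SciPy's `quad_vec`; the values `t0 = 0.5` and `t = 2.0` are arbitrary.

```python
import numpy as np
from scipy.integrate import quad_vec

def Phi(t, tau):
    # State transition matrix of x' = diag(1, 2) x
    return np.diag([np.exp(t - tau), np.exp(2.0 * (t - tau))])

def B(tau):
    return np.array([[np.exp(tau)], [np.exp(2.0 * tau)]])

def Wc(t0, t):
    def integrand(tau):
        g = Phi(t0, tau) @ B(tau)      # equals [e^{t0}, e^{2 t0}]^T for every tau
        return g @ g.T
    W, _ = quad_vec(integrand, t0, t)
    return W

t0, t = 0.5, 2.0
W = Wc(t0, t)
v = np.array([np.exp(t0), -1.0])       # left null vector claimed in the example

print(np.linalg.matrix_rank(W, tol=1e-9), v @ W)
```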

4. Observability: a necessary and sufficient condition is

$$W_o(t_0, t) = \int_{t_0}^{t} \Phi^T(\tau, t_0) C^T(\tau) C(\tau) \Phi(\tau, t_0)\, d\tau \ \text{is non-singular for some } t$$

$\Leftrightarrow$ $W_o(t_0, \infty)$ is non-singular.

Homework: 6.3, 6.4, 6.6, 6.9, 6.10, 6.12, 6.16, 6.19, 6.20, 6.21, 6.22.

Chapter 8. State feedback and state estimators

8.1 Introduction

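1. Control problem formulation:

$r$; $u \rightarrow$ Plant $\rightarrow y$

Design $u$ so that $y$ follows $r$ as closely as possible.

2. Solutions:

(1) Open loop: $r \rightarrow$ controller $\rightarrow u \rightarrow$ Plant $\rightarrow y$

(2) Closed loop/feedback: $r \rightarrow$ controller $\rightarrow u \rightarrow$ Plant $\rightarrow y$, with the plant response fed back to the controller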

A closed-loop controller is usually more robust to parameter variation, noise, etc.

How can we design a good closed-loop/feedback controller? It is the main task of this chapter.

8.2 State feedback

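1. Plant (SISO):

$\dot{x} = Ax + bu$, $y = cx$, $x \in R^n$, $u \in R$

A state feedback controller:

$$u = r - kx = r - \begin{bmatrix} k_1 & k_2 & \cdots & k_n \end{bmatrix} x$$

2. Controllability is preserved under any state feedback: Theorem 8.1 (page 232)

$(A, b)$ is controllable $\Leftrightarrow$ $(A - bk, b)$ is controllable for any state feedback gain $k$

Proof: (1) $(A, b)$ is controllable $\Rightarrow$ ? $(A - bk, b)$ is controllable.

Suppose $(A - bk, b)$ is NOT controllable under a state feedback gain $k$. Then

$\exists \lambda$, $v \ne 0$ such that $v^T \left[ A - bk - \lambda I,\ b \right] = 0$. So

$$v^T b = 0, \qquad v^T (A - bk) = \lambda v^T \;\Rightarrow\; v^T A - v^T b k = v^T A = \lambda v^T$$

$$v^T \left[ A - \lambda I,\ b \right] = 0 \;\Rightarrow\; (A, b) \text{ is NOT controllable.}$$

Contradiction! So $(A - bk, b)$ is controllable for any state feedback gain $k$.

(2) $(A - bk, b)$ is controllable for any state feedback gain $k$ $\Rightarrow$ $(A, b)$ is controllable.

We can choose $k = 0$. Then we get that $(A, b)$ is controllable.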

3. Effects of state feedback.

(1) State feedback may change observability

Example 8.1 (page 233): The original observable system becomes unobservable after the state feedback.

(2) Example 8.2 (page 234):

$$\dot{x} = \begin{bmatrix} 1 & 3 \\ 3 & 1 \end{bmatrix} x + \begin{bmatrix} 1 \\ 0 \end{bmatrix} u$$

The original characteristic polynomial is $\Delta(s) = (s - 4)(s + 2)$, poles (eigenvalues): 4, -2.

State feedback: $u = r - \begin{bmatrix} k_1 & k_2 \end{bmatrix} x$. The closed-loop system equation becomes

$$\dot{x} = \begin{bmatrix} 1 - k_1 & 3 - k_2 \\ 3 & 1 \end{bmatrix} x + \begin{bmatrix} 1 \\ 0 \end{bmatrix} r$$

The new characteristic polynomial is

$$\Delta_f(s) = s^2 + (k_1 - 2)s + (3k_2 - k_1 - 8)$$

By choosing $k_1$, $k_2$ appropriately, the eigenvalues (poles) of $A_f = A - bk$ can be arbitrarily assigned (too good to be true).

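4. Arbitrarily assign the poles of the closed loop system.

Theorem 8.2 (page 234), Theorem 8.3 (page 235).

$\dot{x} = Ax + bu$, $y = cx$, $x \in R^n$, $u \in R$, $n = 4$. The system is controllable.

$$\Delta(s) = s^4 + \alpha_1 s^3 + \alpha_2 s^2 + \alpha_3 s + \alpha_4$$

By a state feedback $u = r - kx = r - \begin{bmatrix} k_1 & k_2 & k_3 & k_4 \end{bmatrix} x$, we can obtain any desired closed-loop characteristic polynomial:

$$\Delta_f(s) = s^4 + \bar{\alpha}_1 s^3 + \bar{\alpha}_2 s^2 + \bar{\alpha}_3 s + \bar{\alpha}_4$$

Proof: We introduce a similarity transformation different from that in the book. A constructive method.

(1) $\mathrm{CTRB} = \begin{bmatrix} b & Ab & A^2b & A^3b \end{bmatrix}$ is non-singular.

Solve $v^T \begin{bmatrix} b & Ab & A^2b & A^3b \end{bmatrix} = \begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}$. The solution exists and is unique.

$$v^T b = 0, \quad v^T A b = 0, \quad v^T A^2 b = 0, \quad v^T A^3 b = 1$$

Let $P = \begin{bmatrix} v^T A^3 \\ v^T A^2 \\ v^T A \\ v^T \end{bmatrix}$. $P$ is non-singular because

$$P \cdot \mathrm{CTRB} = \begin{bmatrix} v^T A^3 \\ v^T A^2 \\ v^T A \\ v^T \end{bmatrix} \begin{bmatrix} b & Ab & A^2b & A^3b \end{bmatrix} = \begin{bmatrix} v^T A^3 b & v^T A^4 b & v^T A^5 b & v^T A^6 b \\ v^T A^2 b & v^T A^3 b & v^T A^4 b & v^T A^5 b \\ v^T A b & v^T A^2 b & v^T A^3 b & v^T A^4 b \\ v^T b & v^T A b & v^T A^2 b & v^T A^3 b \end{bmatrix} = \begin{bmatrix} 1 & * & * & * \\ 0 & 1 & * & * \\ 0 & 0 & 1 & * \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

is a non-singular matrix.

With $\bar{x} = Px$, $\bar{A} = PAP^{-1}$:

$$\bar{A}P = PA = \begin{bmatrix} v^T A^4 \\ v^T A^3 \\ v^T A^2 \\ v^T A \end{bmatrix} = \begin{bmatrix} -\alpha_1 & -\alpha_2 & -\alpha_3 & -\alpha_4 \\ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} v^T A^3 \\ v^T A^2 \\ v^T A \\ v^T \end{bmatrix}$$

using $A^4 = -\alpha_1 A^3 - \alpha_2 A^2 - \alpha_3 A - \alpha_4 I$ (Cayley-Hamilton Theorem).

So

$$\bar{A} = PAP^{-1} = \begin{bmatrix} -\alpha_1 & -\alpha_2 & -\alpha_3 & -\alpha_4 \\ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}, \quad \bar{b} = Pb = \begin{bmatrix} v^T A^3 b \\ v^T A^2 b \\ v^T A b \\ v^T b \end{bmatrix} = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \quad \bar{c} = cP^{-1} = \begin{bmatrix} \beta_1 & \beta_2 & \beta_3 & \beta_4 \end{bmatrix}$$

(2) Under the state feedback $u = r - \bar{k}\bar{x} = r - \begin{bmatrix} \bar{k}_1 & \bar{k}_2 & \bar{k}_3 & \bar{k}_4 \end{bmatrix} \bar{x}$ (with $\bar{k} = kP^{-1}$), the system $\dot{\bar{x}} = \bar{A}\bar{x} + \bar{b}u$, $y = \bar{c}\bar{x}$ becomes

$$\dot{\bar{x}} = \bar{A}_f \bar{x} + \bar{b} r, \quad y = \bar{c}\bar{x}$$

$$\bar{A}_f = \bar{A} - \bar{b}\bar{k} = \begin{bmatrix} -\alpha_1 - \bar{k}_1 & -\alpha_2 - \bar{k}_2 & -\alpha_3 - \bar{k}_3 & -\alpha_4 - \bar{k}_4 \\ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}$$

The new characteristic polynomial is

$$\Delta_f(s) = \det\left( sI - \bar{A}_f \right) = \det \begin{bmatrix} s + \alpha_1 + \bar{k}_1 & \alpha_2 + \bar{k}_2 & \alpha_3 + \bar{k}_3 & \alpha_4 + \bar{k}_4 \\ -1 & s & 0 & 0 \\ 0 & -1 & s & 0 \\ 0 & 0 & -1 & s \end{bmatrix}_{4 \times 4}$$

Expanding along the last column ($n = 4$):

$$\Delta_f(s) = s \det \begin{bmatrix} s + \alpha_1 + \bar{k}_1 & \alpha_2 + \bar{k}_2 & \alpha_3 + \bar{k}_3 \\ -1 & s & 0 \\ 0 & -1 & s \end{bmatrix}_{3 \times 3} + (-1)^{1+n} \left( \alpha_4 + \bar{k}_4 \right) (-1)^{n-1}$$

$$= s \left( s \det \begin{bmatrix} s + \alpha_1 + \bar{k}_1 & \alpha_2 + \bar{k}_2 \\ -1 & s \end{bmatrix}_{2 \times 2} + \alpha_3 + \bar{k}_3 \right) + \alpha_4 + \bar{k}_4$$

$$= s \left( s \left( s \left( s + \alpha_1 + \bar{k}_1 \right) + \alpha_2 + \bar{k}_2 \right) + \alpha_3 + \bar{k}_3 \right) + \alpha_4 + \bar{k}_4$$

$$= s^4 + \left( \alpha_1 + \bar{k}_1 \right) s^3 + \left( \alpha_2 + \bar{k}_2 \right) s^2 + \left( \alpha_3 + \bar{k}_3 \right) s + \left( \alpha_4 + \bar{k}_4 \right)$$

By choosing $\bar{k}_i = \bar{\alpha}_i - \alpha_i$, we can get any desired $\Delta_f(s) = s^4 + \bar{\alpha}_1 s^3 + \bar{\alpha}_2 s^2 + \bar{\alpha}_3 s + \bar{\alpha}_4$, i.e., any poles can be achieved.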
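The constructive steps (1) and (2) translate directly into a small routine. This is a sketch for the SISO case with NumPy; the function name `place_siso` is hypothetical, and the gain is mapped back to the original coordinates with $k = \bar{k}P$ because $\bar{x} = Px$. The test data reuse the plant of Example 8.2 with the illustrative target poles $-1 \pm 2j$.

```python
import numpy as np

def place_siso(A, b, desired_poles):
    n = A.shape[0]
    ctrb = np.hstack([np.linalg.matrix_power(A, l) @ b for l in range(n)])
    # Solve v^T [b, Ab, ..., A^{n-1}b] = [0, ..., 0, 1]
    e_n = np.zeros(n)
    e_n[-1] = 1.0
    v = np.linalg.solve(ctrb.T, e_n)
    # P = [v^T A^{n-1}; ...; v^T A; v^T]
    P = np.vstack([v @ np.linalg.matrix_power(A, n - 1 - i) for i in range(n)])
    alpha = np.poly(A)[1:]                   # open-loop coefficients alpha_1..alpha_n
    alpha_bar = np.poly(desired_poles)[1:]   # desired coefficients
    k_bar = np.real(alpha_bar - alpha)       # gain in the transformed coordinates
    return k_bar @ P                         # u = r - k_bar x_bar = r - (k_bar P) x

A = np.array([[1.0, 3.0], [3.0, 1.0]])
b = np.array([[1.0], [0.0]])
k = place_siso(A, b, [-1 + 2j, -1 - 2j])

closed_loop_poles = np.linalg.eigvals(A - b @ k.reshape(1, -1))
print(k, closed_loop_poles)
```

For this plant the characteristic polynomial $s^2 + (k_1 - 2)s + (3k_2 - k_1 - 8)$ of Example 8.2 gives the same gain by hand: matching $s^2 + 2s + 5$ requires $k_1 = 4$, $k_2 = 17/3$.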

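(3) Compute $\hat{g}(s) = \bar{c}\left( sI - \bar{A} \right)^{-1} \bar{b}$. Let $\left( sI - \bar{A} \right)^{-1} \bar{b} = z$. Then

$$\bar{b} = \left( sI - \bar{A} \right) z: \quad \begin{bmatrix} s + \alpha_1 & \alpha_2 & \alpha_3 & \alpha_4 \\ -1 & s & 0 & 0 \\ 0 & -1 & s & 0 \\ 0 & 0 & -1 & s \end{bmatrix} \begin{bmatrix} z_1 \\ z_2 \\ z_3 \\ z_4 \end{bmatrix} = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}$$

So

$$z_3 = s z_4, \quad z_2 = s z_3, \quad z_1 = s z_2$$

$$\left( s + \alpha_1 \right) z_1 + \alpha_2 z_2 + \alpha_3 z_3 + \alpha_4 z_4 = 1 \;\Rightarrow\; z_4 = \frac{1}{s^4 + \alpha_1 s^3 + \alpha_2 s^2 + \alpha_3 s + \alpha_4} = \frac{1}{\Delta(s)}$$

$$\left( sI - \bar{A} \right)^{-1} \bar{b} = \frac{1}{\Delta(s)} \begin{bmatrix} s^3 \\ s^2 \\ s \\ 1 \end{bmatrix}$$

So

$$\hat{g}(s) = \bar{c}\left( sI - \bar{A} \right)^{-1} \bar{b} = \frac{\beta_1 s^3 + \beta_2 s^2 + \beta_3 s + \beta_4}{s^4 + \alpha_1 s^3 + \alpha_2 s^2 + \alpha_3 s + \alpha_4}$$

Under the state feedback,

$$\dot{\bar{x}} = \bar{A}_f \bar{x} + \bar{b} r, \quad y = \bar{c}\bar{x}$$

Similarly, we get

$$\hat{g}_f(s) = \bar{c}\left( sI - \bar{A}_f \right)^{-1} \bar{b} = \frac{\beta_1 s^3 + \beta_2 s^2 + \beta_3 s + \beta_4}{s^4 + \bar{\alpha}_1 s^3 + \bar{\alpha}_2 s^2 + \bar{\alpha}_3 s + \bar{\alpha}_4}$$

We can see that the numerator of the transfer function is not changed.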

5. How can we choose the target closed-loop poles?

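8.2.1 Solving a Lyapunov equation (to achieve the desired poles)

1. Procedure 8.1 (SISO) (page 239)

(1). $F$: whose eigenvalues are the desired poles. $F$ and $A$ have no common eigenvalue.

(2). $\bar{k} \in R^{1 \times n}$: $(F, \bar{k})$ is observable.

(3). Solve $AT - TF = b\bar{k}$ w.r.t. $T$.

(4). Feedback gain: $k = \bar{k}T^{-1}$.

When $T$ is non-singular,

$$A - bk = A - b\bar{k}T^{-1} = A - (AT - TF)T^{-1} = A - ATT^{-1} + TFT^{-1} = TFT^{-1}$$

So $A - bk$ has the same eigenvalues as $F$.

2. $T$ is non-singular. Theorem 8.4 (page 240)

$(A, b)$ is controllable, $(F, \bar{k})$ is observable and $F$ and $A$ have no common eigenvalues $\Rightarrow$ $T$ is non-singular.

Proof: (1). The Lyapunov equation has a unique solution $T$ because $F$ and $A$ have no common eigenvalues.

(2). Suppose $n = 4$. The characteristic polynomial of $A$ is

$$\Delta(s) = s^4 + \alpha_1 s^3 + \alpha_2 s^2 + \alpha_3 s + \alpha_4$$

$\Delta(A) = 0$. But $\Delta(F)$ is non-singular. The reason is shown below.

$F = PJ_FP^{-1}$, $\Delta(F) = P\Delta(J_F)P^{-1}$. $\Delta(J_F)$ is an upper triangular matrix whose diagonal elements are $\Delta(\lambda_{F,i})$, all of which are non-zero because $F$ and $A$ have no common eigenvalues.

(3).

$$IT - TI = 0$$

$$AT - TF = b\bar{k}$$

$$A^2T = A(TF + b\bar{k}) = (AT)F + Ab\bar{k} = (TF + b\bar{k})F + Ab\bar{k} \;\Rightarrow\; A^2T - TF^2 = Ab\bar{k} + b\bar{k}F$$

$$A^3T = A\left( A^2T \right) = ATF^2 + A^2b\bar{k} + Ab\bar{k}F \;\Rightarrow\; A^3T - TF^3 = A^2b\bar{k} + Ab\bar{k}F + b\bar{k}F^2$$

$$A^4T - TF^4 = A^3b\bar{k} + A^2b\bar{k}F + Ab\bar{k}F^2 + b\bar{k}F^3$$

Multiplying these equations by $\alpha_4, \alpha_3, \alpha_2, \alpha_1, 1$ respectively and adding them:

$$\Delta(A)T - T\Delta(F) = -T\Delta(F) = \begin{bmatrix} b & Ab & A^2b & A^3b \end{bmatrix} \begin{bmatrix} \alpha_3 & \alpha_2 & \alpha_1 & 1 \\ \alpha_2 & \alpha_1 & 1 & 0 \\ \alpha_1 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} \bar{k} \\ \bar{k}F \\ \bar{k}F^2 \\ \bar{k}F^3 \end{bmatrix}$$

Each of the three square matrices on the right side is non-singular. So $T$ is non-singular.

$(F, \bar{k})$ can be a Jordan form, an observable form, etc.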
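Procedure 8.1 can be run with a Sylvester solver. The sketch below assumes `scipy.linalg.solve_sylvester`, which solves $AX + XB = Q$; the plant reuses Example 8.2, while `F` and `k_bar` are illustrative choices (eigenvalues of `F` are $-1 \pm 2j$ and $(F, \bar{k})$ is observable).

```python
import numpy as np
from scipy.linalg import solve_sylvester

A = np.array([[1.0, 3.0], [3.0, 1.0]])     # eigenvalues 4 and -2
b = np.array([[1.0], [0.0]])

# Step (1): F carries the desired poles -1 +/- 2j (no eigenvalue shared with A).
F = np.array([[-1.0, 2.0], [-2.0, -1.0]])
# Step (2): k_bar such that (F, k_bar) is observable.
k_bar = np.array([[1.0, 0.0]])

# Step (3): AT - TF = b k_bar, written as A T + T (-F) = b k_bar.
T = solve_sylvester(A, -F, b @ k_bar)

# Step (4): k = k_bar T^{-1}
k = k_bar @ np.linalg.inv(T)

closed_loop_poles = np.linalg.eigvals(A - b @ k)
print(k, closed_loop_poles)
```

For a controllable single-input plant the gain that places a given set of poles is unique, so the result should coincide with the gain obtained from the characteristic polynomial of Example 8.2.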


8.3 Regulation and tracking

1. (1). Regulator problem: To find a state feedback controller so that the response due to the initial condition will die out at a desired rate.

(2). Tracking: The response $y(t)$ approaches the reference signal $r(t)$. Asymptotic tracking of a step reference signal; servomechanism problem: time-varying $r(t)$.

2. Mathematical description

(1) Model:

$\dot{x} = Ax + bu$, $y = cx$, $x \in R^n$, $u \in R$

(2). Regulation: Control law: $u = r - kx$

The response due to $x(0)$: $y(t) = ce^{(A - bk)t}x(0)$

The eigenvalues of $(A - bk)$ determine the convergence rate.

(3). Tracking: control law: $u = pr - kx$.

Closed-loop transfer function:

$$\hat{g}_f(s) = p\, \frac{\beta_1 s^3 + \beta_2 s^2 + \beta_3 s + \beta_4}{s^4 + \bar{\alpha}_1 s^3 + \bar{\alpha}_2 s^2 + \bar{\alpha}_3 s + \bar{\alpha}_4}$$

The eigenvalues of $(A - bk)$ lie within a certain region so that the tracking rate can be guaranteed.

For $r(t) = a$, $t \ge 0$,

$$\lim_{t \to \infty} y(t) = \hat{g}_f(0)\, a = p\, \frac{\beta_4}{\bar{\alpha}_4}\, a$$

In order to achieve asymptotic tracking ($y(t) \to a$ as $t \to \infty$), we need

$$p = \frac{\bar{\alpha}_4}{\beta_4}, \quad \beta_4 \ne 0$$
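The feedforward gain can also be computed without forming the transfer function, since $\hat{g}_f(0) = p\, c\,(-(A - bk))^{-1} b$. The sketch below uses this state-space form; the plant is the one from Example 8.2, while the output matrix `c = [1, 2]` and the gain `k` are assumptions made for illustration.

```python
import numpy as np

A = np.array([[1.0, 3.0], [3.0, 1.0]])
b = np.array([[1.0], [0.0]])
c = np.array([[1.0, 2.0]])               # assumed output matrix
k = np.array([[4.0, 17.0 / 3.0]])        # places the poles at -1 +/- 2j

A_f = A - b @ k

# DC gain of c (sI - A_f)^{-1} b at s = 0, i.e. beta_n / alpha_bar_n
dc_gain = (c @ np.linalg.solve(-A_f, b)).item()
p = 1.0 / dc_gain                        # requires dc_gain != 0 (no zero at s = 0)

# Steady state for a step r = a: 0 = A_f x_ss + b p a, y_ss = c x_ss
a = 2.0
x_ss = np.linalg.solve(-A_f, b * p * a)
y_ss = (c @ x_ss).item()
print(p, y_ss)
```

The gain depends on the exact values of the plant parameters through `dc_gain`, which is the sensitivity that motivates the robust design discussed next.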

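8.3.1 Robust tracking and disturbance rejection

Although $p = \bar{\alpha}_4 / \beta_4$ can achieve asymptotic tracking, it strongly depends on the parameters $\bar{\alpha}_4$, $\beta_4$. What happens if there exists parameter variation?

Moreover, it cannot reject disturbance, particularly a step disturbance.

So we need to investigate a robust one.

1. Model

$\dot{x} = Ax + bu + bw$, $y = cx$, $w$: a constant disturbance with unknown magnitude.

2. Solution:

Add an integrator state $x_a$ with $\dot{x}_a = r - y$ and use $u = kx + k_a x_a$. Design $\begin{bmatrix} k & k_a \end{bmatrix}$ to achieve robust tracking and disturbance rejection.

The closed-loop equation

$$\begin{bmatrix} \dot{x} \\ \dot{x}_a \end{bmatrix} = \begin{bmatrix} A + bk & bk_a \\ -c & 0 \end{bmatrix} \begin{bmatrix} x \\ x_a \end{bmatrix} + \begin{bmatrix} 0 \\ 1 \end{bmatrix} r + \begin{bmatrix} b \\ 0 \end{bmatrix} w$$

$$y = \begin{bmatrix} c & 0 \end{bmatrix} \begin{bmatrix} x \\ x_a \end{bmatrix}$$

3. Theorem 8.5 (page 244). If $(A, b)$ is controllable and $\hat{g}(s) = c(sI - A)^{-1}b$ has no zero at 0, then all eigenvalues of $\begin{bmatrix} A + bk & bk_a \\ -c & 0 \end{bmatrix}$ can be assigned arbitrarily by selecting $\begin{bmatrix} k & k_a \end{bmatrix}$.

Proof:

$$\begin{bmatrix} A + bk & bk_a \\ -c & 0 \end{bmatrix} = \begin{bmatrix} A & 0 \\ -c & 0 \end{bmatrix} + \begin{bmatrix} b \\ 0 \end{bmatrix} \begin{bmatrix} k & k_a \end{bmatrix} = \hat{A} + \hat{b}\hat{k}$$

We prove the theorem by showing the controllability of $(\hat{A}, \hat{b})$.

Consider a controllability canonical form of $(A, b)$,

$$A = \begin{bmatrix} -\alpha_1 & -\alpha_2 & -\alpha_3 & -\alpha_4 \\ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}, \quad b = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \quad c = \begin{bmatrix} \beta_1 & \beta_2 & \beta_3 & \beta_4 \end{bmatrix}, \quad \beta_4 \ne 0$$

(1) We investigate the following equation

$$v^T \left[ \hat{A} - \lambda I,\ \hat{b} \right] = 0 \;\Leftrightarrow\; v^T \hat{A} = \lambda v^T,\ v^T \hat{b} = 0, \qquad v^T = \begin{bmatrix} v_1 & v_2 & v_3 & v_4 & v_5 \end{bmatrix}$$

$v^T \hat{b} = v_1$, so $v^T \hat{b} = 0 \Rightarrow v_1 = 0$, which together with $v^T \hat{A} = \lambda v^T$ yields

$$v_2 - \beta_1 v_5 = \lambda v_1 = 0$$

$$v_3 - \beta_2 v_5 = \lambda v_2$$

$$v_4 - \beta_3 v_5 = \lambda v_3$$

$$-\beta_4 v_5 = \lambda v_4$$

$$0 = \lambda v_5 \;\Rightarrow\; \lambda = 0 \ \text{or} \ v_5 = 0$$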