252solnJ1 11/9/07

(Open this document in 'Page Layout' view!)

J. MULTIPLE REGRESSION

1. Two explanatory variables

a. Model

b. Solution.

2. Interpretation

Text 14.1, 14.3, 14.4 [14.1, 14.3, 14.4] (14.1, 14.3, 14.4). Minitab output for 14.4 will be available on the website; you must be able to answer the problem from it.

3. Standard errors

J1, Text Problems 14.9, 14.14, 14.23, 14.26 [14.13, 14.16, 14.20, 14.23] (14.17, 14.19, 14.24, 14.27)

4. Stepwise regression

Problem J2 (J1), Text exercises 14.32, 14.34 [14.28, 14.29] (14.32, 14.33)

(Computer Problem – instructions to be given)

This document includes solutions to text problems 14.1 through 14.26 and Problem J1. Note that there are many extra problems included here. These are to give you extra practice in sometimes difficult computations. You probably need it.

________________________________________________________________________________

Multiple Regression Problems – Interpretation

Exercise 14.1: Assume that the regression equation is $\hat{Y} = 10 + 5X_1 + 3X_2$ and $R^2 = .60$. Explain the meaning of the slopes (5 and 3), the intercept (10) and $R^2 = .60$.

Solution: Answers below are from the Instructor’s Solution Manual.

(a) Holding constant the effect of X2, for each additional unit of X1 the response variable Y is expected to increase on average by 5 units. Holding constant the effect of X1, for each additional unit of X2 the response variable Y is expected to increase on average by 3 units.

(b) The Y-intercept 10 estimates the expected value of Y if X1 and X2 are both 0.

(c) 60% of the variation in Y can be explained or accounted for by the variation in X1 and the variation in X2.

Exercise 14.3: Minitab output (faked) follows. We are trying to predict durability of a shoe, as measured by Ltimp, as a function of a measure of shock-absorbing capacity (Foreimp) and a measurement of change in impact properties over time (Midsole). State the equation and interpret the slopes.

Regression Analysis

The regression equation is

Predictor        Coef      Stdev   t-ratio        p
Constant     -0.02686    0.06985     -0.39   0.7034
Foreimp       0.79116    0.06295     12.57   0.0000
Midsole       0.60484    0.07174      8.43   0.0000

s = 0.2540      R-sq = 94.2%      R-sq(adj) = 93.2%

Analysis of Variance

SOURCE       DF         SS         MS       F       p
Regression    2   12.61020    6.30510   97.69   0.000
Error        12    0.77453    0.06454
Total        14   13.38473


Answers below are (edited) from the Instructor’s Solution Manual.

(a) $\hat{Y} = -0.02686 + 0.79116X_1 + 0.60484X_2$. Rounded to three significant figures, this is Ltimp = -0.0269 + 0.791 Foreimp + 0.605 Midsole.

(b) For a given measurement of the change in impact properties over time, each increase of one unit in forefoot impact absorbing capability is expected to result in an average increase in the long-term ability to absorb shock of 0.79116 units. For a given forefoot impact absorbing capability, each increase of one unit in the measurement of the change in impact properties over time is expected to result in an average increase in the long-term ability to absorb shock of 0.60484 units.

(c) $R^2 = r^2_{Y.12} = SSR/SST = 12.6102/13.38473 = 0.9421$. So 94.21% of the variation in the long-term ability to absorb shock can be explained by variation in forefoot absorbing capability and variation in midsole impact.

(d) The formula in the outline for $R^2$ adjusted for degrees of freedom is
$$R_k^2 = \frac{(n-1)R^2 - k}{n-k-1},$$
where $k = 2$ is the number of independent variables. So
$$R_k^2 = \frac{(15-1)(.9421) - 2}{15-2-1} = .93245.$$
The text uses
$$r^2_{adj} = 1 - (1 - r^2_{Y.12})\frac{n-1}{n-k-1} = 1 - (1 - 0.9421)\frac{15-1}{15-2-1} = 0.93245.$$
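As a check on (c) and (d), here is a minimal Python sketch (the function names are hypothetical, chosen for this illustration) that computes $R^2$ from the ANOVA sums of squares and then the adjusted value with both the outline's formula and the text's formula. The two formulas are algebraically identical. Carrying full precision gives about .9325; the .93245 above comes from rounding $R^2$ to .9421 first.

```python
def r_squared(ssr: float, sst: float) -> float:
    """Coefficient of multiple determination: R^2 = SSR / SST."""
    return ssr / sst


def adj_r2_outline(r2: float, n: int, k: int) -> float:
    """Outline's form: [(n - 1) R^2 - k] / (n - k - 1)."""
    return ((n - 1) * r2 - k) / (n - k - 1)


def adj_r2_text(r2: float, n: int, k: int) -> float:
    """Text's form: 1 - (1 - R^2)(n - 1) / (n - k - 1)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Exercise 14.3: n = 15 observations, k = 2 predictors (Foreimp, Midsole)
r2 = r_squared(12.61020, 13.38473)
print(f"R^2 = {r2:.4f}")
print(f"adjusted (outline) = {adj_r2_outline(r2, 15, 2):.4f}")
print(f"adjusted (text)    = {adj_r2_text(r2, 15, 2):.4f}")
```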

Exercise 14.4: The data set given follows. The problem statement is on the next page.

MTB > Retrieve "C:\Berenson\Data_Files-9th\Minitab\Warecost.MTW".

Retrieving worksheet from file: C:\Berenson\Data_Files-9th\Minitab\Warecost.MTW

# Worksheet was saved on Mon Apr 27 1998

Results for: Warecost.MTW

MTB > Print c1-c3

Data Display

Original Data

Row  DistCost  Sales  Orders
  1     52.95    386    4015
  2     71.66    446    3806
  3     85.58    512    5309
  4     63.69    401    4262
  5     72.81    457    4296
  6     68.44    458    4097
  7     52.46    301    3213
  8     70.77    484    4809
  9     82.03    517    5237
 10     74.39    503    4732
 11     70.84    535    4413
 12     54.08    353    2921
 13     62.98    372    3977
 14     72.30    328    4428
 15     58.99    408    3964
 16     79.38    491    4582
 17     94.44    527    5582
 18     59.74    444    3450
 19     90.50    623    5079
 20     93.24    596    5735
 21     69.33    463    4269
 22     53.71    389    3708
 23     89.18    547    5387
 24     66.80    415    4161


We are trying to predict warehouse costs in $thousands (DistCost) as a function of sales in $thousands (Sales) and the number of orders received (Orders). From the output, the text asks for a) the regression equation, b) the meaning of the slopes and c) the meaning, or rather the lack thereof, of the intercept. It also asks for d) a predicted value and rough e) confidence and f) prediction intervals.

The Minitab regression results follow. Regression was done using the pull-down menu. The 'constant' subcommand is automatic and provides a constant term in the regression. Response was 'Distcost' in c1; predictors were 'sales' and 'orders' in c2 and c3. The VIF option was taken.

According to the Minitab ‘help’ output, “The variance inflation factor is a test for collinearity. The variance inflation factor (VIF) is used to detect whether one predictor has a strong linear association with the remaining predictors (the presence of multicollinearity among the predictors). VIF measures how much the variance of an estimated regression coefficient increases if your predictors are correlated (multicollinear). VIF = 1 indicates no relation; VIF > 1, otherwise. The largest VIF among all predictors is often used as an indicator of severe multicollinearity. Montgomery and Peck suggest that when VIF is greater than 5-10, then the regression coefficients are poorly estimated. You should consider the options to break up the multicollinearity: collecting additional data, deleting predictors, using different predictors, or an alternative to least square regression. (© All Rights Reserved. 2000 Minitab, Inc.).”

The Brief 3 option in Results provides the most complete results possible, including the effect on the regression sum of squares of the independent variables in sequence, the predicted value of the dependent variable ('Fit') and the standard error of that fitted value ('SE Fit'), which is used for the confidence interval.

In order to do prediction and confidence intervals for $x_1 = 400$ and $x_2 = 4500$, these values were placed in c4 and c5 and were mentioned under Options as 'prediction intervals for new observations', and 'fits', 'confidence intervals' and 'prediction intervals' were checked. The two-line Name command below was generated because a storage option was also checked. The predicted value and intervals requested in d, e and f appear at the end of the printout.

MTB > Name c6 = 'PFIT1' c7 = 'CLIM1' c8 = 'CLIM2' c9 = 'PLIM1' &
CONT>   c10 = 'PLIM2'
MTB > Regress c1 2 c2 c3;
SUBC>   Constant;
SUBC>   VIF;
SUBC>   PFits 'PFIT1';
SUBC>   CLimits 'CLIM1'-'CLIM2';
SUBC>   PLimits 'PLIM1'-'PLIM2';
SUBC>   Brief 3.

Regression Analysis: DistCost versus Sales, Orders

Minitab regression Output.

The regression equation is
DistCost = - 2.73 + 0.0471 Sales + 0.0119 Orders

Predictor       Coef   SE Coef      T      P   VIF
Constant      -2.728     6.158  -0.44  0.662
Sales        0.04711   0.02033   2.32  0.031   2.8
Orders      0.011947  0.002249   5.31  0.000   2.8

S = 4.766   R-Sq = 87.6%   R-Sq(adj) = 86.4%

Analysis of Variance

Source          DF      SS      MS      F      P
Regression       2  3368.1  1684.0  74.13  0.000
Residual Error  21   477.0    22.7
Total           23  3845.1

Source  DF  Seq SS
Sales    1  2726.8
Orders   1   641.3

Note that these two add to the Regression SS in the ANOVA.
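The coefficients and sums of squares above can be reproduced from the 24 data rows. Below is a minimal Python sketch, with no statistics library assumed, that solves the two-predictor normal equations in centered ("spare parts") form by Cramer's rule. The helper name `fit_two_predictors` is hypothetical.

```python
# Warecost data (Exercise 14.4), copied from the data display above
dist_cost = [52.95, 71.66, 85.58, 63.69, 72.81, 68.44, 52.46, 70.77, 82.03, 74.39,
             70.84, 54.08, 62.98, 72.30, 58.99, 79.38, 94.44, 59.74, 90.50, 93.24,
             69.33, 53.71, 89.18, 66.80]
sales = [386, 446, 512, 401, 457, 458, 301, 484, 517, 503, 535, 353,
         372, 328, 408, 491, 527, 444, 623, 596, 463, 389, 547, 415]
orders = [4015, 3806, 5309, 4262, 4296, 4097, 3213, 4809, 5237, 4732, 4413, 2921,
          3977, 4428, 3964, 4582, 5582, 3450, 5079, 5735, 4269, 3708, 5387, 4161]


def fit_two_predictors(y, x1, x2):
    """Least squares for Y = b0 + b1 X1 + b2 X2 via the centered normal equations."""
    n = len(y)
    ybar, x1bar, x2bar = sum(y) / n, sum(x1) / n, sum(x2) / n
    # Corrected sums of squares and cross products
    ss_x1 = sum((a - x1bar) ** 2 for a in x1)
    ss_x2 = sum((b - x2bar) ** 2 for b in x2)
    s_x1x2 = sum((a - x1bar) * (b - x2bar) for a, b in zip(x1, x2))
    s_x1y = sum((a - x1bar) * (c - ybar) for a, c in zip(x1, y))
    s_x2y = sum((b - x2bar) * (c - ybar) for b, c in zip(x2, y))
    sst = sum((c - ybar) ** 2 for c in y)
    # Solve s_x1y = ss_x1 b1 + s_x1x2 b2 and s_x2y = s_x1x2 b1 + ss_x2 b2
    det = ss_x1 * ss_x2 - s_x1x2 ** 2
    b1 = (s_x1y * ss_x2 - s_x1x2 * s_x2y) / det
    b2 = (ss_x1 * s_x2y - s_x1x2 * s_x1y) / det
    b0 = ybar - b1 * x1bar - b2 * x2bar
    ssr = b1 * s_x1y + b2 * s_x2y          # regression sum of squares
    return b0, b1, b2, ssr, sst - ssr      # last item is SSE


b0, b1, b2, ssr, sse = fit_two_predictors(dist_cost, sales, orders)
print(f"DistCost = {b0:.3f} + {b1:.5f} Sales + {b2:.6f} Orders")
print(f"SSR = {ssr:.1f}, SSE = {sse:.1f}")
```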


Obs  Sales  DistCost     Fit  SE Fit  Residual  St Resid
  1    386    52.950  63.425   1.332   -10.475    -2.29R
  2    446    71.660  63.755   1.511     7.905     1.75
  3    512    85.580  84.820   1.656     0.760     0.17
  4    401    63.690  67.082   1.332    -3.392    -0.74
  5    457    72.810  70.127   0.999     2.683     0.58
  6    458    68.440  67.796   1.193     0.644     0.14
  7    301    52.460  49.839   2.134     2.621     0.62
  8    484    70.770  77.528   1.139    -6.758    -1.46
  9    517    82.030  84.196   1.525    -2.166    -0.48
 10    503    74.390  77.503   1.126    -3.113    -0.67
 11    535    70.840  75.199   1.838    -4.359    -0.99
 12    353    54.080  48.800   2.277     5.280     1.26
 13    372    62.980  62.311   1.483     0.669     0.15
 14    328    72.300  65.626   2.847     6.674     1.75
 15    408    58.990  63.852   1.152    -4.862    -1.05
 16    491    79.380  75.145   1.069     4.235     0.91
 17    527    94.440  88.789   2.004     5.651     1.31
 18    444    59.740  59.407   2.155     0.333     0.08
 19    623    90.500  87.302   2.535     3.198     0.79
 20    596    93.240  93.867   2.097    -0.627    -0.15
 21    463    69.330  70.087   1.049    -0.757    -0.16
 22    389    53.710  59.898   1.349    -6.188    -1.35
 23    547    89.180  87.401   1.657     1.779     0.40
 24    415    66.800  66.535   1.107     0.265     0.06

R denotes an observation with a large standardized residual

Predicted Values for New Observations

New Obs     Fit  SE Fit        95.0% CI            95.0% PI
      1  69.878   1.663  (66.420, 73.337)  (59.381, 80.376)

Values of Predictors for New Observations

New Obs  Sales  Orders
      1    400    4500

Answers below are (edited) from the Instructor’s Solution Manual.

(a) $\hat{Y} = -2.72825 + 0.047114X_1 + 0.011947X_2$

(b) For a given number of orders, each increase of $1000 in sales is expected to result in an estimated average increase in distribution cost of $47.114. For a given amount of sales, each increase of one order is expected to result in an estimated average increase in distribution cost of $11.95.

(c) The interpretation of $b_0$ has no practical meaning here because it would be the estimated average distribution cost when there were no sales and no orders.

(d) $\hat{Y}_i = -2.72825 + 0.047114(400) + 0.011947(4500) = 69.878$, that is, $69,878.

According to the outline, crude intervals can be given as an approximate confidence interval of $\mu_{Y_0} = \hat{Y}_0 \pm t\frac{s_e}{\sqrt{n}}$ and an approximate prediction interval of $Y_0 = \hat{Y}_0 \pm t s_e$, where $df = n - k - 1$ and $s_e = \sqrt{MSE} = 4.766$ (S in the printout). But we have checked the options for intervals and gotten the intervals below.

(e) $66.420 \le \mu_{Y_0} \le 73.337$

(f) $59.381 \le Y_0 \le 80.376$

(g) $R^2 = r^2_{Y.12} = SSR/SST = 3368.087/3845.13 = 0.8759$. So 87.59% of the variation in distribution cost can be explained by variation in sales and variation in number of orders.

(h) $r^2_{adj} = 1 - (1 - r^2_{Y.12})\frac{n-1}{n-k-1} = 1 - (1 - 0.8759)\frac{24-1}{24-2-1} = 0.8641$, or
$$R_k^2 = \frac{(n-1)R^2 - k}{n-k-1} = \frac{(24-1)(.8759) - 2}{24-2-1} = .8641.$$
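To see how rough the outline's intervals are, this minimal Python sketch compares them with intervals built from Minitab's SE Fit. It assumes that the exact intervals are $\hat{Y}_0 \pm t(\text{SE Fit})$ for the mean and $\hat{Y}_0 \pm t\sqrt{(\text{SE Fit})^2 + s_e^2}$ for a new observation, and it takes $t^{21}_{.025} = 2.080$ from a t table. The crude confidence interval comes out narrower than Minitab's because $s_e/\sqrt{n}$ ignores how far the new point (400, 4500) lies from the center of the data.

```python
import math

# Inputs taken from the Minitab output for Exercise 14.4
b0, b1, b2 = -2.72825, 0.047114, 0.011947
n, k = 24, 2
s_e = math.sqrt(22.7)   # standard error of the estimate, sqrt(MSE) with MSE rounded to 22.7
t_crit = 2.080          # t_.025 with n - k - 1 = 21 df (from a t table)
se_fit = 1.663          # Minitab's "SE Fit" at Sales = 400, Orders = 4500

y_hat = b0 + b1 * 400 + b2 * 4500


def interval(center, half_width):
    """Return (lower, upper) for center +/- half_width."""
    return center - half_width, center + half_width


# The outline's crude intervals
crude_ci = interval(y_hat, t_crit * s_e / math.sqrt(n))
crude_pi = interval(y_hat, t_crit * s_e)

# Intervals built from SE Fit
exact_ci = interval(y_hat, t_crit * se_fit)
exact_pi = interval(y_hat, t_crit * math.sqrt(se_fit ** 2 + s_e ** 2))

print(f"Y-hat = {y_hat:.3f}")
for label, (lo, hi) in [("crude CI", crude_ci), ("crude PI", crude_pi),
                        ("exact CI", exact_ci), ("exact PI", exact_pi)]:
    print(f"{label}: ({lo:.3f}, {hi:.3f})")
```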

Multiple Regression Problems – Significance

Exercise 11.3 in James T. McClave, P. George Benson and Terry Sincich, Statistics for Business and Economics, 8th ed., Prentice Hall, 2001, last year’s text, is given as an introductory example: We wished to estimate the coefficients $\beta_0$, $\beta_1$, $\beta_2$ and $\beta_3$ of the presumably 'true' regression $Y = \beta_0 + \beta_1X_1 + \beta_2X_2 + \beta_3X_3 + \varepsilon$. The line that we estimated had the equation $\hat{Y} = b_0 + b_1X_1 + b_2X_2 + b_3X_3$. In this case we can write our results, as far as they are available, as
$$\hat{Y} = \underset{(?)}{3.4} - \underset{(?)}{4.6}X_1 + \underset{(1.86)}{2.7}X_2 + \underset{(0.29)}{0.93}X_3$$
The numbers in parentheses under the equation are the standard deviations $s_{b_2}$ and $s_{b_3}$. According to the outline, to test $H_0: \beta_i = \beta_{i0}$ against $H_1: \beta_i \ne \beta_{i0}$, use $t_i = \frac{b_i - \beta_{i0}}{s_{b_i}}$. First find the degrees of freedom $df = n - k - 1 = 30 - 3 - 1 = 26$, and since $\alpha = .05$, use $t^{26}_{.025} = 2.056$. Make a diagram with an almost normal curve with 'reject' zones above 2.056 and below -2.056.

a) So, if we wish to test $H_0: \beta_2 = 0$ against $H_1: \beta_2 \ne 0$, use $t_2 = \frac{b_2 - 0}{s_{b_2}} = \frac{2.7}{1.86} = 1.452$. Since this is not in the 'reject' zone, do not reject the null hypothesis and say that $b_2$ is not significant.

b) If we wish to test $H_0: \beta_3 = 0$ against $H_1: \beta_3 \ne 0$, use $t_3 = \frac{b_3 - 0}{s_{b_3}} = \frac{0.93}{0.29} = 3.207$. Since this is in the 'reject' zone, reject the null hypothesis and say that $b_3$ is significant.

c) Note that the size of the coefficient is only important relative to its standard deviation.
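The two t tests above follow one recipe, sketched here in Python under the same assumptions ($n = 30$, $k = 3$, and $t^{26}_{.025} = 2.056$ taken from a t table). The helper `t_ratio` is a hypothetical name for this illustration.

```python
n, k = 30, 3
df = n - k - 1          # 26
t_crit = 2.056          # t_.025 with 26 df (from a t table)


def t_ratio(b, s_b, beta_null=0.0):
    """t statistic for H0: beta = beta_null, given estimate b and its standard error s_b."""
    return (b - beta_null) / s_b


for name, b, s_b in [("b2", 2.7, 1.86), ("b3", 0.93, 0.29)]:
    t = t_ratio(b, s_b)
    decision = "reject H0" if abs(t) > t_crit else "do not reject H0"
    print(f"{name}: t = {t:.3f}, {decision}")
# b2: t = 1.452, do not reject H0
# b3: t = 3.207, reject H0
```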

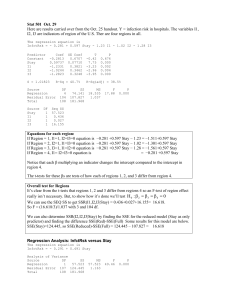

New problem J1 (old text exercise 11.5): Use the following data

Row    y   x1   x2
  1  1.0    0    0
  2  2.7    1    1
  3  3.8    2    4
  4  4.5    3    9
  5  5.0    4   16
  6  5.3    5   25
  7  5.2    6   36

a) Do the regression of $y$ against $x_1$ and $x_2$. Compute b) $R^2$ and c) $s_e$, d) do the ANOVA (not anywhere near as much fun as the Hokey-Pokey) and e) following the formulas in the outline, try to find approximate confidence and prediction intervals when $x_1 = 5$ and $x_2 = 25$. f) You may also run this on Minitab using c1, c2 and c3 with the command

Regress c1 on 2 c2 c3


Solution: Note that $x_2 = x_1^2$, so that you are actually fitting a nonlinear (quadratic) relationship. Capital letters are used instead of small letters throughout the following.

a)

Row     Y   X1   X2   X1²    X2²      Y²    X1Y     X2Y   X1X2
  1   1.0    0    0     0      0    1.00    0.0     0.0      0
  2   2.7    1    1     1      1    7.29    2.7     2.7      1
  3   3.8    2    4     4     16   14.44    7.6    15.2      8
  4   4.5    3    9     9     81   20.25   13.5    40.5     27
  5   5.0    4   16    16    256   25.00   20.0    80.0     64
  6   5.3    5   25    25    625   28.09   26.5   132.5    125
  7   5.2    6   36    36   1296   27.04   31.2   187.2    216
Sum  27.5   21   91    91   2275  123.11  101.5   458.1    441

$\sum Y = 27.5$, $\sum X_1 = 21$, $\sum X_2 = 91$, $\sum X_1^2 = 91$, $\sum X_2^2 = 2275$, $\sum Y^2 = 123.11$, $\sum X_1Y = 101.5$, $\sum X_2Y = 458.1$, $\sum X_1X_2 = 441$ and $n = 7$.

Means: $\bar{Y} = \frac{\sum Y}{n} = \frac{27.5}{7} = 3.92857$, $\bar{X}_1 = \frac{\sum X_1}{n} = \frac{21}{7} = 3.00$ and $\bar{X}_2 = \frac{\sum X_2}{n} = \frac{91}{7} = 13.0$.

Spare Parts:

$SST = SSY = \sum Y^2 - n\bar{Y}^2 = 123.11 - 7(3.92857)^2 = 15.0744$
$S_{X_1Y} = \sum X_1Y - n\bar{X}_1\bar{Y} = 101.5 - 7(3.0)(3.92857) = 19.00$
$S_{X_2Y} = \sum X_2Y - n\bar{X}_2\bar{Y} = 458.1 - 7(13.0)(3.92857) = 100.60$
$SS_{X_1} = \sum X_1^2 - n\bar{X}_1^2 = 91 - 7(3.0)^2 = 28.00$
$SS_{X_2} = \sum X_2^2 - n\bar{X}_2^2 = 2275 - 7(13.0)^2 = 1092$
$S_{X_1X_2} = \sum X_1X_2 - n\bar{X}_1\bar{X}_2 = 441 - 7(3.0)(13.0) = 168.00$

Note that $df = n - k - 1 = 7 - 2 - 1 = 4$. ($k$ is the number of independent variables.) SST is used later.
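The spare parts can be checked by machine. This minimal Python sketch computes each corrected sum directly from the data table (the helper `corrected_cross` is a hypothetical name). It carries full precision, so SST comes out as 15.0743 rather than the 15.0744 obtained above with the rounded mean 3.92857.

```python
y = [1.0, 2.7, 3.8, 4.5, 5.0, 5.3, 5.2]
x1 = [0, 1, 2, 3, 4, 5, 6]
x2 = [a * a for a in x1]            # in this problem X2 = X1 squared
n = len(y)

ybar, x1bar, x2bar = sum(y) / n, sum(x1) / n, sum(x2) / n


def corrected_cross(u, v, ubar, vbar):
    """Sum of u*v minus n * ubar * vbar, the outline's 'spare part' form."""
    return sum(a * b for a, b in zip(u, v)) - n * ubar * vbar


sst = corrected_cross(y, y, ybar, ybar)         # SST = SSY
s_x1y = corrected_cross(x1, y, x1bar, ybar)
s_x2y = corrected_cross(x2, y, x2bar, ybar)
ss_x1 = corrected_cross(x1, x1, x1bar, x1bar)
ss_x2 = corrected_cross(x2, x2, x2bar, x2bar)
s_x1x2 = corrected_cross(x1, x2, x1bar, x2bar)
print(round(sst, 4), round(s_x1y, 2), round(s_x2y, 2), ss_x1, ss_x2, s_x1x2)
```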

The Normal Equations:

$\bar{Y} = b_0 + \bar{X}_1b_1 + \bar{X}_2b_2$   (Eqn. 1)
$S_{X_1Y} = SS_{X_1}b_1 + S_{X_1X_2}b_2$   (Eqn. 2)
$S_{X_2Y} = S_{X_1X_2}b_1 + SS_{X_2}b_2$   (Eqn. 3)

If we fill in the above spare parts, we get:

$19.00 = 28.00b_1 + 168.00b_2$   (Eqn. 2)
$100.60 = 168.00b_1 + 1092.00b_2$   (Eqn. 3)
$3.92857 = b_0 + 3.0b_1 + 13.0b_2$   (Eqn. 1)

We solve equations 2 and 3 alone, by multiplying one of them so that the coefficients of $b_1$ or $b_2$ are of equal value. We then add or subtract the two equations to eliminate one of the variables. We have a choice at this point. Note that 1092 divided by 168 is 6.5. So we could multiply equation 2 by 6.5 to get

$123.5 = 182b_1 + 1092b_2$   (Eqn. 2)
$100.6 = 168b_1 + 1092b_2$   (Eqn. 3)

If we subtract one of these equations from the other, we will get an equation in $b_1$ alone, which we could solve for $b_1$. The alternative is to note that 168 divided by 28 is 6, so that we could multiply equation 2 by 6 to get

$114.0 = 168b_1 + 1008b_2$   (Eqn. 2)
$100.6 = 168b_1 + 1092b_2$   (Eqn. 3)


This is the pair that I chose, if only because 6 looked easier to use than 6.5. If we subtract equation 3 from equation 2, we get

$114.0 = 168b_1 + 1008b_2$   (Eqn. 2)
$-(100.6 = 168b_1 + 1092b_2)$   (Eqn. 3)
$13.4 = -84b_2$

But if $13.4 = -84b_2$, then $b_2 = \frac{13.4}{-84} = -0.15952$.

Now, solve either Equation 2 or 3 for $b_1$. If we pick Equation 2 in its original form, we can write it as $28b_1 = 19 - 168b_2$. If we substitute our value of $b_2$, it becomes $28b_1 = 19 - 168(-0.15952) = 45.7994$, so $b_1 = \frac{45.7994}{28} = 1.63569$.

Finally rearrange Equation 1 to read $b_0 = \bar{Y} - \bar{X}_1b_1 - \bar{X}_2b_2 = 3.92857 - 3(1.63569) - 13(-0.15952) = 1.09526$. Now that we have values of all the coefficients, our regression equation, $\hat{Y} = b_0 + b_1X_1 + b_2X_2$, becomes $\hat{Y} = 1.0952 + 1.63569X_1 - 0.15952X_2$.
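The elimination above can be cross-checked with Cramer's rule, a different but equivalent way to solve the two-equation system formed by Eqn. 2 and Eqn. 3. This minimal Python sketch uses exact fractions so that no rounding creeps in; it reproduces Minitab's 1.09524, 1.63571 and -0.15952.

```python
from fractions import Fraction as Fr

# Spare parts for Problem J1, entered exactly
s_x1y, s_x2y = Fr(19), Fr("100.6")
ss_x1, ss_x2, s_x1x2 = Fr(28), Fr(1092), Fr(168)
ybar, x1bar, x2bar = Fr("27.5") / 7, Fr(3), Fr(13)

# Eqn. 2:  s_x1y = ss_x1 * b1 + s_x1x2 * b2
# Eqn. 3:  s_x2y = s_x1x2 * b1 + ss_x2 * b2
det = ss_x1 * ss_x2 - s_x1x2 ** 2
b1 = (s_x1y * ss_x2 - s_x1x2 * s_x2y) / det
b2 = (ss_x1 * s_x2y - s_x1x2 * s_x1y) / det
# Eqn. 1:  ybar = b0 + x1bar * b1 + x2bar * b2
b0 = ybar - x1bar * b1 - x2bar * b2

print(f"Y-hat = {float(b0):.5f} {float(b1):+.5f} X1 {float(b2):+.5f} X2")
# Y-hat = 1.09524 +1.63571 X1 -0.15952 X2
```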

b) To get $R^2$, find in the outline
$$R^2 = \frac{b_1\left(\sum X_1Y - n\bar{X}_1\bar{Y}\right) + b_2\left(\sum X_2Y - n\bar{X}_2\bar{Y}\right)}{\sum Y^2 - n\bar{Y}^2} = \frac{b_1S_{X_1Y} + b_2S_{X_2Y}}{SSY}$$
or $\frac{SSR}{SST}$, where $SSR = b_1S_{X_1Y} + b_2S_{X_2Y} = 1.63569(19.00) + (-0.15952)(100.60) = 15.0306$. Recall that $SST = SSY = 15.0744$, so we get $R^2 = \frac{SSR}{SST} = \frac{15.0306}{15.0744} = .9971$.

c) To get $s_e$, recall that $s_e^2 = \frac{SSE}{n-k-1}$, where $k = 2$ is the number of independent variables. Note that $SSE = SST - SSR = 15.0744 - 15.0306 = 0.0438$. So $s_e^2 = \frac{SSE}{n-k-1} = \frac{0.0438}{7-2-1} = 0.01095$ and $s_e = \sqrt{0.01095} = 0.1046$.

d) Remember that the total degrees of freedom are $n - 1 = 7 - 1 = 6$, and that the regression degrees of freedom are $k = 2$, the number of independent variables. This leaves $df = n - k - 1 = 7 - 2 - 1 = 4$ for the error (within) term. Our table thus reads

SOURCE        SS           DF   MS        F        F.05
Regression    SSR 15.0306   2   7.5153    686.33   F(2,4) = 6.94
Error         SSE  0.0438   4   0.01095
Total         SST 15.0744   6

Of course, since the F we computed is larger than the table F, we reject the null hypothesis that there is no relation between the dependent variable and the independent variables and conclude that the Xs have some explanatory power.
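The arithmetic in parts b) through d) can be sketched in a few lines of Python. This minimal version plugs in the hand-computed SSR and SST, so it reproduces F = 686.33. Minitab's 686.17 differs only because SSE, a small difference of two large numbers, is sensitive to rounding. The critical value 6.94 is $F^{2,4}_{.05}$ from a standard F table.

```python
import math

# Sums of squares from the hand calculation for Problem J1
ssr, sst = 15.0306, 15.0744
n, k = 7, 2

sse = sst - ssr                       # 0.0438
df_reg, df_err, df_tot = k, n - k - 1, n - 1
msr = ssr / df_reg
mse = sse / df_err                    # this is s_e squared
f_stat = msr / mse
r2 = ssr / sst
s_e = math.sqrt(mse)
f_crit = 6.94                         # F_.05 with (2, 4) df, from an F table

print(f"Regression  SSR = {ssr:.4f}  df = {df_reg}  MS = {msr:.4f}  F = {f_stat:.2f}")
print(f"Error       SSE = {sse:.4f}  df = {df_err}  MS = {mse:.5f}")
print(f"Total       SST = {sst:.4f}  df = {df_tot}")
print(f"R^2 = {r2:.4f}, s_e = {s_e:.4f}, reject H0: {f_stat > f_crit}")
```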

e) According to the outline and the text, to find an approximate confidence interval use $\mu_{Y_0} = \hat{Y}_0 \pm t\frac{s_e}{\sqrt{n}}$ and to find an approximate prediction interval use $Y_0 = \hat{Y}_0 \pm t s_e$. If we pick the particular values $X_{10} = 5$ and $X_{20} = 25$, and use our regression equation $\hat{Y} = 1.0952 + 1.63569X_1 - 0.15952X_2$, we get $\hat{Y}_0 = 1.0952 + 1.63569(5) - 0.15952(25) = 5.28565$. Since the degrees of freedom are $df = n - k - 1 = 7 - 2 - 1 = 4$, assume $\alpha = .05$ and use $t^{4}_{.025} = 2.776$.


$n = 7$ and we found that $s_e = 0.1046$, so $\frac{s_e}{\sqrt{n}} = \frac{0.1046}{\sqrt{7}} = 0.03954$. For the approximate confidence interval we find $\mu_{Y_0} = \hat{Y}_0 \pm t\frac{s_e}{\sqrt{n}} = 5.29 \pm 2.776(0.03954) = 5.29 \pm 0.11$. The prediction interval is $Y_0 = \hat{Y}_0 \pm t s_e = 5.29 \pm 2.776(0.1046) = 5.29 \pm 0.29$. Note that these are quite rough and that the intervals should eventually get much larger if we pick values of the independent variables that are far away from those in the data.
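Here is the same rough-interval arithmetic as a minimal Python sketch. It uses the rounded coefficients, $s_e = 0.1046$ and $t^{4}_{.025} = 2.776$ from above. Like the hand calculation, it does not account for the distance of (5, 25) from the center of the data.

```python
import math

b0, b1, b2 = 1.0952, 1.63569, -0.15952
x10, x20 = 5, 25                 # the chosen values of X1 and X2
s_e, n = 0.1046, 7
t_crit = 2.776                   # t_.025 with n - k - 1 = 4 df

y0_hat = b0 + b1 * x10 + b2 * x20
ci_half = t_crit * s_e / math.sqrt(n)   # rough confidence interval half-width
pi_half = t_crit * s_e                  # rough prediction interval half-width

print(f"CI: {y0_hat:.3f} +/- {ci_half:.3f}")
print(f"PI: {y0_hat:.3f} +/- {pi_half:.3f}")
```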

f) The problem was run on Minitab, with the following results.

MTB > Retrieve 'C:\MINITAB\MBS11-5.MTW'.
Retrieving worksheet from file: C:\MINITAB\MBS11-5.MTW
Worksheet was saved on 4/17/1999
MTB > print c1 c2 c3

Data Display

Row    y   x   x*x
  1  1.0   0     0
  2  2.7   1     1
  3  3.8   2     4
  4  4.5   3     9
  5  5.0   4    16
  6  5.3   5    25
  7  5.2   6    36

MTB > Regress c1 on 2 c2 c3

Regression Analysis

The regression equation is
y = 1.10 + 1.64 x - 0.160 x*x

Predictor       Coef     Stdev   t-ratio       p
Constant     1.09524   0.09135     11.99   0.000
x            1.63571   0.07131     22.94   0.000
x*x         -0.15952   0.01142    -13.97   0.000

s = 0.1047      R-sq = 99.7%      R-sq(adj) = 99.6%

Analysis of Variance

SOURCE       DF        SS        MS        F       p
Regression    2   15.0305    7.5152   686.17   0.000
Error         4    0.0438    0.0110
Total         6   15.0743

SOURCE   DF    SEQ SS
x         1   12.8929
x*x       1    2.1376


You should now be able to interpret almost all of this. Most of it has been covered in this problem and the previous one. R-sq(adj) will be interpreted later, as will the sequential sums of squares at the end. Of course, if you do understand the material above and can reproduce it fairly rapidly when you are given most of the numbers in the spare parts box, your chances of acing the exam are fairly high.

Exercise 14.9 [14.13 in 9th ed]: This is your basic ANOVA problem. We start out with

Analysis of Variance

SOURCE       DF    SS    MS    F    p
Regression    2    60
Error        18   120
Total        20   180

You are asked for a) the mean squares, b) F, c) the meaning of F, d) R-squared and e) the adjusted R-squared.

Solution: $20 = n - 1$ and the number of independent variables is $k = 2$. We divide the sums of squares by the degrees of freedom.

(a) $MSR = SSR/k = 60/2 = 30$
$MSE = SSE/(n - k - 1) = 120/18 = 6.67$

The table is now

SOURCE       DF    SS     MS     F     p
Regression    2    60     30    4.5
Error        18   120   6.67
Total        20   180

(b) $F = MSR/MSE = 30/6.67 = 4.5$, which is also shown above.

(c) We compare the F we computed with $F^{2,18}_{.05} = 3.55$. Our null hypothesis is that there is no linear relationship between the independent variables and the dependent variable. Because the F we computed is larger than the table F, we reject $H_0$ and say that there is evidence of a significant linear relationship.

(d) R-squared is the regression sum of squares divided by the total sum of squares.

(e) $R_k^2 = \frac{(n-1)R^2 - k}{n-k-1}$ is the formula that I gave you. We found above that $n - 1 = 20$, $n - k - 1 = 18$ and $R^2 = \frac{60}{180}$. The rest is left to the student. While we are at it, the text says that $R_k^2 = 1 - (1 - R^2)\frac{n-1}{n-k-1}$. Hand in the answer to this section with the adjusted R-squared computed both ways for 2 points extra credit.
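Parts (a) through (c) reduce to a few divisions, sketched here in Python. The critical value 3.55 is $F^{2,18}_{.05}$ from the table; the adjusted R-squared is left for you, as above.

```python
ssr, sse = 60.0, 120.0
n_minus_1, k = 20, 2
df_err = n_minus_1 - k           # n - k - 1 = 18

msr = ssr / k                    # mean square for regression
mse = sse / df_err               # mean square for error
f_stat = msr / mse
f_crit = 3.55                    # F_.05 with (2, 18) df, from an F table

print(f"MSR = {msr:.2f}, MSE = {mse:.2f}, F = {f_stat:.2f}")
print("reject H0" if f_stat > f_crit else "do not reject H0")
```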

Exercise 14.14 [14.16 in 9th]: We got the following in 14.4 (Warecost).

Analysis of Variance

Source          DF      SS      MS      F      P
Regression       2  3368.1  1684.0  74.13  0.000
Residual Error  21   477.0    22.7
Total           23  3845.1

Source  DF  Seq SS
Sales    1  2726.8
Orders   1   641.3

Note that these two add to the Regression SS in the ANOVA.


The text asks you to a) tell whether there is a significant relationship between the independent variables and Y, b) interpret the p-value, c) compute r-squared and explain its meaning and d) compute the adjusted r-squared.

(a) $MSR = SSR/k = 3368.087/2 = 1684$
$MSE = SSE/(n - k - 1) = 477.043/21 = 22.7$
$F = MSR/MSE = 74.13$ (computed from the unrounded mean squares). $F^{2,21}_{.05} = 3.47$. Since the computed F exceeds the table F, reject $H_0$ and say that there is evidence of a significant linear relationship.

We can also do the equivalent of a t test on orders by splitting the regression SS.

Source          DF      SS      MS      F      F.05
Sales            1  2726.8  2726.8
Orders           1   641.3   641.3  28.25   F(1,21) = 4.32
Residual Error  21   477.0    22.7
Total           23  3845.1

Here $F = \frac{641.3}{22.7} = 28.25$. The null hypothesis here is that the second independent variable is useless. We reject it.

(b) For the original F test, $F^{2,21}_{.10} = 2.57$, $F^{2,21}_{.05} = 3.47$, $F^{2,21}_{.025} = 4.42$ and $F^{2,21}_{.01} = 5.78$. The p-value, or probability of obtaining an F test statistic at or above 74.13, must be considerably below .01. This means that we reject the null hypothesis in a). For c) and d) see parts (g) and (h) of Exercise 14.4.
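The split of the regression SS can be sketched in Python as well. This minimal version computes the partial F for Orders from its sequential SS and checks a standard fact: for the variable entered last, this F equals the square of its t ratio (5.31 in the Minitab output), apart from rounding. The critical value 4.32 is $F^{1,21}_{.05}$, which is also $(t^{21}_{.025})^2 = 2.080^2$.

```python
seq_ss_orders = 641.3   # sequential SS for Orders, entered after Sales
mse = 22.7              # MSE with 21 df
t_orders = 5.31         # Minitab's t ratio for Orders
f_crit = 4.32           # F_.05 with (1, 21) df

f_orders = (seq_ss_orders / 1) / mse     # 1 df for a single added variable
print(f"partial F = {f_orders:.2f}, t^2 = {t_orders ** 2:.2f}")
print("reject H0: Orders adds explanatory power" if f_orders > f_crit
      else "do not reject H0")
```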

Exercise 14.23 [14.20 in 9th] (14.24 in 8th edition): The text gives a regression with the standard errors for the coefficients and asks a) which coefficient has the largest slope in terms of t, b) that you compute a confidence interval for $\beta_1$ and c) that you do a significance test on the coefficients.

Solution: Write the regression as
$$\hat{Y} = \underset{(?)}{b_0} + \underset{(2)}{5}X_1 + \underset{(8)}{10}X_2$$
with the standard deviations below the coefficients, so that $t_1 = \frac{5}{2} = 2.5$ and $t_2 = \frac{10}{8} = 1.25$.

(a) Variable X1 has a larger slope in terms of the t statistic (2.5) than variable X2, which has a smaller slope in terms of the t statistic (1.25).

(b) 95% confidence interval on $\beta_1$: $\beta_1 = b_1 \pm t^{22}_{.025}s_{b_1} = 5 \pm 2.074(2) = 5 \pm 4.148$, or $0.852 \le \beta_1 \le 9.148$.

(c) Since $t^{22}_{.025} = 2.074$: For X1, $t = b_1/s_{b_1} = 5/2 = 2.50 > t_{.025} = 2.074$ with 22 degrees of freedom for $\alpha = 0.05$. Reject $H_0$. There is evidence that the variable X1 contributes to a model already containing X2.

For X2, $t = b_2/s_{b_2} = 10/8 = 1.25 < t_{.025} = 2.074$ with 22 degrees of freedom for $\alpha = 0.05$. Do not reject $H_0$. There is not sufficient evidence that the variable X2 contributes to a model already containing X1. Only variable X1 should be included in the model.
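The interval and both t ratios follow from each estimate and its standard error. Here is a minimal Python sketch with $t^{22}_{.025} = 2.074$ taken from a t table; `coef_inference` is a hypothetical helper name.

```python
def coef_inference(b, s_b, t_crit):
    """Return the (lower, upper) confidence limits for beta and the t ratio for H0: beta = 0."""
    half = t_crit * s_b
    return (b - half, b + half), b / s_b


t_crit = 2.074                      # t_.025 with 22 df
ci_1, t_1 = coef_inference(5, 2, t_crit)
_, t_2 = coef_inference(10, 8, t_crit)
print(f"beta_1 in ({ci_1[0]:.3f}, {ci_1[1]:.3f}); t1 = {t_1:.2f}, t2 = {t_2:.2f}")
# beta_1 in (0.852, 9.148); t1 = 2.50, t2 = 1.25
```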

Exercise 14.26 [14.23 in 9th] (14.27 in 8th edition): In the Warecost problem a) compute a confidence interval for $\beta_1$ and b) do a significance test on the coefficients.

Solution: Remember

The regression equation is
DistCost = - 2.73 + 0.0471 Sales + 0.0119 Orders

Predictor       Coef   SE Coef      T      P   VIF
Constant      -2.728     6.158  -0.44  0.662
Sales        0.04711   0.02033   2.32  0.031   2.8
Orders      0.011947  0.002249   5.31  0.000   2.8

S = 4.766   R-Sq = 87.6%   R-Sq(adj) = 86.4%


Analysis of Variance

Source          DF      SS      MS      F      P
Regression       2  3368.1  1684.0  74.13  0.000
Residual Error  21   477.0    22.7
Total           23  3845.1

The degrees of freedom for the residual error are, from the ANOVA table, $n - k - 1 = 24 - 2 - 1 = 21$. So for a 95% confidence level, use $t^{21}_{.025} = 2.080$.

(a) 95% confidence interval on $\beta_1$: $\beta_1 = b_1 \pm t^{21}_{.025}s_{b_1} = .0471 \pm 2.080(0.0203) = .0471 \pm 0.0422$, or $0.0049 \le \beta_1 \le 0.0893$.

(b) For X1: $t = b_1/s_{b_1} = 0.0471/0.0203 = 2.320 > t_{.025} = 2.080$ with 21 degrees of freedom for $\alpha = 0.05$. Reject $H_0$. There is evidence that the variable X1 contributes to a model already containing X2.

For X2: $t = b_2/s_{b_2} = 0.011947/0.002249 = 5.312 > t_{.025} = 2.080$ with 21 degrees of freedom for $\alpha = 0.05$. Reject $H_0$. There is evidence that the variable X2 contributes to a model already containing X1.

Both variables X1 and X2 should be included in the model.
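Both parts can be repeated for each Warecost slope at once. This minimal Python sketch uses the rounded values from the solution above and $t^{21}_{.025} = 2.080$ from a t table.

```python
t_crit = 2.080                          # t_.025 with 21 df
estimates = {"Sales": (0.0471, 0.0203), "Orders": (0.011947, 0.002249)}

results = {}
for name, (b, s_b) in estimates.items():
    half = t_crit * s_b                 # half-width of the 95% interval
    results[name] = (b - half, b + half, b / s_b)

for name, (lo, hi, t) in results.items():
    verdict = "reject H0" if abs(t) > t_crit else "do not reject H0"
    print(f"{name}: ({lo:.4f}, {hi:.4f}), t = {t:.3f}, {verdict}")
```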

Exercise 11.16 in James T. McClave, P. George Benson and Terry Sincich, Statistics for Business and Economics, 8th ed., Prentice Hall, 2001, last year’s text:

We fit the model $y = \beta_0 + \beta_1x_1 + \beta_2x_2 + \beta_3x_3 + \beta_4x_4 + \beta_5x_5 + \varepsilon$ to $n = 30$ data points and got $SSE = .33$ and $R^2 = .92$. We now want to ask whether this result is useful.

a) $SSE = 0.33$. SSE is not going to tell us anything unless we know the value of SST. But $R^2$ is high and looks good, although the large value of $k$ (the number of independent variables) should worry us. Remember that you can always raise $R^2$ by adding independent variables.

b) There are two ways to go now. (i) Create or fake an ANOVA. If we are really careful we can observe that $.92 = R^2 = \frac{SSR}{SST} = \frac{SST - SSE}{SST} = 1 - \frac{SSE}{SST}$. So $\frac{SSE}{SST} = .08$. If we solve this for the total sum of squares, $SST = \frac{SSE}{.08} = \frac{.33}{.08} = 4.125$ and $SSR = SST - SSE = 4.125 - 0.33 = 3.795$. Remember that total degrees of freedom are $n - 1 = 30 - 1 = 29$, and that the regression degrees of freedom are $k = 5$, the number of independent variables. This leaves $df = n - k - 1 = 30 - 5 - 1 = 24$ for the error (within) term. Our table thus reads

SOURCE        SS          DF   MS        F      F.05
Regression    SSR 3.795    5   0.759     55.2   F(5,24) = 2.62
Error         SSE 0.330   24   0.01375
Total         SST 4.125   29

It would be a lot faster to fake an ANOVA, since only the proportions are important. This means that we could use $100R^2 = 92$ for SSR and 100 for SST. The fake ANOVA table would read

SOURCE        SS         DF   MS       F      F.05
Regression    SSR  92     5   18.4     55.2   F(5,24) = 2.62
Error         SSE   8    24   0.3333
Total         SST 100    29


Of course we could learn another formula, which is what the text does.
$$F^{k,n-k-1} = \frac{SSR/k}{SSE/(n-k-1)} = \frac{R^2/k}{(1-R^2)/(n-k-1)} = \frac{R^2}{1-R^2}\cdot\frac{n-k-1}{k} = \frac{.92}{1-.92}\cdot\frac{30-5-1}{5} = 55.2.$$
In any case, this value of F is compared to $F^{k,n-k-1}_{.05} = F^{5,24}_{.05} = 2.62$. The null hypothesis is no relationship between the Xs and Y, or no significant regression coefficients except, maybe, $\beta_0$; that is, $\beta_1 = \beta_2 = \beta_3 = \beta_4 = \beta_5 = 0$. We reject the null hypothesis since the F we computed is larger than the table F and conclude that somewhere there is a significant $\beta$.
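Both routes to F can be sketched in Python. This minimal version shows that the "real" ANOVA and the text's $R^2$ formula give the same 55.2, which is why the fake ANOVA with SST = 100 works: F depends only on the proportions. The function names are hypothetical.

```python
def f_from_r2(r2, n, k):
    """Overall F statistic written in terms of R^2."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


def f_from_ss(sse, r2, n, k):
    """Same F built from sums of squares, recovering SST = SSE / (1 - R^2)."""
    sst = sse / (1 - r2)
    ssr = sst - sse
    return (ssr / k) / (sse / (n - k - 1))


n, k = 30, 5
print(round(f_from_r2(0.92, n, k), 1), round(f_from_ss(0.33, 0.92, n, k), 1))
```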

Exercise 11.19 in James T. McClave, P. George Benson and Terry Sincich, Statistics for Business and Economics, 8th ed., Prentice Hall, 2001, last year’s text:

We fit the model $y = \beta_0 + \beta_1x_1 + \beta_2x_2 + \varepsilon$ to $n = 20$ data points and got $SSE = \sum(y - \hat{y})^2 = 12.35$ and $SST = \sum(y - \bar{y})^2 = 24.44$. a) In the first part we are asked to compute the ANOVA table. We know that $SSR = SST - SSE = 24.44 - 12.35 = 12.09$. Remember that total degrees of freedom are $n - 1 = 20 - 1 = 19$, and that the regression degrees of freedom are $k = 2$, the number of independent variables. This leaves $df = n - k - 1 = 20 - 2 - 1 = 17$ for the error (within) term. Our table thus reads

252solnJ1 11/21/2003

SOURCE        SS          DF   MS        F      F.05
Regression    SSR 12.09    2   6.045     8.32   F(2,17) = 3.59
Error         SSE 12.35   17   0.72647
Total         SST 24.44   19

$R^2 = \frac{SSR}{SST} = \frac{12.09}{24.44} = .4947$. What the text calls $R^2$ adjusted, $R_a^2$, appears as $R_k^2 = \frac{(n-1)R^2 - k}{n-k-1}$ in my outline. So $R_k^2 = \frac{19(.4947) - 2}{17} = .4352$.

b) Our null hypothesis is $\beta_1 = \beta_2 = 0$ and one version of the test is above. We reject the null hypothesis after seeing that the F we computed is above the table F and thus conclude that the model is useful. An alternate formula for F is
$$F^{k,n-k-1} = \frac{R^2/k}{(1-R^2)/(n-k-1)} = \frac{.4947}{1-.4947}\cdot\frac{20-2-1}{2} = 8.322.$$
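The ANOVA, both F formulas and the adjusted $R^2$ can be checked in a few lines. This minimal Python sketch carries full precision, so it gives F = 8.321 rather than the 8.322 obtained from the rounded $R^2 = .4947$.

```python
n, k = 20, 2
sse, sst = 12.35, 24.44

ssr = sst - sse
df_reg, df_err = k, n - k - 1
f_ss = (ssr / df_reg) / (sse / df_err)          # F from the ANOVA table
r2 = ssr / sst
f_r2 = (r2 / k) / ((1 - r2) / df_err)            # the alternate formula
adj_r2 = ((n - 1) * r2 - k) / df_err             # R_k^2 from the outline

print(f"SSR = {ssr:.2f}, F = {f_ss:.3f} = {f_r2:.3f}, R^2 = {r2:.4f}, adj = {adj_r2:.4f}")
```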

Exercise 11.23 in James T. McClave, P. George Benson and Terry Sincich, Statistics for Business and Economics, 8th ed., Prentice Hall, 2001, last year’s text: We fit a model with seven independent (mostly dummy) variables to 268 observations. The dependent variable is the logarithm of an auditing fee. The results were summarized as follows:

Independent variable   Expected sign of β_i      b_i      t_i   p-value
Constant                                        -4.30    -3.45     .001
Change                 +                        -0.002   -0.049    .961
Size                   +                         0.336    9.94     .000
Complex                +                         0.384    7.63     .000
Risk                   +                         0.067    1.76     .079
Industry                                        -0.143   -4.05     .000
Big 8                  +                         0.081    2.18     .030
NAS                    ?                         0.134    4.54     .000

$R^2 = .712$; F for ANOVA is 111.1.


a) The model is $Y = \beta_0 + \beta_1X_1 + \beta_2X_2 + \beta_3X_3 + \beta_4X_4 + \beta_5X_5 + \beta_6X_6 + \beta_7X_7 + \varepsilon$. If we substitute the coefficients, we get
$$\hat{Y} = -4.30 - 0.002X_1 + 0.336X_2 + 0.384X_3 + 0.067X_4 - 0.143X_5 + 0.081X_6 + 0.134X_7$$

b) Assess the usefulness of the model. Assume that the F of 111.1 quoted is the ratio of MSR and MSE as computed in previous problems, and should have $k = 7$ and $n - k - 1 = 268 - 7 - 1 = 260$ degrees of freedom. From the table, $F^{7,200}_{.05} = 2.06$ and $F^{7,400}_{.05} = 2.03$, so we can guess that $F^{7,260}_{.05}$ is about 2.05. We reject the null hypothesis that the coefficients of the independent variables in general are insignificant. It is probably a good idea to note that the value of $R^2$ is not thrilling.

c) We are asked to interpret $b_3$. The variable Complex is the number of subsidiaries of the firm audited. The coefficient of 0.384 indicates that if the number of subsidiaries rises by one, the expected value of the logarithm of the audit fee goes up by 0.384.

d) Interpret the results of the t-test on $b_4$. It is customary to use two-sided tests, but the authors of the study used one-sided tests in some cases because negative values of the coefficients would be meaningless. Our null hypothesis is thus $H_0: \beta_4 = 0$ and the alternate is $H_1: \beta_4 > 0$. The t-ratio is computed for you and is 1.76 with a computed p-value of .079. Since this is above the significance level, we do not reject the null hypothesis and thus conclude that we do not have evidence that the coefficient is positive.


e) The purpose of this analysis was to see if new auditors charged less than incumbent auditors. Assuming that their thesis would be validated with a negative coefficient, they seem to be working with a t of -0.049. The authors claim that their hypotheses are $H_0: \beta_1 = 0$ and $H_1: \beta_1 < 0$. If the value of t is -0.049, given the alternative hypothesis, the p-value is defined as $P(t \le -0.049)$, and there is no way that could be .961. My guess is that the actual value is about $P(z \le -0.049)$, which is about half of the p-value they give, but enough to convince us that the null hypothesis cannot be rejected, so there is no evidence that new auditors charge less.
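With 260 degrees of freedom the t distribution is very close to the standard normal, so the guess in e) can be checked with Python's standard library alone. This minimal sketch uses the normal approximation (an assumption, in place of an exact t table) for the one-sided and two-sided p-values of t = -0.049.

```python
import math


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


t_change = -0.049
p_one_sided = normal_cdf(t_change)                   # P(Z <= -0.049), for H1: beta_1 < 0
p_two_sided = 2 * min(p_one_sided, 1 - p_one_sided)

print(f"one-sided p = {p_one_sided:.3f}, two-sided p = {p_two_sided:.3f}")
```

The two-sided value matches the .961 in the table, which suggests that the printed p-values are two-sided.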