Associative Thinking Agents - USF Computer Science Department

Associative Sources and Aggregator Agents

David Wolber and Christopher H. Brooks

Computer Science Department

University of San Francisco

2130 Fulton St, San Francisco, CA 94117-1080

{wolber, cbrooks}@usfca.edu

Abstract

The goal of our research is to develop associative agents that

assist users in locating and filtering useful information, thereby

serving as virtual librarians. To this end, we have defined a family

of web services called associative sources, and a common API for

them to follow. This API, coupled with a public registry system,

makes it possible for new associative sources to register as such,

and for associative agents (clients) to access this dynamic list of

sources. The dynamic nature of this system allows for the

development of clients that collect and repurpose information

from multiple associative sources, including those created after

development of the agent itself.

Categories and Subject Descriptors

H.4.3. [Information Systems]: Communication Applications

General Terms

Algorithms, Design, Human Factors, Standardization

Keywords

Web services, Polymorphism, Aggregation, Reconnaissance,

Agents, Context, Associativity

1. Introduction

In many domains, web service providers are agreeing on standard

programmatic interfaces so that consumer applications need not

re-implement client code to access each particular service. For

instance, Microsoft has published a WSDL interface to which

securities information providers can conform [12].

Our research applies this standardization in a cross-domain

fashion by considering web services that provide similar

functionality but are not generally within the same topic domain.

In particular, we consider a class of web service which we call

associative information sources. Such services associate

documents with keywords, documents with other documents,

persons with documents, and in general information resources

with other resources. The Google and Amazon web services are

prime examples of services in this class, as are domain-specific

information sources like Lexis-Nexis for law, the Modern

Languages Association (MLA) page for literature, and CiteSeer

and the ACM Digital library for computer science.

Currently, many services fit within this class, but they all define

different programmatic interfaces (APIs). For instance, Google

and Amazon both provide a search method that accepts keywords

and returns a list of links, but a different method signature is used

by each. Because of this non-uniformity, client applications,

which we call associative agents, must talk to each associative

source using a different protocol. This prohibits a developer who

has written a client for the Google service from reusing the code

used to access the Amazon service.

More importantly, the lack of a uniform API prohibits the use of

polymorphic lists of associative sources. This is important for

associative agents that aggregate information from various

sources. Without polymorphism, the choice of which sources to

make available in a client application must be set at development

time, and the end-user of the client application is restricted to

those chosen. An end-user cannot add a newly created or

discovered source without the help of a programmer.

One potential solution to this problem involves providing an

intelligent agent with an ontology to identify functions, such as

keyword search, that are essentially the same in different services

but use different method signatures. Such automated service

discovery is an increasingly popular topic in the semantic web

community [4]. However, this is a vastly challenging problem

requiring a combination of agent intelligence and a sophisticated

description of the semantics of sources.

Our solution is based instead on standardization. We have defined

a common API for the associative information source class of web

services, and a public registry system for such sources. The API is

specified in a publicly available WSDL file that defines a number

of associative queries (see Figure 2).

With this open system, any organization or individual can expose

a data collection as an associative information source by creating

a web service that conforms to the API. If there is already a

service for the data collection, the owners or a third-party can

write a wrapper service that conforms to the API but implements

methods by calling the existing service. After the source is

implemented and deployed, it can be registered using a web page

interface found at http://cs.usfca.edu/assocSources/registry.htm.

Aggregator agents use the registry to make the list of registered

sources available to users. A registry web service is provided that

includes a getSources() method. The objects returned from this

method contain URLs referencing the WSDL for the source and

the actual endpoint, the particular associative methods that the

source provides, and metadata about the source. The aggregator

can list the available sources for the user to choose from, or

intelligently choose the source(s) for the working task. In either

instance, the list of sources is dynamic, allowing users to benefit

from newly developed sources as soon as they are available

without the aggregator being re-programmed.

To bootstrap the system, we have developed a number of

associative source web services, including ones that access data

from Google, Amazon, Citeseer, the ACM Digital library, and the

Meerkat RSS feed site. We have also developed sample C# and

Java code that can be downloaded from

cs.usfca.edu/assocSource/registry.htm and used to build new

associative sources.

Our goal in defining this associative sources system is to ease the

task of developing associative agents that aggregate information,

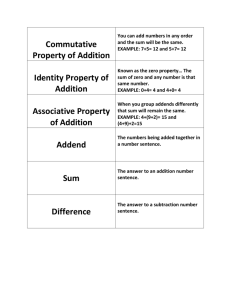

Figure 1: A snapshot of the WebTop user interface.

re-purpose it, and display it to the user. As a proof of concept, we

have modified an existing associative agent, WebTop [13], so that

it uses the common API and registry to access sources. Whereas

the old agent suggested associated links only from Google and the

user’s personal space, the new application allows the user to

dynamically choose the sources from which suggested links

appear. Our plan is to make the source code of this agent publicly

available for the benefit of other associative agent developers.

Though the agent’s primary purpose is to consume information

from other sources, it also allows the user to act, if he or she

chooses, as a producer of information, that is, as an associative information

source. In this way, peer-to-peer knowledge sharing is facilitated,

and agents can get associations from services like Google’s and

from the user’s colleagues.

This paper is organized as follows: We first describe the newest

WebTop, our associative agent, both to share what we’ve learned

from building and using such agents, and to motivate the need for

a common associative source API. Next, we describe the

architecture of the overall system and the working API that has

been defined for an associative source. Following this, we show

how associative clients and sources can be implemented within

our framework, and discuss in more detail the idea that clients can

also serve as sources. Finally, we discuss our work in perspective

to other work, describe the current status of the system, and

suggest ideas for future work.

2. Associative Agents

Associative agents serve as virtual library assistants, peeking over

the user’s shoulder as the user writes or browses, analyzing what

associative information would be helpful, and then scurrying off

to virtual libraries (information sources) to gather data. Also

known as reconnaissance agents [7], personal information

assistants [1], and attentive systems [8], the goal is to augment the

user’s associative thinking capabilities and thereby improve the

creation and discovery process.

Figure 1 shows a screenshot of WebTop. The user can browse

web documents or edit MS-Word documents in the right panel. As

the user works, associative links are displayed in the left panel,

which we call the context view. The ‘I’, ‘O’, and ‘C’ icons specify

the type of association. ‘I’ stands for inward link, i.e. the

document points to the working one (the CS research page), ‘O’

stands for outward link, and ‘C’ means the documents are similar.

Color coding is used to distinguish links found from Google from

those found in the user’s own personal space.

Clicking on any expander (+) triggers another information source

access by the system. In Figure 1, the user has expanded the

“projects” page to view its associations.

import java.util.ArrayList;

// Restriction and PersonList are not defined in this excerpt.
public interface AssocInfoSource
{
    // keyword related
    ArrayList keywordSearch(String keyword, int n, Restriction res);

    // url related
    ArrayList getInwardLinks(String url, int n, Restriction res);
    int getInwardLinkCount(String url);
    ArrayList getSimilarWebPages(String url, int n, Restriction res); // similarity defined by source

    // author related
    ArrayList getAuthorDocs(String authorLast, String authorFirst, int n, Restriction res);
    ArrayList getCitationsOfAuthor(String authorLast, String authorFirst, int n, Restriction res);
    int getCitationCount(String authorLast, String authorFirst, Restriction res);
    PersonList getSimilarAuthor(String authorLast, String authorFirst, int n, Restriction res);

    // title related
    ArrayList getCitationsByTitle(String title, int n);
    ArrayList getSimilarDocuments(String title, int n);

    // some sources allow for searches of sub-areas
    ArrayList getCategories();
}

Figure 2. A working version of the API for an associative information source (using Java syntax).

Though many types of associative agents have been built, we have

identified several features that seem to be effective in helping

users locate and manage information. They include:

Zero-input interface [7]. In the traditional desktop, creation and

information retrieval are two distinct processes. When a user is in

need of information, he or she switches from the current task,

formulates an information query (e.g., a set of keywords) and then

invokes the query in a search engine tool.

Zero-input interfaces seek to integrate creation and information

retrieval. The agent underlying the interface analyzes the user’s

working document(s) and automatically formulates and invokes

information queries. For instance, the agent might use TFIDF [10]

or a similar algorithm to identify the most characteristic

words in the document, then send those as keywords to an

information source search.
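As a rough illustration of this keyword-extraction step, the following Java sketch scores the words of a working document by TF-IDF against a small background corpus and returns the highest-scoring ones. The class and method names (KeywordExtractor, topKeywords), the tokenizer, and the smoothed IDF formula are assumptions made for the example; the text does not specify how WebTop computes its keywords.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical TF-IDF keyword extractor; not WebTop's actual implementation. */
final class KeywordExtractor {

    /** Lower-cases the text and splits it on anything that is not a letter or digit. */
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.toLowerCase().split("[^a-z0-9]+")) {
            if (!t.isEmpty()) tokens.add(t);
        }
        return tokens;
    }

    /** Returns up to k words of the document, ranked by TF-IDF against the corpus. */
    static List<String> topKeywords(String document, List<String> corpus, int k) {
        // Term frequency in the working document.
        Map<String, Integer> tf = new HashMap<>();
        for (String t : tokenize(document)) tf.merge(t, 1, Integer::sum);

        // Document frequency: number of corpus documents containing each term.
        Map<String, Integer> df = new HashMap<>();
        for (String doc : corpus) {
            Set<String> seen = new HashSet<>(tokenize(doc));
            for (String t : seen) df.merge(t, 1, Integer::sum);
        }

        // Smoothed IDF (an assumption of this sketch): log((N + 1) / (df + 1)) + 1.
        Map<String, Double> score = new HashMap<>();
        int n = corpus.size();
        for (Map.Entry<String, Integer> e : tf.entrySet()) {
            int d = df.getOrDefault(e.getKey(), 0);
            double idf = Math.log((n + 1.0) / (d + 1.0)) + 1.0;
            score.put(e.getKey(), e.getValue() * idf);
        }

        List<String> words = new ArrayList<>(score.keySet());
        // Highest score first; ties broken alphabetically so the order is deterministic.
        words.sort((a, b) -> {
            int c = Double.compare(score.get(b), score.get(a));
            return c != 0 ? c : a.compareTo(b);
        });
        return new ArrayList<>(words.subList(0, Math.min(k, words.size())));
    }

    public static void main(String[] args) {
        List<String> corpus = Arrays.asList(
            "the cat sat on the mat",
            "the dog chased the cat",
            "the agent searched the web");
        String working = "agent queries the registry; agent ranks results";
        System.out.println(topKeywords(working, corpus, 3));
    }
}
```

The returned words would then be passed as the keyword argument of a source query. A real agent would likely also remove stop words and use a much larger background corpus.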

The results of such queries are listed on the periphery of the user’s

focus. The user periodically glances at the suggested links and

interrupts the working task only when something is of interest.

Because zero-input interfaces are always formulating associative

queries, impromptu information discovery is facilitated. There is

no need for the user to stop their current task and switch contexts

and applications in order to search for related work.

Graph/tree view of retrieved information. Search engines typically

provide results in a linear fashion. The user can select a link to

view the corresponding page, but there is no way to expand the

link to view documents related to it, and there is no mechanism

for viewing a set of documents and their relationships.

A more flexible approach, taken by WebTop, is to display

retrieved links in a file-manager-like tree view. When the user

expands a node in the tree, the system retrieves information

associated with that link and displays it at the next level in the

tree. By expanding a number of nodes, the user can view a

collection of associated documents, e.g., the citation graph of a

particular research area.
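The expand-on-demand behaviour of such a tree can be sketched as follows. This is a minimal illustration, assuming a hypothetical ContextNode class whose children are fetched from a source only when the node is first expanded; the function passed to the constructor stands in for a real associative query.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

/** Hypothetical node of a context tree whose children are fetched on first expansion. */
final class ContextNode {
    private final String url;
    // Stands in for a query to an information source: URL -> associated URLs.
    private final Function<String, List<String>> fetchAssociations;
    private List<ContextNode> children; // null until the node is expanded

    ContextNode(String url, Function<String, List<String>> fetchAssociations) {
        this.url = url;
        this.fetchAssociations = fetchAssociations;
    }

    String url() { return url; }

    boolean isExpanded() { return children != null; }

    /** Queries the source only the first time; later calls reuse the cached children. */
    List<ContextNode> expand() {
        if (children == null) {
            List<ContextNode> loaded = new ArrayList<>();
            for (String associated : fetchAssociations.apply(url)) {
                loaded.add(new ContextNode(associated, fetchAssociations));
            }
            children = Collections.unmodifiableList(loaded);
        }
        return children;
    }

    public static void main(String[] args) {
        // Toy source: every page is associated with two made-up child pages.
        Function<String, List<String>> toySource = u -> List.of(u + "/a", u + "/b");
        ContextNode root = new ContextNode("example.org", toySource);
        for (ContextNode child : root.expand()) {
            System.out.println(child.url() + " -> " + child.expand().size() + " associations");
        }
    }
}
```

Caching the children keeps repeated expand and collapse actions from triggering repeated source accesses, at the cost of possibly showing stale associations.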

Mixing of association types. Search engines and file managers

typically focus on one type of association. For instance, Google’s

standard search retrieves content-related links, that is, links related

to a set of keywords. In the separate Advanced Search page, a

Google user can view inward links of a URL, which are pages

containing links to the URL. However, there are no queries or

views that integrate content-related and link-related associations.

Similarly, file managers focus on one association type (parent-child relationships of folders and files) and ignore hyperlink

associations and content-related associations.

An associative agent can integrate various association types, e.g.,

parent-child, link, and content-relation, into a single context tree

view. Associations from each type can be listed at each level of

the tree, allowing a user to view various multiple-degree

associations, e.g., the documents that point to the content-related

links of a document, or the inward links of the outward links of a

document (its siblings).

Mixing of external and local information. In the traditional

desktop, there are tools that work with web documents (search

engines) and tools that work with local documents (file managers

and editors). There is generally little integration between the two.

An associative agent can de-emphasize the distinction between

local and external documents by integrating both into a single

context tree view. For instance, if a local document contains a

hyperlink to a web document, the agent can display that

relationship. If an external document has similar content to that of

a local document, that association can be displayed. By

considering both the user’s own documents and documents from

external sources, the associative agent serves as both a

remembrance agent [9] and a reconnaissance agent [7].

Aggregation of Multiple Sources. Often, a user would like to

aggregate information from various sources. For instance, a

computer science researcher might want the agent to suggest

papers from either the ACM Digital Library or Citeseer. At

another time, he might also want to see books from Amazon that

have similar content to the papers.

With the traditional desktop, users can open multiple web

browsers, visit each site, and manually aggregate the information,

but such work is tedious and the multiple-window view of the

information is hardly ideal. Associative agents can help by

automatically aggregating the information and displaying it in

useful ways.

An aggregator combines the data from various sources, processes

(repurposes) the data to better match a user’s preferences or

needs, then displays it to the user.

Unfortunately, if the web services to be aggregated do not share a

common API, the client programmer must write source-specific

code to access each source. The developer locates and learns the

API of the various sources, then writes different client code to

access the operations of each source. The following is indicative

of the code in a typical web service aggregator:

CiteseerResults = Citeseer.getCiteSeerCitations(title);

ACMResults = ACM.getACMCitations(title);

// combine and rank documents from two lists

// display links

With such code, the sources that are available to the user are

chosen by the developer at development time. Even when the end-user

is allowed to choose from a provided list of sources, that list is fixed

in the code. Without changing the code of the

aggregator, a newly discovered or newly created source cannot be

accessed by the user.

What we have defined is a scheme analogous to interfaces in

object-oriented programming. With interface inheritance, similar

behaving classes are made to conform to the API defined by the

interface, and flexible client code can be written that processes a

list of objects of the various implementing types. When new

classes are defined that conform to the interface, the client code

can access instances of these new classes without any change.
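The object-oriented analogy can be made concrete with a small in-process sketch. The Source interface below is a pared-down stand-in for the common API, and PaperSource and BookSource are toy implementations invented for the example; nothing here performs a real web service call.

```java
import java.util.ArrayList;
import java.util.List;

/** Local, in-process analogy only; the names are illustrative, not the paper's API. */
final class PolymorphicAggregation {

    /** A pared-down stand-in for the common associative-source interface. */
    interface Source {
        List<String> keywordSearch(String keyword, int n);
    }

    /** Toy implementation that returns a canned link. */
    static final class PaperSource implements Source {
        @Override
        public List<String> keywordSearch(String keyword, int n) {
            return List.of("paper-about-" + keyword);
        }
    }

    /** A second toy implementation with the same signature. */
    static final class BookSource implements Source {
        @Override
        public List<String> keywordSearch(String keyword, int n) {
            return List.of("book-about-" + keyword);
        }
    }

    /** Client code depends only on the interface, so new Source types need no change here. */
    static List<String> aggregate(List<Source> sources, String keyword, int n) {
        List<String> results = new ArrayList<>();
        for (Source s : sources) {
            results.addAll(s.keywordSearch(keyword, n));
        }
        return results;
    }

    public static void main(String[] args) {
        List<Source> sources = new ArrayList<>();
        sources.add(new PaperSource());
        sources.add(new BookSource());
        // A source written later (here, a lambda) is handled by the same loop.
        sources.add((keyword, n) -> List.of("blog-about-" + keyword));
        System.out.println(aggregate(sources, "agents", 5));
    }
}
```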

As will be shown later, both interface inheritance and

polymorphism are possible in a distributed web service setting.

3. Architecture and API

One challenge for clients is the discovery of new associative

sources. In our current architecture, we use a centralized registry

server to allow associative clients to dynamically locate new

associative sources. The following summarizes the workflow of

the dynamic associative sources and agents system:

1. Various entities create services that follow the common

“associative sources” API. Generally, these services are registered

using the registry web page. However, a programmatic web

service interface is also provided so that an agent can register a

source (our WebTop client does this when registering a user’s

personal space as a source).

2. Client applications access the list of all available sources

through the associative sources registry web service. Then either

the user is allowed to choose the active sources, or these are

chosen by the application using user profile and session

information.

3. When an information retrieval operation is invoked, either

explicitly or implicitly, the operation is invoked on the chosen

sources and the results processed and displayed.
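The three steps above can be sketched with an in-memory stand-in for the registry. The Registry and SourceInfo classes and the supporting helper are hypothetical names chosen for the example; the actual registry is a web service whose getSources() result format is only summarized in the text.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** In-memory stand-in for the registry workflow; all names are hypothetical. */
final class RegistryWorkflow {

    /** What a client learns about a source. */
    static final class SourceInfo {
        final String name;
        final String endpointUrl;
        final Set<String> methods; // common-API methods the source implements

        SourceInfo(String name, String endpointUrl, Set<String> methods) {
            this.name = name;
            this.endpointUrl = endpointUrl;
            this.methods = methods;
        }
    }

    static final class Registry {
        private final List<SourceInfo> sources = new ArrayList<>();

        /** Step 1: a source (or an agent acting for it) registers itself. */
        void register(SourceInfo info) { sources.add(info); }

        /** Step 2: clients fetch a snapshot of the current list. */
        List<SourceInfo> getSources() { return new ArrayList<>(sources); }
    }

    /** Step 3, selection part: keep the sources that implement the wanted method. */
    static List<SourceInfo> supporting(List<SourceInfo> all, String method) {
        List<SourceInfo> chosen = new ArrayList<>();
        for (SourceInfo s : all) {
            if (s.methods.contains(method)) chosen.add(s);
        }
        return chosen;
    }

    public static void main(String[] args) {
        Registry registry = new Registry();
        registry.register(new SourceInfo("web-search", "http://example.org/search",
                Set.of("keywordSearch", "getInwardLinks")));
        registry.register(new SourceInfo("citations", "http://example.org/cites",
                Set.of("getCitationsByTitle")));
        for (SourceInfo s : supporting(registry.getSources(), "keywordSearch")) {
            System.out.println(s.name + " @ " + s.endpointUrl);
        }
    }
}
```

Because the client asks the registry each time, a source registered after the client was written still appears in the list without any change to the client.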

Figure 3 illustrates a set of information sources registering

themselves with the associative source registry. Notice that a

source might be a previously existing information provider, such

as Google, or another user who has chosen to allow others to

access the information in her personal web. Once these sources

are registered, a client may query the registry to find out the

availability of information sources.

A second challenge is providing a uniform API for the

associative information source class of services. The current API

(Figure 2) was created through our experience analyzing and

implementing wrapper services for Google, Amazon, and

CiteSeer. It contains keyword search and citation association

methods which accept both URL and title/author parameters. The

methods allow the client to specify the number of links to be

returned (n) and to set restrictions (e.g. date, country) on the

elements that should be considered. Results are returned in a

generic list of Metadata objects, where the Metadata class is

defined to contain the Dublin Core fields and a URL.
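One plausible shape for such a result object is sketched below. The class layout, the fluent set method, and the validation are assumptions for illustration; only the idea of "Dublin Core fields plus a URL" comes from the text. The fifteen element names are those of the Dublin Core Metadata Element Set.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical result record: Dublin Core elements plus a URL. */
final class Metadata {
    /** The fifteen element names of the Dublin Core Metadata Element Set. */
    static final Set<String> DUBLIN_CORE = Set.of(
        "title", "creator", "subject", "description", "publisher",
        "contributor", "date", "type", "format", "identifier",
        "source", "language", "relation", "coverage", "rights");

    private final String url;
    private final Map<String, String> fields = new LinkedHashMap<>();

    Metadata(String url) { this.url = url; }

    String url() { return url; }

    /** Sets one Dublin Core element; rejects names outside the element set. */
    Metadata set(String element, String value) {
        if (!DUBLIN_CORE.contains(element)) {
            throw new IllegalArgumentException("Not a Dublin Core element: " + element);
        }
        fields.put(element, value);
        return this;
    }

    /** Returns the element's value, or null if the source did not supply it. */
    String get(String element) { return fields.get(element); }

    public static void main(String[] args) {
        Metadata m = new Metadata("http://example.org/paper.pdf")
            .set("title", "An Example Paper")
            .set("creator", "A. Author");
        System.out.println(m.get("title") + " <" + m.url() + ">");
    }
}
```

Leaving unsupplied elements as null lets sparse sources (for example, a web search that knows only a title and a URL) share the same type as richer bibliographic sources.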

The current API is a working one and presented here for

illustrative purposes only. It will no doubt be modified greatly as

we analyze more sources and refine our rather vague definition of

“associative source”. We expect there will always be tension

between broadening it to allow for specialized operations and

reducing it for simplicity. Our goal is to work collaboratively with

interested organizations to define the details of this standard.

3. Building Sources

One way to access an information source is to write a web

scraper. Scrapers use the same web interface as a human; they

send HTTP requests to web servers and then extract data from the

HTML that the server returns. Unfortunately, scrapers are

dependent on the layout of a page and thus can stop working if a

page’s structure is modified even minimally.

A better model is one based on web services. Web services

provide a programmatic interface to an underlying information

source, instead of the web page interface that is essential for

humans but taxing for agents. Data is returned using XML, rather

than HTML, without any formatting information. Because the

programmatic interface is not affected by changes in layout, it

allows for more robust access by clients.

Developers can build associative information source web services

directly on top of a data collection by implementing the given

API. Such direct development is not always possible, however, as

a developer may not have direct access to the data source. In this

case, both primary and third-party developers can leverage

existing information sources by creating “wrapper” services.

Wrappers implement the common API by wrapping calls to the

existing services in the API methods. For instance, the first

associative source we implemented wrapped the Google web

service within the associative source API. Clients call the wrapper

service, which in turn calls the actual source

(http://api.google.com/search/beta2).
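The wrapper idea is essentially the adapter pattern. The sketch below assumes a hypothetical existing service, LegacySearchService, whose find method has a different signature from the common API; it is not the interface of Google's or any other real service, and AssocSource shows only one method of the interface in Figure 2.

```java
import java.util.ArrayList;
import java.util.List;

/** Adapter-style sketch of a wrapper source; every type here is hypothetical. */
final class WrapperSketch {

    /** Pared-down common API (a single method, without the Restriction argument). */
    interface AssocSource {
        List<String> keywordSearch(String keyword, int n);
    }

    /** Stand-in for an existing service with its own, different method signature. */
    interface LegacySearchService {
        String[] find(String query, int start, int maxResults);
    }

    /** Implements the common API by delegating to the existing service. */
    static final class LegacyWrapper implements AssocSource {
        private final LegacySearchService backend;

        LegacyWrapper(LegacySearchService backend) { this.backend = backend; }

        @Override
        public List<String> keywordSearch(String keyword, int n) {
            // Translate the uniform call into the backend's own parameters,
            // then translate its array result into the uniform list result.
            String[] raw = backend.find(keyword, 0, n);
            List<String> links = new ArrayList<>();
            for (int i = 0; i < raw.length && i < n; i++) {
                links.add(raw[i]);
            }
            return links;
        }
    }

    public static void main(String[] args) {
        // Toy backend that ignores `start` and makes up result URLs.
        LegacySearchService backend = (q, start, max) -> {
            String[] out = new String[max];
            for (int i = 0; i < max; i++) out[i] = "http://example.org/" + q + "/" + i;
            return out;
        };
        AssocSource source = new LegacyWrapper(backend);
        System.out.println(source.keywordSearch("agents", 3));
    }
}
```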

At the public registry, we provide a WSDL file specifying the API

along with C# and Java skeleton code we have generated from

that WSDL. If the developer wants to use a different language, the

WSDL can be downloaded and a tool can be used to generate

skeleton code in that language. If C# or Java is the language of

choice, the developer can simply download the skeleton code, add

code in the methods to access the targeted source, and then deploy

the service.

[Figure 3 diagram: two associative sources (Google; a user's personal Web) each (1) register with the Associative Source Registry and (2) receive confirmation; the associative client (WebTop) (3) requests sources and (4) receives the list <googleSource, personalSource1>.]

Figure 3: As new associative information sources come on line, they register their presence with the registry. When a

client wants to find new information, it queries the registry to find the location and capabilities of each information

source. Once this is complete, clients and sources communicate directly.

Once an associative source has been created and deployed, it can

be registered with the associative data source registry web site.

The person registering enters the name, description, WSDL URL

and endpoint URL of the service. The registry parses the WSDL

to record which methods of the common API are implemented by

the source. All of this information is then made available to clients

through the registry web service method “getSources()”.
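How a registry might determine which common-API methods a source implements can be sketched with the JDK's built-in XML parser. The sketch assumes a WSDL 1.1 document and simply collects the names of the operation elements under each portType; the class name WsdlCapabilities and this particular approach are assumptions, since the text does not describe the registry's parser.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.Set;
import java.util.TreeSet;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical capability scan of a WSDL 1.1 document. */
final class WsdlCapabilities {
    static final String WSDL_NS = "http://schemas.xmlsoap.org/wsdl/";

    /** Method names taken from the interface in Figure 2. */
    static final Set<String> COMMON_API = Set.of(
        "keywordSearch", "getInwardLinks", "getInwardLinkCount", "getSimilarWebPages",
        "getAuthorDocs", "getCitationsOfAuthor", "getCitationCount", "getSimilarAuthor",
        "getCitationsByTitle", "getSimilarDocuments", "getCategories");

    /** Returns the common-API operations declared in the WSDL's portType elements. */
    static Set<String> implementedMethods(String wsdlXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // required for the *NS lookups below
            Document doc = factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(wsdlXml.getBytes(StandardCharsets.UTF_8)));
            Set<String> found = new TreeSet<>();
            NodeList portTypes = doc.getElementsByTagNameNS(WSDL_NS, "portType");
            for (int i = 0; i < portTypes.getLength(); i++) {
                NodeList ops = ((Element) portTypes.item(i))
                    .getElementsByTagNameNS(WSDL_NS, "operation");
                for (int j = 0; j < ops.getLength(); j++) {
                    String name = ((Element) ops.item(j)).getAttribute("name");
                    if (COMMON_API.contains(name)) found.add(name);
                }
            }
            return found;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse WSDL", e);
        }
    }

    public static void main(String[] args) {
        String wsdl =
            "<definitions xmlns='http://schemas.xmlsoap.org/wsdl/'>"
          + "<portType name='ExamplePort'>"
          + "<operation name='keywordSearch'/>"
          + "<operation name='getCategories'/>"
          + "<operation name='vendorSpecificCall'/>"
          + "</portType></definitions>";
        System.out.println(implementedMethods(wsdl));
    }
}
```

A production registry would also need to check that each operation's message types match the common API, not just its name.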

4. Building Clients

The registry provides two WSDL files for client developers. The

first is the WSDL file that provides the common API for

associative information sources. Developers use this file to

generate web service client code that calls associative information

sources. Most Web Service tools (e.g., JAX-RPC, the tools in

Microsoft’s Visual Studio .NET, Apache Axis) generate such client code

automatically from a WSDL file.

The WSDL file from the registry actually specifies a URL for a

particular associative information source, specifically our Google

wrapper service. So any code automatically generated from the

WSDL will by default access Google. This URL is specified in

a field within the service stub object that is generated.

To access another associative source, say our Amazon wrapper,

the developer need only modify the URL field of the service stub

before calling one of the methods of the common API:

serviceStub.URL = "cs.usfca.edu/assocSources/amazonService";

list = serviceStub.keywordSearch(keywords, n, res);

Aggregators, of course, access multiple sources. With the registry

system, this list of sources can grow dynamically. Web service

polymorphism is facilitated through setting the URL field as in the

above example. The typical client that uses the associative sources

system will store a list of source objects, where each source

contains metadata about the source along with the URL of its

endpoint service. The client code processes each source in the list

in the following fashion:

foreach source in sourceList

{

    stubService.setURL(source.URL);

    resultlist.append(stubService.keywordSearch(keywords, n, res));

}

Note that this is not traditional polymorphism/dynamic binding in

which an object (e.g., stubService) has a different dynamic type

on each iteration. In fact, there is only one “stubService” object

and it is always the object of the method call. The (distributed)

method is instead “bound” by changing the URL in that

“stubService” object, which changes the destination of the

underlying network operation.
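This "binding by URL" can be imitated without a network. In the sketch below, ServiceStub is a hypothetical stand-in for a tool-generated stub, and the transport function replaces the real SOAP call so that the example runs locally; keywordSearch is reduced to a single argument for brevity.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;

/** Sketch of binding by URL: one stub, many endpoints. All names are hypothetical. */
final class EndpointRebinding {

    static final class ServiceStub {
        private String url; // endpoint the next call will be sent to
        // Stands in for the SOAP transport: (endpoint URL, keywords) -> result links.
        private final BiFunction<String, String, List<String>> transport;

        ServiceStub(BiFunction<String, String, List<String>> transport) {
            this.transport = transport;
        }

        void setURL(String url) { this.url = url; }

        List<String> keywordSearch(String keywords) {
            if (url == null) throw new IllegalStateException("endpoint URL not set");
            return transport.apply(url, keywords);
        }
    }

    /** The same stub object is reused; only its URL changes on each iteration. */
    static List<String> searchAll(ServiceStub stub, List<String> endpoints, String keywords) {
        List<String> results = new ArrayList<>();
        for (String endpoint : endpoints) {
            stub.setURL(endpoint);
            results.addAll(stub.keywordSearch(keywords));
        }
        return results;
    }

    public static void main(String[] args) {
        // Fake "network": each endpoint answers with a label of its own.
        Map<String, String> labels = Map.of(
            "http://example.org/sourceA", "A",
            "http://example.org/sourceB", "B");
        ServiceStub stub = new ServiceStub(
            (url, kw) -> List.of(labels.get(url) + ":" + kw));
        System.out.println(searchAll(stub,
            List.of("http://example.org/sourceA", "http://example.org/sourceB"), "agents"));
    }
}
```

Note that a single shared stub with a mutable URL is not safe to use from several threads at once; a client that queries sources in parallel would want one stub per request.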

To access the sources in the first place, the client developer makes

use of a second provided WSDL file. This WSDL file is used to

generate client code that obtains the list of available associative

sources from the registry. Some clients will access this list when

the user requests to modify the list of active sources. The list of

available sources is retrieved and displayed, and the user is

allowed to choose the sources that should be active. For instance,

a user working on a computer science research paper might

choose the ACM Digital Library and Citeseer as the two active

sources.

Other clients will intelligently choose sources for the user, i.e.,

analyze the working task and select sources that will predictably

return more useful results. Each source object returned from the

getSources() registry method contains metadata about the source,

including a textual description which can be used in source

discovery. We also plan to explore the use of more sophisticated

mechanisms for describing sources such as DAML-S[3].
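As a deliberately naive illustration of description-based selection, the sketch below ranks sources by how many of the working document's keywords appear in each source's textual description. The SourceSelector class and its word-overlap scoring are assumptions made for the example, not the selection method of any system discussed here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Naive description-based source selection; an illustration only. */
final class SourceSelector {

    /** Counts how many of the task keywords occur as words in the description. */
    static int overlap(String description, Set<String> keywords) {
        Set<String> words = new HashSet<>(
            Arrays.asList(description.toLowerCase().split("[^a-z0-9]+")));
        int count = 0;
        for (String k : keywords) {
            if (words.contains(k.toLowerCase())) count++;
        }
        return count;
    }

    /** Returns names of sources with at least one match, best match first. */
    static List<String> select(Map<String, String> descriptionByName, Set<String> keywords) {
        Map<String, Integer> score = new HashMap<>();
        for (Map.Entry<String, String> e : descriptionByName.entrySet()) {
            int s = overlap(e.getValue(), keywords);
            if (s > 0) score.put(e.getKey(), s);
        }
        List<String> names = new ArrayList<>(score.keySet());
        // Higher overlap first; ties broken by name so the order is deterministic.
        names.sort((a, b) -> {
            int c = Integer.compare(score.get(b), score.get(a));
            return c != 0 ? c : a.compareTo(b);
        });
        return names;
    }

    public static void main(String[] args) {
        Map<String, String> descriptions = new HashMap<>();
        descriptions.put("papers", "computer science research papers and citations");
        descriptions.put("books", "books and music for sale");
        descriptions.put("news", "science news headlines");
        System.out.println(select(descriptions, Set.of("science", "citations")));
    }
}
```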

Figure 4 illustrates how a client might use this system. After

querying the registry to determine the information sources that are

available, the client is able to use the same uniform interface to

contact each of the available associative sources. These requests

are routed through web service wrapper agents located on servers

at USF. The wrapper agents have the job of translating the client’s

request into the appropriate form for each information source. In

the case of Google or Amazon, this is a SOAP request, whereas in

the case of Citeseer, the request is done via HTTP. The wrapper

agents receive the reply from the source, either as XML, SOAP,

or an HTML-formatted web page, transforms this reply into a

uniform SOAP-encased format, and forwards the reply back to the

client. From the client’s point of view, each information source

uses the same interface, allowing the client to easily aggregate

data from multiple sources.

[Figure 4 diagram: the associative client sends AS-SOAP requests to the Google, Amazon, and Citeseer source wrappers hosted at usfca.edu. The wrappers send SOAP requests to Google.com and Amazon.com and an HTTP request to Citeseer.org, receive SOAP or HTML replies, and return AS-SOAP replies to the client.]

Figure 4: A client makes a uniform request to the source wrappers. Each source wrapper repackages this request into

the appropriate form (as a SOAP or HTTP request) and forwards it to the corresponding Associative Source (AS). The

requested information from the AS is then repackaged into a uniform format (denoted as AS-SOAP) and returned to the

client.

5. Clients as Sources

So far, our discussion has focused on the traditional client-server

approach, in which there are information sources (servers) that

only provide information and client applications that only

consume information.

However, our system is also designed to function in a peer-to-peer

fashion in which a user acts as both a consumer and a producer of

information. When users install the WebTop client on their

desktops, they are asked if they want to expose their personal

spaces as information sources, and if so, the folders they want to

make public. The system then iterates through the chosen folders,

recording metadata about the documents in the system including

an inverted index and a link table. This personal web information

is updated as a user works so that it is always consistent with the

file system. For example, when a user bookmarks a web page or

adds a link from a document to a web page, that association

information is recorded.

When the user elects to expose the personal web, the system

automatically deploys a web service and registers the service as an

associative source. The personal web service follows the common

associative source API, so clients can send associative queries to

personal webs just as they do to sources such as Google. In this

way, peer-to-peer knowledge sharing is facilitated.

While this implementation is still at the prototype stage, we

imagine two powerful scenarios for its use. First, individuals in a

research group can attach to each other’s personal webs. As each

individual bookmarks newly discovered documents, writes new

material, or defines connections between entities, this knowledge

is instantly available to the others in the group, perhaps in a zero-input

manner. This allows users to share ‘knowledge’ in the same

way that they might share files with a traditional file-sharing

program such as Kazaa.

The second scenario involves domain experts exposing their

personal webs to the larger community. Imagine working on a

document concerning autonomous agents and being able to view

associated information from Henry Lieberman’s personal space!

6. Related Work

Web service discovery has been the focus of many researchers in

the Semantic web community. One example is the development of

DAML-S[3,4], an agent-based language for describing Web

service semantics and complex ontologies. Most work with

DAML and DAML-S focuses on the composition of complex

tasks from simpler services, but one could also envision an

associative agent using DAML-S source descriptions to

automatically identify sources that provide associative methods

like those in our API.

By contrast, our strategy is to require sources to implement a

standard API, or wrappers that conform to it, and then register

themselves at the central registry. Agents are simplified greatly

with this scheme. However, building consensus on a standard may

prove to be an even bigger challenge.

RSS feeds provide an alternative to XML-SOAP web services and

another way for clients to aggregate data from multiple sources.

RSS feeds work on a push model where data is delivered to clients

as it becomes available. Web services are based on a “pull” model

in which the client specifically requests data meeting particular

criteria.

With the RSS scheme, processing and filtering of the data is all

the responsibility of the aggregator client—sources just dump

data, including information items and any associative data (link

information) they have. Associative source web services, on the

other hand, respond to queries and perform important processing

on the server end. This eliminates the need for every aggregator to

implement similar processing methods, and it is necessary or

preferable when the data collection is very large, such as with

Google’s link database.

Search engines such as Metacrawler have also been used to

aggregate search results from multiple search engines. However,

they do not typically incorporate domain-specific association

sources, nor do they provide the user with assistance in sorting or

managing this information.

One of the earliest associative agents was Letizia [7]. It identifies

the hyperlinks in the open document then performs a look-ahead

for the user by scanning the linked documents and ranking them.

Ranking is based on relevancy, as measured against a working

profile of the user.

Another early agent was Margin Notes [9]. It uses a TFIDF [10]

algorithm to find the most characteristic words in each section of

a document, then sends those words to both a search engine and

a local information service. The resulting links are then displayed

in the margins of the document, section-by-section, providing

present-day annotations even for older documents (e.g., a link to a

document by present day novelist Milan Kundera might appear in

the margins of the Communist Manifesto).

Other associative agents include Watson [1] and PowerScout [7].

Watson performs zero-input searches on various information

sources including the web, news, images, and domain-specific

search engines. Watson has also been extended to use the

characteristic words of a document not just to build a query, but to

automatically choose the sources where the query should be sent

[6].

PowerScout uses an iterative approach in invoking automated

searches. It first sends a large set of keywords to the search

engine, looking for extremely close matches to the open

document. As searches fail, it eliminates keywords from the set

and resends the query. Searches in later iterations are more likely

to be successful, but return documents that are only somewhat

similar in content to the open document.
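
The iterative relaxation described above can be sketched as a loop that retries the query with fewer keywords each time. This is only an illustration under stated assumptions: the `search` callable is hypothetical, the keywords are assumed to be ordered from most to least important, and dropping the last keyword first is our choice for the example rather than a documented PowerScout policy.

```python
def relaxed_search(keywords, search, min_results=1):
    """Query with all keywords, dropping one keyword after each failed attempt.

    keywords: list ordered from most to least important (an assumption).
    search:   hypothetical callable taking a list of keywords and returning
              a list of matching documents.
    Returns (keywords_used, results); both are empty if every query fails.
    """
    remaining = list(keywords)
    while remaining:
        results = search(remaining)
        if len(results) >= min_results:
            return remaining, results
        remaining = remaining[:-1]  # relax the query by one keyword
    return [], []


def make_toy_search(corpus):
    """Build an in-memory stand-in for a search engine (illustration only).

    The returned function matches documents that contain every keyword.
    """
    def search(keywords):
        return [doc for doc in corpus
                if all(keyword in doc.split() for keyword in keywords)]
    return search
```

Early iterations demand a near-exact match and usually fail; each dropped keyword widens the match, which is why later results are only loosely related to the open document.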

Status and Future Work

Though the registry web site and web service and a number of

information sources are publicly available, we have not yet

advertised their existence to the broader user community.

Similarly, the WebTop associative client is a working prototype

that has only been used by its developers and a handful of users.

We plan to publicly release WebTop in January 2004, following

extensive testing and user studies. We are extremely excited to see

what types of sources register and what types of clients are

developed to make use of the system. This information will help

in guiding the development of our API, as well as supporting

protocols.

We are particularly interested in further developing the peer-to-peer aspect of this project by allowing associative clients to also

act as associative sources. This opens up a number of interesting

research questions, including helping users compactly describe

their interests and information needs, helping users find similar

users in a large population, and allowing groups of similar users to

dynamically band together into larger community structures. As a

first step, we plan to proactively encourage research groups at

USF to use the system, and to also encourage experts (e.g., an

instructor of a course) to expose their personal webs to those with

interest (e.g., the students in the course). We plan to draw on the

lessons of both the blogging community and the peer-to-peer file

sharing community to determine the best ways to allow users to

share information and expertise with each other in a scalable

fashion.

Allowing users to share information with each other will require

some sort of access mechanism that allows a user to specify what

can and cannot be shared. Currently, the mechanism for

specifying the public/private parts of one's personal web is

rudimentary. We plan to explore more sophisticated, declarative

mechanisms that allow a user more flexibility in specifying what

can be shared, who it can be shared with, and the allowable uses

of that information.

The addition of users as sources also creates a need for

associative clients to intelligently filter the list of available

sources and to intelligently select sources on a user’s behalf.

The current WebTop prototype requires the user to explicitly

choose the sources that are currently active. This is fine for our

current model, which has more of a client-server feel, but as we

move into a more peer-to-peer approach, a more intelligent client

will be needed to help users manage information overload. We

plan to draw on the techniques for automatic source selection

explored in a version of Watson [6]. This will include both

intelligent querying and filtering of sources on the client end, and

the ability for sources to describe the information they provide in

a richer and more sophisticated manner.

References

[1] Budzik, J., Hammond, K.J., Marlow, C.A., and Scheinkman,

A., Anticipating information needs: Everyday Applications

as interfaces to Internet Information Sources. In the 1998

World Conference on the WWW, Internet, and Intranet.

[2] Google, Inc., http://www.google.com

[3] DAML-S. http://www.daml.org/services, 2001.

[4] DAML-S Coalition: A. Ankolekar, M. Burstein, J. Hobbs,

O. Lassila, D. Martin, S. McIlraith, S. Narayanan, M.

Paolucci, T. Payne, K. Sycara, and H. Zeng. DAML-S:

Semantic markup for Web services. In Proc. Int. Semantic

Web Working Symposium (SWWS), 411–430, 2001.

[5] Lawrence, S., Bollacker, K., and Giles, C., Indexing and

Retrieval of Scientific Literature, Proceedings of the Eighth

Annual Conference on Information and Knowledge

Management (CIKM, November 1999), 139-146.

[6] Leake, D., Scherle, R., Budzik, J., Hammond, K., Selecting

Task-Relevant Sources for Just-in-Time Retrieval, AAAI-99

Workshop on Intelligent Information Systems, 1999.

[7] Lieberman, H., Fry, C., and Weitzman, L., Exploring the

Web with Reconnaissance Agents, Comm. of the ACM, Vol.

44, No. 8, August 2001.

[8] Maglio, P., Barrett, R., Campbell, C., Selker, T., Suitor: An

Attentive Information System, 2000 International Conference

on Intelligent User Interfaces, New Orleans, Louisiana, USA,

ACM Press.

[9] Rhodes, B.J., Maes, P., Just-in-time information retrieval

agents, IBM Systems Journal, Volume 39, No. 3 and 4,

2000.

[10] Salton, G., McGill, M., Introduction to Modern Information

Retrieval. McGraw Hill, New York, 1983.

[11] Selberg, Erik and Etzioni, Oren. Multi-Service Search and

Comparison Using the MetaCrawler. Proceedings of the

Fourth International World Wide Web Conference,

Darmstadt, Germany, Dec 1995.

[12] Short, Scott, Building XML Web Services for the Microsoft

.Net Platform, Microsoft Press, 2002.

[13] Wolber, D., Kepe, M., and Ranitovic, I., Exposing Document

Context in the Personal Web, Proceedings of the

International Conference on Intelligent User Interfaces (IUI

2002), San Francisco, CA.