Lecture Note

advertisement

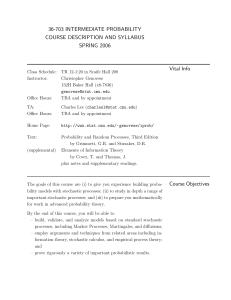

STAT 6310, Stochastic Processes

Jaimie Kwon

STAT 6310, Introduction to Stochastic Processes

Lecture Notes

Prof. Jaimie Kwon

Statistics Dept

Cal State East Bay

Disclaimer

These lecture notes are for the internal use of Prof. Jaimie Kwon, but are provided as potentially helpful material for students taking the course. A few things to note:

The lecture in class always supersedes what’s in the notes.

These notes are provided “as-is”, i.e., the accuracy and relevance of the contents are not guaranteed.

The contents are fluid due to constant updates during the lecture.

The contents may contain announcements etc. that are not relevant to the current quarter.

Students are free to report typos or make suggestions on the notes, by email or in person, to improve the material, but they need to understand the above nature of the notes.

Do not distribute these notes outside the class.

Best Practice for note-taking in class


I do not recommend that students rely on these lecture notes in place of the notes they write down themselves.

Bring a notepad and write down the material that I go over in class, using these lecture notes as an independent reference; you don’t miss a thing by not having a printout of these lecture notes in (and outside) the class.

If you still want to print these notes, it’d be better to print them 4 pages on a single sheet (using the “pages per sheet” feature in MS Word), preferably double sided (to save trees).


1 Basic probability

1.1 Sample spaces and events

A “sample space” is the set of all possible outcomes of an experiment. The sample points are the elements of a sample space.

An “event” is a subset of the sample space.

Two events are mutually exclusive if …

1.2 Assignment of probabilities

Probability axioms

$P(A \cup B)$ = ___

$P(A^c)$ = ___

1.3 Simulation of events on the computer

How do you simulate a coin tossing on a computer?
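One common answer, sketched here in R under the assumption that a uniform random number generator is available: draw U ~ Uniform(0, 1) and call the toss "heads" when U falls below the heads probability. The names `p_heads`, `n`, and the seed are illustrative choices, not part of the notes.

```r
# Simulate n tosses of a coin with P(heads) = p_heads.
set.seed(1)                      # illustrative seed, only for reproducibility
n <- 10000                       # number of tosses (illustrative)
p_heads <- 0.5                   # fair coin (illustrative)

u <- runif(n)                    # n independent Uniform(0, 1) draws
tosses <- ifelse(u < p_heads, "H", "T")

# Relative frequency of heads; it should be close to p_heads for large n
mean(tosses == "H")
```

The same idea extends to any event with known probability p: the event "U < p" has probability exactly p.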

1.4 Counting techniques

Multiplication rule

Permutation of n distinct items taken r at a time

Combination: an unordered arrangement of r items selected from n

distinct items

1.5 Conditional probability

P(B|A)=___

Multiplication rule P(AB)=___

Law of total probability: if E1,…,Ek are a partition of the sample

space, then P(A)=___


1.6 Independent events

Events A and B are independent if ___

Events E1,E2,… are mutually independent if ___

2 Discrete random variables

2.1 Random variables

The probability mass function p(x) satisfies ___

CDF $F(x) = P(X \le x)$

2.2 Joint distributions and independent random variables

The joint probability mass function p(x,y)=P(X=x,Y=y)

The marginal probability mass function pX(x)=___

The conditional probability mass function P(X=x|Y=y)

Discrete random variables X and Y are independent if ___

Discrete random variables X1,X2,…,Xn are mutually independent if

___

2.3 Expected values

E(X)=___

If Y is a function of a random variable X, then E(Y)=E(Q(X))=___

E(a+bX)=___

If Y=g1(X)+…+gk(X), then E(Y)=____

2.4 Variance and standard deviation

VAR(X)=___ = ____

STD(X)=___

Chebyshev’s inequality


VAR(a+bX) = ___

STD(a+bX)=___

2.5 Sampling and simulation

Random variables X1,…,Xn are a random sample if they are i.i.d.

Empirical probability distribution; empirical probability mass function

2.6 Sample statistics

Sample mean $\bar{X}$ = ___

Sample variance $S^2 = \frac{1}{n} \sum_{i=1}^{n} (X_i - \bar{X})^2$

Sample standard deviation S=___

2.7 Expected values of jointly distributed random variables and

the law of large numbers

E(Q(X,Y))=___

E(aX+bY)=___

E(a1X1+a2X2+…+anXn)=___

If X and Y are independent, E(XY)=___

If X and Y are independent, VAR(aX+bY)=___

If X1,…,Xn are independent, VAR(a1X1+…+anXn)=___

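If X1,…,Xn are iid with $E(X_i) = \mu$ and $VAR(X_i) = \sigma^2$, then

$E(\bar{X})$, $VAR(\bar{X})$, $STD(\bar{X})$ = ___

Weak law of large numbers: under the i.i.d. setup as above,

$\lim_{n \to \infty} P(|\bar{X} - \mu| > \varepsilon) = 0$ for any $\varepsilon > 0$.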

2.8 Covariance and correlation

COV(X,Y)=___


CORR(X,Y)=___

VAR(aX+bY)=___

Bivariate random sample

The sample covariance cov(X,Y)

The sample correlation corr(X,Y)

2.9 Conditional expected values

E(Y|X=x)=___

E(Y)=E(E(Y|X))

VAR(Y|X=x)=___

VAR(Y)=E[___]+VAR[___]

3 Special discrete random variables

3.1 Binomial random variable

A binomial random variable is ___

For Y~bin(n,p), p(y)=___. Also, E(Y)=___ and VAR(Y)=___

3.2 Geometric and negative binomial random variables

The geometric random variable is ____. We write it as X~Geo(p).

In that case, p(x)=___, E(X)=___, VAR(X)=___

Given an integer k>1, the negative binomial random variable is ___.

We write it as Y~Nbinom(k,p).

In that case, p(y)=___, E(Y)=___, VAR(Y)=___

3.3 Hypergeometric random variables

Consider a jar containing a total of N balls, r of which are red and the remaining white. When n balls are randomly selected from the jar without replacement (WOR), the number of red balls in the sample is X~Hypergeo(N,r,n).

In that case, p(x)=___, E(X)=___, VAR(X)=___

Approximately binomial with p=___

3.4 Multinomial random variables

Let Y1,…,Yk denote the number of times the mutually exclusive

outcomes C1,…,Ck occur in n iid trials. Let pi=P(Ci) for i=1,…,k. Then

we say (Y1,Y2,…,Yk)~multinom(n, p1,…,pk) and

P(Y1=y1,…,Yk=yk)=______ where ____

E(Yi)=__ and VAR(Yi)=___

COV(Ys,Yt)=____ if $s \ne t$.

3.5 Poisson random variables

X~Poisson($\lambda$) if p(x)=___

E(X)=___ and VAR(X)=___

If X~bin(n,p), P(X=x) ~ p(x) of Poisson($\lambda$) if ____ and $\lambda$=__

3.6 Moments and moment-generating functions (MGF)


4 Markov chains

4.1 Introduction: modeling a simple queuing system

A queue is a waiting line

Consider the following queuing system:

A company has an assigned technician to handle service for 5

computers.

Each computer independently fails with probability 0.2 during any day

The technician can fix one computer per day.

If a machine breaks down, it will be fixed the day it fails provided

there is no backlog. If there is a backlog, it will join a service

queue and wait until the technician fixes those ahead of it.

Simulate the behavior of the system over 5 days of operation.

The system begins on Monday with no backlog of service and

ends on Friday evening.

We observe two characteristics of the system:

The number of computers waiting for service at the end of each day

The number of days in the week the technician is idle.

Simulation is “replicated” 1,000 times.

See the text for the output

The idea: many questions can be answered without any

computation from theory
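The simulation described above can be sketched in R as follows. This is an illustration, not the textbook's program: it assumes that a computer already waiting for repair cannot fail again, that the technician fixes one computer per day whenever there is something to fix, and that a day counts as "idle" when there is nothing to fix. The function name `simulate_week` and the seed are hypothetical.

```r
set.seed(6310)   # illustrative seed

# Simulate one week (n_days days) of the technician queue.
simulate_week <- function(n_comp = 5, p_fail = 0.2, n_days = 5) {
  backlog <- 0                      # Monday starts with no backlog
  end_of_day <- integer(n_days)     # backlog at the end of each day
  idle_days <- 0
  for (d in seq_len(n_days)) {
    # Only the working computers can fail today (assumption)
    failures <- rbinom(1, n_comp - backlog, p_fail)
    to_fix <- backlog + failures
    if (to_fix == 0) idle_days <- idle_days + 1   # nothing to repair
    backlog <- max(to_fix - 1, 0)                 # one repair per day
    end_of_day[d] <- backlog
  }
  list(backlog = end_of_day, idle = idle_days)
}

# Replicate the week 1,000 times
reps <- replicate(1000, simulate_week(), simplify = FALSE)
friday_backlog <- sapply(reps, function(w) w$backlog[5])
idle <- sapply(reps, function(w) w$idle)

table(friday_backlog) / length(reps)  # estimated distribution of Friday's backlog
mean(idle)                            # average number of idle days per week
```

The estimated distribution of Friday's backlog can later be compared with the five-step transition probabilities computed from the Markov chain model of the same system.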


4.2 The Markov property

Processes that fluctuate with time as a result of random events acting upon a system are called “stochastic processes”. Formally, a stochastic process is a collection of random variables $\{X(n), n \in N\}$. In a typical context, the “index set” $N$ refers to time and the process is in “state” $X(n)$ at time $n$.

Time can be measured on a discrete scale or on a continuous scale

The states can be discrete or continuous

Four combinations: {discrete, continuous}-time, {discrete,

continuous}-state process

Discrete-time, discrete-state process

Discrete-time, continuous-state process

Continuous-time, discrete-state process

Continuous-time, continuous-state process

For now, we consider only discrete-time, discrete-state processes

X(0) : initial state

Two extremes:

Independent X(n)

X(n) depends on all the past: example?

Middle ground?

In a stochastic process having the “Markov property”, each outcome

depends only on the one immediately preceding it.

Definition 4.2-1. The process $\{X(n), n = 0, 1, 2, \ldots\}$ is said to have the Markov property, or to be a Markov chain, if

$$P[X(n+1) = s(n+1) \mid X(n) = s(n), X(n-1) = s(n-1), \ldots, X(1) = s(1), X(0) = s(0)] = P[X(n+1) = s(n+1) \mid X(n) = s(n)]$$

for $n = 0, 1, 2, \ldots$ and all possible states $\{s(n)\}$

Examples: insect in a circular box with 6 rooms

Position of a token on the board of many board games

Gambler’s net worth in a simple game of chance

One-dimensional position of a particle suspended in a fluid

(Random walk)

Number of customers in a “queue” over time

Total number of phone calls in each minute, when the number of calls in each minute is independently 0, 1, or 2 with probabilities 0.80, 0.15, 0.05, respectively.

State diagram (figure: states 1, 2, 3, 4, 5, 6)

Markov property is more an assumption than a verifiable fact

The “one-step transition probability” is the conditional probability

defined as

$P_n(i \to j) = P[X(n+1) = j \mid X(n) = i]$

A process whose transition probabilities don’t depend on time n is

called a “time-homogeneous process”. Otherwise, the process is

called “nonhomogeneous”

For a time-homogeneous process, we simply write $P(i \to j)$

A useful way to represent the transition probabilities of a time-homogeneous Markov chain is with a “one-step transition matrix”

Ex.4.2-1 (insect in a circular box)

4.3 Computing probabilities for Markov chains

We consider time homogeneous Markov chains for now

The probability that a Markov chain visits states $s(1), s(2), \ldots, s(n)$ at times $1, 2, \ldots, n$, given that the chain begins in state $s(0)$, can be computed by

$$P[X(n) = s(n), X(n-1) = s(n-1), \ldots, X(1) = s(1) \mid X(0) = s(0)] = P[s(0) \to s(1)] \, P[s(1) \to s(2)] \cdots P[s(n-1) \to s(n)]$$

Proof:

A “path” of a process is a sequence of states through which the process may move.

The initial state X(0) can also be a random variable. In such cases, the “initial distribution” of X(0) also needs to be figured into the computation of the probabilities of various paths

Example: consider a transition matrix (rows and columns indexed by states 1, 2, 3, 4)

$$P = \begin{pmatrix} 0 & 1/3 & 1/3 & 1/3 \\ 1/2 & 0 & 0 & 1/2 \\ 1/2 & 0 & 0 & 1/2 \\ 1/3 & 1/3 & 1/3 & 0 \end{pmatrix}$$

What’s the probability of

Path $1 \to 2 \to 4 \to 2$, given that it begins in 1?

Path $2 \to 1 \to 3 \to 4$, given that it begins in 2?

Path $1 \to 2 \to 4 \to 2$, if the probability of starting in any of the compartments is 1/4?

What’s the probability of the rat ending up in compartment 1 two moves after it starts from compartment 1?
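These questions can be checked numerically. The R sketch below assumes the four-compartment transition matrix of this example reads as rows (0, 1/3, 1/3, 1/3), (1/2, 0, 0, 1/2), (1/2, 0, 0, 1/2), (1/3, 1/3, 1/3, 0); that reading is a reconstruction of the printed matrix, and `path_prob` is a hypothetical helper name.

```r
# Transition matrix of the rat-in-the-maze example (reconstructed reading)
P <- matrix(c(0,   1/3, 1/3, 1/3,
              1/2, 0,   0,   1/2,
              1/2, 0,   0,   1/2,
              1/3, 1/3, 1/3, 0), byrow = TRUE, ncol = 4)

# Probability of a path s(0) -> s(1) -> ... -> s(n), given the start s(0):
# the product of the one-step transition probabilities along the path.
path_prob <- function(path, P) {
  from <- path[-length(path)]
  to   <- path[-1]
  prod(P[cbind(from, to)])
}

p_1242 <- path_prob(c(1, 2, 4, 2), P)   # (1/3)(1/2)(1/3) = 1/18
p_2134 <- path_prob(c(2, 1, 3, 4), P)   # (1/2)(1/3)(1/2) = 1/12
p_1242_random_start <- (1/4) * p_1242   # starting compartment chosen with prob. 1/4

# Return to compartment 1 in two moves: entry (1, 1) of P %*% P
p_return_2 <- (P %*% P)[1, 1]           # 1/6 + 1/6 + 1/9 = 4/9

c(p_1242, p_2134, p_1242_random_start, p_return_2)
```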

Definition 4.3-1. The “k-step transition probability” is defined as

$P^{(k)}(i \to j) = P[X(n+k) = j \mid X(n) = i]$

For going from state $i$ to $j$ in two steps, the list of all possible paths can be written as $i \to s \to j$, where $s$ ranges over all possible states. Thus

$$P^{(2)}(i \to j) = \sum_s P(i \to s) \, P(s \to j)$$

The above equation shows that the “two-step transition matrix” is given by $P^2$. In general,

Theorem 4.3-1. The k-step transition probabilities are obtained by raising the one-step transition matrix to the k’th power

The matrix $P^k$ is called the “k-step transition matrix”.

Example. If the rat begins in compartment 1, what are the probabilities of it being in compartments 1, 2, 3, or 4 after 4 moves?

Example. Signal transmission through consecutive noisy channels

Example. A communication channel is sampled each minute. Let X(n) = busy (1) or not busy (2) at time n and let the transition matrix be

$$P = \begin{pmatrix} .6 & .4 \\ .1 & .9 \end{pmatrix}$$

We assumed we know the initial state. If the initial state is random, we know, for example, that

$$P[X(1) = i] = \sum_s P[X(0) = s] \, P(s \to i)$$

Let $\pi(n)$ be the row vector whose elements are the probabilities $P[X(n) = s]$ for all possible states. Then the above formula can be written as:

$\pi(1) = \pi(0) P$.

Likewise, the probabilities for the chain after k steps are:

$\pi(k) = \pi(0) P^k$.
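As an illustration of the k-step formulas, the R sketch below uses the busy/not-busy communication channel with transition matrix rows (.6, .4) and (.1, .9). The initial distribution `pi0` and the helper `mat_pow` are hypothetical choices made for this example.

```r
# One-step transition matrix of the communication channel example
P <- matrix(c(0.6, 0.4,
              0.1, 0.9), byrow = TRUE, ncol = 2)

# k-th power of a square matrix by repeated multiplication
mat_pow <- function(P, k) {
  out <- diag(nrow(P))              # identity matrix = zero-step transitions
  for (i in seq_len(k)) out <- out %*% P
  out
}

pi0  <- c(0.5, 0.5)                 # hypothetical initial distribution of X(0)
pi1  <- pi0 %*% P                   # distribution of X(1)
pi10 <- pi0 %*% mat_pow(P, 10)      # distribution of X(10)

mat_pow(P, 2)                       # two-step transition matrix
pi1
pi10
```

A plain loop is used for the matrix power because base R has no built-in matrix power operator; `P^k` in R raises each entry to the k'th power, which is not the same thing.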

4.4 The simple queuing system revisited

Model the queuing system as a Markov chain!

Let X(n) be the backlog on day n

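What is P? i.e., what are the $P(i \to j)$’s?

# Each working computer fails independently with probability p on any day
p <- 0.2

# Row i + 1 holds the transition probabilities out of backlog i (i = 0, ..., 4);
# with backlog i, only the 5 - i working computers can fail
P <- matrix(c(sum(dbinom(0:1, 5, p)), dbinom(2:5, 5, p),
              dbinom(0:4, 4, p),
              0, dbinom(0:3, 3, p),
              0, 0, dbinom(0:2, 2, p),
              0, 0, 0, dbinom(0:1, 1, p)),
            byrow = TRUE, ncol = 5)

P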

> P

        [,1]   [,2]   [,3]   [,4]    [,5]
[1,] 0.73728 0.2048 0.0512 0.0064 0.00032
[2,] 0.40960 0.4096 0.1536 0.0256 0.00160
[3,] 0.00000 0.5120 0.3840 0.0960 0.00800
[4,] 0.00000 0.0000 0.6400 0.3200 0.04000
[5,] 0.00000 0.0000 0.0000 0.8000 0.20000

The top row gives the distribution of Monday’s backlog

Compute the two-, three-, four-, and five-step transition probability matrices:

P%*%P
P%*%P%*%P
P%*%P%*%P%*%P
P%*%P%*%P%*%P%*%P

round(P%*%P, 4)
round(P%*%P%*%P, 4)
round(P%*%P%*%P%*%P, 4)
round(P%*%P%*%P%*%P%*%P, 4)

       [,1]   [,2]   [,3]   [,4]   [,5]
[1,] 0.5153 0.2991 0.1452 0.0368 0.0036
[2,] 0.4828 0.3077 0.1613 0.0437 0.0045
[3,] 0.4192 0.3233 0.1934 0.0578 0.0064
[4,] 0.3288 0.3415 0.2405 0.0799 0.0094
[5,] 0.2202 0.3533 0.3009 0.1117 0.0139

Since we begin at X(0)=0, the top row of $P^5$ gives the probabilities of having 0-4 as backlog after five steps (on Friday)

4.5 Simulating the behavior of a Markov chain

Why simulate?

“Easy” way

“Only” way

As a part of a larger, complex system

Example 4.5-2: deterioration of an automobile over states “excellent,

good, fair, and poor” over years. What’s the amount of time it will

take an auto to reach state 4?

> P

     [,1] [,2] [,3] [,4]
[1,]  0.7  0.3  0.0  0.0
[2,]  0.0  0.6  0.4  0.0
[3,]  0.0  0.0  0.5  0.5
[4,]  0.0  0.0  0.0  1.0
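A minimal simulation sketch for this example, in R. It assumes a new automobile starts in state 1 ("excellent") and that one transition corresponds to one year; the function name `time_to_state`, the seed, and the number of replications are illustrative.

```r
set.seed(45)   # illustrative seed

# One-step transition matrix for the automobile deterioration example
P <- matrix(c(0.7, 0.3, 0.0, 0.0,
              0.0, 0.6, 0.4, 0.0,
              0.0, 0.0, 0.5, 0.5,
              0.0, 0.0, 0.0, 1.0), byrow = TRUE, ncol = 4)

# Run the chain from `start` until it first hits `target`;
# return the number of transitions taken.
time_to_state <- function(P, start = 1, target = 4) {
  state <- start
  steps <- 0
  while (state != target) {
    # Next state is drawn from the row of P for the current state
    state <- sample.int(ncol(P), 1, prob = P[state, ])
    steps <- steps + 1
  }
  steps
}

times <- replicate(10000, time_to_state(P))
mean(times)                    # estimated mean number of years to reach state 4
table(times) / length(times)   # estimated distribution of that time
```

Because each state here can only be held or left for the next worse state, the time to reach state 4 is a sum of three geometric holding times, so the simulated mean should be near 1/0.3 + 1/0.4 + 1/0.5.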

4.6 Steady-state probabilities

For the rat problem,

> P100

     [,1] [,2] [,3] [,4]
[1,]  0.3  0.2  0.2  0.3
[2,]  0.3  0.2  0.2  0.3
[3,]  0.3  0.2  0.2  0.3
[4,]  0.3  0.2  0.2  0.3

All the rows of the 100th-step transition matrix are (almost) the same.

Regardless of the starting state, after many moves, the rat has

probabilities ____ of being in states 1,2,3, and 4, respectively.

Let’s limit ourselves further to only finite state Markov chains

Definition 4.6-1. A finite state Markov chain is said to be regular if

the k-step transition matrix has all nonzero entries for some value of

k>0

Example: insect in a circular box {regular, not regular}

Example: rat in the maze

Example: busy/free of a communication channel

Theorem 4.6-1. Let X(n), n=0, 1, 2, …, be a regular Markov chain with one-step transition matrix P. Then there exists a matrix $\Pi$ having identical rows with nonzero entries such that

$$\lim_{k \to \infty} P^k = \Pi$$

Let $\pi$ be the common row vector of the limiting matrix $\Pi$. Then $\pi$ is the steady-state probability vector, whose elements are the steady-state probabilities.

Consider the fraction of visits a Markov chain makes to a particular

state in k transitions, i.e.

V(j,k) = (Number of visits to state j in k transitions)/k

Example. If a chain visits 1,2,1,2,2 in the first 5 times, then

V(2,5)=___ and V(1,5)=___.


Q: what’s E(V(j,k))=?

Theorem 4.6-2. Let X(n), n=0,1,2,… be a regular Markov chain. Let $\pi_j$ be the steady-state probability for state j. Then

$$\lim_{k \to \infty} E(V(j,k)) = \pi_j,$$

i.e., the long-run fraction of visits to state j = steady-state probability of j

Q: how to find $\pi$ without matrix multiplication?

Theorem 4.6-3. Let X(n), n=0,1,2,… be a regular Markov chain with one-step transition matrix P. Then the steady-state probability vector $\pi = (\pi_1, \pi_2, \ldots, \pi_S)$ may be found by solving the system of equations

$\pi P = \pi$, $\pi_1 + \pi_2 + \cdots + \pi_S = 1$.

Informal proof: Note $P^{k-1} P = P^k$. Send k to infinity on both sides; then we have $\Pi P = \Pi$. The result follows.
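The system in Theorem 4.6-3 is linear, so it can be solved directly. The R sketch below is one way to do it, not necessarily the textbook's: it rewrites the stationarity equations as a square linear system, replaces one redundant equation by the normalization, and calls `solve()`. The helper name `steady_state` is hypothetical, and the matrix is the rat-in-the-maze matrix as reconstructed from the example in Section 4.3.

```r
# Solve pi P = pi together with sum(pi) = 1 for a regular chain.
steady_state <- function(P) {
  S <- nrow(P)
  A <- t(P) - diag(S)     # (P' - I) pi = 0 is the transpose of pi P = pi
  A[S, ] <- 1             # replace the last (redundant) equation by sum(pi) = 1
  b <- c(rep(0, S - 1), 1)
  solve(A, b)
}

# Rat-in-the-maze transition matrix (reconstructed reading)
P <- matrix(c(0,   1/3, 1/3, 1/3,
              1/2, 0,   0,   1/2,
              1/2, 0,   0,   1/2,
              1/3, 1/3, 1/3, 0), byrow = TRUE, ncol = 4)

pi_hat <- steady_state(P)
pi_hat                     # matches the rows of the 100-step matrix: 0.3 0.2 0.2 0.3
1 / pi_hat                 # expected return times, one per state
```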

Example: Rat in the maze

Example: busy/not state of a communication line

If the chain is currently in state 1 and the path ($1 \to 2 \to 3 \to 2 \to 1$) is observed, the return time is __ transitions.

Theorem 4.6-4. Let X(n), n=0,1,2,… be a regular Markov chain. Let $\pi = (\pi_1, \pi_2, \ldots, \pi_S)$ be the steady-state probability vector for the chain, and let $T_j$ denote the time it takes to return to state j given that the chain is currently in state j, j=1,2,…,S. Then,

$E(T_j) = 1/\pi_j$.

Beginning at a random initial state X(0) with initial probability vector $\pi(0)$, the k-step probability vector is $\pi(k) = \pi(0) P^k$. If $\pi(0)$ happens to be the same as $\pi$, it follows that

$\pi = \pi(0) = \pi(1) = \pi(2) = \cdots$

For this reason, the steady-state distribution is also called a stationary distribution

4.7 Absorbing states and first passage times

Not all Markov chains are regular.

Ex. Automobile deterioration over years

Definition 4.7-1. A state j is said to be an absorbing state if $P(j \to j) = 1$; otherwise it is a non-absorbing state.

We assume there is a path that leads from each non-absorbing

state to an absorbing state.

Q: beginning at a non-absorbing state, how long does it take to

reach an absorbing state?

Theorem 4.7-1. Let A denote the set of absorbing states in a finite-state Markov chain. Assume that A has at least one state and that there is a path from every non-absorbing state to at least one state in A. Let $T_i$ denote the number of transitions it takes to go from the non-absorbing state i to A. Then

$$P(T_i \le k) = \sum_{j \in A} P^{(k)}(i \to j)$$

Ti is called the time to absorption.

The above result gives the cumulative distribution function of Ti

How about the mean time to absorption?

We can use the above result to obtain $P(T_i \le k)$ up to a reasonably large k.

Or, we can use the following result:

Let a chain consist of non-absorbing states 1,2,…,r and the set of absorbing states A. Let $\mu_1, \mu_2, \ldots, \mu_r$ be the mean times to absorption from states 1,2,…,r respectively. It is easy to see that:

$$\mu_i = \sum_{j \in A} P(i \to j) + \sum_{j=1}^{r} P(i \to j)(1 + \mu_j).$$

Combining this with

$$\sum_{j \in A} P(i \to j) + \sum_{j=1}^{r} P(i \to j) = 1,$$

we obtain:

_____.

Thus, we have proved,

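Theorem 4.7-2. Let Q denote the matrix consisting of transition probabilities among the non-absorbing states. The mean times to absorption satisfy the system of equations

$$\begin{pmatrix} \mu_1 \\ \mu_2 \\ \vdots \\ \mu_r \end{pmatrix} = \begin{pmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{pmatrix} + Q \begin{pmatrix} \mu_1 \\ \mu_2 \\ \vdots \\ \mu_r \end{pmatrix}$$

Or, $(I - Q)(\mu_1, \mu_2, \ldots, \mu_r)^T = (1, 1, \ldots, 1)^T$. Even more compactly, $\mu = (I - Q)^{-1} \mathbf{1}$ for column vectors $\mu$ and $\mathbf{1}$.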
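As a worked instance of Theorem 4.7-2, the R sketch below applies the formula to the automobile deterioration chain of Example 4.5-2, where state 4 is the only absorbing state. Solving the linear system with `solve()` rather than forming the inverse explicitly is a design choice of this sketch.

```r
# Automobile deterioration chain; state 4 ("poor") is absorbing
P <- matrix(c(0.7, 0.3, 0.0, 0.0,
              0.0, 0.6, 0.4, 0.0,
              0.0, 0.0, 0.5, 0.5,
              0.0, 0.0, 0.0, 1.0), byrow = TRUE, ncol = 4)

Q <- P[1:3, 1:3]                      # transitions among non-absorbing states 1, 2, 3
mu <- solve(diag(3) - Q, rep(1, 3))   # solves (I - Q) mu = 1
mu                                    # mean times to absorption from states 1, 2, 3
```

Working the equations by hand from the bottom up gives the same values: 2 from state 3, 4.5 from state 2, and about 7.83 from state 1.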

Note: the textbook notation $T_i$ is a bit confusing since it’s used for both

The return time for the regular Markov chain

The time to absorption

We talked about the expected return time for regular Markov chains.

For regular Markov chains, how about the first passage time from state i to state j (where $i \ne j$)?

The trick is to define a new chain that is identical to the original chain except that the state j is redefined to be an absorbing state.

The time to absorption into state j from state i is the first passage time from state i to state j.

Example: 4 speakers example. If speaker A just finished talking,

Expected time until speaker B speaks?

Let $f(i \to j)$ denote the probability that the chain is eventually absorbed into state j given that it starts in state i. Under the assumption that there is a path from every non-absorbing state to the set of absorbing states, we have

$$\lim_{k \to \infty} P^{(k)}(i \to j) = f(i \to j).$$

If j is a non-absorbing state, $f(i \to j)$ = ___

If j is the only absorbing state, $f(i \to j)$ = ____

Interesting cases are when _____

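Theorem 4.7-3. Let the one-step transition matrix of a finite Markov chain have the form

$$P = \begin{pmatrix} I & 0 \\ R & Q \end{pmatrix},$$

where the first block of rows corresponds to the absorbing states and the second block to the non-absorbing states. Let F be the matrix whose (i,j)th element is $f(i \to j)$. Then

$$F = (I - Q)^{-1} R$$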


Theorem 4.7-4. Let i and j be non-absorbing states. Let $\mu_{ij}$ denote the expected number of visits to state j beginning in state i, before the chain reaches an absorbing state. (If we are interested in returns to state i itself, the initial state i is counted as one visit.) Let U be the matrix whose ij’th element is $\mu_{ij}$. Then

$$U = (I - Q)^{-1}.$$
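To see Theorems 4.7-3 and 4.7-4 side by side, the R sketch below uses a hypothetical gambler's ruin chain that is not taken from the notes: states 0, 1, 2, 3, a fair game that moves one unit up or down, and absorbing states 0 and 3. The matrices Q and R are written out by hand for that assumed chain.

```r
# Hypothetical gambler's ruin chain on states 0, 1, 2, 3 (fair game);
# states 0 and 3 are absorbing, states 1 and 2 are non-absorbing.
Q <- matrix(c(0,   0.5,
              0.5, 0), byrow = TRUE, ncol = 2)    # among states 1, 2
R <- matrix(c(0.5, 0,
              0,   0.5), byrow = TRUE, ncol = 2)  # from states 1, 2 into states 0, 3

U <- solve(diag(2) - Q)   # expected numbers of visits, U = (I - Q)^(-1)
F_abs <- U %*% R          # absorption probabilities, F = (I - Q)^(-1) R

U                         # rows: start in 1, 2; columns: visits to 1, 2
F_abs                     # rows: start in 1, 2; columns: absorbed in 0, 3
rowSums(U)                # mean times to absorption (row sums of U)
```

Each row of `F_abs` sums to 1 because absorption is certain, and the row sums of `U` reproduce the mean times to absorption of Theorem 4.7-2.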


Quiz 1 result:

> mean(x)

[1] 27.70732

> median(x)

[1] 28

> length(x)

[1] 41


5 Continuous random variables

Probability density functions

Expected value and distribution of a function of a random variable

Simulating continuous random variables

Joint probability distributions

6 Special continuous random variables

Exponential random variable

Normal random variable

Gamma random variable

The Weibull random variable

MGFs

Method of moment estimation


Midterm #1 result (out of 90)

> mean(x)

[1] 75.30952

> median(x)

[1] 81

> sd(x)

[1] 14.28711

>


7 Markov counting and queuing processes

7.1 Bernoulli counting process

Definition 7.1-1. A stochastic process is called a “counting process”

if

i) The possible states are the nonnegative integers.

ii) For each state i, the only possible transitions are

$i \to i$, $i \to i+1$, $i \to i+2$, …

A counting process that has the Markov property is called a “Markov counting process”

Assume that we have divided a continuous-time interval into

discrete disjoint subintervals of equal lengths. The subintervals are

called “frames.”

Definition 7.1-2. A counting process is said to be a “Bernoulli

counting process” if

i) the number of successes that can occur in each frame is either 0

or 1.

ii) The probability, p, that a success occurs during any frame is the

same for all frames.

iii) Successes in nonoverlapping frames are independent of one

another.

Theorem 7.1-1. Let X(n) denote the total # of successes in a Bernoulli counting process at the end of the n’th frame, n=1,2,… Let the initial state be X(0)=0. The probability distribution of X(n) is

$$P(X(n) = x) = \binom{n}{x} p^x (1-p)^{n-x}.$$

What’s the one-step transition matrix for X(n)?

For realistic modeling,

The frame length should be determined by practical

considerations and

The success probability can be computed by the formula

$$p = \frac{\lambda}{n}$$

where

$\lambda$, the rate of success, is the expected number of successes in a unit time

n is the number of frames in this unit of time

In practice, we estimate $\lambda$ by

$$\hat{\lambda} = \frac{\text{Number of successes in } t \text{ units of time}}{t}$$

Table for Examples 7.1-2, 3, 5 ($\lambda$ = 3 per hour)

| | $\Delta$ = 5 min or 5/60 hour | $\Delta$ = 1 min or 1/60 hour | … $\Delta = 1/n \to 0$ |
|---|---|---|---|
| $p = \lambda\Delta = \lambda/n = 3/n$ | 1/4 | 1/20 | |
| n (for t = 1 hour) | 12 | 60 | Send to $\infty$ |
| E(X(n)) = np | 3 | 3 | 3 (= mean of Poisson(3t)) |
| Var(X(n)) = np(1-p) | 12*1/4*3/4 = 2.25 | 60*1/20*19/20 = 2.85 | 3 (= variance of Poisson(3t)) |
| Distribution of Y = “# of frames from one success to the next” | Geo(1/4) | Geo(1/20) | |
| E(Y) = 1/p | 4 (frames) | 20 (frames) | |
| V(Y) = $(1-p)/p^2$ | 12 | 380 | |
| SD(Y) | 3.46 (frames) | 19.5 (frames) | |
| T = $\Delta$Y = “Time from one success to the next” | | | Exp($\lambda$) distribution |
| E(T) = $1/\lambda$ | 1/3 | 1/3 | 1/3 |
| V(T) = $(1 - \lambda\Delta)/\lambda^2$ | $(1 - 3/12)/3^2$ = 0.083 | $(1 - 3/60)/3^2$ = 0.106 | $1/3^2$ = 0.111… |

Empty table for Examples 7.1-2, 3, 5 ($\lambda$ = 3 per hour)

| | $\Delta$ = 5 min or 5/60 hour | $\Delta$ = 1 min or 1/60 hour | … $\Delta = 1/n \to 0$ |
|---|---|---|---|
| p = | | | |
| n (for t = 1 hour) | | | |
| E(X(n)) = np | | | |
| Var(X(n)) = np(1-p) | | | |
| Distribution of Y = “# of frames from one success to the next” | | | |
| E(Y) = 1/p | | | |
| V(Y) = $(1-p)/p^2$ | | | |
| SD(Y) | | | |
| T = $\Delta$Y = “Time from one success to the next” | | | |
| E(T) = $1/\lambda$ | | | |
| V(T) = $(1 - \lambda\Delta)/\lambda^2$ | | | |

We denote the frame length by $\Delta$ (= 1/n), so that $p = \lambda\Delta$.

Example: consider a call center that receives 3 calls per hour. If we model the number of calls as a Bernoulli counting process,

What would be a good time unit? Hour

What would be a good frame length $\Delta$? 1/60 hour

What is $\lambda$? 3 calls per hour

What is p?

What’s the effect of using different $\Delta$ on p? On E(X(n))? On V(X(n))?

The Bernoulli counting process is time homogeneous

Theorem 7.1-2. Let Y denote the number of frames from one success to the next. Then,

$P(Y = y) = (1-p)^{y-1} p$, y = 1, 2, …

(typo in the book, p. 272: the left-hand side should read P(Y = y), not P(Y > y))

E(Y) = 1/p, V(Y) = $(1-p)/p^2$

Theorem 7.1-3. The amount of time T that passes from one success to the next is given by $T = \Delta Y$. Thus,

$E(T) = 1/\lambda$

$V(T) = \dfrac{1 - \lambda\Delta}{\lambda^2}$
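The effect of the frame length on these quantities can be tabulated directly. The R sketch below does so for a rate of 3 successes per hour and frame lengths of 5 minutes and 1 minute, using only the formulas of Theorems 7.1-1 to 7.1-3; the column names in `tab` are hypothetical labels.

```r
lambda <- 3                      # successes per hour
Delta  <- c(5/60, 1/60)          # frame lengths in hours (5 min and 1 min)

p <- lambda * Delta              # success probability per frame
n <- 1 / Delta                   # number of frames in one hour

tab <- data.frame(
  Delta = Delta,
  p     = p,
  n     = n,
  EX    = n * p,                     # E(X(n)) = np
  VX    = n * p * (1 - p),           # Var(X(n)) = np(1 - p)
  EY    = 1 / p,                     # mean # of frames between successes
  VY    = (1 - p) / p^2,             # variance of # of frames between successes
  ET    = Delta / p,                 # E(T) = Delta * E(Y) = 1 / lambda
  VT    = Delta^2 * (1 - p) / p^2    # V(T) = (1 - lambda * Delta) / lambda^2
)
print(round(tab, 3))
```

As the frame length shrinks, `VX` approaches 3 and `VT` approaches 1/9, which are the Poisson and exponential values for this rate.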

7.2 The Poisson process

Definition 7.2-1. Let N(t) denote the # of successes in the interval

[0,t]. Assume that it is a continuous-time counting process and that

the count begins at zero. N(t) is said to be a “Poisson process” if


i) Successes in nonoverlapping intervals occur independently of one

another.

ii) The probability distribution of the number of successes depends

only on the length of the interval and not on the starting point of the

interval.

iii) The probability of x successes in an interval of length t is

$$P(N(t) = x) = e^{-\lambda t} \frac{(\lambda t)^x}{x!}, \quad x = 0, 1, 2, \ldots$$

where $\lambda$ is the expected number of successes per unit of time.

Consider a Bernoulli counting process with n frames in the interval [0,t], each with length $\Delta$. The probability distribution of the # of successes in [0,t] is approximately Poisson($\mu$), where $\mu = np = \lambda t$, i.e.,

$$P(X(n) = x) = \binom{n}{x} p^x (1-p)^{n-x} \approx e^{-\mu} \frac{\mu^x}{x!} = e^{-\lambda t} \frac{(\lambda t)^x}{x!}.$$

This approximation holds for any interval of length t.
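A quick numerical check of this approximation, sketched in R. It compares the binomial probabilities with n frames in an interval against the Poisson probabilities with the same mean, for a rate of 3 per hour over one hour; the helper `max_err`, the range of x values, and the choices of n are illustrative.

```r
lambda <- 3          # rate per hour
t_len  <- 1          # interval length in hours
x      <- 0:15       # counts at which the two pmfs are compared (illustrative)

# Largest absolute difference between bin(n, lambda * t / n) and Poisson(lambda * t)
max_err <- function(n) {
  p <- lambda * t_len / n
  max(abs(dbinom(x, n, p) - dpois(x, lambda * t_len)))
}

n_frames <- c(12, 60, 600, 6000)
errs <- sapply(n_frames, max_err)
data.frame(n = n_frames, max_abs_error = errs)
```

The error shrinks as the number of frames grows, which is the sense in which the Bernoulli counting process approaches the Poisson process.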

Example: recall the telephone call example ($\lambda$ = 3 calls per hour)

Let T be the time until the first success occurs in a Poisson process. Note that:

$P(T > t) = P(N(t) = 0) = e^{-\lambda t}$, or,

$F_T(t) = P(T \le t) = 1 - P(N(t) = 0) = 1 - e^{-\lambda t}$

Theorem 7.2-1. Let T be the time between consecutive successes in a Poisson process with rate $\lambda$. Then T ~ Exp($\lambda$), with pdf

$f_T(t) = \lambda e^{-\lambda t}$

Note that $E(T) = 1/\lambda$ and $V(T) = 1/\lambda^2$. They are the limits of those in the Bernoulli counting process as $\Delta \to 0$.

7.3 Exponential random variables and the Poisson process

Let $T_i$, i=1,2,… denote the interarrival times in a Poisson process with rate $\lambda$. Then $T_i$ ~ iid Exp($\lambda$)

Useful result for simulation

Let $W_n$ be the waiting time until n successes are observed in a Poisson process.

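Theorem 7.3-2. Note that

$$F_{W_n}(t) = P(W_n \le t) = 1 - P(W_n > t) = 1 - P(N(t) \le n-1) = 1 - \sum_{x=0}^{n-1} e^{-\lambda t} \frac{(\lambda t)^x}{x!}$$

and

$$f(t) = \frac{\lambda^n t^{n-1}}{(n-1)!} e^{-\lambda t}.$$

The distribution is called the Erlang distribution and is a special case of the Gamma distribution (Gamma($\alpha$, $\beta$) = Gamma(n, $1/\lambda$))

Theorem 7.3-3. $W_n = T_1 + T_2 + \cdots + T_n$ and thus $E(W_n) = n/\lambda$ and $V(W_n) = n/\lambda^2$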
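The two results above can be checked against each other in R. The sketch below simulates the waiting time to the n'th success as a sum of iid exponential interarrival times and compares it with the Erlang (Gamma) cdf; the values of `lambda`, `n`, `t0`, and the seed are hypothetical.

```r
set.seed(73)      # illustrative seed
lambda <- 3       # rate (hypothetical value)
n      <- 2       # wait for the 2nd success (hypothetical value)
t0     <- 1       # time at which the cdf is evaluated (hypothetical value)

# Simulate W_n = T_1 + ... + T_n with T_i ~ iid Exp(lambda)
W <- replicate(10000, sum(rexp(n, rate = lambda)))
c(mean(W), n / lambda)        # simulated vs. theoretical mean
c(var(W),  n / lambda^2)      # simulated vs. theoretical variance

# cdf of W_n at t0: Poisson-sum formula vs. Gamma(shape = n, rate = lambda)
F_formula <- 1 - sum(dpois(0:(n - 1), lambda * t0))
F_gamma   <- pgamma(t0, shape = n, rate = lambda)
c(F_formula, F_gamma, mean(W <= t0))
```

Note that R's `pgamma` is called here with `rate = lambda`, which corresponds to a scale parameter of 1/lambda.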

Example: 2 thunderstorms

7.4 The Bernoulli single-server queuing process

A queueing process: a stochastic process of the number of persons

or objects in the system

Arrivals and services

A finite or limited-capacity queue vs. an unlimited-capacity queue

We move from discrete-time to continuous-time as before

We move from single-server process to multiple-server process

Definition 7.4-1. A queuing process is said to be a single-server

Bernoulli queuing process, with unlimited capacity, if

i) The arrivals occur as a Bernoulli counting process, with arrival

probability PA in each frame.

ii) When the server is busy, departures or completions of service

occur as a Bernoulli counting process, with service completion

probability PS in each busy frame.

iii) Arrivals occur independently of services

Convention: The earliest a customer can complete service is one frame after arriving

The # in the queuing system: those being served and those in line

States: the # of customers in the system

Note that:

$P(0 \to 0) = 1 - P_A$, $P(0 \to 1) = P_A$

For i > 0,

$P(i \to i-1) = P_S(1 - P_A)$,

$P(i \to i) = (1 - P_A)(1 - P_S) + P_A P_S$,

$P(i \to i+1) = P_A(1 - P_S)$

Example: a single server queue with PA = .10 and probability service

PS = .15. What’s the one-step transition matrix?


The number of frames from the arrival of one customer to the next ~ Geo($P_A$)

The number of frames it takes to service a customer ~ Geo($P_S$)

$$P_A = \frac{\lambda_A}{n} = \lambda_A \Delta \quad \text{and} \quad P_S = \frac{\lambda_S}{n} = \lambda_S \Delta$$

The expected service time $\mu_S = 1/\lambda_S$.

Suppose the system has a capacity of C customers. $P(i \to j)$ is the same as before for i < C. If the system is full, we assume that potential customers continue to arrive but do not join the system unless a service has occurred.

$P(C \to C)$ = P(0 arrivals and 0 services) + P(1 arrival and 1 service) + P(1 arrival and 0 services)

= $(1 - P_A)(1 - P_S)$ + _____ + ______

= ________

$P(C \to C-1)$ = P(0 arrivals and 1 service) = ________

For i < C, identical to the above

Example: a telephone has the capability of keeping one caller on

hold while another is talking. While a caller is on hold, a new attempt

to call is lost (busy signal). Thus, at most two calls can be in the

system at any time. Suppose the probability that a call arrives during

a frame of 1 minute is $P_A$ = .10 and the probability that a call is completed during a frame is $P_S$ = .15. For this limited-capacity Bernoulli queuing process, what is the one-step transition matrix P?

What is the steady-state probability vector?

0.453 0.336 0.211
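The steady-state vector quoted above can be reproduced in R. The sketch below builds the 3-state transition matrix (0, 1, or 2 calls in the system) from the limited-capacity rules of this section with arrival probability .10 and service probability .15, then solves the stationarity equations; replacing one equation by the normalization is a choice made for this sketch.

```r
PA <- 0.10   # probability a call arrives during a frame
PS <- 0.15   # probability a call is completed during a frame

# States 0, 1, 2 = number of calls in the system (capacity C = 2)
P <- matrix(c(
  1 - PA,        PA,                            0,
  PS * (1 - PA), (1 - PA) * (1 - PS) + PA * PS, PA * (1 - PS),
  0,             (1 - PA) * PS,                 1 - (1 - PA) * PS
), byrow = TRUE, ncol = 3)

# Solve pi P = pi with sum(pi) = 1
A <- t(P) - diag(3)
A[3, ] <- 1                      # replace a redundant equation by the normalization
pi_hat <- solve(A, c(0, 0, 1))

P
round(pi_hat, 3)                 # 0.453 0.336 0.211
```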

7.5 The M/M/1 queuing process

Aim: to derive a continuous-time queuing process by letting $\Delta \to 0$ in the single-server Bernoulli queuing process.

Such process is called M/M/1 queuing process

In general, we consider {M, G, D}/{M, G, D}/k queuing process

M: Markov

G: general

D: deterministic

k: # of servers

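Let’s recall that:

$P(0 \to 0) = 1 - P_A$, $P(0 \to 1) = P_A$

For i > 0,

$P(i \to i-1) = P_S(1 - P_A)$,

$P(i \to i) = (1 - P_A)(1 - P_S) + P_A P_S$,

$P(i \to i+1) = P_A(1 - P_S)$

$P_A = \lambda_A / n = \lambda_A \Delta$ and $P_S = \lambda_S / n = \lambda_S \Delta$

Convert them to:

$P(0 \to 0) = 1 - \lambda_A \Delta$, $P(0 \to 1) = \lambda_A \Delta$

For i > 0,

$P(i \to i-1) = \lambda_S \Delta (1 - \lambda_A \Delta) \approx$ _________,

$P(i \to i) = (1 - \lambda_A \Delta)(1 - \lambda_S \Delta) + \lambda_A \Delta \, \lambda_S \Delta \approx$ __________,

$P(i \to i+1) = \lambda_A \Delta (1 - \lambda_S \Delta) \approx$ _________

Approximate transition probabilities for the M/M/1 queuing process in a small time interval of length $\Delta$

Transitions other than the above have negligible probability when the frame size $\Delta$ is small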

Definition 7.5-1. A continuous-time, single-server queuing process is

said to be M/M/1 queuing process if

i) for sufficiently small time intervals of length $\Delta$, transition probabilities can be approximated by the above equations.

ii) Transitions occurring in nonoverlapping intervals are

independent of one another


Theorem 7.5-1. The time between the arrivals of customers in M/M/1 ~ Exp($\lambda_A$), and given that a customer is being served, the time to complete service is ~ Exp($\lambda_S$)

Recall that Exp($\lambda_A$) is an exponential distribution with mean $\mu_A = 1/\lambda_A$, and Exp($\lambda_S$) an exponential distribution with mean $\mu_S = 1/\lambda_S$.

Aim: derive the steady-state distribution for the number of

customers in an M/M/1 queuing system.

Idea: if there are i > 0 customers at time $t + \Delta$, there could have been only i – 1, i + 1, or i customers at time t, if $\Delta$ is made small enough.

Let $\pi_i$ denote the steady-state probability that there are i > 0 customers in the system. If the system is in the steady-state condition, then for all states i,

P(i customers at time $t + \Delta$) = P(i customers at time t) = $\pi_i$

which leads to:

$\pi_i = \pi_{i-1} \lambda_A \Delta + \pi_{i+1} \lambda_S \Delta + \pi_i (1 - \lambda_A \Delta - \lambda_S \Delta)$

and

$\pi_0 = \pi_1 \lambda_S \Delta + \pi_0 (1 - \lambda_A \Delta)$

Simplifying, we have:

$\pi_0 \lambda_A = \pi_1 \lambda_S$

$\pi_1 \lambda_A = \pi_2 \lambda_S$

…

$\pi_i \lambda_A = \pi_{i+1} \lambda_S$, i = 0, 1, ….

This makes sense and is called the “balance equation”

Why is it called the balance equation:

$\pi_i \lambda_A \Delta = \pi_{i+1} \lambda_S \Delta$

$\pi_i P(i \to i+1) = \pi_{i+1} P(i+1 \to i)$,

i.e., in steady state, mass leaving from i to i + 1 = mass leaving from i + 1 to i (otherwise, it wouldn’t be steady)

$\lim_{t \to \infty} P(X(t) = i) \, P(X(t + \Delta) = i + 1 \mid X(t) = i) = \lim_{t \to \infty} P(X(t) = i + 1) \, P(X(t + \Delta) = i \mid X(t) = i + 1)$

The solution of the balance equation can be found as follows:


Theorem 7.5-2 Let $r = \lambda_A / \lambda_S$ denote the arrival/service ratio of an M/M/1 queuing process. The steady-state distribution exists iff r < 1 and the steady-state probability mass function for the number in the system is

$\pi_i = (1 - r) r^i$, i = 0, 1, 2, …

As a consequence:

P(X > x) = _____

$P(X \le x)$ = _____

Example: 30 orders per day; service time of 12 minutes per order;

proportion of idle time period; P(X 2); P(X > 9); expected # of

orders in the system and the SD?

Let Y have the steady state distribution of the # of customers in the

M/M/1 queuing system. Then X = Y + 1 ~ Geo(1 – r)

E(Y) = ____

SD(Y) = _____

Theorem 7.5-3. The steady-state mean and SD of the number of customers in an M/M/1 queuing system with arrival/service ratio r < 1 are

$\mu = \frac{r}{1 - r}$, $\sigma = \frac{\sqrt{r}}{1 - r}$
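One way to see where these come from is to use the geometric connection X = Y + 1 ~ Geo(1 – r) noted above. This is a sketch that assumes the standard mean and variance of a geometric random variable on {1, 2, …}:

```latex
\begin{align*}
E(X) &= \frac{1}{1-r}, \qquad \operatorname{Var}(X) = \frac{r}{(1-r)^2}
  \quad \text{for } X \sim \mathrm{Geo}(1-r), \\
\mu = E(Y) &= E(X) - 1 = \frac{r}{1-r}, \\
\sigma = \mathrm{SD}(Y) &= \mathrm{SD}(X) = \frac{\sqrt{r}}{1-r}.
\end{align*}
```

For instance, with r = 0.9 this gives $\mu$ = 9 and $\sigma \approx$ 9.49, so both grow quickly as r approaches 1.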

What happens if r < 1 but near 1?

What happens if r ≥ 1?

Theorem 7.5-4. Assume the M/M/1 queuing system is in steady

state. Let T denote the amount of time a customer spends in the

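system and r = $\lambda_A/\lambda_S$. Then

E(T) = $\left(1 + \frac{r}{1 - r}\right)\frac{1}{\lambda_S} = \frac{1}{1 - r} \cdot \frac{1}{\lambda_S} = \frac{1}{(1 - r)\lambda_S}$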

Example

7.6 K-server queuing process

Definition 7.6-1. A process with k servers and unlimited capacity is called a “k-server Bernoulli queuing process” if:

i) The arrivals occur as a Bernoulli counting process with $P_A$.

ii) For each busy server, service completions occur as a Bernoulli counting process with $P_S$, which is the same for all servers.

iii) Arrivals are independent of services; the busy servers function independently of one another

$P(j \text{ service completions} \mid n \text{ busy servers}) = \binom{n}{j} P_S^{\,j} (1 - P_S)^{n - j}, \quad j \le n.$
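The binomial probability of j service completions among n busy servers can be sketched as below. The function name and the values n = 2, $P_S$ = 0.1 are hypothetical, used only to show the shape of the calculation.

```python
from math import comb


def service_completion_pmf(j, n, p_s):
    """P(j service completions | n busy servers) in one frame.

    Each busy server completes service independently with probability p_s,
    so the count of completions is Binomial(n, p_s).
    """
    if not 0 <= j <= n:
        return 0.0
    return comb(n, j) * p_s ** j * (1 - p_s) ** (n - j)


n, p_s = 2, 0.1  # hypothetical values
probs = [service_completion_pmf(j, n, p_s) for j in range(n + 1)]
print(probs)
```

With two busy servers the three probabilities are $(1 - P_S)^2$, $2 P_S (1 - P_S)$ and $P_S^2$, which are the building blocks of the two-server transition probabilities.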

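Recall that for a single server:

$P(0 \to 0) = 1 - P_A$, $P(0 \to 1) = P_A$

For i > 0,

$P(i \to i - 1) = P_S (1 - P_A)$,

$P(i \to i) = (1 - P_A)(1 - P_S) + P_A P_S$,

$P(i \to i + 1) = P_A (1 - P_S)$

$P_A = \frac{\lambda_A}{n} = \lambda_A \Delta$ and $P_S = \frac{\lambda_S}{n} = \lambda_S \Delta$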

For two servers (if i = 0 or 1, the system behaves just like a single server):

$P(0 \to 0) = 1 - P_A$, $P(0 \to 1) = P_A$

$P(1 \to 0) = P_S (1 - P_A)$,

$P(1 \to 1) = (1 - P_A)(1 - P_S) + P_A P_S$,

$P(1 \to 2) = P_A (1 - P_S)$

For i ≥ 2,

$P(i \to i + 1) = P_A (1 - P_S)^2$

$P(i \to i - 1)$ = P(one arrival and two services OR no arrival and one service) = $(1 - P_A)\,[2 (1 - P_S) P_S] + P_A P_S^2$ (Bernoulli probability)

$P(i \to i - 2)$ =

$P(i \to i)$ =

Now convert using $P_A = \frac{\lambda_A}{n} = \lambda_A \Delta$ and $P_S = \frac{\lambda_S}{n} = \lambda_S \Delta$

A continuous-time process is an M/M/k queuing process with

unlimited capacity if it satisfies the following conditions:

i) when there are n busy servers and i customers in the system, the following approximations apply to the possible transitions in a sufficiently small frame of length $\Delta$:

$P(i \to i + 1) \approx \lambda_A \Delta$;

$P(i \to i - 1) \approx n \lambda_S \Delta$;

$P(i \to i) \approx 1 - \lambda_A \Delta - n \lambda_S \Delta$

ii) transitions in nonoverlapping frames are independent of one another

Rules of thumb: estimating $\lambda_A$ and $\lambda_S$ for practical problems:

- To estimate $\lambda_A$, use the method of moments, or

$\hat{\lambda}_A$ = (# of arrivals observed over a time period) / (length of the time period)

- To estimate $\lambda_S$, solve

$\frac{1}{\hat{\lambda}_S}$ = sample mean of service completion times

This is the method of moments, too. (why?)
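The two rules of thumb can be sketched in a few lines. The data below (30 arrivals in 60 minutes and five service times in minutes) are made up for illustration, and the function names are assumptions rather than anything defined in the notes.

```python
from statistics import mean


def estimate_arrival_rate(num_arrivals, period_length):
    """Estimated arrival rate: arrivals observed per unit of time."""
    return num_arrivals / period_length


def estimate_service_rate(service_times):
    """Estimated service rate: solve 1 / rate = sample mean of service times."""
    return 1 / mean(service_times)


# Hypothetical observations: 30 arrivals in 60 minutes, service times in minutes.
lam_a_hat = estimate_arrival_rate(30, 60.0)
lam_s_hat = estimate_service_rate([1.0, 2.0, 1.5, 0.5, 1.0])
print("arrival rate:", lam_a_hat)
print("service rate:", lam_s_hat)
print("estimated arrival/service ratio r:", lam_a_hat / lam_s_hat)
```

Both rates must be expressed in the same time unit before forming the ratio r.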

7.7 Balance equations and steady-state probabilities

Idea: want to let the arrival rate $\lambda_A$ and the service rate $\lambda_S$ depend on the current number of customers in the system

Examples?

Definition 7.7-1 Let $a_i$ and $s_i$ denote the arrival rate and system service rate, respectively, of a queuing process with i customers in the system. The process is called a “general Markov queuing process” if the following conditions hold: In a sufficiently small time frame of length $\Delta$, we have:

$P(i \to i + 1) \approx a_i \Delta$

$P(i \to i - 1) \approx s_i \Delta$

$P(i \to i) \approx 1 - a_i \Delta - s_i \Delta$.

The error of approximation is negligible compared with the terms involving $\Delta$, when $\Delta$ is small. The transitions in nonoverlapping intervals are independent of one another.

Example: For M/M/k queuing process,

$a_j$ = ___ and

$s_j$ = ____

$s_j = j \lambda_S$, j = 0, 1, 2, …, k – 1,

$s_j = k \lambda_S$, j ≥ k

Theorem 7.7-1. Let $a_j$, j = 0, 1, 2, … be the arrival rates and let $s_j$, j = 1, 2, … be the service rates of a general Markov queuing process. Let $\pi_j$, j = 0, 1, 2, …, denote the steady-state probability distribution of the process. Then, the $\pi_j$’s satisfy the system of balance equations:

$\pi_j a_j = \pi_{j+1} s_{j+1}$, j = 0, 1, 2, …

Verification is similar to that of M/M/1 queuing process (Ex. 7.7-9)

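Theorem 7.7-2. The steady-state probability distribution for the general Markov queuing process exists if and only if

$1 + \frac{a_0}{s_1} + \frac{a_0 a_1}{s_1 s_2} + \frac{a_0 a_1 a_2}{s_1 s_2 s_3} + \cdots < \infty$

and the steady-state probability distribution is:

$\pi_j = \pi_0 \frac{a_0 a_1 \cdots a_{j-1}}{s_1 s_2 \cdots s_j}, \quad j = 1, 2, \ldots$

and

$\pi_0 = \left(1 + \frac{a_0}{s_1} + \frac{a_0 a_1}{s_1 s_2} + \frac{a_0 a_1 a_2}{s_1 s_2 s_3} + \cdots\right)^{-1}$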
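A numerical sketch of this theorem, assuming the series converges so that truncating it at a large state changes the answer only negligibly. The function name, the truncation level `max_state`, and the check rates (arrival rate 1, service rate 2) are illustrative assumptions.

```python
def steady_state(arrival_rate, service_rate, max_state=500):
    """Approximate steady-state probabilities pi_0, ..., pi_max_state.

    arrival_rate(j) returns a_j and service_rate(j) returns s_j.
    The infinite series is truncated at max_state, which is only sensible
    when the series in the theorem converges.
    """
    weights = [1.0]  # weight of state 0 relative to pi_0
    for j in range(1, max_state + 1):
        # pi_j / pi_0 = (a_0 ... a_{j-1}) / (s_1 ... s_j), built up recursively
        weights.append(weights[-1] * arrival_rate(j - 1) / service_rate(j))
    total = sum(weights)
    return [w / total for w in weights]


# Check against M/M/1 with arrival rate 1 and service rate 2 (r = 0.5),
# where the exact answer is pi_i = (1 - r) * r**i.
pi = steady_state(lambda j: 1.0, lambda j: 2.0)
print(pi[:3])
```

Passing the rates as functions of the state makes it easy to plug in M/M/k rates, finite capacity, or arrival rates that shrink as the line grows.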

Proof:

When does the M/M/k queuing process satisfy the theorem?

Answer: When $\frac{r}{k} = \frac{\lambda_A}{k \lambda_S} < 1$.

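In M/M/k, what is the general form of the steady state probability distribution?

Answer: $\pi_0 = \left[1 + \sum_{j=1}^{k-1} \frac{r^j}{j!} + \frac{r^k}{k!\,(1 - r/k)}\right]^{-1}$

$\pi_j = \pi_0 \frac{r^j}{j!}$, j < k

$\pi_j = \pi_0 \frac{r^j}{k!\,k^{j-k}} = \pi_k \left(\frac{r}{k}\right)^{j-k}$, j ≥ k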

In M/M/2 with r = $\lambda_A/\lambda_S$ = .25, what is the steady state probability distribution?
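A sketch of the M/M/k steady-state probabilities, applied to the M/M/2 case with r = .25. It assumes the standard M/M/k form $\pi_j = \pi_0 r^j / j!$ for j < k and $\pi_j = \pi_0 r^j / (k!\,k^{j-k})$ for j ≥ k; the function names are illustrative.

```python
from math import factorial


def mmk_pi0(r, k):
    """Steady-state probability of an empty M/M/k system with ratio r."""
    if r >= k:
        raise ValueError("a steady state requires r / k < 1")
    head = sum(r ** j / factorial(j) for j in range(k))  # j = 0, ..., k - 1
    tail = r ** k / (factorial(k) * (1 - r / k))         # states j >= k combined
    return 1 / (head + tail)


def mmk_pmf(j, r, k):
    """Steady-state P(j customers in the system) for an M/M/k queue."""
    pi0 = mmk_pi0(r, k)
    if j < k:
        return pi0 * r ** j / factorial(j)
    return pi0 * r ** j / (factorial(k) * k ** (j - k))


r, k = 0.25, 2
for j in range(4):
    print(j, mmk_pmf(j, r, k))
```

For these values $\pi_0$ works out to 7/9, so the system is empty a little under 78% of the time.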

Formula for the expected # of customers in an M/M/k system in steady state:

$E(N) = r + \pi_k \frac{r/k}{(1 - r/k)^2}$

Example: a single fast server vs. two slow servers. Consider

M/M/1 : $\lambda_S$ = 80 customers per minute

M/M/2 : $\lambda_S$ = 40 customers per minute

The arrival rate $\lambda_A$ = 10 customers per minute is the same for both cases.

Compute the steady state probability for both cases.

Find and compare the mean number of customers in each system.

Lesson?
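The comparison can be sketched numerically. This assumes the expected-value formula $E(N) = r + \pi_k (r/k)/(1 - r/k)^2$ with r equal to the arrival rate divided by the per-server service rate; the function name is an illustrative assumption.

```python
from math import factorial


def mmk_mean_customers(lam_a, lam_s, k):
    """Steady-state mean number in an M/M/k system (lam_s is the per-server rate)."""
    r = lam_a / lam_s
    if r >= k:
        raise ValueError("a steady state requires r / k < 1")
    head = sum(r ** j / factorial(j) for j in range(k))
    tail = r ** k / (factorial(k) * (1 - r / k))
    pi0 = 1 / (head + tail)
    pi_k = pi0 * r ** k / factorial(k)
    return r + pi_k * (r / k) / (1 - r / k) ** 2


fast_single = mmk_mean_customers(10, 80, 1)  # one server at 80 per minute
slow_pair = mmk_mean_customers(10, 40, 2)    # two servers at 40 per minute each
print("M/M/1 mean:", fast_single)
print("M/M/2 mean:", slow_pair)
```

Under these assumptions the single fast server carries a mean of 1/7 customers against 16/63 for the two slow servers, even though the total service capacity is the same.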

Example: effect of adding a server. Consider

M/M/1 system with r = .9.

What’s the mean number of customers in the system?

How does it change if we add two servers with the same service

rate each? (or M/M/3 system)

The expected time for a customer to spend in each system? [Need to know $\lambda_A$. Let’s assume $\lambda_A$ = 6 customers/minute]

Theorem 7.7-3 (Little’s formula) Assume that an M/M/k system has

reached steady-state condition. Let T denote the amount of time a

customer spends in the system, and let N denote the number of

customers in the system. Then

$\lambda_A E(T) = E(N)$

Why does this make sense?
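A sketch of Little's formula applied to the "adding servers" example, assuming an arrival rate of 6 customers/minute, r = .9, and the M/M/k mean $E(N) = r + \pi_k (r/k)/(1 - r/k)^2$. The function names are illustrative.

```python
from math import factorial


def mean_customers(r, k):
    """Steady-state E(N) for an M/M/k system with arrival/service ratio r."""
    if r >= k:
        raise ValueError("a steady state requires r / k < 1")
    head = sum(r ** j / factorial(j) for j in range(k))
    tail = r ** k / (factorial(k) * (1 - r / k))
    pi_k = (r ** k / factorial(k)) / (head + tail)
    return r + pi_k * (r / k) / (1 - r / k) ** 2


def mean_time_in_system(r, k, lam_a):
    """Little's formula rearranged: E(T) = E(N) / arrival rate."""
    return mean_customers(r, k) / lam_a


lam_a, r = 6.0, 0.9
for k in (1, 3):
    print(k, "server(s): E(N) =", mean_customers(r, k),
          " E(T) =", mean_time_in_system(r, k, lam_a), "minutes")
```

For k = 1 this gives E(N) = 9 and E(T) = 1.5 minutes, which agrees with $1/((1 - r)\lambda_S)$ from Theorem 7.5-4. With three servers the mean number drops to roughly 0.93.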

7.8 More Markov queuing processes

For the steady-state probabilities for M/M/k process as k becomes large,

$\lim_{k \to \infty} \pi_0 = \left(1 + \sum_{j=1}^{\infty} \frac{r^j}{j!}\right)^{-1} = e^{-r}$

Also,

$\lim_{k \to \infty} \pi_j = e^{-r} \frac{r^j}{j!}, \quad j = 1, 2, \ldots$

Doesn’t this look familiar?

Mega-mart with a large # of checkout stations.

4 customers arrive per minute.

Each customer spends 1.5 minutes being served.

Arrival/service ratio = _____

Average # of busy check-out stations in the steady state = _____

P(more than 10 busy lines) = ______
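A sketch of the mega-mart numbers, assuming the number of stations is large enough that the Poisson limit applies, so the number of busy stations is approximately Pois(r) with r equal to the arrival rate times the mean service time. Variable names are illustrative.

```python
from math import exp, factorial


def poisson_pmf(j, r):
    """Limiting steady-state probability of j customers: exp(-r) * r**j / j!."""
    return exp(-r) * r ** j / factorial(j)


lam_a = 4.0         # arrivals per minute
mean_service = 1.5  # minutes per customer, so the service rate is 1 / 1.5
r = lam_a * mean_service  # arrival/service ratio

mean_busy = r  # the mean of a Pois(r) variable
p_more_than_10 = 1 - sum(poisson_pmf(j, r) for j in range(11))

print("arrival/service ratio:", r)
print("average number of busy stations:", mean_busy)
print("P(more than 10 busy):", p_more_than_10)
```

Here r = 6, and the chance of more than 10 busy stations comes out a little above 4%.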

Other cases:

M/M/k process with finite capacity

M/M/k process with $a_i = \frac{\lambda_A}{i + 1}$

7.9 Simulating an M/M/k queuing process

8 The distribution of sums of random variables

8.1 Sums of random variables

8.2 Sums of random variables and the CLT

Theorem 8.2-1. CLT for iid random sample

Proof

8.3 Confidence intervals for means

8.4 A random sum of random variables

Definition 8.4-1. Let N(t) be a Poisson process with rate $\lambda$ and $Y_1, Y_2, \ldots$ be iid with mean $\mu$ and SD $\sigma$, independent of N(t). A process X(t) is a “compound Poisson process” if

$X(t) = \sum_{i=1}^{N(t)} Y_i$

Examples?

Theorem 8.4-1. For a compound Poisson process X(t),

E[X(t)] = ____ and Var[X(t)] = ________
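One way to obtain these moments is to condition on N(t). The sketch below assumes N(t) ~ Pois($\lambda t$) and that the $Y_i$ have mean $\mu$ and SD $\sigma$, independent of N(t):

```latex
\begin{align*}
E[X(t)] &= E\bigl[\,E[X(t) \mid N(t)]\,\bigr] = E[N(t)\,\mu] = \lambda t \mu, \\
\operatorname{Var}[X(t)]
  &= E\bigl[\operatorname{Var}(X(t) \mid N(t))\bigr]
   + \operatorname{Var}\bigl(E[X(t) \mid N(t)]\bigr) \\
  &= E[N(t)]\,\sigma^2 + \operatorname{Var}(N(t))\,\mu^2
   = \lambda t \,(\sigma^2 + \mu^2).
\end{align*}
```

The last step uses the fact that a Poisson random variable has equal mean and variance, both $\lambda t$ here.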

x = 75.9, median = 78.5, sd = 5.14

9 Selected systems models

9.1 Distribution of extremes

9.2 Scheduling problems

9.3 Sojourns and transitions for continuous-time Markov processes

Definition 9.3-1: a process is a continuous-time Markov process if the following conditions are satisfied:

i) There exist nonnegative rates $\lambda_{ij}$, i ≠ j, such that for frames of sufficiently small length $\Delta$,

$P(i \to j) \approx \lambda_{ij} \Delta$,

$P(i \to i) \approx 1 - \sum_{j \ne i} \lambda_{ij} \Delta$

where the approximation errors are negligible for small $\Delta$

ii) Transitions that occur in nonoverlapping frames are independent

of one another

Theorem 9.3-1. Let $P_{ij}(t)$ = P[Markov process in state j at time t given that it starts in state i]. If the chain can visit every state, then there exists $\pi = (\pi_1, \ldots, \pi_S)$ such that

$\lim_{t \to \infty} P_{ij}(t) = \pi_j$.

The $\pi$ satisfies the matrix equation $\pi \Lambda = 0$ and $\sum_j \pi_j = 1$, where $\Lambda = \{\lambda_{ij}\}$ with $\lambda_{ii} = -\sum_{j \ne i} \lambda_{ij}$.

Informal Proof:

A “sojourn time”: the time spent in a state on one visit

A “conditional transition probability”: the probability of a transition

given that a transition to a new state is made

Theorem 9.3-2. The sojourn time for a state i in a continuous-time Markov process ~ Exp($\sum_{j \ne i} \lambda_{ij}$). Thus its mean $\mu_i = 1 / \sum_{j \ne i} \lambda_{ij}$.

Given that a transition is made from state i to j, j ≠ i, the transition occurs according to a Markov chain with one-step transition probabilities:

$p_{ij} = \frac{\lambda_{ij}}{\sum_{j' \ne i} \lambda_{ij'}}$.

Theorem 9.3-3. Suppose that the conditional probabilities $p_{ij}$ above define a regular Markov chain and let $p_i$ be the steady-state probability for this regular Markov chain. Then

$\pi_i = \frac{p_i \mu_i}{\sum_{j=1}^{S} p_j \mu_j}$

Informal proof

Sojourn times and conditional transition probabilities make the

simulation possible

9.4 Sojourns and transitions for continuous-time semi-Markov processes

“Semi-Markov process”: continuous-time process in which the

sojourn time distributions are not exponential but the conditional

transition probabilities follow the Markov chain

9.5 Sojourns and transitions for queuing processes

The M/M/k queuing process and its variations are continuous-time Markov processes with

$\lambda_{i,i+1} = a_i$

$\lambda_{i,i-1} = s_i$

$\lambda_{ii} = -(a_i + s_i)$

Theorem 9.5-1. In M/M/k queuing process, the sojourn time for state i ~ Exp($a_i + s_i$) with mean $\mu_i = 1/(a_i + s_i)$. When a transition is made to a new state, it occurs as a Markov chain with

$P(i \to i + 1) = \frac{a_i}{a_i + s_i}$

$P(i \to i - 1) = \frac{s_i}{a_i + s_i}$
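Sojourn times and conditional transition probabilities are enough to simulate the process. The following is a minimal sketch, assuming M/M/k rates (arrival rate `lam_a` in every state, service rate min(i, k) times `lam_s` in state i) and an initially empty system. The function name, the seed, and the run length are illustrative assumptions.

```python
import random


def simulate_mmk(lam_a, lam_s, k, t_end, seed=0):
    """Simulate an M/M/k queue up to time t_end, starting empty.

    Returns a dict mapping each visited state to the fraction of time spent there.
    """
    rng = random.Random(seed)
    t, state = 0.0, 0
    time_in_state = {}
    while t < t_end:
        a = lam_a                    # a_i
        s = min(state, k) * lam_s    # s_i
        sojourn = rng.expovariate(a + s)   # sojourn time ~ Exp(a_i + s_i)
        if t + sojourn >= t_end:
            # Clip the final sojourn at the end of the observation window.
            time_in_state[state] = time_in_state.get(state, 0.0) + (t_end - t)
            break
        time_in_state[state] = time_in_state.get(state, 0.0) + sojourn
        t += sojourn
        # Move up with probability a_i / (a_i + s_i), otherwise move down.
        if rng.random() < a / (a + s):
            state += 1
        else:
            state -= 1
    return {i: v / t_end for i, v in sorted(time_in_state.items())}


fractions = simulate_mmk(lam_a=1.0, lam_s=2.0, k=1, t_end=20000.0)
print("fraction of time empty:", fractions[0])
```

For a long run the time fractions should settle near the steady-state probabilities. In this M/M/1 check with r = 0.5, the empty fraction should be close to 1 – r = 0.5.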

10 Reliability models

10.1 The reliability function

If T denotes the time to failure of a system, then the “reliability at time t” or “reliability function” R(t) is defined to be:

R(t) = P(T > t)

It’s easy to see that

R(t) = 1 – F(t)

For a system whose time to failure ~ Exp($\lambda$), the reliability function

R(t) = _________

Theorem 10.1-1. Let a system consist of n components with reliability functions $R_1(t), \ldots, R_n(t)$ and assume they fail independently of one another. The reliability function of the series system is

$R_S(t)$ = ______

The reliability function of the parallel system is

$R_P(t)$ = ______

$R_S(t) = \prod_{i=1}^{n} R_i(t)$
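A sketch of both system types at a fixed time t, assuming independent components. The series form is the product of the component reliabilities. The parallel form used here, one minus the product of the failure probabilities, is the standard result for a system that works as long as at least one component works. Function names and the component values are hypothetical.

```python
from math import prod


def series_reliability(reliabilities):
    """A series system works only if every component works."""
    return prod(reliabilities)


def parallel_reliability(reliabilities):
    """A parallel system fails only if every component fails."""
    return 1 - prod(1 - r for r in reliabilities)


# Hypothetical component reliabilities at some fixed time t.
parts = [0.9, 0.8]
print("series:", series_reliability(parts))
print("parallel:", parallel_reliability(parts))

# A mixed system: one component in series with a parallel pair,
# evaluated by applying the two rules one after the other.
mixed = series_reliability([0.95, parallel_reliability(parts)])
print("mixed:", mixed)
```

Nesting the two functions mirrors the repeated application of the theorem to systems built from series and parallel subsystems.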

Many systems consist of series and parallel subsystems. The reliability can be computed via repeated application of the above theorem.

10.2 Hazard Rate

Definition 10.2-1. Let f(t) be the probability density function of T, the

time to failure of a system. Then the “hazard rate” (“failure-rate” or

“intensity function”) is

$h(t) = \frac{f(t)}{R(t)}$

Motivation

The hazard rate h(t) can be modeled as:

Increasing function of t

Decreasing function of t

Constant

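Definition 6.4-1. A random variable X ~ Weibull($\alpha$, $\beta$) for $\alpha$, $\beta$ > 0, if its cdf is given by

$F(x) = 1 - \exp\left[-(x/\beta)^{\alpha}\right]$

for x ≥ 0.

The pdf of Weibull is

f(x) = ______

$\mu = \beta\,\Gamma\left(\frac{1}{\alpha} + 1\right)$, $\sigma^2 = \beta^2\left[\Gamma\left(\frac{2}{\alpha} + 1\right) - \Gamma\left(\frac{1}{\alpha} + 1\right)^2\right]$

Hazard rate of Weibull($\alpha$, $\beta$) distribution?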

Consider a parallel system consisting of two parts that fail

independently of each other with Exp(2) lifetime distribution. Find

RP(t) =

F(t) =

f(t) =

h(t) =

in turn. Draw h(t) and discuss the result.
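A worked sketch of this exercise, assuming Exp(2) means rate 2 (mean 1/2), in line with the Exp(rate) convention used earlier in these notes:

```latex
\begin{align*}
R_P(t) &= 1 - \bigl(1 - e^{-2t}\bigr)^2 = 2e^{-2t} - e^{-4t}, \\
F(t) &= 1 - R_P(t) = \bigl(1 - e^{-2t}\bigr)^2, \\
f(t) &= F'(t) = 4e^{-2t}\bigl(1 - e^{-2t}\bigr), \\
h(t) &= \frac{f(t)}{R_P(t)} = \frac{4\bigl(1 - e^{-2t}\bigr)}{2 - e^{-2t}}.
\end{align*}
```

Under this reading h(0) = 0 and h(t) increases toward 2 as t grows. Early on both parts are likely still working so failure is unlikely, while late survivors are most likely running on a single part whose own hazard rate is 2.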

Theorem 10.2-1 $R(t) = \exp\left(-\int_0^t h(s)\,ds\right)$

Example: If h(t) = $e^t$, t > 0, then

R(t) = ______

F(t) = ______

f(t) = _______

10.3 Renewal Processes

Definition 10.3-1. A “renewal process” is a counting process in

which the times between the counted outcomes are iid nonnegative

random variables.

N(t): the number of “renewals” in an interval of length t

“renewal times”: $T_1, T_2, \ldots$, with $E(T_i) = \mu$ and $SD(T_i) = \sigma$.

Ti : time between the (i-1)th and ith renewal.

Define the time of n’th renewal: Sn = T1 + …+ Tn

Theorem 10.3-1.

$P(S_n \le t) = P(N(t) \ge n)$

The lifetime of a printer ribbon T1 ~ Uniform(1, 3) in months. Note

that N(24) = # of ribbon replacement over 24 months. Find:

P(N(24) 15) =

95% confidence interval of N(24) P(What is the number of ribbon

CLT on Sn for large n => CLT on N(t) for large t

Theorem 10.3-2. For large t,

$N(t) \sim N\left(\mu_{N(t)}, \sigma^2_{N(t)}\right) = N\left(\frac{t}{\mu}, \frac{t \sigma^2}{\mu^3}\right)$

approximately.
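A sketch of this approximation for the printer-ribbon example, assuming lifetimes ~ Uniform(1, 3) months (mean 2, variance (3 – 1)²/12 = 1/3) and t = 24 months. The function name is illustrative, and the "95% range" below is simply the central 95% of the approximating normal distribution.

```python
from math import sqrt
from statistics import NormalDist


def renewal_normal_approx(t, mu, sigma):
    """Normal approximation to N(t): mean t / mu, variance t * sigma**2 / mu**3."""
    return NormalDist(mu=t / mu, sigma=sqrt(t * sigma ** 2 / mu ** 3))


mu = 2.0                          # mean of Uniform(1, 3)
sigma = sqrt((3 - 1) ** 2 / 12)   # SD of Uniform(1, 3)

approx = renewal_normal_approx(24, mu, sigma)
low, high = approx.inv_cdf(0.025), approx.inv_cdf(0.975)

print("approximate mean of N(24):", approx.mean)
print("approximate SD of N(24):", approx.stdev)
print("central 95% range:", (low, high))
```

Under these assumptions N(24) is approximately N(12, 1), so about 10 to 14 replacements cover the central 95% range.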

Informal proof

10.4 Maintained Systems

Consider a system that is either up or down, going through up-down

cycle.

$T_U(i)$ and $T_D(i)$: “uptime” and “downtime” for the i’th cycle. Assume both are iid, continuous random variables.

Theorem 10.4-1. $\lim_{t \to \infty} P(\text{system is up at time } t) = \frac{\mu_U}{\mu_U + \mu_D}$

where $E(T_U(i)) = \mu_U$ and $E(T_D(i)) = \mu_D$.

“maintained system”: a system consisting of k components that can

fail independently of one another. Broken components are repaired

on a first-come, first-served basis. Interested in the number of

components that are up at any given time. For this system:

$\lambda_{i,i-1}$ = ___

$\lambda_{i,i+1}$ = ___

where $\lambda$: “failure rate” of an individual component

$\mu$: “service rate”

$\lambda_{i,i-1} = i\lambda$, i = 1, 2, …, k;

$\lambda_{i,i+1} = \mu$, i = 0, 1, …, k – 1;

Behaves like finite-capacity (with at most k customers in the system)

M/M/k system

Solve balance equations to find the steady state distribution of the

number of “up” components
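A sketch of solving these balance equations, assuming each working component fails at the same rate (so the total failure rate with i components up is i times that rate) and a single repair at a time at a fixed repair rate. The function name and the rates in the example are hypothetical.

```python
def up_components_distribution(k, failure_rate, repair_rate):
    """Steady-state P(i components up) for i = 0, ..., k.

    Balance between neighbouring states i - 1 and i:
        pi_{i-1} * repair_rate = pi_i * (i * failure_rate)
    """
    weights = [1.0]  # weight of state 0 relative to pi_0
    for i in range(1, k + 1):
        weights.append(weights[-1] * repair_rate / (i * failure_rate))
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical system: 2 components, failure rate 1 each, repair rate 2.
dist = up_components_distribution(k=2, failure_rate=1.0, repair_rate=2.0)
print(dist)
```

Because there are only k + 1 states, the normalising sum is finite and a steady state exists for any positive rates.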

The system can be extended to the case when there are c (≤ k) technicians working independently of each other.

10.5 Nonhomogeneous Poisson Process and Reliability Growth

In the Bernoulli counting process (Poisson process), what if the

frame success probability (rate) changes over time?

Definition 10.5-1. The counting process N(t) is said to be a

nonhomogeneous Poisson process if

i) N(0) = 0

ii) The counts in nonoverlapping intervals are independent.

iii) There exists a differentiable, increasing “mean function” m(t)

such that m(0) = 0 and

$N(t) - N(s) \sim \mathrm{Pois}(m(t) - m(s))$ for s < t

The “intensity function” $\lambda(t) = \frac{d}{dt} m(t)$

The Poisson process is a special case with m(t) = _______

The number of failures of a system can be modeled by the

nonhomogeneous Poisson process.

“reliability growth” = “$\lambda(t)$ is a decreasing function of t”

A nonhomogeneous Poisson process is called a “Weibull process” if $\lambda(t) = \alpha \beta t^{\beta - 1}$ and $m(t) = \alpha t^{\beta}$

The intensity function $\lambda(t)$ increases with t if _____

The intensity function $\lambda(t)$ decreases with t if _____ (reliability growth)

The time to the first failure T1 ~ Weibull distribution (what

parameters?)

Unlike the renewal process, the time between the (n – 1)th and n’th

failure depends on when the (n – 1)th failure occurred.

____

Example: the daily failures of a new piece of equipment are modeled as a Weibull process with $\alpha$ = 2 and $\beta$ = .5. Find:

P(N(10) > 5) = ________

P(N(20) – N(10) > 5) =_______
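A sketch of how these two probabilities could be computed, assuming the Weibull-process mean function $m(t) = \alpha t^{\beta}$ with $\alpha$ = 2 and $\beta$ = .5. Assigning 2 and .5 to those two parameters is my reading of the example, and the function names are illustrative.

```python
from math import exp, factorial


def mean_function(t, alpha, beta):
    """Assumed Weibull-process mean function m(t) = alpha * t**beta."""
    return alpha * t ** beta


def poisson_tail(n, m):
    """P(N > n) for N ~ Pois(m)."""
    return 1 - sum(exp(-m) * m ** j / factorial(j) for j in range(n + 1))


alpha, beta = 2.0, 0.5  # assumed parameter values

# N(10) ~ Pois(m(10))
p_first_10_days = poisson_tail(5, mean_function(10, alpha, beta))

# N(20) - N(10) ~ Pois(m(20) - m(10))
m_increment = mean_function(20, alpha, beta) - mean_function(10, alpha, beta)
p_next_10_days = poisson_tail(5, m_increment)

print("P(N(10) > 5):", p_first_10_days)
print("P(N(20) - N(10) > 5):", p_next_10_days)
```

With a shape value below 1 the expected count over days 10 to 20 is smaller than over days 0 to 10, so the second probability is the smaller one; that is the reliability-growth pattern.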

STAT/MATH 6310, Stochastic Processes, Spring 2006 Course Note

Dr. Jaimie Kwon

March 8, 2016

Table of Contents

1 Basic probability
  1.1 Sample spaces and events
  1.2 Assignment of probabilities
  1.3 Simulation of events on the computer
  1.4 Counting techniques
  1.5 Conditional probability
  1.6 Independent event

2 Discrete random variables
  2.1 Random variables
  2.2 Joint distributions and independent random variables
  2.3 Expected values
  2.4 Variance and standard deviation
  2.5 Sampling and simulation
  2.6 Sample statistics
  2.7 Expected values of jointly distributed random variables and the law of large numbers
  2.8 Covariance and correlation
  2.9 Conditional expected values

3 Special discrete random variables
  3.1 Binomial random variable
  3.2 Geometric and negative binomial random variables
  3.3 Hypergeometric random variables
  3.4 Multinomial random variables
  3.5 Poisson random variables
  3.6 Moments and moment-generating functions (MGF)

4 Markov chains
  4.1 Introduction: modeling a simple queuing system
  4.2 The Markov property
  4.3 Computing probabilities for Markov chains
  4.4 The simple queuing system revisited
  4.5 Simulating the behavior of a Markov chain
  4.6 Steady-state probabilities
  4.7 Absorbing states and first passage times

5 Continuous random variables

6 Special continuous random variables

7 Markov counting and queuing processes
  7.1 Bernoulli counting process
  7.2 The Poisson process
  7.3 Exponential random variables and the Poisson process
  7.4 The Bernoulli single-server queuing process
  7.5 The M/M/1 queuing process
  7.6 K-server queuing process
  7.7 Balance equations and steady-state probabilities
  7.8 More Markov queuing processes
  7.9 Simulating an M/M/k queuing process

8 The distribution of sums of random variables
  8.1 Sums of random variables
  8.2 Sums of random variables and the CLT
  8.3 Confidence intervals for means
  8.4 A random sum of random variables

9 Selected systems models
  9.1 Distribution of extremes
  9.2 Scheduling problems
  9.3 Sojourns and transitions for continuous-time Markov processes
  9.4 Sojourns and transitions for continuous-time semi-Markov processes
  9.5 Sojourns and transitions for queuing processes

10 Reliability models
  10.1 The reliability function
  10.2 Hazard Rate
  10.3 Renewal Processes
  10.4 Maintained Systems
  10.5 Nonhomogeneous Poisson Process and Reliability Growth