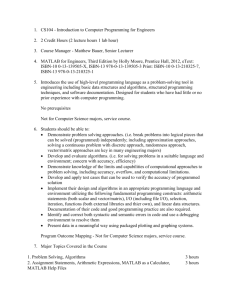

Laboratory 1


Neural Network Models of Cognition and Brain Computation
© W. B. Levy 2004

Laboratory 1 (Spring 2005): Generating random inputs and evaluating some of their statistics

Goals

1. To become familiar with the MatLab environment, including how to execute simple functions;
2. To learn about generating random numbers, seeding the random number generator, and controlling random numbers with respect to mean and variance;
3. To introduce elementary statistical ideas about sample size and convergence of a sample average; and
4. To learn how to produce normalized vectors that will serve as inputs to our neural networks in the future.

Note: You are not required to do a formal write-up for this laboratory, so don't worry about including an introduction, methods, and discussion section. Your grade will be based on your responses to the exercise questions and on the quality of your figures and figure legends. This means your figures should be appropriately labeled and you should give a clear explanation of the significance of each figure. See the In-Class Introduction to MatLab for tips on how to label your figures. Be sure to include the MatLab commands that you used in each exercise in an appendix at the end of your laboratory.

Introduction

Averages are useful to scientists, sports aficionados, politicians, and just about anybody else who wants to do a better job. One way we use an average is to summarize a set of observations and to represent this set. Averages can also be used to generate predictions of future events. Because the brain generates predictions, it may be the case that individual neurons generate predictions. If so, we might suspect that individual neurons base their predictions on some kind of averaging process.

When a process generates events that are not perfectly predictable, we often use statistics to summarize these events and then to predict future events. The most common statistic is the mean. A mean can be calculated for anything that can be measured or counted.
Consider two simple examples of measured events. The weight of a first-year university student is a variable that can be measured. If we calculate the mean weight of 1000 students, we can use this value to predict the average weight of a different sample of 1000 first-year students. Indeed, we can use this average to predict the weight of a single student. As a neural example, we can estimate the number of neurons in cerebral cortex of a mouse. This number differs among individual mice no matter how homogeneous their breeding. Despite this variability among individuals, we can calculate the mean number of neurons in one sample of mice and use this average to predict the number of neurons in a mouse that is not part of this sample. Although this prediction will not be perfect, we are likely to guess closer to the correct value than if we were to guess without knowing this average value.

Events can also be averaged in terms of their frequency over time. For example, we can't measure a person walking into the student union in the same sense that we can quantify that person's weight. But we can count the number of people walking into the union between 3 and 4 PM. Thus, if 126 people come into the union over a 60-minute period, then we can conclude that, on average, 2.1 people enter every minute. Such averages are also useful in neuroscience. For example, we can estimate the rate at which neurons fire action potentials, or spikes, by counting the number of spikes that occur during a particular time interval.

Simple Averages

In describing averages, we must distinguish, in a simple way, between the population mean and the sample mean. Both values can be called means: we can think of the population mean as the "true mean" or the "predicted mean" and the sample mean as the "empirically observed mean."
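In symbols, the distinction can be sketched as follows. The notation ($x_i$ for the individual observations, $n$ for the sample size, $\bar{x}_n$ for the sample mean, and $\mu$ for the population mean of a random variable $X$) is introduced here for illustration and is not the handout's own.

```latex
\bar{x}_n = \frac{1}{n}\sum_{i=1}^{n} x_i ,
\qquad
\mu = E[X]
```

The sample mean is computed from the $n$ values actually observed, so it changes from sample to sample; the population mean is a fixed property of the process that generates the values. The student union example is a sample average of this kind: 126 arrivals counted over 60 minutes give 126/60 = 2.1 people per minute.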
The population mean of MatLab's [1] random number generator (rand(), which returns a value on the interval [0,1)) approaches the value 0.5. However, because of the limited decimal precision of the underlying computational hardware and because the sample mean of a random variable is itself a random variable, this mean never reaches 0.5. As you will see below, the sample mean of the random number generator will almost certainly differ from the population mean. The rate at which a sample mean approaches the underlying population mean (assuming this theoretical value is finite and exists in a stationary form) is of interest if we are going to use a sample mean for prediction.

[1] Refer to 'In-Class Introduction to MatLab' for an overview of these commands.

When it comes to predicting future events, averages have optimal predictive properties. Because neurons are in the business of prediction, 1) we can hypothesize that synapses construct some kind of average as they incorporate information from the neuronal activity they experience, and 2) even if this is not the case, we can at least compare the behavior of a biological neuronal system to a model computational system that does construct such averages.

Finally, empirical averages are single values that summarize many observed events from the past. In this sense, these averages are a form of memory, and they are an example, perhaps the simplest example, of abstraction. Memory and abstraction are surely cognitive processes worthy of study and understanding.

Convergence in Mean

Convergence starts out as a simple mathematical idea. For example, 1/x converges to 0 as x grows larger. Mathematicians have taken this idea of convergence and applied it in a special way to a random variable. For random variables and functions of such variables, the only really sensible and interesting thing we can say about them concerns how certain computations converge. What is most interesting to us is the convergence in mean or the mean of a function. In fact, this is probably the most we will ever want to say about many random variables.

An important parameter that controls convergence of a function of a random variable is sample size. For example, if a stationary random process generates a value of variable x with uniform probability on the interval [0,1), then the mean value of x should converge to 0.5 if there are enough samples from this process. [2]

Section 1.1: Generating random numbers

Neural network modelers need more than just a model of the brain; they also need a model of the world that provides inputs to the brain. Building this model of the world is part of the art (and science) of attempting to understand the biological basis of thought by quantitative modeling. To be successful, such modelers need to recreate the paradigmatic essence of the problems confronting living organisms on a day-to-day, moment-to-moment basis. In other words, here we'll use computer simulations to understand how neural computations can yield cognition.

To perform such simulations, we will need inputs (what we call input environments) to present to our neural-like systems. It might seem desirable to use real world inputs, but it is not always feasible to do so. Using real world inputs would require networks that are too large and too complex for beginners (and often too complex for any research lab in the world). Instead, we will create artificial worlds that are, in some specific aspects, prototypical of the real world problems that humans actually solve.

A probability model is often the preferred way to create an artificial world. A probability model assumes that the real world is based on random variables with one or more underlying probability generators.
A good analogy is the probability generator of MatLab: this generic generator is governed by a deterministic but chaotic process. Interestingly, chaotic processes might also be the basis of randomness in the real world.

Let's use MatLab's random number generator to create a three-dimensional column vector that can be used as an input to a network. Enter the command

>>VectorA=rand(3,1)

This command generates an ordered list of three pseudo-random numbers. This ordered list, or vector, is in a special order with three rows and one column corresponding to the (3,1) of the rand() command [3].

[2] As you'll see in Laboratory 3, convergence in mean can actually move away from the asymptotic value so that, from sample to sample, convergence is not uniform. (The rate of approach to the mean is also known as the rate of convergence). When this happens, it is helpful to know how much we should trust our sample mean as an approximation of the population mean as sample size increases or decreases.

[3] You should memorize this row-by-column notation; it is the standard of linear algebra and is important for taking full advantage of MatLab's capabilities. If your notation is not consistent, you will get errors when you try to implement commands that require the proper dimensions of input vectors and matrices.

In addition to generating a column vector of three random numbers, the rand() command also assigned this vector to the variable named VectorA. Enter VectorA to convince yourself of this fact; i.e., ask MatLab what, if any, values are currently assigned to VectorA by typing the following:

>>VectorA                %VectorA will contain this set of numbers until you
                         %clear VectorA, set VectorA equal to something else,
                         %or exit MatLab

MatLab will print the three-dimensional column vector of random numbers to the screen.
Then you can clear the vector and confirm that it no longer holds any values by typing:

>>clear VectorA          %unassign VectorA
>>VectorA                %VectorA no longer implies any set of values

Now MatLab will print no values. Because clear removes the variable entirely, MatLab instead reports that VectorA is undefined.

There are many different generating processes that depend on the probability distribution used to generate random numbers. MatLab's rand() command picks random numbers lying between zero and one with a uniform (i.e., the same) probability for picking any particular number. You can, however, alter what this generator produces. You will learn how to shift and rescale the random number generator in section 1.3.

Convergence of Averages

If an infinite number of samples could be chosen, the mean of these random numbers should be just less than 0.5, and the variance should be 0.0833 (i.e., 1/12). Let's see what happens with finite sampling.

Exercise 1.1.1.

Generate sets of 10, 100, 1,000 and 10,000 random numbers and calculate the mean, variance, and standard deviation of each set.

>>VectorB = rand(10,1);  %generate a column vector of 10 random numbers
                         %and suppress output; set VectorB equal to this
                         %set
>>mean(VectorB)          %generate the mean value of the elements in the
                         %column vector named VectorB; this is the sample
                         %mean of the elements in the vector
>>var(VectorB)           %generate the variance of the elements in
                         %VectorB
>>std(VectorB)           %generate the standard deviation of the elements
                         %in the column vector named VectorB

Now generate 100 random numbers and find their mean and standard deviation. Do this five times. What are the mean and standard deviation of these five means? Do the same for five sets of 1,000 random numbers, and then for 10,000. Comment on how close the means of each sample come to what you would predict the mean to be as a function of sample size.

Exercise 1.1.2.

Now generate 1,000 random numbers and calculate the mean of the sample using a 'for' loop instead of MatLab's mean command. A 'for' loop is a programming construct which performs the instructions it contains a specified number of times. In other words, update the mean as you iterate through the elements in the vector, starting at element 1 and ending at element 1,000. Plot the mean as a function of sample number and comment on how the mean changes as you iterate through the sample.

>>VectorC = rand(1000,1);    %generate a column vector of 1000 random numbers
                             %and suppress output; set VectorC equal to this
                             %set
>>CurrentAve=0;              %initialize the current average to zero
>>for I=1:1000
    CurrentAve=(CurrentAve*(I-1)+VectorC(I,1))/I;
                             %update CurrentAve: weight the value of
                             %CurrentAve at the previous timestep by (I-1),
                             %add the Ith value of VectorC, and divide by I,
                             %the sample number
    StoredAve(I,1)=CurrentAve;
                             %StoredAve contains a history of CurrentAve
  end
>>plot(StoredAve)            %the plot command is placed outside
                             %the loop to speed the program

The tiny 'program' above need not be beautifully formatted when you enter it into MatLab, but formatting programs with indentation improves a program's readability and will help you to find problems with longer programs.

Check that this program is actually working. Use the command

>>mean(VectorC(1:N,1))

to calculate the mean of the first N values stored as part of the vector named VectorC (for any N from 1 to 1000), and compare it with StoredAve(N,1). Explain why the averaging algorithm works. (Hint: Write the formula for an average as you have been taught, $\bar{x}_n = \frac{1}{n}\sum_{i=1}^{n} x_i$, and show equality to the algorithm).

It's always important to check the quality of any random number generator because none of those available is truly random. Uniformity means that the generator supposedly produces values from [0,1) with equal probability for each value. A proper statistical check is beyond the scope of this course, but there is one way to take a quick look.
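Returning briefly to the running average of Exercise 1.1.2, the check suggested there can be done for every sample number at once. The following is a minimal sketch, not the handout's own code: the names DirectAve and MaxDifference are introduced here for illustration, and StoredAve is preallocated with zeros(), which the original loop does not do.

```matlab
% Sketch: compare the incremental (running) mean with a direct calculation.
VectorC = rand(1000,1);            % column vector of 1000 random numbers
StoredAve = zeros(1000,1);         % preallocate the history of the running mean
CurrentAve = 0;                    % initialize the current average to zero
for I = 1:1000
    % same update rule as in Exercise 1.1.2
    CurrentAve = (CurrentAve*(I-1) + VectorC(I,1))/I;
    StoredAve(I,1) = CurrentAve;
end

% Direct running mean: cumulative sum divided by the sample number
DirectAve = cumsum(VectorC) ./ (1:1000)';

% Largest disagreement between the two calculations (round-off only)
MaxDifference = max(abs(StoredAve - DirectAve));
```

If the loop is correct, MaxDifference should be no larger than floating-point round-off, and StoredAve(1000,1) should agree with mean(VectorC).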
A histogram is a convenient way to look at the distribution of many scalar, random numbers. A histogram is a bar graph which has been automatically constructed by a numerical categorization rule called 'binning'. For example, suppose there is a set of 1000 elements where the values of the elements range from 1 to 100. MatLab's default number of bins for the graph is 10, that is, there are 10 categories or 'bins'. The number of elements in each bin is plotted as an individual bar. MatLab's hist command will count the number of elements which fit into each bin and then display these counts as a bar graph. Ordinarily, the bins are evenly spaced (width of a bin = range of data/number of bins), but this does not have to be true. MatLab easily generates histograms using the following code:

>>rand('seed', 9771877)              %seed random number generator
>>VectorZ = rand(10,1);              %VectorZ is a column vector of 10
                                     %random numbers; screen printing is
                                     %suppressed by the semicolon
>>subplot(1,2,1),hist(VectorZ)       %this subplot generates the 1st of two
                                     %absolute frequency histograms; here a
                                     %histogram of the 10 values using the
                                     %default number of bins, 10, and each bin
                                     %has a range of 0.1
>>subplot(1,2,2),hist(VectorZ,8)     %this subplot generates the 2nd of two
                                     %absolute frequency histograms; this one
                                     %has 8 bins

Fig. 1.1 illustrates the plots created by the code above. These types of histograms are called absolute frequency histograms, but they can be converted into relative frequency histograms by dividing the individual bin counts by the total of all bin counts. With enough (infinite) samples, a relative frequency plot reproduces the underlying probability distribution that was used to generate the random numbers. MatLab easily generates relative frequency histograms as the following code illustrates (see Fig. 1.2).
In the next set of commands, you use a command called 'figure.' Figure opens a blank figure window and is important because it will allow you to open several figures at once. Without opening another figure, MatLab would overwrite your previous figure.

>>figure                                     %start the second figure (Fig. 1.2 below)
>>[BinCounts,BinLabels] = hist(VectorZ)      %BinCounts is assigned the number
                                             %of elements in each bin in the
                                             %histogram and BinLabels is
                                             %assigned the center of each bin in
                                             %the histogram
>>RelativeFreq = BinCounts/sum(BinCounts)    %RelativeFreq is BinCounts
                                             %normalized by the division of
                                             %BinCounts by its sum
>>bar(BinLabels,RelativeFreq)                %creates a relative frequency histogram
                                             %from the values in RelativeFreq using the
                                             %bar command

Fig. 1.1. Absolute frequency histograms of 10 uniformly distributed, random numbers. The absolute number of random numbers generated is plotted in each bin. In A, there are 10 bins, each of size 0.1. Bin 0.5-0.6 is empty because no random numbers were generated in this range. In B, the same 10 random numbers are plotted as a histogram using only 8 bins. You can see how changing bin width alters the absolute frequency histogram.

Fig. 1.2. Relative frequency histogram (bar graph) of the data plotted in Fig. 1.1A. Fig. 1.2 uses the information in 1.1A to create a probability distribution in the form of a relative frequency histogram. As the number of generated values increases, a relative frequency histogram will approach a uniform random distribution.

Exercise 1.1.3.

Compare the relative smoothness of the histograms and relative frequency plots when 10, 100, 1000, and 10,000 random numbers are used. How does bin width affect this smoothness? Be sure to consider absolute values of bin counts as well as relative values.
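One possible way to organize the comparison in Exercise 1.1.3 is to compute the relative frequencies for all four sample sizes in a single loop. This is a minimal sketch under stated assumptions: the names SampleSizes, NumBins, Sample, and RowSums are introduced here for illustration, and the number of bins is fixed at 10 only to match the default described above.

```matlab
% Sketch: relative frequency histograms for increasing sample sizes.
SampleSizes = [10 100 1000 10000];
NumBins = 10;                                   % same as the default for hist
RelativeFreq = zeros(numel(SampleSizes), NumBins);

for K = 1:numel(SampleSizes)
    Sample = rand(SampleSizes(K),1);            % uniform random numbers on [0,1)
    [BinCounts, BinLabels] = hist(Sample, NumBins);
    % divide the bin counts by their total to get relative frequencies;
    % (:)' forces a row so it fits into row K of RelativeFreq
    RelativeFreq(K,:) = BinCounts(:)'/sum(BinCounts);
end

RowSums = sum(RelativeFreq, 2);                 % each row should sum to 1

% To view the last one: bar(BinLabels, RelativeFreq(K,:))
```

Each row of RelativeFreq holds one relative frequency histogram, so the rows can be plotted side by side with subplot and bar. Changing NumBins and rerunning gives the bin-width comparison the exercise asks about.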
Section 1.2: Seeding the random number generator

Suppose that, as an afterthought, you want to determine the mean and variance of a set of random numbers. Each set of values must be called by a unique name. If you used the variable VectorZ to store three different vectors (with elements numbering 10, 100, and 1000) in the last exercise, then the first two vectors (i.e., those with 10 and 100 elements) are lost forever and you cannot recreate them. This lack of reproducibility can be a problem if you want to compare simulations of two different models given the same sequence of inputs, especially if you forget to save the inputs.

Fortunately, there is a standard procedure for avoiding this problem. Because computer-generated random numbers are, properly speaking, pseudo-random numbers, we can generate the identical sequence whenever we want so long as we start the generator the same way every time. The initial value of the generator is controlled by a seed value using the command

>>rand('seed', 1204513528)   %1204513528 is an example of a seed value

Try the following sequence of commands:

>>VectorX = rand(4,1)        %generating a column vector of 4 random numbers twice
>>VectorX = rand(4,1)        %generates two different sets of random numbers
                             %because the random number generator is not started
                             %the same way both times
>>rand('seed',16)            %try it again; this time seed the generator with the
>>VectorX = rand(4,1)        %same number both times
>>rand('seed',16)            %here we happen to use 16 as the seed value
>>VectorX = rand(4,1)        %now the same set of random numbers is generated twice
                             %because the random number generator was started the
                             %same way twice

Important Tip: Remember from the in-class introduction to MatLab that it is important to seed the random number generator at the beginning of each lab and more often if desired.
Your seed must be unique; use a value like your social security number or a simple perturbation of it. In any case, do not lose your seed values. Without them, you can't reproduce your results, and a non-reproducible result is not useful.

Section 1.3: Relocating and Rescaling the Random Number Generator

The default range for the command rand() is [0,1). You will often need a sample of random numbers with a different range. By adding or subtracting a constant, you can move the range, and by multiplying or dividing, you can change the width of the range. The following exercises will teach you how to move and rescale the random number generator.

Exercise 1.3.1.

Here you will generate random numbers on intervals that are different from the [0,1) MatLab default. First, generate nine random numbers on the interval [-.5, +.5).

>>rand(9,1)-.5           %subtract 0.5 from each random number to relocate the
                         %default interval

Basically, what you are doing here is generating 9 random numbers between 0 and 1 and then subtracting .5 from each one to shift the scale. Now generate five random numbers on the interval [0, .5).

>>(rand(5,1))/2          %contract the interval by dividing by 2; this also
                         %relocates the interval

Here, you are generating 5 random numbers between 0 and 1 and then dividing each one by 2 to reduce the range of the scale to [0, .5). How do the mean and variance of the 5 elements in this sample differ from those obtained with the default range of [0,1)? Now compare the estimated mean and variance for the two different ranges sampled above. For a uniform distribution with range [a,b) and an infinite number of samples, the mean is $(a+b)/2$ and the variance is $(b-a)^2/12$. Your observations will be a little different than you might expect because you are using a finite number of samples.

Exercise 1.3.2.

1. Create four different data sets using the uniform distribution and samples of 4, 16, 256, and 8192 random numbers over the interval [-1,1). (Hint: first expand the interval to the desired width and then shift its location).
2. Plot the results as histograms and comment on how well the results approximate the uniform distribution.
3. Calculate the means and variances for each set and compare these values to the theoretical ones. As the number of samples increases, how do your results compare to any observed or expected trend?
4. Comment on the rate of convergence as a function of sample size. Plot the means and variances as the sample size increases. Do these measures converge as sample size increases? (Note: the subplot command will make for a more compact set of plots).

Hint: You will need to create vectors to record the mean and variance for different sample sizes. This is much like recording the history of the average, and you could use a 'for' loop to help.

Exercise 1.3.3.

Compare the variances, means, and histograms for sample sizes of 10 and 1,000 random numbers. Use three different functions of the rand() command:

1. rand()
2. 2*rand()
3. 1+rand()

Plot the results as histograms and rank order the means and variances by size. Explain how the distribution of the histograms reflects the rank orders. Also, plot the variances as a function of sample size. How does the variance change as sample size increases? If you could sample long enough, to what value would each mean converge? Discuss the relationship between number of samples and convergence.

Optional: The command rand+rand produces an interesting result; compare it to the 2*rand command.

Section 1.4: Normalization

Subsequent laboratories will not work properly unless you normalize input vectors and synaptic weight vectors.
Normalization is a technique used to standardize the length of a vector so that all vectors are of the same length. The idea of normalization also has some biological merit. A condition typically enforced on the inputs to a neural network is normalization of a multidimensional input or, in more realistic models, approximate normalization. Normalization makes pattern recognition much easier. The idea is that the absolute strength of a signal often says nothing about its meaning. It's like playing the radio at two different volumes: the songs, weather, and news reports are the same, but the signal is louder in one case. Similarly, if we see an object in dim light or in bright light, we still see the same object, and we can scale for the amount of illumination. Indeed, there are neurons sensitive to global light levels (and even to global contrast) that make such scaling not only sensible but also feasible for the nervous system.

We will use Euclidean normalization for our purposes in this class. We call it Euclidean because we use Euclidean geometry to define the measure of length. The Euclidean distance between two vectors X and Y is defined as $\sqrt{(X-Y)^T (X-Y)}$. For a right triangle* with legs a and b and hypotenuse c, the length of c is $\sqrt{a^2 + b^2}$. For a two-dimensional vector $X = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$, the distance of this point from the origin is the Euclidean length of the vector, $\sqrt{x_1^2 + x_2^2}$.

Normalization makes all vector lengths equal to one. Thus,

$\frac{X}{\sqrt{x_1^2 + x_2^2}} = \begin{bmatrix} x_1 / \sqrt{x_1^2 + x_2^2} \\ x_2 / \sqrt{x_1^2 + x_2^2} \end{bmatrix}$

is certain to be length one. The idea easily generalizes to any number of dimensions. For example, in three dimensions, $X = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}$ and normalized it is $\frac{X}{\sqrt{x_1^2 + x_2^2 + x_3^2}}$.

You will recognize the denominator if you look carefully at the definition of Euclidean distance $\sqrt{(X-Y)^T (X-Y)}$. Consider the multiplication of a vector by its transpose:

$x^T x = \begin{bmatrix} x_1 & x_2 & x_3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = x_1 * x_1 + x_2 * x_2 + x_3 * x_3 = x_1^2 + x_2^2 + x_3^2$

The square root of this quantity is exactly what you need in the denominator when you normalize your vector.
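A small worked example may make the recipe concrete. The numbers below are chosen here purely for illustration (they do not come from the handout) because they give a whole-number length.

```latex
X = \begin{bmatrix} 3 \\ 4 \end{bmatrix}, \qquad
X^T X = 3^2 + 4^2 = 25, \qquad
\sqrt{X^T X} = 5, \qquad
\frac{X}{\sqrt{X^T X}} = \begin{bmatrix} 0.6 \\ 0.8 \end{bmatrix}, \qquad
0.6^2 + 0.8^2 = 0.36 + 0.64 = 1
```

The normalized vector points in the same direction as the original, but its length is one, as the final sum of squares confirms.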
To visualize this idea, we consider normalization to the all-positive orthant of the hypersphere (equivalent to quadrant one in two dimensions). A circle is a two-dimensional hypersphere, and a sphere is a three-dimensional hypersphere. In general, hyperspheres are in higher dimensions than we can depict pictorially. Nonetheless, they exist as geometric objects, and they are very important in neural network theory.

* It is conventional to describe the position of a point relative to axes at right angles to each other.

So what does normalization to the all-positive orthant of the hypersphere mean in two dimensions? In this case, the data points lie on the positive quadrant of the unit circle centered at the origin (see Fig. 1.3). To make this happen, you need a vector of non-negative elements and the sum of the squares of the elements of the vector must equal one.

Fig. 1.3. A two-dimensional hypersphere, or circle. The outlined quadrant is quadrant one, or the all-positive orthant of the hypersphere.

The remainder of this lab illustrates two ways to create input sets that are normalized. To see how to normalize to the unit circle, we first need to generate and display 50 random, 2-dimensional data points. The following lines of code do just that (see also Fig. 1.4).

>>dimension1 = rand(50,1);           %generate 50 random numbers on [0,1) and
                                     %do not print
>>dimension2 = rand(50,1);           %generate 50 random numbers on [0,1) and
                                     %do not print
>>plot(dimension1,dimension2,'o')    %plot unconnected 2-D points as o's as
                                     %shown in Fig. 1.4
>>axis('square')
>>axis([0,1,0,1])

Now we'll normalize the set of 2-dimensional data points that was just generated. Remember that normalization can occur for any dimension. One way to perform this normalization is as follows.
For the unit circle,

$x_1^2 + x_2^2 = 1,$

where x1 and x2 are the coordinates on the abscissa and ordinate for each pair of data points, respectively. We can use these two equations, as the bit of MatLab code below shows, to calculate two new vectors which, when plotted as pairs, fall on the unit circle (see Fig. 1.5). In other words, this bit of code uses MatLab's period operator to help normalize the 50 pairs of data points to the unit circle.

Example: Normalizing vectors

Here is an example where we generate a single three-dimensional input and normalize it to unit length (the three-dimensional counterpart of the unit circle):

>>x = rand(3,1)                  %generate 3 random numbers as a column vector
>>ssx2 = x'*x                    %multiply x by its transpose to get the inner
                                 %product, which is a scalar
>>normalizedx = x/sqrt(ssx2)     %normalize the original vector by dividing each
                                 %of the original random numbers by the square
                                 %root of this scalar
>>normalizedx'*normalizedx       %check to see if the new vector has length
                                 %one; the result should be 1

Now we can try plotting a normalized vector so that we know how to visualize such data.

>>dimension1=rand(100,1);        %create each dimension as 100 random numbers
>>dimension2=rand(100,1);        %in a column vector
>>figure                         %start the first figure
>>plot(dimension1, dimension2, 'o')
                                 %plots unconnected pairs of 2-D points as o's
>>axis('square')                 %forces the axes to plot at the same scale
>>axis([0,1.1,0,1.1])            %forces the plot to include the origin &
                                 %the value 1.1
>>figure                         %start the second figure
>>NormalizationValue=sqrt(dimension1.^2+dimension2.^2);
                                 %this is the square root of the sum of the
                                 %squares, calculated individually for each
                                 %pair of elements of the two column vectors;
                                 %the period signifies an element-wise operation
                                 %that is not part of linear algebra
                                 %(see also >>help punct)
>>plot(dimension1./NormalizationValue,dimension2./NormalizationValue,'.')
                                 %plots unconnected pairs of 2-D points as dots
>>axis('square')                 %forces the axes to plot at the same scale
>>axis([0,1.1,0,1.1])            %forces the plot to include the origin &
                                 %the value 1.1

The above code will generate plots similar to those in Figures 1.4 and 1.5.

Fig. 1.4. Scatterplot of the pairs of 50 random numbers before normalization to the unit circle. Note how the points are scattered all over the graph. The vector named dimension1 consists of n uniformly distributed random values dimension1(1), dimension1(2), ... , dimension1(n). Similarly the vector dimension2 also has n uniformly distributed random values, where n = 50. The data points plotted here are pairs of values (dimension1(i), dimension2(i)), where 1 ≤ i ≤ n; e.g. the first point is (dimension1(1),dimension2(1)).

Fig. 1.5. The same 50 data points are normalized to the unit circle. The normalized vector, Dimension1, is called Normalized Dimension1; the normalized vector, Dimension2, is called Normalized Dimension2. Note the distribution of the data points along the arc of the unit circle.

Exercise 1.4.1.
Create two dimensions of 200 random numbers each and normalize them to the unit circle. First take these same data and plot the sequential values of each dimension. Then plot them one against the other. Be sure to square the axes of your plot.

Note: The idea of normalization is important enough that you might be quizzed on this at the beginning of the next lab. Most importantly, remember that normalization makes all vector lengths equal to one.

Section 1.5: Generating uniformly random points on an arc of a circle

This section will help you learn how to generate the input sets that will be used in the next laboratory. As you may have noticed, it might be difficult to generate points with uniform probability on an arc smaller than 90° (π/2 radians). Generating points directly on the circle makes it easy. The key is to think in terms of the circumference of the unit circle. By definition, a unit circle has a radius of one. Calling radius r, the circumference of any circle is 2πr. For the unit circle, the circumference is 2π. This simple idea is used to generate points in a uniform way on the unit circle or an arc of that circle.

If we think of the entire 2π circumference of a circle as an arc of 360°, then we can translate any angle to an arc length. The angle of interest has its vertex at the origin. (Note that arc lengths are in units called radians). The arc length of a specified angle can be calculated as:

$\text{arc length of angle } x = \frac{x \text{ degrees}}{360 \text{ degrees}} \times 2\pi$

The arc length from 0° to 90° is ¼ of the circle and equals (90/360)*2π or π/2 radians.

Suppose we want to generate 100 uniform random values over an arc of 90°. (Recall that 90° = π/2 radians).
Then

>>RandPoints=rand(1,100)*pi/2;   %generate 100 random points from 0
                                 %to π/2 and suppress printing; here
                                 %we use the MatLab command, pi

The points are now uniformly distributed over the desired range. Plotting these points requires trigonometry to convert a position on the unit circle to a point specified in rectangular coordinates. The following bit of MatLab code will plot the points, and it is another way to normalize to the unit circle.

>>dimx1=cos(RandPoints);
>>dimx2=sin(RandPoints);
>>plot(dimx1, dimx2, '.')
>>axis('square')
>>axis([0,1.1,0,1.1])

For a right triangle, remember that sin(θ) equals the side opposite divided by the hypotenuse and that cos(θ) equals the side adjacent divided by the hypotenuse. On the unit circle the hypotenuse is 1, so

$$\sin\theta = \frac{\text{side opposite}}{\text{hypotenuse}} = \frac{x_2}{1} = x_2, \qquad \cos\theta = \frac{\text{side adjacent}}{\text{hypotenuse}} = \frac{x_1}{1} = x_1$$

Thus the cosine and sine of a point on an arc locate its position in terms of the abscissa and ordinate. Because MatLab requires that we use radians as the units, no further conversion is necessary given that the random points are in radians.

Exercise 1.5.1.
1. Generate 100 points that are uniformly distributed on the all-positive portion of the unit circle.
2. Generate points uniformly for the arc that goes from 30° to 60° (π/6 to π/3 radians). Recall the scaling and shifting technique introduced in Section 1.3.

Section 1.6. Possible Quiz Questions

How would you use a 'for' loop in MatLab to update the current average as you iterate through the elements in a vector?
How does a relative frequency histogram differ from an absolute frequency histogram?
Why is it important that you seed the random number generator?
How would you shift the range in which random numbers are generated in MatLab from the default [0,1) to [-1,-0.5]?
Explain why it is important to normalize your vectors before using them as inputs to your network.
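The two claims made in Section 1.5 (that a 90° angle corresponds to an arc length of π/2, and that points built from cos and sin already have length one) can be checked numerically. The following is only a minimal sketch: the names angleInDegrees, arcLength, vectorLengths, and maxLengthError are illustrative assumptions and do not come from the laboratory itself.

```matlab
% Sketch only: the variable names below are assumptions made for illustration.
angleInDegrees = 90;
arcLength = (angleInDegrees/360) * 2*pi;    % arc length rule from Section 1.5

RandPoints = rand(1,100) * arcLength;       % 100 random angles between 0 and pi/2
dimx1 = cos(RandPoints);                    % abscissa of each point
dimx2 = sin(RandPoints);                    % ordinate of each point

% every point should lie on the unit circle, so each length should be 1
vectorLengths = sqrt(dimx1.^2 + dimx2.^2);
maxLengthError = max(abs(vectorLengths - 1));
```

If maxLengthError is not essentially zero (on the order of the machine precision), something has gone wrong in the conversion from angles to rectangular coordinates.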
Appendix 1.1. Programming technique and debugging⁴

⁴ Thanks to Jason Meyers and Andrew Perez-Lopez for contributions to this appendix.

Let's put things into perspective. Suppose you are building a house and a sloppy contractor will pour your foundation for $10,000, but it will probably be crooked. On the other hand, another, very careful foundation contractor who works a little slower wants to charge you $20,000, but you will get a square and level foundation. Building the rest of the house on the square, level foundation costs $100,000, but building the house properly on the crooked foundation will cost you $130,000. Now which contractor do you choose?

When it comes to programming, your time is your cost. It is almost a certainty, especially for larger programs (and a large program is a relative thing), that you will spend more time debugging than the initial time spent programming. Thus, it makes sense to spend a little extra time writing the program from the start because it can easily save you a lot more time at the debugging stage.

Debugging is often the hardest part of writing a complex program. Small typos, misspelled variables, improper use of functions, and in fact just about anything can cause a well-conceived program to run incorrectly. The trick is to find the errors quickly and get the program working with a minimum amount of rewriting. There are many ways to lessen the number of errors in your program code, and there are several ways to trace the errors once the code is written. Here we discuss both.

Here are some ways to avoid making errors when you are writing code:

Make your code legible. Ideally, your code should be as close to regular English as possible. For example, suppose you want to write a nested for loop to print each number in a weight matrix.
The following code will work:

for i=1:nr
    for j=1:nc
        x(i,j)
    end
end

but who can understand what this code fragment is doing? The following will be much more useful in the long run and lead to easier debugging.

% go through each value in the weight matrix and print it out
for rowIndex = 1:numberOfRows
    for columnIndex = 1:numberOfColumns
        weightMatrix(rowIndex,columnIndex)
    end
end

If the first set of code were positioned in the middle of a page, perhaps nested in another loop, it would be extremely confusing, while the second set of code would be clear. Using longer variable names can be taxing on the fingers, but with the copy, cut, and paste functions nedit provides, it's not so bad, and it makes much less work for your brain in the long run.

Write the code with lots of comments. After each line, write what any new variable is to be used for, and what the calculation is going to do. This will make it much easier to debug later, and those who read your code will be able to understand what your program is doing. Far and away the hardest thing to do when you are trying to read code that you've written, or especially code written by someone else, is to figure out what the purpose of a certain process or variable is.

Use descriptive variable names. Choose variable names that describe what they represent. For example, if you have a variable that represents the number of neurons in your network, call it number_of_neurons instead of something generic, like x. Descriptive variable names are crucial to the process of writing any code more than five or ten lines long.

A note about variable declaration: MatLab allows what computer science jargon calls 'implicit' declaration of variables. Basically, this means that you do not have to tell MatLab when you want to make a new variable; you can just start using it. Not all programming languages share this feature.
While implicit declaration of variables is sometimes very easy to understand, with long code it can sometimes complicate debugging. If you see a new variable when trying to trace through your code to find a bug, you often try to figure out what the variable means. It may be that the variable had no meaning until this particular line of code. This makes it tough to figure out what the code does. Thus, it again pays to use descriptive names for variables.

Make your code more 'robust.' If you are writing a program that will be reused with different parameters (such as numbers of neurons or percentages of connectivity), write the original program with variables like number_of_neurons and number_of_synapses in place of what are called 'magic numbers,' i.e., constants like 256 or 26. If you do this, then you can just copy and paste whole blocks of code, changing only those lines that define the number of neurons and the number of synapses. Many errors come from copying and pasting lines and then missing a few lines when making the changes for a new network.

Write your code in small, reusable chunks. If you write your code piece-by-piece, it will be easier to locate errors and, once you have debugged these pieces, you can reuse them in future programs. For example, you can write a generic piece of code to calculate the inner excitation of a neuron (you might even create a function to do this). Once it is debugged, it can be used for all networks that need this inner excitation calculated.

Do not write your program all at once and then try to run it. Run the pieces as you write them. Try each function separately. Check the output at every step. Is it doing what you thought? Do the inputs and outputs make sense? Are they the size you would expect? It is much easier to debug small bits of code than an entire network.
Test subroutines using extreme values. If a variable can vary between 0 and 1, test at the extreme of 0 and then at the other extreme of 1. Then put the subroutines together and rerun the same test values.

Use the clear command. If you frequently reuse some variables (e.g., input(x) or output(y)), the current implementation of the network can fail if a previous network had a different size input or output, or if the previous network left lots of data still in the matrices. To eliminate this problem, just include clear variable_name in your code. This command will erase all data in the stated variables so that the next running of the network can start fresh. Along with this, it is good to include a clear all statement at the very beginning of a long program, so that all of the variables are cleared off, the memory is wiped clean, and the program has a fresh slate. If you don't have to carry any variables over between network models, you can clear all between each network. In the absence of proper clear statements, outputs from previous runs can mess up current runs.

Here's a suggestion that may just kill two birds with one stone. At the beginning of your code, write a clear statement for each variable you are using and also state what the variable does. For example, the code used above might now look like this:

clear rowIndex          %loop variable to specify the current row
clear numberOfRows      %the number of rows in my weight matrix
clear columnIndex       %loop variable to specify the current
                        %column
clear numberOfColumns   %the number of columns in my weight
                        %matrix
clear weightMatrix      %a matrix containing all the weights for
                        %my network
%………………………………………………………………………………
%the rest of your code goes in between the part above & the loop
%below
%………………………………………………………………………………
for rowIndex = 1:numberOfRows
    for columnIndex = 1:numberOfColumns
        weightMatrix(rowIndex,columnIndex)
    end
end

This method makes sure that all your variables are cleared out and provides a central location where you can find out what all your variables mean. You might be thinking, "I know what my own variables mean," but when the code gets long, and you come back to code written weeks before, it gets tricky! Doing this generally makes your code more readable to yourself and to any others who may feel inclined to read it.

For small debugging runs, leave out the end-of-line semicolons. When trying some new code for the first time, it's really useful to see how the data are changing. If you leave out the ; at the end of each line, MatLab will display the variables as they change. This is not very useful for 100x100 matrices, but it is really good for seeing how counters, excitations, etc. are changing.

Once the program is written, you think you have finally solved the tough problems, mapped out a solution, and got it all coded in, but then you run the network and it grinds to a halt. Ack! What now?

If the program stops running because of an error, look at the error message and at the line in the program where the error is. Do you have the number of inputs required by the called function? Check help function_name to see what the function needs. Are all of the variables the size you think they will be? When MatLab stops for an error, all of the variables are still stuck in memory (this is another good reason to include clear statements every once in a while). Type the variable names and see if they have the data that you thought they would.

Make sure your vectors and matrices are the right size. Many an error comes from forgetting whether a matrix has 26 rows and 256 columns or 256 rows and 26 columns!
If you have comments in the code telling you what the matrix size should be, then it's easy to make sure it's the expected size.

Alas, sometimes the real error in a program is many lines before the line where MatLab detects it. For this problem, it is useful to use a debugger, which will stop the program where you want it to stop and let you run through it line-by-line. MatLab includes a debugger. Here are some of its basic commands (use help debug for more information):

>>dbtype mfile
or
>>dbtype mfile start:end
Lists the specified m-file with line numbers for debugging (the command more on can be a useful way to page through the code). You need to know the numbers of the lines in your m-file code where you want to start and stop. Look through the program and write down the first line numbers of difficult sections that you think may be causing the errors. For example, if it's a for loop, pick the line number of the for command.

>>dbstop in mfile at lineno
Places a breakpoint at the specified line number in the specified m-file. When you execute the m-file, MatLab will temporarily stop when it gets to the specified line and give the prompt:
K>>
This prompt means that MatLab is waiting to continue the program. At this point, you can inspect any variables by typing their names, or you can change the values of any variables. To restart the program, there are two commands:

>>dbcont
Continues running the program until it reaches the next breakpoint.

>>dbstep
Runs the next line of the program. This is very useful for watching how a variable is changed over a couple of lines of the program.

>>dbclear all
or
>>dbclear in mfile at lineno
Clears all breakpoints, or clears the specified breakpoint.

>>dbquit
Stops the program from running and returns MatLab to its normal state. The program is not completed and nothing is returned.
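Before reaching for the debugger, it can help to let the program check its own matrix sizes, since the row/column mix-ups described earlier in this appendix are often found this way. The sketch below is one possible way to do it and is not part of the original laboratory; the variable names and the sizes 26 and 256 are assumptions chosen to match the example in the text, and assert is a built-in command that stops the program with the given message when its condition is false.

```matlab
% Sketch only: names and sizes are assumptions chosen for illustration.
numberOfNeurons = 26;     % rows of the weight matrix
numberOfInputs = 256;     % columns of the weight matrix

weightMatrix = zeros(numberOfNeurons, numberOfInputs);   % 26 rows, 256 columns
inputVector = rand(numberOfInputs, 1);                   % column vector, 256 by 1

% stop with a readable message if a size is not what the comments promise
assert(isequal(size(weightMatrix), [numberOfNeurons, numberOfInputs]), ...
    'weightMatrix should be %d by %d', numberOfNeurons, numberOfInputs);
assert(isequal(size(inputVector), [numberOfInputs, 1]), ...
    'inputVector should be a column vector of length %d', numberOfInputs);

excitation = weightMatrix * inputVector;   % one excitation per neuron, 26 by 1
```

If the two dimensions of weightMatrix were accidentally swapped, the first assert would halt the program at the line where the mistake matters, rather than many lines later.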
Some other useful commands for debugging:

>>keyboard
When placed in an m-file, this command stops the m-file and gives the K>> prompt, but does not allow dbstep and dbcont to be used. To get the program running again, type return (the text, not the button on the keyboard).

>>who
Lists all of the active variables at that time. A good time to use this command would be when you want to clear all those unneeded variables which might interfere with your program.

>>whos
Like who, but also gives you the size of each of the variables.

All too common mistakes:

Typing in function(column,row) rather than function(row,column).

Forgetting the final parenthesis in embedded commands. For example, test=input(excitation(counter),output(neurons(counter)) needs one more parenthesis at the end.

Leaving data in variables from previous runs of a program. For example, if you use the variable output(x) for a network with 6 outputs and then for a network with only 4 outputs, the variable will still have 2 outputs from the old network. This will mess up later calculations. Include a clear output line at the beginning of the program.

Trying to assign something of the wrong size to a variable. If a network is giving a column vector output, make sure that the variable assigned to that output can accept a column vector. To help with this problem, write all of your programs so that they use column vectors and just stick with it!

Above all, remember to keep things simple. When first testing ideas, don't use lots of deeply embedded loops. Write simply, and you can debug simply. Once you know that your code is going to work, expand it out into the loops through each neuron, variable, synapse, etc.

Hopefully you'll take these suggestions and use them to write code that is easily understandable, easy to debug, and elegant. Good luck!

Appendix 1.2. Glossary terms

population mean: the true mean or predicted mean
sample mean: the empirically observed mean
absolute frequency histogram: a histogram that displays individual bin counts for a set of data (the default number of bins in MatLab is 10)
relative frequency histogram: a bar graph that displays the results of dividing each of the individual bin counts by the total of all bin counts
seed value: the multi-digit unique number used to seed the random number generator in MatLab
normalization: a technique used to standardize the length of a vector so that all vectors are of the same length; also known as Euclidean normalization
abscissa: the x-axis
ordinate: the y-axis
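Several of the glossary terms can be illustrated in a few lines of MatLab. The following is a minimal sketch rather than a prescribed method: all variable names are assumptions, the sample size of 1000 is arbitrary, and the value 0.5 used for the population mean is simply the known mean of uniform random numbers between 0 and 1.

```matlab
% Sketch only: variable names and the sample size are assumptions.
numberOfSamples = 1000;
numberOfBins = 10;
samples = rand(numberOfSamples, 1);    % uniform random values between 0 and 1

sampleMean = mean(samples);            % the empirically observed mean
populationMean = 0.5;                  % the true mean of a uniform (0,1) variable

% absolute frequency histogram: the individual bin counts
[absoluteCounts, binCenters] = hist(samples, numberOfBins);

% relative frequency histogram: each bin count divided by the total count
relativeFrequencies = absoluteCounts / sum(absoluteCounts);

% Euclidean normalization: divide a vector by its length
exampleVector = [3; 4];
normalizedVector = exampleVector / sqrt(sum(exampleVector.^2));
```

Typing bar(binCenters, relativeFrequencies) afterward would draw the relative frequency histogram; the bar heights sum to one, whereas the absolute counts sum to the number of samples.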