Full report - Council of Deans of Nursing and Midwifery

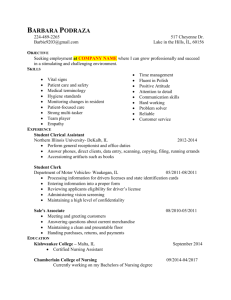
