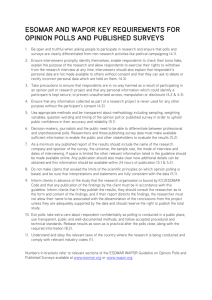

ESOMAR/WAPOR Guide to Opinion Polls including the ESOMAR

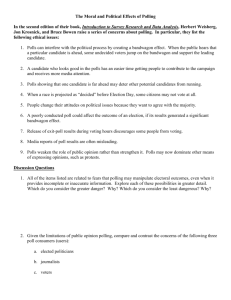
