Addressing attribution of cause and effect in small n impact

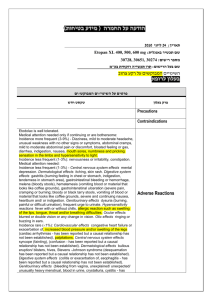
