Information Theory in Intelligent Decision Making

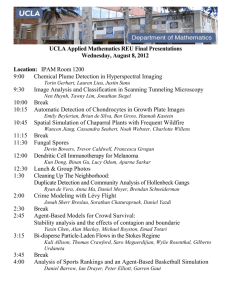

advertisement

Information Theory

in Intelligent Decision Making

Daniel Polani

Adaptive Systems and Algorithms Research Groups

School of Computer Science

University of Hertfordshire, United Kingdom

March 5, 2015

Daniel Polani

Information Theory in Intelligent Decision Making


The Theory



Motivation

Artificial Intelligence

modelling cognition in humans

realizing human-level “intelligent” behaviour in machines (just performance: not necessarily imitating the biological substrate)

a jumble of various ideas to get the above points working

Question

Is there a unified way of understanding cognition?

Probability

we have probability theory as a theory of uncertainty

we have information theory for endowing probability with a sense of “metrics”

Random Variables

Def.: Event Space

Consider an event space $\Omega = \{\omega_1, \omega_2, \ldots\}$, finite or countably infinite, with a (probability) measure $P_\Omega : \Omega \to [0, 1]$ such that $\sum_\omega P_\Omega(\omega) = 1$. The $\omega$ are called events.

Random Variable

A random variable $X$ is a map $X : \Omega \to \mathcal{X}$ with some outcome space $\mathcal{X} = \{x_1, x_2, \ldots\}$ and induced probability measure $P_X(x) = P_\Omega(X^{-1}(x))$.

We also write instead

$$P_X(x) \equiv P(X = x) \equiv p(x).$$
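The induced measure $P_X(x) = P_\Omega(X^{-1}(x))$ simply adds up the probabilities of all events that $X$ maps to the same outcome $x$. A minimal Python sketch, assuming as a hypothetical example a fair six-sided die as the event space and the parity of the face as the random variable $X$:

```python
from collections import defaultdict
from fractions import Fraction

def induced_measure(p_omega, X):
    """Push P_Omega forward through X: P_X(x) = P_Omega(X^{-1}(x))."""
    p_x = defaultdict(Fraction)  # Fraction() == 0
    for omega, prob in p_omega.items():
        p_x[X(omega)] += prob  # every omega in the preimage of x contributes
    return dict(p_x)

# Hypothetical example: fair die as event space, X = parity of the face.
p_omega = {omega: Fraction(1, 6) for omega in range(1, 7)}
p_x = induced_measure(p_omega, lambda omega: "even" if omega % 2 == 0 else "odd")
```

Here `p_x` assigns probability 1/2 to each of the two outcomes, and it sums to 1 because every event lands in exactly one preimage.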

Neyman-Pearson Lemma I

Lemma

Consider observations $x_1, x_2, \ldots, x_n$ of a random variable $X$ and two potential hypotheses (distributions) $p_1$ and $p_2$ that could have generated them.

Consider the test for hypothesis $p_1$ to be given as $(x_1, x_2, \ldots, x_n) \in A$, where

$$A = \left\{ x' = (x'_1, x'_2, \ldots, x'_n) \;\middle|\; \frac{p_1(x'_1, x'_2, \ldots, x'_n)}{p_2(x'_1, x'_2, \ldots, x'_n)} \ge C \right\}$$

with some $C \in \mathbb{R}^+$.

Assume the rate $\alpha$ of false negatives $p_1(\bar A)$ to be given (samples generated by $p_1$, but not in $A$).

Let $\beta$ be the rate of false positives $p_2(A)$.

Then: any test with false negative rate $\alpha' \le \alpha$ has false positive rate $\beta' \ge \beta$.

(Cover and Thomas, 2006)

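Neyman-Pearson Lemma II

Proof

(Cover and Thomas, 2006)

Let $A$ be as above and $B$ some other acceptance region, with false negative rate $\alpha' = p_1(\bar B)$ and false positive rate $\beta' = p_2(B)$; let $\chi_A$ and $\chi_B$ be the indicator functions. Then for all $x$:

$$[\chi_A(x) - \chi_B(x)]\,[p_1(x) - C p_2(x)] \ge 0$$

(both factors are nonnegative for $x \in A$ and nonpositive for $x \notin A$).

Multiplying out and summing over $x$:

$$0 \le \sum_A (p_1 - C p_2) - \sum_B (p_1 - C p_2) = (1 - \alpha) - C\beta - (1 - \alpha') + C\beta' = C(\beta' - \beta) - (\alpha - \alpha')$$

Hence, if $\alpha' \le \alpha$, then $C(\beta' - \beta) \ge \alpha - \alpha' \ge 0$, i.e. $\beta' \ge \beta$.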
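For tiny problems the lemma can be confirmed exhaustively. The Python sketch below assumes, hypothetically, that $p_1$ and $p_2$ are i.i.d. Bernoulli models on binary sequences of length 3; it enumerates all $2^8 = 256$ possible acceptance regions $B$ and checks that none achieves $\alpha' \le \alpha$ together with $\beta' < \beta$. Exact fractions avoid rounding ties.

```python
from fractions import Fraction
from itertools import product

def seq_prob(theta, x):
    """Probability of a binary sequence x under i.i.d. Bernoulli(theta)."""
    prob = Fraction(1)
    for xi in x:
        prob *= theta if xi == 1 else 1 - theta
    return prob

def np_region_is_optimal(theta1, theta2, n, C):
    """Check the lemma by enumerating every acceptance region B of {0,1}^n."""
    seqs = list(product((0, 1), repeat=n))
    p1 = {x: seq_prob(theta1, x) for x in seqs}
    p2 = {x: seq_prob(theta2, x) for x in seqs}
    A = {x for x in seqs if p1[x] >= C * p2[x]}     # likelihood-ratio region
    alpha = sum(p1[x] for x in seqs if x not in A)  # false negatives p1(A-bar)
    beta = sum(p2[x] for x in A)                    # false positives p2(A)
    for mask in range(1 << len(seqs)):
        B = {x for i, x in enumerate(seqs) if (mask >> i) & 1}
        alpha_b = sum(p1[x] for x in seqs if x not in B)
        beta_b = sum(p2[x] for x in B)
        if alpha_b <= alpha and beta_b < beta:  # such a B would contradict the lemma
            return False
    return True
```

For example, `np_region_is_optimal(Fraction(3, 5), Fraction(2, 5), 3, Fraction(1))` returns `True`, as the lemma predicts for any choice of $C$.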


Neyman-Pearson Lemma V

Consideration

assume the events $x_i$ are i.i.d.; the test becomes:

$$\prod_i \frac{p_1(x_i)}{p_2(x_i)} \ge C$$

logarithmize:

$$\sum_i \log \frac{p_1(x_i)}{p_2(x_i)} \ge \kappa \quad (:= \log C)$$

Note: Kullback-Leibler Divergence

Average “evidence” growth per sample:

$$D_{KL}(p_1 \| p_2) = E_{p_1}\left[\log \frac{p_1(X)}{p_2(X)}\right] = \sum_{x \in \mathcal{X}} p_1(x) \log \frac{p_1(x)}{p_2(x)}$$


Neyman-Pearson Lemma VII

[Figure: cumulative log-likelihood ratio (“log sum”, vertical axis, −100 to 900) against the number of samples (0 to 10000) for the three cases "0.40_vs_0.60", "0.50_vs_0.60" and "0.55_vs_0.60", each overlaid with a straight line of slope $D_{KL}$ (labelled dkl_04*x, dkl_05 * x, dkl_055 * x)]
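The straight lines in the figure reflect that the log sum grows on average by $D_{KL}(p_1 \| p_2)$ per sample. A minimal Python simulation of this effect, assuming (hypothetically) that the labels such as "0.40_vs_0.60" denote the Bernoulli parameters of $p_1$, which generates the data, and of $p_2$; natural logarithms are used:

```python
import math
import random

def kl_bernoulli(a, b):
    """D_KL(Bern(a) || Bern(b)) in nats."""
    return a * math.log(a / b) + (1 - a) * math.log((1 - a) / (1 - b))

def log_likelihood_ratio_path(theta1, theta2, n, seed=0):
    """Running sum of log p1(x_i)/p2(x_i) for x_i drawn i.i.d. from Bern(theta1)."""
    rng = random.Random(seed)
    total, path = 0.0, []
    for _ in range(n):
        x = 1 if rng.random() < theta1 else 0
        p1 = theta1 if x == 1 else 1 - theta1
        p2 = theta2 if x == 1 else 1 - theta2
        total += math.log(p1 / p2)
        path.append(total)
    return path

path = log_likelihood_ratio_path(0.40, 0.60, 10_000)
slope = path[-1] / len(path)  # empirical evidence growth per sample
```

With 10000 samples the empirical slope should lie close to $D_{KL}(0.4 \| 0.6) \approx 0.081$ nats; parameter pairs that are closer together give smaller divergences and hence flatter lines.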

Part I

Information Theory — Motivation



Structural Motivation

Intrinsic Pathways to Information Theory

[Diagram: “Information Theory” at the centre, surrounded by the pathways physical entropy, Laplace’s principle, Shannon axioms, typicality theory, optimal communication, information geometry, optimal Bayes and rate distortion; the final build adds AI]

Optimal Communication

Codes

task: send messages (disambiguate states) from sender to receiver

consider self-delimiting codes (without an extra delimiting character)

simple example: prefix codes

Def.: Prefix Codes

codes where no codeword is a prefix of another codeword

Prefix Codes

[Figure: binary code tree with branches labelled 0 and 1]
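Because no codeword is a prefix of another, a receiver can split a concatenated bit stream without delimiters: it emits a symbol as soon as the bits read so far form a codeword. A minimal Python sketch; the four-symbol code is a hypothetical example:

```python
def is_prefix_free(codewords):
    """True if no codeword is a prefix of another codeword."""
    words = sorted(codewords)
    # after sorting, a word that is a prefix of others is immediately followed by one of them
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))

def decode(bits, code):
    """Decode a concatenated bit string with a prefix code {symbol: codeword}."""
    lookup = {word: sym for sym, word in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in lookup:  # prefix property: the first match is the codeword
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a codeword")
    return out

code = {"a": "0", "b": "10", "c": "110", "d": "111"}  # hypothetical prefix code
message = "abdca"
bits = "".join(code[s] for s in message)  # "0101111100", no separators needed
```

Decoding `bits` recovers `["a", "b", "d", "c", "a"]`; with a non-prefix code such as {"0", "01", "11"} the first match would no longer be guaranteed to be the intended codeword.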

Kraft Inequality

Theorem

Assume events $x \in \mathcal{X} = \{x_1, x_2, \ldots, x_k\}$ are coded using prefix codewords over an alphabet $\mathcal{B}$ of size $b = |\mathcal{B}|$, with lengths $l_1, l_2, \ldots, l_k$ for the respective events. Then

$$\sum_{i=1}^{k} b^{-l_i} \le 1.$$

Proof Sketch

(Cover and Thomas, 2006)

Let $l_{max}$ be the length of the longest codeword. Expand the tree fully to level $l_{max}$. The fully expanded leaves are either: 1. codewords; 2. descendants of codewords; 3. neither.

A codeword of length $l_i$ has $b^{l_{max} - l_i}$ descendants at level $l_{max}$ of the full tree. By the prefix property these must be different for the different codewords, and there cannot be more than $b^{l_{max}}$ in total. Hence

$$\sum_i b^{l_{max} - l_i} \le b^{l_{max}},$$

and dividing by $b^{l_{max}}$ gives the claim.

Remark

The converse also holds: for any lengths satisfying the inequality, a prefix code with these codeword lengths exists.
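Both directions can be checked mechanically for $b = 2$. The Python sketch below computes $\sum_i 2^{-l_i}$ exactly and, when it is at most 1, builds a prefix code with the requested lengths by handing out consecutive codewords in order of increasing length (the so-called canonical construction, used here as one possible way to realize the converse):

```python
from fractions import Fraction

def kraft_sum(lengths, b=2):
    """Exact value of sum_i b^(-l_i)."""
    return sum(Fraction(1, b ** l) for l in lengths)

def canonical_prefix_code(lengths):
    """Binary prefix code with the given codeword lengths (requires Kraft sum <= 1)."""
    if kraft_sum(lengths) > 1:
        raise ValueError("lengths violate the Kraft inequality")
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    codes = [None] * len(lengths)
    value, prev_len = 0, lengths[order[0]]
    for rank, i in enumerate(order):
        if rank > 0:
            # next free codeword, extended with zeros to the new length
            value = (value + 1) << (lengths[i] - prev_len)
        codes[i] = format(value, f"0{lengths[i]}b")
        prev_len = lengths[i]
    return codes

codes = canonical_prefix_code([1, 2, 3, 3])
```

For lengths (1, 2, 3, 3) the Kraft sum is exactly 1 and the construction yields 0, 10, 110, 111; lengths (1, 1, 2) give a sum of 5/4 and are rejected.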


Considerations: Most compact code

Assume

Want to code a stream of events $x \in \mathcal{X}$ appearing with probability $p(x)$.

Minimize

the average code length $E[L] = \sum_i p(x_i)\, l_i$ under the constraint $\sum_i b^{-l_i} \stackrel{!}{=} 1$

Note

1. try to make the $l_i$ as small as possible

2. i.e. make the $b^{-l_i}$ as large as possible

3. limited by the Kraft inequality; ideally becoming the equality $\sum_i b^{-l_i} = 1$

Result

Differentiating the Lagrangian

$$\sum_i p(x_i)\, l_i + \lambda \sum_i b^{-l_i}$$

w.r.t. $l_i$ gives $p(x_i) - \lambda \ln b \cdot b^{-l_i} = 0$; summing over $i$ and using the constraint fixes $\lambda \ln b = 1$, so $b^{-l_i} = p(x_i)$ and the codeword lengths for the “shortest” code are

$$l_i = -\log_b p(x_i)$$

As the $l_i$ are integers, that is typically not exact.

Average Codeword Length

$$\sum_i p(x_i) \cdot l_i = -\sum_x p(x) \log p(x)$$

In the following, assume binary log.
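Since $-\log_2 p(x)$ is rarely an integer, a common choice (not stated on the slide) is to round up, $l(x) = \lceil -\log_2 p(x) \rceil$. These lengths still satisfy the Kraft inequality, and the average length then stays within one bit of $-\sum_x p(x) \log p(x)$. A Python sketch with a hypothetical source distribution:

```python
import math

def shannon_lengths(p):
    """Integer lengths ceil(-log2 p(x)), i.e. the ideal lengths rounded up."""
    return [math.ceil(-math.log2(px)) for px in p]

def entropy_bits(p):
    return -sum(px * math.log2(px) for px in p if px > 0)

p = [0.4, 0.3, 0.2, 0.1]  # hypothetical source distribution
lengths = shannon_lengths(p)
avg_len = sum(px * l for px, l in zip(p, lengths))
```

Here the lengths are 2, 2, 3, 4 with an average of 2.4 bits against an entropy of about 1.85 bits; for dyadic probabilities such as (1/2, 1/4, 1/4) the rounding is exact and the average length equals the entropy.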


Entropy

Def.: Entropy

Consider the random variable $X$. Then the entropy $H(X)$ of $X$ is defined as

$$H(X) \; [\equiv H(p)] := -\sum_x p(x) \log p(x)$$

with the convention $0 \log 0 \equiv 0$.

Interpretations

average optimal codeword length

uncertainty (about the next sample of X)

physical entropy

much more . . .

Quote

“Why don’t you call it entropy. In the first place, a mathematical development very much like yours already exists in Boltzmann’s statistical mechanics, and in the second place, no one understands entropy very well, so in any discussion you will be in a position of advantage.”

John von Neumann
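The definition, including the convention $0 \log 0 \equiv 0$, translates directly into code. A minimal Python sketch, using base 2 by default in line with the binary-log assumption:

```python
import math

def entropy(p, base=2):
    """H(p) = -sum_x p(x) log p(x); terms with p(x) = 0 are skipped (0 log 0 = 0)."""
    return -sum(px * math.log(px, base) for px in p if px > 0)
```

A fair coin gives `entropy([0.5, 0.5])` of 1 bit, a biased coin such as `[0.9, 0.1]` less, and a certain outcome `[1.0, 0.0]` gives 0 bits; four equally likely outcomes give 2 bits.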

Meditation

Probability/Code Mismatch

Consider events $x$ following a probability $p(x)$, but a modeler mistakenly assuming probability $q(x)$, with optimal code lengths $-\log q(x)$. Then the “code length waste per symbol” is given by

$$-\sum_x p(x) \log q(x) + \sum_x p(x) \log p(x) = \sum_x p(x) \log \frac{p(x)}{q(x)} = D_{KL}(p \| q)$$
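A small numeric check of this identity in Python. Both distributions are hypothetical and chosen dyadic so the numbers come out exact: coding with lengths $-\log_2 q(x)$ costs 1.75 bits per symbol instead of $H(p) = 1.5$ bits, and the 0.25 bits of waste equal $D_{KL}(p \| q)$.

```python
import math

def entropy(p):
    return -sum(px * math.log2(px) for px in p if px > 0)

def cross_entropy(p, q):
    """Average length (bits) of coding p-distributed symbols with lengths -log2 q(x)."""
    return -sum(px * math.log2(qx) for px, qx in zip(p, q) if px > 0)

def kl(p, q):
    return sum(px * math.log2(px / qx) for px, qx in zip(p, q) if px > 0)

p = [0.5, 0.25, 0.25]   # true distribution (hypothetical)
q = [0.25, 0.25, 0.5]   # modeler's mistaken assumption (hypothetical)
waste = cross_entropy(p, q) - entropy(p)
```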

Part II

Types


A Tip of Types

(Cover and Thomas, 2006)

Method of Types: Motivation

consider sequences with the same empirical distribution

how many of these are there with a particular distribution?

what is the probability of such a sequence?

Sketch of the Method

consider the binary event set $\mathcal{X} = \{0, 1\}$ (w.l.o.g.)

consider a sample $x^{(n)} = (x_1, \ldots, x_n) \in \mathcal{X}^n$

the type $p_{x^{(n)}}$ is the empirical distribution of symbols $y \in \mathcal{X}$ in the sample $x^{(n)}$, i.e. $p_{x^{(n)}}(y)$ is the number of times symbol $y$ appears in $x^{(n)}$, divided by $n$. Let $\mathcal{P}_n$ be the set of types with denominator $n$ (or dividing $n$).

for $p \in \mathcal{P}_n$, call the set of all sequences $x^{(n)} \in \mathcal{X}^n$ with type $p$ the type class $C(p) = \{x^{(n)} \mid p_{x^{(n)}} = p\}$.

Type Theorem

Type Count

If $|\mathcal{X}| = 2$, one has $|\mathcal{P}_n| = n + 1$ different types for sequences of length $n$.

easy to generalize: in general $|\mathcal{P}_n| = \binom{n + |\mathcal{X}| - 1}{|\mathcal{X}| - 1} \le (n + 1)^{|\mathcal{X}|}$

Important

$|\mathcal{P}_n|$ grows only polynomially, but $|\mathcal{X}^n|$ grows exponentially with $n$. It follows that (at least one) type must contain exponentially many sequences. This corresponds to the “macrostate” in physics.

Theorem

(Cover and Thomas, 2006)

If $x_1, x_2, \ldots, x_n$ is an i.i.d. sample sequence drawn from $q$, then the probability of $x^{(n)}$ depends only on its type and is given by

$$2^{-n[H(p_{x^{(n)}}) + D_{KL}(p_{x^{(n)}} \| q)]}$$

Corollary

If $x^{(n)}$ has type $q$ (here, we interpret the probability $q$ as a type), then its probability is given by

$$2^{-nH(q)}$$

A large value of $H(q)$ indicates many possible candidates $x^{(n)}$ and high uncertainty; a small value indicates few candidates and low uncertainty.
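Both the type count and the probability formula can be verified by brute force for short binary sequences. In the Python sketch below the source $q$ with $q(0) = 0.7$, $q(1) = 0.3$ and the length $n = 6$ are hypothetical; for every sequence it compares the direct product $\prod_i q(x_i)$ with $2^{-n[H + D_{KL}]}$ evaluated at the sequence's type.

```python
import math
from itertools import product

def type_of(seq):
    """Empirical distribution (type) of a binary sequence as (P(0), P(1))."""
    n, k = len(seq), sum(seq)
    return ((n - k) / n, k / n)

def entropy(p):
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def kl(p, q):
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

q = (0.7, 0.3)  # hypothetical source: q(0) = 0.7, q(1) = 0.3
n = 6
types = {type_of(seq) for seq in product((0, 1), repeat=n)}
max_err = 0.0
for seq in product((0, 1), repeat=n):
    direct = math.prod(q[x] for x in seq)  # probability of the i.i.d. sequence
    t = type_of(seq)
    via_type = 2 ** (-n * (entropy(t) + kl(t, q)))  # depends only on the type
    max_err = max(max_err, abs(direct - via_type))
```

There are $n + 1 = 7$ types among the $2^6 = 64$ sequences, and the two probability computations agree up to floating-point rounding.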

Part III

Laplace’s Principle and Friends


Laplace’s Principle of Insufficient Reason I

Scenario

Consider $\mathcal{X}$. A probability distribution is assumed on $\mathcal{X}$, but it is unknown.

Laplace’s principle of insufficient reason states that, in the absence of any reason to assume that the outcomes are inequivalent, the probability distribution on $\mathcal{X}$ is assumed to be the equidistribution.

Question

How to generalize when something is known?

Answer: Types

Dominant Sample Sequence

Remember: each sequence in the type class $C(q)$ has probability $2^{-nH(q)}$, and $C(q)$ contains about $2^{nH(q)}$ sequences (to first order in the exponent).

A priori, a probability $q$ maximizing $H(q)$ will generate sequence types dominating all others.

Maximum Entropy Principle

Maximize: $H(q)$ with respect to $q$

Result: equidistribution $q(x) = \frac{1}{|\mathcal{X}|}$

Sanov’s Theorem I

Theorem

Consider an i.i.d. sequence $X_1, X_2, \ldots, X_n$ of random variables, distributed according to $q(X)$. Let further $E$ be a set of probability distributions.

[Figure: the set $E$ of distributions with the point $p^* \in E$, and the distribution $q$]

Then (amongst other things), if $E$ is the closure of its interior and $p^* = \arg\min_{p \in E} D(p \| q)$, one has

$$\frac{1}{n} \log q^{(n)}(E) \longrightarrow -D(p^* \| q)$$

where $q^{(n)}(E)$ denotes the probability that the type of $X_1, \ldots, X_n$ lies in $E$.

Sanov’s Theorem II

Interpretation

$p$ is unknown, but one knows constraints for $p$ (e.g. some condition, such as that some mean value $\sum_x p(x) U(x) \stackrel{!}{=} \bar U$ must be attained, i.e. the set $E$ is given); then the dominating types are those close to $p^*$.

Special Case

If the prior $q$ is the equidistribution (indifference), then minimizing $D(p \| q)$ under the constraints $E$ is equivalent to maximizing $H(p)$ under these constraints, since then $D(p \| q) = \log|\mathcal{X}| - H(p)$.

Jaynes’ Maximum Entropy Principle

Sanov’s Theorem III

Jaynes’ Principle

generalization of Laplace’s Principle

maximally uncommitted distribution


Maximum Entropy Distributions I

No constraints

We are interested in maximizing

$$H(X) = -\sum_x p(x) \log p(x)$$

over all probabilities $p$. The probability $p$ lives in the simplex

$$\Delta = \{q \in \mathbb{R}^{|\mathcal{X}|} \mid \sum_i q_i = 1,\ q_i \ge 0\}$$

The maximization has to respect constraints, of which we now consider only $\sum_x p(x) \stackrel{!}{=} 1$.

The edge constraints $q_i \ge 0$ happen not to be invoked here.

Maximum Entropy Distributions II

No constraints

Unconstrained maximization via Lagrange:

$$\max_p \Big[-\sum_x p(x) \log p(x) + \lambda \sum_x p(x)\Big]$$

Taking the derivative $\nabla_{p(x)}$ gives

$$-\log p(x) - 1 + \lambda \stackrel{!}{=} 0.$$

Thus $p(x) = e^{\lambda - 1}$ does not depend on $x$, and normalization gives $p(x) \equiv 1/|\mathcal{X}|$: the equidistribution.


Maximum Entropy Distributions

Linear Constraints

Constraints are now

$$\sum_x p(x) \stackrel{!}{=} 1, \qquad \sum_x p(x) f(x) \stackrel{!}{=} \bar f.$$

Derive the Lagrangian

$$0 = \nabla_p \Big[-\sum_x p(x) \log p(x) + \lambda \sum_x p(x) + \mu \sum_x p(x) f(x)\Big]$$

$$-\log p(x) - 1 + \lambda + \mu f(x) = 0$$

so that one has

Boltzmann/Gibbs Distribution

$$p(x) = e^{\lambda - 1 + \mu f(x)} = \frac{1}{Z} e^{\mu f(x)}$$

with normalization $Z = \sum_x e^{\mu f(x)}$, and $\mu$ chosen such that $\sum_x p(x) f(x) = \bar f$.
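In practice $\mu$ generally has no closed form and is found numerically. Because the mean $\sum_x p(x) f(x)$ of the Gibbs distribution increases monotonically with $\mu$ (its derivative is the variance of $f$), bisection suffices. A Python sketch, using as a hypothetical example a die with $f(x) = x$ and prescribed mean $\bar f = 4.5$:

```python
import math

def gibbs(f, mu):
    """p(x) = exp(mu f(x)) / Z, the maximum entropy form under a mean constraint on f."""
    w = [math.exp(mu * fx) for fx in f]
    Z = sum(w)
    return [wx / Z for wx in w]

def mean(p, f):
    return sum(px * fx for px, fx in zip(p, f))

def entropy_nats(p):
    return -sum(px * math.log(px) for px in p if px > 0)

def maxent_with_mean(f, f_bar, lo=-50.0, hi=50.0, iters=200):
    """Bisection on mu; assumes min(f) < f_bar < max(f)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean(gibbs(f, mid), f) < f_bar:
            lo = mid  # mean too small: increase mu
        else:
            hi = mid
    return gibbs(f, 0.5 * (lo + hi))

f = [1, 2, 3, 4, 5, 6]        # hypothetical: faces of a die
p = maxent_with_mean(f, 4.5)
alt = [0, 0, 0, 0.5, 0.5, 0]  # another distribution with mean 4.5
```

The resulting `p` increases geometrically with the face value ($\mu > 0$), while the alternative `alt` meets the same constraint with strictly lower entropy.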

Part IV

Kullback-Leibler and Friends


Conditional Kullback-Leibler

$D_{KL}$ can be conditional:

$$D_{KL}[p(Y|x) \,\|\, q(Y|x)]$$

$$D_{KL}[p(Y|X) \,\|\, q(Y|X)] = \sum_x p(x)\, D_{KL}[p(Y|x) \,\|\, q(Y|x)]$$


Kullback-Leibler and Bayes

(Biehl, 2013)

Want to estimate $p(x|\theta)$, where $\theta$ is the parameter. Observe $y$.

Seek the “best” $q(x|y)$ for this $y$ in the following sense:

1. minimize the $D_{KL}$ of the true distribution to the model distribution $q$

2. averaged over possible observations $y$

3. averaged over $\theta$

$$\min_q \int d\theta\, p(\theta) \sum_y p(y|\theta)\, D_{KL}[p(x|\theta) \,\|\, q(x|y)]$$

Result

$q(x|y)$ is the Bayesian inference obtained from $p(y|x)$ and $p(x)$, i.e. the posterior predictive $q(x|y) = \int d\theta\, p(\theta|y)\, p(x|\theta)$
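A numeric illustration in Python under hypothetical choices: three parameter values $\theta$ with a discrete prior (so the integral becomes a sum), and binary $x$ and $y$ each drawn as Bernoulli($\theta$). The objective is evaluated for the posterior predictive and for randomly drawn alternatives $q(x|y)$; the posterior predictive should never do worse.

```python
import math
import random

thetas = [0.2, 0.5, 0.8]   # hypothetical parameter values
prior = [0.3, 0.4, 0.3]    # hypothetical prior p(theta)

def bern(theta, v):
    """p(v | theta) for a binary variable v."""
    return theta if v == 1 else 1 - theta

def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def objective(q):
    """sum_theta p(theta) sum_y p(y|theta) D_KL[p(x|theta) || q(x|y)], with q[y][x] = q(x|y)."""
    total = 0.0
    for th, w in zip(thetas, prior):
        p_x = [bern(th, 0), bern(th, 1)]
        for y in (0, 1):
            total += w * bern(th, y) * kl(p_x, q[y])
    return total

def posterior_predictive():
    """q(x|y) = sum_theta p(theta|y) p(x|theta)."""
    q = []
    for y in (0, 1):
        joint = [w * bern(th, y) for th, w in zip(thetas, prior)]
        post = [j / sum(joint) for j in joint]
        q.append([sum(pt * bern(th, x) for pt, th in zip(post, thetas)) for x in (0, 1)])
    return q

q_star = posterior_predictive()
rng = random.Random(0)
alternatives = []
for _ in range(200):
    a, b = rng.uniform(0.01, 0.99), rng.uniform(0.01, 0.99)
    alternatives.append([[1 - a, a], [1 - b, b]])
```

For each fixed $y$ the objective is, up to a constant, a cross-entropy between the posterior predictive and $q(\cdot|y)$, which is why no alternative can beat `q_star`.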

Conditional Entropies

Special Case: Conditional Entropy

$$H(Y|X = x) := -\sum_y p(y|x) \log p(y|x)$$

$$H(Y|X) := -\sum_x p(x) \sum_y p(y|x) \log p(y|x)$$

Information

Reduction of entropy (uncertainty) by knowing another variable:

$$I(X;Y) := H(Y) - H(Y|X) = H(X) - H(X|Y) = H(X) + H(Y) - H(X,Y) = D_{KL}[p(x,y) \,\|\, p(x)p(y)]$$
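The four expressions for $I(X;Y)$ can be checked against each other on any joint distribution. A Python sketch using a hypothetical 2×2 joint table $p(x, y)$:

```python
import math

def H(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

joint = [[0.3, 0.1],   # hypothetical p(x, y): rows x, columns y
         [0.1, 0.5]]
px = [sum(row) for row in joint]
py = [sum(col) for col in zip(*joint)]
H_xy = H([p for row in joint for p in row])
# H(Y|X) = sum_x p(x) H(Y | X = x), and symmetrically for H(X|Y)
H_y_given_x = sum(px[i] * H([p / px[i] for p in joint[i]]) for i in range(len(px)))
H_x_given_y = sum(py[j] * H([joint[i][j] / py[j] for i in range(len(px))]) for j in range(len(py)))
kl_form = sum(joint[i][j] * math.log2(joint[i][j] / (px[i] * py[j]))
              for i in range(len(px)) for j in range(len(py)) if joint[i][j] > 0)
candidates = [H(py) - H_y_given_x, H(px) - H_x_given_y, H(px) + H(py) - H_xy, kl_form]
```

All four entries of `candidates` agree up to rounding and are positive, since $X$ and $Y$ are dependent in this table.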

Part V

Towards Reality


Rate/Distortion Theory

Code below specifications

Reminder

Information is about sending messages. We considered the most compact codes over a given noiseless channel. Now consider the situation where either:

1. the channel is not noiseless but has noisy characteristics $p(\hat x | x)$, or

2. we cannot afford to spend an average of $H(X)$ bits per symbol to transmit.

Question

What happens? Total collapse of transmission?

Rate/Distortion Theory I

Distortion

“Compromise”

no longer insist on perfect transmission

accept a compromise: measure the distortion $d(x, \hat x)$ between the original $x$ and the transmitted $\hat x$

small distortion good, large distortion “baaad”

Theorem: Rate Distortion Function

Given $p(x)$ for the generation of symbols $X$,

$$R(D) := \min_{p(\hat x | x):\; E[d(X, \hat X)] = D} I(X; \hat X)$$

where the mean is over $p(x, \hat x) = p(\hat x | x)\, p(x)$.
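$R(D)$ is rarely available in closed form; the standard numerical method is the Blahut-Arimoto algorithm (not part of the slides), which alternates between the test channel $p(\hat x | x) \propto q(\hat x)\, e^{-s\, d(x, \hat x)}$ and its output marginal $q(\hat x)$ for a slope parameter $s > 0$. A pure-Python sketch; the binary source with Hamming distortion is a hypothetical test case, chosen because $R(D) = H_b(p) - H_b(D)$ is known there for $D \le \min(p, 1 - p)$:

```python
import math

def h2(x):
    """Binary entropy in bits."""
    return 0.0 if x in (0.0, 1.0) else -x * math.log2(x) - (1 - x) * math.log2(1 - x)

def blahut_arimoto(p_x, d, s, iters=2000):
    """Return one point (R, D) of the rate-distortion curve for slope parameter s > 0.

    p_x[i] is the source distribution, d[i][j] the distortion d(x_i, xhat_j).
    """
    n, m = len(p_x), len(d[0])
    q = [1.0 / m] * m  # output marginal q(xhat), start uniform
    for _ in range(iters):
        cond = []  # test channel p(xhat | x), proportional to q(xhat) exp(-s d)
        for i in range(n):
            w = [q[j] * math.exp(-s * d[i][j]) for j in range(m)]
            z = sum(w)
            cond.append([wj / z for wj in w])
        q = [sum(p_x[i] * cond[i][j] for i in range(n)) for j in range(m)]
    D = sum(p_x[i] * cond[i][j] * d[i][j] for i in range(n) for j in range(m))
    R = sum(p_x[i] * cond[i][j] * math.log2(cond[i][j] / q[j])
            for i in range(n) for j in range(m) if cond[i][j] > 0)
    return R, D

# hypothetical test case: binary source with P(X = 1) = 0.3, Hamming distortion
R, D = blahut_arimoto([0.7, 0.3], [[0, 1], [1, 0]], s=3.0)
```

Sweeping `s` traces out the curve: larger `s` penalizes distortion more strongly, giving smaller $D$ and higher rate.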

Rate/Distortion Theory II

Distortion

[Figure: plot of r(x) for x from 0 to 1, vertical axis from 0 to 1.8]

First Example: Infotaxis

(Vergassola et al., 2007)



Applications


Thank You

[Diagram: collaborators grouped by research topic]

Topics: Information-theoretic PA-Loop Invariants, Empowerment; Relevant Information; Collective Empowerment; Collective Systems; Digested Information; World Structure, Graphs, Empowerment in Games; Continuous Empowerment; Sensor Evolution, Information distribution over the PA-Loop; Information Flow, PA-Loop Models; Further Contributions

Collaborators: Alexander Klyubin, Christoph Salge, Cornelius Glackin, Chrystopher Nehaniv, Naftali Tishby, Thomas Martinetz, Philippe Capdepuy, Jan Kim, Malte Harder, Tobias Jung, Tom Anthony, Peter Stone, Sander van Dijk, Alexandra Mark, Achim Liese, Nihat Ay, Mikhail Prokopenko, Lars Olsson, Simon McGregor

Funding: EC (FEELIX GROWING, FP6), NSF, ONR, DARPA, FHA

This work was partially supported by FP7 ICT-270219

Part VI

Crash Introduction


Modelling Cognition: Motivation from Biology

Question

Why/how did cognition evolve in biology?

Observations in biology

sensors are often highly optimized:

detection of a few molecules (moths) (Dusenbery, 1992)

detection of few or individual photons (humans/toads) (Hecht et al., 1942; Baylor et al., 1979)

the auditory sense operates close to thermal noise (Denk and Webb, 1989)

cognitive processing is very expensive (Laughlin et al., 1998; Laughlin, 2001)


Conclusions

Evidence

sensors often operate at physical limits

evolutionary pressure for high cognitive functions

But What For?

close the cycle

actions matter

What matters is what comes out at the end.

Trade-Offs

sharpening sensors

improving processing

boosting actuators

What you don’t have in your head, you have to have in your legs.

Part VII

Information



Decisions, Decisions

Challenge

Linking sensors, processing and actuators

The Physical and the Biological

Physics:

given dynamical equations etc.

known (in principle)

Biological Cognition:

no established unique model

complex, difficult to untangle

Robotic Cognition:

many near-equivalent but incompatible solutions and architectures

often specific and hand-designed

Problem

Considerable arbitrariness in the treatment of cognition

Idea

Issues

uniform treatment of cognition

distinguish essential and incidental aspects of computation

Proposal: “Covariant” Modeling of Computation

Physics: observations may depend on the “coordinate system” for the same underlying phenomenon

Cognition: computation may depend on the architecture, but essentially computes “the same concepts”

Bottom Line

a “coordinate-” (mechanism-)free view of cognition?

Landauer’s Principle

Fundamental Limits for Information Processing

On the lowest level, physics and information processing cannot be fully separated

Consequence: erasure of information from a “memory” creates heat

Connection: between energy and information

[Diagram: joint state of world and memory $(W_t, M_t)$ mapped to $(W_{t+1}, M_{t+1})$]
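The size of this effect is usually quoted as Landauer's bound (a standard result, not spelled out on the slide): erasing one bit at temperature $T$ dissipates at least $k_B T \ln 2$ of heat. A short Python computation at an assumed room temperature of 300 K:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def landauer_bound(temperature_k, bits=1.0):
    """Minimum heat in joules dissipated when erasing `bits` bits at temperature T (kelvin)."""
    return bits * K_B * temperature_k * math.log(2)

heat_one_bit = landauer_bound(300.0)  # assumed room temperature of 300 K
```

This gives roughly $2.87 \times 10^{-21}$ J per erased bit, scaling linearly with the number of bits and with temperature.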


Informational Invariants: Beyond Physics

Law of Requisite Variety

(Ashby, 1956; Touchette and Lloyd, 2000, 2004)

Ashby: “only variety can destroy variety”

extension by Touchette/Lloyd

Open-Loop Controller: max. entropy reduction $\Delta H^*_{open}$

Closed-Loop Controller: max. entropy reduction

$$\Delta H_{closed} \le \Delta H^*_{open} + I(W_t; A_t)$$

[Diagram: chain of world states $\ldots, W_{t-3}, W_{t-2}, W_{t-1}, W_t, W_{t+1}, W_{t+2}, \ldots$ with actions $A_{t-3}, \ldots, A_{t+1}$]

Informational Invariants: Scenario

Core Statement

Task: consider e.g. a navigational task

Informationally: reduction of the entropy of the initial (arbitrary) state

Example:

[Figure: example in the $x$-$y$ plane, both axes ranging from −10 to 10]

Information Bookkeeping

Bayesian Network

[Diagram: Bayesian network over world states $W_t$, sensor states $S_t$, actions $A_t$ and memory states $M_t$, for time steps from $t-3$ onwards]


Information Bookkeeping

Bayesian Network

[Diagram: Bayesian network over world states $W_t$, sensor states $S_t$ and actions $A_t$, for time steps from $t-3$ onwards]

Informational “Conservation Laws”

Total sensor history: $S^{(t)} = (S_0, S_1, \ldots, S_{t-1})$

Result:

$$\lim_{t \to \infty} I(S^{(t)}; W_0) = H(W_0)$$

(Klyubin et al., 2007), and see also (Ashby, 1956; Touchette and Lloyd, 2000, 2004)


Observations

Key Motto

There is no perpetuum mobile of the 3rd kind.

Actually, rather: there may be no free lunch, but sometimes there is free beer.

Information Balance Sheet

Task Invariant: $H(W_0)$ determines the minimum information required to get to the center

Task Variant: but it can be spread/concentrated differently over

time

environment and agents (“stigmergy”)

sensors and memory

(Klyubin et al., 2004a,b, 2007; van Dijk et al., 2010)

Note: the invariance is purely entropic, i.e. indifferent to the task

Next Step

refine towards specific tasks

Information for Decision Making

Replace the gradient follower by a general policy $\pi$

Dynamics

[Diagram: states $\ldots, S_{t-2}, S_{t-1}, S_t, S_{t+1}, S_{t+2}, \ldots$, with the policy $\pi$ selecting action $A_t$ in state $S_t$]

Utility

$$V^\pi(s) := E_\pi[R_t + R_{t+1} + \cdots \mid s] = \sum_a \pi(a|s) \sum_{s'} P^a_{ss'} \big[R^a_{ss'} + V^\pi(s')\big]$$
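The Bellman equation can be solved by sweeping it repeatedly until the values stop changing (iterative policy evaluation). A Python sketch on a hypothetical one-dimensional corridor instead of a grid world, undiscounted, with reward $-1$ per step until an absorbing goal, as in the experiments below:

```python
def step(s, a, n_states):
    """Move left (a = -1) or right (a = +1); walls at both ends keep the agent in place."""
    return min(max(s + a, 0), n_states - 1)

def evaluate_policy(n_states, policy, goal, tol=1e-12):
    """Sweep V(s) = sum_a pi(a|s) [R + V(s')] with R = -1 per step and V(goal) = 0."""
    V = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(n_states):
            if s == goal:
                continue  # absorbing goal: no further reward
            new_v = sum(prob * (-1.0 + V[step(s, a, n_states)]) for a, prob in policy(s).items())
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < tol:
            return V

def random_policy(s):
    return {-1: 0.5, +1: 0.5}

def always_right(s):
    return {+1: 1.0}

V_random = evaluate_policy(4, random_policy, goal=3)
V_right = evaluate_policy(4, always_right, goal=3)
```

For four cells with the goal at the right end, the uniformly random policy yields values of about $-12, -10, -6, 0$ (the negated expected number of steps to the goal), while always moving right yields $-3, -2, -1, 0$.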

A Parsimony Principle

Traditional MDP

Task: find the best policy $\pi^*$

Traditional RL does not consider decision costs

Credo: information processing is expensive in biology!

(Laughlin et al., 1998; Laughlin, 2001; Polani, 2009)

Hypothesis: organisms trade off information-processing costs against task payoff

(Tishby and Polani, 2011; Polani, 2009; Laughlin, 2001)

Therefore: include the information cost and expand to an I-MDP

(Polani et al., 2006; Tishby and Polani, 2011)

Principle of Information Parsimony

minimize $I(S; A)$ (relevant information) at a fixed utility level

Motto

It is a very sad thing that nowadays there is so little useless information.

Oscar Wilde

Relevant Information and its Policies

Computation

Via Lagrangian formalism

(Stratonovich, 1965; Polani et al., 2006; Belavkin, 2008, 2009; Still and Precup, 2012; Saerens et al., 2009; Tishby and Polani, 2011)

find:

$$\min_\pi \; I(S; A) - \beta E[V^\pi(S)]$$

$\beta \to \infty$: the policy is optimal while informationally parsimonious!

$\beta$ finite: the policy is suboptimal, attaining a fixed level $E[V^\pi(S)]$ while being informationally parsimonious

both $I(S; A)$ and $V^\pi$ depend on $\pi$

Expectation

for higher utility, more relevant information is required, and vice versa
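For a fixed table $Q(s, a)$ the trade-off has the same structure as rate distortion, so a Blahut-Arimoto-style alternation applies: $\pi(a|s) \propto p(a)\, e^{\beta Q(s,a)}$ with $p(a) = \sum_s p(s)\, \pi(a|s)$. The Python sketch below is a simplification: as the slide notes, $V^\pi$ itself depends on $\pi$, whereas here the hypothetical $Q$ table is held fixed rather than re-evaluated under each new policy.

```python
import math

def relevant_information_policy(p_s, Q, beta, iters=1000):
    """Minimise I(S;A) - beta * E[Q(S,A)] over pi(a|s) for a FIXED table Q.

    Alternates pi(a|s) proportional to p(a) exp(beta Q(s,a)) and p(a) = sum_s p(s) pi(a|s).
    Returns the policy, I(S;A) in bits and the expected utility E[Q(S,A)].
    """
    n_s, n_a = len(Q), len(Q[0])
    p_a = [1.0 / n_a] * n_a
    for _ in range(iters):
        pi = []
        for s in range(n_s):
            w = [p_a[a] * math.exp(beta * Q[s][a]) for a in range(n_a)]
            z = sum(w)
            pi.append([wa / z for wa in w])
        p_a = [sum(p_s[s] * pi[s][a] for s in range(n_s)) for a in range(n_a)]
    info = sum(p_s[s] * pi[s][a] * math.log2(pi[s][a] / p_a[a])
               for s in range(n_s) for a in range(n_a) if pi[s][a] > 0)
    utility = sum(p_s[s] * pi[s][a] * Q[s][a] for s in range(n_s) for a in range(n_a))
    return pi, info, utility

p_s = [1 / 3, 1 / 3, 1 / 3]
Q = [[0.0, -1.0], [-1.0, 0.0], [0.0, -1.0]]  # hypothetical Q(s, a): 3 states, 2 actions
results = {beta: relevant_information_policy(p_s, Q, beta)[1:] for beta in (0.0, 1.0, 5.0)}
```

At $\beta = 0$ the policy ignores the state and uses no relevant information; raising $\beta$ increases both $I(S;A)$ and the expected utility, in line with the expectation stated on the slide.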

Experiments

Scenario

Define $(P^a_{ss'}, R^a_{ss'})$ by:

States: grid world

Actions: north, east, south, west

Reward: each action produces a “reward” of −1 until the goal is reached

[Figure: grid world with two goal locations A and B]

Experiment

Trade off utility and relevant information

Question

Form of the expected trade-off?


Experiment: Find the Corner

[Figure: trade-off curves of $E[Q(S,A)]$ (vertical axis, −50 to 0) against $I(S;A)$ (horizontal axis, 0 to 1.2) for goals A and B]

Optimal Case

goal B has higher utility than A, but needs a lot more information per step

Suboptimal Case

goal B is much worse than goal A for the same information cost

Experiment: With a Twist I

Experiment Revisited

grid world again

consider only goal A

cost as before

The “Twist”

(Polani, 2011)

permute the directions north, east, south, west!

a random fixed permutation $\sigma_s$ of the directions for each state $s$

replace $(P^a_{ss'}, R^a_{ss'})$ by $(\tilde P^a_{ss'}, \tilde R^a_{ss'})$ where

$$\tilde P^a_{ss'} := P^{\sigma_s(a)}_{ss'}, \qquad \tilde R^a_{ss'} := R^{\sigma_s(a)}_{ss'}$$

Experiment: With a Twist II

Expectation

as a traditional MDP, the “twisted” MDP $(\tilde P^a_{ss'}, \tilde R^a_{ss'})$ remains exactly equivalent:

same optimal values: $V^*(s) = \tilde V^*(s)$, $s \in \mathcal{S}$

same optimal policy after undoing the twist

pre-/post-twist policies are equivalent via

$$\tilde Q^{\tilde\pi}(s, a) = Q^{\pi}(s, \sigma_s(a)), \qquad \tilde\pi(a|s) = \pi(\sigma_s(a)|s)$$
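The equivalence can be checked directly. The Python sketch below uses a hypothetical 3×3 grid with the goal in a corner, reward −1 per step, no discounting, and a random permutation $\sigma_s$ per state drawn with a fixed seed. It runs value iteration on the original and on the twisted MDP and compares $V^*$ as well as $\tilde Q^*(s, a)$ with $Q^*(s, \sigma_s(a))$.

```python
import random

SIZE, GOAL = 3, (0, 0)
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # north, east, south, west
STATES = [(r, c) for r in range(SIZE) for c in range(SIZE)]

def step(s, a):
    """Deterministic grid move; bumping into a wall leaves the state unchanged."""
    r, c = s[0] + MOVES[a][0], s[1] + MOVES[a][1]
    return (r, c) if 0 <= r < SIZE and 0 <= c < SIZE else s

def optimal_q(transition, sweeps=50):
    """Value iteration with reward -1 per step until GOAL (undiscounted)."""
    V = {s: 0.0 for s in STATES}
    for _ in range(sweeps):
        for s in STATES:
            if s != GOAL:
                V[s] = max(-1.0 + V[transition(s, a)] for a in range(4))
    Q = {(s, a): -1.0 + V[transition(s, a)] for s in STATES if s != GOAL for a in range(4)}
    return V, Q

rng = random.Random(1)
sigma = {s: rng.sample(range(4), 4) for s in STATES}  # hypothetical permutation sigma_s per state
V, Q = optimal_q(step)
V_twist, Q_twist = optimal_q(lambda s, a: step(s, sigma[s][a]))  # P~^a_ss' = P^{sigma_s(a)}_ss'
```

Both value tables coincide exactly (for instance $V^*$ of the far corner is −4); the twist only changes which action label is good in which state.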


Experiment With a Twist: Uh-Oh!

Optimal Case

sanity check: the utility is the same for original and twisted

but the latter needs a lot more information per step

[Figure: trade-off curves of $E[V(S)]$ (vertical axis, −50 to 0) against $I(S;A)$ (horizontal axis, 0 to 1.2) for the original and the twisted MDP]

Suboptimal Case

the twisted MDP becomes much worse than the original at the same information cost

Intermediate Conclusions

Insights

as traditional MDPs, both experiments are fully equivalent

as I-MDPs, however, there is a significant difference between

the agent “taking actions with it” and

having a “realigned” set of actions at each step

embodiment allows the agent to offload informational effort

(e.g. Paul, 2006; Pfeifer and Bongard, 2007)

Part VIII

Goal-Relevant Information


Towards Multiple Goals

Extension

assume family of tasks (e.g. multiple goals)

action now depends on both state and goals

[Figure: Bayesian network over states $S_{t-1}, S_t, S_{t+1}$, actions $A_{t-1}, A_t$ and the goal $G$]

Goal-Relevant Information

$$I(G; A_t \mid s_t) = H(A_t \mid s_t) - H(A_t \mid G, s_t)$$

Goal-Relevant Information (Regularized)

$$\min_{\pi(a_t \mid s_t, g)} \; I(G; A_t \mid S_t) - \beta\, \mathbb{E}\left[V^{\pi}(S_t, G, A_t)\right]$$

Goal-Relevant Information

[Figure: goal-relevant information $I(G; A_t \mid s_t)$]

Goal-Relevant and Sensor Information Trade-Offs

[Figure: sensor information $I(S_t; A_t \mid G)$ (y-axis, 0 to 0.6) against goal-relevant information $I(G; A_t \mid S_t)$ (x-axis, 0 to 1.6); curves labelled $\alpha = 0$ and $\alpha = 1$]

Lagrangian

$$\min_{\pi(a_t \mid s_t, g)} \; (1-\alpha)\, I(G; A_t \mid S_t) + \alpha\, I(S_t; A_t \mid G) - \beta\, \mathbb{E}\left[V^{\pi}(S_t, G, A_t)\right]$$

Information “Caching”

Note

what matters is not only how much goal-relevant information is used,

but also which

Consider

Accessible History (Context): e.g. $A^{t-1} = (A_0, A_1, \dots, A_{t-1})$

“Cache Fetch”: new goal-relevant information not already used

$$I(A_t; G \mid A^{t-1}) = H(A_t \mid A^{t-1}) - H(A_t \mid G, A^{t-1})$$
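The “cache fetch” quantity is a conditional mutual information, computable directly from a joint distribution as $H(X \mid Z) - H(X \mid Y, Z)$. The sketch below assumes a small joint table over hypothetical binary variables $(a_t, g, a_{\text{prev}})$, with a single previous action standing in for the history, and contrasts a “cache hit” (the earlier action already carried the goal) with a “cache fetch” (the goal has to be read anew).

```python
from collections import defaultdict
from math import log2


def marginal(joint, keep):
    """Marginalise a joint table {outcome_tuple: prob} onto the positions in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in keep)] += p
    return out


def cond_entropy(joint, target, given):
    """H(target | given) in bits; `target` and `given` are tuples of positions."""
    p_tg = marginal(joint, target + given)
    p_g = marginal(joint, given)
    h = 0.0
    for outcome, p in p_tg.items():
        if p > 0:
            h -= p * log2(p / p_g[outcome[len(target):]])
    return h


def cond_mutual_info(joint, x, y, z=()):
    """I(X; Y | Z) = H(X | Z) - H(X | Y, Z)."""
    return cond_entropy(joint, x, z) - cond_entropy(joint, x, y + z)


# Outcomes are (a_t, g, a_prev); hypothetical binary goal G, uniformly distributed.
A_T, GOAL, A_PREV = (0,), (1,), (2,)

# "Cache hit": the earlier action already encoded the goal, a_t just repeats it.
hit = {(g, g, g): 0.5 for g in (0, 1)}
# "Cache fetch": the earlier action was goal-independent, a_t reads the goal anew.
fetch = {(g, g, prev): 0.25 for g in (0, 1) for prev in (0, 1)}

print(cond_mutual_info(hit, A_T, GOAL))            # I(A_t; G)          -> 1.0
print(cond_mutual_info(hit, A_T, GOAL, A_PREV))    # I(A_t; G | A_prev) -> 0.0
print(cond_mutual_info(fetch, A_T, GOAL, A_PREV))  # I(A_t; G | A_prev) -> 1.0
```

In both cases the action carries one full bit about the goal; only in the second is that bit new relative to the context. Adding a state position to `z` gives the subgoal quantities of van Dijk and Polani in the same way.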

Subgoals

$I(A_t; G \mid A_{t-1}, s)$: new goal information

$I(A_{t-1}; G \mid A_t, s)$: discarded goal information

(van Dijk and Polani, 2011; van Dijk and Polani, 2013)

Psychological Connections?

Crossing doors causes forgetting

(see also Radvansky et al., 2011)

Efficient Relevant Goal Information

(van Dijk and Polani, 2013)

“Most Efficient” Goal

$$G \longrightarrow \tilde G_1 \longrightarrow A \longleftarrow S$$

$$\min_{I(\tilde G_1; A \mid S) \ge C} I(G; \tilde G_1)$$

[Figure (van Dijk and Polani, 2013, Fig. 8): goal clusters induced by the bottleneck $\tilde G_1$ on the primary goal-information pathway in a 6-room grid world navigation task; panels (a) to (d) show the mappings for increasing cardinality of the bottleneck variable, $|\tilde G_1| = 3, 4, 5, 6$]
Making State Predictive for Actions

“Most Enhancive” Goal

$$G \longrightarrow \tilde G_2 \longrightarrow A \longleftarrow S$$

$$\min_{I(S; A \mid \tilde G_2) \ge C} I(G; \tilde G_2)$$

[Figure (van Dijk and Polani, 2013, Fig. 10): goal clusters induced by the bottleneck $\tilde G_2$ on the secondary, state-information-modulating goal-information pathway in a 9-room grid world navigation task; panels (a) to (d): $|\tilde G_2| = 4, 5, 6, 7$]

Insights

“spillover” ignoring local boundaries

action information induces a global “frame of reference”

depends on action consistency

Part IX

Empowerment: Motivation


Universal Utilities

Problems

in biology, the success criterion is survival

the concept of a “task” and “reward” is not sharp

“search space” too large for full-fledged success feedback

pure Darwinism: feedback by death

this is very sparse

Notes

Homeostasis: provides dense networks to guide living beings

Problem:

specific to particular organisms

designed on a case-by-case basis for artificial agents

a more generalizable perspective, in view of the success of evolution?

Idea

Universal Drives and Utilities

Core Idea: adaptational feedback should be dense and rich

artificial curiosity, learning progress, autotelic principle, intrinsic reward

(Schmidhuber, 1991; Kaplan and Oudeyer, 2004; Steels, 2004; Singh et al., 2005)

homeokinesis and predictive information

(Der, 2001; Ay et al., 2008)

Physical Principle:

causal entropic forcing

(Wissner-Gross and Freer, 2013)

Present Ansatz

Use Embodiment

optimize informational fit into the sensorimotor niche

maximization of the potential to inject information into the environment (via actuators) and recapture it from the environment (via sensors)

(Klyubin et al., 2005a,b; Nehaniv et al., 2007; Klyubin et al., 2008)

Here: Empowerment

Motto

“Being in control of one’s destiny and knowing it is good.”

(Jung et al., 2011)

More Precisely

information-theoretic version of

controllability (being in control of destiny)

observability (knowing about it)

combined

Formalism

Bayesian Network

[Figure: Bayesian network of the perception-action loop unrolled in time from $t-3$ to $t+2$: world states $W_t$, sensor states $S_t$, actions $A_t$ and memory states $M_t$]

(Klyubin et al., 2005a,b; Nehaniv et al., 2007; Klyubin et al., 2008)

Formalism

Bayesian Network

[Figure: Bayesian network of world states $W_t$, sensor states $S_t$ and actions $A_t$, unrolled in time from $t-3$ to $t+2$]

“Free Will” Actions

Empowerment: Formal Definition

$$E^{(k)} := \max_{p(a_{t-k}, a_{t-k+1}, \dots, a_{t-1})} I(A_{t-k}, A_{t-k+1}, \dots, A_{t-1}; S_t)$$

(Klyubin et al., 2005a,b; Nehaniv et al., 2007; Klyubin et al., 2008)

Formalism

Empowerment: Formal Definition

$$E^{(k)}(w_{t-k}) := \max_{p(a_{t-k}, a_{t-k+1}, \dots, a_{t-1} \mid w_{t-k})} I(A_{t-k}, A_{t-k+1}, \dots, A_{t-1}; S_t \mid w_{t-k})$$

(Klyubin et al., 2005a,b; Nehaniv et al., 2007; Klyubin et al., 2008)

Formalism

Empowerment: Formal Definition

$$E^{(k)}(w_{t-k}) := \max_{p(a^{(k)}_{t-k} \mid w_{t-k})} I(A^{(k)}_{t-k}; S_t \mid w_{t-k}), \qquad A^{(k)}_{t-k} := (A_{t-k}, \dots, A_{t-1})$$

(Klyubin et al., 2005a,b; Nehaniv et al., 2007; Klyubin et al., 2008)
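For a fixed starting state $w_{t-k}$, the maximisation over $p(a^{(k)}_{t-k} \mid w_{t-k})$ is a channel-capacity problem: the channel maps action sequences to the sensor state $S_t$. A standard way to compute the capacity of a discrete channel is the Blahut-Arimoto algorithm. The sketch assumes the channel table `channel[a][s]` $= p(s_t \mid a^{(k)}_{t-k}, w_{t-k})$ is already available, one row per action sequence; building it from the world dynamics is left out.

```python
from math import log2


def channel_capacity(channel, iters=10_000, tol=1e-12):
    """Blahut-Arimoto capacity (bits) of a discrete memoryless channel.

    channel[a][s] = p(s | a): probability of sensor outcome s after action sequence a.
    Returns (capacity, maximising input distribution p(a)).
    """
    n_a, n_s = len(channel), len(channel[0])
    p = [1.0 / n_a] * n_a  # start from the uniform distribution over action sequences

    def kl_rows(p):
        # q(s) = sum_a p(a) p(s|a); returns d[a] = KL(p(.|a) || q) in bits
        q = [sum(p[a] * channel[a][s] for a in range(n_a)) for s in range(n_s)]
        return [sum(c * log2(c / q[s]) for s, c in enumerate(channel[a]) if c > 0)
                for a in range(n_a)]

    for _ in range(iters):
        d = kl_rows(p)
        w = [p[a] * 2.0 ** d[a] for a in range(n_a)]  # multiplicative update
        z = sum(w)
        new_p = [x / z for x in w]
        done = max(abs(x - y) for x, y in zip(new_p, p)) < tol
        p = new_p
        if done:
            break
    # mutual information I(A; S) = sum_a p(a) KL(p(.|a) || q) at the final p
    d = kl_rows(p)
    return sum(p[a] * d[a] for a in range(n_a)), p


# Deterministic world: 4 action sequences, only 3 distinguishable outcomes.
det = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 1]]
# All action sequences end in the same sensor state: no control at all.
stuck = [[1.0], [1.0], [1.0]]
# Noisy world: two actions, outcome flipped with probability 0.1.
noisy = [[0.9, 0.1], [0.1, 0.9]]

for name, ch in [("det", det), ("stuck", stuck), ("noisy", noisy)]:
    cap, _ = channel_capacity(ch)
    print(name, round(cap, 4))
```

For deterministic dynamics every row is one-hot and the capacity reduces to $\log_2$ of the number of distinct reachable sensor states; noise in the dynamics lowers it.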

Empowerment — a Universal Utility

Notes

Empowerment $E^{(k)}(w)$ is defined

for a given horizon $k$, i.e. it is local

for a given starting state $w$ (or context, for POMDPs)

i.e. empowerment is a function of the state, a “utility”

However

it is only defined by the world dynamics

no reward function is assumed

Empowerment — Notes

Properties of Empowerment

want to maximize the potential information flow

that could be injected through the actuators into the environment

and recaptured by the sensors in the future

potential influence on the environment which is detectable through the agent’s sensors

determined only by the embodiment $P^a_{ss'}$

no external reward $R^a_{ss'}$

Bottom Line

information-theoretic controllability/observability

informational efficiency of the sensorimotor niche

Other Interpretations

Related Concepts

mobility

money

affordance

graph centrality

(Anthony et al., 2008)

antithesis to “helplessness”

(Seligman and Maier, 1967; Overmier and Seligman, 1967)

Think Strategic

Tactics is what you do when you have a plan

Strategy is what you do when you haven’t


Part X

First Examples


Maze Empowerment

[Figure: maze with its average-distance map and four empowerment maps; empowerment ranges in the panels: $E \in [1, 2.32]$, $E \in [1.58, 3.70]$, $E \in [3.46, 5.52]$, $E \in [4.50, 6.41]$]

Empowerment vs. Average Distance

[Figure: scatter plot of empowerment $E$ (y-axis, 4.5 to 6.0) against average distance $d$ (x-axis, 6 to 16)]

Box Pushing

[Figure: empowerment maps for a stationary and a pushable box, with the box either invisible or visible to the agent; the panels show $E \in [5.86, 5.93]$, $E = \log_2 61 \approx 5.93$ bit, $E \in [5.86, 5.93]$ and $E \in [5.93, 7.79]$]
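In a deterministic world the channel from action sequences to the final sensor state is noiseless, so empowerment is $\log_2$ of the number of distinct reachable states. A minimal sketch, assuming the agent senses only its own grid position and has five actions (stay plus the four compass moves): in an open grid, horizon $k$ then reaches the $2k^2 + 2k + 1$ cells within Manhattan distance $k$, and $k = 5$ gives 61 cells, i.e. $\log_2 61 \approx 5.93$ bit, which reproduces the $\log_2 61$ figure above. The action set, the horizon and the helper names are assumptions chosen for that reason; the slide does not state them.

```python
from math import log2

# Assumed action set: stay plus the four compass moves (not stated on the slide).
MOVES = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def reachable(start, k, blocked=frozenset()):
    """Cells the agent can occupy after exactly k steps on an unbounded grid.

    Moves into a blocked cell leave the agent where it is. Because 'stay' is an
    action, this is also the set of cells reachable within k steps.
    """
    frontier = {start}
    for _ in range(k):
        nxt = set()
        for r, c in frontier:
            for dr, dc in MOVES:
                cell = (r + dr, c + dc)
                nxt.add((r, c) if cell in blocked else cell)
        frontier = nxt
    return frontier


def deterministic_empowerment(start, k, blocked=frozenset()):
    """Deterministic dynamics, sensor = own position: E = log2(#distinct end cells)."""
    return log2(len(reachable(start, k, blocked)))


n_free = len(reachable((0, 0), 5))
print(n_free, round(deterministic_empowerment((0, 0), 5), 2))  # 61 5.93
# A single obstacle next to the agent (e.g. an immovable box) removes end cells.
print(len(reachable((0, 0), 5, blocked={(0, 1)})))
```

This enumeration is exact but grows with the size of the reachable set; for worlds with many modifiable objects, sampling-based estimates become necessary.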

In the Continuum:

Pendulum Swing-up Task w/o Reward

(Jung et al., 2011)

Dynamics

pendulum (length $l = 1$, mass $m = 1$, gravity $g = 9.81$, friction $\mu = 0.05$)

$$\ddot\varphi(t) = \frac{-\mu\,\dot\varphi(t) + m g l \sin(\varphi(t)) + u(t)}{m l^2}$$

with state $s_t = (\varphi(t), \dot\varphi(t))$ and continuous control $u \in [-5, 5]$

system time discretized to $\Delta = 0.05$ s

actions discretized to $u \in \{-5, -2.5, 0, +2.5, +5\}$

Goal

To provide this system with some matching purpose, consider the pendulum swing-up task

Comparison

empowerment-based control

traditional optimal control
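The dynamics above can be sketched in a few lines. The integrator (semi-implicit Euler with ten substeps per $\Delta$) is an assumption, since the slide does not state one, and the height is read as $\cos\varphi$, so that +1 is upright as in the results caption. The check at the end illustrates why the task is a genuine swing-up: the maximal torque 5 is below $mgl = 9.81$, so holding $u = +5$ from the hanging position never even reaches the horizontal. This is not the empowerment estimator of Jung et al. (2011), which the sketch does not attempt.

```python
import math

# Parameters from the slide; the integration scheme is an assumption.
L, M, G, MU = 1.0, 1.0, 9.81, 0.05
DT = 0.05                              # time step Delta in seconds
ACTIONS = [-5.0, -2.5, 0.0, 2.5, 5.0]  # discretised torques u


def step(state, u, substeps=10):
    """Advance s = (phi, phidot) by DT seconds under constant torque u."""
    phi, phidot = state
    h = DT / substeps
    for _ in range(substeps):
        phiddot = (-MU * phidot + M * G * L * math.sin(phi) + u) / (M * L ** 2)
        phidot += h * phiddot  # semi-implicit Euler: velocity first,
        phi += h * phidot      # then position with the updated velocity
    phi = (phi + math.pi) % (2 * math.pi) - math.pi  # wrap the angle to [-pi, pi)
    return phi, phidot


def height(state):
    """Pendulum height cos(phi): +1 upright (phi = 0), -1 hanging down (phi = pi)."""
    return math.cos(state[0])


# Hold the maximal torque from the hanging position for 20 seconds.
s = (math.pi, 0.0)
best = height(s)
for _ in range(400):
    s = step(s, max(ACTIONS))
    best = max(best, height(s))
print(round(best, 2))  # stays below 0: the pendulum never passes the horizontal
```

Any successful swing-up therefore has to pump energy in by swinging back and forth, which is what makes the task a test of longer-horizon behaviour.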

Results: Performance

[Figure: two panels plotting $\varphi$ and $\dot\varphi$ against time (0 to 10 s): “Performance of optimal policy (FVI+KNN on 1000x1000 grid)” and “Performance of maximally empowered policy (3-step)”]

Phase plot of $\varphi$ and $\dot\varphi$ when following the respective greedy policy from the last slide. Note that for $\varphi$, the y-axis shows the height of the pendulum (+1 means upright, the goal state).

Results: “Explored” Space

[Figure: “Empowerment-based Exploration” in the $(\varphi, \dot\varphi)$ plane, $\varphi$ in rad from $-\pi$ to $\pi$ and $\dot\varphi$ in rad/s from $-6$ to $6$, with a legend for Action 0 to Action 4]

Empowerment: Acrobot

(Jung et al., 2011)

Setting

two-linked pendulum

actuation in hip only

Idea

Add LQR control to bang-bang control


Acrobot: Demo


Block’s World

(Salge, 2013)

Properties

scenario with modifiable world

deterministic (i.e. empowerment is $\log n$, where $n$ is the number of states reachable within horizon $k$)

agent can move and can incorporate, place and destroy blocks

estimated via (highly incomplete) sampling

Empowered “Minecrafter”

(Salge, 2013)

Explorer Accompanying Robot

(Glackin et al., 2015)

Consortium

Demonstrator II


Part XI

References


Anthony, T., Polani, D., and Nehaniv, C. L. (2008). On preferred states of agents: how global structure is reflected in local structure. In Bullock, S., Noble, J., Watson, R., and Bedau, M. A., editors, Artificial Life XI: Proceedings of the Eleventh International Conference on the Simulation and Synthesis of Living Systems, Winchester, 5–8 Aug., pages 25–32. MIT Press, Cambridge, MA.

Ashby, W. R. (1956). An Introduction to Cybernetics. Chapman & Hall Ltd.

Ay, N., Bertschinger, N., Der, R., Güttler, F., and Olbrich, E. (2008). Predictive information and explorative behavior of autonomous robots. European Physical Journal B, 63:329–339.

Baylor, D., Lamb, T., and Yau, K. (1979). Response of retinal rods to single photons. Journal of Physiology, London, 288:613–634.

Belavkin, R. (2008). The duality of utility and information in optimally learning systems. In Proc. 7th IEEE International Conference on ’Cybernetic Intelligent Systems’. IEEE Press.

Belavkin, R. V. (2009). Bounds of optimal learning. In Adaptive Dynamic Programming and Reinforcement Learning, 2009. ADPRL’09. IEEE Symposium on, pages 199–204. IEEE.

Biehl, M. (2013). Kullback-Leibler and Bayes. Internal Memo.

Cover, T. M. and Thomas, J. A. (2006). Elements of Information Theory. Wiley, 2nd edition.

Denk, W. and Webb, W. W. (1989). Thermal-noise-limited transduction observed in mechanosensory receptors of the inner ear. Phys. Rev. Lett., 63(2):207–210.

Der, R. (2001). Self-organized acquisition of situated behavior. Theory Biosci., 120:1–9.

Dusenbery, D. B. (1992). Sensory Ecology. W. H. Freeman and Company, New York.

Glackin, C., Salge, C., Trendafilov, D., Greaves, M., Polani, D., Leu, A., Haque, S. J. U., Slavnić, S., and Ristić-Durrant, D. (2015). An information-theoretic intrinsic motivation model for robot navigation and path planning.

Hecht, S., Shlaer, S., and Pirenne, M. (1942). Energy, quanta and vision. Journal of the Optical Society of America, 38:196–208.

Jung, T., Polani, D., and Stone, P. (2011). Empowerment for continuous agent-environment systems. Adaptive Behaviour, 19(1):16–39. Published online 13 January 2011.

Kaplan, F. and Oudeyer, P.-Y. (2004). Maximizing learning progress: an internal reward system for development. In Iida, F., Pfeifer, R., Steels, L., and Kuniyoshi, Y., editors, Embodied Artificial Intelligence, volume 3139 of LNAI, pages 259–270. Springer.

Klyubin, A., Polani, D., and Nehaniv, C. (2007). Representations of space and time in the maximization of information flow in the perception-action loop. Neural Computation, 19(9):2387–2432.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2004a). Organization of the information flow in the perception-action loop of evolved agents. In Proceedings of 2004 NASA/DoD Conference on Evolvable Hardware, pages 177–180. IEEE Computer Society.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2004b). Tracking information flow through the environment: Simple cases of stigmergy. In Pollack, J., Bedau, M., Husbands, P., Ikegami, T., and Watson, R. A., editors, Artificial Life IX: Proceedings of the Ninth International Conference on Artificial Life, pages 563–568. MIT Press.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2005a). All else being equal be empowered. In Advances in Artificial Life, European Conference on Artificial Life (ECAL 2005), volume 3630 of LNAI, pages 744–753. Springer.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2005b). Empowerment: A universal agent-centric measure of control. In Proc. IEEE Congress on Evolutionary Computation, 2–5 September 2005, Edinburgh, Scotland (CEC 2005), pages 128–135. IEEE.

Klyubin, A. S., Polani, D., and Nehaniv, C. L. (2008). Keep your options open: An information-based driving principle for sensorimotor systems. PLoS ONE, 3(12):e4018.

Laughlin, S. B. (2001). Energy as a constraint on the coding and processing of sensory information. Current Opinion in Neurobiology, 11:475–480.

Laughlin, S. B., de Ruyter van Steveninck, R. R., and Anderson, J. C. (1998). The metabolic cost of neural information. Nature Neuroscience, 1(1):36–41.

Nehaniv, C. L., Polani, D., Olsson, L. A., and Klyubin, A. S. (2007). Information-theoretic modeling of sensory ecology: Channels of organism-specific meaningful information. In Laubichler, M. D. and Müller, G. B., editors, Modeling Biology: Structures, Behaviour, Evolution, The Vienna Series in Theoretical Biology, pages 241–281. MIT Press.

Overmier, J. B. and Seligman, M. E. P. (1967). Effects of inescapable shock upon subsequent escape and avoidance responding. Journal of Comparative and Physiological Psychology, 63:28–33.

Paul, C. (2006). Morphological computation: A basis for the analysis of morphology and control requirements. Robotics and Autonomous Systems, 54(8):619–630.

Pfeifer, R. and Bongard, J. (2007). How the Body Shapes the Way We Think: A New View of Intelligence. Bradford Books.

Polani, D. (2009). Information: Currency of life? HFSP Journal, 3(5):307–316.

Polani, D. (2011). An informational perspective on how the embodiment can relieve cognitive burden. In Proc. IEEE Symposium Series in Computational Intelligence 2011, Symposium on Artificial Life, pages 78–85. IEEE.

Polani, D., Nehaniv, C., Martinetz, T., and Kim, J. T. (2006). Relevant information in optimized persistence vs. progeny strategies. In Rocha, L. M., Bedau, M., Floreano, D., Goldstone, R., Vespignani, A., and Yaeger, L., editors, Proc. Artificial Life X, pages 337–343.

Radvansky, G. A., Krawietz, S. A., and Tamplin, A. K. (2011). Walking through doorways causes forgetting: Further explorations. The Quarterly Journal of Experimental Psychology, 64(8):1632–1645.

Saerens, M., Achbany, Y., Fuss, F., and Yen, L. (2009). Randomized shortest-path problems: Two related models. Neural Computation, 21:2363–2404.

Salge, C. (2013). Block’s world. Presented at GSO 2013.

Schmidhuber, J. (1991). A possibility for implementing curiosity and boredom in model-building neural controllers. In Meyer, J. A. and Wilson, S. W., editors, Proc. of the International Conference on Simulation of Adaptive Behavior: From Animals to Animats, pages 222–227. MIT Press/Bradford Books.

Seligman, M. E. P. and Maier, S. F. (1967). Failure to escape traumatic shock. Journal of Experimental Psychology, 74:1–9.

Singh, S., Barto, A. G., and Chentanez, N. (2005). Intrinsically motivated reinforcement learning. In Proceedings of the 18th Annual Conference on Neural Information Processing Systems (NIPS), Vancouver, B.C., Canada.

Steels, L. (2004). The autotelic principle. In Iida, F., Pfeifer, R., Steels, L., and Kuniyoshi, Y., editors, Embodied Artificial Intelligence: Dagstuhl Castle, Germany, July 7–11, 2003, volume 3139 of Lecture Notes in AI, pages 231–242. Springer Verlag, Berlin.

Still, S. and Precup, D. (2012). An information-theoretic approach to curiosity-driven reinforcement learning. Theory in Biosciences, 131(3):139–148.

Stratonovich, R. (1965). On value of information. Izvestiya of USSR Academy of Sciences, Technical Cybernetics, 5:3–12.

Tishby, N. and Polani, D. (2011). Information theory of decisions and actions. In Cutsuridis, V., Hussain, A., and Taylor, J., editors, Perception-Action Cycle: Models, Architecture and Hardware, pages 601–636. Springer.

Touchette, H. and Lloyd, S. (2000). Information-theoretic limits of control. Phys. Rev. Lett., 84:1156.

Touchette, H. and Lloyd, S. (2004). Information-theoretic approach to the study of control systems. Physica A, 331:140–172.

van Dijk, S. and Polani, D. (2011). Grounding subgoals in information transitions. In Proc. IEEE Symposium Series in Computational Intelligence 2011, Symposium on Adaptive Dynamic Programming and Reinforcement Learning, pages 105–111. IEEE.

van Dijk, S. and Polani, D. (2013). Informational constraints-driven organization in goal-directed behavior. Advances in Complex Systems, 16(2–3). Published online 30 April 2013, DOI:10.1142/S0219525913500161.

van Dijk, S. G., Polani, D., and Nehaniv, C. L. (2010). What do you want to do today? Relevant-information bookkeeping in goal-oriented behaviour. In Proc. Artificial Life, Odense, Denmark, pages 176–183.

Vergassola, M., Villermaux, E., and Shraiman, B. I. (2007). ’Infotaxis’ as a strategy for searching without gradients. Nature, 445:406–409.

Wissner-Gross, A. D. and Freer, C. E. (2013). Causal entropic forcing. Physical Review Letters, 110:168702.