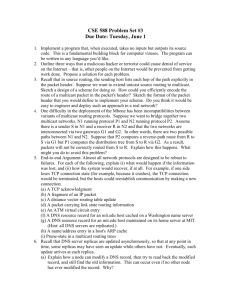

Universal IP Multicast Delivery - Warriors of the Net
