Probability - people.stat.sfu.ca

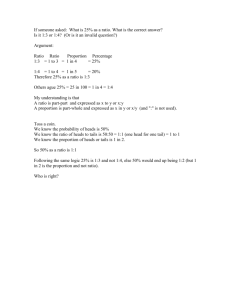


Probability

Role of probability in statistics:

Gather data by a probabilistic (random) mechanism.

Use probability to predict the results of an experiment under assumptions.

Compute the probability of an error larger than a given amount.

Compute the probability of a given departure between prediction and results under the assumptions.

Decide whether or not the assumptions are likely to be realistic.


Outline in randomized clinical trial context: Salk vaccine.

Suppose the vaccine is useless: no cases of polio prevented, none caused.

Between treatment and control there were a total of 56 + 142 = 198 cases.

Each child was assigned to treatment or control with chance 1/2.

Is a 56 to 142 split likely in 198 coin tosses?

Actual chance of 56 or fewer heads in 198 tosses is $4 \times 10^{-10}$ (4 in 10 billion).

Conclusion: random assignment is almost certainly not the explanation for the difference.
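The 4 in 10 billion figure can be checked by exact counting. The sketch below assumes Python's standard library (`math.comb`, `fractions.Fraction`); the helper name `lower_tail` is ours, not from the notes. It adds up the number of ways to get 0 through 56 heads and divides by $2^{198}$, so nothing is rounded until the final print.

```python
from fractions import Fraction
from math import comb

def lower_tail(k_max: int, n: int) -> Fraction:
    """Exact chance of k_max or fewer heads in n tosses of a fair coin."""
    ways = sum(comb(n, k) for k in range(k_max + 1))  # outcomes with at most k_max heads
    return Fraction(ways, 2 ** n)                      # each outcome has chance 1 / 2**n

chance = lower_tail(56, 198)
print(f"{float(chance):.1e}")   # a value close to 4e-10
```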


Chance of exactly 56 heads? $2.6 \times 10^{-10}$.

Chance of exactly 99 heads? 0.056 or so.

No outcome particularly likely.
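To see that no single outcome is particularly likely, one can list the chance of every possible count and pick the largest. A minimal Python sketch (variable names are ours), using exact integer counts divided by $2^{198}$:

```python
from math import comb

n = 198
# chance of exactly k heads in n fair tosses = (# ways) / 2**n
pmf = [comb(n, k) / 2 ** n for k in range(n + 1)]

most_likely = max(range(n + 1), key=lambda k: pmf[k])
print(most_likely, round(pmf[most_likely], 4))   # the single most likely count
print(f"{pmf[56]:.1e}")                          # exactly 56 heads
```

The most likely count is 99, and even it has a chance of only about 0.057.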

Evidence for an effective vaccine: low number of cases in the treatment group.

We judge the evidence against the hypothesis of no vaccine effect by computing:

the probability of getting evidence against the hypothesis as strong as, or stronger than, the evidence we did get, assuming the hypothesis is true.

Literary technique: foreshadowing. We come back to this logic in Chapter 14.


Where did all these numbers come from?

Rules, jargon of probability:

Do a chance (random) experiment: toss a thumbtack on each screen.

Possible outcomes: each tack can land point up or tipped over.

The Sample Space

| Outcome number | Left screen | Right screen | Shorthand |
|---|---|---|---|
| 1 | up | up | UU |
| 2 | up | over | UO |
| 3 | over | up | OU |
| 4 | over | over | OO |

Sample space has 4 elements: {UU, UO, OU, OO}.


Probability: assign to each possible outcome a number, the probability of that outcome.

Rules for the numbers:

all probabilities are greater than or equal to 0.

all probabilities are less than or equal to 1.

the probabilities of all the possible outcomes must add up to 1.

We assign probabilities to other things, like:

Probability the two thumbtacks land opposite to each other? $P(UO) + P(OU)$

P is for probability.
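The three rules and the sum-over-outcomes idea are easy to express in code. This is a sketch with made-up probabilities for the four thumbtack outcomes (they are not from the notes); `is_valid_assignment` and `prob_of_event` are hypothetical helper names.

```python
from fractions import Fraction

def is_valid_assignment(probs: dict) -> bool:
    """Check the rules: every P >= 0, every P <= 1, and the total is exactly 1."""
    values = probs.values()
    return all(0 <= v <= 1 for v in values) and sum(values) == 1

def prob_of_event(probs: dict, event: set) -> Fraction:
    """Chance of an event = sum of the chances of the outcomes in it."""
    return sum((probs[o] for o in event), Fraction(0))

# Made-up numbers, for illustration only.
tacks = {"UU": Fraction(1, 10), "UO": Fraction(2, 10),
         "OU": Fraction(3, 10), "OO": Fraction(4, 10)}

print(is_valid_assignment(tacks))            # True
print(prob_of_event(tacks, {"UO", "OU"}))    # P(UO) + P(OU) = 1/2
```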


BUT: how do we assign the four numbers?

Make simple assumptions.

Use rules of probability.

Simpler experiment: toss a coin on each screen.

Possible outcomes: {HH, HT, TH, TT}

Use interpretation of probability: P(HH) is the long-run fraction of times HH occurs.

In the long run, which of the four possibilities should occur most often?

None. H should be about as common as T on each screen, and when H shows up on the left screen, H and T should be equally common on the right screen.

Argument by symmetry: all four outcomes have the same chance.


So:

$$P(HH) = P(HT) = P(TH) = P(TT) = \frac{1}{4}$$
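The long-run-fraction interpretation can be imitated by simulation. A sketch, assuming Python's `random` module stands in for two fair coins; `long_run_fractions` is our name, and the seed is arbitrary.

```python
import random
from collections import Counter

def long_run_fractions(trials: int, seed: int = 1) -> dict:
    """Toss a fair coin on each of two screens `trials` times and return
    the fraction of trials giving each outcome HH, HT, TH, TT."""
    rng = random.Random(seed)   # seeded so runs are repeatable
    counts = Counter(rng.choice("HT") + rng.choice("HT") for _ in range(trials))
    return {o: counts[o] / trials for o in ("HH", "HT", "TH", "TT")}

print(long_run_fractions(100_000))   # each fraction should be near 0.25
```

With 100,000 simulated trials each fraction typically lands within a few thousandths of 1/4, in line with the symmetry argument.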

Does the same go for thumbtacks?

NO: a thumbtack is not symmetric in the same way.

But: suppose the long-run fraction of U on the left were, say, 1/3 and the long-run fraction of U on the right were, say, 1/4. (Different tacks!)

Long-run fraction of UU would be

$$\frac{1}{3} \times \frac{1}{4}$$

“Of the trials where the left tack turns out U, we should see 1/4 have U on the right screen: no contact/influence between screens.”

Jargon: left and right screen results are independent.


This would lead to

| Outcome | UU | UO | OU | OO |
|---|---|---|---|---|
| Prob | 1/12 | 3/12 | 2/12 | 6/12 |
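The table follows from multiplying the left and right chances outcome by outcome. A minimal sketch using exact fractions, with the 1/3 and 1/4 assumed in the notes; the names are ours:

```python
from fractions import Fraction

p_left_up = Fraction(1, 3)    # long-run fraction of U, left tack
p_right_up = Fraction(1, 4)   # long-run fraction of U, right tack

def side_prob(p_up: Fraction, side: str) -> Fraction:
    """Chance a single tack lands U (up) or O (over)."""
    return p_up if side == "U" else 1 - p_up

# Independence: multiply the left and right chances for each outcome.
table = {l + r: side_prob(p_left_up, l) * side_prob(p_right_up, r)
         for l in "UO" for r in "UO"}

for outcome, prob in table.items():
    print(outcome, prob)
```

`Fraction` reduces automatically, so this prints 1/12, 1/4, 1/6 and 1/2, which are the 1/12, 3/12, 2/12 and 6/12 of the table.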

Where do the 1/3 and 1/4 come from?

Experimentation – empirical measurement.

Hypothesis / theory – as in genetics.

Symmetry (not in this example, though)

Physics, maybe.

Only the empirical method is likely to work in this experiment.


Return to Salk trial. If no vaccine effect then the number of cases in treatment is like the number of heads in 198 tosses of a fair coin. (Treat the number of cases as predestined.)

How do we compute the chance of 56 heads in 198 tosses?

Method 1: exact calculation based on rules of probability.

Leads to Binomial distribution.

Gives answer

$$\frac{198 \times 197 \times \cdots \times 1}{(56 \times \cdots \times 1) \times (142 \times \cdots \times 1)} \times \frac{1}{2^{198}}$$
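Method 1 can be written out literally, because Python integers have no size limit. A sketch, assuming only the standard library; the variable names are ours:

```python
from fractions import Fraction
from math import comb, factorial

n, k = 198, 56

# Method 1 as written: 198! / (56! x 142!) x 1 / 2**198, using exact integers
ways = factorial(n) // (factorial(k) * factorial(n - k))
chance = Fraction(ways, 2 ** n)

assert ways == comb(n, k)        # same count from the library helper
print(f"{float(chance):.1e}")    # close to the 2.6e-10 quoted earlier
```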


Commentary:

Uselessly difficult to compute, even with calculator.

Care needed to compute accurately on a computer.

Can do just as well with a normal approximation.

Method two: use central limit theorem.

Next: some details of Method 1.

Then: details of Method 2.
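The remark about needing care on a computer can be made concrete. In ordinary floating point, 198! (about $10^{370}$) overflows; one common remedy, shown here as a sketch rather than the notes' method, is to add logarithms using `math.lgamma` and exponentiate only at the end.

```python
import math

n, k, p = 198, 56, 0.5

# Naive floating point: 198! is about 10**370, far beyond the largest double (~1.8e308).
try:
    naive = float(math.factorial(n)) / (float(math.factorial(k)) * float(math.factorial(n - k)))
    print("naive:", naive)
except OverflowError as err:
    print("naive version fails:", err)

# Safer: add logarithms, then exponentiate once at the end.
log_chance = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
              + k * math.log(p) + (n - k) * math.log(1 - p))
chance = math.exp(log_chance)
print(f"{chance:.1e}")
```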


Some examples to illustrate ideas underlying binomial distribution.

Toss coin twice.

The Sample Space

| Toss 1 | Toss 2 | # Heads |
|---|---|---|
| T | T | 0 |
| T | H | 1 |
| H | T | 1 |
| H | H | 2 |


Convert to chances for 0, 1, 2 heads:

Distribution of Number of Heads

| # Heads | Probability |
|---|---|
| 0 | 1/4 |
| 1 | 2/4 |
| 2 | 1/4 |

Notice: $2^2 = 4$ possible outcomes.

All chances of form: # of ways divided by 4.


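Next: three tosses.

The Sample Space

| Toss 1 | Toss 2 | Toss 3 | # Heads | Prob |
|---|---|---|---|---|
| T | T | T | 0 | 1/8 |
| T | T | H | 1 | 1/8 |
| T | H | T | 1 | 1/8 |
| H | T | T | 1 | 1/8 |
| T | H | H | 2 | 1/8 |
| H | T | H | 2 | 1/8 |
| H | H | T | 2 | 1/8 |
| H | H | H | 3 | 1/8 |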


Distribution of Number of Heads

| # Heads | Probability |
|---|---|
| 0 | 1/8 |
| 1 | 3/8 |
| 2 | 3/8 |
| 3 | 1/8 |

Notice: $2^3 = 8$ possible outcomes.

All chances of form: # ways / 8.


In general: toss coin n times.

$2^n$ possible outcomes.

Each has chance $1/2^n$.

Count up the number of ways to get the desired number of heads.

Chance of that many heads is # ways / $2^n$.
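The counting recipe can be carried out by brute force: list all $2^n$ sequences, count the heads in each, and divide the tallies by $2^n$. A sketch (our function name `heads_distribution`); it is only practical for small n, since the list doubles with every toss.

```python
from fractions import Fraction
from itertools import product

def heads_distribution(n: int) -> list:
    """Chance of 0, 1, ..., n heads in n fair tosses, by listing all 2**n outcomes."""
    ways = [0] * (n + 1)
    for outcome in product("HT", repeat=n):      # every sequence, e.g. ('H', 'T', 'T')
        ways[outcome.count("H")] += 1             # tally the ways to get each number of heads
    return [Fraction(w, 2 ** n) for w in ways]    # chance = # ways / 2**n

print([str(f) for f in heads_distribution(2)])   # ['1/4', '1/2', '1/4']
print([str(f) for f in heads_distribution(3)])   # ['1/8', '3/8', '3/8', '1/8']
```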


What if it had been a thumbtack?

More difficult to compute chances.

Use rules:

Jargon: “get 56 heads” is an event, the collection of all outcomes where there are 56 heads and 142 tails.

Simpler case: toss coin three times.

Some events:

| Event | Outcomes |
|---|---|
| Get 2 heads | {HHT, HTH, THH} |
| Get 0 heads | {TTT} |
| Get at least 2 heads | {HHT, HTH, THH, HHH} |
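Events are just sets of outcomes, so Python sets model them directly. A sketch for three fair tosses, where each outcome has chance 1/8; the names are ours:

```python
from fractions import Fraction
from itertools import product

# Sample space for three tosses: all 8 strings such as "HHT".
S = {"".join(t) for t in product("HT", repeat=3)}

# Events are subsets of S.
two_heads = {o for o in S if o.count("H") == 2}
no_heads = {o for o in S if o.count("H") == 0}
at_least_two = {o for o in S if o.count("H") >= 2}

def P(event: set) -> Fraction:
    """Fair coin: every outcome has chance 1/8, so P(event) = size / 8."""
    return Fraction(len(event), len(S))

print(sorted(two_heads), P(two_heads))         # ['HHT', 'HTH', 'THH'] 3/8
print(sorted(at_least_two), P(at_least_two))   # ['HHH', 'HHT', 'HTH', 'THH'] 1/2
```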


Rules of probability: in the following, A and B are shorthand for two events. S is the collection of all possible outcomes, the sample space.

1) $0 \le P(A) \le 1$

2) $P(S) = 1$.

3) P(A doesn’t happen) $= 1 - P(A)$.

4) If it is impossible for both A and B to happen at the same time, and C is the event “either A happens or B happens or both happen”, then $P(C) = P(A) + P(B)$.

5) If A and B are independent and D is the event “both A and B happen”, then $P(D) = P(A) \times P(B)$.

4) is the addition rule

5) is the multiplication rule.
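The five rules can be checked on the three-toss sample space, where every outcome has chance 1/8. A sketch using exact fractions; the particular events chosen are our own examples.

```python
from fractions import Fraction
from itertools import product

S = {"".join(t) for t in product("HT", repeat=3)}   # 8 equally likely outcomes

def P(event: set) -> Fraction:
    """Fair coin: each outcome has chance 1/8."""
    return Fraction(len(event), len(S))

A = {o for o in S if o.count("H") == 0}   # get 0 heads
B = {o for o in S if o.count("H") >= 2}   # get at least 2 heads

assert all(0 <= P({o}) <= 1 for o in S)          # rule 1
assert P(S) == 1                                 # rule 2
assert P(S - A) == 1 - P(A)                      # rule 3: A doesn't happen
assert not (A & B) and P(A | B) == P(A) + P(B)   # rule 4: A, B can't both happen

first_H = {o for o in S if o[0] == "H"}          # toss 1 lands H
second_H = {o for o in S if o[1] == "H"}         # toss 2 lands H (independent of toss 1)
assert P(first_H & second_H) == P(first_H) * P(second_H)   # rule 5
print("all five rules check out")
```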


Independent means: whether or not A happens doesn’t influence the chance B happens.

Example: back to two thumbtacks.

A is “left tack lands U”.

B is “right tack lands U”.

Suppose $P(A) = 1/3$ and $P(B) = 1/4$.

If I tell you A happened, then you should still think the probability that B will happen is 1/4: there is no way for the result of the left toss to influence the result of the right toss.

D is the event both A and B happen, that is, get UU.

Then

$$P(D) = P(A) \times P(B) = \frac{1}{3} \times \frac{1}{4} = \frac{1}{12}$$
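Independence can also be checked by simulation. The sketch below assumes each tack behaves like a random draw that lands U with chance 1/3 (left) or 1/4 (right), drawn separately so neither can influence the other; `simulate_tacks` is our name.

```python
import random

def simulate_tacks(trials: int, p_left: float = 1/3, p_right: float = 1/4, seed: int = 2):
    """Toss two tacks independently; assumption: each lands U with the given chance."""
    rng = random.Random(seed)
    n_A = n_AB = 0
    for _ in range(trials):
        a = rng.random() < p_left     # event A: left tack lands U
        b = rng.random() < p_right    # event B: right tack lands U, drawn separately
        n_A += a
        n_AB += a and b
    return n_AB / trials, n_AB / n_A  # estimate of P(D), and of P(B) among trials with A

p_D, p_B_given_A = simulate_tacks(200_000)
print(round(p_D, 3), round(p_B_given_A, 3))   # near 1/12 = 0.083 and 1/4 = 0.25
```

Knowing A happened leaves the frequency of B near 1/4, which is what "independent" means here.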


Recognizing use of Binomial distribution:

1) repeat the same basic experiment a fixed number, n say, of times.

2) each trial results either in something happening (called “SUCCESS” or ‘S’) or not happening (“FAILURE” or ‘F’);

3) trials independent: the outcome of one does not influence any other.

4) prob of success on each trial is the same, say p.

If so then

$$P(\text{exactly } k \text{ successes}) = \frac{n!}{k!\,(n-k)!}\, p^k (1-p)^{n-k}$$

Notation in other books:

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!} = {}_nC_k = C_k^n.$$
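The formula translates directly into code, with `math.comb(n, k)` supplying $n!/(k!\,(n-k)!)$. A sketch; `binom_pmf` is our name, not a library function, and this simple float version can overflow for very large n.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """P(exactly k successes) in n independent trials, each with success chance p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)   # comb(n, k) = n! / (k! (n - k)!)

# Fair coin, 3 tosses: should match the 1/8, 3/8, 3/8, 1/8 table.
print([binom_pmf(k, 3, 0.5) for k in range(4)])    # [0.125, 0.375, 0.375, 0.125]
# Thumbtack with P(U) = 1/4, tossed twice (U counted as success).
print([binom_pmf(k, 2, 0.25) for k in range(3)])   # [0.5625, 0.375, 0.0625]
```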


In this course: we don’t memorize the formula.

How is the formula derived? Use the rules of probability above and combinatorics, or counting.

Graphical presentation of binomial probabilities: draw a histogram. Area of bar = chance of corresponding value.

Example: toss coin twice; n = 2, p = 1/2.

[Figure: binomial probability histogram, n = 2, p = 1/2.]


Total area of all bars is 1.
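Because each bar has width 1, its area equals its height, and the total area is the sum of the binomial chances. A sketch that checks this for the n and p values used in the histograms (function name ours):

```python
from math import comb

def bar_heights(n: int, p: float) -> list:
    """Heights of the histogram bars; each bar has width 1, so height = area = chance."""
    return [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]

for p in (0.5, 0.25):
    for n in (2, 3, 16, 64, 256):
        total = sum(bar_heights(n, p))      # total area of all bars
        print(p, n, round(total, 12))       # 1 up to floating-point rounding
```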

[Figure: binomial probability histograms for p = 0.5 with n = 3, 16, 64 and 256.]

Now try p = 1/4.

[Figure: binomial probability histograms for p = 1/4 with n = 2, 3, 16, 64 and 256.]

Notice increasing symmetry.

Notice general shape of normal curve.

Now superimpose normal curves!

Idea: compute probabilities by adding up areas of bars, or make a normal approximation.

To do so: need the mean and standard deviation of the histogram!

Mean is $\mu = np$.

SD is $\sigma = \sqrt{np(1-p)}$.
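The formulas $\mu = np$ and $\sigma = \sqrt{np(1-p)}$ can be checked numerically by computing the mean and SD of the histogram directly from its bar heights. A sketch; the case n = 64, p = 1/4 is just one of the plotted ones, and the function name is ours.

```python
from math import comb, sqrt

def histogram_mean_sd(n: int, p: float):
    """Mean and SD computed directly from the binomial bar heights."""
    probs = [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]
    mean = sum(k * q for k, q in enumerate(probs))
    var = sum((k - mean) ** 2 * q for k, q in enumerate(probs))
    return mean, sqrt(var)

n, p = 64, 0.25
print(histogram_mean_sd(n, p))        # from the histogram
print(n * p, sqrt(n * p * (1 - p)))   # from the formulas: 16.0 and sqrt(12), about 3.464
```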


Superimpose normal curves. p = 0.5

[Figure: binomial probability histograms for p = 0.5 with normal curves superimposed, n = 2, 3, 16, 64 and 256.]

p = 0.25

[Figure: binomial probability histograms for p = 0.25 with normal curves superimposed, n = 2, 3, 16, 64 and 256.]

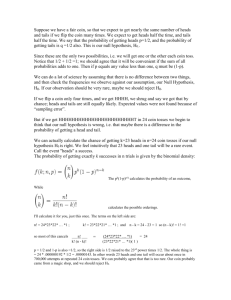

Making normal approximations to compute binomial probabilities:

1) Identify the range.

2) Compute the mean and standard deviation.

3) Convert the range to standard deviation units.

4) Look up the area in the normal table.
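The four steps map onto a short function. This sketch uses `math.erf` to get normal areas in place of a printed table; `normal_cdf` and `normal_approx` are our names. The ±0.5 adjustment follows the bar-edge reasoning in the example that follows in the notes.

```python
from math import erf, sqrt

def normal_cdf(z: float) -> float:
    """Area under the standard normal curve to the left of z (replaces the table)."""
    return 0.5 * (1 + erf(z / sqrt(2)))

def normal_approx(a: int, b: int, n: int, p: float) -> float:
    """Approximate P(a <= number of successes <= b) for a Binomial(n, p) count."""
    lo, hi = a - 0.5, b + 0.5                            # 1) range: edges of the bars
    mu, sigma = n * p, sqrt(n * p * (1 - p))             # 2) mean and SD
    z_lo, z_hi = (lo - mu) / sigma, (hi - mu) / sigma    # 3) standard units
    return normal_cdf(z_hi) - normal_cdf(z_lo)           # 4) area between

print(round(normal_approx(1, 2, 3, 0.5), 4))   # 1 or 2 heads in 3 tosses
```

For 1 or 2 heads in 3 tosses this prints about 0.752. The 0.7499 in the notes comes from rounding the standard units to ±1.15 before using the table; both are close to the exact 0.75.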


Example:

Probability of 1 or 2 heads in 3 tosses of a fair coin.

1) Range is 0.5 to 2.5. Reason for the halves: the edges of the bars are at −0.5, 0.5, 1.5, and so on.

2) Mean is $\mu = np = 3 \times 0.5 = 1.5$.

SD is $\sigma = \sqrt{3 \times 0.5 \times (1 - 0.5)} = 0.866$.

3) Convert to standard deviation units:

$$\frac{0.5 - 1.5}{0.866} \text{ to } \frac{2.5 - 1.5}{0.866}$$

or −1.15 to 1.15.

4) Look up area: 0.7499 ≈ 0.75.

Correct answer is 3/4 = 0.75.


Salk vaccine examples.

If the vaccine is ineffective then the number of polio cases in the treatment group is like the number of heads in 198 tosses of a fair coin.

That is: Binomial distribution for number of cases in treatment.

Reasoning: the 198 cases are destined regardless of the outcome of the randomization. Each case is assigned to treatment with the same chance, 1/2. The cases are assigned independently.

Chance of 56 heads or fewer in 198 tosses:

Limits: 56.5 or fewer.

Mean is $\mu = 198 \times 0.5 = 99$.

SD is $\sigma = \sqrt{198 \times 0.5 \times 0.5} = 7.04$.


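Convert 56.5 to standard deviation units:

$$\frac{56.5 - 99}{7.04} = -6.04$$

Off the end of the tables!

Chance ≤ 0.0003 from tables.

Normal area computed with software: about $7.7 \times 10^{-10}$. (The exact binomial chance, given earlier, is about $4 \times 10^{-10}$; this far out in the tail the normal approximation is rough.)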

Interpretation: either the hypothesis above (where I wrote ‘if’) is wrong or something extremely unlikely has happened; conclude the hypothesis of no treatment effect is wrong.
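Software can compute both the normal area and the exact binomial chance for the Salk numbers. A sketch using `math.erfc`, which keeps tiny tail areas accurate where a `1 - erf(...)` style calculation loses digits; the variable names are ours.

```python
from math import comb, erfc, sqrt

n, p, k_max = 198, 0.5, 56

mu, sigma = n * p, sqrt(n * p * (1 - p))   # 99 and about 7.04
z = (k_max + 0.5 - mu) / sigma             # about -6.04
normal_area = 0.5 * erfc(-z / sqrt(2))     # area to the left of z

exact = sum(comb(n, k) for k in range(k_max + 1)) / 2 ** n   # exact binomial tail

print(f"z = {z:.2f}")
print(f"normal approximation: {normal_area:.1e}")
print(f"exact binomial:       {exact:.1e}")
```

The normal area comes out near $7.7 \times 10^{-10}$, while the exact binomial sum is a bit over $4 \times 10^{-10}$: this far into the tail the normal curve overstates the chance by almost a factor of 2, though both are tiny and lead to the same conclusion.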


Why not compute the chance of exactly 56 heads instead of 56 or fewer?

Another example:

Imagine tossing a coin 10,000 times. Chance of exactly 5,000 heads?

Range is 4999.5 to 5000.5.

Mean is $\mu = 5000$.

SD is $\sigma = \sqrt{10000 \times 0.5 \times 0.5} = 50$.

Convert range to standard units:

$$\frac{4999.5 - 5000}{50} \text{ to } \frac{5000.5 - 5000}{50}$$


This is -0.01 to 0.01 so chance is approximately 0.0080.
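The 0.0080 can be compared with the exact binomial chance. A sketch that works on the log scale with `math.lgamma`, since $2^{10000}$ is far beyond floating-point range; variable names are ours.

```python
from math import erf, exp, lgamma, log, sqrt

n, k = 10_000, 5_000

# Exact binomial chance via logarithms: log(n! / (k! k!)) + n log(1/2).
log_exact = lgamma(n + 1) - 2 * lgamma(k + 1) + n * log(0.5)
exact = exp(log_exact)

# Normal approximation: area between 4999.5 and 5000.5, i.e. -0.01 to 0.01 SDs.
sigma = sqrt(n * 0.5 * 0.5)
z = 0.5 / sigma
approx = erf(z / sqrt(2))   # equals Phi(z) - Phi(-z)

print(f"{exact:.4f} {approx:.4f}")   # both about 0.0080
```

Here the normal approximation and the exact chance agree to several decimal places.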

Notice: even the most likely outcome is not very likely!

In hypothesis testing we compute the chance of results “as extreme as or more extreme than” the results we actually got, assuming some hypothesis is true. If the chance comes out small we conclude the hypothesis is (likely) not true.

