Chapter 8

8.1 Not binomial: There is no fixed number of trials n (i.e., there is no definite upper limit on the number of defects), and the different types of defects have different probabilities.

8.2 Yes: (1) “Success” means the person says “Yes” and “failure” means the person says “No.” (2) We have a fixed number of observations (n = 100). (3) It is reasonable to believe that each response is independent of the others. (4) It is reasonable to believe each response has the same probability of “success” (saying “Yes”) since the individuals are randomly chosen from a large city.

8.3 Yes: (1) “Success” means reaching a live person and “failure” is any other outcome. (2) We have a fixed number of observations (n = 15). (3) It is reasonable to believe that each call is independent of the others. (4) Each randomly dialed number has chance p = 0.2 of reaching a live person.

8.4 Not binomial: There is no fixed number of attempts (n).

8.5 Not binomial: Because the student receives instruction after incorrect answers, her probability of success is likely to increase from question to question, so the probability of success is not the same on every trial.

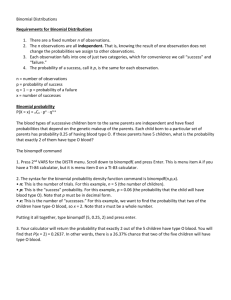

8.6 The number who say they never have time to relax has (approximately) a binomial

distribution with parameters n = 500 and p = 0.14. 1) “Success” means the respondent “never

has time to relax” and “failure” means the respondent “has time to relax.” (This is a good

example to point out why “success” and “failure” should be referred to as labels.) 2) We have a

fixed number of observations (n = 500). 3) It is reasonable to believe each response is

independent of the others. 4) The probability of “success” may vary from individual to

individual (think about retired individuals versus parents versus students), but the opinion polls

provide a reasonable approximation for the probability in the entire population.

8.7 Let X = the number of children with type O blood. X is B(5, 0.25).

$P(X = 3) = \binom{5}{3}(0.25)^3(0.75)^2 = 10(0.25)^3(0.75)^2 \approx 0.0879$
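The binomial formula used above can be checked numerically. This is a minimal sketch of what the calculator's binompdf reports; `binom_pmf` is a hypothetical helper name, not a library function.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ B(n, p), i.e. C(n, k) * p**k * (1 - p)**(n - k)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Exercise 8.7: X ~ B(5, 0.25), probability that exactly 3 children have type O blood
print(round(binom_pmf(3, 5, 0.25), 4))
```

The same helper reproduces 8.8 as `binom_pmf(0, 10, 0.05) + binom_pmf(1, 10, 0.05)`.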

8.8 Let X = the number of broccoli plants that you lose. X is B(10, 0.05).

$P(X \le 1) = P(X = 0) + P(X = 1) = \binom{10}{0}(0.05)^0(0.95)^{10} + \binom{10}{1}(0.05)^1(0.95)^9 = (0.95)^{10} + 10(0.05)(0.95)^9 \approx 0.9139$

8.9 Let X = the number of children with blood type O. X is B(5, 0.25).

$P(X \ge 1) = 1 - P(X = 0) = 1 - \binom{5}{0}(0.25)^0(0.75)^5 = 1 - (0.75)^5 \approx 1 - 0.2373 = 0.7627$

8.10 Let X = the number of players who graduate. X is B(20, 0.8).

The Binomial and Geometric Distributions


(a) $P(X = 11) = \binom{20}{11}(0.8)^{11}(0.2)^9 \approx 0.0074$ (b) $P(X = 20) = \binom{20}{20}(0.8)^{20}(0.2)^0 \approx 0.0115$ (c) $P(X < 20) = 1 - P(X = 20) \approx 1 - 0.0115 = 0.9885$

8.11 Let X = the number of Hispanics on the committee. X is B(15, 0.3).

(a) $P(X = 3) = \binom{15}{3}(0.3)^3(0.7)^{12} \approx 0.1701$ (b) $P(X = 0) = \binom{15}{0}(0.3)^0(0.7)^{15} \approx 0.0047$

8.12 Let X = the number of men called. X is B(30, 0.7).

(a) $P(X = 20) = \binom{30}{20}(0.7)^{20}(0.3)^{10} \approx 0.1416$ (b) P(1st woman is the 4th call) $= (0.7)^3(0.3) = 0.1029$.

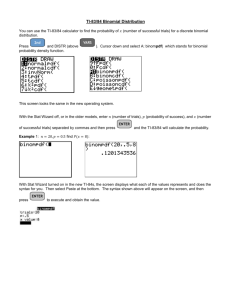

8.13 Let X = the number of children with blood type O. X is B(5, 0.25). (a) P(X = 2) = binompdf(5, 0.25, 2) ≈ 0.2637. (b) A table with the values of X, the pdf, and the cdf is shown below.

| X | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| pdf P(X) | 0.2373 | 0.3955 | 0.2637 | 0.0879 | 0.0146 | 0.0010 |
| cdf F(X) | 0.2373 | 0.6328 | 0.8965 | 0.9844 | 0.9990 | 1.0000 |

(c) The probabilities given in the table above for P(X) add to 1. (d) A probability histogram is

shown below on the left.

[Figure: probability histogram of the count of children with type O blood, 0 to 5; vertical axis: Probability.]

(e) See the table above for the cumulative probabilities. Cumulative distribution histograms are

shown below for the number of children with type O blood (left) and the number of free throws

made (right). Both cumulative distributions show bars that “step up” to one, but the bars in the

cumulative histogram for the number of children with type O blood get taller sooner. That is,

there are fewer steps and the steps are bigger.

[Figure: cumulative probability histograms for the count of children with type O blood, 0 to 5 (left), and the count of free throws made, 0 to 12 (right); vertical axis: Cumulative probability.]
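The pdf and cdf rows of the 8.13 table can be generated together, since the cdf is a running sum of the pdf (the same idea as cumSum on the TI-83/84). A minimal sketch; the helper name is hypothetical.

```python
from itertools import accumulate
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ B(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 5, 0.25
pdf = [binom_pmf(k, n, p) for k in range(n + 1)]
cdf = list(accumulate(pdf))  # running sum gives P(X <= k)

print("X   pdf     cdf")
for k, (f, F) in enumerate(zip(pdf, cdf)):
    print(f"{k}   {f:.4f}  {F:.4f}")
```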

8.14 Let X = the number of correct answers. X is B(50, 0.5). (a) P(X ≥ 25) = 1 − P(X ≤ 24) = 1 − binomcdf(50, 0.5, 24) ≈ 1 − 0.4439 = 0.5561. (b) P(X ≥ 30) = 1 − P(X ≤ 29) = 1 − binomcdf(50, 0.5, 29) ≈ 1 − 0.8987 = 0.1013. (c) P(X ≥ 32) = 1 − P(X ≤ 31) = 1 − binomcdf(50, 0.5, 31) ≈ 1 − 0.9675 = 0.0325.
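The complement rule P(X ≥ k) = 1 − P(X ≤ k − 1) used in 8.14 can be sketched directly. Here `binom_cdf` is a hypothetical stand-in for binomcdf that simply sums pmf terms.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ B(n, p): sum of pmf terms for 0..k (like binomcdf)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))


def binom_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) via the complement rule: 1 - P(X <= k - 1)."""
    return 1 - binom_cdf(k - 1, n, p)


# Exercise 8.14: X ~ B(50, 0.5)
for k in (25, 30, 32):
    print(f"P(X >= {k}) = {binom_at_least(k, 50, 0.5):.4f}")
```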

8.15 (a) Let X = the number of correct answers. X is B(10, 0.25). The probability of at least one correct answer is P(X ≥ 1) = 1 − P(X = 0) = 1 − binompdf(10, 0.25, 0) ≈ 1 − 0.0563 = 0.9437. (b) Let X = the number of correct answers. P(X ≥ 1) = 1 − P(X = 0). P(X = 0) is the probability of getting none of the questions correct, that is, every question wrong. Note that X is not a binomial random variable, because each question has a different probability of success. The probability of getting the first question wrong is 2/3, the second question wrong is 3/4, and the third question wrong is 4/5. Because Erin is guessing, her responses to different questions are independent, so the probability of getting all of the questions wrong is P(X = 0) = (2/3) × (3/4) × (4/5) = 0.4. Thus, P(X ≥ 1) = 1 − P(X = 0) = 1 − 0.4 = 0.6.
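Because the three questions in 8.15(b) have different success probabilities, the calculation is a product of independent probabilities rather than a binomial formula. A short sketch with exact fractions:

```python
from fractions import Fraction
from math import prod

# Probability Erin guesses each of the three questions wrong (from 8.15(b))
p_wrong = [Fraction(2, 3), Fraction(3, 4), Fraction(4, 5)]

# Independent guesses, so multiply; the probabilities differ, so this is not binomial
p_none_correct = prod(p_wrong)
p_at_least_one = 1 - p_none_correct
print(p_none_correct, p_at_least_one)
```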

8.16 (a) Yes: (1) “Success” means having an incarcerated parent and “failure” is not having an incarcerated parent. (2) We have a fixed number of observations (n = 100). (3) It is reasonable to believe that the responses of the children are independent. (4) Each randomly selected child has probability p = 0.02 of having an incarcerated parent. (b) P(X = 0) is the probability that none of the 100 selected children has an incarcerated parent. P(X = 0) = binompdf(100, 0.02, 0) ≈ 0.1326 and P(X = 1) = binompdf(100, 0.02, 1) ≈ 0.2707. (c) P(X ≥ 2) = 1 − P(X ≤ 1) = 1 − binomcdf(100, 0.02, 1) ≈ 1 − 0.4033 = 0.5967. Alternatively, by the addition rule for mutually exclusive events, P(X ≥ 2) = 1 − (P(X = 0) + P(X = 1)) ≈ 1 − (0.1326 + 0.2707) = 1 − 0.4033 = 0.5967.

8.17 Let X = the number of players who graduate. X is B(20, 0.8). (a) P(X = 11) = binompdf(20, 0.8, 11) ≈ 0.0074. (b) P(X = 20) = binompdf(20, 0.8, 20) = $(0.8)^{20}$ ≈ 0.0115. (c) P(X ≤ 19) = 1 − P(X = 20) ≈ 1 − 0.0115 = 0.9885.

8.18 (a) n = 10 and p = 0.25. (b) $P(X = 2) = \binom{10}{2}(0.25)^2(0.75)^8$ = binompdf(10, 0.25, 2) ≈ 0.2816. (c) P(X ≤ 2) = P(X = 0) + P(X = 1) + P(X = 2) = binomcdf(10, 0.25, 2) ≈ 0.5256.


8.19 (a) np = 2500 × 0.6 = 1500 and n(1 − p) = 2500 × 0.4 = 1000; both values are greater than 10, so the conditions are satisfied. (b) Let X = the number of people in the sample who find shopping frustrating. X is B(2500, 0.6). Then P(X ≥ 1520) = 1 − P(X ≤ 1519) = 1 − binomcdf(2500, 0.6, 1519) = 1 − 0.7868609113 = 0.2131390887, which is 0.213139 correct to six decimal places. (c) P(X ≤ 1468) = binomcdf(2500, 0.6, 1468) ≈ 0.0994. Using the Normal approximation to the binomial, P(X ≤ 1468) ≈ 0.0957, a difference of 0.0037.
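For n as large as 2500, computing C(n, k) p^k (1 − p)^(n − k) naively in floating point breaks down: the binomial coefficient is too large to convert to a float and p^k underflows. One common workaround, sketched below as an illustration (not the calculator's internal method), is to compute each term on the log scale with `math.lgamma` and then exponentiate. The helper names are hypothetical.

```python
from math import exp, lgamma, log


def binom_logpmf(k: int, n: int, p: float) -> float:
    """log P(X = k) for X ~ B(n, p), using lgamma for log C(n, k)."""
    log_choose = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return log_choose + k * log(p) + (n - k) * log(1 - p)


def binom_cdf_large(k: int, n: int, p: float) -> float:
    """P(X <= k), summing exponentiated log-scale pmf terms."""
    return sum(exp(binom_logpmf(j, n, p)) for j in range(k + 1))


n, p = 2500, 0.6
print(n * p > 10 and n * (1 - p) > 10)             # rule-of-thumb check, part (a)
print(round(1 - binom_cdf_large(1519, n, p), 6))  # P(X >= 1520), part (b)
print(round(binom_cdf_large(1468, n, p), 4))      # P(X <= 1468), part (c)
```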

8.20 Let X be the number of 1’s and 2’s; then X has a binomial distribution with n = 90 and p = 0.477 (in the absence of fraud). Using the calculator or software, we find P(X ≤ 29) = binomcdf(90, 0.477, 29) ≈ 0.0021. Using the Normal approximation (the conditions are satisfied), we find a mean of 42.93 and standard deviation of $\sigma = \sqrt{90 \times 0.477 \times 0.523} \approx 4.7384$. Therefore,

$P(X \le 29) \approx P\left(Z \le \frac{29 - 42.93}{4.7384}\right) = P(Z \le -2.94) = 0.0016$.

Either way, the probability is quite small, so we have reason to be suspicious.
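As a cross-check on 8.20, Python's standard `statistics.NormalDist` can stand in for the Normal table, and the exact binomial sum is small enough to compute directly for n = 90. This sketch follows the text's setup (n = 90, p = 0.477, no continuity correction); the variable names are illustrative.

```python
from math import comb, sqrt
from statistics import NormalDist

n, p = 90, 0.477
mu = n * p
sigma = sqrt(n * p * (1 - p))

# Exact binomial P(X <= 29); math.comb handles these sizes comfortably
exact = sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(30))

# Normal approximation without a continuity correction, as in the text
approx = NormalDist(mu, sigma).cdf(29)

print(f"mu = {mu:.2f}, sigma = {sigma:.4f}")
print(f"exact = {exact:.4f}, Normal approximation = {approx:.4f}")
```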

8.21 (a) The mean is $\mu_X = np = 20 \times 0.8 = 16$. (b) The standard deviation is $\sigma_X = \sqrt{(20)(0.8)(0.2)} = \sqrt{3.2} \approx 1.7889$. (c) If p = 0.9 then $\sigma_X = \sqrt{20 \times 0.9 \times 0.1} \approx 1.3416$, and if p = 0.99 then $\sigma_X = \sqrt{20 \times 0.99 \times 0.01} \approx 0.4450$. As the probability of “success” gets closer to 1, the standard deviation decreases. (Note that as p approaches 1, the probability histogram of the binomial distribution becomes increasingly skewed, and thus there is less and less chance of getting an observation an appreciable distance from the mean.)
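The trend in 8.21(c) is easy to tabulate: with n fixed at 20, the standard deviation $\sqrt{np(1-p)}$ shrinks as p moves toward 1. A minimal sketch (the helper name is hypothetical):

```python
from math import sqrt


def binom_mean_sd(n: int, p: float) -> tuple[float, float]:
    """Mean np and standard deviation sqrt(np(1 - p)) of B(n, p)."""
    return n * p, sqrt(n * p * (1 - p))


for p in (0.8, 0.9, 0.99):
    mean, sd = binom_mean_sd(20, p)
    print(f"p = {p}: mean = {mean:.2f}, sd = {sd:.4f}")
```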

8.22 If H is the number of home runs, with a binomial(n = 509, p = 0.116) distribution, then H has mean $\mu_H = np = 509 \times 0.116 = 59.044$ and standard deviation $\sigma_H = \sqrt{509 \times 0.116 \times 0.884} \approx 7.2246$ home runs. Therefore,

$P(H \ge 70) \approx P\left(Z \ge \frac{70 - 59.044}{7.2246}\right) = P(Z \ge 1.52) = 0.0643$.

Using a calculator or software, we find that the exact value is 1 − binomcdf(509, 0.116, 69) = 0.0763741347, or about 0.0764.

8.23 (a) Let X = the number of people in the sample of 400 adults from Richmond who approve

of the President’s response. The count X is approximately binomial. 1) “Success” means the

respondent “approves” and “failure” means the respondent “does not approve.” 2) We have a

fixed number of observations (n = 400). 3) It is reasonable to believe each response is

independent of the others. 4) The probability of “success” may vary from individual to

individual (think about people with affiliations in different political parties), but the national

survey will provide a reasonable approximate probability for the entire nation. (b) P(X ≤ 358) = binomcdf(400, 0.92, 358) ≈ 0.0441. (c) The expected number of approvals is $\mu_X = 400 \times 0.92 = 368$ and the standard deviation is $\sigma_X = \sqrt{400 \times 0.92 \times 0.08} = \sqrt{29.44} \approx 5.4259$ approvals. (d) Using the Normal approximation,

$P(X \le 358) \approx P\left(Z \le \frac{358 - 368}{5.4259}\right) = P(Z \le -1.84) = 0.0329$,

a difference of 0.0112. The approximation is not very accurate, but note that p is close to 1, so the exact distribution is skewed.

8.24 (a) The mean is $\mu_X = np = 1500 \times 0.12 = 180$ blacks and the standard deviation is $\sigma_X = \sqrt{1500 \times 0.12 \times 0.88} \approx 12.5857$ blacks. The Normal approximation is quite safe: n × p = 180 and n × (1 − p) = 1320 are both more than 10. We compute

$P(165 \le X \le 195) \approx P\left(\frac{165 - 180}{12.5857} \le Z \le \frac{195 - 180}{12.5857}\right) = P(-1.19 \le Z \le 1.19) \approx 0.7660$.

(Exact computation of this probability with a calculator or software gives 0.7820.)

8.25 The command cumSum (L2) → L3 calculates and stores the values of P(X ≤ x) for x = 0, 1,

2, …, 12. The entries in L3 and the entries in L4 defined by binomcdf(12,0.75, L1) → L4 are

identical.

8.26 (a) Answers will vary. The observations for one simulation are: 0, 0, 4, 0, 1, 0, 1, 0, 0, and 1, with a sum of 7. For these data, the average is $\bar{x} = 0.7$. Continuing this simulation, 10 sample means were obtained: 0.7, 0.6, 0.6, 1.0, 1.4, 1.5, 1.0, 0.9, 1.2, and 0.8. The mean of these sample means is 0.97, which is close to 1, and the standard deviation of these means is 0.316, which is close to $0.9487/\sqrt{10} = 0.3$. (Note: Another simulation produced sample means of 0.8, 0.9, 0.5, 0.9, 1.4, 0.5, 1.6, 0.5, 1.0, and 1.8, which have an average of 0.99 and a standard deviation of 0.468. The standard deviation of the sample means varies noticeably from one simulation to the next.) (b) For n = 25, one simulation produced sample means of 1.5, 2.2, 3.2, 2.1, 3.2, 1.7, 2.6, 2.7, 2.4, and 2.5, with a mean of 2.41 and a standard deviation of 0.563. For n = 50, one simulation produced sample means of 4.3, 5.5, 5.0, 4.7, 5.0, 5.1, 4.7, 3.8, 4.7, and 6.3, with a mean of 4.91 and a standard deviation of 0.672. (c) As the number of switches increases from 10 to 25 and then 50, the sample mean also increases from about 1 to 2.5 and then 5. As the number of switches increases from 10 to 25 and then from 25 to 50, the spread of the $\bar{x}$ values increases. The number of simulated samples stays the same at 10, but σ changes from $\sqrt{10 \times 0.1 \times 0.9} \approx 0.9487$ to $\sqrt{25 \times 0.1 \times 0.9} = 1.5$ and then $\sqrt{50 \times 0.1 \times 0.9} \approx 2.1213$.
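The 8.26 simulation can be repeated many more times in software. This sketch assumes each sample has 10 observations of B(n, 0.1), where n is the number of switches (as the σ values above imply), and uses 2000 simulated samples instead of 10, so the mean and spread of the $\bar{x}$ values settle near np and $\sigma/\sqrt{10}$. The seed and helper names are arbitrary choices.

```python
import random
from math import sqrt
from statistics import mean, stdev

rng = random.Random(2024)  # fixed seed so runs are reproducible


def binomial_draw(n: int, p: float) -> int:
    """One observation of B(n, p): the number of successes in n trials."""
    return sum(rng.random() < p for _ in range(n))


def simulate_xbars(n: int, p: float = 0.1, size: int = 10, reps: int = 2000) -> list[float]:
    """Means of `reps` samples, each made of `size` observations of B(n, p)."""
    return [mean(binomial_draw(n, p) for _ in range(size)) for _ in range(reps)]


results = {n: simulate_xbars(n) for n in (10, 25, 50)}
for n, xbars in results.items():
    theory_sd = sqrt(n * 0.1 * 0.9) / sqrt(10)  # sigma / sqrt(sample size)
    print(f"n = {n}: mean of x-bars = {mean(xbars):.3f} (theory {n * 0.1:.1f}), "
          f"sd of x-bars = {stdev(xbars):.3f} (theory {theory_sd:.3f})")
```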

8.27 (a) Let S denote the number of contaminated eggs chosen by Sara. S has a binomial distribution with n = 3 and p = 0.25; i.e., S is B(3, 0.25). (b) Using the calculator and letting 0 ⇒ a contaminated egg and 1, 2, or 3 ⇒ a good egg, simulate choosing 3 eggs by randInt(0, 3, 3). Repeating this 50 times leads to 30 occasions when at least one of the eggs is contaminated; $P(S \ge 1) \approx \frac{30}{50} = 0.6$. (c) $P(S \ge 1) = 1 - P(S = 0)$ = 1 − binompdf(3, 0.25, 0) $= 1 - (0.75)^3 \approx 0.5781$. The value obtained by simulation is close to the exact probability; the difference is 0.0219.

8.28 (a) We simulate 50 observations of X = the number of students out of 30 with a loan by using the command randBin(1, 0.65, 30) → L1: sum(L1). Press ENTER 50 times. Then sort the list from largest to smallest using the command SortD(L1) (this command is found on the TI-83/84 under STAT → EDIT → 3:SortD) and then look to see how many values are greater than 24. Only one of the simulated values was greater than 24, so the estimated probability is 1/50 = 0.02. (b) Using the calculator, we find P(X > 24) = 1 − P(X ≤ 24) = 1 − binomcdf(30, 0.65, 24) ≈ 1 − 0.9767 = 0.0233. (c) Using the Normal approximation, we find

$P(X > 24) \approx P\left(Z > \frac{24 - 19.5}{2.6125}\right) = P(Z > 1.72) = 0.0427$.

The Normal approximation is not very good in this situation, because n × (1 − p) = 10.5 is very close to the cutoff for our rule of thumb. The difference between the two probabilities in (b) and (c) is 0.0194. Note that the simulation provides a better approximation than the Normal distribution.

8.29 Let X = the number of 0s among n random digits. X is B(n, 0.1). (a) When n = 40, P(X = 4) = binompdf(40, 0.1, 4) ≈ 0.2059. (b) When n = 5, P(X ≥ 1) = 1 − P(X = 0) = 1 − $(0.9)^5$ ≈ 1 − 0.5905 = 0.4095.

8.30 (a) The probability of drawing a white chip is 15/50 = 0.3. The number of white chips in 25 draws is B(25, 0.3). Therefore, the expected number of white chips is 25 × 0.3 = 7.5. (b) The probability of drawing a blue chip is 10/50 = 0.2. The number of blue chips in 25 draws is B(25, 0.2). Therefore, the standard deviation of the number of blue chips is $\sqrt{25 \times 0.2 \times 0.8} = 2$ blue chips. (c) Let the digits 0, 1, 2, 3, 4 ⇒ red chip; 5, 6, 7 ⇒ white chip; and 8, 9 ⇒ blue chip. Draw 25 random digits from Table B and record the number of times that you get chips of each color. Using the calculator, you can draw 25 random digits using the command randInt(0, 9, 25) → L1. Repeat this process 50 times (or however many times you like) to simulate multiple draws of 25 chips. A sample simulation of a single 25-chip draw using the TI-83 yielded the following result:

| Digit | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| Frequency | 4 | 3 | 4 | 2 | 1 | 2 | 0 | 2 | 1 | 6 |

This corresponds to drawing 14 red chips, 4 white chips, and 7 blue chips.

(d) The expected number of blue chips is 25 × 0.2 = 5, and the standard deviation is 2. It is very likely that you will draw 9 or fewer blue chips. The actual probability is binomcdf(25, 0.2, 9) ≈ 0.9827. (e) You are almost certain to draw 15 or fewer blue chips; the probability is binomcdf(25, 0.2, 15) ≈ 0.999998.
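The digit-to-color scheme in 8.30(c) can be written as a lookup table, which also lets us tally the sample TI-83 run shown in the frequency table. In this sketch `rng.randrange(10)` plays the role of randInt(0, 9); the function names are hypothetical.

```python
import random
from collections import Counter

# Digit-to-color scheme from 8.30(c): 0-4 red (0.5), 5-7 white (0.3), 8-9 blue (0.2)
COLOR = {d: "red" if d <= 4 else "white" if d <= 7 else "blue" for d in range(10)}


def colors_from_digit_counts(counts: list[int]) -> Counter:
    """Turn frequencies of the digits 0..9 into counts of chip colors."""
    totals = Counter()
    for digit, freq in enumerate(counts):
        totals[COLOR[digit]] += freq
    return totals


def simulate_draw(rng: random.Random, size: int = 25) -> Counter:
    """Draw `size` random digits and tally the chip colors they represent."""
    return Counter(COLOR[rng.randrange(10)] for _ in range(size))


# Digit frequencies from the sample TI-83 run in 8.30(c)
print(colors_from_digit_counts([4, 3, 4, 2, 1, 2, 0, 2, 1, 6]))
print(simulate_draw(random.Random(1)))
```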

8.31 (a) A binomial distribution is not an appropriate choice for field goals made by the National

Football League player, because given the different situations the kicker faces, his probability of

success is likely to change from one attempt to another. (b) It would be reasonable to use a

binomial distribution for free throws made by the NBA player because we have n = 150

attempts, presumably independent (or at least approximately so), with chance of success p = 0.8

each time.

8.32 (a) Yes: 1) “Success” means the adult “approves” and “failure” means the adult “disapproves.” 2) We have a fixed number of observations (n = 1155). 3) It is reasonable to believe each response is independent of the others. 4) The probability of “success” may vary from individual to individual, but a national survey will provide a reasonable approximate probability for the entire nation. (b) Not binomial: There are no separate “trials” or “attempts” being observed here. (c) Yes: Let X = the number of wins in 52 weeks. 1) “Success” means Joe “wins” and “failure” means Joe “loses.” 2) We have a fixed number of observations (n = 52). 3) The results from one week to another are independent. 4) The probability of winning stays the same from week to week.

8.33 (a) Answers will vary. A table of counts is shown below.

| Line number | 101 | 107 | 113 | 119 | 120 | 126 | 132 | 138 | 142 | 146 |
|---|---|---|---|---|---|---|---|---|---|---|
| Count of zeros | 3 | 5 | 6 | 3 | 2 | 3 | 2 | 3 | 4 | 9 |

A dotplot and a boxplot are shown below.

[Figure: dotplot and boxplot of the count of zeros per line, 2 to 9.]

The sample mean for these 10 lines is 4 zeros and the standard deviation is about 2.16 zeros. The distribution is clearly skewed to the right. (b) The number of zeros is binomial because 1) “Success” is a digit of zero and “failure” is any other digit. 2) The number of digits on each line is n = 40. We are equally likely to get any of the 10 digits in any position, so 3) the trials are independent and 4) the probability of “success” is p = 0.1 for each digit examined. (c) As the number of lines used increases, the mean gets closer to 4, the standard deviation gets closer to $\sqrt{40 \times 0.1 \times 0.9} \approx 1.8974$, and the shape remains skewed to the right, because we are simulating a binomial distribution with n = 40 and p = 0.1. (d) Dan is right: the number of zeros in 400 digits will be approximately Normal with a mean of 40 and a standard deviation of 6. When n × 0.1 > 10 and n × 0.9 > 10 (as for n = 400), the conditions are satisfied and we can use a Normal distribution with a mean of n × 0.1 and a standard deviation of $\sqrt{n \times 0.1 \times 0.9}$ to approximate the Binomial(n, 0.1) distribution. However, the conditions are not satisfied for one line (n = 40 gives n × 0.1 = 4), so the simulated distribution will not become approximately Normal, no matter how many additional rows are examined.
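The summary statistics in 8.33 and the binomial benchmarks they are compared with can be reproduced in a few lines. This sketch uses the ten counts from the table and the rule of thumb stated in part (d):

```python
from math import sqrt
from statistics import mean, stdev

# Zeros counted in ten 40-digit lines of Table B (from the table in 8.33)
counts = [3, 5, 6, 3, 2, 3, 2, 3, 4, 9]
print(mean(counts), round(stdev(counts), 2))  # sample mean and sample SD

# Binomial model for one line: n = 40 digits, p = 0.1
n, p = 40, 0.1
print(n * p, round(sqrt(n * p * (1 - p)), 4))

# Normal-approximation rule of thumb: one line (40 digits) versus 400 digits
for n_digits in (40, 400):
    print(n_digits, n_digits * p > 10 and n_digits * (1 - p) > 10)
```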

8.34 (a) n = 20 and p = 0.25. (b) The mean is µ = 20 × 0.25 = 5. (c) The probability of getting exactly five correct guesses is $P(X = 5) = \binom{20}{5}(0.25)^5(0.75)^{15} \approx 0.2023$.

8.35 Let X = the number of correctly answered questions. X is B(10, 0.2). (a) P(X = 0) ≈ 0.1074. (b) P(X = 1) ≈ 0.2684. (c) P(X = 2) ≈ 0.3020. (d) P(X ≤ 3) ≈ 0.8791. (e) P(X > 3) = 1 − P(X ≤ 3) ≈ 1 − 0.8791 = 0.1209. (f) P(X = 10) = $(0.2)^{10}$ ≈ 0.0000001! (g) A probability distribution table is shown below.

| X | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| P(X) | 0.1074 | 0.2684 | 0.3020 | 0.2013 | 0.0881 | 0.0264 | 0.0055 | 0.0008 | 0.0001 | 0.000004 | 0.0000001 |

(h) The expected number of correct answers is 10 × 0.2 = 2.

8.36 Let X = the number of truthful persons classified as deceptive. X is B(12, 0.2). (a) The probability that the lie detector classifies all 12 as truthful is $P(X = 0) = \binom{12}{0}(0.2)^0(0.8)^{12} \approx 0.0687$, and the probability that at least one is classified as deceptive is $P(X \ge 1) = 1 - P(X = 0) \approx 1 - 0.0687 = 0.9313$. (b) The mean number who will be classified as deceptive is 12 × 0.2 = 2.4 applicants and the standard deviation is $\sqrt{12 \times 0.2 \times 0.8} \approx 1.3856$ applicants. (c) $P(X \le 2.4) = P(X \le 2) \approx 0.5583$, using binomcdf(12, 0.2, 2).

8.37 In this case, n = 20 and the probability that a randomly selected basketball player graduates is p = 0.8. We will estimate P(X ≤ 11) by simulating 30 observations of X = number graduated and computing the relative frequency of observations that are 11 or smaller. The sequence of calculator commands is: randBin(1, 0.8, 20) → L1: sum(L1) → L2(1), where 1’s represent players who graduated. Press ENTER until 30 numbers are obtained. The actual value of P(X ≤ 11) is binomcdf(20, 0.8, 11) ≈ 0.0100.

8.38 (a) 1) “Success” is getting a response, and “failure” is not getting a response. 2) We have a fixed number of trials (n = 150). 3) It is reasonable to believe that the businesses’ decisions to respond or not are independent. 4) The probability of success (responding) is 0.5 for each business. (b) The mean is 150 × 0.5 = 75 responses. (c) The approximate probability is

$P(X \le 70) \approx P\left(Z \le \frac{70 - 75}{6.1237}\right) = P(Z \le -0.82) = 0.2061$;

using unrounded values and software yields 0.2071. The exact probability is about 0.2313. (d) Use 200, since 200 × 0.5 = 100.

8.39 (a) Let X = the number of auction site visitors. X is B(12, 0.5). (b) P(X = 8) = binompdf(12, 0.5, 8) ≈ 0.1208; P(X ≥ 8) = 1 − P(X ≤ 7) = 1 − binomcdf(12, 0.5, 7) ≈ 1 − 0.8062 = 0.1938.

8.40 (a) Let X = the number of units where antibodies are detected. X is B(20, 0.99). (b) The probability that all 20 contaminated units are detected is $P(X = 20) = \binom{20}{20}(0.99)^{20}(0.01)^0 \approx 0.8179$, using binompdf(20, 0.99, 20), and the probability that at least one unit is not detected is $P(X < 20) = P(X \le 19) \approx 0.1821$, using binomcdf(20, 0.99, 19). (c) The mean is 19.8 units and the standard deviation is 0.445 units.

8.41 (a) Yes: 1) “success” is getting a tail, “failure” is getting a head, and a trial is the flip of a

coin, 2) the probability of getting a tail on each flip is p = 0.5, 3) the outcomes on each flip are

independent, and 4) we are waiting for the first tail. (b) No: The variable of interest is the

number of times both shots are made, not the number of trials until the first success is obtained.

(c) Yes: 1) “success” is getting a jack, “failure” is getting something other than a jack, and a trial is drawing a card, 2) the probability of drawing a jack is p = 4/52 ≈ 0.0769, 3) the observations are independent because the card is replaced each time, and 4) we are waiting for the first jack. (d) Yes: 1) “success” is matching all 6 numbers, “failure” is not matching all 6 numbers, and a trial is the Match 6 lottery game on a particular day, 2) the probability of winning is $p = 1/\binom{44}{6} \approx 0.000000142$, 3) the observations are independent, and 4) we are waiting for the first win. (e) No: the probability of a “success,” getting a red marble, changes from trial to trial because the draws are made without replacement. Also, you are interested in getting 3 successes, rather than just the first success.


8.42 (a) 1) “Success” is rolling a prime number, “failure” is rolling a number that is not prime, and a trial is rolling a die, 2) the probability of rolling a prime is p = 0.5, 3) the observations, outcomes from rolls, are independent, and 4) we are waiting for the first prime number. (b) The first five possible values of the random variable and their corresponding probabilities are shown in the table below.

| X | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| P(X) | 0.5 | 0.25 | 0.125 | 0.0625 | 0.03125 |
| F(X) | 0.5 | 0.75 | 0.875 | 0.9375 | 0.96875 |

(c) A probability histogram is shown below (left). (d) The cumulative probabilities are shown in the third row of the table above, and a cumulative probability histogram is shown below (right).

[Figure: probability histogram (left) and cumulative probability histogram (right) of the number of rolls, 1 to 10.]

(e) $\sum_{i=1}^{\infty} (0.5)^i = \frac{0.5}{1 - 0.5} = 1$.

8.43 (a) 1) “Success” is a defective hard drive, “failure” is a working hard drive, and a trial is a test of a hard drive, 2) the probability of a defective hard drive is p = 0.03, 3) the observations, results of the tests on different hard drives, are independent, and 4) we are waiting for the first defective hard drive. The random variable of interest is X = number of hard drives tested in order to find the first defective. (b) $P(X = 5) = (1 - 0.03)^{5-1} \times 0.03 \approx 0.0266$. (c) The first four entries in the table for the pdf of X are shown below.

| X | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| P(X) | 0.03 | 0.0291 | 0.0282 | 0.0274 |
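The geometric pmf $P(X = k) = (1 - p)^{k-1} p$ and cdf $P(X \le k) = 1 - (1 - p)^k$ behind 8.43 can be sketched as two one-line functions, standing in for the calculator's geometpdf and geometcdf (the names are hypothetical):

```python
def geom_pmf(k: int, p: float) -> float:
    """P(X = k) = (1 - p)**(k - 1) * p: first success on trial k (like geometpdf)."""
    return (1 - p) ** (k - 1) * p


def geom_cdf(k: int, p: float) -> float:
    """P(X <= k) = 1 - (1 - p)**k (like geometcdf)."""
    return 1 - (1 - p) ** k


p = 0.03  # chance that a tested hard drive is defective
for k in range(1, 6):
    print(k, round(geom_pmf(k, p), 4), round(geom_cdf(k, p), 4))
```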

8.44 The probability of getting the first success on the fourth trial for parts (a), (c), and (d) in Exercise 8.41 is: (a) $P(X = 4) = (1 - 0.5)^{4-1} \times 0.5 = 0.0625$, (c) $P(X = 4) = \left(1 - \frac{4}{52}\right)^{4-1} \times \frac{4}{52} \approx 0.0605$, and (d) $P(X = 4) = \left(1 - 1/\binom{44}{6}\right)^{4-1} \times 1/\binom{44}{6} \approx 0.0000001$.


8.45 (a) The random variable of interest is X = number of flips required in order to get the first head. X is a geometric random variable with p = 0.5. (b) The first five possible values of the random variable and their corresponding probabilities are shown in the table below.

| X | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| P(X) | 0.5 | 0.25 | 0.125 | 0.0625 | 0.03125 |
| F(X) | 0.5 | 0.75 | 0.875 | 0.9375 | 0.96875 |

(c) A probability histogram is shown below (left). (d) The cumulative probabilities are shown in the third row of the table above, and a cumulative probability histogram is shown below (right).

[Figure: probability histogram (left) and cumulative probability histogram (right) of X, 1 to 10.]

8.46 (a) $P(X > 10) = \left(1 - \frac{1}{12}\right)^{10} \approx 0.4189$. (b) P(X > 10) = 1 − P(X ≤ 10) = 1 − geometcdf(1/12, 10) ≈ 1 − 0.5811 = 0.4189.

8.47 (a) The cumulative probabilities for the first 10 values of X are: 0.166667, 0.305556,

0.421296, 0.517747, 0.598122, 0.665102, 0.720918, 0.767432, 0.806193, and 0.838494. A

cumulative probability histogram is shown below.

[Figure: cumulative probability histogram of the number of rolls, 1 to 10.]

(b) $P(X > 10) = (1 - 1/6)^{10} = (5/6)^{10} \approx 0.1615$, or 1 − P(X ≤ 10) ≈ 1 − 0.8385 = 0.1615. (c) Using the calculator, geometcdf(1/6, 25) = 0.989517404 and geometcdf(1/6, 26) = 0.9912645033, so the smallest positive integer k for which P(X ≤ k) > 0.99 is 26.
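The search in 8.47(c) can be automated: step k upward until the geometric cdf first exceeds the target. A minimal sketch, assuming target < 1 so that the loop terminates; the function names are hypothetical.

```python
def geom_cdf(k: int, p: float) -> float:
    """P(X <= k) = 1 - (1 - p)**k for a geometric random variable."""
    return 1 - (1 - p) ** k


def smallest_k_exceeding(p: float, target: float) -> int:
    """Smallest positive integer k with P(X <= k) > target (requires target < 1)."""
    k = 1
    while geom_cdf(k, p) <= target:
        k += 1
    return k


print(smallest_k_exceeding(1 / 6, 0.99))
```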

8.48 Let X = the number of applicants who need to be interviewed in order to find one who is fluent in Farsi. X is a geometric random variable with p = 4% = 0.04. (a) The expected number of interviews in order to obtain the first success (applicant fluent in Farsi) is $\mu = \frac{1}{p} = \frac{1}{0.04} = 25$. (b) $P(X > 25) = (1 - 0.04)^{25} = (0.96)^{25} \approx 0.3604$; $P(X > 40) = (0.96)^{40} \approx 0.1954$.

8.49 (a) We must assume that the shots are independent and that the probability of success is the same for each shot. A “success” is a missed shot, so p = 0.2. (b) The first “success” (miss) is the sixth shot, so $P(X = 6) = (1 - p)^{6-1} p = (0.8)^5 \times 0.2 \approx 0.0655$. (c) $P(X \le 6) = 1 - P(X > 6) = 1 - (1 - p)^6 = 1 - (0.8)^6 \approx 0.7379$. Using a calculator, P(X ≤ 6) = geometcdf(0.2, 6) ≈ 0.7379.

8.50 (a) There are $2^3 = 8$ possible outcomes, and only two of the possible outcomes (HHH and TTT) do not produce a winner. Thus, P(no winner) = 2/8 = 0.25. (b) P(winner) = 1 − P(no winner) = 1 − 0.25 = 0.75. (c) Let X = number of rounds (tosses) until someone wins. 1) “Success” is getting a winner, “failure” is not getting a winner, and a trial is one round (each person tosses a coin) of the game, 2) the probability of success is 0.75, 3) the observations are independent, and 4) we are waiting for the first win. Thus, X is a geometric random variable. (d) The first seven possible values of the random variable X and their corresponding probabilities and cumulative probabilities are shown in the table below.

| X | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| P(X) | 0.75 | 0.1875 | 0.046875 | 0.011719 | 0.00293 | 0.000732 | 0.000183 |
| F(X) | 0.75 | 0.9375 | 0.98438 | 0.99609 | 0.99902 | 0.99976 | 0.99994 |

(e) P(X ≤ 2) = 0.75 + 0.1875 = 0.9375. (f) $P(X > 4) = (0.25)^4 \approx 0.0039$. (g) The expected number of rounds for someone to win is $\mu = \frac{1}{p} = \frac{1}{0.75} \approx 1.3333$. (h) Let 1 ⇒ heads and 0 ⇒ tails, enter the command randInt(0, 1, 3), and press ENTER 25 times. In a simulation, we recorded the following frequencies:

| X | 1 | 2 | 3 |
|---|---|---|---|
| Freq. | 21 | 3 | 1 |
| Relative Freq. | 0.84 | 0.12 | 0.04 |

The relative frequencies are not far from the calculated probabilities of 0.75, 0.1875, and 0.046875 in part (d). A larger number of trials would tend to give better agreement, because the relative frequencies converge to the corresponding probabilities as the number of trials increases.
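With software, the 8.50 game can be simulated thousands of times instead of 25, which shows the relative frequencies approaching the geometric probabilities with p = 0.75. This sketch assumes only what part (a) states: a round produces a winner unless the three tosses are all heads or all tails. The seed and number of repetitions are arbitrary.

```python
import random
from collections import Counter

rng = random.Random(7)  # fixed seed for reproducibility


def rounds_until_winner() -> int:
    """Play rounds of three coin tosses until they are not all alike (HHH or TTT)."""
    rounds = 0
    while True:
        rounds += 1
        heads = sum(rng.randint(0, 1) for _ in range(3))  # 1 = heads, 0 = tails
        if 0 < heads < 3:  # not HHH or TTT, so this round has a winner
            return rounds


reps = 20_000
freq = Counter(rounds_until_winner() for _ in range(reps))
for x in (1, 2, 3):
    exact = 0.75 * 0.25 ** (x - 1)  # geometric pmf with p = 0.75
    print(x, round(freq[x] / reps, 4), exact)
```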

8.51 (a) Geometric: 1) “Success” is selecting a red marble, “failure” is not selecting a red marble, and a trial is a selection from the jar, 2) the probability of selecting a red marble is $p = \frac{20}{35} = \frac{4}{7} \approx 0.5714$, 3) the observations, results of the marble selection, are independent because the marble is placed back in the jar after the color is noted, and 4) we are waiting for the first red marble. The random variable of interest is X = number of marbles you must draw to find the first red marble. (b) The probability of getting a red marble on the second draw is

$P(X = 2) = \left(1 - \frac{4}{7}\right)^{2-1}\left(\frac{4}{7}\right) \approx 0.2449$.

The probability of drawing a red marble by the second draw is

$P(X \le 2) = P(X = 1) + P(X = 2) = \frac{4}{7} + \left(\frac{3}{7}\right)\left(\frac{4}{7}\right) \approx 0.8163$.

The probability that it takes more than 2 draws to get a red marble is

$P(X > 2) = \left(1 - \frac{4}{7}\right)^2 \approx 0.1837$.

(c) Using TI-83 commands: seq(X,X,1,20)→L1, geometpdf(4/7,L1)→L2 and geometcdf(4/7,L1)→L3 [or cumSum(L2)→L3]. The first ten possible values of the random variable X and their corresponding probabilities and cumulative probabilities are shown in the table below.

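| X | P(X) | F(X) |
|---|---|---|
| 1 | 0.5714 | 0.5714 |
| 2 | 0.2449 | 0.8163 |
| 3 | 0.105 | 0.9213 |
| 4 | 0.045 | 0.9663 |
| 5 | 0.0193 | 0.9855 |
| 6 | 0.0083 | 0.9938 |
| 7 | 0.0035 | 0.9973 |
| 8 | 0.0015 | 0.9989 |
| 9 | 0.0007 | 0.9995 |
| 10 | 0.0003 | 0.9998 |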

(d) A probability histogram (left) and a cumulative probability histogram (right) are shown below.

[Figure: probability histogram (left) and cumulative probability histogram (right) of the number of marbles drawn, 1 to 10.]

8.52 (a) No. Since the marbles are being drawn without replacement and the population (the set of all marbles in the jar) is so small, the draws are not independent: the probability of getting a red marble changes from draw to draw. Also, a geometric variable measures the number of trials required to get the first success; here, we are looking for the number of trials required to get two successes. (b) No. Even though the results of the draws are now independent, the variable being measured is still not a geometric variable. This random variable has a distribution known as the negative binomial distribution. (c) The probability of getting a red marble on any draw is $p = \frac{20}{35} = \frac{4}{7} \approx 0.5714$. Let the digits 0, 1, 2, 3 ⇒ a red marble is drawn; 4, 5, 6 ⇒ some other color marble is drawn; and 7, 8, 9 ⇒ the digit is disregarded. Start choosing random digits from Table B, or use the TI-83 command randInt(0, 9, 1) repeatedly. After two digits in the set {0, 1, 2, 3} have been chosen, stop the process and count the number of digits in the set {0, 1, 2, 3, 4, 5, 6} that have been chosen up to that point; this count represents the observed value of X. Repeat the process until the desired number of observations of X has been obtained. Here are some sample simulations using the TI-83 (with R = red marble, O = other color marble, D = disregard):

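| Digits | Outcome | X |
|---|---|---|
| 7 0 4 3 | D R O R | 3 |
| 9 0 8 6 2 | D R D O R | 3 |
| 9 7 3 2 | D D R R | 2 |

etc.

For 30 repetitions, we recorded the following frequencies:

| X | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|
| Freq. | 16 | 5 | 5 | 3 | 0 | 0 | 1 |
| Relative Freq. | 0.5333 | 0.1667 | 0.1667 | 0.1 | 0.0 | 0.0 | 0.0333 |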

A simulated probability histogram for the 30 repetitions is shown below. The simulated

distribution is skewed to the right, just like the probability histogram in Exercise 8.51, but the

two distributions are not the same.

[Figure: simulated probability histogram of the number of draws, 2 to 8; vertical axis: Percent.]
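Part (b) names the negative binomial distribution. Assuming independent draws with p = 4/7 (the digit scheme of part (c)), the number of draws X needed to get r = 2 red marbles has the standard negative binomial pmf $P(X = k) = \binom{k-1}{r-1} p^r (1-p)^{k-r}$ for k ≥ r. The sketch below compares that formula with a large simulation of the same digit scheme; the seed, helper names, and number of repetitions are arbitrary choices.

```python
import random
from math import comb

P_RED = 4 / 7  # chance of a red marble on each (independent) draw


def neg_binom_pmf(k: int, r: int = 2, p: float = P_RED) -> float:
    """P(the r-th success occurs on trial k), for k >= r."""
    return comb(k - 1, r - 1) * p**r * (1 - p) ** (k - r)


rng = random.Random(11)


def draws_until_two_reds() -> int:
    """Digit scheme of 8.52(c): 0-3 red, 4-6 other color, 7-9 disregarded."""
    reds = draws = 0
    while reds < 2:
        d = rng.randrange(10)
        if d >= 7:
            continue  # disregarded digit: does not count as a draw
        draws += 1
        if d <= 3:
            reds += 1
    return draws


reps = 20_000
sims = [draws_until_two_reds() for _ in range(reps)]
for k in range(2, 7):
    print(k, round(sims.count(k) / reps, 4), round(neg_binom_pmf(k), 4))
```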

8.53 (a) “Success” is getting a correct answer. The random variable of interest is X = the number of questions Carla must answer until she gets one correct. The probability of success is p = 1/5 = 0.2 (all choices are equally likely to be selected). (b) $P(X = 5) = (1 - 0.2)^{5-1} \times 0.2 \approx 0.0819$. (c) $P(X > 4) = (1 - 0.2)^4 \approx 0.4096$. (d) The first five possible values of the random variable X and their corresponding probabilities are shown in the table below.

X      1     2      3      4       5
P(X)   0.2   0.16   0.128  0.1024  0.0819

(e) The expected number of questions Carla must answer to get one correct is $\mu_X = \frac{1}{0.2} = 5$.
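The geometric calculations for Carla can be checked with a few lines of Python. This is an illustrative sketch using only the formulas $P(X = k) = (1-p)^{k-1}p$, $P(X > k) = (1-p)^k$, and $\mu = 1/p$; the helper names are our own.

```python
p = 0.2  # probability Carla answers a question correctly (1 of 5 choices)


def geom_pmf(k: int, p: float) -> float:
    """P(X = k): first success on trial k."""
    return (1 - p) ** (k - 1) * p


def geom_tail(k: int, p: float) -> float:
    """P(X > k): the first k trials are all failures."""
    return (1 - p) ** k


print("P(X = 5) =", round(geom_pmf(5, p), 4))   # part (b)
print("P(X > 4) =", round(geom_tail(4, p), 4))  # part (c)
for k in range(1, 6):                           # part (d)
    print(k, round(geom_pmf(k, p), 4))
print("mean =", 1 / p)                          # part (e)
```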

8.54 (a) If “success” is having a son and the probability of success is p = 0.5, then the average number of children per family is $\mu = \frac{1}{0.5} = 2$. (b) The expected number of girls in this family is µ = 2 × 0.5 = 1. Alternatively, if the average number of children is 2 and the last child is a boy, then the average number of girls per family is 2 − 1 = 1. (c) Let an even digit represent a boy and an odd digit represent a girl. Read random digits until an even digit occurs, and count the number of digits read. Repeat many times, and average the counts. Beginning on line 101 in Table B and simulating 50 trials, the average number of children per family is 1.96, and the average number of girls is 0.96. These averages are very close to the expected values.
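Part (c) can also be run as a computer simulation. The Python sketch below is our own illustration and replaces Table B with Python's pseudo-random generator (an assumption, so the averages will not match the Table B results exactly): an even digit is a boy, an odd digit is a girl, and each family stops at the first boy. Using many more families than 50 shows the averages settling near the expected values.

```python
import random


def simulate_family(rng: random.Random) -> tuple[int, int]:
    """Return (children, girls) for one family that stops at the first son."""
    children = 0
    while True:
        digit = rng.randint(0, 9)
        children += 1
        if digit % 2 == 0:                  # even digit = boy: the family stops
            return children, children - 1   # every earlier child was a girl
        # odd digit = girl: keep going


rng = random.Random(101)  # seed chosen arbitrarily
results = [simulate_family(rng) for _ in range(50_000)]
avg_children = sum(c for c, _ in results) / len(results)
avg_girls = sum(g for _, g in results) / len(results)
print(f"average children = {avg_children:.3f} (expected 1/0.5 = 2)")
print(f"average girls    = {avg_girls:.3f} (expected 1)")
```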

8.55 (a) Letting G = girl and B = boy, the outcomes are {G, BG, BBG, BBBG, BBBB}. A “success” is having a girl. (b) The random variable X (the number of boys) can take on the values 0, 1, 2, 3, and 4. The multiplication rule for independent events gives the probability distribution table for X below.

X      0     1     2     3      4
P(X)   1/2   1/4   1/8   1/16   1/16

For example, $P(X = 2) = P(BBG) = (1/2)^3 = 1/8$ and $P(X = 4) = P(BBBB) = (1/2)^4 = 1/16$. Note that $\sum P(X) = 1$.

(c) Let Y = the number of children produced until the first girl is born. Then Y is a geometric variable for Y = 1 to 4, but not for values greater than 4, because the couple stops having children after 4. Note that BBBB is not included in the event Y = 4. The multiplication rule for independent events gives the probability distribution table for Y below.

Y      1     2     3     4
P(Y)   1/2   1/4   1/8   1/16

Here $P(Y = k) = (1/2)^k$. Note that this is not a valid distribution, since $\sum P(Y) = 15/16 < 1$. The difficulty lies in the fact that one of the possible outcomes, BBBB, cannot be written in terms of Y. (d) If T is the total number of children in the family, then the probability distribution of T is shown in the table below.

T      1     2     3     4
P(T)   1/2   1/4   1/8   1/8

Here $P(T = 4) = P(BBBG) + P(BBBB) = (1/2)^4 + (1/2)^4 = 2/16 = 1/8$. The expected number of children for this couple is $\mu_T = 1 \times 0.5 + 2 \times 0.25 + 3 \times 0.125 + 4 \times 0.125 = 1.875$. (e) P(T > 1.875) = 1 − P(T = 1) = 0.5, or P(T > 1.875) = P(T = 2) + P(T = 3) + P(T = 4) = 0.25 + 0.125 + 0.125 = 0.5. (f) P(having a girl) = 1 − P(not having a girl) = 1 − P(BBBB) = 1 − 0.0625 = 0.9375.
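Because there are only five possible families, the distributions in (b) and (d) can be verified by brute-force enumeration. This Python sketch is an illustration rather than the original method; it uses exact fractions and assumes, as the solution does, that boys and girls are equally likely and that births are independent.

```python
from fractions import Fraction

HALF = Fraction(1, 2)
# The five possible families: stop at the first girl or after four children.
outcomes = ["G", "BG", "BBG", "BBBG", "BBBB"]
prob = {o: HALF ** len(o) for o in outcomes}  # multiplication rule, P(B) = P(G) = 1/2

# X = number of boys, T = total number of children
dist_x: dict[int, Fraction] = {}
dist_t: dict[int, Fraction] = {}
for o, pr in prob.items():
    dist_x[o.count("B")] = dist_x.get(o.count("B"), 0) + pr
    dist_t[len(o)] = dist_t.get(len(o), 0) + pr

mean_t = sum(t * pr for t, pr in dist_t.items())
print("X:", {k: str(v) for k, v in sorted(dist_x.items())})
print("T:", {k: str(v) for k, v in sorted(dist_t.items())})
print("E(T) =", mean_t, "=", float(mean_t))
print("P(at least one girl) =", 1 - prob["BBBB"])
```

Working with `Fraction` keeps every probability exact, so the check that the probabilities sum to 1 involves no rounding.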

8.56 Let 0, 1, 2, 3, 4 → Girl and 5, 6, 7, 8, 9 → Boy. Beginning with line 130 in Table B, the simulated values (digits, outcome, number of children) are:

690 BBG 3 | 53 BG 2 | 0 G 1 | 51 BG 2 | 64 BG 2 | 4 G 1 | 0 G 1 | 71 BG 2 | 663 BBG 3 | 81 BG 2 | 64 BG 2 | 2 G 1 | 7871 BBBG 4 | 89872 BBBBG 5 | 81 BG 2 | 0 G 1 | 74 BG 2 | 1 G 1 | 0 G 1 | 972 BBG 3 | 951 BBG 3 | 4 G 1 | 50 BG 2 | 784 BBG 3 | 50 BG 2

The average number of children is 52/25 = 2.08, which is close to the expected value of 1.875.

8.57 Find the mean of 25 randomly generated observations of X, the number of children in the family. We can create a suitable string of random digits (say, of length 100) by using the command randInt(0,9,100)→L1. Let the digits 0 to 4 represent a “boy” and 5 to 9 represent a “girl.” Scroll down the list and count the number of children until you get a “girl number” or “4 boy numbers in a row,” whichever comes first. The number you have counted is X, the number of children in the family. Continue until you have 25 values of X. The average for the 25 repetitions is $\bar{x} = \frac{45}{25} = 1.8$, which is very close to the expected value of µ = 1.875.
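The calculator procedure in 8.57 can be sketched in Python. The helper `families_from_digits` is our own hypothetical name, not a library function. By default it uses the 8.57 labels (0 to 4 = boy); passing `boy_digits=range(5, 10)` gives the Table B labels of 8.56 (0 to 4 = girl). Python's pseudo-random generator stands in for randInt, so the 25 values will differ from those behind the average of 1.8 reported above.

```python
import random


def families_from_digits(digits, boy_digits=range(0, 5), max_children=4):
    """Split a sequence of random digits into completed families.

    Each digit is one child; a digit in boy_digits is a boy, any other digit
    is a girl. A family ends at the first girl or once it has max_children
    children, whichever comes first. An unfinished family at the end of the
    digit list is dropped.
    """
    sizes = []
    count = 0
    for d in digits:
        count += 1
        if d not in boy_digits or count == max_children:
            sizes.append(count)
            count = 0
    return sizes


rng = random.Random(8)  # arbitrary seed
digits = [rng.randint(0, 9) for _ in range(100)]  # like randInt(0,9,100)->L1
sizes = families_from_digits(digits)[:25]          # the first 25 values of X
print("25 values of X:", sizes)
print("average:", sum(sizes) / len(sizes))

# A much longer run should settle near the expected value 1.875.
many = families_from_digits([rng.randint(0, 9) for _ in range(200_000)])
print("long-run average:", round(sum(many) / len(many), 3))
```

Since every family uses at most 4 digits, a list of 100 digits always contains at least 25 completed families, which is why length 100 is a safe choice.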


8.58 (a) The table of probabilities p and expected values 1/p is shown below.

X:   0.10   0.20   0.30     0.40   0.50   0.60     0.70     0.80   0.90
Y:   10     5      3.3333   2.5    2      1.6667   1.4286   1.25   1.1111

(b) A scatterplot is shown below on the left.

[Figure: left, scatterplot of Expected value (Y) versus Probability (X); right, scatterplot of Log(Y) versus Log(X)]

(c) From Chapter 4, the power law model is $Y = aX^p$. We transform the data by taking the logarithm of both sides to obtain the linear model $\log(Y) = \log(a) + p\log(X)$. Thus, we need to compute the logarithms of X and Y. (d) A scatterplot of the transformed data is shown above on the right. Notice that the transformed data follow a linear pattern. (e) The correlation for the transformed data is approximately r = −1. (f) The estimated power function is $\hat{Y} = X^{-1} = \frac{1}{X}$. (g) The power function illustrates the fact that the mean of a geometric random variable is equal to the reciprocal of the probability p of success: $\mu = \frac{1}{p}$.
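The power-law fit in (c) through (f) can be reproduced numerically. This Python sketch uses base-10 logarithms (an assumption; any base gives the same slope) and computes the least-squares line for (log X, log Y) by hand, without any statistics library. A slope of −1 and an intercept of 0 correspond to $a = 1$ and exponent $-1$, that is, $\hat{Y} = 1/X$.

```python
import math

xs = [k / 10 for k in range(1, 10)]  # probabilities p = 0.1, 0.2, ..., 0.9
ys = [1 / p for p in xs]             # expected values 1/p from the table

lx = [math.log10(x) for x in xs]
ly = [math.log10(y) for y in ys]

n = len(lx)
mean_lx = sum(lx) / n
mean_ly = sum(ly) / n
sxy = sum((a - mean_lx) * (b - mean_ly) for a, b in zip(lx, ly))
sxx = sum((a - mean_lx) ** 2 for a in lx)
syy = sum((b - mean_ly) ** 2 for b in ly)

slope = sxy / sxx                  # estimate of the exponent in Y = aX^p
intercept = mean_ly - slope * mean_lx
r = sxy / math.sqrt(sxx * syy)     # correlation of the transformed data
a = 10 ** intercept                # back-transform log(a) to a

print(f"log(Y) = {intercept:.4f} + ({slope:.4f}) log(X),  r = {r:.4f}")
print(f"fitted power function: Y = {a:.4f} * X^({slope:.4f})")
```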

CASE CLOSED!

(a) The proportion of successes for all trials is 88/288 ≈ 0.3056. The proportion of successes for high confidence is 55/165 ≈ 0.3333, for medium confidence is 12/48 = 0.25, and for low confidence is 21/75 = 0.28. If Olof Jonsson is simply guessing, then we would expect his proportion of successes to be 0.25. Overall, he has done slightly better than expected, especially when he has high confidence. (b) Yes, this result does indicate evidence of psychic ability. Let X = the number of correct guesses in 288 independent trials. X is B(288, 0.25). The probability of observing 88 or more successes if Olof is simply guessing is P(X ≥ 88) = 1 − P(X ≤ 87) = 1 − binomcdf(288, 0.25, 87) ≈ 1 − 0.9810 = 0.0190. There is a very small chance that Olof could guess correctly 88 or more times in 288 trials. (Note: A formal hypothesis test of $H_0: p = 0.25$ versus $H_1: p > 0.25$ yields an approximate test statistic of Z = 2.18 and an approximate P-value of 0.015, so we have statistically significant evidence that Olof is doing better than guessing. Students will learn more about hypothesis testing procedures very soon.) (c) The probability of making a correct guess on the first draw is 1/4 = 0.25. If you are told that your first guess is incorrect, then the revised probability of making a correct guess on the second draw is 1/3 ≈ 0.3333. If you are then told that your second guess is incorrect, then the revised probability of making a correct guess is 1/2 = 0.5. Finally, if you are told that your first three guesses are incorrect, then your revised probability of making a correct guess is 1/1 = 1. (d) The sum of the probabilities of guessing correctly on the first, second, third, and fourth draws without replacement is 1/4 + 1/3 + 1/2 + 1 = 25/12 = 2 1/12, or about 2.0833. Thus, the proportion of correct guesses would be 2.0833/4, or about 0.5208. The expected number of correct guesses without replacement is 288 × 0.5208 ≈ 150, which is much higher than the expected number of correct guesses with replacement, 288 × 0.25 = 72. If some of the computer selections were made without replacement, then the expected number of correct guesses would increase. (e) Let X = the number of runs in precognition mode. Since the computer randomly selected the mode (precognition or clairvoyance) with equal probability, X is B(12, 0.5). The probability of getting 3 or fewer runs in precognition mode is P(X ≤ 3) = binomcdf(12, 0.5, 3) ≈ 0.073, which is somewhat unlikely.
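The calculator results in (b), (d), and (e) can be checked in Python. This sketch writes its own `binom_cdf` helper (a hypothetical name standing in for the TI-83's binomcdf) using `math.comb`; the z statistic is the usual $(x - np)/\sqrt{np(1-p)}$ without a continuity correction, matching the Z = 2.18 quoted in the note to (b).

```python
import math
from fractions import Fraction


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ B(n, p), the same quantity as binomcdf(n, p, k)."""
    return sum(math.comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k + 1))


# (b) 88 or more correct out of 288 if Olof is guessing (p = 0.25)
tail = 1 - binom_cdf(87, 288, 0.25)
z = (88 - 288 * 0.25) / math.sqrt(288 * 0.25 * 0.75)
print(f"P(X >= 88) = {tail:.4f},  z = {z:.2f}")

# (d) sum of the conditional probabilities 1/4 + 1/3 + 1/2 + 1
total = sum(Fraction(1, k) for k in (4, 3, 2, 1))
print("sum =", total, " proportion =", float(total / 4),
      " expected correct =", float(288 * total / 4))

# (e) 3 or fewer precognition runs out of 12 when each mode has probability 0.5
print(f"P(X <= 3) = {binom_cdf(3, 12, 0.5):.4f}")
```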

8.59 (a) Not binomial: the opinions of a husband and wife are not independent. (b) Not binomial: “success” is responding yes, “failure” is responding no, and a trial is the opinion expressed by the fraternity member selected. We have a fixed number of trials, n = 25, but these trials are not independent, and the probability of “success” is not the same for each fraternity member.

8.60 Let N be the number of households with 3 or more cars. Then N has a binomial distribution with n = 12 and p = 0.2. (a) $P(N = 0) = \binom{12}{0}(0.2)^0(0.8)^{12} = (0.8)^{12} \approx 0.0687$, and $P(N \ge 1) = 1 - P(N = 0) = 1 - (0.8)^{12} \approx 0.9313$. (b) The mean is $\mu = np = 12 \times 0.2 = 2.4$ and the standard deviation is $\sigma = \sqrt{np(1-p)} = \sqrt{12 \times 0.2 \times 0.8} \approx 1.3856$ households. (c) $P(N > 2.4) = 1 - P(N \le 2) \approx 1 - 0.5583 = 0.4417$.
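Each part of 8.60 reduces to the binomial formula $P(N = k) = \binom{n}{k}p^k(1-p)^{n-k}$. A short Python check (an illustrative sketch; `binom_pmf` is our own helper, not a library function):

```python
import math

n, p = 12, 0.2  # 12 households, P(3 or more cars) = 0.2


def binom_pmf(k: int) -> float:
    """P(N = k) = C(n, k) p^k (1 - p)^(n - k)."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


p0 = binom_pmf(0)                                     # (a) P(N = 0) = 0.8^12
at_least_one = 1 - p0                                 # (a) P(N >= 1)
mu = n * p                                            # (b) mean
sigma = math.sqrt(n * p * (1 - p))                    # (b) standard deviation
above_mean = 1 - sum(binom_pmf(k) for k in range(3))  # (c) 1 - P(N <= 2)

print(f"P(N=0) = {p0:.4f}, P(N>=1) = {at_least_one:.4f}")
print(f"mu = {mu:.1f}, sigma = {sigma:.4f}, P(N > mu) = {above_mean:.4f}")
```

Because N takes only whole-number values, P(N > 2.4) is the same event as N ≥ 3, which is why part (c) subtracts P(N ≤ 2).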

8.61 (a) The distribution of X will be symmetric. In general, the shape of a binomial distribution depends on the value of the probability of success, but when p = 0.5 the distribution of X is always symmetric. (b) The values of X and their corresponding probabilities and cumulative probabilities are shown in the table below.

X      0       1       2       3       4       5       6       7
P(X)   0.0078  0.0547  0.1641  0.2734  0.2734  0.1641  0.0547  0.0078
F(X)   0.0078  0.0625  0.2266  0.5000  0.7734  0.9375  0.9922  1.0000

A probability histogram (left) and a cumulative probability histogram (right) are shown below.

[Figure: left, probability histogram; right, cumulative probability histogram; x-axis: Number of brothers (0 to 7), y-axes: Probability and Cumulative probability]

(c) P(X = 7) = 0.0078125
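The table in (b) can be regenerated in Python. This sketch assumes, as the table implies, that X is B(7, 0.5); with p = 0.5 every outcome sequence has probability $1/2^7 = 1/128$, so $P(X = k) = \binom{7}{k}/128$.

```python
from math import comb

n = 7  # X counts brothers among 7 siblings, each a boy with probability 0.5 (assumed)
pmf = [comb(n, k) / 2 ** n for k in range(n + 1)]  # P(X = k) = C(7, k) / 128

cdf = []
running = 0.0
for prob in pmf:
    running += prob  # F(k) = P(X <= k)
    cdf.append(running)

print(" X    P(X)    F(X)")
for k in range(n + 1):
    print(f"{k:2d}  {pmf[k]:.4f}  {cdf[k]:.4f}")
```

In exact terms, F(2) = (1 + 7 + 21)/128 = 29/128 ≈ 0.2266 and P(X = 7) = 1/128 = 0.0078125.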

8.62 Let X = the result of the first toss (0 = tail, 1 = head) and Y = the number of trials to get the

first head (1). (a) A record of the 50 repetitions is shown below.

X Y X Y X Y X Y X Y

1 1 1 1 0 5 1 1 1 1

0 2 1 1 1 1 0 2 0 2

1 1 1 1 1 1 0 2 0 4

0 4 0 4 1 1 0 4 1 1

1 1 1 1 1 1 1 1 0 2

1 1 0 5 1 1 1 1 1 1

1 1 0 3 0 2 1 1 0 2

0 2 1 1 1 1 1 1 1 1

0 10 1 1 1 1 1 1 0 4

1 1 0 2 1 1 1 1 1 1

(b) Based on the results above, the estimated probability of getting a head is 32/50 = 0.64, not a very good estimate of the true probability 0.5. (c) A frequency table for the number of tosses needed to get the first head is shown below. Based on the frequency table, the probability that the first head appears on an odd-numbered toss is (32 + 1 + 2)/50 = 0.70. This estimate is not bad, since the theoretical probability is 2/3, or about 0.6667.

Y:        1      2      3     4      5     10    Total
Count:    32     9      1     5      2     1     N = 50
Percent:  64.00  18.00  2.00  10.00  4.00  2.00
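The theoretical value 2/3 for an odd-numbered toss comes from summing a geometric series over the odd trials: $\sum_{k \text{ odd}} (1-p)^{k-1}p = \frac{p}{1-(1-p)^2}$. A short Python check with exact fractions, assuming a fair coin (p = 1/2):

```python
from fractions import Fraction

p = Fraction(1, 2)  # fair coin: P(head) on each toss
q = 1 - p

# P(first head on toss k) = q^(k-1) p; add the odd k = 1, 3, 5, ..., 59
partial = sum(q ** (k - 1) * p for k in range(1, 60, 2))
closed_form = p / (1 - q ** 2)  # geometric series with ratio q^2

print("partial sum over odd k < 60:", float(partial))
print("closed form p / (1 - q^2):  ", closed_form)
```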

8.63 Let X = the number of southerners out of 20 who believe they have been healed by prayer. Then X is a binomial random variable with n = 20 and p = 0.46. (a) P(X = 10) = binompdf(20, 0.46, 10) ≈ 0.1652. (b) P(10 < X < 15) = P(11 ≤ X ≤ 14) = binomcdf(20, 0.46, 14) − binomcdf(20, 0.46, 10) ≈ 0.9917 − 0.7209 = 0.2708, or P(10 ≤ X ≤ 15) = binomcdf(20, 0.46, 15) − binomcdf(20, 0.46, 9) ≈ 0.9980 − 0.5557 = 0.4423, depending on whether “between” is interpreted as exclusive or inclusive. (c) P(X > 15) = 1 − P(X ≤ 15) ≈ 1 − 0.9980 = 0.002. (d) P(X < 8) = P(X ≤ 7) = binomcdf(20, 0.46, 7) ≈ 0.2241.
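Without a TI-83, the same probabilities can be computed from the binomial formula. In this Python sketch, `binompdf` and `binomcdf` are our own helpers named after the calculator commands; they are not a Python library API.

```python
from math import comb

N, P = 20, 0.46  # 20 southerners, P(believes healed by prayer) = 0.46


def binompdf(k: int) -> float:
    """P(X = k), mirroring the TI-83 binompdf(20, 0.46, k)."""
    return comb(N, k) * P ** k * (1 - P) ** (N - k)


def binomcdf(k: int) -> float:
    """P(X <= k), mirroring binomcdf(20, 0.46, k)."""
    return sum(binompdf(j) for j in range(k + 1))


print(f"(a) P(X = 10)        = {binompdf(10):.4f}")
print(f"(b) P(11 <= X <= 14) = {binomcdf(14) - binomcdf(10):.4f}")
print(f"    P(10 <= X <= 15) = {binomcdf(15) - binomcdf(9):.4f}")
print(f"(c) P(X > 15)        = {1 - binomcdf(15):.4f}")
print(f"(d) P(X <= 7)        = {binomcdf(7):.4f}")
```

The two readings of “between” in (b) differ only in which cumulative values are subtracted: the exclusive version drops the endpoints 10 and 15.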


8.64 Let X = the number of schools out of 20 that say they have a soft drink contract. X is binomial with n = 20 and p = 0.62. (a) P(X = 8) = binompdf(20, 0.62, 8) ≈ 0.0249. (b) P(X ≤ 8) = binomcdf(20, 0.62, 8) ≈ 0.0381. (c) P(X ≥ 4) = 1 − P(X ≤ 3) ≈ 1 − 0.00002 = 0.99998. (d) P(4 ≤ X ≤ 12) = P(X ≤ 12) − P(X ≤ 3) = binomcdf(20, 0.62, 12) − binomcdf(20, 0.62, 3) ≈ 0.51078 − 0.00002 = 0.51076. (e) The random variable of interest is defined at the beginning of the solution, and the probability distribution table is shown below.

X    P(X)        X    P(X)
0    0.000000    11   0.144400
1    0.000000    12   0.176700
2    0.000002    13   0.177415
3    0.000020    14   0.144733
4    0.000135    15   0.094458
5    0.000707    16   0.048161
6    0.002882    17   0.018489
7    0.009405    18   0.005028
8    0.024935    19   0.000863
9    0.054244    20   0.000070
10   0.097353

(f) The probability histogram for X is shown below.

[Figure: probability histogram of X; x-axis: Number of schools with soft drink contracts (0 to 20), y-axis: Probability]

8.65 (a) X, the number of positive tests, has a binomial distribution with parameters n = 1000 and p = 0.004. (b) µ = np = 1000 × 0.004 = 4 positive tests. (c) To use the Normal approximation, we need np and n(1 − p) both bigger than 10. As we saw in (b), np = 4, so the Normal approximation is not appropriate here.

8.66 Let X = the number of customers who purchase strawberry frozen yogurt. Then X is a binomial random variable with n = 10 and p = 0.2. The probability of observing 5 or more orders for strawberry frozen yogurt among 10 customers is P(X ≥ 5) = 1 − P(X ≤ 4) = 1 − binomcdf(10, 0.2, 4) ≈ 1 − 0.9672 = 0.0328. Even though there is only about a 3.28% chance of observing 5 or more customers who purchase strawberry frozen yogurt, such rare events do occur in the real world and in our simulations. The moral of the story is that the regularity that helps us to understand probability comes with a large number of repetitions and a large number of trials.
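The point that rare events still happen in repeated sampling can be illustrated by simulation. The Python sketch below is our own illustration (the seed and number of repetitions are arbitrary choices): it compares the exact binomial tail with the observed fraction of simulated groups of 10 customers in which 5 or more order strawberry.

```python
import random
from math import comb

n, p = 10, 0.2  # 10 customers, P(strawberry) = 0.2

# Exact tail: P(X >= 5) = 1 - P(X <= 4)
exact = 1 - sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(5))

# Simulate many groups of 10 customers and count how often 5+ choose strawberry.
rng = random.Random(66)  # arbitrary seed
trials = 100_000
hits = sum(
    1 for _ in range(trials)
    if sum(rng.random() < p for _ in range(n)) >= 5
)
print(f"exact P(X >= 5) = {exact:.4f}")
print(f"simulated       = {hits / trials:.4f} ({hits} of {trials} groups)")
```

Even though each group is unlikely to show 5 or more strawberry orders, roughly 3 groups in every 100 do, so a class running many simulations should expect to see it happen.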


8.67 X is geometric with p = 0.325. (a) P(X = 1) = 0.325. (b) $P(X \le 3) = P(X = 1) + P(X = 2) + P(X = 3) = 0.325 + (1 - 0.325)^{2-1}(0.325) + (1 - 0.325)^{3-1}(0.325) \approx 0.6925$. Alternatively, $P(X \le 3) = 1 - P(X > 3) = 1 - (0.675)^3 \approx 0.6925$. (c) $P(X > 4) = (1 - 0.325)^4 \approx 0.2076$. (d) The expected number of times Roberto will have to go to the plate to get his first hit is $\mu = \frac{1}{p} = \frac{1}{0.325} \approx 3.0769$, or just over 3 at-bats. (e) Use the commands seq(X,X,1,10)→L1, geompdf(0.325,L1)→L2, and geomcdf(0.325,L1)→L3. (f) A probability histogram (left) and a cumulative probability histogram (right) are shown below.

[Figure: left, probability histogram; right, cumulative probability histogram; x-axis: Number of at-bats (1 to 10), y-axes: Probability and Cumulative probability]
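For readers without a TI-83, the three lists from (e) can be built in Python. This sketch uses the closed forms $P(X = x) = (1-p)^{x-1}p$ and $P(X \le x) = 1 - (1-p)^x$; the list names L1, L2, and L3 simply mirror the calculator lists.

```python
p = 0.325  # Roberto's probability of a hit in any at-bat

L1 = list(range(1, 11))                    # seq(X,X,1,10) -> L1
L2 = [(1 - p) ** (x - 1) * p for x in L1]  # geompdf(0.325, L1) -> L2
L3 = [1 - (1 - p) ** x for x in L1]        # geomcdf(0.325, L1) -> L3

print(" X   P(X)    P(X <= x)")
for x, pdf, cdf in zip(L1, L2, L3):
    print(f"{x:2d}  {pdf:.4f}  {cdf:.4f}")

print(f"P(X > 4) = {(1 - p) ** 4:.4f}, mean = {1 / p:.4f}")
```

The third entry of L3 equals the sum of the first three entries of L2, which is the agreement between the two methods used in (b).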

8.68 (a) By the 68–95–99.7 rule, the probability of any one observation falling within the interval µ − σ to µ + σ is about 0.68. Let X = the number of observations out of 5 that fall within this interval. Assuming that the observations are independent, X is B(5, 0.68). Thus, P(X = 4) = binompdf(5, 0.68, 4) ≈ 0.3421. (b) By the 68–95–99.7 rule, 95% of all observations fall within the interval µ − 2σ to µ + 2σ. Thus, 2.5% (half of 5%) of all observations will fall above µ + 2σ. Let X = the number of observations that must be taken to get the first one falling above µ + 2σ. Then X is geometric with p = 0.025. Thus, $P(X = 4) = (1 - 0.025)^3 \times 0.025 = (0.975)^3 \times 0.025 \approx 0.0232$.
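The two parts of 8.68 contrast the binomial and geometric settings: in (a) the number of trials is fixed at 5, while in (b) we wait for the first success. A minimal Python check, using only the approximate 68–95–99.7 probabilities (so the results inherit that approximation):

```python
from math import comb

# (a) X ~ B(5, 0.68): observations within one standard deviation of the mean
p_within = 0.68
part_a = comb(5, 4) * p_within ** 4 * (1 - p_within) ** 1  # binompdf(5, 0.68, 4)

# (b) X geometric with p = 0.025: first observation above mu + 2 sigma on trial 4
p_above = 0.025
part_b = (1 - p_above) ** 3 * p_above

print(f"(a) P(X = 4) = {part_a:.4f}")
print(f"(b) P(X = 4) = {part_b:.4f}")
```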