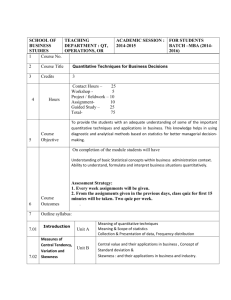

Statistics


Hui Bian, Office for Faculty Excellence, Fall 2011

Purpose of data screening
• To find wrong entries.
• To find extreme responses or outliers.
• To see whether the data meet the statistical assumptions of the analysis you are going to use.

Distribution diagnosis
• Frequency tables
• Histograms and bar graphs
• Stem-and-leaf plots
• Box plots

Frequencies
• The Frequencies procedure provides statistics and graphical displays to describe your variables.
• Frequency tables can give you frequency counts, percentages, cumulative percentages, mean, median, mode, sum, standard deviation, variance, skewness, kurtosis, etc.
• Assumptions:
• Tabulations and percentages are useful for categorical data.
• Mean and standard deviation are based on normal theory and are appropriate for quantitative variables.
• Robust statistics, such as the median, percentiles, and quartiles, are appropriate for quantitative variables that may or may not meet the assumption of normality.
• Example: what are the distributions of the demographic variables age (Q1), gender (Q2), and grade (Q3)?
• First, recode Q1 into Q1r, because we want the participants’ real ages.
• Obtain a frequency table: Analyze > Descriptive Statistics > Frequencies.
• Click Statistics to open the Statistics dialog box.
• Click Charts to open the Charts dialog box.
• (SPSS output: frequency statistics)

Skewness and kurtosis
• Skewness and kurtosis are statistics that characterize the shape and symmetry of the distribution.
• Skewness: a measure of the asymmetry of a distribution. The normal distribution is symmetric and has a skewness value of zero.
• Positive skewness: a long right tail.
• Negative skewness: a long left tail.
• Kurtosis: a measure of the extent to which observations cluster around a central point. For a normal distribution, the value of the kurtosis statistic is zero.
• Leptokurtic data values are more peaked than a normal distribution (positive kurtosis).
• Platykurtic data values are flatter and more dispersed along the X axis than a normal distribution (negative kurtosis).
• Unacceptable levels of skewness and kurtosis indicate a departure from normality. Cutoffs vary:
• Some use ±0.5.
• Some use ±1.00.
• As a guideline, a skewness value more than twice its standard error is taken to indicate a departure from symmetry (from the SPSS Help menu).

Recoding Q3
• (SPSS output: frequency tables of Q2 and Q3)
• From the frequency table of Q3, we decide that we do not want the Ungraded or Other grade group in our data analysis, so we need to recode Q3.
• How to recode Q3 into Q3r:
• Keep four levels (9th through 12th grade).
• Recode the fifth level (Ungraded or Other grade) into system-missing.
• Run Frequencies for Q3r.

Histogram
1. It is plotted along an equal-interval scale. The height of each bar is the count of values that fall in that interval.
2. It shows the shape, center, and spread of the distribution.
3. A superimposed normal curve helps you judge whether the data are normally distributed.
• Example: frequency of Q41 (frequency of drinking).

Explore
• The Explore procedure produces summary statistics and graphical displays, either for all of your cases or separately for groups of cases.
• Reasons for using the Explore procedure:
• Data screening
• Outlier identification
• Description
• Assumption checking
• Characterizing differences among subpopulations (groups of cases)
• Exploring the data can help you determine whether the statistical techniques you are considering for data analysis are appropriate. The exploration may indicate that you need to transform the data if the technique requires a normal distribution, or you may decide that you need nonparametric tests.
• Statistics: mean, median, standard error, variance, standard deviation, range, skewness, kurtosis, confidence interval for the mean, the Kolmogorov-Smirnov statistic with a Lilliefors significance level for testing normality, and the Shapiro-Wilk statistic.
• Plots: box plots, stem-and-leaf plots, histograms, normality plots, and spread-versus-level plots with Levene tests and transformations.
• Data: quantitative variables or categorical variables with a reasonable number of distinct categories.
• Assumptions: the distribution of your data does not have to be symmetric or normal.
• Example: let’s explore Q41 again, by Q2 (gender).
• Obtain Explore: Analyze > Descriptive Statistics > Explore.
• Click Statistics to open the Statistics dialog box.
• Click Plots to open the Plots dialog box.

Descriptives: Q41 (How many days drink alcohol 30 days) by Q2 (What is your sex)

| Statistic | Female | Female Std. Error | Male | Male Std. Error |
|---|---|---|---|---|
| Mean | 1.76 | .013 | 1.85 | .015 |
| 95% CI for mean, lower bound | 1.73 | | 1.83 | |
| 95% CI for mean, upper bound | 1.79 | | 1.88 | |
| 5% trimmed mean | 1.63 | | 1.69 | |
| Median | 1.00 | | 1.00 | |
| Variance | 1.230 | | 1.732 | |
| Std. deviation | 1.109 | | 1.316 | |
| Minimum | 1 | | 1 | |
| Maximum | 7 | | 7 | |
| Range | 6 | | 6 | |
| Interquartile range | 1 | | 1 | |
| Skewness | 1.647 | .029 | 1.726 | .028 |
| Kurtosis | 2.501 | .058 | 2.624 | .055 |

• Test of normality: the normality assumption is not satisfied.
• Stem-and-leaf plots use the original data values to display the distribution’s shape.
• (Figure: stem-and-leaf plots of Q41 for females and males)
• Normal Q-Q plots:
• The straight line in the plot represents the expected values when the data are normally distributed.
• A normal distribution is indicated if the data points fall on or very near the diagonal line.
• (Figures: normal Q-Q plot of Q41 among females; normal Q-Q plot of Q41 among males)

Box plots
• Why use box plots?
• They provide some indication of the data’s symmetry and skewness.
• We can detect outliers.
• By placing a box plot for each category side by side on the same graph, one can quickly compare data sets.
• A box plot splits the data set into quartiles.
• Calculate the median and the quartiles (the lower quartile is the 25th percentile and the upper quartile is the 75th percentile).
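The quartile step above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SPSS’s own algorithm: quartiles come from the standard library’s `statistics.quantiles` (default "exclusive" method), and SPSS may compute the box edges differently (for example, with Tukey’s hinges), so values can differ slightly on small samples. The outlier rule (1.5 to 3 box lengths from the box edge = outlier, more than 3 = extreme) follows the convention SPSS box plots use; the function name and the ten data values are hypothetical.

```python
import statistics


def box_plot_summary(values):
    """Quartiles, IQR, whisker ends, and outlier flags for one variable (a sketch)."""
    data = sorted(values)
    # With n=4, statistics.quantiles returns the three cut points: Q1, median, Q3.
    q1, median, q3 = statistics.quantiles(data, n=4)
    box_length = q3 - q1  # interquartile range: the middle 50% of the data

    inner_low, inner_high = q1 - 1.5 * box_length, q3 + 1.5 * box_length
    outer_low, outer_high = q1 - 3 * box_length, q3 + 3 * box_length

    # Assumed convention: 1.5 to 3 box lengths beyond the box = outlier,
    # more than 3 box lengths beyond the box = extreme value.
    extremes = [x for x in data if x < outer_low or x > outer_high]
    outliers = [x for x in data
                if outer_low <= x < inner_low or inner_high < x <= outer_high]
    # Whiskers end at the smallest and largest values that are not flagged.
    inside = [x for x in data if inner_low <= x <= inner_high]

    return {
        "q1": q1, "median": median, "q3": q3, "iqr": box_length,
        "whisker_low": min(inside), "whisker_high": max(inside),
        "outliers": outliers, "extremes": extremes,
    }


# Hypothetical "days drinking" values for ten respondents.
summary = box_plot_summary([1, 1, 1, 1, 2, 2, 2, 3, 3, 7])
print(summary)
```

Here Q1 = 1, median = 2, Q3 = 3, so the box length is 2; the value 7 lies between 1.5 and 3 box lengths above the box and is flagged as an outlier, while the upper whisker stops at 3.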
• For the 25th percentile, 25% of the data values are below it.
• The box represents the middle 50% of the data, the "body" of the data.
• (Figure: annotated box plot of a response variable with no outliers, labeling the smallest value, 25th percentile, median, and highest value)
• (Figure: box plots of Q41 by gender, with the median marked)
• Box position:
• The location of the box between the two outer lines (whiskers) can provide insight into the normality of the sample’s distribution.
• When the box is not centered between the outer lines, the sample may be positively or negatively skewed.
• If the box is shifted toward the low end, the distribution is positively skewed; if the box is shifted toward the high end, it is negatively skewed.
• Box size:
• The size of the box can provide an estimate of the kurtosis.
• A very thin box relative to the outer lines indicates a distribution with a thinner peak.
• A wider box relative to the outer lines indicates a wider peak. The wider the box, the more U-shaped the distribution becomes.

Data transformation
• If there is a violation of statistical assumptions, you might need a data transformation to correct it.
• Concern: transformed variables can be harder to interpret.
• Common transformations: square root, logarithm, inverse, reflect and square root, reflect and logarithm, and reflect and inverse.

Crosstabs
• The Crosstabs procedure forms two-way and multiway tables and provides a variety of tests and measures of association for two-way tables.
• Crosstabs’ statistics and measures of association are computed for two-way tables only.
• Example 1: the association (independence) of gender (Q2) and physical activity (Q80r).
• Q2 has two levels: female and male.
• Q80r has four levels: 0 days, 1-2 days, 3-5 days, and more than 5 days.
• Obtain Crosstabs: Analyze > Descriptive Statistics > Crosstabs.
• Click Statistics to open the Statistics dialog box.
• Click Cells to open the Cell Display dialog box.

Crosstabs statistics (SPSS): Chi-square
• For tables with two rows and two columns, select Chi-square to calculate the Pearson chi-square, the likelihood-ratio chi-square, Fisher’s exact test, and Yates’ corrected chi-square.
• For 2 × 2 tables, Fisher’s exact test is computed when a table that does not result from missing rows or columns in a larger table has a cell with an expected frequency of less than 5.
• Yates’ corrected chi-square is computed for all other 2 × 2 tables.
• A common rule of thumb is that the Pearson chi-square is unreliable when more than 20% of the cells have an expected frequency below 5.
• For tables with any number of rows and columns, select Chi-square to calculate the Pearson chi-square and the likelihood-ratio chi-square.
• When both table variables are quantitative, Chi-square yields the linear-by-linear association test.
• (SPSS output: Q80r by Q2 crosstabulation and chi-square results)
• Nominal. For nominal data (no intrinsic order, such as Catholic, Protestant, and Jewish), you can select Contingency coefficient, Phi and Cramer’s V, Lambda (symmetric and asymmetric lambdas and Goodman and Kruskal’s tau), and Uncertainty coefficient.
• Ordinal. For tables in which both rows and columns contain ordered values, select Gamma (zero-order for two-way tables and conditional for three-way to 10-way tables), Kendall’s tau-b, and Kendall’s tau-c.
• For predicting column categories from row categories, select Somers’ d.
• Nominal by Interval. When one variable is categorical and the other is quantitative, select Eta. The categorical variable must be coded numerically.
• Kappa. Cohen’s kappa measures the agreement between the evaluations of two raters when both are rating the same object (interrater reliability). A value of 1 indicates perfect agreement. A value of 0 indicates that agreement is no better than chance.
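Kappa compares the observed proportion of agreement, p_o (the diagonal cells of the table), with the agreement expected by chance, p_e (computed from the row and column totals): kappa = (p_o - p_e) / (1 - p_e). The Python sketch below is a minimal illustration; the function name is hypothetical. The cell counts are the Q24-by-Q25 counts that appear in the relative-risk and odds-ratio formulas of Example 3 in this section (rows: Q24 yes/no; columns: Q25 yes/no); with them the function gives a kappa of about 0.589, the value SPSS reports for Example 2.

```python
def cohens_kappa(table):
    """Cohen's kappa for a square agreement table of counts.

    Rows are one rater's (or measure's) categories and columns the other's,
    listed in the same order.
    """
    k = len(table)
    if any(len(row) != k for row in table):
        # Mirrors the SPSS restriction: both variables need the same categories.
        raise ValueError("kappa requires a square table")
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]

    p_observed = sum(table[i][i] for i in range(k)) / n  # agreement on the diagonal
    p_expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    return (p_observed - p_expected) / (1 - p_expected)


# Q24 (rows: yes, no) by Q25 (columns: yes, no)
q24_by_q25 = [[1274, 957],
              [483, 13443]]
print(f"kappa = {cohens_kappa(q24_by_q25):.3f}")
```

The observed agreement here is high (about 0.91), but because most respondents answered "no" to both questions, chance agreement is also high (about 0.78), which is why kappa is only moderate.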
• Kappa is available only for tables in which both variables use the same category values and both variables have the same number of categories.
• Example 2: whether Q24 (ever considered suicide) and Q25 (ever made a suicide plan) agree with each other.
• Sometimes, one measure is from an objective test and the other is a self-report measure.
• We need to get the kappa statistic.
• (SPSS output for Example 2)

Kappa statistics
• Kappa = 0.589 with p < 0.001. This measure of agreement, while statistically significant, is only marginally convincing. As a rule of thumb, kappa values from 0.40 to 0.59 are considered moderate, 0.60 to 0.79 substantial, and 0.80 or above outstanding. Most statisticians prefer kappa values to be at least 0.6, and most often higher than 0.7, before claiming a good level of agreement.

Risk
• Risk. For 2 × 2 tables, a measure of the strength of the association between the presence of a factor and the occurrence of an event.
• Relative risk (RR) is the risk of an event (or of developing a disease) relative to exposure. It is the ratio of the probability of the event occurring in the exposed group to the probability in a non-exposed group.
• Example 3: examine the effect of thinking about suicide on suicide behavior. We have two groups based on Q24: thinking about suicide (yes) and not thinking about suicide (no). The outcome is whether they made a suicide plan, Q25 (yes/no).
• Relative risk: the risk of making a suicide plan among those who thought about suicide relative to those who did not. It is the ratio of the probability of making a plan (yes) in the thinking-about-suicide group to that in the non-thinking group.
• RR = (1274/2231)/(483/13926) = 16.46
• This means the risk of making a plan among people who thought about suicide is more than 16 times that among people who did not.
• The odds ratio compares the odds of making a suicide plan in the thinking and non-thinking groups.
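The relative risk above, and the odds ratio for the same table, can be reproduced from the four cell counts. A minimal sketch; the function and argument names are hypothetical, with a and b the plan yes/no counts in the exposed (thinking-about-suicide) group and c and d the same counts in the non-exposed group.

```python
def risk_measures(a, b, c, d):
    """Relative risk and odds ratio for a 2 x 2 table.

    a, b: event yes / no in the exposed group
    c, d: event yes / no in the non-exposed group
    """
    risk_exposed = a / (a + b)     # proportion with the event among the exposed
    risk_unexposed = c / (c + d)   # proportion with the event among the non-exposed
    relative_risk = risk_exposed / risk_unexposed
    odds_ratio = (a / b) / (c / d)  # odds of the event, exposed vs. non-exposed
    return relative_risk, odds_ratio


# Q24 = yes: 1274 made a plan, 957 did not; Q24 = no: 483 made a plan, 13443 did not.
rr, odds = risk_measures(1274, 957, 483, 13443)
print(f"RR = {rr:.2f}, OR = {odds:.2f}")
```

This prints RR = 16.46 and OR = 37.05, matching the hand calculations in this example.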
• Odds ratio = (1274/957)/(483/13443) = 37.05
• This odds ratio shows that the odds of making a plan are about 37 times higher in the thinking group than in the non-thinking group. (The odds ratio is much larger than the relative risk here because making a plan is common in the thinking group, 1274 of 2231 or about 57%; the two measures are close only when the event is rare.)

Bivariate correlation
• Bivariate correlation is about the association of two variables. The research question is whether two variables are related to each other. Bivariate correlation statistic: the Pearson product-moment coefficient (Pearson correlation, r).
• Before calculating a correlation coefficient, screen your data for outliers and for evidence of a linear relationship.
• Pearson’s correlation coefficient is a measure of linear association.
• Two variables can be perfectly related, but if the relationship is not linear, Pearson’s correlation coefficient is not an appropriate statistic for measuring their association.
• Data. Use symmetric quantitative variables for Pearson’s correlation coefficient, and quantitative variables or variables with ordered categories for Spearman’s rho and Kendall’s tau-b.
• Assumptions. Pearson’s correlation coefficient assumes that each pair of variables is bivariate normal.
• Correlation coefficients. For quantitative, normally distributed variables, choose the Pearson correlation coefficient.
• If your data are not normally distributed or have ordered categories, choose Kendall’s tau-b or Spearman’s rho, which measure the association between rank orders.
• Example: correlation between Q3r and Q80.
• Go to Analyze > Correlate > Bivariate.
• (SPSS output)
• Correlation coefficients range in value from -1 (a perfect negative relationship) to +1 (a perfect positive relationship).
• A value of 0 indicates no linear relationship. When interpreting your results, be careful not to draw any cause-and-effect conclusions from a significant correlation.
• Pearson correlation: -.064; Kendall’s tau-b: -.051
• Question: the coefficients are small but significant. Why?
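One way to see why: the test of H0: rho = 0 uses t = r * sqrt((n - 2) / (1 - r^2)) with n - 2 degrees of freedom, so for a fixed r the statistic grows with the square root of n. The sketch below applies this to r = -.064 at several hypothetical sample sizes (the output above does not give n). As an assumption, it uses a normal approximation to the t distribution for the two-sided p-value, which is reasonable only for large n; the function name is illustrative.

```python
import math


def correlation_t_test(r, n):
    """t statistic for H0: rho = 0 and an approximate two-sided p-value."""
    t = r * math.sqrt((n - 2) / (1 - r ** 2))
    # Assumption: n is large, so t with n - 2 df is close to a standard normal.
    p_two_sided = math.erfc(abs(t) / math.sqrt(2))
    return t, p_two_sided


r = -0.064
print(f"r squared = {r ** 2:.4f}")  # share of variance the two variables have in common
for n in (100, 1_000, 10_000):      # hypothetical sample sizes
    t, p = correlation_t_test(r, n)
    print(f"n = {n:>6}: t = {t:6.2f}, p = {p:.2g}")
```

The same r is far from significant at n = 100 but highly significant at n = 10,000, while r squared stays at about 0.004: the variables share less than 1% of their variance whatever the p-value.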
• For large samples, it is easy to achieve significance, and one must pay attention to the strength of the correlation to determine whether the relationship explains very much.

Partial correlation
• Partial correlation coefficients describe the linear relationship between two variables while controlling for the effects of one or more additional variables.
• Correlations are measures of linear association.
• Example: is there a relationship between Q39 and Q80 while controlling for Q81?
• Analyze > Correlate > Partial
• (SPSS output)

T test
• One-Sample T Test
• Independent-Samples T Test
• Paired-Samples T Test
• One-Way ANOVA

One-Sample T Test
• This procedure tests whether the mean of a single variable differs from a specified constant.
• Example: we want to know whether the mean of Q80 is different from 5 days (we use 5 days as the cutoff).
• Recode Q80 into 0 = 0 days, 1 = 1 day, 2 = 2 days … 7 = 7 days.
• Go to Analyze > Compare Means > One-Sample T Test.
• (SPSS output)

Independent-Samples T Test
• The Independent-Samples T Test procedure compares means for two groups of cases.
• Example: whether there is a difference in Q80 between males and females.
• Go to Analyze > Compare Means > Independent-Samples T Test.
• (SPSS dialog and output)

Paired-Samples T Test
• This procedure compares the means of two variables for a single group.
• The procedure computes the differences between values of the two variables for each case and tests whether the average differs from 0.
• Each subject has two measures, often called before and after measures. An alternative design for which this test is used is a matched-pairs or case-control study, in which each record in the data file contains the response for one person and also for his or her matched control subject.
• Example: use the combined data set from the Merging data sets example.
• We want to know whether there is a difference in a05 (school performance) over time after the intervention.
• We focus on one group, so we need to select the cases in group 1.
• Go to Analyze > Compare Means > Paired-Samples T Test.
• (SPSS dialog and output: Paired-Samples T Test)
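As described above, the paired-samples t test is a one-sample t test on the within-case differences, tested against 0. A minimal standard-library sketch under that reading; the function names and the before/after scores are hypothetical (not the a05 data), and the p-value, which needs the t distribution, is left to SPSS or a statistics library.

```python
import math
import statistics


def one_sample_t(values, mu0=0.0):
    """t statistic and degrees of freedom for H0: mean(values) == mu0."""
    n = len(values)
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return (mean - mu0) / se, n - 1


def paired_t(before, after):
    """Paired-samples t test: one-sample t on the differences, tested against 0."""
    if len(before) != len(after):
        raise ValueError("each case needs both measures")
    differences = [a - b for b, a in zip(before, after)]
    return one_sample_t(differences, 0.0)


# Hypothetical school-performance scores for five students at two time points.
time1 = [3, 4, 2, 5, 4]
time2 = [4, 5, 3, 5, 6]
t, df = paired_t(time1, time2)
print(f"t = {t:.3f}, df = {df}")
```

The differences are 1, 1, 1, 0, 2 (mean 1, standard deviation about 0.707), giving t of about 3.162 with 4 degrees of freedom. The same `one_sample_t` function covers the one-sample example, e.g. testing recoded Q80 values with `mu0=5`.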