LIUC lecture 7

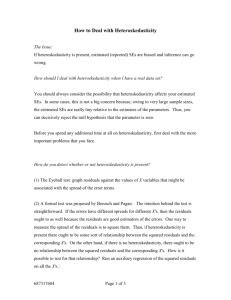

Quantitative Methods for Economics, Finance and Management (A86050 – F86050)
Matteo Manera – matteo.manera@unimib.it
Marzio Galeotti – marzio.galeotti@unimi.it

This material is taken and adapted from Guy Judge's Introduction to Econometrics page at the University of Portsmouth Business School: http://judgeg.myweb.port.ac.uk/ECONMET/

Econometric "problems"
• Heteroskedasticity
• Normality of the disturbances
• Multicollinearity
• Autocorrelation
• Bias

Econometric "problems": Heteroskedasticity
What does it mean? The variance of the error term is not constant across observations.
What are its consequences? The least squares estimators are no longer efficient, and the results of t-tests and F-tests may be misleading.
How can you detect the problem? Plot the residuals against each of the regressors, or use one of the more formal tests.
How can you remedy the problem? Respecify the model: look for other missing variables; perhaps take logs or choose some other appropriate functional form; or make sure relevant variables are expressed "per capita".
Consumption function example (cross-section data): is credit worthiness a missing variable?
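
A minimal Python sketch of what heteroskedasticity looks like in a cross-section consumption function. Everything in it is an assumption for illustration: the hypothetical coefficients (20 and 0.8), the income range and an error standard deviation equal to 10% of income. It fits OLS and, as a numerical stand-in for a residual plot, compares the mean squared residual in the lower- and upper-income halves of the sample.

```python
import random

def simple_ols(x, y):
    """Intercept and slope of the least-squares line of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1

random.seed(1)
# Hypothetical data: the error s.d. is assumed proportional to income.
income = [random.uniform(10, 400) for _ in range(500)]
consumption = [20 + 0.8 * x + random.gauss(0, 0.1 * x) for x in income]

b0, b1 = simple_ols(income, consumption)
resid = [c - (b0 + b1 * x) for x, c in zip(income, consumption)]

# Rank the residuals by income and compare their spread in the two halves.
ranked = [r for _, r in sorted(zip(income, resid))]
half = len(ranked) // 2
low_var = sum(r * r for r in ranked[:half]) / half
high_var = sum(r * r for r in ranked[half:]) / (len(ranked) - half)

print(f"slope = {b1:.3f}, low-income mean sq. resid = {low_var:.1f}, high-income = {high_var:.1f}")
```

With this set-up the estimated slope stays close to the assumed 0.8, in line with OLS remaining unbiased, while the mean squared residual of the high-income half is several times that of the low-income half.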

[Figure: Scatter diagram and fitted line (Consumption against Income)]

[Figures: The homoskedastic case; The heteroskedastic case]

The consequences of heteroskedasticity
• OLS estimators are still unbiased (unless there are also omitted variables).
• However, OLS estimators are no longer efficient, i.e. no longer minimum variance.
• The formulae used to estimate the coefficient standard errors are no longer correct (show):
  • so the t-tests will be misleading (if the error variance is positively related to an independent variable, then the estimated standard errors are biased downwards and hence the t-values will be inflated);
  • confidence intervals based on these standard errors will be wrong.

Detecting heteroskedasticity
• Visual inspection of the scatter diagram or of the residuals
• Goldfeld-Quandt test: suitable for a simple form of heteroskedasticity
• Breusch-Pagan test: a test of more general forms of heteroskedasticity

Residual plots
Plot the residuals against one variable at a time.

Goldfeld-Quandt test
Suppose it looks as if $\sigma_{u_i} = \sigma_u X_i$, i.e.
the error variance is proportional to the square of one of the X's. Rank the data according to the culprit variable and conduct an F test using $F = RSS_2/RSS_1$, where these RSS come from separate regressions on the first and last $(n-c)/2$ observations ($c$ is a central section of the data, usually about 25% of $n$). Under $H_0$ the statistic has an F distribution with $(n-c)/2 - k$ degrees of freedom in both numerator and denominator, where $k$ is the number of estimated coefficients. Reject $H_0$ of homoskedasticity if $F_{cal} > F_{tables}$.

Breusch-Pagan test
Regress the squared residuals on a constant, the original regressors, the original regressors squared and, if there are enough data, the cross-products of the X's. (Including the squares and cross-products gives White's (1980) general form of the test; the basic Breusch-Pagan auxiliary regression uses only the original regressors.) The null hypothesis of no heteroskedasticity is rejected if the value of the test statistic is "too high" (P-value too low). Both $\chi^2$ and F forms of the statistic are available.

Remedies
• Respecification of the model:
  • include relevant omitted variable(s);
  • express the model in log-linear form or some other appropriate functional form;
  • express variables in per capita form.
• Transform the variables so that the transformed model has a homoskedastic error (show this case).
• Where respecification won't solve the problem, use robust heteroskedasticity-consistent standard errors (due to White, 1980).

Normality of the disturbances
Test the null hypothesis of normality with a $\chi^2$ test with 2 degrees of freedom: at the 5% level, reject $H_0$ if $\chi^2 > 5.99$. Non-normality may reflect outliers or a skewed distribution of the residuals.

RESET test
• Originated by Ramsey (1969).
• Tests for functional form mis-specification.
• Run the regression and get the fitted values.
• Regress Y on the X's and powers of the fitted Y's.
• If these additional regressors are significant (judged by an F test), then the original model is mis-specified.

Multicollinearity
What does it mean? A high degree of correlation amongst the explanatory variables.
What are its consequences? It may be difficult to separate out the effects of the individual regressors. Standard errors may be inflated and t-values depressed. Note: a symptom may be a high $R^2$ but low t-values.
How can you detect the problem?
Examine the correlation matrix of the regressors; also carry out auxiliary regressions amongst the regressors. Look at the Variance Inflation Factors.
NOTE: be careful not to apply t tests mechanically without checking for multicollinearity. Multicollinearity is a data problem, not a misspecification problem.

Variance Inflation Factor (VIF)
Multicollinearity inflates the variance of an estimator:

$$VIF_j = \frac{1}{1 - R_j^2}$$

where $R_j^2$ is the $R^2$ from a regression of $X_j$ on the other X variable(s). As a rule of thumb, there is a serious multicollinearity problem if $VIF_j > 5$.

Specification and mis-specification tests
Suppose we have in mind a model that we might call our "maintained hypothesis":

$$Y_i = b_0 + b_1 X_{1i} + b_2 X_{2i} + \dots + b_k X_{ki} + u_i$$

Tests of all types of restrictions on the b values are sometimes called specification tests. Our maintained hypothesis also makes a number of assumptions about the disturbance term u, and we must check the validity of these assumptions. These tests are called mis-specification tests (or diagnostic tests).
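
The Goldfeld-Quandt procedure described in these notes can be sketched in Python for a model with a single regressor. The function names and the toy data are hypothetical. The sketch ranks the observations by x, drops the c central ones, runs the two sub-regressions and returns $RSS_2/RSS_1$ together with the common degrees of freedom, which you would then compare with F tables.

```python
def rss_simple(x, y):
    """Residual sum of squares from the OLS regression of y on a constant and x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    return sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))

def goldfeld_quandt(x, y, c):
    """Goldfeld-Quandt F = RSS2 / RSS1 for a one-regressor model.

    The data are ranked by x (the suspected culprit variable), the c central
    observations are dropped and separate regressions are run on the first and
    last (n - c) // 2 observations. Returns (F, df): F has df degrees of freedom
    in both numerator and denominator (2 coefficients per sub-regression).
    """
    ranked = sorted(zip(x, y))
    m = (len(ranked) - c) // 2
    low, high = ranked[:m], ranked[len(ranked) - m:]
    rss1 = rss_simple([p[0] for p in low], [p[1] for p in low])
    rss2 = rss_simple([p[0] for p in high], [p[1] for p in high])
    return rss2 / rss1, m - 2

# Made-up data: small errors at low x, errors ten times larger at high x.
pattern = [1, -1, -1, 1]
x = list(range(1, 11))
errors = [0.1 * p for p in pattern] + [0.0, 0.0] + [1.0 * p for p in pattern]
y = [3 + 2 * xi + e for xi, e in zip(x, errors)]

F, df = goldfeld_quandt(x, y, c=2)  # c = 2 is 20% of n, close to the usual 25%
print(F, df)
```

In the toy data the error pattern is orthogonal to the constant and to x within each group, so the residuals reproduce the errors exactly and F = 4/0.04 = 100 (up to rounding) with 2 and 2 degrees of freedom, well above the 5% table value of 19 for F(2, 2).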
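
A sketch of the auxiliary-regression idea behind the Breusch-Pagan test, for the one-regressor case. It uses the n·R² (Lagrange multiplier) form of the statistic, which is one common variant; with a single regressor in the auxiliary regression the statistic is compared with a $\chi^2$ distribution with 1 degree of freedom. The simulated data (error standard deviation proportional to x) are an assumption for illustration. A White-type version would add x² (and, with several regressors, their squares and cross-products) to the auxiliary regression.

```python
import random

def simple_fit(x, y):
    """OLS intercept, slope and R^2 for the regression of y on a constant and x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    rss = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    tss = sum((yi - y_bar) ** 2 for yi in y)
    return b0, b1, 1 - rss / tss

def breusch_pagan_lm(x, y):
    """LM = n * R^2 from regressing the squared OLS residuals on a constant and x.

    Under H0 (homoskedasticity) LM is approximately chi-squared with 1 df here,
    because the auxiliary regression has one regressor besides the constant.
    """
    b0, b1, _ = simple_fit(x, y)
    e2 = [(yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)]
    _, _, r2_aux = simple_fit(x, e2)
    return len(x) * r2_aux

CHI2_1_5PCT = 3.841  # 5% critical value of chi-squared with 1 df

random.seed(2)
x = [random.uniform(1, 100) for _ in range(200)]
y = [5 + 2 * xi + random.gauss(0, 0.5 * xi) for xi in x]  # hypothetical heteroskedastic data
lm = breusch_pagan_lm(x, y)
print(f"LM = {lm:.2f}, reject H0: {lm > CHI2_1_5PCT}")
```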
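
For the simple regression $Y_i = b_0 + b_1 X_i + u_i$, White's heteroskedasticity-consistent standard error of the slope replaces the common error variance estimate $s^2$ with the individual squared residuals. This sketch computes the basic HC0 form next to the conventional OLS standard error; statistical packages often report small-sample variants (e.g. HC1, HC3), so their numbers can differ slightly. The four-observation data set is made up so that the results can be checked by hand.

```python
import math

def ols_and_white_se(x, y):
    """Slope of y on x with conventional and White (HC0) standard errors.

    Conventional: sqrt(s^2 / Sxx), with s^2 = RSS / (n - 2).
    White HC0:    sqrt(sum((x_i - x_bar)^2 * e_i^2)) / Sxx.
    """
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    resid = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    s2 = sum(e * e for e in resid) / (n - 2)
    se_conventional = math.sqrt(s2 / sxx)
    se_white = math.sqrt(sum((xi - x_bar) ** 2 * e * e for xi, e in zip(x, resid))) / sxx
    return b1, se_conventional, se_white

b1, se_conv, se_hc0 = ols_and_white_se([0, 1, 2, 3], [0, 2, 1, 3])
print(b1, se_conv, se_hc0)
```

Here the slope is 0.8, the conventional standard error is √0.18 ≈ 0.424 and the HC0 standard error is 0.18; t-ratios would be built from whichever standard error is appropriate.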
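
The notes do not name the $\chi^2$ normality test with 2 degrees of freedom; a widely used test of this form is the Jarque-Bera test, which combines the skewness S and kurtosis K of the residuals as $JB = \frac{n}{6}\left(S^2 + \frac{(K-3)^2}{4}\right)$. Assuming that is the test intended, a minimal sketch is:

```python
def jarque_bera(resid):
    """Jarque-Bera statistic n/6 * (S^2 + (K - 3)^2 / 4) from residual skewness S and kurtosis K."""
    n = len(resid)
    mean = sum(resid) / n
    m2 = sum((e - mean) ** 2 for e in resid) / n
    m3 = sum((e - mean) ** 3 for e in resid) / n
    m4 = sum((e - mean) ** 4 for e in resid) / n
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2
    return n / 6 * (skew ** 2 + (kurt - 3) ** 2 / 4)

CHI2_2_5PCT = 5.991  # 5% critical value of chi-squared with 2 df

resid = [-1.0, 1.0] * 30  # made-up residuals that take only two values
jb = jarque_bera(resid)
print(jb, jb > CHI2_2_5PCT)
```

Residuals that take only the values -1 and +1 have S = 0 and K = 1, so JB = 60/6 × (0 + 4/4) = 10 > 5.99 and normality is rejected.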
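
A sketch of the RESET steps listed in these notes, written without external libraries. The helper `ols_residuals` (a hypothetical name) computes least-squares residuals with modified Gram-Schmidt. By default only the squared fitted values are added (`max_power=2`), which is a common choice but an assumption here. The data are made up: the true relation is quadratic, but a straight line is fitted.

```python
import math

def ols_residuals(columns, y):
    """Least-squares residuals of y on the given columns, computed by projecting y
    off an orthonormal basis built with modified Gram-Schmidt."""
    basis = []
    for col in columns:
        v = [float(a) for a in col]
        col_norm = math.sqrt(sum(a * a for a in v))
        for q in basis:
            proj = sum(a * b for a, b in zip(q, v))
            v = [a - proj * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-10 * col_norm:  # skip columns (numerically) collinear with earlier ones
            basis.append([a / norm for a in v])
    r = [float(a) for a in y]
    for q in basis:
        proj = sum(a * b for a, b in zip(q, r))
        r = [a - proj * b for a, b in zip(r, q)]
    return r

def reset_test(x_columns, y, max_power=2):
    """Ramsey RESET F statistic.

    Restricted model: y on a constant and x_columns.
    Unrestricted model: adds powers 2..max_power of the restricted fitted values.
    Returns (F, q, df2), where q is the number of added terms and df2 = n - k_u.
    """
    n = len(y)
    base = [[1.0] * n] + [[float(a) for a in c] for c in x_columns]
    e_r = ols_residuals(base, y)
    fitted = [yi - ei for yi, ei in zip(y, e_r)]
    # Rescaling the fitted values before taking powers leaves the column span
    # (and hence the test) unchanged but keeps the numbers well scaled.
    scale = max(abs(f) for f in fitted)
    z = [f / scale for f in fitted]
    extra = [[zi ** p for zi in z] for p in range(2, max_power + 1)]
    e_u = ols_residuals(base + extra, y)
    rss_r = sum(e * e for e in e_r)
    rss_u = sum(e * e for e in e_u)
    q, df2 = len(extra), n - len(base) - len(extra)
    return ((rss_r - rss_u) / q) / (rss_u / df2), q, df2

# Made-up data: the true relation is quadratic, but we fit a straight line.
x = [float(i) for i in range(1, 21)]
y = [xi ** 2 + 0.5 * (-1) ** i for i, xi in enumerate(x)]
F, q, df2 = reset_test([x], y)
print(f"RESET F({q}, {df2}) = {F:.1f}")
```

The added squared term is highly significant (F is far above any conventional critical value of F(1, 17)), so the linear specification is rejected, as it should be for these data.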
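
The VIF formula can be computed directly from the auxiliary regressions of each regressor on the others. This sketch uses a small hypothetical Gram-Schmidt helper, `ols_residuals`, for those regressions; the two small regressor sets are invented to contrast strongly correlated and orthogonal regressors.

```python
import math

def ols_residuals(columns, y):
    """Least-squares residuals of y on the given columns (modified Gram-Schmidt)."""
    basis = []
    for col in columns:
        v = [float(a) for a in col]
        col_norm = math.sqrt(sum(a * a for a in v))
        for q in basis:
            proj = sum(a * b for a, b in zip(q, v))
            v = [a - proj * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-10 * col_norm:  # skip columns (numerically) collinear with earlier ones
            basis.append([a / norm for a in v])
    r = [float(a) for a in y]
    for q in basis:
        proj = sum(a * b for a, b in zip(q, r))
        r = [a - proj * b for a, b in zip(r, q)]
    return r

def vifs(x_columns):
    """VIF_j = 1 / (1 - R_j^2) for each regressor, where R_j^2 comes from the
    auxiliary regression of X_j on a constant and the other regressors."""
    n = len(x_columns[0])
    out = []
    for j, xj in enumerate(x_columns):
        others = [[1.0] * n] + [c for i, c in enumerate(x_columns) if i != j]
        rss = sum(e * e for e in ols_residuals(others, xj))
        mean = sum(xj) / n
        tss = sum((v - mean) ** 2 for v in xj)
        # tss / rss equals 1 / (1 - R_j^2); under perfect collinearity rss is
        # (near) zero and the VIF is infinite or huge.
        out.append(tss / rss)
    return out

correlated = vifs([[1, 2, 3, 4], [1, 2, 3, 5]])
orthogonal = vifs([[1, -1, 1, -1], [1, 1, -1, -1]])
print(correlated, orthogonal)
```

For the first pair $r^2 = 42.25/43.75$, so both VIFs equal 43.75/1.5 ≈ 29.2, well above the rule-of-thumb threshold of 5; the orthogonal pair gives VIF = 1 for both regressors.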