Sound-driven enhancement of vision: disentangling detection


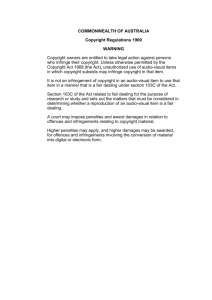

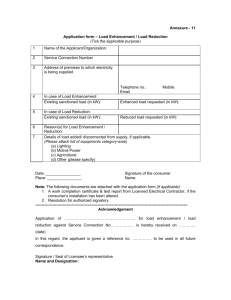

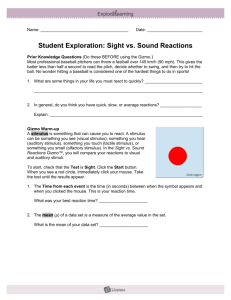

J Neurophysiol 109: 1065–1077, 2013. First published December 5, 2012; doi:10.1152/jn.00226.2012.

Sound-driven enhancement of vision: disentangling detection-level from decision-level contributions

Alexis Pérez-Bellido,1 Salvador Soto-Faraco,2,3 and Joan López-Moliner1,4

1Departament de Psicologia Bàsica, Universitat de Barcelona, Barcelona, Spain; 2Departament de Tecnologies de la Informació i les Comunicacions, Universitat Pompeu Fabra, Barcelona, Spain; 3Institució Catalana de Recerca i Estudis Avançats (ICREA), Barcelona, Spain; and 4Institute for Brain Cognition and Behaviour (IR3C), Barcelona, Spain

Submitted 15 March 2012; accepted in final form 29 November 2012

Cross-modal enhancement can be mediated both by higher-order effects due to attention and decision making and by detection-level stimulus-driven interactions. However, the contribution of each of these sources to behavioral improvements has not been conclusively determined and quantified separately. Here, we apply psychophysical analysis based on Piéron functions in order to separate stimulus-dependent changes from those accounted for by decision-level contributions. Participants performed a simple visual speeded detection task on Gabor patches of different spatial frequencies and contrast values, presented with and without accompanying sounds. On one hand, we identified an additive cross-modal improvement in mean reaction times across all types of visual stimuli that would be well explained by interactions not strictly based on stimulus-driven modulations (e.g., due to reduction of temporal uncertainty and motor times). On the other hand, we singled out an audio-visual benefit that strongly depended on stimulus features such as frequency and contrast.
This particular enhancement was selective to low-spatial-frequency visual stimuli, optimized for magnocellular sensitivity. We therefore conclude that interactions at detection stages and at decisional processes in response selection that contribute to audio-visual enhancement can be separated online and are expressed in partly different aspects of visual processing.

Keywords: sensory integration; reaction time; psychophysics; audio-visual stimuli; magnocellular pathway; race models

Address for reprint requests and other correspondence: J. López-Moliner, Departament de Psicologia Bàsica, Universitat de Barcelona, Barcelona, Spain (e-mail: j.lopezmoliner@ub.edu).

WATCHING A MOVIE is not only a visual experience, attending a concert is not only acoustic, and stroking our pet is not only tactile. Instead, perception in everyday life typically involves input from various sensory modalities. In consequence, the nervous system of complex animals, including humans, has evolved to maximize the information by exploiting correlations between multimodal inputs. In recent years, a growing body of literature indicates the existence of multisensory interactions in a wide variety of contexts. For example, neurophysiological evidence from animal studies has described multisensory responses of neurons in the deep layers of the superior colliculus (SC), a subcortical structure that receives ascending inputs from auditory, visual, and somatosensory pathways and subserves reflexive stimulus-driven behaviors such as orienting head and eye movements (e.g., Meredith and Stein 1983, 1986). Some of these neurons are characterized by their enhanced responses to multisensory compared with unisensory stimulation, typically when sensory inputs coming from different modalities are in approximate temporal synchrony and spatially overlapping (Meredith and Stein 1996; Wallace et al. 1993, 1998).
Complementary behavioral studies have demonstrated that multisensory stimulation leads to benefits such as lower detection thresholds (e.g., Noesselt et al. 2010; Wuerger et al. 2003), increased precision (e.g., Ernst and Bülthoff 2004), and quicker motor responses (e.g., Morrell 1972; Nozawa et al. 1994). One of the most often cited, yet still controversial, cases of multisensory facilitation is the enhancement of visual perception as a function of concurrent acoustic input. Here, we examine the basis of this acoustic facilitation of visual processing in behavior. Behavioral evidence for auditory enhancement of vision is illustrated by improvements in orienting responses to audiovisual, compared with visual-only, stimuli (in cats, Stein et al. 1989); faster and more accurate saccadic reactions (Colonius and Arndt 2001; Corneil et al. 2002; Diederich and Colonius 2004); increases in brightness judgments of a visual stimulus accompanied by a sound (Stein et al. 1996); and improvements in sensitivity to different aspects of visual stimuli (Bolognini et al. 2005; Frassinetti et al. 2002; Jaekl and Soto-Faraco 2010; Manjarrez et al. 2007). However, some controversy still surrounds the underlying sources of these audio-visual enhancements. Despite the fact that these improvements are generally attributed to changes in early sensory processes at detection stage as a result of hard-wired integrative mechanisms (Lakatos et al. 2007; Stein 1998), many of these cross-modal enhancements cannot be dissociated from effects at decisional stages of processing that are more general in scope (Doyle and Snowden 2001; Lippert et al. 2007; Odgaard et al. 2003). For example, several authors have argued that sound-induced enhancement of vision can be attributed to a reduction in uncertainty within the spatial and temporal domains, without necessarily implying early interactions at sensory level. 
Indeed, generic mechanisms such as the involuntary orienting of spatial attention to the location of a sound have been shown to enhance early perceptual processing within the visual modality (e.g., McDonald et al. 2000, 2005; Spence and Driver 1994), without necessarily involving any early sensory interaction between sound and vision. Likewise, in the temporal domain, an abrupt sound can considerably reduce the temporal uncertainty about the time of visual target presentation (e.g., Lippert et al. 2007). Thus, according to this alternative view, cross-modal enhancement would be explained by cross-modal cuing effects, which have been widely demonstrated in the attention literature (e.g., Driver and Spence 1998). Discerning the contribution of these cross-modal cuing effects from early cross-modal convergence leading to enhanced detection, as a classical sensory integration account would propose, is critical when characterizing the nature of multisensory interactions (Driver and Noesselt 2008; McDonald et al. 2001).

0022-3077/13 Copyright © 2013 the American Physiological Society

The nature of auditory-induced enhancement of visual processing has previously been addressed by measuring detection thresholds or changes in visual sensitivity but, as discussed above, with mixed conclusions (Frassinetti et al. 2002; Jaekl and Soto-Faraco 2010; Lippert et al. 2007; McDonald et al. 2000; Noesselt et al. 2008). Studying the temporal dynamics of audio-visual perception has also provided interesting insights about the nature of such interactions. For example, at a neurophysiological level, multisensory stimulation leads to shorter neuronal latencies (Rowland et al. 2007). Rowland et al. found that initial neural responses in individual SC neurons of anesthetized cats speed up in response to audio-visual stimuli.
Additionally, Giard and Peronnet (1999) provided physiological evidence in humans that audio-visual stimuli produced neural activity in the visual cortex as early as 40 ms after stimulus, above and beyond that evoked by visual-only stimuli. It is remarkable that, considering that possible sensory-based effects may express at (and lead to) shorter processing latencies, previous behavioral studies addressing the nature of auditory-induced visual enhancement (see above) have typically used off-line detection tasks that are not so sensitive to latency shifts. Note that, as we discuss below, the many studies using the redundant signals approach on audio-visual reaction times (RTs) cannot tell whether the speed-up in latencies is due to enhancement of vision specifically. Here, we decided to apply a RT paradigm specifically focused on measuring visual processing, with the aim of capturing any latency aspect involved in auditory-induced visual enhancement. When two or more signals are presented together in different channels, simple detection responses are faster on average than when only one signal is present—a well-known phenomenon termed the redundant signals effect (RSE; Kinchla 1974). Previous studies have modeled such multisensory RT advantages as a function of unisensory RTs by applying the race model inequality test (RMI) (Colonius and Arndt 2001; Miller 1982; but see Otto and Mamassian 2012 for a criticism). According to many authors, this paradigm allows one to infer how these signals are used in detection. If the auditory signal and the visual signal are processed independently at sensory level and combined during a decision stage, the distribution of RTs to audio-visual events cannot be significantly faster than the probability summation model predictions based on the RTs to unisensory targets (Raab 1962). 
If, on the other hand, audio-visual RTs are faster than those predicted by the probabilistic summation (race) model, then it is inferred that these signals are effectively integrated within a common channel prior to decision. This latter kind of interaction has been accurately described by linear or coactivation models (Colonius and Arndt 2001; Miller 1982; Nickerson 1973). This RMI has been extensively applied in the multisensory domain (see Cappe et al. 2009; Colonius and Arndt 2001; Laurienti et al. 2004; Leo et al. 2008). However, a key aspect of this approach is that the task is not modality selective (that is, participants respond to any signal regardless of whether it is auditory or visual). Therefore, regardless of recent criticisms (Otto and Mamassian 2012), the RSE model is simply not adequate when the goal is to ascertain how, specifically, one sensory modality is enhanced by the presence of the other, as in the present study.

For this reason, we started by using a different RT paradigm well known in the visual literature that exploits the fact that RTs to visual events decrease monotonically with stimulus intensity following a power function, typically referred to as the Piéron function (Piéron 1914):

$$RT = k\,C^{-\alpha} + t_0 \tag{1}$$

The function in Eq. 1 captures different separate contributions to response latency across variations in stimulus contrast (C). In this model, two parameters (k and α) modulate the decay of RTs caused by stimulus-dependent variables, while t0 modulations reflect the variation in RTs due to stimulus-independent factors (Pins and Bonnet 1996; Plainis and Murray 2000). In particular, k accounts for the time it takes for sensory information to reach threshold, that is, the gain rate in the accumulation of sensory evidence. In turn, α is an exponent that characterizes a given sensory modality.
Finally, in this framework t0 represents the asymptotic RT, which reflects a time constant that includes processing latencies intrinsic to the sensory pathway and motor time of the effector system (Pins and Bonnet 2000). One important aspect for the present study is that variations in response latencies caused by differences in stimulus uncertainties, task complexity, or decision making accumulate additively and equally across all levels of contrast or any other stimulus variation, thus adding to the parameter t0 (Pins and Bonnet 1996). This assumption has been explicitly tested by Carpenter (2004) with visual stimuli. In particular, using this framework, Carpenter showed that the contributions of contrast variations to overall RT are associated with detection processes, whereas variations concerning higher-order factors (such as the probability of the stimulus requiring a response or not) are linked to decision processes leading to shifts in t0. Applying this approach to the cross-modal case, we will address how the different parameters of the Piéron function account for changes in RT following the addition of sound to an imperative visual stimulus. This paradigm will therefore allow us to discern higher-level effects from interactions occurring due to stimulus-driven processing at the detection level between vision and sound (see Data analysis). Given that previous studies have suggested that differences in multisensory integration may depend on the particular visual pathways involved (Jaekl and Harris 2009; Jaekl and Soto-Faraco 2010; Leo et al. 2008), we included a range of spatial frequencies (SFs) putatively engaging the magnocellular (M) and parvocellular (P) pathways to different extents. We chose SF values of 0.3 c/°, 0.74 c/°, and 5.93 c/° given that frequencies above 4 c/° are thought to stimulate sustained channels more specifically than frequencies below (Legge 1978; Levi et al. 1979).
Therefore the low SFs 0.3 c/° and 0.74 c/° in our stimuli are assumed to primarily stimulate the M-pathway, while the intermediate frequency 5.93 c/° is predicted to elicit relatively more activation in the P-channel. We hypothesize that sound-induced visual enhancements that are dependent on contrast (i.e., detection level) should be modulated by SF and will therefore lead to differences in the gain parameter k across SFs. In particular, stimulus-driven benefits should concentrate in the low SFs. On the other hand, interactions produced by the sound that are not contrast dependent (e.g., decision stage; Carpenter 2004) should in principle accumulate equally across all contrast levels, being mainly reflected in changes of the asymptotic parameter t0. Moreover, this sensory-unspecific improvement driven by high-level processes is expected to occur not only invariantly across contrast levels but also across SFs. Note that, in a strict sense, it is not logically possible to discard the influence of sensory-level interactions on t0, so that the nonsensory interpretation of changes in t0 is just a conservative interpretation. Of course, this does not invalidate the interpretation of the k parameter, which would remain representative of the rate at which evidence is accumulated at the detection stage across levels of contrast (i.e., gain).

MATERIALS AND METHODS

Experiment 1

Methods. PARTICIPANTS. Two of the authors and three naive volunteers (24–42 yr old; 3 women, 2 men) from the University of Barcelona participated in this experiment. All subjects had normal hearing and normal or corrected-to-normal vision by self-report and gave informed consent prior to participating. The study received the approval of the University of Barcelona ethical committee.

MATERIALS.
Visual stimuli consisted of vertical Gabor patches [Gaussian window standard deviation (SD) = 0.25; mean luminance = 23.4 cd/m²] subtending a visual angle of 2°, with SF of 0.3, 0.74, or 5.93 c/°, depending on the experimental condition. They were presented at the center of a Philips 22-in. CRT monitor (Brilliance 202P4) placed at a 1-m viewing distance. These Gabor patches were presented at varying levels of contrast in six steps ranging from 0.05 to 0.822 (Michelson contrast) on a logarithmic scale. Auditory stimuli were 340-ms broadband white noise bursts [58 dB (A) SPL; 10-ms on-/off-ramps] delivered from two loudspeaker cones located at either side of the monitor. Auditory and visual stimulus timing accuracy and precision were calibrated with a Black Box Toolkit oscilloscope (Black Box Toolkit).

PROCEDURE. Prior to each session, participants adapted to the room lighting conditions and to the screen luminance for 5 min and then were presented with a training block of 20 trials. Participants were asked to press a joystick button as soon as they detected a visual stimulus (Gabor patch) at the center of the monitor. Each block contained 180 trials including 20% catch trials, where no visual stimulus was present. Catch trials encouraged participants to selectively attend visually and discouraged responses based on the auditory component. Half of the total trials (including half of the catch trials) contained an auditory stimulus. All types of trials (sound vs. no sound, with vs. without visual stimulus, and contrast level of the visual stimulus) were randomly intermixed throughout each block while the SF was blocked. The beginning of each trial was signaled by a central fixation cross (which remained throughout the trial), and, after a variable delay (randomly chosen from 500 ms to 1,500 ms), the target stimulus was presented for 340 ms (Fig. 1).
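As a small illustration of the contrast scale described under MATERIALS, six logarithmically spaced steps between the two stated endpoints can be generated as below. This is only a sketch: the helper name `log_spaced` is hypothetical, and the assumption that the steps are exactly geometric is ours, although the resulting values agree to within rounding with the contrast levels listed in Table 1.

```python
def log_spaced(lo, hi, n):
    """Return n values from lo to hi, equally spaced on a logarithmic scale."""
    ratio = (hi / lo) ** (1.0 / (n - 1))  # constant multiplicative step
    return [lo * ratio ** i for i in range(n)]

# Endpoints taken from the text (Michelson contrast); six steps assumed geometric.
contrasts = log_spaced(0.05, 0.822, 6)
print([round(c, 3) for c in contrasts])
```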
After a response deadline of 1,500 ms, the screen blanked and a 2,000-ms delay led to the beginning of the next trial. Subjects typically completed four blocks of 180 trials of one SF per session, with short resting breaks between blocks. Each session was run on a different day, and the experiment lasted six sessions (2 sessions for each SF). The order of the sessions was randomized to prevent learning effects. Overall, each participant performed an average of 80 (minimum of 60) valid trials per condition (with and without sound) and level of contrast.

Data analysis. RT to visual stimuli is determined by contrast level and SF (Breitmeyer 1975; Harwerth and Levi 1978). The decrease in RTs to increments in contrast is well accounted for by the Piéron function in Eq. 1. As discussed in the introduction, the slope of this function (determined by the parameters k and α in Eq. 1) captures variations of RT caused by variations in stimulus properties. In many cases it is possible to express the slope with a single parameter by assuming an α exponent of −1, which is a particular case of the general function often applied in visual psychophysics (see Eq. 2; Murray and Plainis 2003; Plainis and Murray 2000; see Fitting of α parameter below):

$$RT = k \cdot \frac{1}{C} + t_0 \tag{2}$$

In this case, the slope k can be directly interpreted as the gain (rate) at which the system accumulates sensory evidence about the presence of a stimulus (k is inversely proportional to sensitivity, so that sensitivity = 1/k). Interestingly, within this framework the parameter k has been shown to be modulated by the different sensitivity of the P- and the M-pathways to different levels of contrast and SFs (Murray and Plainis 2003; Plainis and Murray 2000). Specifically, low values of k (indicating higher sensitivity) have been reported for low SFs compared with higher SFs (for a more detailed discussion of the paradigm see Murray and Plainis 2003; Plainis and Murray 2000).
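The linear form in Eq. 2 can be illustrated with an ordinary least-squares fit of RT against the reciprocal of contrast. The sketch below is not the authors' analysis code: it uses the group-mean RTs for the 0.3 c/° stimuli as listed in Table 1, whereas the study fitted each participant separately, and the helper `fit_line` is a hypothetical name introduced here.

```python
def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

# Group means from Table 1 (SF = 0.3 c/deg); illustrative only.
contrast = [0.050, 0.087, 0.153, 0.268, 0.469, 0.822]
rt_visual = [0.384, 0.328, 0.311, 0.303, 0.296, 0.294]       # s, visual only
rt_audiovisual = [0.304, 0.275, 0.265, 0.260, 0.255, 0.254]  # s, with sound

inv_c = [1.0 / c for c in contrast]           # regressor of Eq. 2
k_v, t0_v = fit_line(inv_c, rt_visual)        # slope k and intercept t0
k_av, t0_av = fit_line(inv_c, rt_audiovisual)

print(f"visual:       k = {k_v:.5f}, t0 = {t0_v:.3f} s, sensitivity 1/k = {1 / k_v:.0f}")
print(f"audio-visual: k = {k_av:.5f}, t0 = {t0_av:.3f} s, sensitivity 1/k = {1 / k_av:.0f}")
```

In this framing, a smaller slope k means a higher sensitivity (1/k), while a difference in the intercept t0 is a constant shift that applies equally at every contrast level.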
In the present study, we extrapolate this framework to the multisensory domain in order to investigate how sound modulates the processing of a visual (Gabor) stimulus. We aim to reveal potential frequency-specific modulations by looking at RTs as a function of stimulus contrast. That is, we contend that efficient visual processing will lead to shallower slopes (lower k, indicating higher sensitivity). If present, this reduction in RTs is expected to be stronger at low-contrast than at high-contrast visual stimuli, because there is more room for improvement in sensory processing in that case.

Fig. 1. Schematic representation of a trial sequence. After a randomly chosen interval following the onset of the fixation cross (500–1,500 ms), a Gabor stimulus (visual target) was presented on 80% of the trials (therefore, this is the proportion of trials requiring response). The remaining 20% were catch trials (no visual target). Fifty percent of the trials (across target-bearing and catch trials) contained a sound temporally aligned with the visual event, thus making an equal proportion of visual and audio-visual trials. (The relative size of the Gabor has been scaled up for clarity of presentation; see details in text.)

In terms of cross-modal enhancement, it is predicted that if audio-visual interactions produce changes in the rate of accumulation of sensory evidence, this will be reflected as reductions in k (i.e., the slope)¹ with respect to visual-only trials. Moreover, we hypothesize that this slope modulation should reveal some specificity for low SFs, in agreement with the results of previous investigations (Jaekl and Soto-Faraco 2010; Leo et al. 2008). Other benefits due to sound that are not strictly modulating sensitivity are also expected, but in this case the modulation should concern the constant t0.
Abrupt sounds are indeed known to speed up motor responses independently of the stimulus features (Nickerson 1973; Valls-Solé et al. 1995), by reducing temporal uncertainty (Lippert et al. 2007) or by altering the decision-making process (Doyle and Snowden 2001; Odgaard et al. 2003). These mechanisms are assumed to be equally effective across different levels of contrast. Hence, we contend that decreases in RTs caused by these higher-order decisional processes, which do not originate in the detection phase, will be captured by the parameter t0, in agreement with Carpenter (2004) and Pins and Bonnet (1996). As mentioned above, the t0 term of Eq. 2 reflects the intercept of the function. Pins and Bonnet (1996; see also Carpenter 2004) already tested how the slope and the asymptote of the Piéron function vary under task manipulations that involved, for example, stimulus uncertainty, to demonstrate that high-order effects produce changes in the asymptotic parameter t0 (while the slope remained unaltered).

¹ Note that this pattern can be loosely related to the rule of inverse effectiveness, often present in the multisensory literature (Rowland et al. 2007; Stein et al. 2009). However, the conditions to put this idea to the test are not optimal, since in this case the strength of the acoustic event is not particularly weak.

Fitting α parameter. As pointed out before, the oft-used simplification of the original Piéron function shown in Eq. 2 allows one to relate RTs to the reciprocal of contrast (C⁻¹) with slope k and intercept t0. This simplification involves the assumption that the parameter α in the original Piéron functions is −1. We thought it important to test this initial assumption, and did so in two ways. First, we fitted the Piéron function in its original form to our data, leaving α as a free parameter, and calculated its variability with a nonlinear method (Marquardt-Levenberg algorithm).
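To make the idea of a free-exponent fit concrete, the following sketch fits RT = k·C^a + t0 with the exponent a left free. It deliberately swaps in a simpler technique than the Marquardt-Levenberg algorithm named in the text: a grid search over a, solving for k and t0 by linear least squares at each candidate value. The sign convention (a is the exponent itself, so the simplification in Eq. 2 corresponds to a = −1), the grid range, the function names, and the use of group means from Table 1 instead of individual data are all assumptions made for illustration.

```python
def fit_line(x, y):
    """Least-squares line y = slope * x + intercept, plus residual sum of squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    return slope, intercept, sse

def fit_free_exponent(contrast, rt, exponents):
    """Grid search over the exponent; k and t0 are solved linearly for each one."""
    best = None
    for a in exponents:
        x = [c ** a for c in contrast]
        k, t0, sse = fit_line(x, rt)
        if best is None or sse < best[3]:
            best = (a, k, t0, sse)
    return best

# Group means from Table 1 (visual only, SF = 0.3 c/deg); illustrative only.
contrast = [0.050, 0.087, 0.153, 0.268, 0.469, 0.822]
rt = [0.384, 0.328, 0.311, 0.303, 0.296, 0.294]

exponents = [-3.0 + 0.01 * i for i in range(291)]  # candidate exponents, -3.00 to -0.10
best_a, best_k, best_t0, best_sse = fit_free_exponent(contrast, rt, exponents)

# Reference fit with the exponent fixed to -1 (the simplification in Eq. 2).
_, _, sse_fixed = fit_line([c ** -1.0 for c in contrast], rt)
print(f"best exponent = {best_a:.2f}, SSE = {best_sse:.2e}; SSE at -1 = {sse_fixed:.2e}")
```

A grid search gives only a point estimate; it does not provide the confidence intervals that a nonlinear fitting routine reports.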
The result was in general compatible with an α = −1 (with only 4 exceptions from the 30 possible combinations; see Fig. 2). To secure further the viability of this assumption, and considering that the nonlinear fitting of the exponent is not fully conclusive and could depend on the algorithm used, we also proceeded with an approach based on linear fitting. We tested for the linearity of the data points when plotted against the reciprocal of the contrast (therefore, this time keeping α fixed to −1). The linearity of the distributions was assessed with a likelihood ratio test (per subject and condition; P < 0.05 in all cases). Since both approaches led to converging evidence, it was safe to accept an α = −1.

Fig. 2. Individual α parameter values and their confidence intervals across visual spatial frequencies (SFs) for the visual (A) and audio-visual (B) conditions for the data in experiment 1. These values correspond to fits of the Piéron function when leaving α as a free parameter (see text for details). Each column represents 1 participant. Except for 4 of the 30 fitted values, the α parameter was not significantly different from −1.

Results and discussion. Mean RTs were calculated after filtering out individual data points falling more than 2 SDs from the mean for each distribution of RTs per condition and participant (<4.5% of the original data was rejected). Simple visual inspection of the averaged data in Fig. 3 shows how responses in audio-visual trials were systematically faster and less variable than in visual-only trials (see also Table 1). We adjusted the RT data as a linear function of the reciprocal of contrast (Eq. 2) for each different visual frequency and modality combination (Fig. 3) in order to estimate the corresponding slopes (i.e., the gain parameter k) and t0. Below, we report statistically significant results only, and unless otherwise noted, all other effects and interactions were nonsignificant.

Fig. 3. Response times (RTs) as a function of the reciprocal of contrast (1/C) for each SF (from left to right) and modality combination (plotted in different shades of gray) in experiment 1. Small symbols represent individual means of each participant (each based on an average of 80 RTs, min = 60), whereas large symbols represent the interparticipant average. Lines represent the linear least-squares regression fits (thin dotted and thick solid lines for individual and average data, respectively). It can be appreciated that the slope of these fits (k parameter) is shallower in the audio-visual condition compared with the visual condition at the low SFs. The thin solid black line in each plot represents the prediction of the probability summation model according to the LATER model.

First, we ran simple paired t-tests on the k parameter across SFs within the visual modality (threshold for significance set to P = 0.033 for multiple comparisons adjustment; Benjamini and Hochberg 1995). This was done for comparison with previous visual studies. As expected, maximal sensitivity (low k parameter) was seen for SF = 0.74 c/° compared with SF = 0.3 c/° [t(8) = −3.344, P = 0.01] and SF = 5.93 c/° [t(8) = 9.694, P < 0.001]. These data are thus consistent with Plainis and Murray (2000) and in agreement with the typical contrast sensitivity curves for SFs in the visual literature. To test the effect of sound on visual sensitivity, we then ran an ANOVA on the gain parameter k with modality of presentation (visual vs. audio-visual) and visual SF (0.3, 0.74, and 5.93 c/°) as independent variables. This analysis revealed a main effect of modality, confirming that responses to audio-visual trials were faster than responses to visual trials (F(1,4) = 14.79, P = 0.018), and a significant interaction between modality and SF (F(2,8) = 4.5925, P = 0.046).
Following up on this interaction, we ran paired t-tests between the audio-visual and visual conditions at each level of SF. These revealed a significant increase in sensitivity (i.e., lower k parameter) in the audio-visual condition with respect to the visual-only condition for SF = 0.3 c/° [t(8) = −9.352, P < 0.001] and 0.74 c/° [t(8) = −5.548, P < 0.001] but not for 5.93 c/° stimuli (Fig. 4A). This reduction in slope is compatible with audio-visual interaction at detection stages, that is, the system accumulates visual evidence at a faster rate when the sound is present, thereby increasing sensitivity. Moreover, our data suggest that this enhancement is stronger at low contrast values. We ran another ANOVA on the intercepts (t0) extracted from the fits to Eq. 2, using the same independent variables as before (SF and modality). This analysis revealed a significant main effect of presentation modality caused by a shift in t0 indicating an overall RT decrement (a speed-up of 35 ms on average) in the audio-visual condition with respect to the visual-only condition (F(1,4) = 97.24, P < 0.001). This enhancement was equivalent for all SFs tested (see Fig. 4B), as revealed by the lack of significant interaction between SF and modality (F(1,4) = 0.418, P = 0.672). The main effect of SF was also nonsignificant (F(1,4) = 0.770, P = 0.494). One possible concern with the modulation in slope arising from these analyses is the potential impact of erroneous responses triggered by the sound, which are only possible in the audio-visual condition. To address this, we analyzed the proportion of false alarms (FAs, corresponding to erroneous responses to catch trials). The percentage of FAs in the no-sound catch trials was very close to 0% for all participants and conditions (no significant differences). However, participants committed an average of 17% FAs in the sound-present catch trials (no differences between SFs). In any case, the linear regression between parameter k and FA rate (Fig.
5) did not reveal any significant or near-significant positive or negative correlation, suggesting that these FAs to sound were not causing variations in the slope (k parameter) of the functions. On the other hand, the percentage of misses (failing to respond to a target when present) was very low overall (0.016% on average) and statistically equivalent across SFs and presentation modality (as expected from the suprathreshold nature of the stimuli).

Table 1. Visual and audio-visual RTs and latency of FA responses in auditory-only catch trials

RT in s, with SD in parentheses, at each contrast level:

| Condition | SF, c/° | 0.050 | 0.087 | 0.153 | 0.268 | 0.469 | 0.822 |
|---|---|---|---|---|---|---|---|
| Visual | 0.3 | 0.384 (0.146) | 0.328 (0.072) | 0.311 (0.064) | 0.303 (0.050) | 0.296 (0.060) | 0.294 (0.050) |
| Visual | 0.74 | 0.359 (0.093) | 0.323 (0.054) | 0.307 (0.056) | 0.300 (0.053) | 0.300 (0.075) | 0.293 (0.050) |
| Visual | 5.93 | 0.377 (0.059) | 0.346 (0.052) | 0.325 (0.062) | 0.313 (0.052) | 0.302 (0.058) | 0.300 (0.046) |
| Audio-visual | 0.3 | 0.304 (0.097) | 0.275 (0.055) | 0.265 (0.057) | 0.260 (0.054) | 0.255 (0.043) | 0.254 (0.041) |
| Audio-visual | 0.74 | 0.297 (0.070) | 0.272 (0.053) | 0.265 (0.046) | 0.263 (0.049) | 0.255 (0.041) | 0.256 (0.048) |
| Audio-visual | 5.93 | 0.330 (0.059) | 0.299 (0.050) | 0.283 (0.057) | 0.271 (0.039) | 0.264 (0.037) | 0.264 (0.058) |

FA latency in s, with SD in parentheses:

| SF, c/° | FA |
|---|---|
| 0.3 | 0.263 (0.042) |
| 0.74 | 0.266 (0.048) |
| 5.93 | 0.258 (0.036) |

Values are averages and SDs of visual and audio-visual reaction times (RTs) and latency of false alarm (FA) responses in auditory-only catch trials. Although this is an averaged representation of the data, in the simulations we applied the LATER model considering each participant individually. SF, spatial frequency.

Fig. 4. A: interparticipant averages for audio-visual and visual sensitivities (1/k) in experiment 1. B: average audio-visual and visual values for the intercept (t0). Results are presented separately for each SF. Each symbol represents the averaged interparticipant intercept, and error bars represent the associated SD.
Please note that a signal detection approach (i.e., calculating parameter d′) is not applicable here, given that the paradigm attempts to address changes in visual sensitivity from differences in processing time (RT) and not from accuracy measurements (i.e., FAs are mostly due to motor anticipations, not to incorrect detections). The results described above are clear in that RTs to visual events are speeded up by sounds and that this enhancement comprises both a gain in stimulus-driven information toward detection as well as a more generic, across-the-board facilitation that can be attributed to higher-order mechanisms. The former source of gain is sensitive to stimulus parameters such as SF and aligns well with previous results suggesting a stronger engagement of the magnocellular visual pathway in multisensory integration. The latter source of enhancement, on the other hand, captures well the interpretations of some previous studies showing the role of accessory sounds in reducing uncertainty (Lippert et al. 2007) and speeding up motor pathways (Valls-Solé et al. 1995).

Fig. 5. Correlation plot between visual and audio-visual individual sensitivity (1/k) and % of false alarms in experiment 1.

Previous literature has often characterized the mechanism underlying multisensory enhancement by testing whether the data from the cross-modal condition surpass the prediction of a probability summation model based on the unisensory data (e.g., Arnold et al. 2010; Meyer et al. 2005). Despite some recent fundamental criticism depending on the paradigm involved (Otto and Mamassian 2012; Pannunzi et al. 2012), this approach has been used frequently and in a variety of contexts to evaluate the nature of multisensory enhancement. Probability summation assumes that the speed-up in audio-visual trials arises from a statistical advantage when responses can be based on two independent inputs that race toward reaching a threshold (Green 1958; Quick 1974; Treisman 1998; Wuerger et al.
2003). Given that, in the present experiment, participants were asked to respond exclusively to the visual stimulus and not to the sounds, probability summation would not appear adequate to model the present task. We nevertheless consider it relevant to bridge our present results with this common test for integration, as it has been quite influential in the literature so far. We did so in two ways: First, we used the FA data from experiment 1 to simulate auditory RTs in order to calculate the outcome of a probability summation model (see analysis below). Second, we reproduced the stimulus conditions of experiment 1 in a new test in which participants were asked to respond to both modalities, thus effectively adopting the standard paradigm of probability summation (experiment 2, reported below).

Assessing probability summation. Judging from the FAs to sound-only trials (17% on average; Fig. 5), a percentage of the correct responses in the audio-visual RT distributions could have been triggered by uncontrolled responses to the auditory stimulus. We therefore evaluated the possible impact of probability summation on our results, assuming that a percentage of the RTs in the audio-visual trials originated from anticipations to sound. First, we obtained the means and SDs of the individual RTs to the visual-only stimuli and those of FAs in catch trials with sound (Table 1; note that FA RT distributions were filtered in the same manner as the correct RTs in experiment 1). To compute the prediction of the probability summation model, we applied a modified version of the LATER model. LATER assumes that sensory signals start a race to threshold at an ergodic rate, with the winning signal triggering the response (Carpenter and Williams 1995; Logan et al. 1984).
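As a rough illustration of the race logic behind LATER, the sketch below simulates two independent signals, each rising linearly to a common threshold at a rate drawn on every trial from a normal distribution, with the faster signal triggering the response. It is not the authors' implementation: the function names, the rate parameters, and the `p_second` argument (the proportion of trials in which the second signal takes part in the race at all) are hypothetical choices made only for this example.

```python
import random
import statistics


def later_latency(rng, mean_rate, sd_rate, threshold=1.0):
    """One LATER unit: a signal rises linearly to threshold at a random rate."""
    rate = rng.gauss(mean_rate, sd_rate)
    while rate <= 0:
        # A non-positive rate would never reach threshold, so redraw it.
        rate = rng.gauss(mean_rate, sd_rate)
    return threshold / rate


def simulate_race(n_trials, first, second, p_second=1.0, seed=0):
    """Simulate latencies; on a fraction p_second of trials the second
    signal races against the first and the faster one wins."""
    rng = random.Random(seed)
    latencies = []
    for _ in range(n_trials):
        latency = later_latency(rng, *first)
        if rng.random() < p_second:
            latency = min(latency, later_latency(rng, *second))
        latencies.append(latency)
    return latencies


# Hypothetical (mean rate, SD of rate) pairs, in units of 1/s.
visual = (3.0, 0.5)
sound = (3.5, 0.6)

visual_only = simulate_race(9000, visual, sound, p_second=0.0)
race = simulate_race(9000, visual, sound, p_second=0.17)

print("mean latency, visual signal alone:", statistics.mean(visual_only))
print("mean latency, race on 17% of trials:", statistics.mean(race))
```

Because the second signal can only shorten a trial's latency, the mean of `race` falls below that of `visual_only`; the size of this purely statistical speed-up is what a probability summation account predicts.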
We adapted the model to the present experimental design by assuming that only in a percentage of the audio-visual trials would intrusions from FAs win the race to threshold against the visual stimulus (this proportion was adjusted for each SF and observer, according to the empirical data from individual auditory catch trial conditions). Data of the probability summation model were simulated by randomly selecting RTs from the visual and the FA distributions (9,000 simulations per point). Sensitivity (1/k) was computed from the newly modeled chronometric distributions (represented in Fig. 3 by the thin solid black line). The empirical audio-visual k parameter was significantly lower (higher sensitivity) than the theoretical k calculated from the probability summation model for the 0.3 c/° and 0.74 c/° SFs [t(8) = −14.91, P < 0.0005 and t(8) = −3.484, P = 0.025, respectively], but there were no significant differences for the 5.93 c/° condition. Thus, according to this analysis, while a probability summation model based on intrusive FAs to sound may account for the RT shift in the 5.93 c/° SF, it cannot account for the gains produced in the remaining, lower SFs.

The analysis above provides further reassurance that audio-visual responses in this experiment genuinely benefited from interactions at the stage of detection and were not (only) mediated by intrusive FAs to sound. However, there is an established literature suggesting that errors and the consequent physiological error negativity (which reflects the manifestation of an error detection system that checks actual behavior against an internal goal) have a marked effect on physiological processes, even when participants are not aware of their mistakes (e.g., Nieuwenhuis et al. 2001).
For this reason, RTs to FAs might not be completely suitable for a conclusive test of the probability summation hypothesis. Furthermore, the FA RT distributions were based on only 25 trials on average per participant and SF, which makes the corresponding means and SDs unreliable. We therefore decided, in experiment 2, to apply the RMI in a more traditional form (Miller 1982; Raab 1962), in order to validate our paradigm and the results of experiment 1 against a host of previous literature.

Experiment 2

In this experiment we applied the RMI (Cappe et al. 2009; Colonius and Arndt 2001; Laurienti et al. 2004; Leo et al. 2008; Raab 1962) by directly collecting responses to unisensory visual and auditory trials and estimating Miller’s bound (Ulrich et al. 2007) for our stimuli. As noted above, recent studies (e.g., Otto and Mamassian 2012) have argued that Miller’s bound might not be adequate to reveal sensory interactions beyond purely statistical advantage. It is not within the scope of this study to claim otherwise, but this paradigm should suffice to back up the results from experiment 1 by comparison with a known and widely used approach to multisensory interactions. According to past literature, Miller’s bound is the maximal RT gain that can be explained by purely statistical facilitation when decisions are based on two independent sources of information. This is represented mathematically by Eq. 3, where the probability of having detected an audio-visual signal (AV) by time t cannot exceed the sum of the individual probabilities of having detected the visual (V) and the auditory (A) signals.

$$p(T < t \mid AV) \le p(T < t \mid V) + p(T < t \mid A) \tag{3}$$

If the results from experiment 1 were to be confirmed in this paradigm, we would expect to find the largest specific RT enhancement for the audio-visual RTs (relative to the unimodal RTs) at the lowest SF conditions.

Methods. PARTICIPANTS.
Seven new volunteers naive to the purpose of the experiment (23–29 yr old; 4 women, 3 men) were recruited among students from the University of Barcelona. All had normal hearing and normal or corrected-to-normal vision by self-report and gave informed consent prior to participation. The study received the approval of the University of Barcelona ethical committee.

MATERIALS AND PROCEDURE. The experimental apparatus, timeline, and stimuli were identical to those used in experiment 1, except that we simplified the design by using only two SFs (0.3 and 5.93 c/°, lowest and highest in experiment 1, respectively) and two levels of contrast (0.05 and 0.822 Michelson contrast units, lowest and highest in experiment 1). Participants were asked to respond as fast as possible to any stimulus (visual, auditory, or audio-visual, indistinctly). Each participant ran 600 trials divided into 3 blocks of 200 trials (45 min in total) where all SFs and contrasts appeared randomly intermixed. Prior to the experimental session, participants dark-adapted for 5 min and performed 40 warm-up trials. In total every subject performed 45 valid trials for each visual, audio-visual, SF, and contrast condition and 180 auditory trials (in order to have the same number of visual, auditory, and audio-visual trials per block). Each block also included catch trials (amounting to 10% of the total) where no stimulus was presented and thus participants had to refrain from responding.

Results and discussion. Just as in experiment 1, we filtered RTs below and above 2 SDs from the mean for each condition and participant (leading to the rejection of <6% of the data) and obtained the empirical cumulative distribution functions. Participants responded to <4% of the catch trials; therefore erroneous anticipations can be ruled out as making a major contribution to the pattern of results. A 2 × 2 × 3 (contrast × SF × modality) repeated-measures ANOVA was conducted on the RTs.
This analysis revealed a significant main effect of SF (F(1,6) = 9.15, P = 0.0023), contrast level (F(1,6) = 208.87, P < 0.0001), and modality (F(2,12) = 168.62, P < 0.0001). All the interactions were also significant (all P < 0.001 except SF × contrast, which was marginally significant, F(1,6) = 0.439, P = 0.0532). As can be seen in Fig. 6, RTs were faster for high-contrast and for low-SF visual stimuli, in consonance with previous literature (Breitmeyer 1975; Legge 1978) and with the results of experiment 1. Moreover, RTs were also generally faster for the auditory-only stimuli than for the visual-only stimuli. In line with our expectations, responses to audio-visual stimuli were significantly faster than the visual-only and auditory-only responses in all conditions, with the exception of the low-contrast 5.93 c/° SF condition, where audio-alone RTs were as fast as audio-visual RTs. Interestingly, the absolute audio-visual enhancement was larger in the high-contrast conditions, contradicting what the inverse effectiveness law would predict. However, it is important to point out that comparing raw RTs directly might be misleading. For instance, the cumulative RT distribution functions presented in Fig. 6, bottom, show that the probabilistic overlap between the visual and auditory unisensory conditions is also larger in the high-contrast conditions. This means that probability summation alone predicts a larger overall audio-visual speed-up in these conditions, for purely statistical reasons. Thus we calculated the relative index of enhancement for each type of visual stimulus by obtaining the Miller’s bound (for a specific description of the method, see Ulrich et al. 2007). This is normally considered the maximal possible speed-up in RT obtained by simple probabilistic advantage when two signals are independent. Data were binned in 10 quantiles (10% increments), and at each quantile (see Fig.
7) we tested the alternative hypothesis that audio-visual RTs were faster than the prediction of the Miller’s bound. The shaded areas in Fig. 7 indicate the quantiles where the race model was violated (i.e., the audio-visual RT was significantly faster). The 0.3 c/° SF manifested the highest proportion of such violations, with significant differences in 6 quantiles in the low-contrast condition and 4 in the high-contrast condition. The analysis of the 5.93 c/° SF did not reflect any enhancement for the low-contrast condition and only three significant violations in the high-contrast condition. In all cases, the significant violations of the race model encompassed adjacent time bins.

The results of experiment 2 shed new light on the interpretation of the results of experiment 1. In particular, the reduction in the slope at low SFs in experiment 1, according to the analysis of the Piéron functions, seems to correspond to the specific sensitivity enhancement found for the low SF in experiment 2, thus meeting a criterion of convergence. According to previous literature, changes in the slope of the Piéron function are largely determined by the low-contrast values (Murray and Plainis 2003). The inverse effectiveness principle, often proposed in multisensory integration, might be a logical candidate to explain our results, given that the Miller’s bound violation in experiment 2 was greater (for SF = 0.3 c/°) at the low-contrast condition.² This conclusion is in principle independent of the recent claim that the race model may not be a good test of probability summation (Otto and Mamassian 2012). In short, we use the race model here as a relative index to quantify the benefits produced across SFs in audio-visual trials. The main conclusion to be extracted from this experiment is that our results support the paradigm applied in experiment 1, showing that multisensory interactions are larger when visual stimuli correspond to low SFs and contrast levels.

Fig. 6. Interparticipant average RTs in experiment 2 (error bars represent confidence intervals) plotted as a function of contrast and modality. The 2 tested SFs are represented in different columns. Insets: plots of cumulative Gaussians fitted to the empirical cumulative distribution functions for the RTs in the auditory (light gray and dashed line), visual (medium gray and dotted line), and audio-visual (dark gray and continuous line) conditions.

² Note that there was also a Miller’s bound violation at the SF = 5.93 c/° high-contrast condition. Although it might seem contradictory with what the rule of inverse effectiveness postulates, this result can be accommodated because high-contrast stimuli, regardless of frequency, tend to engage the magnocellular visual pathway as well (as pointed out by different authors; Mitov and Totev 2005; Thomas et al. 1999).

GENERAL DISCUSSION

The brain combines incoming sensory information from various modalities in order to optimize the organism’s interaction with the environment. Various animal and human studies have highlighted the putative neural mechanisms supporting these enhancements (Calvert et al. 2001; Meredith and Stein 1986; Schroeder and Foxe 2005; Smiley and Falchier 2009; Watkins et al. 2006). However, one important, and yet unresolved, question refers to the specific case of the putative visual enhancement produced by concurrent sounds. As highlighted in the introduction, previous RT studies addressing audio-visual integration (Cappe et al. 2009; Colonius and Arndt 2001; Laurienti et al. 2004; Leo et al.
2008; Miller 1982) have reported improvements in detection that cannot be accounted for solely as a result of a statistical advantage. Nevertheless, while these studies have consistently revealed the existence of cross-modal enhancements, they cannot dissociate and quantify the different sources contributing to the cross-modal benefit. One important constraint related to the classical approach based on the RSE is that responses in this task are given to any stimulus indiscriminately (i.e., visual or auditory), and consequently the RSE paradigm fails to describe with precision detection changes in one specific sensory modality. Hence, we decided to apply a different paradigm based on the analysis of Piéron functions on visual response latencies, in order to study specific changes in visual processing resulting from stimulus-driven interactions with a concurrent sound.

Fig. 7. Race model inequality tests in experiment 2. Black squares represent the empirical average RTs in the audio-visual condition binned in 10 quantiles, while gray squares represent the prediction of the probability summation model obtained from the auditory-only and visual-only RT distributions (Miller’s bound). Error bars represent SE. Shaded areas indicate quantiles where the race model was significantly violated (P < 0.05).

Our results provide evidence for the coexistence of two functionally distinct mechanisms that support the enhancement of vision by sound: First, presenting a sound synchronously with a visual stimulus can decrease RTs as a result of processes not necessarily supported by sensory interactions. This source of enhancement may be a consequence of the superior temporal resolution of the auditory system (Lippert et al. 2007), in addition to (or in combination with) the potential alerting effects of sounds leading to increases in overt motor activation, shortening response latency (startle effect; Valls-Solé et al. 1995). In line with the nonsensory specific nature of these mechanisms, their contribution to RT speed-up was equivalent across all intensities of visual contrast and SFs tested. Such nonsensory specific enhancement was clearly reflected in the reduction in the asymptotic term of the Piéron functions. Most importantly, however, our results also provide strong evidence for another source of enhancement, based on sensory interactions between visual and auditory inputs at the detection stage. This interaction was clearly dependent on stimulus variations and, as we contend, it would be produced by a modulation of the rate at which visual sensory evidence is accrued (though, alternatively, it could also be based on a reduction of the sensory threshold needed to elicit a response; see below). In any case, this effect was reflected in the change of the slope of the Piéron function (gain parameter k), which is interpreted as an increase in the sensitivity of the visual system. From a computational point of view, this advantage in detection can be explained in at least two alternative ways. On one hand, visual and auditory signals may be integrated following a linear summation model that assumes convergence of the sensory inputs from the two modalities into a single physiological or psychophysical channel before a decision regarding the presence of the stimulus (Meyer et al. 2005; López-Moliner and Soto-Faraco 2007; Morrone et al. 1995). A second possibility is that the sound reduces the sensory threshold needed to trigger the response (for example, by increasing visual cortex excitability; Romei et al. 2009).
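The two accounts just described can be contrasted with a small numerical sketch. It assumes a simple accumulator in which visual evidence E (contrast) drives a rise to threshold theta at rate c·E, so that the stimulus-dependent latency is theta / (c·E). Under linear summation a constant auditory term `ea` is added to the evidence; under threshold reduction the sound lowers the threshold by `ha`. All parameter values are hypothetical, and letting contrast enter the rate in the threshold-reduction case is an assumption of this sketch rather than something stated in the text.

```python
def rt_visual(contrast, theta, c, t0):
    """Visual-only latency: accumulate at rate c * contrast up to theta."""
    return theta / (c * contrast) + t0


def rt_linear_summation(contrast, theta, c, t0, ea):
    """Sound adds a constant amount of evidence ea to the visual input."""
    return theta / (c * (contrast + ea)) + t0


def rt_threshold_reduction(contrast, theta, c, t0, ha):
    """Sound lowers the threshold by ha (assumed: rate is still c * contrast)."""
    return (theta - ha) / (c * contrast) + t0


# Hypothetical parameter values, chosen only to give plausible latencies (s).
theta, c, t0 = 0.01, 1.0, 0.25
ea, ha = 0.05, 0.003
contrasts = [0.05, 0.2, 0.822]

gains_summation = []
gains_threshold = []
for contrast in contrasts:
    baseline = rt_visual(contrast, theta, c, t0)
    gains_summation.append(baseline - rt_linear_summation(contrast, theta, c, t0, ea))
    gains_threshold.append(baseline - rt_threshold_reduction(contrast, theta, c, t0, ha))

for contrast, g_sum, g_thr in zip(contrasts, gains_summation, gains_threshold):
    print(f"contrast={contrast}: summation gain={g_sum:.4f} s, threshold gain={g_thr:.4f} s")
```

In this toy setting both accounts yield a sound-induced gain that shrinks as contrast grows, which illustrates why the two are hard to tell apart from mean RTs alone.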
(Please note that although the main conclusions of this report are not affected by which of these two particular alternatives is true, we attempted to tell these two models of enhancement apart at the detection level, without conclusive results; see APPENDIX). What remains clear so far is that, regardless of the particular mechanism, an important part of the auditory-induced enhancement of vision reported here is due to changes at the detection stages of stimulus processing.

To validate the original paradigm applied in experiment 1, and bridge our results with extant multisensory literature, in experiment 2 we followed the classical RSE approach. We showed that sensory interactions conformed well to the pattern of findings in experiment 1. Interestingly, and in stark contrast with the nonspecific component of the audio-visual enhancement, both experiments converged in showing that improvements at the detection level of processing were selective for low SFs. Further analyses of the slopes in experiment 1 allowed us to address this audio-visual enhancement across the different levels of contrast. As demonstrated by Murray and Plainis (2003), the low-intensity contrast region determines the slope; hence the marked reduction of the slope in the sound-present condition could be construed as a behavioral analog of the inverse effectiveness rule, often cited in the multisensory integration literature (Meredith and Stein 1983; Wallace et al. 1998), that is, the lower the contrast intensity, the higher the benefit produced by the sound (Fig. 3, Fig. 7) and, in consequence, the audio-visual gain. Congruently, experiment 2 also yielded larger sensory interactions for the critical low-SF condition at low contrast.

One might perhaps think that the reductions in RT in the audio-visual conditions could be accounted for in terms of response preparation (Nickerson 1973).
According to this perspective, when one target and one accessory stimulus are presented in temporal synchrony, the longer the processing time for the target, the more time the accessory stimulus has to work as a warning signal and prepare the system for responding to that target, leading to advantages in RTs. However, our present results in experiments 1 (Table 1) and 2 (Fig. 6) showed that while visual latencies are slower for the high SF, the multisensory benefits in RT are more likely to occur at the low SFs, thus effectively rejecting response preparation as a viable account for the present pattern of results.

Most previous studies conducted in multisensory research have not directly addressed possible differences in audio-visual integration as a function of the well-known functional division in visual processing between M- and P-pathways. In the present study, the component of enhancement linked to the detection stage was constrained to low SFs, and thus the present findings bear on the potentially important role of the low-frequency-tuned magnocellular visual pathway in multisensory detection (see Jaekl and Soto-Faraco 2010 for related findings). This fits well with prior knowledge concerning the physiology underlying low-level audio-visual interaction in the M-pathway and its functionality in terms of mediating detection of intensity changes. Moreover, from a neurophysiological perspective this dominance of the M-pathway in multisensory interaction for detection is indirectly supported by three additional lines of evidence. First, the SC, which has been frequently considered as determinant for multisensory enhancement (Leo et al.
2008; Meredith and Stein 1986, 1996), receives connections from the magnocellular layers of the lateral geniculate nucleus (LGN) of the thalamus via cortical area V1 (Berson 1988), whereas evidence for connections from the P-pathway is scant (Livingstone and Hubel 1988; Merigan and Maunsell 1993). Second, anatomical inputs into V1 from the auditory cortex occur primarily in the representation of the peripheral visual field (Falchier et al. 2002), a region of space that is mainly represented by the M-pathway (Silveira and Perry 1991). Third, another relevant feature of the M-pathway is its specific sensitivity to transient stimulation. Some recent studies have suggested that pure transient information is integrated (Andersen and Mamassian 2008) and that it could be decisive for multisensory associations (Van der Burg et al. 2010; Werner and Noppeney 2011). Although our results support the important contribution of the M-pathway to multisensory interactions in detection, it is important to take into account the fact that the features of the experiment probably prioritize fast detection responses and, in consequence, processing in this particular visual pathway, which has shorter latencies. Therefore, our results do not necessarily negate a possible role of the P-pathway in driving multisensory interactions in other kinds of tasks that tap more specifically into parvocellular processing (e.g., cross-modal object recognition; Giard and Peronnet 1999; Jaekl and Harris 2009; Molholm et al. 2002). Nevertheless, more research specifically addressing this dissociation should be considered in the future.

Considering an explanation based on the linear summation hypothesis, the reduction in slope (gain parameter k) observed for the lower SFs is described as the stimulus energy reaching the detection threshold at a faster rate because of the concomitant presence of a sound that is linearly added to the visual signal.
The slope (k = θ/c) then consists of a threshold θ and the rate c that determines the accumulation to this threshold when the channel is stimulated by a given amount of energy E. According to this description an accurate estimate of k will depend on how well the energy values (E) of the stimuli used to fit the model are chosen. In our main analyses of experiment 1 we modeled the RTs as a function of vision (visual contrast) alone, as this was the modality the participants were asked to respond to. Hence, here we evaluate the possibility that the race to the threshold in the audio-visual condition was also determined by the auditory signals, at least for the lower SFs. To determine whether the present data are consistent with a linear summation model, we estimated the specific contribution of the auditory stimulus to the different audio-visual conditions. Specifically, we fitted the equation (Eq. A8; see Mathematical Derivation of Linear Summation Model below) and included an additional term (Ea) as the only free parameter to account for the auditory stimulus contribution. We set k = θ/c for each SF to the respective value estimated from the visual-alone condition, under the assumption that the threshold and the gain of the channel do not depend on the nature of the stimulation. That is, we consider that the threshold used to trigger the response is the same for both stimulation conditions (visual only and audio-visual). We set t0 to the values obtained with the Piéron equation in the audio-visual condition, as this reflects the nonspecific enhancement of RT due to sound. The linear model with a single parameter fitted the data perfectly. As dictated by the physical stimuli shown to the participants, Ea denotes a constant stimulation (i.e., the sound was always the same).
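The single-parameter fit described above can be sketched as follows, under the assumption that the model has the form RT = k / (C + Ea) + t0 (Eq. A8 plus the residual latency t0), with k and t0 held fixed and only Ea estimated by least squares. The golden-section search, the function names, and the synthetic data are illustrative choices for this example and are not taken from the original analysis.

```python
def predicted_rt(contrast, k, t0, ea):
    """Linear summation prediction: RT = k / (C + Ea) + t0, with k = theta / c."""
    return k / (contrast + ea) + t0


def squared_error(ea, contrasts, rts, k, t0):
    """Sum of squared differences between predicted and observed mean RTs."""
    return sum((predicted_rt(cst, k, t0, ea) - rt) ** 2
               for cst, rt in zip(contrasts, rts))


def fit_ea(contrasts, rts, k, t0, lower=0.0, upper=1.0, tol=1e-9):
    """Golden-section search for the Ea that minimizes the squared error.
    Assumes the error has a single minimum within [lower, upper]."""
    ratio = (5 ** 0.5 - 1) / 2
    a, b = lower, upper
    while b - a > tol:
        x1 = b - ratio * (b - a)
        x2 = a + ratio * (b - a)
        if squared_error(x1, contrasts, rts, k, t0) < squared_error(x2, contrasts, rts, k, t0):
            b = x2
        else:
            a = x1
    return (a + b) / 2


# Synthetic example: noise-free "audio-visual" RTs generated with Ea = 0.05.
k_visual, t0_av = 0.01, 0.25          # hypothetical fixed parameters
contrasts = [0.05, 0.1, 0.2, 0.4, 0.822]
observed = [predicted_rt(cst, k_visual, t0_av, 0.05) for cst in contrasts]

ea_hat = fit_ea(contrasts, observed, k_visual, t0_av)
print("estimated Ea:", round(ea_hat, 6))
```

With real data the recovered Ea would be compared across SFs; a value near zero indicates that adding an auditory term does not improve on the visual-only description.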
However, the estimated contribution of this parameter to the final RT is inversely proportional to the contrast for each SF and, importantly, inversely proportional to SF, considering that the estimated values for Ea were 0.053 and 0.035 for the 0.3 and 0.74 c/° SFs, while that for 5.93 c/° was nearly 0. In other words, the contribution of the sound to visual detection is greatest at low SFs. Importantly, this selective contribution accurately describes the data while keeping the slope (channel gain) at the same value as that obtained in the visual condition.

Conclusion

The present psychophysical analysis of RTs allowed us to shed light on the contribution of different sources of interaction leading to sound-induced enhancement of vision, one of the most often-cited but still controversial cases of cross-modal gain. First, the results of this study provide clear evidence showing that concurrent auditory signals result in an increase in sensitivity to visual stimuli, at a detection-specific level. This increase in sensitivity is largest at low SF and low contrast values of the visual stimulus. Second, the present paradigm allowed us to isolate and quantify the reduction in RTs to audio-visual stimuli as a consequence of more general processes that are separable from the reduction resulting from interactions at the detection stage. Although previous literature had hinted at the potential mixed contribution of detection-level and decision-level processes in multisensory enhancement, clear-cut evidence for the contribution of each type of source within the same paradigm was scant.

APPENDIX

Linear Summation Hypothesis

Mathematical Derivation of Linear Summation Model

The means of visual RTs measured as a function of stimulus contrast are usually well described by the Piéron function:

$$RT = kC^{-\alpha} + t_0 \tag{A1}$$

Let us assume that the response mechanism is based on an accumulator model.
The stimulus-dependent latency component ($t = kC^{-\alpha}$) is then a function of the time it takes for the mechanism to accumulate evidence (E, contrast in our case) until it reaches a threshold (θ):

$$t = \frac{\theta}{cE} \tag{A2}$$

where cE denotes the rate at which the system accumulates the evidence. A more general form of Eq. A2 underlies decisional response mechanisms like the LATER model (Carpenter and Williams 1995). RT can then be expressed as

$$RT = \frac{\theta}{cE} + t_0 \tag{A3}$$

Combining Eqs. A2 and A3 and taking the logarithms we obtain

$$\log t = \log(RT - t_0) = \log\frac{\theta}{c} - \log E \tag{A4}$$

A more general form of Eq. A4 is easily obtained by adding the extra parameter α (the exponent of E in Eq. A4 is −1, which corresponds to α = 1) and letting k = θ/c:

$$\log(RT - t_0) = \log(t) = \log(k) - \alpha \log E \tag{A5}$$

By taking the exponential at both sides of Eq. A5 we obtain the Piéron function (Eq. A1):

$$RT = k \cdot E^{-\alpha} + t_0 \tag{A6}$$

Therefore the larger the rate at which the sensory evidence accumulates, the smaller the parameter k. Any specific sensory effect must then affect the stimulus-dependent part of Eq. A1:

$$t = \frac{\theta}{c} E^{-1} = \frac{\theta}{cE} \tag{A7}$$

If a visual (E) and an auditory (Ea, constant in our case) signal were integrated within a single channel during the race to the threshold without changing the rate c in a linear summation fashion,

$$t = \frac{\theta}{c(E + E_a)} = \frac{\theta}{c}(E + E_a)^{-1} \tag{A8}$$

The true sensory benefit could be captured in the audio-visual condition by an RT model that takes into account an auditory signal in the input, with a slope k = θ/c not different from the visual slope and an additive parameter t0 that is not different from the estimate in Eq. A6, in which the auditory system is not considered.

Visual Threshold Reduction by Sound Hypothesis

Alternatively, the reduction of the slope value in the lower SFs could be caused by an increase in the excitability of the system by the sound, leading to a reduction (Ha) of the threshold in the audio-visual conditions:

$$RT = [(\theta - H_a)/c] + t_0 \tag{A9}$$

Therefore, we fitted Eq.
A9 with fixed values of θ and c whose ratio (θ/c) matched the slope observed in the visual condition (Ha = 0.0). In this way, we can obtain an approximation of Ha for the different SFs. The estimated values were 2.07 and 1.977 for 0.3 c/° and 0.74 c/°, respectively, and nearly 0.0 for the high-frequency condition 5.93 c/°. This pattern was very similar to that shown by assuming changes in the rate of sensory evidence accumulation; however, after statistical comparison of the goodness of fit for the linear summation against the varying threshold model, the linear summation model results were slightly better in all the SFs but only in a significant way for the 0.3 c/° SF (F(2,3) = 15.64, P = 0.023). We do not interpret these fits as being conclusive about which particular mechanism best explains the results; nevertheless, we can claim that the changes in the slope are linked to sensory processing independently of the model applied and are specifically larger for the low-SF visual channels than for the high-SF channels, highlighting their stimulus dependence.

ACKNOWLEDGMENTS

We thank Dr. Phil Jaekl for his helpful comments on an earlier version of this article.

GRANTS

This research was supported by the Spanish Ministry of Science and Innovation (PSI2010-15867, PSI2010-15426, and Consolider INGENIO CSD2007-00012), Comissionat per a Universitats i Recerca del DIUE-Generalitat de Catalunya (SGR2009-092, SGR2009-308), and the European Research Council (StG-2010263145).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: A.P.-B. performed experiments; A.P.-B. and J.L.-M. analyzed data; A.P.-B., S.S.-F., and J.L.-M. interpreted results of experiments; A.P.-B. and J.L.-M. prepared figures; A.P.-B., S.S.-F., and J.L.-M. drafted manuscript; A.P.-B., S.S.-F., and J.L.-M. edited and revised manuscript; A.P.-B., S.S.-F., and J.L.-M.
approved final version of manuscript; A.P.-B and J.L.-M. conception and design of research.

REFERENCES

Andersen TS, Mamassian P. Audiovisual integration of stimulus transients. Vision Res 48: 2537–2544, 2008.
Arnold DH, Tear M, Schindel R, Roseboom W. Audio-visual speech cue combination. PLoS One 5: e10217, 2010.
Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B 57: 289–300, 1995.
Berson DM. Retinal and cortical inputs to cat superior colliculus: composition, convergence and laminar specificity. Prog Brain Res 75: 17–26, 1988.
Bolognini N, Frassinetti F, Serino A, Làdavas E. “Acoustical vision” of below threshold stimuli: interaction among spatially converging audiovisual inputs. Exp Brain Res 160: 273–282, 2005.
Breitmeyer BG. Simple reaction time as a measure of the temporal response properties of transient and sustained channels. Vision Res 15: 1411–1412, 1975.
Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audiovisual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage 14: 427–438, 2001.
Cappe C, Thut G, Romei V, Murray MM. Selective integration of auditory-visual looming cues by humans. Neuropsychologia 47: 1045–1052, 2009.
Carpenter RH. Contrast, probability, and saccadic latency: evidence for independence of detection and decision. Curr Biol 14: 1576–1580, 2004.
Carpenter RH, Williams ML. Neural computation of log likelihood in control of saccadic eye movements. Nature 377: 59–62, 1995.
Colonius H, Arndt P. A two-stage model for visual-auditory interaction in saccadic latencies. Percept Psychophys 63: 126–147, 2001.
Corneil BD, Van Wanrooij M, Munoz DP, Van Opstal AJ. Auditory-visual interactions subserving goal-directed saccades in a complex scene. J Neurophysiol 88: 438–454, 2002.
Diederich A, Colonius H. Bimodal and trimodal multisensory enhancement: effects of stimulus onset and intensity on reaction time. Percept Psychophys 66: 1388–1404, 2004.
Doyle MC, Snowden RJ. Identification of visual stimuli is improved by accompanying auditory stimuli: the role of eye movements and sound location. Perception 30: 795–810, 2001.
Driver J, Spence C. Cross-modal links in spatial attention. Philos Trans R Soc Lond B Biol Sci 353: 1319–1331, 1998.
Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron 57: 11–23, 2008.
Ernst MO, Bülthoff HH. Merging the senses into a robust percept. Trends Cogn Sci 8: 162–169, 2004.
Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci 22: 5749–5759, 2002.
Frassinetti F, Bolognini N, Làdavas E. Enhancement of visual perception by crossmodal visuo-auditory interaction. Exp Brain Res 147: 332–343, 2002.
Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci 11: 473–490, 1999.
Green DM. Detection of multiple component signals in noise. J Acoust Soc Am 30: 904–911, 1958.
Harwerth RS, Levi DM. Reaction time as a measure of suprathreshold grating detection. Vision Res 18: 1579–1586, 1978.
Jaekl PM, Harris LR. Sounds can affect visual perception mediated primarily by the parvocellular pathway. Vis Neurosci 26: 477–486, 2009.
Jaekl PM, Soto-Faraco S. Audiovisual contrast enhancement is articulated primarily via the M-pathway. Brain Res 1366: 85–92, 2010.
Kinchla RA. Detecting target elements in multielement arrays: a confusability model. Perception 15: 149–158, 1974.
Lakatos P, Chen CM, O’Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53: 279–292, 2007.
Laurienti PJ, Kraft RA, Maldjian JA, Burdette JH, Wallace MT. Semantic congruence is a critical factor in multisensory behavioral performance. Exp Brain Res 158: 405–414, 2004.
Legge GE. Sustained and transient mechanisms in human vision: temporal and spatial properties. Vision Res 18: 69–81, 1978.
Leo F, Bertini C, di Pellegrino G, Làdavas E. Multisensory integration for orienting responses in humans requires the activation of the superior colliculus. Exp Brain Res 186: 67–77, 2008.
Levi DM, Harwerth RS, Manny RE. Suprathreshold spatial frequency detection and binocular interaction in strabismic and anisometropic amblyopia. Invest Ophthalmol Vis Sci 18: 714–725, 1979.
Lippert M, Logothetis NK, Kayser C. Improvement of visual contrast detection by a simultaneous sound. Brain Res 1173: 102–109, 2007.
Livingstone MS, Hubel DH. Do the relative mapping densities of the magno- and parvocellular systems vary with eccentricity? J Neurosci 8: 4334–4339, 1988.
Logan GD, Cowan WB, Davis KA. On the ability to inhibit simple and choice reaction time responses: a model and a method. J Exp Psychol Hum Percept Perform 10: 276–291, 1984.
López-Moliner J, Soto-Faraco S. Vision affects how fast we hear sounds move. J Vis 7: 6.1–6.7, 2007.
Manjarrez E, Mendez I, Martinez L, Flores A, Mirasso CR. Effects of auditory noise on the psychophysical detection of visual signals: crossmodal stochastic resonance. Neurosci Lett 415: 231–236, 2007.
McDonald JJ, Teder-Sälejärvi WA, Hillyard SA. Involuntary orienting to sound improves visual perception. Nature 407: 906–908, 2000.
McDonald JJ, Teder-Sälejärvi WA, Ward LM. Multisensory integration and crossmodal attention effects in the human brain. Science 292: 1791, 2001.
McDonald JJ, Teder-Sälejärvi WA, Di Russo F, Hillyard SA. Neural basis of auditory-induced shifts in visual time-order perception. Nat Neurosci 8: 1197–1202, 2005.
Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol 56: 640 – 662, 1986. Meredith MA, Stein BE. Spatial determinants of multisensory integration in cat superior colliculus neurons. J Neurophysiol 75: 1843–1857, 1996. Meredith M, Stein B. Interactions among converging sensory inputs in the superior colliculus. Science 221: 389 –391, 1983. Merigan WH, Maunsell JH. How parallel are the primate visual pathways? Annu Rev Neurosci 16: 369 – 402, 1993. Meyer GF, Wuerger SM, Röhrbein F, Zetzsche C. Low-level integration of auditory and visual motion signals requires spatial co-localisation. Exp Brain Res 166: 538 –547, 2005. Miller J. Divided attention: evidence for coactivation with redundant signals. Cogn Psychol 14: 247–279, 1982. Mitov D, Totev T. How many pathways determine the speed of grating detection? Vision Res 45: 821– 825, 2005. Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans?: a high-density electrical mapping study. Cogn Brain Res 14: 115–128, 2002. Morrell F. Visual system’s view of acoustic space. Nature 238: 44 – 46, 1972. Morrone MC, Burr DC, Vaina LM. Two stages of visual processing for radial and circular motion. Nature 376: 507–509, 1995. Murray IJ, Plainis S. Contrast coding and magno/parvo segregation revealed in reaction time studies. Vision Res 43: 2707–2719, 2003. Nickerson RS. Intersensory facilitation of reaction time: energy summation or preparation enhancement? Psychol Rev 80: 489 –509, 1973. Nieuwenhuis S, Ridderinkhof KR, Blom J, Band GP, Kok A. Error-related brain potentials are differentially related to awareness of response errors: evidence from an antisaccade task. Psychophysiology 38: 752–760, 2001. Noesselt T, Tyll S, Boehler CN, Budinger E, Heinze HJ, Driver J. 
Sound-induced enhancement of low-intensity vision: multisensory influences on human sensory-specific cortices and thalamic bodies relate to perceptual enhancement of visual detection sensitivity. J Neurosci 30: 13609 –13623, 2010. Noesselt T, Bergmann D, Hake M, Heinze HJ, Fendrich R. Sound increases the saliency of visual events. Brain Res 1220: 157–163, 2008. Nozawa G, Reuter-Lorenz PA, Hughes HC. Parallel and serial processes in the human oculomotor system: bimodal integration and express saccades. Biol Cybern 72: 19 –34, 1994. Odgaard EC, Arieh Y, Marks LE. Cross-modal enhancement of perceived brightness: sensory interaction versus response bias. Percept Psychophys 65: 123–132, 2003. Otto TU, Mamassian P. Noise and correlations in parallel perceptual decision making. Curr Biol 22: 1391–1396, 2012. Pannunzi M, Pérez-Bellido A, Baños AP, López-Moliner J, Deco G, Soto-Faraco S. Scrutinizing integrative effects in a multi-stimuli detection task. Seeing Perceiving 25: 100, 2012. Piéron H. Recherches sur les lois de variation des temps de latence sensorielle en fonction des intensités excitatrices. L’Année Psychol 20: 17–96, 1914. Pins D, Bonnet C. The Piéron function in the threshold region. Percept Psychophys 62: 127–136, 2000. Pins D, Bonnet C. On the relation between stimulus intensity and processing time?: Pieron’s law and choice reaction time. Perception 58: 390 – 400, 1996. Plainis S, Murray IJ. Neurophysiological interpretation of human visual reaction times: effect of contrast, spatial frequency and luminance. Neuropsychologia 38: 1555–1564, 2000. Quick RF. A vector-magnitude model of contrast detection. Kybernetik 16: 65– 67, 1974. Raab DH. Statistical facilitation of simple reaction times. Trans NY Acad Sci 24: 574 –590, 1962. Romei V, Murray MM, Cappe C, Thut G. Preperceptual and stimulusselective enhancement of low-level human visual cortex excitability by sounds. Curr Biol? 19: 1799 –1805, 2009. Rowland BA, Quessy S, Stanford TR, Stein BE. 
Multisensory integration shortens physiological response latencies. J Neurosci 27: 5879 –5884, 2007. Schroeder CE, Foxe J. Multisensory contributions to low-level, “unisensory” processing. Curr Opin Neurobiol 15: 454 – 458, 2005. Silveira LC, Perry VH. The topography of magnocellular projecting ganglion cells (M-ganglion cells) in the primate retina. Neuroscience 40: 217–237, 1991. Smiley JF, Falchier A. Multisensory connections of monkey auditory cerebral cortex. Hear Res 258: 37– 46, 2009. Spence C, Driver J. Covert orienting in audition: endogenous and exogenous mechanisms. J Exp Psychol Hum Percept Perform 20: 555–574, 1994. Stein BE. Neural mechanisms for synthesizing sensory information and producing adaptive behaviors. Exp Brain Res 123: 124 –135, 1998. Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp Brain Res 198: 113–126, 2009. Stein BE, London N, Wilkinson LK, Price DD. Enhancement of perceived visual intensity by auditory stimuli: a psychophysical analysis. J Cogn Neurosci 8: 497–506, 1996. Stein BE, Meredith MA, Huneycutt WS, McDade L. Behavioral indices of multisensory integration: orientation to visual cues is affected by auditory stimuli. J Cogn Neurosci 1: 12–24, 1989. J Neurophysiol • doi:10.1152/jn.00226.2012 • www.jn.org SOUND-DRIVEN ENHANCEMENT OF VISION Thomas JP, Fagerholm P, Bonnet C. One spatial filter limits speed of detecting low and middle frequency gratings. Vision Res 39: 1683–1693, 1999. Treisman M. Combining information: probability summation and probability averaging in detection and discrimination. Psychol Methods 3: 252–265, 1998. Ulrich R, Miller J, Schröter H. Testing the race model inequality: an algorithm and computer programs. Behav Res Methods 39: 291–302, 2007. Valls-Solé J, Solé A, Valldeoriola F, Muñoz E, Gonzalez LE, Tolosa ES. Reaction time and acoustic startle in normal human subjects. 
Neurosci Lett 195: 97–100, 1995. Van der Burg E, Cass J, Olivers CN, Theeuwes J, Alais D. Efficient visual search from synchronized auditory signals requires transient audiovisual events. PloS One 5: e10664, 2010. 1077 Wallace MT, Meredith MA, Stein BE. Converging influences from visual, auditory, and somatosensory cortices onto output neurons of the superior colliculus. J Neurophysiol 69: 1797–1809, 1993. Wallace MT, Meredith MA, Stein BE. Multisensory integration in the superior colliculus of the alert cat. J Neurophysiol 80: 1006 –1010, 1998. Watkins S, Shams L, Tanaka S, Haynes JD, Rees G. Sound alters activity in human V1 in association with illusory visual perception. Neuroimage 31: 1247–1256, 2006. Werner S, Noppeney U. The contributions of transient and sustained response codes to audiovisual integration. Cereb Cortex 21: 920 –931, 2011. Wuerger SM, Hofbauer M, Meyer GF. The integration of auditory and visual motion signals at threshold. Percept Psychophys 65: 1188 –1196, 2003. J Neurophysiol • doi:10.1152/jn.00226.2012 • www.jn.org