Chapter 3

PROBABILITY AND STOCHASTIC PROCESSES

God does not play dice with the universe.

—Albert Einstein

Not only does God definitely play dice, but He sometimes confuses us by throwing them

where they cannot be seen.

—Stephen Hawking

Abstract

This chapter aims to provide a cohesive overview of basic probability concepts,

starting from the axioms of probability, covering events, event spaces, joint and

conditional probabilities, leading to the introduction of random variables, discrete probability distributions (PDP) and probability density functions (PDFs).

Then follows an exploration of random variables and stochastic processes including cumulative distribution functions (CDFs), moments, joint densities, marginal densities, transformations and algebra of random variables. A number of

useful univariate densities (Gaussian, chi-square, non-central chi-square, Rice,

etc.) are then studied in turn. Finally, an introduction to multivariate statistics is provided, including the exterior product (used instead of the conventional

determinant in matrix r.v. transformations), Jacobians of random matrix transformations and culminating with the introduction of the Wishart distribution.

Multivariate statistics are instrumental in characterizing multidimensional problems such as array processing operating over random vector or matrix channels.

Throughout the chapter, an emphasis is placed on complex random variables,

vectors and matrices, because of their unique importance in digital communications.


SPACE-TIME METHODS, VOL. 1: SPACE-TIME PROCESSING

This material to appear as part of the book Space-Time Methods, vol. 1: Space-Time Processing, © 2009 by Sébastien Roy. All rights reserved.

1. Introduction

The theory of probability and stochastic processes is of central importance

in many core aspects of communication theory, including modeling of information sources, of additive noise, and of channel characteristics and fluctuations.

Ultimately, through such modeling, probability and stochastic processes are instrumental in assessing the performance of communication systems, wireless

or otherwise.

It is expected that most readers will have at least some familiarity with this

vast topic. If that is not the case, the brief overview provided here may be

less than satisfactory. Interested readers who wish for a fuller treatment of

the subject from an engineering perspective may consult a number of classic

textbooks [Papoulis and Pillai, 2002], [Leon-Garcia, 1994], [Davenport, 1970].

The subject matter of this book calls for the development of a perhaps lesser-known, but increasingly active, branch of statistics, namely multivariate statistical theory. It is an area whose applications (in the field of communication theory in general, and in array processing / MIMO systems in particular) have grown considerably in recent years, mostly thanks to the usefulness and versatility of the Wishart distribution. To supplement the treatment given here, readers are directed to the excellent textbooks [Muirhead, 1982] and [Andersen, 1958].

2. Probability

Experiments, events and probabilities

Definition 3.1. A probability experiment or statistical experiment consists

in performing an action which may result in a number of possible outcomes,

the actual outcome being randomly determined.

For example, rolling a die and tossing a coin are probability experiments. In

the first case, there are 6 possible outcomes while in the second case, there are

2.

Definition 3.2. The sample space S of a probability experiment is the set of

all possible outcomes.

In the case of a coin toss, we have

S = {t, h} ,

(3.1)


where t denotes “tails” and h denotes “heads”.

Another, slightly more sophisticated, probability experiment could consist in tossing a coin five times and defining the outcome as being the total number of heads obtained. Since it is possible to obtain no heads at all, the sample space would be

S = {0, 1, 2, 3, 4, 5} .

(3.2)

Definition 3.3. An event E occurs if the outcome of the experiment is part of a predetermined subset (as defined by E) of the sample space S.

For example, let the event A correspond to obtaining an even number of heads (zero included) in the five-toss experiment. Therefore, we have

A = {0, 2, 4} .

(3.3)

A single outcome can also be considered an event. For instance, let the event B correspond to obtaining three heads in the five-toss experiment, i.e. B = {3}. This is also called a single event or a sample point (since it is a single element of the sample space).

Definition 3.4. The complement of an event X consists of all the outcomes

(sample points) in the sample space S that are not in event X.

For example, the complement of event A consists in obtaining an odd number of heads, i.e.

Ā = {1, 3, 5} .

(3.4)

Two events are considered mutually exclusive if they have no outcome in

common. For instance, A and Ā are mutually exclusive. In fact, an event and

its complement must by definition be mutually exclusive. The events C = {1}

and D = {3, 5} are also mutually exclusive, but B = {3} and D are not.

Definition 3.5. Probability (simple definition): If an experiment has N possible and equally-likely exclusive outcomes, and M ≤ N of these outcomes constitute an event E, then the probability of E is

$$P(E) = \frac{M}{N}. \tag{3.5}$$

Example 3.1

Consider a die roll. If the die is fair, all sample points are equally likely. Defining event Ek as corresponding to outcome / sample point k, where k ranges from 1 to 6, we have

$$P(E_k) = \frac{1}{6}, \quad k = 1, \ldots, 6.$$

Given an event F = {1, 2, 3, 4}, we find

$$P(F) = \frac{4}{6} = \frac{2}{3},$$

and

$$P(\bar{F}) = \frac{2}{6} = \frac{1}{3}.$$

Definition 3.6. The sum or union of two events is an event that contains all the outcomes in the two events.

For example,

C ∪ D = {1} ∪ {3, 5} = {1, 3, 5} = Ā.

(3.6)

Therefore, the event Ā corresponds to the occurrence of event C or event D.

Definition 3.7. The product or intersection of two events is an event that

contains only the outcomes that are common in the two events.

For example,

Ā ∩ B = {1, 3, 5} ∩ {3} = {3} ,

(3.7)

and

{1, 2, 3, 4} ∩ Ā = {1, 2, 3, 4} ∩ {1, 3, 5} = {1, 3} .

(3.8)

Therefore, the intersection corresponds to the occurrence of one event and the other.

It is noteworthy that the intersection of two mutually exclusive events yields the null event, e.g.

E ∩ Ē = ∅,

(3.9)

where ∅ denotes the null event.

A more rigorous definition of probability calls for the statement of four postulates.

Definition 3.8. Probability (rigorous definition): Given that each event E is

associated with a corresponding probability P (E),

Postulate 1: The probability of a given event E is such that P (E) ≥ 0.

Postulate 2: The probability associated with the null event is zero, i.e.

P (∅) = 0.

Postulate 3: The probability of the event corresponding to the entire sample

space (referred to as the certain event) is 1, i.e. P (S) = 1.

Postulate 4: Given a number N of mutually exclusive events X1 , X2 , . . . XN , then the probability of the union of these events is given by

$$P\left(\bigcup_{i=1}^{N} X_i\right) = \sum_{i=1}^{N} P(X_i). \tag{3.10}$$


From postulates 1, 3 and 4, it is easy to deduce that the probability of an event E must necessarily satisfy the condition

P (E) ≤ 1.

The proof is left as an exercise.
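The simple definition (3.5) and the postulates can be checked numerically on the die roll of Example 3.1. The following is only a minimal sketch: the helper `prob` and the variable names are illustrative assumptions, and the events C and D are simply reused here as subsets of the die's sample space.

```python
from fractions import Fraction

# Sample space of a fair die roll; every sample point is equally likely.
S = frozenset(range(1, 7))

def prob(event):
    """Simple definition (3.5): P(E) = M / N for equally likely outcomes."""
    event = frozenset(event)
    if not event.issubset(S):
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(S))

F = {1, 2, 3, 4}
F_bar = S - F        # complement of F
C, D = {1}, {3, 5}   # two mutually exclusive events

print(prob(F), prob(F_bar))               # 2/3 1/3
print(prob(C | D) == prob(C) + prob(D))   # postulate 4 for N = 2: True
print(prob(set()), prob(S))               # postulates 2 and 3: 0 1
```

Exact fractions are used instead of floating-point numbers so that the equalities hold exactly.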

Twin experiments

It is often of interest to consider two separate experiments as a whole. For

example, if one probability experiment consists of a coin toss with event space

S = {h, t}, two consecutive (or simultaneous) coin tosses constitute twin experiments or a joint experiment. The event space of such a joint experiment is

therefore

S2 = {(h, h), (h, t), (t, h), (t, t)} .

(3.11)

Let Xi (i = 1, 2) correspond to an outcome on the first coin toss and Yj (j =

1, 2) correspond to an outcome on the second coin toss. To each joint outcome

(Xi , Yj ) is associated a joint probability P (Xi , Yj ). This corresponds naturally

to the probability that events Xi and Yj occurred and it can therefore also be

written P (Xi ∩ Yj ). Suppose that given only the set of joint probabilities, we

wish to find P (X1 ) and P (X2 ), that is, the marginal probabilities of events X1

and X2 .

Definition 3.9. The marginal probability of an event E is, in the context of

a joint experiment, the probability that event E occurs irrespective of the other

constituent experiments in the joint experiment.

In general, a twin experiment has outcomes Xi , i = 1, 2, . . . , N1 , for the first experiment and Yj , j = 1, 2, . . . , N2 for the second experiment. If all the Yj are mutually exclusive, the marginal probability of Xi is given by

$$P(X_i) = \sum_{j=1}^{N_2} P(X_i, Y_j). \tag{3.12}$$

By the same token, if all the Xi are mutually exclusive, we have

$$P(Y_j) = \sum_{i=1}^{N_1} P(X_i, Y_j). \tag{3.13}$$
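As an illustration of (3.12) and (3.13), the sketch below sums a joint probability table along each index to recover the marginals. The joint table is a made-up example (two possibly linked coin tosses), not data from the text, and `marginals` is a hypothetical helper name.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint probabilities P(X_i, Y_j) for two possibly linked coin tosses.
joint = {
    ("h", "h"): Fraction(3, 10),
    ("h", "t"): Fraction(2, 10),
    ("t", "h"): Fraction(1, 10),
    ("t", "t"): Fraction(4, 10),
}

def marginals(joint):
    """Return (P(X_i), P(Y_j)) by summing the joint table as in (3.12) and (3.13)."""
    p_x, p_y = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), p in joint.items():
        p_x[x] += p   # sum over j for fixed i
        p_y[y] += p   # sum over i for fixed j
    return dict(p_x), dict(p_y)

p_x, p_y = marginals(joint)
print(p_x)  # marginal probabilities of the first toss
print(p_y)  # marginal probabilities of the second toss
```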

Now, suppose that the outcome of one of the two experiments is known, but

not the other.

Definition 3.10. The conditional probability of an event Xi given an event Yj is the probability that event Xi will occur given that Yj has occurred.


The conditional probability of Xi given Yj is defined as

$$P(X_i | Y_j) = \frac{P(X_i, Y_j)}{P(Y_j)}. \tag{3.14}$$

Likewise, we have

$$P(Y_j | X_i) = \frac{P(X_i, Y_j)}{P(X_i)}. \tag{3.15}$$

A more general form of the above two relations is known as Bayes’ rule.

Definition 3.11. Bayes’ rule: Given {Y1 , . . . , YN }, a set of N mutually exclusive events whose union forms the entire sample space S, and given any event X in S with non-zero probability (P (X) > 0), then

$$\begin{aligned}
P(Y_j | X) &= \frac{P(X, Y_j)}{P(X)} \\
&= \frac{P(Y_j) P(X | Y_j)}{P(X)} \qquad (3.16) \\
&= \frac{P(Y_j) P(X | Y_j)}{\sum_{i=1}^{N} P(Y_i) P(X | Y_i)}. \qquad (3.17)
\end{aligned}$$
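A small numerical sketch of Bayes' rule follows. The scenario is an assumption chosen for illustration (two hypotheses Y1 and Y2, for instance "bit 0 sent" and "bit 1 sent", and an observation X such as "the receiver decides 1"), and the probabilities are invented; `bayes_posteriors` is a hypothetical helper name.

```python
from fractions import Fraction

def bayes_posteriors(priors, likelihoods):
    """Posteriors P(Y_j | X) from priors P(Y_j) and likelihoods P(X | Y_j)."""
    if sum(priors) != 1:
        raise ValueError("the events Y_j must partition the sample space")
    # Total probability of X, i.e. the denominator of (3.17).
    p_x = sum(p * l for p, l in zip(priors, likelihoods))
    if p_x == 0:
        raise ValueError("Bayes' rule requires P(X) > 0")
    return [p * l / p_x for p, l in zip(priors, likelihoods)]

priors = [Fraction(3, 5), Fraction(2, 5)]         # P(Y1), P(Y2): assumed values
likelihoods = [Fraction(1, 10), Fraction(9, 10)]  # P(X|Y1), P(X|Y2): assumed values
posteriors = bayes_posteriors(priors, likelihoods)
print(posteriors)  # [Fraction(1, 7), Fraction(6, 7)]
```

The posteriors sum to one, as expected for a set of mutually exclusive events covering S.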

Another important concern in a twin experiment is whether or not the occurrence of one event (Xi ) influences the probability that another event (Yj )

will occur, i.e. whether the events Xi and Yj are independent.

Definition 3.12. Two events X and Y are said to be statistically independent

if P (X|Y ) = P (X) and P (Y |X) = P (Y ).

For instance, in the case of the twin coin toss joint experiment, the events X1 = {h} and X2 = {t}, being the potential outcomes of the first coin toss, are independent of the events Y1 = {h} and Y2 = {t}, the potential outcomes of the second coin toss. However, X1 is certainly not independent of X2 since the occurrence of X1 precludes the occurrence of X2 .

Typically, independence results if the two events considered are generated by physically separate probability experiments (e.g. two different coins).

Example 3.2

Consider a twin die toss. The result on one die is independent of the other since there is no physical linkage between the two dice. However, suppose we reformulate the joint experiment as follows:

Experiment 1: the outcome is the sum of the two dice and corresponds to the event Xi , i = 1, 2, . . . , 11. The corresponding sample space is SX = {2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12}.

Experiment 2: the outcome is the magnitude of the difference between the two dice and corresponds to the event Yj , j = 1, 2, . . . , 6. The corresponding sample space is SY = {0, 1, 2, 3, 4, 5}.

In this case, the two experiments are linked since they are both derived from the same dice toss. For example, if Xi = 4, then Yj can only take the values {0, 2}. We therefore have statistical dependence.
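The dependence claimed in Example 3.2 can be verified by enumerating the 36 equally likely outcomes of the two dice. The sketch below is illustrative only; the helper `prob` and the variable names are assumptions.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (d1, d2) of two fair dice.
outcomes = list(product(range(1, 7), repeat=2))

def prob(predicate):
    """Probability that predicate(d1, d2) holds, by counting outcomes."""
    hits = sum(1 for d1, d2 in outcomes if predicate(d1, d2))
    return Fraction(hits, len(outcomes))

p_sum4 = prob(lambda a, b: a + b == 4)                       # P(X = 4)
p_diff0 = prob(lambda a, b: abs(a - b) == 0)                 # P(Y = 0)
p_joint = prob(lambda a, b: a + b == 4 and abs(a - b) == 0)  # P(X = 4, Y = 0)
p_diff0_given_sum4 = p_joint / p_sum4                        # conditional probability (3.14)

# Differences that remain possible once the sum is known to be 4.
diffs_given_sum4 = sorted({abs(a - b) for a, b in outcomes if a + b == 4})

print(diffs_given_sum4)             # [0, 2]
print(p_diff0, p_diff0_given_sum4)  # 1/6 1/3
```

Since P(Y = 0 | X = 4) differs from P(Y = 0), the two events are not statistically independent.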

From (3.14), we find the following:

Definition 3.13. Multiplicative rule: Given two events X and Y , the probability that they both occur is

P (X, Y ) = P (X)P (Y |X) = P (Y )P (X|Y ).

(3.18)

Hence, the probability that X and Y occur is the probability that one of

these events occurs times the probability that the other event occurs, given that

the first one has occurred.

Furthermore, should events X and Y be independent, the above reduces to (special multiplicative rule):

P (X, Y ) = P (X)P (Y ).

(3.19)

3. Random variables

In the preceding section, it was seen that a statistical experiment is any operation or physical process by which one or more random measurements are

made. In general, the outcome of such an experiment can be conveniently

represented by a single number.

Let the function X(s) constitute such a mapping, i.e. X(s) takes on a value

on the real line as a function of s where s is an arbitrary sample point in the

sample space S. Then X(s) is a random variable.

Definition 3.14. A function whose value is a real number and is a function of an element chosen in a sample space S is a random variable or r.v.

Given a die toss, the sample space is simply

S = {1, 2, 3, 4, 5, 6} .

(3.20)

In a straightforward manner, we can define a random variable X(s) = s

which takes on a value determined by the number of spots found on the top

surface of the die after the roll.

A slightly less obvious mapping would be the following:

$$X(s) = \begin{cases} -1 & \text{if } s = 1, 3, 5, \\ \phantom{-}1 & \text{if } s = 2, 4, 6, \end{cases} \tag{3.21}$$

which is an r.v. that takes on a value of 1 if the die roll is even and −1 otherwise.

Random variables need not be defined directly from a probability experiment, but can actually be derived as functions of other r.v.’s. Going back to example 3.2, and letting D1 (s) = s be an r.v. associated with the first die and D2 (s) = s with the second die, we can define the r.v.’s

X = D1 + D2 ,

(3.22)

Y = |D1 − D2 |,

(3.23)

where X is an r.v. corresponding to the sum of the dice and defined as X(sX ) =

sX , where sX is an element of the sample space SX = {2, 3, 4, 5, 6, 7,

8, 9, 10, 11, 12}. The same holds for Y , but with respect to sample space

SY = {0, 1, 2, 3, 4, 5}.

Example 3.3

Consider a series of N consecutive coin tosses. Let us define the r.v. X as

being the total number of heads obtained. Therefore, the sample space of X is

SX = {0, 1, 2, 3, . . . , N } .

(3.24)

Furthermore, we define an r.v. Y = X/N , it being the ratio of the number of heads to the total number of tosses. If N = 1, the sample space of Y corresponds to the set {0, 1}. If N = 2, the sample space becomes {0, 1/2, 1}, and if N = 10, it is {0, 1/10, 2/10, . . . , 1}.

Hence, the variable Y is always constrained between 0 and 1; however, if

we let N tend towards infinity, it can take an infinite number of values within

this interval (its sample space is infinite) and it thus becomes a continuous r.v.

Definition 3.15. A continuous random variable is an r.v. which is not restricted to a discrete set of values, i.e. it can take any real value within a

predetermined interval or set of intervals.

It follows that a discrete random variable is an r.v. restricted to a finite

set of values (its sample space is finite) and corresponds to the type of r.v. and

probability experiments discussed so far.

Typically, discrete r.v.’s are used to represent countable data (number of

heads, number of spots on a die, number of defective items in a sample set,

etc.) while continuous r.v.’s are used to represent measurable data (heights,

distances, temperatures, electrical voltages, etc.).

Probability distribution

Since each value that a discrete random variable can assume corresponds to

an event or some quantification / mapping / function of an event, it follows that

each such value is associated with a probability of occurrence.


Consider the variable X in example 3.3. If N = 4, we have the following:

| x | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| P (X = x) | 1/16 | 4/16 | 6/16 | 4/16 | 1/16 |

Assuming a fair coin, the underlying sample space is made up of sixteen outcomes

S = {tttt, ttth, ttht, tthh, thtt, thth, thht, thhh, httt, htth, htht, hthh, hhtt, hhth, hhht, hhhh} ,

(3.25)

and each of its sample points is equally likely with probability 1/16. Only one of these outcomes (tttt) has no heads; it follows that P (X = 0) = 1/16. However, four outcomes have one head and three tails (httt, thtt, ttht, ttth), leading to P (X = 1) = 4/16. Furthermore, there are 6 possible combinations of 2 heads and 2 tails, yielding P (X = 2) = 6/16. By symmetry, we have P (X = 3) = P (X = 1) = 4/16 and P (X = 4) = P (X = 0) = 1/16.

Knowing that the number of combinations of N distinct objects taken n at a time is

$$\binom{N}{n} = \frac{N!}{n!(N - n)!}, \tag{3.26}$$

the probabilities tabulated above (for N = 4) can be expressed with a single formula, as a function of x:

$$P(X = x) = \binom{4}{x} \frac{1}{16}, \quad x = 0, 1, 2, 3, 4. \tag{3.27}$$

Such a formula constitutes the discrete probability distribution of X.

Suppose now that the coin is not necessarily fair and is characterized by a probability p of getting heads and a probability 1 − p of getting tails. For arbitrary N , we have

$$P(X = x) = \binom{N}{x} p^x (1 - p)^{N - x}, \quad x \in \{0, 1, \ldots, N\}, \tag{3.28}$$

which is known as the binomial distribution.
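The binomial formula (3.28) can be checked against the probabilities tabulated for N = 4. This is a minimal sketch; the function name `binomial_pmf` and the biased-coin parameters are assumptions made for illustration.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(x, n, p):
    """P(X = x) for n tosses with heads probability p, as in (3.28)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Fair coin, N = 4: reproduces the tabulated values 1/16, 4/16, 6/16, 4/16, 1/16.
fair = [binomial_pmf(x, 4, Fraction(1, 2)) for x in range(5)]
print(fair)

# Biased coin (p = 3/10 is an arbitrary choice): the probabilities still sum to 1.
biased_total = sum(binomial_pmf(x, 10, Fraction(3, 10)) for x in range(11))
print(biased_total)  # 1
```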

Probability density function

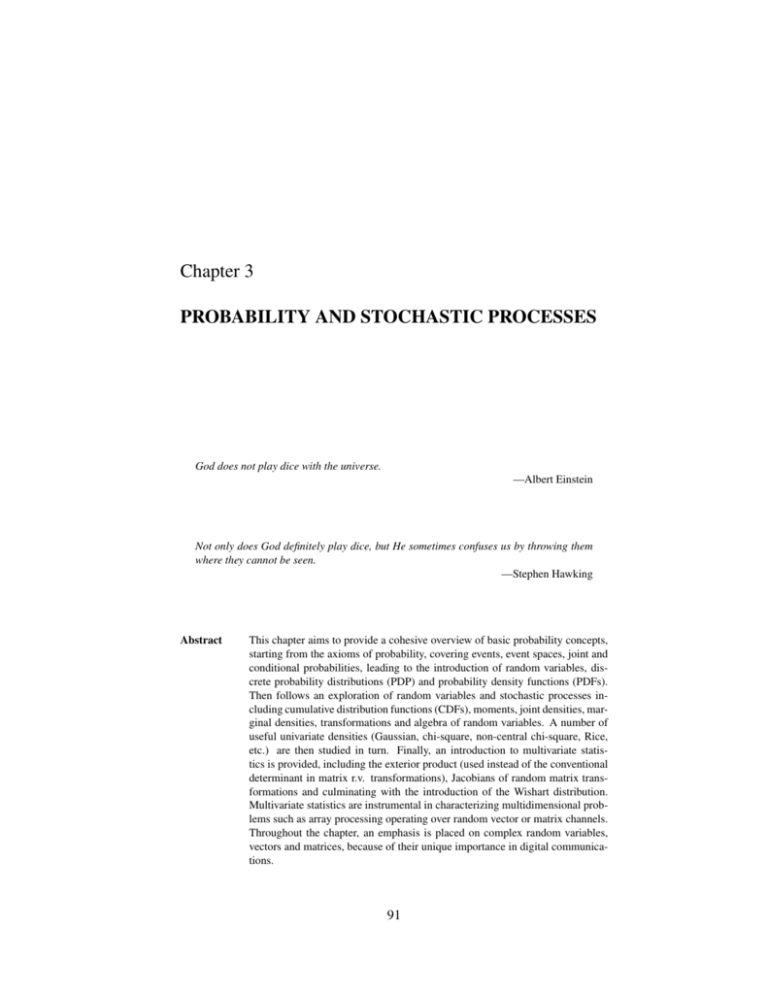

Consider again example 3.3. We have found that the variable X has a discrete probability distribution of the form (3.28). Figure 3.1 shows histograms

of P (X = x) for increasing values of N . It can be seen that as N gets large,

the histogram approaches a smooth curve and its “tails” spread out. Ultimately,

if we let N tend towards infinity, we will observe a continuous curve like the

one in Figure 3.1d.


The underlying r.v. is of the continuous variety, and Figure 3.1d is a graphical representation of its probability density function (PDF). While a PDF

cannot be tabulated like a discrete probability distribution, it certainly can be

expressed as a mathematical function. In the case of Figure 3.1d, the PDF is expressed

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma_X} e^{-\frac{x^2}{2\sigma_X^2}}, \tag{3.29}$$

where $\sigma_X^2$ is the variance of the r.v. X. This density function is the all-important normal or Gaussian distribution. See the Appendix for a derivation of this PDF which ties in with the binomial distribution.

[Figure 3.1: four panels, (a) N = 8, (b) N = 16, (c) N = 32, (d) Gaussian PDF; horizontal axis x, vertical axis P (X = x).]

Figure 3.1. Histograms of the discrete probability function of the binomial distributions with (a) N = 8; (b) N = 16; (c) N = 32; and (d) a Gaussian PDF with the same mean and variance as the binomial distribution with N = 32.

A PDF has two important properties:

1. fX (x) ≥ 0 for all x, since a negative probability makes no sense;

2. $\int_{-\infty}^{\infty} f_X(x)\,dx = 1$, which is the continuous version of postulate 3 from section 2.


It is often of interest to determine the probability that an r.v. takes on a value which is smaller than a predetermined threshold. Mathematically, this is expressed

$$F_X(x) = P(X \le x) = \int_{-\infty}^{x} f_X(\alpha)\,d\alpha, \quad -\infty < x < \infty, \tag{3.30}$$

where fX (x) is the PDF of variable X and FX (x) is its cumulative distribution function (CDF). A CDF has three outstanding properties:

1. FX (−∞) = 0 (obvious from (3.30));

2. FX (∞) = 1 (a consequence of property 2 of PDFs);

3. FX (x) increases in a monotone fashion from 0 to 1 (from (3.30) and property 1 of PDFs).

Furthermore, the relation (3.30) can be inverted to yield

$$f_X(x) = \frac{dF_X(x)}{dx}. \tag{3.31}$$

It is also often useful to determine the probability that an r.v. X takes on a value falling in an interval [x1 , x2 ]. This can be easily accomplished using the PDF of X as follows:

$$\begin{aligned}
P(x_1 < X \le x_2) &= \int_{x_1}^{x_2} f_X(x)\,dx = \int_{-\infty}^{x_2} f_X(x)\,dx - \int_{-\infty}^{x_1} f_X(x)\,dx \\
&= F_X(x_2) - F_X(x_1).
\end{aligned} \tag{3.32}$$
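The relation (3.32) can be illustrated numerically for the zero-mean Gaussian PDF (3.29). In the sketch below, the closed-form CDF in terms of the error function is a standard result that is not derived in this section, the midpoint-rule integrator is a deliberately simple stand-in for a proper quadrature routine, and the interval endpoints are arbitrary.

```python
import math

def gaussian_pdf(x, sigma=1.0):
    """Zero-mean Gaussian PDF, as in (3.29)."""
    return math.exp(-x * x / (2 * sigma**2)) / (math.sqrt(2 * math.pi) * sigma)

def gaussian_cdf(x, sigma=1.0):
    """CDF of the zero-mean Gaussian, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2))))

def integrate(f, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

x1, x2 = -1.0, 2.0                             # arbitrary interval
by_cdf = gaussian_cdf(x2) - gaussian_cdf(x1)   # F_X(x2) - F_X(x1)
by_pdf = integrate(gaussian_pdf, x1, x2)       # integral of f_X from x1 to x2
print(by_cdf, by_pdf)                          # the two values agree closely
```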

This leads us to the following counter-intuitive observation. If the PDF is continuous and we wish to find P (X = x1 ), it is insightful to proceed as follows:

$$\begin{aligned}
P(X = x_1) &= \lim_{x_2 \to x_1} P(x_1 < X \le x_2) \\
&= \lim_{x_2 \to x_1} \int_{x_1}^{x_2} f_X(x)\,dx \\
&= f_X(x_1) \lim_{x_2 \to x_1} \int_{x_1}^{x_2} dx \\
&= f_X(x_1) \lim_{x_2 \to x_1} (x_2 - x_1) \\
&= 0.
\end{aligned} \tag{3.33}$$


Hence, the probability that X takes on exactly a given value x1 is null.

Intuitively, this is a consequence of the fact that X can take on an infinity of

values and the “sum” (integral) of the associated probabilities must be equal to

1.

However, if the PDF is not continuous, the above observation doesn’t necessarily hold. A discrete r.v., for example, not only has a discrete probability

distribution, but also a corresponding PDF. Since the r.v. can only take on a

finite number of values, its PDF is made up of Dirac impulses at the locations

of the said values.

For the binomial distribution, we have

$$f_X(x) = \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N - n} \delta(x - n), \tag{3.34}$$

and, for a general discrete r.v. with a sample space of N elements, we have

$$f_X(x) = \sum_{n=1}^{N} P(X = x_n)\,\delta(x - x_n). \tag{3.35}$$

Moments and characteristic functions

What exactly is the mean of a random variable? One intuitive answer is that

it is the “most likely” outcome of the underlying experiment. However, while

this is not far from the truth for well-behaved PDFs (which have a single peak

at, or in the vicinity of, their mean), it is misleading since the mean, in fact,

may not even be a part of the PDF’s support (i.e. its sample space or range of

possible values). Nonetheless, we refer to the mean of an r.v. as its expected value, denoted by the expectation operator ⟨·⟩. It is defined

$$\langle X \rangle = \mu_X = \int_{-\infty}^{\infty} x f_X(x)\,dx, \tag{3.36}$$

and it also happens to be the first moment of the r.v. X.

The expectation operator bears its name because it is indeed the best “educated guess” one can make a priori about the outcome of an experiment given the associated PDF. While it may indeed lie outside the set of allowable values of the r.v., it is the quantity that will on average minimize the squared error between X and its a priori estimate X̂ = ⟨X⟩. Mathematically, this is expressed

$$\langle X \rangle = \arg\min_{\hat{X}} \left\langle \left( X - \hat{X} \right)^2 \right\rangle. \tag{3.37}$$

(Were the absolute error |X − X̂| averaged instead, the minimizer would be the median rather than the mean.)

Although this is a circular definition, it is insightful, especially if we expand the right-hand side into its integral representation:

$$\langle X \rangle = \arg\min_{\hat{X}} \int_{-\infty}^{\infty} \left( \hat{X} - x \right)^2 f_X(x)\,dx. \tag{3.38}$$

For another angle on this concept, consider a series of N trials of the same experiment. If each trial is unaffected by the others, the outcomes are associated with a set of N independent and identically distributed (i.i.d.) r.v.’s X1 , . . . , XN . The average of these outcomes is itself an r.v. given by

$$Y = \sum_{n=1}^{N} \frac{X_n}{N}. \tag{3.39}$$

As N gets large, Y will tend towards the mean of the Xn ’s and in the limit

$$Y = \lim_{N \to \infty} \sum_{n=1}^{N} \frac{X_n}{N} = \langle X \rangle, \tag{3.40}$$

where ⟨X⟩ = ⟨X1⟩ = . . . = ⟨XN⟩. This is known in statistics as the weak law of large numbers.
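The convergence described by the law of large numbers can be observed in a short simulation. The sketch below assumes a fair die as the underlying experiment (so that the mean is 3.5) and fixes the random seed purely for repeatability; the function name and sample sizes are arbitrary choices.

```python
import random

def sample_mean(n, rng):
    """Average of n independent fair-die rolls, i.e. the r.v. Y of (3.39)."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n

rng = random.Random(0)  # fixed seed so that the run is repeatable
means = {n: sample_mean(n, rng) for n in (10, 1_000, 100_000)}

for n, y in means.items():
    # The distance to the true mean 3.5 tends to shrink as n grows.
    print(n, y, abs(y - 3.5))
```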

In general, the kth raw moment is defined as

$$\langle X^k \rangle = \int_{-\infty}^{\infty} x^k f_X(x)\,dx, \tag{3.41}$$

where the law of large numbers still holds since

$$\langle X^k \rangle = \int_{-\infty}^{\infty} x^k f_X(x)\,dx = \int_{-\infty}^{\infty} z f_Z(z)\,dz = \langle Z \rangle, \tag{3.42}$$

where fZ (z) is the PDF of $Z = X^k$.

In the same manner, we can find the expectation of any arbitrary function Y = g(X) as follows

$$\langle Y \rangle = \langle g(X) \rangle = \int_{-\infty}^{\infty} g(x) f_X(x)\,dx. \tag{3.43}$$

One such useful function is $Y = (X - \mu_X)^k$ where $\mu_X = \langle X \rangle$ is the mean of X.

Definition 3.16. The expectation of $Y = (X - \mu_X)^k$ is the kth central moment of the r.v. X.


From (3.43), we have

$$\langle Y \rangle = \left\langle (X - \mu_X)^k \right\rangle = \int_{-\infty}^{\infty} (x - \mu_X)^k f_X(x)\,dx. \tag{3.44}$$

Definition 3.17. The 2nd central moment $\langle (X - \mu_X)^2 \rangle$ is called the variance and its square root is the standard deviation.

The variance of X, denoted $\sigma_X^2$, is given by

$$\sigma_X^2 = \int_{-\infty}^{\infty} (x - \mu_X)^2 f_X(x)\,dx, \tag{3.45}$$

and it is useful because (like the standard deviation), it provides a measure of the degree of dispersion of the r.v. X about its mean.

Expanding the quadratic form $(x - \mu_X)^2$ in (3.45) and integrating term-by-term, we find

$$\begin{aligned}
\sigma_X^2 &= \langle X^2 \rangle - 2\mu_X \langle X \rangle + \mu_X^2 \\
&= \langle X^2 \rangle - 2\mu_X^2 + \mu_X^2 \\
&= \langle X^2 \rangle - \mu_X^2.
\end{aligned} \tag{3.46}$$
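The identity (3.46) is easy to verify on a discrete example, where sums over the sample space replace the integrals. The sketch below assumes a fair die; the helper name `expect` is illustrative.

```python
from fractions import Fraction

values = range(1, 7)
p = Fraction(1, 6)   # fair die: every face is equally likely

def expect(g):
    """Expectation of g(X) for the fair-die r.v. X (discrete analogue of (3.43))."""
    return sum(g(x) * p for x in values)

mean = expect(lambda x: x)                         # first moment
var_central = expect(lambda x: (x - mean) ** 2)    # second central moment, as in (3.45)
var_shortcut = expect(lambda x: x * x) - mean**2   # second raw moment minus squared mean, (3.46)

print(mean, var_central, var_shortcut)  # 7/2 35/12 35/12
```

Note that the mean 7/2 is not itself a possible outcome of the die roll, which illustrates the earlier remark that the mean need not belong to the support.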

In deriving the above, we have implicitly exploited two of the properties of the expectation operator:

Property 3.1. The expectation of a sum, where each term may or may not involve the same random variable, is equal to the sum of the expectations, i.e.

$$\langle X + Y \rangle = \langle X \rangle + \langle Y \rangle,$$

$$\langle X + X^2 \rangle = \langle X \rangle + \langle X^2 \rangle.$$

Property 3.2. Given the expectation of the product of an r.v. by a deterministic quantity α, the said quantity can be removed from the expectation, i.e.

$$\langle \alpha X \rangle = \alpha \langle X \rangle.$$

These two properties stem readily from (3.43) and the properties of integrals.

Theorem 3.1. The expectation of a product of random variables is equal to the product of expectations, i.e.

$$\langle XY \rangle = \langle X \rangle \langle Y \rangle,$$

if the two variables X and Y are independent. (The converse does not hold in general: two uncorrelated variables satisfy this relation without necessarily being independent.)


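Proof. We can readily generalize (3.43) for the multivariable case as follows:

$$\langle g(X_1, X_2, \cdots, X_N) \rangle = \underbrace{\int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty}}_{N\text{-fold}} g(x_1, x_2, \cdots, x_N)\, f_{X_1, \cdots, X_N}(x_1, \cdots, x_N)\,dx_1 \cdots dx_N. \tag{3.47}$$

Therefore, we have

$$\langle XY \rangle = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x y f_{X,Y}(x, y)\,dx\,dy, \tag{3.48}$$

where, if and only if X and Y are independent, the density factors to yield

$$\begin{aligned}
\langle XY \rangle &= \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x y f_X(x) f_Y(y)\,dx\,dy \\
&= \int_{-\infty}^{\infty} x f_X(x)\,dx \int_{-\infty}^{\infty} y f_Y(y)\,dy \\
&= \langle X \rangle \langle Y \rangle.
\end{aligned} \tag{3.49}$$

The above theorem can be readily extended to the product of N independent variables or N expressions of independent random variables by applying (3.47).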

Definition 3.18. The characteristic function (C. F.) of an r.v. X is defined

$$\phi_X(jt) = \left\langle e^{jtX} \right\rangle = \int_{-\infty}^{\infty} e^{jtx} f_X(x)\,dx, \tag{3.50}$$

where $j = \sqrt{-1}$.

It can be seen that the integral is in fact an inverse Fourier transform in the variable t. It follows that the inverse of (3.50) is

$$f_X(x) = \frac{1}{2\pi} \int_{-\infty}^{\infty} \phi_X(jt) e^{-jtx}\,dt. \tag{3.51}$$

Characteristic functions play a role similar to the Fourier transform. In other

words, some operations are easier to perform in the characteristic function domain than in the PDF domain. For example, there is a direct relationship between the C. F. and the moments of a random variable, allowing the latter to be

obtained without integration (if the C. F. is known).


The said relationship involves evaluation of the kth derivative of the C. F. at t = 0, i.e.

$$\langle X^k \rangle = (-j)^k \left. \frac{d^k \phi_X(jt)}{dt^k} \right|_{t=0}. \tag{3.52}$$

As will be seen later, characteristic functions are also useful in determining

the PDF of sums of random variables. Furthermore, since the crossing into the

C. F. domain is, in fact, an inverse Fourier transform, all properties of Fourier

transforms hold.
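The moment relation (3.52) can be tried out numerically. The sketch below assumes the standard closed-form C. F. of a Gaussian r.v. with mean mu and standard deviation sigma, which is not derived in this section, and replaces the derivatives at t = 0 with central finite differences; the function names, the step size h and the parameter values are illustrative choices.

```python
import cmath

def cf_gaussian(t, mu, sigma):
    """C.F. of a Gaussian r.v. with mean mu and standard deviation sigma (assumed form)."""
    return cmath.exp(1j * mu * t - 0.5 * (sigma * t) ** 2)

def moment_from_cf(cf, k, h=1e-3):
    """k-th raw moment (k = 1 or 2) via (3.52), using central differences at t = 0."""
    if k == 1:
        deriv = (cf(h) - cf(-h)) / (2 * h)
    elif k == 2:
        deriv = (cf(h) - 2 * cf(0.0) + cf(-h)) / h**2
    else:
        raise ValueError("this sketch only handles k = 1 or k = 2")
    return ((-1j) ** k * deriv).real

mu, sigma = 1.5, 2.0

def cf(t):
    return cf_gaussian(t, mu, sigma)

m1 = moment_from_cf(cf, 1)   # close to mu
m2 = moment_from_cf(cf, 2)   # close to mu**2 + sigma**2
print(m1, m2)
```

No integration over the PDF is needed, which is the practical appeal of the C. F. route to the moments.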

Functions of one r.v.

Consider an r.v. Y defined as a function of another r.v. X, i.e.

Y = g(X).

(3.53)

If this function is uniquely invertible (and, so that the inequality below is preserved, monotonically increasing), we have $X = g^{-1}(Y)$ and

$$F_Y(y) = P(Y \le y) = P(g(X) \le y) = P(X \le g^{-1}(y)) = F_X(g^{-1}(y)). \tag{3.54}$$

Differentiating with respect to y allows us to relate the PDFs of X and Y :

$$\begin{aligned}
f_Y(y) &= \frac{d}{dy} F_X\left( g^{-1}(y) \right) \\
&= \frac{d}{d\left( g^{-1}(y) \right)} F_X\left( g^{-1}(y) \right) \frac{d\,g^{-1}(y)}{dy} \qquad (3.55) \\
&= f_X\left( g^{-1}(y) \right) \frac{d\,g^{-1}(y)}{dy}. \qquad (3.56)
\end{aligned}$$

In the general case, the equation Y = g(X) may have more than one root. If there are N real roots denoted x1 (y), x2 (y), . . . , xN (y), the PDF of Y is given by

$$f_Y(y) = \sum_{n=1}^{N} f_X(x_n(y)) \left| \frac{\partial x_n(y)}{\partial y} \right|. \tag{3.57}$$
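As a sketch of how (3.57) is applied when there are two roots, consider the assumed example Y = X², with X a zero-mean, unit-variance Gaussian r.v. The roots are +√y and −√y, each with the same derivative magnitude 1/(2√y). The code compares the integral of the resulting PDF over an arbitrary interval with the same probability computed directly from the Gaussian CDF (written with the error function, a standard result not derived here).

```python
import math

def f_x(x):
    """Standard Gaussian PDF, i.e. (3.29) with unit variance."""
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)

def f_y(y):
    """PDF of Y = X**2 from (3.57), with the two roots +sqrt(y) and -sqrt(y)."""
    if y <= 0:
        return 0.0
    root = math.sqrt(y)
    dx_dy = 1.0 / (2.0 * root)   # magnitude of the derivative, identical for both roots
    return (f_x(root) + f_x(-root)) * dx_dy

def integrate(f, a, b, steps=20_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

a, b = 0.5, 2.0   # arbitrary interval away from the singularity at y = 0
from_pdf = integrate(f_y, a, b)
# Direct route: a <= X^2 <= b means sqrt(a) <= |X| <= sqrt(b).
direct = math.erf(math.sqrt(b / 2)) - math.erf(math.sqrt(a / 2))
print(from_pdf, direct)   # the two values agree closely
```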

Example 3.4

Let Y = AX + B where A and B are arbitrary constants (with A ≠ 0). Therefore, we have X = (Y − B)/A and

$$\frac{\partial}{\partial y} \left( \frac{y - B}{A} \right) = \frac{1}{A}.$$

Taking the absolute value as in (3.57), it follows that

$$f_Y(y) = \frac{1}{|A|} f_X\left( \frac{y - B}{A} \right). \tag{3.58}$$

Suppose that X follows a Gaussian distribution with a mean of zero and a variance of $\sigma_X^2$. Hence,

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma_X} e^{-\frac{x^2}{2\sigma_X^2}}, \tag{3.59}$$

and

$$f_Y(y) = \frac{1}{\sqrt{2\pi}\,\sigma_X |A|} e^{-\frac{(y - B)^2}{2 A^2 \sigma_X^2}}. \tag{3.60}$$

The r.v. Y is still a Gaussian variate, but its variance is $\sigma_X^2 A^2$ and its mean is B.
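The conclusion of Example 3.4 can be checked with a short simulation. The sketch below draws zero-mean Gaussian samples, applies Y = AX + B and estimates the mean and variance of Y; the parameter values, the sample size and the fixed seed are arbitrary assumptions.

```python
import random

rng = random.Random(1)           # fixed seed for repeatability
sigma_x, A, B = 2.0, 3.0, 5.0    # illustrative values

xs = [rng.gauss(0.0, sigma_x) for _ in range(100_000)]
ys = [A * x + B for x in xs]

mean_y = sum(ys) / len(ys)
var_y = sum((y - mean_y) ** 2 for y in ys) / len(ys)

print(mean_y)   # expected to be close to B
print(var_y)    # expected to be close to A**2 * sigma_x**2
```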

Pairs of random variables

In performing multiple related experiments or trials, it becomes necessary to manipulate multiple r.v.’s. Consider two r.v.’s X1 and X2 which stem from the same experiment or from twin related experiments. In keeping with the definition (3.30), the probability that X1 ≤ x1 and X2 ≤ x2 is determined from the joint CDF

$$P(X_1 \le x_1, X_2 \le x_2) = F_{X_1, X_2}(x_1, x_2). \tag{3.61}$$

Furthermore, the joint PDF can be obtained by differentiation:

$$f_{X_1, X_2}(x_1, x_2) = \frac{\partial^2 F_{X_1, X_2}(x_1, x_2)}{\partial x_1 \partial x_2}. \tag{3.62}$$

Following the concepts presented in section 2 for probabilities, the PDF of the r.v. X1 irrespective of X2 is termed the marginal PDF of X1 and is obtained by “averaging out” the contribution of X2 to the joint PDF, i.e.

$$f_{X_1}(x_1) = \int_{-\infty}^{\infty} f_{X_1, X_2}(x_1, x_2)\,dx_2. \tag{3.63}$$

According to Bayes’ rule, the conditional PDF of X1 given that X2 = x2 is given by

$$f_{X_1 | X_2}(x_1 | x_2) = \frac{f_{X_1, X_2}(x_1, x_2)}{f_{X_2}(x_2)}. \tag{3.64}$$


Transformation of two random variables

Given 2 r.v.’s X1 and X2 with joint PDF fX1 ,X2 (x1 , x2 ), let Y1 = g1 (X1 , X2 ), Y2 = g2 (X1 , X2 ), where g1 (X1 , X2 ) and g2 (X1 , X2 ) are 2 arbitrary single-valued continuous functions of X1 and X2 .

Let us also assume that g1 and g2 are jointly invertible, i.e.

X1 = h1 (Y1 , Y2 ),

X2 = h2 (Y1 , Y2 ),

(3.65)

where h1 and h2 are also single-valued and continuous.

The application of the transformation defined by g1 and g2 amounts to a

change in the coordinate system. If we consider an infinitesimal rectangle of

dimensions ∆y1 ×∆y2 in the new system, it will in general be mapped through

the inverse transformation (defined by h1 and h2 ) to a four-sided curved region

in the original system (see Figure 3.2). However, since the region is infinitesimally small, the curvature induced by the transformation can be abstracted out.

We are left with a parallelogram and we wish to calculate its area.

[Figure 3.2 labels: axes y1 , y2 ; sides ∆y1 , ∆y2 and ∆x1 , ∆x2 ; tangential vectors v, w; angle α; mappings g1 , g2 and h1 , h2 .]

Figure 3.2. Coordinate system change under transformation (g1 , g2 ).

This can be performed by relying on the tangential vectors v and w. The sought-after area is then given by

$$\begin{aligned}
A = \Delta x_1 \Delta x_2 &= \|v\|_2 \|w\|_2 \sin\alpha\, \Delta y_1 \Delta y_2 \\
&= \det \begin{bmatrix} v[1] & w[1] \\ v[2] & w[2] \end{bmatrix} \Delta y_1 \Delta y_2,
\end{aligned} \tag{3.66}$$

where the scaling factor is denoted

$$J = \left| \frac{A}{\Delta y_1 \Delta y_2} \right| = \left| \det \begin{bmatrix} v[1] & w[1] \\ v[2] & w[2] \end{bmatrix} \right|, \tag{3.67}$$

and is the Jacobian J of the transformation. The Jacobian embodies the scaling effects of the transformation and ensures that the new PDF will integrate to unity.


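Thus, the joint PDF of Y1 and Y2 is given by

$$f_{Y_1, Y_2}(y_1, y_2) = J f_{X_1, X_2}\left( h_1(y_1, y_2), h_2(y_1, y_2) \right), \tag{3.68}$$

where (h1 , h2 ) is the inverse of (g1 , g2 ) as defined in (3.65).

From the tangential vectors, the Jacobian is defined

$$J = \left| \det \begin{bmatrix} \dfrac{\partial h_1(y_1, y_2)}{\partial y_1} & \dfrac{\partial h_1(y_1, y_2)}{\partial y_2} \\[2ex] \dfrac{\partial h_2(y_1, y_2)}{\partial y_1} & \dfrac{\partial h_2(y_1, y_2)}{\partial y_2} \end{bmatrix} \right| = \left| \det \left( \frac{\partial x}{\partial y} \right) \right|, \tag{3.69}$$

where $x = [x_1, x_2]^T = [h_1(y_1, y_2), h_2(y_1, y_2)]^T$ and $y = [y_1, y_2]^T$, so that the first column of the matrix is v and the second is w.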

In the above, it has been assumed that the mapping between the original and the new coordinate system was one-to-one. What if several regions in the original domain map to the same rectangular area in the new domain? This implies that the system

$$\begin{aligned}
y_1 &= g_1(x_1, x_2), \\
y_2 &= g_2(x_1, x_2),
\end{aligned} \tag{3.70}$$

has several solutions $\left( x_1^{(1)}, x_2^{(1)} \right), \left( x_1^{(2)}, x_2^{(2)} \right), \ldots, \left( x_1^{(K)}, x_2^{(K)} \right)$. Then all these solutions (or roots) contribute to the new PDF, i.e.

$$f_{Y_1, Y_2}(y_1, y_2) = \sum_{k=1}^{K} f_{X_1, X_2}\left( x_1^{(k)}, x_2^{(k)} \right) J\left( x_1^{(k)}, x_2^{(k)} \right). \tag{3.71}$$
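A concrete instance of the two-variable transformation may help. The sketch below assumes, purely for illustration, two independent standard Gaussian r.v.'s and a change to polar coordinates, with Y1 the radius and Y2 the angle. The Jacobian is built from finite-difference estimates of the tangential vectors v and w, as in (3.67), and is expected to be close to the radius r.

```python
import math

def h(y1, y2):
    """Inverse transformation for polar coordinates: (r, theta) -> (x1, x2)."""
    return y1 * math.cos(y2), y1 * math.sin(y2)

def jacobian(inverse_map, y1, y2, eps=1e-6):
    """Magnitude of det [v w], with v and w estimated by central differences."""
    x_p1, x_m1 = inverse_map(y1 + eps, y2), inverse_map(y1 - eps, y2)
    x_p2, x_m2 = inverse_map(y1, y2 + eps), inverse_map(y1, y2 - eps)
    v = [(x_p1[i] - x_m1[i]) / (2 * eps) for i in range(2)]  # tangent along y1
    w = [(x_p2[i] - x_m2[i]) / (2 * eps) for i in range(2)]  # tangent along y2
    return abs(v[0] * w[1] - v[1] * w[0])

def f_x(x1, x2):
    """Joint PDF of two independent standard Gaussian r.v.'s (assumed example)."""
    return math.exp(-0.5 * (x1 * x1 + x2 * x2)) / (2 * math.pi)

def f_y(r, theta):
    """Joint PDF of (R, Theta): Jacobian times f_X evaluated at the inverse map."""
    return jacobian(h, r, theta) * f_x(*h(r, theta))

r, theta = 1.3, 0.7   # arbitrary test point
print(jacobian(h, r, theta))                               # close to r
print(f_y(r, theta), r * math.exp(-0.5 * r * r) / (2 * math.pi))
```

The resulting density depends on r only, which is the familiar Rayleigh form divided by 2π.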

Multiple random variables

Transformation of N random variables

It is often the case (and especially in multidimensional signal processing

problems, which constitute a main focal point of the present book) that a relatively large set of random variables must be considered collectively. Such a set of variables can then be regarded as representing the state of a single stochastic process.

Given an arbitrary number N of random variables X1 , . . . , XN which collectively behave according to joint PDF fX1 ,X2 ,··· ,XN (x1 , x2 , . . . , xN ), a transformation is defined by the system of equations

y = g(x),

(3.72)

where x and y are N × 1 vectors, and g is an N × 1 vector function of vector

x.

The reverse transformation may have one or more solutions, i.e.

$$\mathbf{x}^{(k)} = g_k^{-1}(\mathbf{y}), \quad k \in \{1, 2, \ldots, K\}, \tag{3.73}$$

where K is the number of solutions.


The Jacobian corresponding to the kth solution is given by

$$J_k = \left| \det\left( \frac{\partial g_k^{-1}(\mathbf{y})}{\partial \mathbf{y}} \right) \right|, \tag{3.74}$$

which is simply a generalization (with a slight change in notation) of (3.69).

This directly leads to

$$f_{\mathbf{y}}(\mathbf{y}) = \sum_{k=1}^{K} f_{\mathbf{x}}\left(g_k^{-1}(\mathbf{y})\right) J_k. \tag{3.75}$$
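The sum over roots in (3.75) can be illustrated with the simplest multi-root case, N = 1 and Y = X², which has K = 2 roots. The sketch below is only an illustration under assumptions not taken from the text: X is taken to be a zero-mean, unit-variance Gaussian, and the function names are hypothetical. The result is compared with the known closed form of the chi-square PDF with one degree of freedom.

```python
import math


def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """PDF of a real Gaussian r.v. with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / (math.sqrt(2.0 * math.pi) * sigma)


def pdf_of_square(y, f_x=gaussian_pdf):
    """PDF of Y = X**2 via the sum over the K = 2 roots x = +sqrt(y) and x = -sqrt(y)."""
    if y <= 0.0:
        return 0.0  # no real root, hence no contribution
    root = math.sqrt(y)
    jacobian = 1.0 / (2.0 * root)  # |d g_k^{-1}(y) / dy|, identical for both roots
    return sum(f_x(x_k) * jacobian for x_k in (root, -root))


def chi_square_1dof_pdf(y):
    """Closed form used as a cross-check (assumes X is zero-mean, unit-variance Gaussian)."""
    return math.exp(-y / 2.0) / math.sqrt(2.0 * math.pi * y) if y > 0.0 else 0.0


if __name__ == "__main__":
    for y in (0.1, 0.7, 2.5):
        print(y, pdf_of_square(y), chi_square_1dof_pdf(y))
```

Passing a different `f_x` gives the PDF of the square of any other continuous r.v., since only the roots and the Jacobian depend on the transformation.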

Joint characteristic functions

Given a set of N random variables characterized by a joint PDF, it is relevant

to define a corresponding joint characteristic function.

Definition 3.19. The joint characteristic function of a set of r.v.’s X1 , X2 ,

. . . , XN with joint PDF fX1,X2,···,XN (x1 , x2 , . . . , xN ) is given by

$$\phi_{X_1,X_2,\cdots,X_N}(t_1, t_2, \ldots, t_N) = \left\langle e^{j(t_1 x_1 + t_2 x_2 + \cdots + t_N x_N)} \right\rangle = \underbrace{\int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty}}_{N\text{-fold}} e^{j(t_1 x_1 + \cdots + t_N x_N)} f_{X_1,\cdots,X_N}(x_1, \ldots, x_N) \, dx_1 \cdots dx_N.$$

Algebra of random variables

Sum of random variables

Suppose we want to characterize an r.v. which is defined as the sum of two

other independent r.v.’s, i.e.

Y = X1 + X2 .

(3.76)

One way to attack this problem is to fix one r.v., say X2 , and treat this as a

transformation from X1 to Y . Given X2 = x2 , we have

$$Y = g(X_1), \tag{3.77}$$

where

$$g(X_1) = X_1 + x_2, \tag{3.78}$$

and

$$g^{-1}(Y) = Y - x_2. \tag{3.79}$$


It follows that

$$f_{Y|X_2}(y|x_2) = f_{X_1}(y - x_2) \frac{\partial g^{-1}(y)}{\partial y} = f_{X_1}(y - x_2). \tag{3.80}$$

Furthermore, we know that

$$f_Y(y) = \int_{-\infty}^{\infty} f_{Y,X_2}(y, x_2) \, dx_2 = \int_{-\infty}^{\infty} f_{Y|X_2}(y|x_2) f_{X_2}(x_2) \, dx_2 \tag{3.81}$$

which, by substituting (3.80), becomes

$$f_Y(y) = \int_{-\infty}^{\infty} f_{X_1}(y - x_2) f_{X_2}(x_2) \, dx_2. \tag{3.82}$$

This is the general formula for the sum of two independent r.v.’s and it can

be observed that it is in fact a Fourier convolution of the two underlying PDFs.
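As a quick numerical illustration of the convolution formula for the sum of two independent r.v.'s, the sketch below evaluates the integral with a midpoint rule. The choice of two U(0, 1) variables, the function names and the step count are assumptions made for the example only; for that choice the result is the well-known triangular PDF on [0, 2].

```python
def uniform_pdf(x, a=0.0, b=1.0):
    """PDF of a U(a, b) random variable."""
    return 1.0 / (b - a) if a <= x <= b else 0.0


def pdf_of_sum(y, f_x1, f_x2, lo, hi, steps=20000):
    """Midpoint-rule approximation of the integral of f_X1(y - x2) f_X2(x2) over [lo, hi].

    [lo, hi] must cover the support of f_X2.
    """
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x2 = lo + (i + 0.5) * dx
        total += f_x1(y - x2) * f_x2(x2)
    return total * dx


if __name__ == "__main__":
    for y in (0.5, 1.0, 1.5):
        print(y, pdf_of_sum(y, uniform_pdf, uniform_pdf, 0.0, 1.0))
```

The same routine applies to any pair of independent continuous r.v.'s, provided the integration interval covers the support of the second PDF.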

It is common knowledge that a convolution becomes a simple multiplication in the Fourier transform domain. The same principle applies here with

characteristic functions and it is easily demonstrated.

Consider a sum of N independent random variables:

$$Y = \sum_{n=1}^{N} X_n. \tag{3.83}$$

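The characteristic function of Y is given by

$$\phi_Y(jt) = \left\langle e^{jtY} \right\rangle = \left\langle e^{jt \sum_{n=1}^{N} X_n} \right\rangle. \tag{3.84}$$

Assuming that the Xn's are independent, we have

$$\phi_Y(jt) = \left\langle \prod_{n=1}^{N} e^{jtX_n} \right\rangle = \prod_{n=1}^{N} \left\langle e^{jtX_n} \right\rangle = \prod_{n=1}^{N} \phi_{X_n}(jt). \tag{3.85}$$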

Therefore, the characteristic function of a sum of independent r.v.'s is the product of the constituent C.F.'s. The corresponding PDF is obtainable via the Fourier transform

$$f_Y(y) = \frac{1}{2\pi} \int_{-\infty}^{\infty} \prod_{n=1}^{N} \phi_{X_n}(jt) \, e^{-jyt} \, dt. \tag{3.86}$$


What if the random variables are not independent and exhibit correlation?

Consider again the case of two random variables; this time, the problem is

conveniently addressed by an adequate joint transformation. Let

$$Y = g_1(X_1, X_2) = X_1 + X_2, \tag{3.87}$$

$$Z = g_2(X_1, X_2) = X_2, \tag{3.88}$$

where Z is not a useful r.v. per se, but was included to allow a 2 × 2 transformation.

The corresponding Jacobian is

$$J = \begin{vmatrix} 1 & 0 \\ -1 & 1 \end{vmatrix} = 1. \tag{3.89}$$

Hence, we have

fY,Z (y, z) = fX1 ,X2 (y − z, z),

(3.90)

and we can find the marginal PDF of Y with the usual integration procedure, i.e.

$$f_Y(y) = \int_{-\infty}^{\infty} f_{Y,Z}(y, z) \, dz = \int_{-\infty}^{\infty} f_{X_1,X_2}(y - x_2, x_2) \, dx_2. \tag{3.91}$$

Products of random variables

A similar approach applies to products of r.v.’s. Consider the following

product of two r.v.’s:

Z = X1 X2 .

(3.92)

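We can again fix X2 and obtain the conditional PDF of Z given X2 = x2 as was done for the sum of two r.v.'s. Given that g(X1) = X1 x2 and g−1(Z) = Z/x2, this approach yields

$$f_{Z|X_2}(z|x_2) = f_{X_1}\left(\frac{z}{x_2}\right) \left| \frac{\partial}{\partial z} \left(\frac{z}{x_2}\right) \right| = \frac{1}{|x_2|} f_{X_1}\left(\frac{z}{x_2}\right). \tag{3.93}$$

Therefore, we have

$$f_Z(z) = \int_{-\infty}^{\infty} f_{Z|X_2}(z|x_2) f_{X_2}(x_2) \, dx_2 = \int_{-\infty}^{\infty} \frac{1}{|x_2|} f_{X_1}\left(\frac{z}{x_2}\right) f_{X_2}(x_2) \, dx_2. \tag{3.94}$$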


This formula happens to be the Mellin convolution of fX1(x) and fX2(x) and it is related to the Mellin transform in the same way that Fourier convolution is related to the Fourier transform (see the overview of Mellin transforms in subsection 3).
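The Mellin convolution can be checked numerically in a case where the answer is known. The sketch below assumes, purely for illustration, two independent U(0, 1) variables, for which the PDF of the product is −ln z on (0, 1]. Function names and the step count are hypothetical, and the Jacobian factor is coded as 1/|x2| so that it remains valid if the second variable takes negative values.

```python
import math


def uniform01_pdf(x):
    """PDF of a U(0, 1) random variable."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def pdf_of_product(z, f_x1, f_x2, lo, hi, steps=200000):
    """Midpoint-rule approximation of the integral of f_X1(z / x2) f_X2(x2) / |x2| over [lo, hi].

    [lo, hi] must cover the support of f_X2; midpoints never land exactly on x2 = 0
    when lo = 0, so the division is safe in that case.
    """
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x2 = lo + (i + 0.5) * dx
        total += f_x1(z / x2) * f_x2(x2) / abs(x2)
    return total * dx


if __name__ == "__main__":
    for z in (0.1, 0.3, 0.9):
        print(z, pdf_of_product(z, uniform01_pdf, uniform01_pdf, 0.0, 1.0), -math.log(z))
```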

Hence, given a product of N independent r.v.'s

$$Z = \prod_{n=1}^{N} X_n, \tag{3.95}$$

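we can immediately conclude that the Mellin transform of fZ(z) is the product of the Mellin transforms of the constituent PDFs, i.e.

$$\mathcal{M}_{f_Z}(s) = \prod_{n=1}^{N} \mathcal{M}_{f_{X_n}}(s), \tag{3.96}$$

which reduces to $\left[\mathcal{M}_{f_X}(s)\right]^N$ when the Xn's share a common PDF fX.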

It follows that we can find the corresponding PDF through the inverse Mellin transform. This is expressed

$$f_Z(z) = \frac{1}{2\pi j} \int_{L_{\pm\infty}} \mathcal{M}_{f_Z}(s) \, z^{-s} \, ds, \tag{3.97}$$

where one particular integration path was chosen, but others are possible (see

chapter 2, section 3, subsection on Mellin transforms).

4.

Stochastic processes

Many observable parameters that are considered random in the world around

us are actually functions of time, e.g. ambient temperature and pressure, stock

market prices, etc. In the field of communications, actual useful message signals are typically considered random, although this might seem counterintuitive. The randomness here relates to the unpredictability that is inherent in

useful communications. Indeed, if it is known in advance what the message

is (the message is predetermined or deterministic), there is no point in transmitting it at all. On the other hand, the lack of any fore-knowledge about the

message implies that from the point-of-view of the receiver, the said message

is a random process or stochastic process. Moreover, the omnipresent white

noise in communication systems is also a random process, as well as the channel gains in multipath fading channels, as will be seen in chapter 4.

Definition 3.20. Given a random experiment with a sample space S, comprising outcomes λ1 , λ2 , . . . , λN , and a mapping between every possible outcome

λ and a set of corresponding functions of time X(t, λ), then this family of

functions, together with the mapping and the random experiment, constitutes a

stochastic process.


In fact, a stochastic process is a function of two variables; the outcome

variable λ which necessarily belongs to sample space S, and time t, which can

take any value between −∞ and ∞.

Definition 3.21. To a specific outcome λi in a stochastic process corresponds

a single time function X(t, λi ) = xi (t) called a member function or sample

function of the said process.

Definition 3.22. The set of all sample functions in a stochastic process is called

an ensemble.

We have established that for a given outcome λi , X(t, λi ) = xi (t) is a

predetermined function out of the ensemble of possible functions. If we fix

t to some value t1 instead of fixing the outcome λ, then X(t1 , λ) becomes

a random variable. At another time instant, we find another random variable X(t2 , λ) which is most likely correlated with X(t1 , λ). It follows that

a stochastic process can also be seen as a succession of infinitely many joint

random variables (one for each defined instant in time) with a given joint distribution. Any set of instances for these r.v.’s constitutes one of the member

functions. While this view is conceptually helpful, it is hardly practical for

manipulating processes given the infinite number of individual r.v.’s required.

Instead of continuous time, it is often sufficient to consider only a predetermined set of time instants t1 , t2 , . . . , tN . In that case, the set of random

variables X(t1 ), X(t2 ), . . . , X(tN ) becomes a random vector x with a PDF

fx (x), where x = [X(t1 ), X(t2 ), · · · , X(tN )]T . Likewise, a CDF can be

defined:

Fx (x) = P (X(t1 ) ≤ x1 , X(t2 ) ≤ x2 , . . . , X(tN ) ≤ xN ) .

(3.98)

Sometimes, it is more productive to consider how the process is generated.

Indeed, a large number of infinitely precise time functions can in some cases

result from a relatively simple random mechanism, and such simple models

are highly useful to the communications engineer, even when they are approximations or simplifications of reality. Such parametric modeling as a function

of one or more random variables can be expressed as follows:

X(t, λ) = g1 (Y1 , Y2 , · · · , YN , t),

(3.99)

where g1 is a function of the underlying r.v.’s Y1 , Y2 , . . . , YN and time t, and

λ = g2 (Y1 , Y2 , · · · , YN ),

(3.100)

where g2 is a function of the r.v.’s {Yn }, which together uniquely determine

the outcome λ.


Since at a specific time t a process is essentially a random variable, it follows that a mean can be defined as

$$\langle X(t) \rangle = \mu_X(t) = \int_{-\infty}^{\infty} x \, f_{X(t)}(x) \, dx. \tag{3.101}$$

Other important statistics are defined below.

Definition 3.23. The joint moment

$$R_{XX}(t_1, t_2) = \langle X(t_1) X(t_2) \rangle = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x_1 x_2 \, f_{X(t_1),X(t_2)}(x_1, x_2) \, dx_1 dx_2,$$

is known as the autocorrelation function.

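Definition 3.24. The joint central moment

$$\begin{aligned} \mu_{XX}(t_1, t_2) &= \left\langle \left(X(t_1) - \mu_X(t_1)\right) \left(X(t_2) - \mu_X(t_2)\right) \right\rangle \\ &= R_{XX}(t_1, t_2) - \mu_X(t_1) \mu_X(t_2), \end{aligned}$$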

is known as the autocovariance function.

The mean, autocorrelation, and autocovariance functions as defined above

are designated ensemble statistics since the averaging is performed with respect to the ensemble of possible functions at a specific time instant. Other

joint moments can be defined in a straightforward manner.

Example 3.5

Consider the stochastic process defined by

X(t) = sin(2πfc t + Φ),

(3.102)

where fc is a fixed frequency and Φ is a uniform random variable taking values

between 0 and 2π. This is an instance of a parametric description where the

process is entirely defined by a single random variable Φ.

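The ensemble mean is

$$\begin{aligned} \mu_X(t) &= \int_{-\infty}^{\infty} x \, f_{X(t)}(x) \, dx \\ &= \int_{0}^{2\pi} \sin(2\pi f_c t + \phi) f_\Phi(\phi) \, d\phi \\ &= \frac{1}{2\pi} \int_{0}^{2\pi} \sin(2\pi f_c t + \phi) \, d\phi \\ &= 0, \end{aligned} \tag{3.103}$$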


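and the autocorrelation function is

$$\begin{aligned} R_{XX}(t_1, t_2) &= \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x_1 x_2 \, f_{X(t_1),X(t_2)}(x_1, x_2) \, dx_1 dx_2 \\ &= \int_{0}^{2\pi} \sin(2\pi f_c t_1 + \phi) \sin(2\pi f_c t_2 + \phi) f_\Phi(\phi) \, d\phi \\ &= \frac{1}{4\pi} \left[ \int_{0}^{2\pi} \cos\left(2\pi f_c (t_2 - t_1)\right) d\phi - \int_{0}^{2\pi} \cos\left(2\pi f_c (t_1 + t_2) + 2\phi\right) d\phi \right] \\ &= \frac{1}{4\pi} \left[ 2\pi \cos\left(2\pi f_c (t_2 - t_1)\right) - 0 \right] \\ &= \frac{1}{2} \cos\left(2\pi f_c (t_2 - t_1)\right). \end{aligned} \tag{3.104}$$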
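The two ensemble statistics of Example 3.5 can be verified numerically by averaging over the phase Φ. The following is a minimal sketch under illustrative assumptions: the helper names, the value of fc and the time instants are arbitrary choices, and the phase integral is approximated by a midpoint rule, which is essentially exact for trigonometric integrands over a full period.

```python
import math


def ensemble_mean(t, fc, steps=1000):
    """Average of sin(2*pi*fc*t + phi) over phi uniform on [0, 2*pi)."""
    dphi = 2.0 * math.pi / steps
    total = 0.0
    for i in range(steps):
        phi = (i + 0.5) * dphi
        total += math.sin(2.0 * math.pi * fc * t + phi)
    return total * dphi / (2.0 * math.pi)


def ensemble_autocorr(t1, t2, fc, steps=1000):
    """Average of X(t1) X(t2) over phi uniform on [0, 2*pi)."""
    dphi = 2.0 * math.pi / steps
    total = 0.0
    for i in range(steps):
        phi = (i + 0.5) * dphi
        total += math.sin(2.0 * math.pi * fc * t1 + phi) * math.sin(2.0 * math.pi * fc * t2 + phi)
    return total * dphi / (2.0 * math.pi)


if __name__ == "__main__":
    fc, t1, t2 = 2.0, 0.30, 0.45
    print(ensemble_mean(t1, fc))
    print(ensemble_autocorr(t1, t2, fc), 0.5 * math.cos(2.0 * math.pi * fc * (t2 - t1)))
```

Shifting both time instants by the same amount leaves the second value unchanged, which is the dependence on t2 − t1 alone that the closed form predicts.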

Definition 3.25. If all statistical properties of a stochastic process are invariant

to a change in time origin, i.e. X(t, λ) is statistically equivalent to X(t +

T, λ), for any t, and T is any arbitrary time shift, then the process is said to be

stationary in the strict sense.

Stationarity in the strict sense implies that for any set of N time instants t1 ,

t2 , . . . , tN , the joint PDF of X(t1 ), X(t2 ), . . . , X(tN ) is identical to the joint

PDF of X(t1 + T ), X(t2 + T ), . . . , X(tN + T ). Equivalently, it can be said

that a process is strictly stationary if

$$\left\langle X^k(t) \right\rangle = \left\langle X^k(0) \right\rangle, \quad \text{for all } t, k. \tag{3.105}$$

However, a less stringent definition of stationarity is often useful, since strict

stationarity is both rare and difficult to determine.

Definition 3.26. If the ensemble mean and autocorrelation function of a stochastic process are invariant to a change of time origin, i.e.

$$\langle X(t) \rangle = \langle X(t + T) \rangle,$$

$$R_{XX}(t_1, t_2) = R_{XX}(t_1 + T, t_2 + T),$$

for any t, t1 , t2 and T is any arbitrary time shift, then the process is said to be

stationary in the wide-sense or wide-sense stationary.

In the field of communications, it is typically considered sufficient to satisfy

the wide-sense stationarity (WSS) conditions. Hence, the expression “stationary process” in much of the literature (and in this book!) usually implies WSS.

Gaussian processes constitute a special case of interest; indeed, a Gaussian

process that is WSS is also automatically stationary in the strict sense. This is


by virtue of the fact that all high-order moments of the Gaussian distribution

are functions solely of its mean and variance.

Since the point of origin t1 becomes irrelevant, the autocorrelation function

of a stationary process can be specified as a function of a single argument, i.e.

RXX (t1 , t2 ) = RXX (t1 − t2 ) = RXX (τ ),

(3.106)

where τ is the delay variable.

Property 3.3. The autocorrelation function of a stationary process is an even function (symmetric about τ = 0), i.e.

$$R_{XX}(\tau) = \langle X(t_1) X(t_1 - \tau) \rangle = \langle X(t_1 + \tau) X(t_1) \rangle = R_{XX}(-\tau). \tag{3.107}$$

Property 3.4. The autocorrelation function with τ = 0 yields the average power of the process, i.e.

$$R_{XX}(0) = \left\langle X^2(t) \right\rangle.$$

Property 3.5. (Cauchy-Schwarz inequality)

$$|R_{XX}(\tau)| \leq R_{XX}(0).$$

Property 3.6. If X(t) is periodic, then RXX (τ ) is also periodic, i.e.

X(t) = X(t + T ) → RXX (τ ) = RXX (τ + T ).

It is straightforward to verify whether a process is stationary or not if an

analytical expression is available for the process. For instance, the process of

example 3.5 is obviously stationary. Otherwise, typical data must be collected

and various statistical tests performed on it to determine stationarity.

Besides ensemble statistics, time statistics can be defined with respect to a

given member function.

Definition 3.27. The time-averaged mean of a stochastic process X(t) is given by

$$M_X = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} X(t, \lambda_i) \, dt,$$

where X(t, λi ) is a member function.

Definition 3.28. The time-averaged autocorrelation function is defined

$$\mathcal{R}_{XX}(\tau) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} X(t, \lambda_i) X(t + \tau, \lambda_i) \, dt.$$


Unlike the ensemble statistics, the time-averaged statistics are random variables; their actual values depend on which member function is used for time

averaging.

Obtaining ensemble statistics requires averaging over all member functions

of the sample space S. This requires either access to all said member functions,

or perfect knowledge of all the joint PDFs characterizing the process. This

is obviously not possible when observing a real-world phenomenon behaving

according to a stochastic process. The best that we can hope for is access to

time recordings of a small set of member functions. This makes it possible to

compute time-averaged statistics.

The question that arises is: can a time-averaged statistic be employed as an

approximation of the corresponding ensemble statistic?

Definition 3.29. Ergodicity is the property of a stochastic process by virtue of

which all its ensemble statistics are equal to its corresponding time-averaged

statistics.

Not all processes are ergodic. It is also very difficult to determine whether a

process is ergodic in the strict sense, as defined above. However, it is sufficient

in practice to determine a limited form of ergodicity, i.e. with respect to one

or two basic statistics. For example, a process X(t) is said to be ergodic in the mean if µX = MX. Similarly, a process is said to be ergodic in the autocorrelation if its ensemble autocorrelation function RXX(τ) equals its time-averaged autocorrelation function (Definition 3.28). An ergodic process is necessarily stationary, although stationarity does not imply ergodicity.
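To make the notion of ergodicity in the mean and in the autocorrelation concrete, the sketch below computes time averages over a single member function of the random-phase sinusoid of Example 3.5 and compares them with the ensemble values 0 and ½cos(2πfcτ). It rests on assumptions made only for this example: the limit T → ∞ is replaced by a finite window spanning an integer number of periods, one arbitrary phase is drawn by hand, and all names are hypothetical.

```python
import math


def member_function(t, fc, phase):
    """One sample function x_i(t) = sin(2*pi*fc*t + phase) for a fixed outcome (phase)."""
    return math.sin(2.0 * math.pi * fc * t + phase)


def time_averaged_mean(fc, phase, T, steps=100000):
    """Finite-window version of the time-averaged mean, by midpoint rule over [-T/2, T/2]."""
    dt = T / steps
    total = 0.0
    for i in range(steps):
        t = -T / 2.0 + (i + 0.5) * dt
        total += member_function(t, fc, phase)
    return total * dt / T


def time_averaged_autocorr(tau, fc, phase, T, steps=100000):
    """Finite-window version of the time-averaged autocorrelation at lag tau."""
    dt = T / steps
    total = 0.0
    for i in range(steps):
        t = -T / 2.0 + (i + 0.5) * dt
        total += member_function(t, fc, phase) * member_function(t + tau, fc, phase)
    return total * dt / T


if __name__ == "__main__":
    fc, phase, T = 2.0, 0.7, 50.0  # T spans 100 periods of the sinusoid
    print(time_averaged_mean(fc, phase, T))
    print(time_averaged_autocorr(0.1, fc, phase, T), 0.5 * math.cos(2.0 * math.pi * fc * 0.1))
```

Changing `phase` selects a different member function; for this process the time averages do not depend on that choice, which is what ergodicity in these two statistics requires.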

Joint and complex stochastic processes

Given two joint stochastic processes X(t) and Y (t), they are fully defined at

two respective sets of time instants {t1,1 , t1,2 , . . . , t1,M } and {t2,1 , t2,2 , . . . , t2,N }

by the joint PDF

fX(t1,1 ),X(t1,2 ),··· ,X(t1,M ),Y (t2,1 ),Y (t2,2 ),··· ,Y (t2,N ) (x1 , x2 , . . . , xM , y1 , y2 , . . . , yN ) .

Likewise, a number of useful joint statistics can be defined.

Definition 3.30. The joint moment

$$R_{XY}(t_1, t_2) = \langle X(t_1) Y(t_2) \rangle = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x y \, f_{X(t_1),Y(t_2)}(x, y) \, dx \, dy$$

is the cross-correlation function of the processes X(t) and Y (t).

Definition 3.31. The cross-covariance of X(t) and Y (t) is

$$\mu_{XY}(t_1, t_2) = \left\langle \left(X(t_1) - \mu_X(t_1)\right) \left(Y(t_2) - \mu_Y(t_2)\right) \right\rangle = R_{XY}(t_1, t_2) - \mu_X(t_1) \mu_Y(t_2).$$


X(t) and Y (t) are jointly wide sense stationary if

$$\langle X^m(t) Y^n(t) \rangle = \langle X^m(0) Y^n(0) \rangle, \quad \text{for all } t, m \text{ and } n. \tag{3.108}$$

If X(t) and Y (t) are individually and jointly WSS, then

$$R_{XY}(t_1, t_2) = R_{XY}(\tau), \tag{3.109}$$

$$\mu_{XY}(t_1, t_2) = \mu_{XY}(\tau). \tag{3.110}$$

Property 3.7. If X(t) and Y (t) are individually and jointly WSS, then

RXY (τ ) = RY X (−τ ),

µXY (τ ) = µY X (−τ ).

The above results from the fact that ⟨X(t)Y(t + τ)⟩ = ⟨X(t − τ)Y(t)⟩.

The two processes are said to be statistically independent if and only if

their joint distribution factors, i.e.

fX(t1 ),Y (t2 ) (x, y) = fX(t1 ) (x)fY (t2 ) (y).

(3.111)

They are uncorrelated if and only if µXY (τ ) = 0 and orthogonal if and

only if RXY (τ ) = 0.

Property 3.8. (triangle inequality)

$$|R_{XY}(\tau)| \leq \frac{1}{2} \left[ R_{XX}(0) + R_{YY}(0) \right].$$

Definition 3.32. A complex stochastic process is defined

Z(t) = X(t) + jY (t),

where X(t) and Y (t) are joint, real stochastic processes.

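Definition 3.33. The complex autocorrelation function (autocorrelation function of a complex process) is defined

$$\begin{aligned} R_{ZZ}(t_1, t_2) &= \frac{1}{2} \langle Z(t_1) Z^*(t_2) \rangle \\ &= \frac{1}{2} \left\langle [X(t_1) + jY(t_1)] [X(t_2) - jY(t_2)] \right\rangle \\ &= \frac{1}{2} \left[ R_{XX}(t_1, t_2) + R_{YY}(t_1, t_2) \right] + \frac{j}{2} \left[ R_{YX}(t_1, t_2) - R_{XY}(t_1, t_2) \right]. \end{aligned}$$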

Property 3.9. If Z(t) is WSS (implying that X(t) and Y (t) are individually

and jointly WSS), then

RZZ (t1 , t2 ) = RZZ (t1 − t2 ) = RZZ (τ ).

(3.112)


Definition 3.34. The complex crosscorrelation function of two complex random processes Z1(t) = X1(t) + jY1(t) and Z2(t) = X2(t) + jY2(t) is defined

$$\begin{aligned} R_{Z_1 Z_2}(t_1, t_2) &= \frac{1}{2} \langle Z_1(t_1) Z_2^*(t_2) \rangle \\ &= \frac{1}{2} \left[ R_{X_1 X_2}(t_1, t_2) + R_{Y_1 Y_2}(t_1, t_2) \right] + \frac{j}{2} \left[ R_{Y_1 X_2}(t_1, t_2) - R_{X_1 Y_2}(t_1, t_2) \right]. \end{aligned}$$

Property 3.10. If the real and imaginary parts of two complex stochastic processes Z1(t) and Z2(t) are individually and pairwise WSS, then

$$\begin{aligned} R_{Z_1 Z_2}^*(\tau) &= \frac{1}{2} \langle Z_1^*(t) Z_2(t - \tau) \rangle \\ &= \frac{1}{2} \langle Z_1^*(t + \tau) Z_2(t) \rangle \\ &= R_{Z_2 Z_1}(-\tau). \end{aligned} \tag{3.113}$$

Property 3.11. If the real and imaginary parts of a complex random process Z(t) are individually and jointly WSS, then

$$R_{ZZ}^*(\tau) = R_{ZZ}(-\tau). \tag{3.114}$$

Linear systems and power spectral densities

How does a linear system behave when a stochastic process is applied at

its input? Consider a linear, time-invariant system with impulse response h(t)

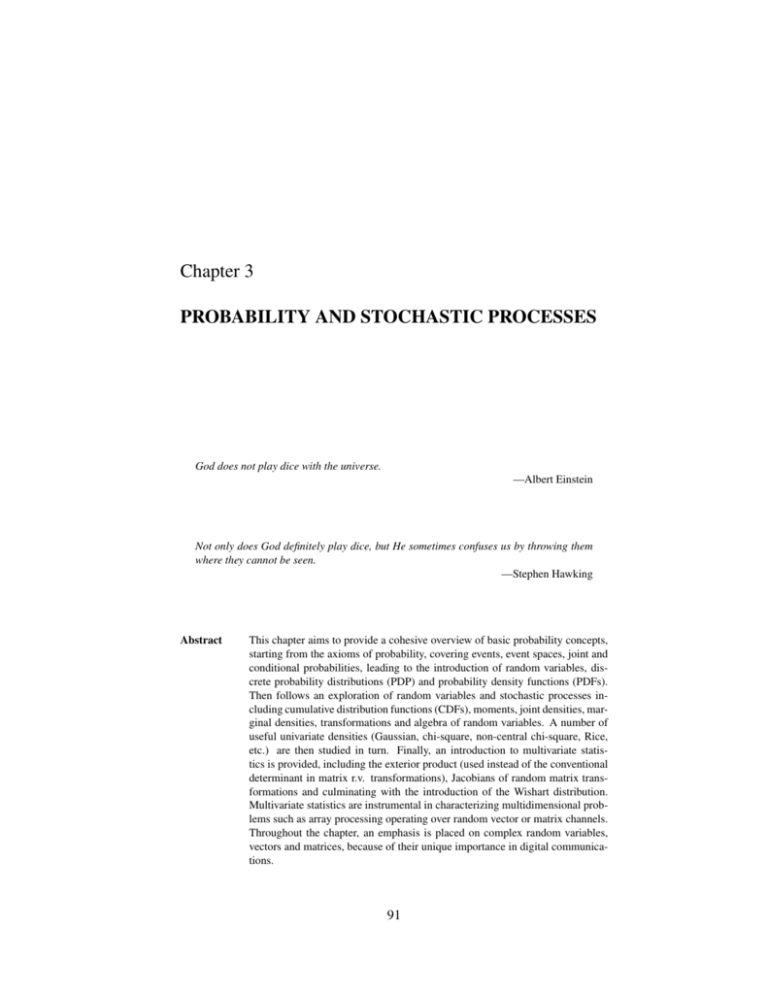

having for input a stationary random process X(t), as depicted in Figure 3.3. It

is logical to assume that its output will be another stationary stochastic process

Y (t) and that it will be defined by the standard convolution integral

$$Y(t) = \int_{-\infty}^{\infty} X(\tau) h(t - \tau) \, d\tau. \tag{3.115}$$

[Block diagram: X(t) → h(t) → Y(t)]

Figure 3.3. Linear system with impulse response h(t) and stochastic process X(t) at its input.

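The expectation of Y (t) is then given by

$$\langle Y(t) \rangle = \left\langle \int_{-\infty}^{\infty} X(\tau) h(t - \tau) \, d\tau \right\rangle = \int_{-\infty}^{\infty} \langle X(\tau) \rangle h(t - \tau) \, d\tau, \tag{3.116}$$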


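where, by virtue of the stationarity of X(t), the remaining expectation is actually a constant, and we have

$$\begin{aligned} \langle Y(t) \rangle &= \mu_X \int_{-\infty}^{\infty} h(t - \tau) \, d\tau \\ &= \mu_X \int_{-\infty}^{\infty} h(\tau) \, d\tau \\ &= \mu_X H(0), \end{aligned} \tag{3.117}$$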

where H(f ) is the Fourier transform of h(t).

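Let us now determine the crosscorrelation function of Y (t) and X(t). We have

$$\begin{aligned} R_{YX}(t, \tau) &= \langle Y(t) X^*(t - \tau) \rangle \\ &= \left\langle X^*(t - \tau) \int_{-\infty}^{\infty} X(t - a) h(a) \, da \right\rangle \\ &= \int_{-\infty}^{\infty} \langle X^*(t - \tau) X(t - a) \rangle h(a) \, da \\ &= \int_{-\infty}^{\infty} R_{XX}(\tau - a) h(a) \, da. \end{aligned} \tag{3.118}$$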

Since the right-hand side of the above is independent of t, it can be deduced

that X(t) and Y (t) are jointly stationary. Furthermore, the last line is also a

convolution integral, i.e.

RY X (τ ) = RXX (τ ) ∗ h(τ ).

(3.119)

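The autocorrelation function of Y (t) can be derived in the same fashion:

$$\begin{aligned} R_{YY}(\tau) &= \langle Y(t) Y^*(t - \tau) \rangle \\ &= \left\langle Y^*(t - \tau) \int_{-\infty}^{\infty} X(t - a) h(a) \, da \right\rangle \\ &= \int_{-\infty}^{\infty} \langle Y^*(t - \tau) X(t - a) \rangle h(a) \, da \\ &= \int_{-\infty}^{\infty} R_{YX}(a - \tau) h(a) \, da \\ &= R_{YX}(-\tau) * h(\tau) \\ &= R_{XX}(-\tau) * h(-\tau) * h(\tau) \\ &= R_{XX}(\tau) * h(-\tau) * h(\tau). \end{aligned} \tag{3.120}$$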

Given that X(t) at any given instant is a random variable, how can the spectrum of X(t) be characterized? Intuitively, it can be assumed that there is


a different spectrum X(f, λ) for every member function X(t, λ). However,

stochastic processes are in general infinite energy signals which implies that

their Fourier transform in the strict sense does not exist. In the time domain, a

process is characterized essentially through its mean and autocorrelation function. In the frequency domain, we resort to the power spectral density.

Definition 3.35. The power spectral density (PSD) of a random process X(t)

is a spectrum giving the average (in the ensemble statistic sense) power in the

process at every frequency f .

The PSD can be found simply by taking the Fourier transform of the autocorrelation function, i.e.

$$S_{XX}(f) = \int_{-\infty}^{\infty} R_{XX}(\tau) e^{-j2\pi f \tau} \, d\tau, \tag{3.121}$$

which obviously implies that the autocorrelation function can be found from the PSD SXX(f) by performing an inverse transform, i.e.

$$R_{XX}(\tau) = \int_{-\infty}^{\infty} S_{XX}(f) e^{j2\pi f \tau} \, df. \tag{3.122}$$

This bilateral relation is known as the Wiener-Khinchin theorem and its

proof is left as an exercise.
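The transform pair can be exercised numerically on a case with a known closed form. The sketch below assumes, for illustration only, the autocorrelation function RXX(τ) = e^(−|τ|), whose Fourier transform is 2/(1 + (2πf)²). The integration window, step count and function names are arbitrary choices, not part of the text.

```python
import cmath
import math


def autocorr(tau):
    """Assumed autocorrelation function R_XX(tau) = exp(-|tau|) (illustrative choice)."""
    return math.exp(-abs(tau))


def psd_from_autocorr(f, r_xx=autocorr, half_width=40.0, steps=200000):
    """Midpoint-rule approximation of the Fourier transform of r_xx over [-half_width, half_width]."""
    d_tau = 2.0 * half_width / steps
    total = 0j
    for i in range(steps):
        tau = -half_width + (i + 0.5) * d_tau
        total += r_xx(tau) * cmath.exp(-2j * math.pi * f * tau)
    return total * d_tau


def psd_closed_form(f):
    """Known Fourier transform of exp(-|tau|)."""
    return 2.0 / (1.0 + (2.0 * math.pi * f) ** 2)


if __name__ == "__main__":
    for f in (0.0, 0.25, 1.0):
        print(f, psd_from_autocorr(f), psd_closed_form(f))
```

The imaginary part of the numerical result is negligible, as expected for the transform of a real, even autocorrelation function.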

Definition 3.36. The cross power spectral density (CPSD) between two random processes X(t) and Y (t) is a spectrum giving the ensemble average product between every frequency component of X(t) and every corresponding frequency component of Y (t).

As could be expected, the CPSD can also be computed via a Fourier transform

$$S_{XY}(f) = \int_{-\infty}^{\infty} R_{XY}(\tau) e^{-j2\pi f \tau} \, d\tau. \tag{3.123}$$

By taking the Fourier transform of properties 3.10 and 3.11, we find the following:

Property 3.12.

$$S_{XY}^*(f) = S_{YX}(f).$$

Property 3.13.

$$S_{XX}^*(f) = S_{XX}(f).$$

The CPSD between the input process X(t) and the output process Y (t) of a linear system can be found by simply taking the Fourier transform of (3.119). Thus, we have

$$S_{YX}(f) = H(f) S_{XX}(f). \tag{3.124}$$


Likewise, the PSD of Y (t) is obtained by taking the Fourier transform of (3.120), which yields

$$S_{YY}(f) = H(f) H^*(f) S_{XX}(f) = |H(f)|^2 S_{XX}(f). \tag{3.125}$$

It is noteworthy that, since RXX(τ) is an even function, its Fourier transform SXX(f) is necessarily real. This is logical, since according to the definition, the PSD yields the average power at every frequency (and complex power makes no sense). It also follows that the average total power of X(t) is given by

$$P = \left\langle X^2(t) \right\rangle = R_{XX}(0) = \int_{-\infty}^{\infty} S_{XX}(f) \, df. \tag{3.126}$$

Discrete stochastic processes

If a stochastic process is bandlimited (i.e. its PSD is limited to a finite interval of frequencies) either because of its very nature or because it results from passing another process through a bandlimiting filter, then it is possible to characterize it fully with a discrete set of time instants by virtue of the sampling theorem.

Given a deterministic signal x(t), it is bandlimited if

X(f ) = F{x(t)} = 0,

for |f | > W ,

(3.127)

where W corresponds to the highest frequency in x(t). Recall that according to the sampling theorem, x(t) can be uniquely determined by the set of its samples taken at a rate of fs ≥ 2W samples / s, where the latter inequality constitutes the Nyquist criterion and the minimum rate 2W samples / s is known as the Nyquist rate. Sampling at the Nyquist rate, the sampled signal is

$$x_s(t) = \sum_{k=-\infty}^{\infty} x\left(\frac{k}{2W}\right) \delta\left(t - \frac{k}{2W}\right), \tag{3.128}$$

and it corresponds to the discrete sequence

$$x[k] = x\left(\frac{k}{2W}\right). \tag{3.129}$$

Sampling theory tells us that x[k] contains all the necessary information

to reconstruct x(t). Since the member functions of a stochastic process are

individually deterministic, the same is true for each of them and, by extension,

for the process itself. Hence, a bandlimited process X(t) is fully characterized


by the sequence of random variables X[k] corresponding to a sampling set at

the Nyquist rate. Such a sequence X[k] is an instance of a discrete stochastic

process.
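The claim that the samples x[k] = x(k/2W) carry all the information in a bandlimited member function can be illustrated with the standard sinc interpolation formula (Whittaker-Shannon), which is not stated in the text and is brought in here as an assumption. The test signal sinc²(Wt), whose spectrum is a triangle confined to [−W, W], the truncation of the infinite sum to |k| ≤ K, and all names are illustrative choices.

```python
import math


def sinc(x):
    """Normalized sinc function sin(pi x) / (pi x)."""
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)


def signal(t, W=1.0):
    """Deterministic test signal bandlimited to |f| <= W (triangular spectrum)."""
    return sinc(W * t) ** 2


def reconstruct(t, W=1.0, K=2000):
    """Rebuild x(t) from its Nyquist-rate samples x[k] = x(k / 2W), truncated to |k| <= K."""
    return sum(signal(k / (2.0 * W), W) * sinc(2.0 * W * t - k) for k in range(-K, K + 1))


if __name__ == "__main__":
    for t in (0.0, 0.37, 1.5, 2.2):
        print(t, signal(t), reconstruct(t))
```

The residual mismatch comes only from truncating the sum; it shrinks as K grows because the samples of this particular signal decay as 1/k².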

Definition 3.37. A discrete stochastic process X[k] is an ensemble of discrete

sequences (member functions) x1 [k], x2 [k], . . . , xN [k] which are mapped to

the outcomes λ1 , λ2 , . . . , λN making up the sample space S of a corresponding

random experiment.

The mth moment of X[k] is

$$\langle X^m[k] \rangle = \int_{-\infty}^{\infty} x^m f_{X[k]}(x) \, dx, \tag{3.130}$$

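its autocorrelation is defined

$$R_{XX}[k_1, k_2] = \frac{1}{2} \left\langle X_{k_1} X_{k_2}^* \right\rangle = \frac{1}{2} \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x y^* f_{X_{k_1},X_{k_2}}(x, y) \, dx \, dy, \tag{3.131}$$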

and its autocovariance is given by

$$\mu_{XX}[k_1, k_2] = R_{XX}[k_1, k_2] - \langle X[k_1] \rangle \langle X[k_2] \rangle. \tag{3.132}$$

If the process is stationary, then we have

$$\begin{aligned} R_{XX}[k_1, k_2] &= R_{XX}[k_1 - k_2], \\ \mu_{XX}[k_1, k_2] &= \mu_{XX}[k_1 - k_2] = R_{XX}[k_1 - k_2] - \mu_X^2. \end{aligned} \tag{3.133}$$

The power spectral density of a discrete process is, naturally enough, computed using the discrete Fourier transform, i.e.

$$S_{XX}(f) = \sum_{k=-\infty}^{\infty} R_{XX}[k] e^{-j2\pi f k}, \tag{3.134}$$

with the inverse relationship being

$$R_{XX}[k] = \int_{-1/2}^{1/2} S_{XX}(f) e^{j2\pi f k} \, df. \tag{3.135}$$

Hence, as with any discrete Fourier transform, the PSD SXX (f ) is periodic.

More precisely, we have SXX (f ) = SXX (f + n) where n is any integer.

Given a discrete-time linear time-invariant system with a discrete impulse

response h[k] = h(tk ), the output process Y [k] of this system when a process

X[k] is applied at its input is given by

$$Y[k] = \sum_{n=-\infty}^{\infty} h[n] X[k - n], \tag{3.136}$$


which constitutes a discrete convolution.

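The mean of the output can be computed as follows:

$$\begin{aligned} \mu_Y &= \langle Y[k] \rangle \\ &= \left\langle \sum_{n=-\infty}^{\infty} h[n] X[k - n] \right\rangle \\ &= \sum_{n=-\infty}^{\infty} h[n] \langle X[k - n] \rangle \\ &= \mu_X \sum_{n=-\infty}^{\infty} h[n] = \mu_X H(0), \end{aligned} \tag{3.137}$$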

where H(0) is the DC component of the system’s frequency transfer function.

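Likewise, the autocorrelation function of the output is given by

$$\begin{aligned} R_{YY}[k] &= \frac{1}{2} \langle Y^*[n] Y[n + k] \rangle \\ &= \frac{1}{2} \left\langle \sum_{m=-\infty}^{\infty} h^*[m] X^*[n - m] \sum_{l=-\infty}^{\infty} h[l] X[k + n - l] \right\rangle \\ &= \sum_{m=-\infty}^{\infty} \sum_{l=-\infty}^{\infty} h^*[m] h[l] \, \frac{1}{2} \langle X^*[n - m] X[k + n - l] \rangle \\ &= \sum_{m=-\infty}^{\infty} \sum_{l=-\infty}^{\infty} h^*[m] h[l] R_{XX}[k + m - l], \end{aligned} \tag{3.138}$$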

which is in fact a double discrete convolution.

Taking the discrete Fourier transform of the above expression, we obtain

$$S_{YY}(f) = S_{XX}(f) \, |H_s(f)|^2, \tag{3.139}$$

which is exactly the same as for continuous processes, except that SXX (f ) and

SY Y (f ) are the periodic PSDs of discrete processes, and Hs (f ) is the periodic

spectrum of the sampled version of h(t).
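The relation between input and output PSDs can be checked numerically by evaluating the double sum for the output autocorrelation and transforming it. The sketch below is a hedged illustration: the three filter taps, the white input with RXX[k] = σ²δ[k], and every function name are assumptions made for the example. With a white input and a finite impulse response, the output autocorrelation vanishes for |k| ≥ len(h), which is why a finite sum suffices here.

```python
import cmath
import math

SIGMA2 = 2.0               # assumed input power (illustrative)
H_TAPS = [1.0, 0.5, 0.25]  # assumed finite impulse response h[0], h[1], h[2]


def white_autocorr(k):
    """R_XX[k] of a white input: sigma^2 at lag 0 and zero elsewhere."""
    return SIGMA2 if k == 0 else 0.0


def output_autocorr(k, h=H_TAPS, r_xx=white_autocorr):
    """Double sum over m and l of h*[m] h[l] R_XX[k + m - l]."""
    n = len(h)
    return sum(complex(h[m]).conjugate() * h[l] * r_xx(k + m - l)
               for m in range(n) for l in range(n))


def transfer_function(f, h=H_TAPS):
    """Periodic frequency response H_s(f) = sum_n h[n] exp(-j 2 pi f n)."""
    return sum(h[n] * cmath.exp(-2j * math.pi * f * n) for n in range(len(h)))


def output_psd(f, h=H_TAPS, r_xx=white_autocorr):
    """S_YY(f) as the transform of R_YY[k]; lags |k| <= len(h) - 1 suffice for a white input."""
    k_max = len(h) - 1
    return sum(output_autocorr(k, h, r_xx) * cmath.exp(-2j * math.pi * f * k)
               for k in range(-k_max, k_max + 1))


if __name__ == "__main__":
    for f in (0.0, 0.2, 0.5):
        print(f, output_psd(f), SIGMA2 * abs(transfer_function(f)) ** 2)
```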

Cyclostationarity

The modeling of signals carrying digital information implies stochastic processes which are not quite stationary, although it is possible in a sense to treat

them as stationary and thus obtain the analytical convenience associated with

this property.

Definition 3.38. A cyclostationary stochastic process is a process with nonconstant mean (it is therefore not stationary, neither in the strict sense nor the


wide-sense) such that the mean and autocorrelation function are periodic in

time with a given period T .

Consider a random process

$$S(t) = \sum_{k=-\infty}^{\infty} a[k] g(t - kT), \tag{3.140}$$

where {a[k]} is a sequence of complex random variables having a mean µA

and autocorrelation function RAA [n] (so that the sequence A[k] = {a[k]} is a

stationary discrete random process), and g(t) is a real pulse-shaping function

considered to be 0 outside the interval t ∈ [0, T ]. The mean of S(t) is given by

$$\mu_S(t) = \mu_A \sum_{k=-\infty}^{\infty} g(t - kT), \tag{3.141}$$

and it can be seen that it is periodic with period T .

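Likewise, the autocorrelation function is given by

$$\begin{aligned} R_{SS}(t, t + \tau) &= \frac{1}{2} \langle S^*(t) S(t + \tau) \rangle \\ &= \frac{1}{2} \left\langle \sum_{k=-\infty}^{\infty} a^*[k] g(t - kT) \sum_{l=-\infty}^{\infty} a[l] g(t + \tau - lT) \right\rangle \\ &= \sum_{k=-\infty}^{\infty} \sum_{l=-\infty}^{\infty} g(t - kT) g(t + \tau - lT) \, \frac{1}{2} \langle a^*[k] a[l] \rangle \\ &= \sum_{k=-\infty}^{\infty} \sum_{l=-\infty}^{\infty} g(t - kT) g(t + \tau - lT) R_{AA}[l - k]. \end{aligned} \tag{3.142}$$

It is easy to see that

$$R_{SS}(t, t + \tau) = R_{SS}(t + kT, t + \tau + kT), \quad \text{for any integer } k. \tag{3.143}$$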

The fact that such processes are not stationary is inconvenient. For example, it is awkward to derive a PSD from the above autocorrelation function, because there are 2 variables involved (t and τ ) and this calls for a 2-dimensional

Fourier transform. However, it is possible to sidestep this issue by observing that averaging the autocorrelation function over one period T removes any dependence upon t.

Definition 3.39. The period-averaged autocorrelation function of a cyclostationary process is defined

$$\bar{R}_{SS}(\tau) = \frac{1}{T} \int_{-T/2}^{T/2} R_{SS}(t, t + \tau) \, dt.$$


It is noteworthy that the period-averaged autocorrelation function is an ensemble statistic and should not be confused with the time-averaged statistics

defined earlier. Based on this, the power spectral density can be simply defined as

$$S_{SS}(f) = \mathcal{F}\left\{ \bar{R}_{SS}(\tau) \right\} = \int_{-\infty}^{\infty} \bar{R}_{SS}(\tau) e^{-j2\pi f \tau} \, d\tau. \tag{3.144}$$
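The effect of period averaging can be seen in a small numerical sketch. It assumes, for illustration only, a rectangular pulse g(t) equal to 1 on [0, T) and a symbol sequence with RAA[n] = δ[n]; under these assumptions the period-averaged autocorrelation should be the triangle 1 − |τ|/T for |τ| ≤ T and zero beyond. The truncation of the sums to |k|, |l| ≤ K and all function names are hypothetical.

```python
def rect_pulse(t, T=1.0):
    """Assumed pulse shape: 1 on [0, T) and 0 elsewhere."""
    return 1.0 if 0.0 <= t < T else 0.0


def r_aa(n):
    """Assumed symbol autocorrelation R_AA[n] = delta[n] (uncorrelated, unit-power symbols)."""
    return 1.0 if n == 0 else 0.0


def r_ss(t, tau, T=1.0, K=4):
    """Double sum of g(t - kT) g(t + tau - lT) R_AA[l - k], truncated to |k|, |l| <= K."""
    total = 0.0
    for k in range(-K, K + 1):
        g_k = rect_pulse(t - k * T, T)
        if g_k == 0.0:
            continue  # this k contributes nothing
        for l in range(-K, K + 1):
            total += g_k * rect_pulse(t + tau - l * T, T) * r_aa(l - k)
    return total


def period_averaged_autocorr(tau, T=1.0, steps=2000):
    """Average of R_SS(t, t + tau) over t in [-T/2, T/2], by midpoint rule."""
    dt = T / steps
    return sum(r_ss(-T / 2.0 + (i + 0.5) * dt, tau, T) for i in range(steps)) * dt / T


if __name__ == "__main__":
    for tau in (0.0, 0.25, 0.5, 1.5):
        print(tau, period_averaged_autocorr(tau))
```

The value of `r_ss` itself still depends on t (it jumps between 0 and 1 as t crosses symbol boundaries); only the average over one period is a function of τ alone.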

5.

Typical univariate distributions

In this section, we examine various types of random variables which will

be found useful in the following chapters. We will start by studying three

fundamental distributions: the binomial, Gaussian and uniform laws. Because

complex numbers play a fundamental role in the modeling of communication

systems, we will then introduce complex r.v.’s and the associated distributions.

Most importantly, we will dwell on the complex Gaussian distribution, which

will then serve as a basis for deriving other useful distributions: chi-square,

F-distribution, Rayleigh and Rice. Finally, the Nakagami-m and lognormal

distributions complete our survey.

Binomial distribution

We have already seen in section 2 that a discrete random variable following a binomial distribution is characterized by the PDF

$$f_X(x) = \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} \delta(x - n). \tag{3.145}$$

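We can find the CDF by simply integrating the above. Thus we have

$$\begin{aligned} F_X(x) &= \int_{-\infty}^{x} \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} \delta(\alpha - n) \, d\alpha \\ &= \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} \int_{-\infty}^{x} \delta(\alpha - n) \, d\alpha, \end{aligned} \tag{3.146}$$

where

$$\int_{-\infty}^{x} \delta(\alpha - n) \, d\alpha = \begin{cases} 1, & \text{if } n \leq x, \\ 0, & \text{otherwise.} \end{cases} \tag{3.147}$$

It follows that

$$F_X(x) = \sum_{n=0}^{\lfloor x \rfloor} \binom{N}{n} p^n (1 - p)^{N-n}, \tag{3.148}$$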


where ⌊x⌋ denotes the floor operator, i.e. the largest integer which is smaller than or equal to x.

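The characteristic function is given by

$$\begin{aligned} \phi_X(jt) &= \int_{-\infty}^{\infty} \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} \delta(x - n) e^{jtx} \, dx \\ &= \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} e^{jtn}, \end{aligned} \tag{3.149}$$

which, according to the binomial theorem, reduces to

$$\phi_X(jt) = \left(1 - p + p e^{jt}\right)^N. \tag{3.150}$$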

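Furthermore, the Mellin transform of the binomial PDF is given by

$$\begin{aligned} \mathcal{M}_{f_X}(s) &= \int_{-\infty}^{\infty} \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} \delta(x - n) x^{s-1} \, dx \\ &= \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} n^{s-1}, \end{aligned} \tag{3.151}$$

which implies that the kth moment is

$$\left\langle X^k \right\rangle = \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} n^k. \tag{3.152}$$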

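If k = 1, we have

$$\begin{aligned} \langle X \rangle &= \sum_{n=0}^{N} \binom{N}{n} p^n (1 - p)^{N-n} n \\ &= \sum_{n=0}^{N} \frac{N!}{(N - n)! \, (n - 1)!} p^n (1 - p)^{N-n} \\ &= Np \sum_{n'=-1}^{N-1} \binom{N-1}{n'} p^{n'} (1 - p)^{N-1-n'}, \end{aligned} \tag{3.153}$$

where n′ = n − 1 and, noting that $\binom{N-1}{-1} = 0$, we have

$$\begin{aligned} \langle X \rangle &= Np \sum_{n'=0}^{N-1} \binom{N-1}{n'} p^{n'} (1 - p)^{N-1-n'} \\ &= Np (1 - p + p)^{N-1} \\ &= Np. \end{aligned} \tag{3.154}$$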


Likewise, if k = 2, we have

�

X

2

�

�

N �

�

N

=

pn (1 − p)N −n n2

n

n=0

= Np

N

−1 �

�

n� =0

N −1

n�

�

= N p (N − 1)p

N

−1 �

�

n� =0

N −1

n�

�

N

−2

�

n�� =0

�

�

�

pn (1 − p)N −1−n (n� + 1)

�

N −2

n��

n�

�

��

��

pn (1 − p)N −2−n +

N −1−n�

p (1 − p)

�

,

(3.155)

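where $n' = n - 1$ and $n'' = n - 2$ and, applying the binomial theorem,

$$
\begin{aligned}
\left\langle X^2 \right\rangle &= N(N-1)p^2 + Np \\
&= Np(1-p) + N^2 p^2.
\end{aligned}
\tag{3.156}
$$

It follows that the variance is given by

$$
\begin{aligned}
\sigma_X^2 &= \left\langle X^2 \right\rangle - \langle X \rangle^2 \\
&= Np(1-p) + N^2 p^2 - N^2 p^2 \\
&= Np(1-p).
\end{aligned}
\tag{3.157}
$$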
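The closed-form mean and variance can be cross-checked against the moment sum (3.152). The sketch below is a minimal numerical check, not part of the original derivation; the function name `binomial_moment` and the values of `N` and `p` are hypothetical.

```python
import math


def binomial_moment(k: int, N: int, p: float) -> float:
    """k-th moment via the direct sum of (3.152)."""
    return sum(
        math.comb(N, n) * p**n * (1 - p) ** (N - n) * n**k for n in range(N + 1)
    )


# Illustrative parameters (assumed, not taken from the text).
N, p = 12, 0.25

mean = binomial_moment(1, N, p)        # compare with N p
second = binomial_moment(2, N, p)      # compare with N p (1 - p) + N^2 p^2
variance = second - mean**2            # compare with N p (1 - p)

print(f"mean     = {mean:.6f}  vs  N p              = {N * p:.6f}")
print(f"<X^2>    = {second:.6f}  vs  Np(1-p) + N^2p^2 = "
      f"{N * p * (1 - p) + (N * p) ** 2:.6f}")
print(f"variance = {variance:.6f}  vs  N p (1 - p)      = {N * p * (1 - p):.6f}")
```

The summed and closed-form values should agree up to floating-point rounding.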

Uniform distribution

A continuous r.v. $X$ characterized by a uniform distribution can take any value within an interval $[a, b]$, and this is denoted $X \sim U(a, b)$. Given a smaller interval $[\Delta_a, \Delta_b]$ of width $\Delta$ such that $\Delta_a \ge a$ and $\Delta_b \le b$, we find that, by definition,

$$
P\left(X \in [\Delta_a, \Delta_b]\right) = P\left(\Delta_a \le X \le \Delta_b\right) = \frac{\Delta}{b-a},
\tag{3.158}
$$

regardless of the position of the interval $[\Delta_a, \Delta_b]$ within the range $[a, b]$.

The PDF of such an r.v. is simply

$$
f_X(x) =
\begin{cases}
\dfrac{1}{b-a}, & \text{if } x \in [a, b], \\
0, & \text{elsewhere,}
\end{cases}
\tag{3.159}
$$

or, in terms of the step function u(x),

$$
f_X(x) = \frac{1}{b-a} \left[ u(x-a) - u(x-b) \right].
\tag{3.160}
$$


The corresponding CDF is

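$$
F_X(x) =
\begin{cases}
0, & \text{if } x < a, \\
\dfrac{x-a}{b-a}, & \text{if } x \in [a, b], \\
1, & \text{if } x > b,
\end{cases}
\tag{3.161}
$$

which, in terms of the step function, is conveniently expressed as

$$
F_X(x) = \frac{x-a}{b-a} \left[ u(x-a) - u(x-b) \right] + u(x-b).
\tag{3.162}
$$

The characteristic function is obtained as follows:

$$
\begin{aligned}
\phi_X(jt) &= \int_a^b \frac{1}{b-a}\, e^{jtx}\, dx \\
&= \frac{1}{b-a} \left[ \frac{e^{jtx}}{jt} \right]_a^b \\
&= \frac{1}{jt(b-a)} \left( e^{jtb} - e^{jta} \right).
\end{aligned}
\tag{3.163}
$$

The Mellin transform is equally elementary:

$$
\begin{aligned}
M_{f_X}(s) &= \int_a^b \frac{x^{s-1}}{b-a}\, dx \\
&= \frac{1}{b-a} \left[ \frac{x^s}{s} \right]_a^b \\
&= \frac{1}{b-a}\, \frac{b^s - a^s}{s}.
\end{aligned}
\tag{3.164}
$$

It follows that

$$
\left\langle X^k \right\rangle = \frac{1}{b-a}\, \frac{b^{k+1} - a^{k+1}}{k+1}.
\tag{3.165}
$$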
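The moment formula (3.165) can be compared with a direct numerical integration of $x^k f_X(x)$ over $[a, b]$. The sketch below uses a simple midpoint rule; the function names, the step count and the interval $[2, 5]$ are assumptions made for illustration only.

```python
def uniform_moment(k: int, a: float, b: float) -> float:
    """Closed-form k-th moment of U(a, b), as in (3.165)."""
    return (b ** (k + 1) - a ** (k + 1)) / ((b - a) * (k + 1))


def uniform_moment_numeric(k: int, a: float, b: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of x^k / (b - a) over [a, b]."""
    h = (b - a) / steps
    total = sum((a + (i + 0.5) * h) ** k for i in range(steps))
    return total * h / (b - a)


# Illustrative interval (assumed, not taken from the text).
a, b = 2.0, 5.0
for k in (1, 2, 3):
    print(k, uniform_moment(k, a, b), uniform_moment_numeric(k, a, b))

# The variance from the first two moments matches the well-known (b - a)^2 / 12.
variance = uniform_moment(2, a, b) - uniform_moment(1, a, b) ** 2
print(variance, (b - a) ** 2 / 12)
```

For $k = 1$ the closed form reduces to the midpoint $(a+b)/2$ of the interval, which is a quick way to confirm the formula by hand.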

Gaussian distribution

From Appendix 3.A and Example 3.4, we know that the PDF of a Gaussian

r.v. is

$$
f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma_X}\, e^{-\frac{(x-\mu_X)^2}{2\sigma_X^2}},
\tag{3.166}
$$

where $\sigma_X^2$ is the variance and $\mu_X$ is the mean of $X$.

The corresponding CDF is

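$$
\begin{aligned}
F_X(x) &= \int_{-\infty}^{x} f_X(\alpha)\, d\alpha \\
&= \frac{1}{\sqrt{2\pi}\,\sigma_X} \int_{-\infty}^{x} e^{-\frac{(\alpha-\mu_X)^2}{2\sigma_X^2}}\, d\alpha,
\end{aligned}
\tag{3.167}
$$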


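which, with the variable substitution $u = \frac{\alpha - \mu_X}{\sqrt{2}\,\sigma_X}$, becomes

$$
\begin{aligned}
F_X(x) &= \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\frac{x-\mu_X}{\sqrt{2}\sigma_X}} e^{-u^2}\, du \\
&=
\begin{cases}
\dfrac{1}{2} \left[ 1 + \operatorname{erf}\!\left( \dfrac{x-\mu_X}{\sqrt{2}\,\sigma_X} \right) \right], & \text{if } x - \mu_X > 0, \\[2ex]
\dfrac{1}{2} \left[ 1 - \operatorname{erf}\!\left( \dfrac{|x-\mu_X|}{\sqrt{2}\,\sigma_X} \right) \right]
 = \dfrac{1}{2} \operatorname{erfc}\!\left( \dfrac{|x-\mu_X|}{\sqrt{2}\,\sigma_X} \right), & \text{otherwise,}
\end{cases}
\end{aligned}
\tag{3.168}
$$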

where erf(·) is the error function and erfc(·) is the complementary error function.
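The piecewise expression (3.168) maps directly onto the `erf` and `erfc` functions in Python's standard `math` module. The sketch below is one possible way to evaluate it; the function name `gaussian_cdf` and the values of `mu` and `sigma` are hypothetical.

```python
import math


def gaussian_cdf(x: float, mu: float, sigma: float) -> float:
    """Gaussian CDF using the two branches of (3.168)."""
    z = (x - mu) / (math.sqrt(2.0) * sigma)
    if x - mu > 0:
        return 0.5 * (1.0 + math.erf(z))
    # For x <= mu, the complementary error function of |z| is used.
    return 0.5 * math.erfc(abs(z))


# Illustrative parameters (assumed, not taken from the text).
mu, sigma = 1.0, 2.0
for x in (-5.0, 0.0, 1.0, 2.0, 7.0):
    print(f"F_X({x}) = {gaussian_cdf(x, mu, sigma):.6f}")
```

Because erf is an odd function, both branches coincide with the single expression $\frac{1}{2}[1 + \operatorname{erf}(z)]$. The erfc branch is nonetheless useful numerically, since it avoids the cancellation in $1 - \operatorname{erf}(|z|)$ far into the lower tail.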

Figure 3.4 shows the PDF and CDF of the Gaussian distribution.

[Figure 3.4: PDF panel of the Gaussian distribution; vertical axis $f_X(x)$.]